
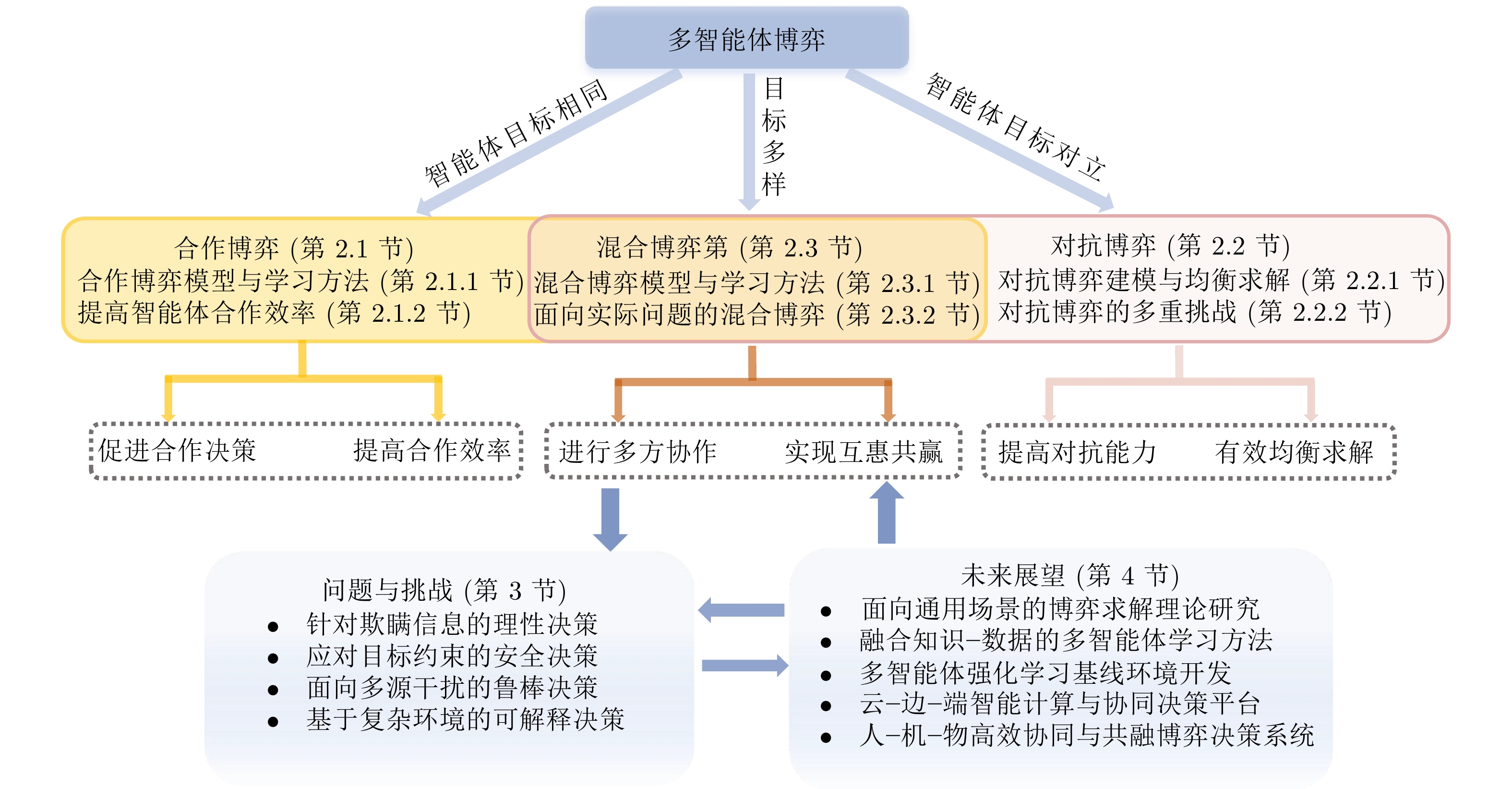

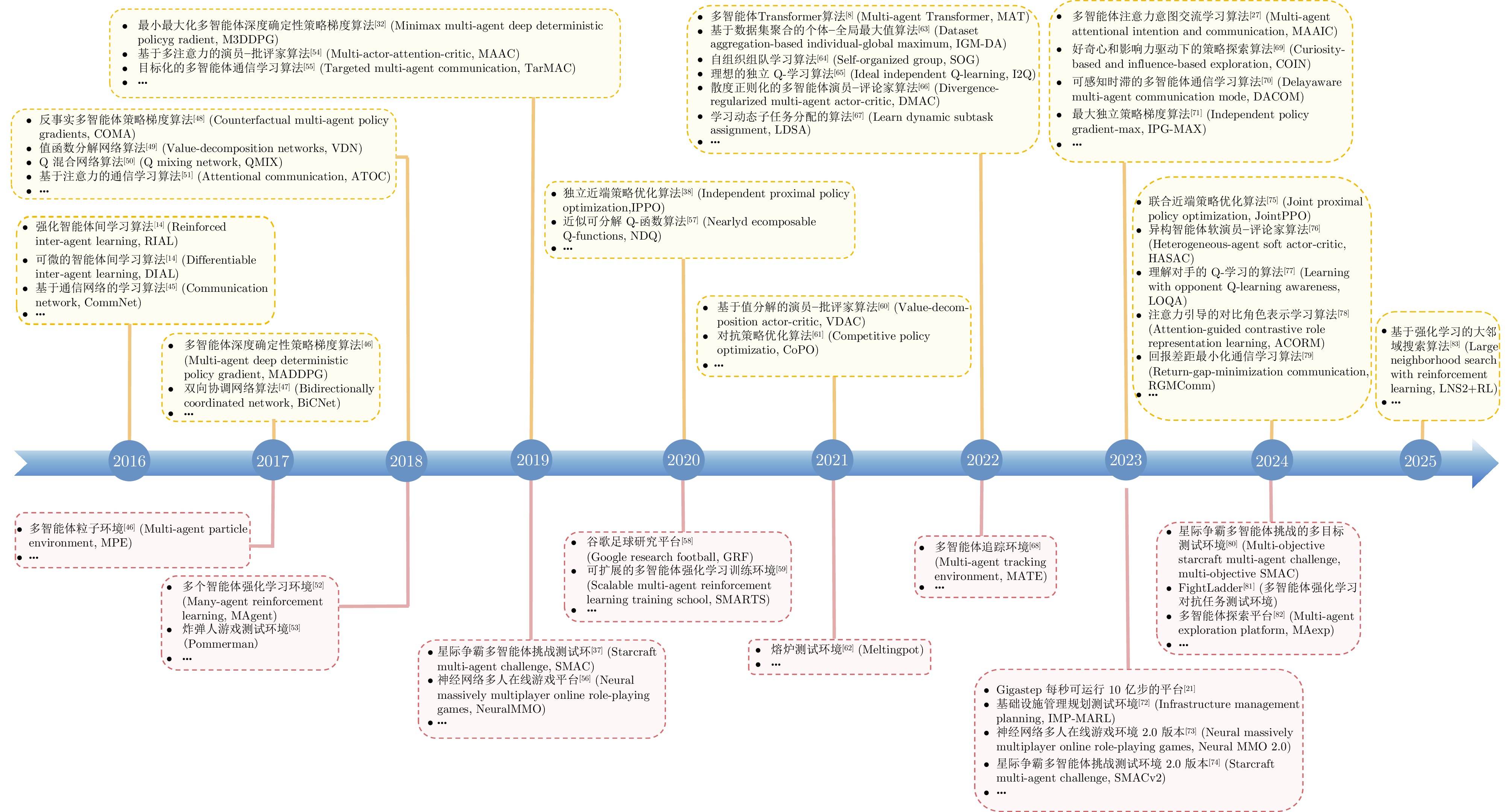

Abstract: Multi-agent reinforcement learning (MARL), an interdisciplinary field at the intersection of game theory, control theory, and multi-agent learning, is a frontier direction in multi-agent systems (MASs) research. It gives agents the ability to accomplish diverse tasks through interaction and decision-making in dynamic, multi-dimensional, complex environments. As MARL moves toward open application objects, embodied application problems, and increasingly complex application scenarios, it is gradually becoming the most effective tool for solving real-world game and decision-making problems. This paper presents a systematic review of MARL-based games. First, the basic theory of MARL is introduced, and the development of MARL algorithms and benchmark test environments is traced. Then, for the three types of MARL tasks (cooperative, competitive, and mixed), the latest progress is reviewed from two perspectives, improving the cooperative efficiency of agents and enhancing their adversarial capabilities, and frontier research directions in mixed games are discussed in light of practical applications. Finally, the application prospects and development trends of MARL are summarized.
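The three task types named in the abstract can be told apart by how the agents' rewards relate to each other. The sketch below is a simplified illustration, restricted to two-player one-shot matrix games (in a Markov game the same test would apply to the per-step reward functions), and it treats zero-sum games as the competitive case. The helper `classify_game` and the example payoff matrices are hypothetical, not taken from any cited method.

```python
import numpy as np


def classify_game(payoff_1: np.ndarray, payoff_2: np.ndarray) -> str:
    """Classify a two-player matrix game by how the players' rewards relate.

    payoff_k[i, j] is player k's reward when player 1 plays action i
    and player 2 plays action j.
    """
    if payoff_1.shape != payoff_2.shape:
        raise ValueError("payoff matrices must have the same shape")
    if np.allclose(payoff_1, payoff_2):
        return "cooperative"  # both agents always receive the same (team) reward
    if np.allclose(payoff_1 + payoff_2, 0.0):
        return "competitive"  # zero-sum: one agent's gain is exactly the other's loss
    return "mixed"  # general-sum: incentives are partly aligned, partly conflicting


# Coordination game: both agents are rewarded only for choosing the same action.
coordination = np.array([[1.0, 0.0], [0.0, 1.0]])
# Matching pennies: a classic zero-sum game (player 2's payoffs are the negation).
pennies = np.array([[1.0, -1.0], [-1.0, 1.0]])
# Prisoner's dilemma, row player's payoffs (actions: cooperate, defect);
# the column player's payoffs are the transpose.
pd_row = np.array([[-1.0, -3.0], [0.0, -2.0]])

print(classify_game(coordination, coordination))  # cooperative
print(classify_game(pennies, -pennies))           # competitive
print(classify_game(pd_row, pd_row.T))            # mixed
```

The prisoner's dilemma is the textbook mixed case: the two payoff matrices are neither identical nor opposite, so the agents share some interests (mutual cooperation beats mutual defection) while each is individually tempted to defect.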


Table 1 Recent test environments for multi-agent reinforcement learning

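| Test environment | Task type | Application scenario / features | Continuous action space | Discrete action space |
|---|---|---|---|---|
| MATE[68] (2022) | Mixed | Multi-agent target coverage control, e.g., wireless sensor networks | $\checkmark$ | $\checkmark$ |
| Gigastep[21] (2023) | Mixed | 3D dynamic environments with stochasticity and partial observability | $\checkmark$ | $\checkmark$ |
| IMP-MARL[72] (2023) | Cooperative | Infrastructure management planning, e.g., maintenance of offshore wind turbines | | $\checkmark$ |
| Neural MMO 2.0[73] (2023) | Mixed | Adds custom goals and rewards to the Neural MMO environment | | $\checkmark$ |
| SMACv2[74] (2023) | Cooperative | Adds stochasticity and partial observability to the SMAC environment | | $\checkmark$ |
| Multi-objective SMAC[80] (2024) | Mixed | Adds long-horizon tasks and multiple adversarial objectives to the SMAC environment | | $\checkmark$ |
| FightLadder[81] (2024) | Competitive | Cross-platform video fighting games, e.g., Street Fighter and The King of Fighters | | $\checkmark$ |
| MAexp[82] (2024) | Cooperative | Cooperative exploration strategies for multi-scale, multi-type robot teams | $\checkmark$ | |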

Table 2 Classification of communication mechanisms in cooperative multi-agent reinforcement learning

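| Dimension | Category | Communication mechanisms |
|---|---|---|
| Communication constraints | Bandwidth limits | DIAL[14], RIAL[16], NDQ[57], ETCNet[118], TCOM[125] |
| | Message delay | DACOM[70], RGMComm[79] |
| | Noise interference | MAGI[119], DACOM[70] |
| Communication strategy | Predefined | DIAL[14], RIAL[16], CommNet[45], TarMAC[55] |
| | Learnable | NDQ[57], ATOC[51], ETCNet[118], MAGI[119], TEM[120], DACOM[70], RGMComm[79], TCOM[125] |
| Communication targets | All agents | CommNet[45], TarMAC[55], ETCNet[118], DACOM[70] |
| | Neighboring agents | MAGI[119], RGMComm[79] |
| | Specific agents | ATOC[51], TEM[120], TCOM[125] |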
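The "communication targets" dimension of Table 2 distinguishes whom an agent's messages reach: all other agents, neighboring agents, or specific agents. The sketch below is a simplified illustration, not any of the cited methods: it expresses the three schemes as receiver masks over a plain mean-pooling aggregator. Averaging over the other agents is in the spirit of CommNet[45]; methods such as TarMAC[55] weight messages with learned attention instead, and in the cited "specific agents" methods the recipients are selected by a learned mechanism, whereas here the `targets` matrix is supplied by hand. The function `aggregate_messages`, the distance-based `radius` parameter, and the example data are hypothetical.

```python
import numpy as np


def aggregate_messages(messages, positions, mode, radius=1.0, targets=None):
    """Mean-pool the messages each agent receives under one communication-target scheme.

    messages:  (n, d) array, row j is the message broadcast by agent j
    positions: (n, 2) array of agent positions (only used when mode == "neighbors")
    mode:      "all", "neighbors", or "specific"
    radius:    communication range for the "neighbors" scheme (hypothetical parameter)
    targets:   (n, n) boolean array for the "specific" scheme;
               targets[i, j] is True if agent j sends its message to agent i
    """
    n = messages.shape[0]
    not_self = ~np.eye(n, dtype=bool)  # an agent never receives its own message here
    if mode == "all":
        mask = not_self
    elif mode == "neighbors":
        diff = positions[:, None, :] - positions[None, :, :]
        dist = np.linalg.norm(diff, axis=-1)
        mask = (dist <= radius) & not_self
    elif mode == "specific":
        if targets is None:
            raise ValueError("the 'specific' scheme needs a targets matrix")
        mask = np.asarray(targets, dtype=bool) & not_self
    else:
        raise ValueError(f"unknown mode: {mode}")

    counts = mask.sum(axis=1, keepdims=True)  # number of senders per receiver
    summed = mask.astype(float) @ messages    # sum of the messages each agent receives
    # Receivers with no senders get a zero vector instead of a division by zero.
    return np.divide(summed, counts, out=np.zeros_like(summed), where=counts > 0)


messages = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
positions = np.array([[0.0, 0.0], [0.5, 0.0], [5.0, 0.0]])
targets = np.zeros((3, 3), dtype=bool)
targets[0, 2] = True  # only agent 2 talks, and only to agent 0

print(aggregate_messages(messages, positions, "all"))
print(aggregate_messages(messages, positions, "neighbors", radius=1.0))
print(aggregate_messages(messages, positions, "specific", targets=targets))
```

With these example positions, agent 2 lies outside the communication range of both other agents, so under the neighbors scheme it receives nothing and its aggregate is the zero vector; restricting recipients in this way is also one simple lever for reducing communication load.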

[1] Miao Qing-Hai, Wang Xing-Xia, Yang Jing, Zhao Yong, Wang Yu-Tong, Chen Yuan-Yuan, et al. From foundation intelligence to general intelligence: The state-of-art and perspectives of GenAI and AGI based on foundation models. Acta Automatica Sinica, 2024, 50(4): 674−687

[2] Shi Wei, Feng Yang-He, Cheng Guang-Quan, Huang Hong-Lan, Huang Jin-Cai, Liu Zhong, et al. Research on multi-aircraft cooperative air combat method based on deep reinforcement learning. Acta Automatica Sinica, 2021, 47(7): 1610−1623

[3] Liu Hua-Ping, Guo Di, Sun Fu-Chun, Zhang Xin-Yu. Morphology-based embodied intelligence: Historical retrospect and research progress. Acta Automatica Sinica, 2023, 49(6): 1131−1154

[4] Xiong Luo-Lin, Mao Shuai, Tang Yang, Meng Ke, Dong Zhao-Yang, Qian Feng. Reinforcement learning based integrated energy system management: A survey. Acta Automatica Sinica, 2021, 47(10): 2321−2340

[5] Kiran B R, Sobh I, Talpaert V, Mannion P, Al Sallab A A, Yogamani S, et al. Deep reinforcement learning for autonomous driving: A survey. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(6): 4909−4926 doi: 10.1109/TITS.2021.3054625

[6] Qu Y, Ma J M, Wu F. Safety constrained multi-agent reinforcement learning for active voltage control. In: Proceedings of the 33rd International Joint Conference on Artificial Intelligence. Jeju, South Korea: IJCAI, 2024. 184−192

[7] Wang Long, Huang Feng. An interdisciplinary survey of multi-agent games, learning, and control. Acta Automatica Sinica, 2023, 49(3): 580−613

[8] Wen M N, Kuba G J, Lin R J, Zhang W N, Wen Y, Wang J, et al. Multi-agent reinforcement learning is a sequence modeling problem. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 1201

[9] Littman M L. Markov games as a framework for multi-agent reinforcement learning. In: Proceedings of the 11th International Conference on Machine Learning. New Brunswick, USA: Morgan Kaufmann Publishers Inc., 1994. 157−163

[10] Sivagnanam A, Pettet A, Lee H, Mukhopadhyay A, Dubey A, Laszka A. Multi-agent reinforcement learning with hierarchical coordination for emergency responder stationing. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: PMLR, 2024. 45813−45834

[11] Puterman M L. Markov decision processes. Handbooks in Operations Research and Management Science. Amsterdam: Elsevier, 1990. 331−434

[12] Pu Zhi-Qiang, Yi Jian-Qiang, Liu Zhen, Qiu Teng-Hai, Sun Jin-Lin, Li Fei-Mo. Knowledge-based and data-driven integrating methodologies for collective intelligence decision making: A survey. Acta Automatica Sinica, 2022, 48(3): 627−643

[13] Wen M N, Wan Z Y, Zhang W N, Wang J, Wen Y. Reinforcing language agents via policy optimization with action decomposition. In: Proceedings of the 38th Annual Conference on Neural Information Processing Systems. Vancouver, Canada: NeurIPS, 2024.

[14] Foerster J N, Assael Y M, de Freitas N, Whiteson S. Learning to communicate with deep multi-agent reinforcement learning. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 2145−2153

[15] Hao Jian-Ye, Shao Kun, Li Kai, Li Dong, Mao Hang-Yu, Hu Shu-Yue, et al. Research and applications of game intelligence. Scientia Sinica Informationis, 2023, 53(10): 1892−1923 doi: 10.1360/SSI-2023-0010

[16] Hausknecht M, Stone P. Deep recurrent Q-learning for partially observable MDPs. In: Proceedings of the AAAI Fall Symposium on Sequential Decision Making for Intelligent Agents. Arlington, USA: AAAI, 2015. 29−37

[17] Li X X, Meng M, Hong Y G, Chen J. A survey of decision making in adversarial games. Science China Information Sciences, 2024, 67(4): Article No. 141201 doi: 10.1007/s11432-022-3777-y

[18] Qin R J, Yu Y. Learning in games: A systematic review. Science China Information Sciences, 2024, 67(7): Article No. 171101 doi: 10.1007/s11432-023-3955-x

[19] Zhu C X, Dastani M, Wang S H. A survey of multi-agent deep reinforcement learning with communication. Autonomous Agents and Multi-agent Systems, 2024, 38(1): Article No. 4 doi: 10.1007/s10458-023-09633-6

[20] Meng Q, Nian X H, Chen Y, Chen Z. Attack-resilient distributed Nash equilibrium seeking of uncertain multiagent systems over unreliable communication networks. IEEE Transactions on Neural Networks and Learning Systems, 2024, 35(5): 6365−6379 doi: 10.1109/TNNLS.2022.3209313

[21] Lechner M, Yin L H, Seyde T, Wang T H, Xiao W, Hasani R, et al. Gigastep: One billion steps per second multi-agent reinforcement learning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 9

[22] Qu Y, Wang B Y, Shao J Z, Jiang Y H, Chen C, Ye Z B, et al. Hokoff: Real game dataset from Honor of Kings and its offline reinforcement learning benchmarks. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 974

[23] Mazzaglia P, Verbelen T, Dhoedt B, Courville A, Rajeswar S. Multimodal foundation world models for generalist embodied agents. In: Proceedings of the ICML Workshop: Multi-modal Foundation Model Meets Embodied AI. Vienna, Austria: ICML, 2024.

[24] Li M L, Zhao S Y, Wang Q N, Wang K R, Zhou Y, Srivastava S, et al. Embodied agent interface: Benchmarking LLMs for embodied decision making. In: Proceedings of the 38th Annual Conference on Neural Information Processing Systems. Vancouver, Canada: NeurIPS, 2024.

[25] Heinrich J, Lanctot M, Silver D. Fictitious self-play in extensive-form games. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: JMLR, 2015. 805−813

[26] Wang J R, Hong Y T, Wang J L, Xu J P, Tang Y, Han Q L, et al. Cooperative and competitive multi-agent systems: From optimization to games. IEEE/CAA Journal of Automatica Sinica, 2022, 9(5): 763−783 doi: 10.1109/JAS.2022.105506

[27] Yu Wen-Wu, Yang Xiao-Ya, Li Hai-Chang, Wang Rui, Hu Xiao-Hui. Attentional intention and communication for multi-agent learning. Acta Automatica Sinica, 2023, 49(11): 2311−2325

[28] Xu Z W, Zhang B, Li D P, Zhang Z R, Zhou G C, Chen H, et al. Consensus learning for cooperative multi-agent reinforcement learning. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI, 2023. 11726−11734

[29] Huang B H, Lee J, Wang Z R, Yang Z R. Towards general function approximation in zero-sum Markov games. In: Proceedings of the 10th International Conference on Learning Representations. Virtual Event: ICLR, 2022.

[30] Alacaoglu A, Viano L, He N, Cevher V. A natural actor-critic framework for zero-sum Markov games. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 307−366

[31] Li C J, Zhou D R, Gu Q Q, Jordan M I. Learning two-player mixture Markov games: Kernel function approximation and correlated equilibrium. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 2410

[32] Li S H, Wu Y, Cui X Y, Dong H H, Fang F, Russell S. Robust multi-agent reinforcement learning via minimax deep deterministic policy gradient. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 4213−4220

[33] Song Z A, Mei S, Bai Y. When can we learn general-sum Markov games with a large number of players sample-efficiently? In: Proceedings of the 10th International Conference on Learning Representations. Virtual Event: ICLR, 2022.

[34] Jiang H Z, Cui Q W, Xiong Z H, Fazel M, Du S S. A black-box approach for non-stationary multi-agent reinforcement learning. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[35] Silver D, Huang A, Maddison C J, Guez A, Sifre L, van den Driessche G, et al. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587): 484−489

[36] Jaderberg M, Czarnecki W M, Dunning I, Marris L, Lever G, Castañeda A G, et al. Human-level performance in 3D multiplayer games with population-based reinforcement learning. Science, 2019, 364(6443): 859−865 doi: 10.1126/science.aau6249

[37] Samvelyan M, Rashid T, de Witt C S, Farquhar G, Nardelli N, Rudner T G J, et al. The StarCraft multi-agent challenge. In: Proceedings of the 18th International Conference on Autonomous Agents and Multiagent Systems. Montreal, Canada: AAMAS, 2019. 2186−2188

[38] de Witt C S, Gupta T, Makoviichuk D, Makoviychuk V, Torr P H S, Sun M F, et al. Is independent learning all you need in the StarCraft multi-agent challenge? arXiv preprint arXiv: 2011.09533, 2020.

[39] Seraj E, Xiong J, Schrum M, Gombolay M. Mixed-initiative multiagent apprenticeship learning for human training of robot teams. In: Proceedings of the 37th Conference on Neural Information Processing Systems. New Orleans, USA: NeurIPS, 2023.

[40] Wen Guang-Hui, Yang Tao, Zhou Jia-Ling, Fu Jun-Jie, Xu Lei. Reinforcement learning and adaptive/approximate dynamic programming: A survey from theory to applications in multi-agent systems. Control and Decision, 2023, 38(5): 1200−1230

[41] Yang Y D, Wang J. An overview of multi-agent reinforcement learning from game theoretical perspective. arXiv preprint arXiv: 2011.00583, 2020.

[42] Wang Han, Yu Yang, Jiang Yuan. Review of the progress of communication-based multi-agent reinforcement learning. Scientia Sinica Informationis, 2022, 52(5): 742−764 doi: 10.1360/SSI-2020-0180

[43] Wang Xue-Song, Wang Rong-Rong, Cheng Yu-Hu. Safe reinforcement learning: A survey. Acta Automatica Sinica, 2023, 49(9): 1813−1835

[44] Sun Yue-Wen, Liu Wen-Zhang, Sun Chang-Yin. Causality in reinforcement learning control: The state of the art and prospects. Acta Automatica Sinica, 2023, 49(3): 661−677

[45] Sukhbaatar S, Szlam A, Fergus R. Learning multiagent communication with backpropagation. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 2252−2260

[46] Lowe R, Wu Y, Tamar A, Harb J, Abbeel P, Mordatch I. Multi-agent actor-critic for mixed cooperative-competitive environments. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017. 6382−6393

[47] Peng P, Wen Y, Yang Y D, Yuan Q, Tang Z K, Tang H T, et al. Multiagent bidirectionally-coordinated nets: Emergence of human-level coordination in learning to play StarCraft combat games. arXiv preprint arXiv: 1703.10069, 2017.

[48] Peng B, Rashid T, Schroeder de Witt C A, Kamienny P A, Torr P H S, Böhmer W, et al. FACMAC: Factored multi-agent centralised policy gradients. In: Proceedings of the 35th Conference on Neural Information Processing Systems. Virtual Event: NeurIPS, 2021.

[49] Sunehag P, Lever G, Gruslys A, Czarnecki W M, Zambaldi V, Jaderberg M, et al. Value-decomposition networks for cooperative multi-agent learning based on team reward. In: Proceedings of the 17th International Conference on Autonomous Agents and Multiagent Systems. Stockholm, Sweden: AAMAS, 2018. 2085−2087

[50] Rashid T, Samvelyan M, Schroeder C, Farquhar G, Foerster J, Whiteson S. QMIX: Monotonic value function factorisation for deep multi-agent reinforcement learning. In: Proceedings of the 35th International Conference on Machine Learning. Stockholm, Sweden: PMLR, 2018. 4295−4304

[51] Jiang J C, Lu Z Q. Learning attentional communication for multi-agent cooperation. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: Curran Associates Inc., 2018. 7265−7275

[52] Zheng L M, Yang J C, Cai H, Zhou M, Zhang W N, Wang J, et al. MAgent: A many-agent reinforcement learning platform for artificial collective intelligence. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. 8222−8223

[53] Resnick C, Eldridge W, Ha D, Britz D, Foerster J, Togelius J, et al. Pommerman: A multi-agent playground. arXiv preprint arXiv: 1809.07124, 2018.

[54] Iqbal S, Sha F. Actor-attention-critic for multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 2961−2970

[55] Das A, Gervet T, Romof J, Batra D, Parikh D, Rabbat M, et al. TarMAC: Targeted multi-agent communication. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 1538−1546

[56] Suarez J, Du Y L, Isola P, Mordatch I. Neural MMO: A massively multiagent game environment for training and evaluating intelligent agents. arXiv preprint arXiv: 1903.00784, 2019.

[57] Zheng C Y, Wang J H, Zhang C J, Wang T H. Learning nearly decomposable value functions via communication minimization. In: Proceedings of the 8th International Conference on Learning Representations. Addis Ababa, Ethiopia: ICLR, 2020.

[58] Kurach K, Raichuk A, Stańczyk P, Zajac M, Bachem O, Espeholt L, et al. Google research football: A novel reinforcement learning environment. In: Proceedings of the 34th AAAI Conference on Artificial Intelligence. New York, USA: AAAI, 2020. 4501−4510

[59] Zhou M, Luo J, Villella J, Yang Y D, Rusu D, Miao J Y, et al. SMARTS: Scalable multi-agent reinforcement learning training school for autonomous driving. arXiv preprint arXiv: 2010.09776, 2020.

[60] Su J Y, Adams S, Beling P. Value-decomposition multi-agent actor-critics. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI, 2021. 11352−11360

[61] Prajapat M, Azizzadenesheli K, Liniger A, Yue Y S, Anandkumar A. Competitive policy optimization. In: Proceedings of the 37th Conference on Uncertainty in Artificial Intelligence. Virtual Event: PMLR, 2021. 64−74

[62] Leibo J Z, Duéñez-Guzmán E A, Vezhnevets A, Agapiou J P, Sunehag P, Koster R, et al. Scalable evaluation of multi-agent reinforcement learning with Melting Pot. In: Proceedings of the 38th International Conference on Machine Learning. Virtual Event: PMLR, 2021. 6187−6199

[63] Hong Y T, Jin Y C, Tang Y. Rethinking individual global max in cooperative multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 2350

[64] Shao J Z, Lou Z Q, Zhang H C, Jiang Y H, He S C, Ji X Y. Self-organized group for cooperative multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. 5711−5723

[65] Jiang J C, Lu Z Q. I2Q: A fully decentralized Q-learning algorithm. In: Proceedings of the 36th Conference on Neural Information Processing Systems. New Orleans, USA: NeurIPS, 2022. 20469−20481

[66] Su K F, Lu Z Q. Divergence-regularized multi-agent actor-critic. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 20580−20603

[67] Yang M Y, Zhao J, Hu X H, Zhou W G, Zhu J C, Li H Q. LDSA: Learning dynamic subtask assignment in cooperative multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 124

[68] Pan X H, Liu M, Zhong F W, Yang Y D, Zhu S C, Wang Y Z. MATE: Benchmarking multi-agent reinforcement learning in distributed target coverage control. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 2021

[69] Li J H, Kuang K, Wang B X, Li X C, Wu F, Xiao J, et al. Two heads are better than one: A simple exploration framework for efficient multi-agent reinforcement learning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 878

[70] Yuan T T, Chung H M, Yuan J, Fu X M. DACOM: Learning delay-aware communication for multi-agent reinforcement learning. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI, 2023. 11763−11771

[71] Kalogiannis F, Anagnostides I, Panageas I, Vlatakis-Gkaragkounis E V, Chatziafratis V, Stavroulakis S. Efficiently computing Nash equilibria in adversarial team Markov games. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[72] Leroy P, Morato P G, Pisane J, Kolios A, Ernst D. IMP-MARL: A suite of environments for large-scale infrastructure management planning via MARL. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2329

[73] Suárez J, Isola P, Choe K W, Bloomin D, Li H X, Pinnaparaju N, et al. Neural MMO 2.0: A massively multi-task addition to massively multi-agent learning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2178

[74] Ellis B, Cook J, Moalla S, Samvelyan M, Sun M F, Mahajan A, et al. SMACv2: An improved benchmark for cooperative multi-agent reinforcement learning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1634

[75] Liu C X, Liu G Z. JointPPO: Diving deeper into the effectiveness of PPO in multi-agent reinforcement learning. arXiv preprint arXiv: 2404.11831, 2024.

[76] Liu J R, Zhong Y F, Hu S Y, Fu H B, Fu Q, Chang X J, et al. Maximum entropy heterogeneous-agent reinforcement learning. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[77] Aghajohari M, Duque J A, Cooijmans T, Courville A. LOQA: Learning with opponent Q-learning awareness. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[78] Hu Z C, Zhang Z Z, Li H X, Chen C L, Ding H Y, Wang Z. Attention-guided contrastive role representations for multi-agent reinforcement learning. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[79] Chen J D, Lan T, Joe-Wong C. RGMComm: Return gap minimization via discrete communications in multi-agent reinforcement learning. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence. Vancouver, Canada: AAAI, 2024. 17327−17336

[80] Geng M H, Pateria S, Subagdja B, Tan A H. Benchmarking MARL on long horizon sequential multi-objective tasks. In: Proceedings of the 23rd International Conference on Autonomous Agents and Multiagent Systems. Auckland, New Zealand: AAMAS, 2024. 2279−2281

[81] Li W Z, Ding Z H, Karten S, Jin C. FightLadder: A benchmark for competitive multi-agent reinforcement learning. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: PMLR, 2024. 27653−27674

[82] Zhu S H, Zhou J C, Chen A J, Bai M M, Chen J M, Xu J M. MAexp: A generic platform for RL-based multi-agent exploration. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Yokohama, Japan: IEEE, 2024. 5155−5161

[83] Wang Y T, Duhan T, Li J Y, Sartoretti G. LNS2+RL: Combining multi-agent reinforcement learning with large neighborhood search in multi-agent path finding. In: Proceedings of the 39th AAAI Conference on Artificial Intelligence. Philadelphia, USA: AAAI, 2025.

[84] Watkins C J C H, Dayan P. Q-learning. Machine Learning, 1992, 8(3−4): 279−292 doi: 10.1007/BF00992698

[85] Silver D, Lever G, Heess N, Degris T, Wierstra D, Riedmiller M. Deterministic policy gradient algorithms. In: Proceedings of the 31st International Conference on Machine Learning. Beijing, China: JMLR, 2014. I-387−I-395

[86] Konda V R, Tsitsiklis J N. Actor-critic algorithms. In: Proceedings of the 12th International Conference on Neural Information Processing Systems. Denver, USA: MIT Press, 1999. 1008−1014

[87] McClellan J, Haghani N, Winder J, Huang F R, Tokekar P. Boosting sample efficiency and generalization in multi-agent reinforcement learning via equivariance. In: Proceedings of the 38th Annual Conference on Neural Information Processing Systems. Vancouver, Canada: NeurIPS, 2024.

[88] Shapley L S. Stochastic games. Proceedings of the National Academy of Sciences, 1953, 39(10): 1095−1100 doi: 10.1073/pnas.39.10.1095

[89] Hu J L, Wellman M P. Nash Q-learning for general-sum stochastic games. Journal of Machine Learning Research, 2003, 4: 1039−1069

[90] Hu S C, Shen L, Zhang Y, Tao D C. Learning multi-agent communication from graph modeling perspective. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[91] Wen G H, Fu J J, Dai P C, Zhou J L. DTDE: A new cooperative multi-agent reinforcement learning framework. The Innovation, 2021, 2(4): Article No. 100162 doi: 10.1016/j.xinn.2021.100162

[92] Foerster J N, Farquhar G, Afouras T, Nardelli N, Whiteson S. Counterfactual multi-agent policy gradients. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. Article No. 363

[93] Li W H, Wang X F, Jin B, Luo D J, Zha H Y. Structured cooperative reinforcement learning with time-varying composite action space. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(11): 8618−8634

[94] Tan M. Multi-agent reinforcement learning: Independent versus cooperative agents. In: Proceedings of the 10th International Conference on Machine Learning. Amherst, USA: Morgan Kaufmann Publishers Inc., 1993. 330−337

[95] Jin C, Liu Q H, Yu T C. The power of exploiter: Provable multi-agent RL in large state spaces. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 10251−10279

[96] Jiang H B, Ding Z L, Lu Z Q. Settling decentralized multi-agent coordinated exploration by novelty sharing. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence. Vancouver, Canada: AAAI, 2024. 17444−17452

[97] Hsu H L, Wang W X, Pajic M, Xu P. Randomized exploration in cooperative multi-agent reinforcement learning. arXiv preprint arXiv: 2404.10728, 2024.

[98] Shin W, Kim Y. Guide to control: Offline hierarchical reinforcement learning using subgoal generation for long-horizon and sparse-reward tasks. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. 4217−4225

[99] Wang J H, Zhang Y, Kim T K, Gu Y J. Shapley Q-value: A local reward approach to solve global reward games. In: Proceedings of the 34th AAAI Conference on Artificial Intelligence. New York, USA: AAAI, 2020. 7285−7292

[100] Wang X S, Xu H R, Zheng Y N, Zhan X Y. Offline multi-agent reinforcement learning with implicit global-to-local value regularization. In: Proceedings of the 37th Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2282

[101] Boggess K, Kraus S, Feng L. Explainable multi-agent reinforcement learning for temporal queries. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. 55−63

[102] Zhang H, Chen H G, Boning D S, Hsieh C J. Robust reinforcement learning on state observations with learned optimal adversary. In: Proceedings of the 9th International Conference on Learning Representations. Virtual Event: ICLR, 2021.

[103] Li Z, Wellman M P. A meta-game evaluation framework for deep multiagent reinforcement learning. In: Proceedings of the 33rd International Joint Conference on Artificial Intelligence. Jeju, South Korea: IJCAI, 2024. 148−156

[104] Yang Z X, Jin H M, Ding R, You H Y, Fan G Y, Wang X B, et al. DeCOM: Decomposed policy for constrained cooperative multi-agent reinforcement learning. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI, 2023. 10861−10870

[105] García J, Fernández F. A comprehensive survey on safe reinforcement learning. The Journal of Machine Learning Research, 2015, 16(1): 1437−1480

[106] Chen Z Y, Zhou Y, Huang H. On the duality gap of constrained cooperative multi-agent reinforcement learning. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[107] Bukharin A, Li Y, Yu Y, Zhang Q R, Chen Z H, Zuo S M, et al. Robust multi-agent reinforcement learning via adversarial regularization: Theoretical foundation and stable algorithms. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2979

[108] Mao W C, Qiu H R, Wang C, Franke H, Kalbarczyk Z, Iyer R K, et al. Multi-agent meta-reinforcement learning: Sharper convergence rates with task similarity. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2906

[109] Tan Xiao-Yang, Zhang Zhe. Review on meta reinforcement learning. Journal of Nanjing University of Aeronautics & Astronautics, 2021, 53(5): 653−663

[110] Tang X L, Yu H. Competitive-cooperative multi-agent reinforcement learning for auction-based federated learning. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. 4262−4270

[111] Cao K, Xie L H. Trust-region inverse reinforcement learning. IEEE Transactions on Automatic Control, 2024, 69(2): 1037−1044 doi: 10.1109/TAC.2023.3274629

[112] Deng Y, Wang Z R, Zhang Y. Improving multi-agent reinforcement learning with stable prefix policy. In: Proceedings of the 33rd International Joint Conference on Artificial Intelligence. Jeju, South Korea: IJCAI, 2024. 49−57

[113] Hu Y F, Fu J J, Wen G H, Lv Y Z, Ren W. Distributed entropy-regularized multi-agent reinforcement learning with policy consensus. Automatica, 2024, 164: Article No. 111652 doi: 10.1016/j.automatica.2024.111652

[114] Kok J R, Vlassis N. Collaborative multiagent reinforcement learning by payoff propagation. Journal of Machine Learning Research, 2006, 7: 1789−1828

[115] Son K, Kim D, Kang W J, Hostallero D, Yi Y. QTRAN: Learning to factorize with transformation for cooperative multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 5887−5896

[116] Li W H, Wang X F, Jin B, Sheng J J, Hua Y, Zha H Y. Structured diversification emergence via reinforced organization control and hierarchical consensus learning. In: Proceedings of the 20th International Conference on Autonomous Agents and Multiagent Systems. Virtual Event: AAMAS, 2021. 773−781

[117] Mnih V, Badia A P, Mirza M, Graves A, Harley T, Lillicrap T P, et al. Asynchronous methods for deep reinforcement learning. In: Proceedings of the 33rd International Conference on Machine Learning. New York, USA: JMLR, 2016. 1928−1937

[118] Hu G Z, Zhu Y H, Zhao D B, Zhao M C, Hao J Y. Event-triggered communication network with limited-bandwidth constraint for multi-agent reinforcement learning. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(8): 3966−3978

[119] Ding S F, Du W, Ding L, Zhang J, Guo L L, An B. Robust multi-agent communication with graph information bottleneck optimization. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(5): 3096−3107 doi: 10.1109/TPAMI.2023.3337534

[120] Guo X D, Shi D M, Fan W H. Scalable communication for multi-agent reinforcement learning via transformer-based email mechanism. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. 126−134

[121] Tang Y J, Ren Z L, Li N. Zeroth-order feedback optimization for cooperative multi-agent systems. Automatica, 2023, 148: Article No. 110741 doi: 10.1016/j.automatica.2022.110741

[122] Rachmut B, Nelke S A, Zivan R. Asynchronous communication aware multi-agent task allocation. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. Article No. 30

[123] Yu L B, Qiu Y B, Yao Q M, Shen Y, Zhang X D, Wang J. Robust communicative multi-agent reinforcement learning with active defense. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence. Vancouver, Canada: AAAI, 2024. 17575−17582

[124] Lin Y, Li W H, Zha H Y, Wang B X. Information design in multi-agent reinforcement learning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1113

[125] Wang X C, Yang L, Chen Y Z, Liu X T, Hajiesmaili M, Towsley D, et al. Achieving near-optimal individual regret & low communications in multi-agent bandits. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[126] Xie Q M, Chen Y D, Wang Z R, Yang Z R. Learning zero-sum simultaneous-move Markov games using function approximation and correlated equilibrium. In: Proceedings of the 33rd Conference on Learning Theory. Graz, Austria: PMLR, 2020. 3674−3682

[127] Chen Z X, Zhou D R, Gu Q Q. Almost optimal algorithms for two-player zero-sum linear mixture Markov games. In: Proceedings of the 33rd International Conference on Algorithmic Learning Theory. Paris, France: PMLR, 2022. 227−261

[128] Bai Y, Jin C, Mei S, Yu T C. Near-optimal learning of extensive-form games with imperfect information. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 1337−1382

[129] Zhang Y Z, An B. Converging to team-maxmin equilibria in zero-sum multiplayer games. In: Proceedings of the 37th International Conference on Machine Learning. Vienna, Austria: JMLR, 2020. Article No. 1023

[130] Chen Y Q, Mao H Y, Mao J X, Wu S G, Zhang T L, Zhang B, et al. PTDE: Personalized training with distilled execution for multi-agent reinforcement learning. In: Proceedings of the 33rd International Joint Conference on Artificial Intelligence. Jeju, South Korea: IJCAI, 2024. 31−39

[131] Zheng L Y, Fiez T, Alumbaugh Z, Chasnov B, Ratliff L J. Stackelberg actor-critic: Game-theoretic reinforcement learning algorithms. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI, 2022. 9217−9224

[132] Wang J L, Jin X, Tang Y. Optimal strategy analysis for adversarial differential games. Electronic Research Archive, 2022, 30(10): 3692−3710 doi: 10.3934/era.2022189

[133] Plaksin A, Kalev V. Zero-sum positional differential games as a framework for robust reinforcement learning: Deep Q-learning approach. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: PMLR, 2024. 40869−40885

[134] Wang J L, Zhou Z, Jin X, Mao S, Tang Y. Matching-based capture-the-flag games for multiagent systems. IEEE Transactions on Cognitive and Developmental Systems, 2024, 16(3): 993−1005

[135] Daskalakis C, Foster D J, Golowich N. Independent policy gradient methods for competitive reinforcement learning. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2020. Article No. 464

[136] Osband I, Russo D, Van Roy B. (More) efficient reinforcement learning via posterior sampling. In: Proceedings of the 26th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: Curran Associates Inc., 2013. 3003−3011

[137] Xiong W, Zhong H, Shi C S, Shen C, Zhang T. A self-play posterior sampling algorithm for zero-sum Markov games. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 24496−24523

[138] Zhou Y C, Li J L, Zhu J. Posterior sampling for multi-agent reinforcement learning: Solving extensive games with imperfect information. In: Proceedings of the 8th International Conference on Learning Representations. Addis Ababa, Ethiopia: ICLR, 2020.

[139] Wei C Y, Lee C W, Zhang M X, Luo H P.
Last-iterate convergence of decentralized optimistic gradient descent/ascent in infinite-horizon competitive Markov games. In: Proceedings of the 34th Annual Conference on Learning Theory. Boulder, USA: PMLR, 2021. 4259−4299 [140] Wang X R, Yang C, Li S X, Li P D, Huang X, Chan H, et al. Reinforcement Nash equilibrium solver. In: Proceedings of the 33rd International Joint Conference on Artificial Intelligence. Jeju, South Korea: IJCAI, 2024. 265−273 [141] Xu H, Li K, Fu H B, Fu Q, Xing J L. AutoCFR: Learning to design counterfactual regret minimization algorithms. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Vancouver, Canada: AAAI, 2022. 5244−5251 [142] Zinkevich M, Johanson M, Bowling M, Piccione C. Regret minimization in games with incomplete information. In: Proceedings of the 20th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2007. 1729−1736 [143] Heinrich J, Silver D. Deep reinforcement learning from self-play in imperfect-information games. arXiv: 1603.01121, 2016. [144] Wang H, Luo H, Jiang Y C, Kaynak O. A performance recovery approach for multiagent systems with actuator faults in noncooperative games. IEEE Transactions on Industrial Informatics, 2024, 20(5): 7853−7861 doi: 10.1109/TII.2024.3363060 [145] Zhong Y F, Yuan Y, Yuan H H. Nash equilibrium seeking for multi-agent systems under DoS attacks and disturbances. IEEE Transactions on Industrial Informatics, 2024, 20(4): 5395−5405 doi: 10.1109/TII.2023.3332951 [146] Wang J M, Zhang J F, He X K. Differentially private distributed algorithms for stochastic aggregative games. Automatica, 2022, 142: Article No. 110440 doi: 10.1016/j.automatica.2022.110440 [147] Wang J M, Zhang J F. Differentially private distributed stochastic optimization with time-varying sample sizes. IEEE Transactions on Automatic Control, 2024, 69(9): 6341−6348 doi: 10.1109/TAC.2024.3379387 [148] Huang S J, Lei J L, Hong Y G. 
A linearly convergent distributed Nash equilibrium seeking algorithm for aggregative games. IEEE Transactions on Automatic Control, 2023, 68(3): 1753−1759 doi: 10.1109/TAC.2022.3154356 [149] 王健瑞, 黄家豪, 唐漾. 基于深度强化学习的不完美信息群智夺旗博弈. 中国科学: 技术科学, 2023, 53(3): 405−416 doi: 10.1360/SST-2021-0382Wang Jian-Rui, Huang Jia-Hao, Tang Yang. Swarm intelligence capture-the-flag game with imperfect information based on deep reinforcement learning. Scientia Sinica Technologica, 2023, 53(3): 405−416 doi: 10.1360/SST-2021-0382 [150] Erez L, Lancewicki T, Sherman U, Koren T, Mansour Y. Regret minimization and convergence to equilibria in general-sum Markov games. In: Proceedings of the 40th International Conference on Machine Learning. Honolulu, USA: JMLR, 2023. 9343−9373 [151] Jin C, Liu Q H, Wang Y H, Yu T C. V-learning——A simple, efficient, decentralized algorithm for multiagent RL. In: Proceedings of the 10th International Conference on Learning Representations. Virtual Event: ICLR, 2022. [152] Zhang B, Li L J, Xu Z W, Li D P, Fan G L. Inducing stackelberg equilibrium through spatio-temporal sequential decision-making in multi-agent reinforcement learning. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. Article No. 40 [153] Mao W C, Yang L, Zhang K Q, Basar T. On improving model-free algorithms for decentralized multi-agent reinforcement learning. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 15007−15049 [154] Ding D S, Wei C Y, Zhang K Q, Jovanovic M. Independent policy gradient for large-scale Markov potential games: Sharper rates, function approximation, and game-agnostic convergence. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 5166−5220 [155] Cui Q W, Zhang K Q, Du S S. Breaking the curse of multiagents in a large state space: RL in Markov games with independent linear function approximation. 
In: Proceedings of the 36th Annual Conference on Learning Theory. Bangalore, India: PMLR, 2023. 2651−2652 [156] Bakhtin A, Wu D J, Lerer A, Gray J, Jacob A P, Farina G, et al. Mastering the game of no-press diplomacy via human-regularized reinforcement learning and planning. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023. [157] Tennant E, Hailes S, Musolesi M. Modeling moral choices in social dilemmas with multi-agent reinforcement learning. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2023. Article No. 36 [158] Yang D K, Yang K, Wang Y Z, Liu J, Xu Z, Yin R B, et al. How2comm: Communication-efficient and collaboration-pragmatic multi-agent perception. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1093 -