
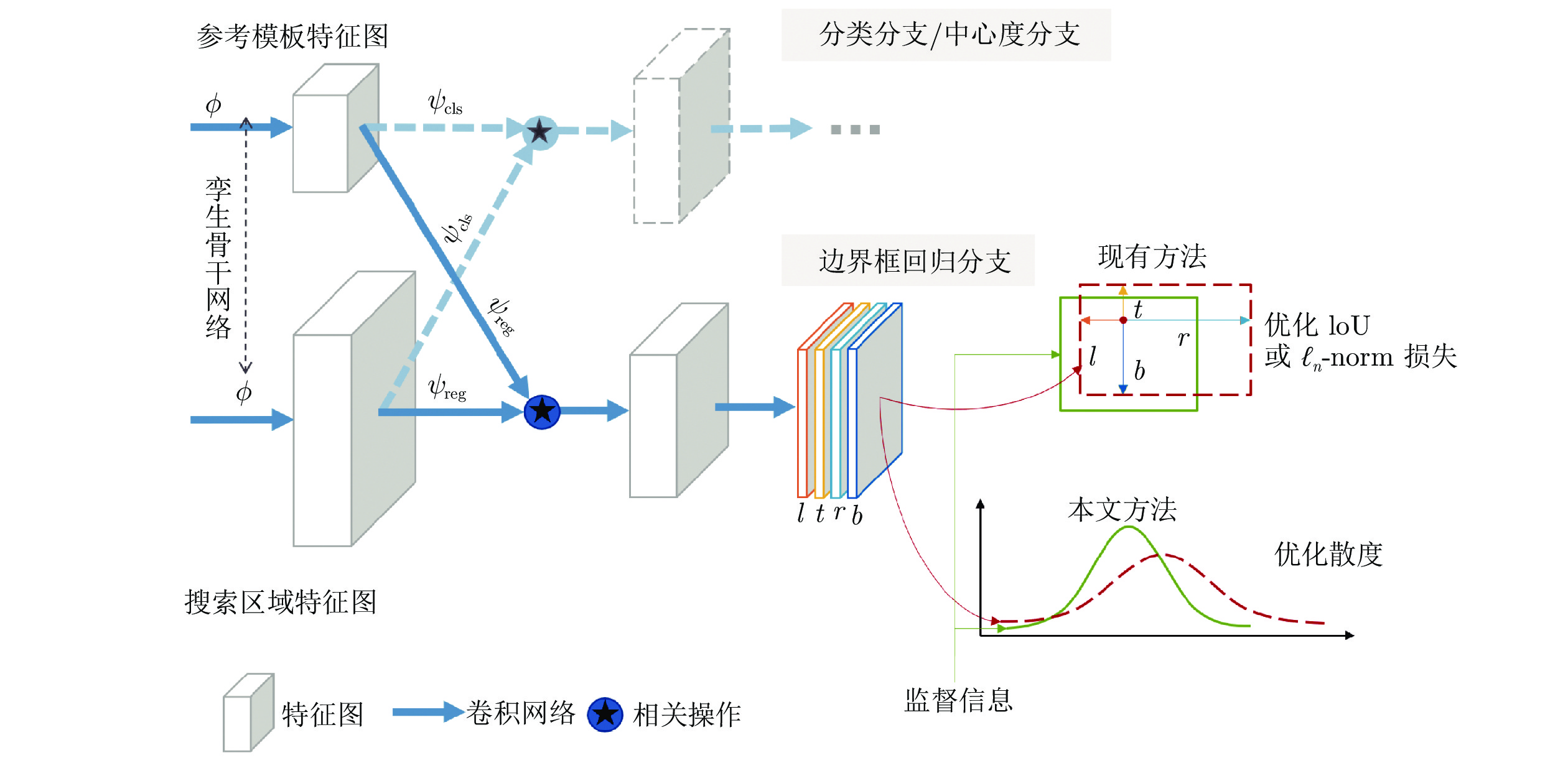

摘要: 边界框回归分支是深度目标跟踪器的关键模块, 其性能直接影响跟踪器的精度. 评价精度的指标之一是交并比(Intersection over union, IoU). 基于IoU的损失函数取代了

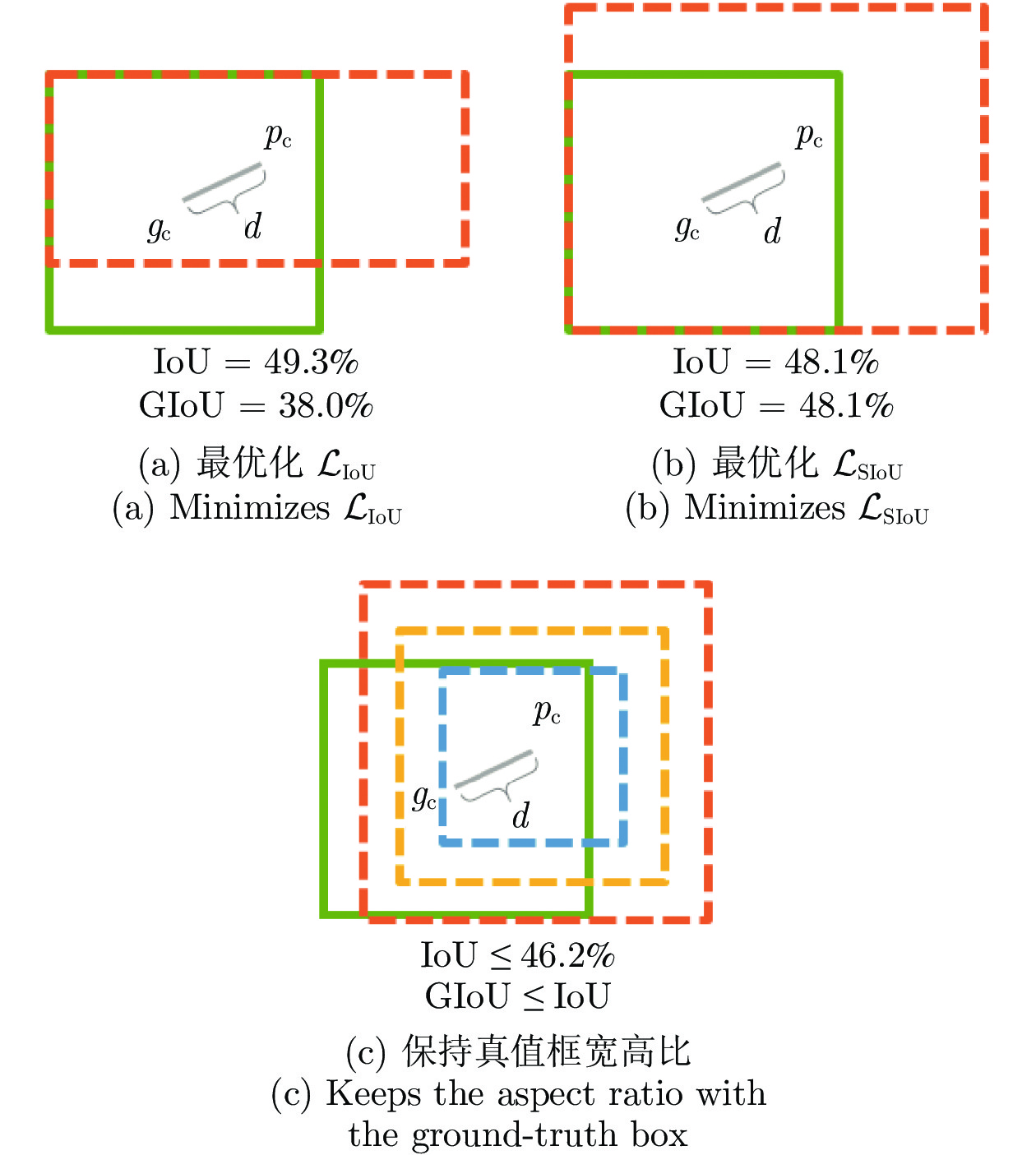

$ \ell_n $ -norm损失成为目前主流的边界框回归损失函数, 然而IoU损失函数存在2个固有缺陷: 1)当预测框与真值框不相交时IoU为常量 0, 无法梯度下降更新边界框的参数; 2)在IoU取得最优值时其梯度不存在, 边界框很难收敛到 IoU 最优处. 揭示了在回归过程中IoU最优的边界框各参数之间蕴含的定量关系, 指出在边界框中心处于特定位置时存在多种尺寸不同的边界框使IoU损失最优的情况, 这增加了边界框尺寸回归的不确定性. 从优化两个统计分布之间散度的视角看待边界框回归问题, 提出了光滑IoU (Smooth-IoU, SIoU)损失, 即构造了在全局上光滑(即连续可微)且极值唯一的损失函数, 该损失函数自然蕴含边界框各参数之间特定的最优关系, 其唯一取极值的边界框可使IoU达到最优. 光滑性确保了在全局上梯度存在使得边界框更容易回归到极值处, 而极值唯一确保了在全局上可梯度下降更新参数, 从而避开了IoU损失的固有缺陷. 提出的光滑损失可以很容易取代IoU损失集成到现有的深度目标跟踪器上训练边界框回归, 在 LaSOT、GOT-10k、TrackingNet、OTB2015和VOT2018测试基准上所取得的结果, 验证了光滑IoU损失的易用性和有效性.-

关键词:

- 光滑IoU损失 /

- $ \ell_n $ -norm损失 /

- 边界框回归 /

- 目标跟踪

Abstract: The bounding box regression branch is a critical module of deep visual object trackers, and its performance directly affects tracking accuracy. One of the metrics used to evaluate accuracy is intersection over union (IoU). IoU-based losses have replaced the $ \ell_n $-norm loss and become the mainstream loss functions for bounding box regression. However, the IoU loss has two inherent issues:

1) When the predicted box does not intersect the ground-truth box, IoU is constantly 0, so the box parameters cannot be updated via gradient descent.
2) The gradient does not exist at the IoU optimum, so it is difficult for the predicted box to converge to it.

We reveal the quantitative relationship among the parameters of the IoU-optimal bounding box during regression. We also point out that when the box center lies at specific positions, several boxes of different sizes make the IoU loss optimal, which increases the uncertainty of size regression.

Viewing bounding box regression as optimizing the divergence between two statistical distributions, we propose a smooth-IoU (SIoU) loss. It is a globally smooth (continuously differentiable) loss function with a unique extremum. The SIoU loss naturally encodes a specific optimal relationship among the box parameters, and the unique box that attains its extremum also makes IoU optimal. Smoothness guarantees that the gradient exists everywhere, which makes it easier to regress the predicted box to the extremum. The unique extremum ensures that the parameters can be updated via gradient descent over the whole domain. Together, these properties avoid the inherent defects of the IoU loss.

In addition, the SIoU loss can easily replace the IoU loss in existing deep trackers to train bounding box regression. Results on the LaSOT, GOT-10k, TrackingNet, OTB2015, and VOT2018 benchmarks verify the ease of use and effectiveness of the SIoU loss.
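The two IoU-loss defects described above can be checked numerically. The sketch below is an illustration, not the authors' code. It assumes axis-aligned boxes in a hypothetical `(x1, y1, x2, y2)` layout and the plain loss 1 − IoU. It also uses one-sided finite differences instead of automatic differentiation. The helper names `iou`, `iou_loss` and `one_sided_slopes` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical box layout for illustration: (x1, y1, x2, y2) with x1 < x2, y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width, 0 if disjoint
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height, 0 if disjoint
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def iou_loss(pred: Box, gt: Box) -> float:
    """Plain IoU loss, 1 - IoU (assumed form for this sketch)."""
    return 1.0 - iou(pred, gt)


def one_sided_slopes(pred: Box, gt: Box, k: int, h: float = 1e-6) -> Tuple[float, float]:
    """Left and right finite-difference slopes of the IoU loss w.r.t. coordinate k of pred."""
    def shifted(delta: float) -> Box:
        p: List[float] = list(pred)
        p[k] += delta
        return (p[0], p[1], p[2], p[3])

    base = iou_loss(pred, gt)
    left = (base - iou_loss(shifted(-h), gt)) / h
    right = (iou_loss(shifted(h), gt) - base) / h
    return left, right


gt: Box = (0.0, 0.0, 4.0, 2.0)  # ground truth of width W = 4

# Defect 1: a disjoint prediction has IoU = 0, so every slope is exactly 0.
far: Box = (10.0, 10.0, 12.0, 12.0)
disjoint_slopes = [one_sided_slopes(far, gt, k) for k in range(4)]

# Defect 2: at the optimum (pred == gt) the slopes w.r.t. x2 disagree,
# about -1/W from the left and +1/W from the right, i.e. a kink.
kink_left, kink_right = one_sided_slopes(gt, gt, k=2)

print(disjoint_slopes)
print(round(kink_left, 4), round(kink_right, 4))
```

In this example, the zero slopes mean gradient descent gets no signal for a non-overlapping prediction. The sign flip at the optimum means the loss is not differentiable there. These are the two issues the SIoU loss is designed to avoid.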

Key words:

- Smooth-IoU loss /

- $ \ell_n $-norm loss /

- bounding box regression /

- visual tracking


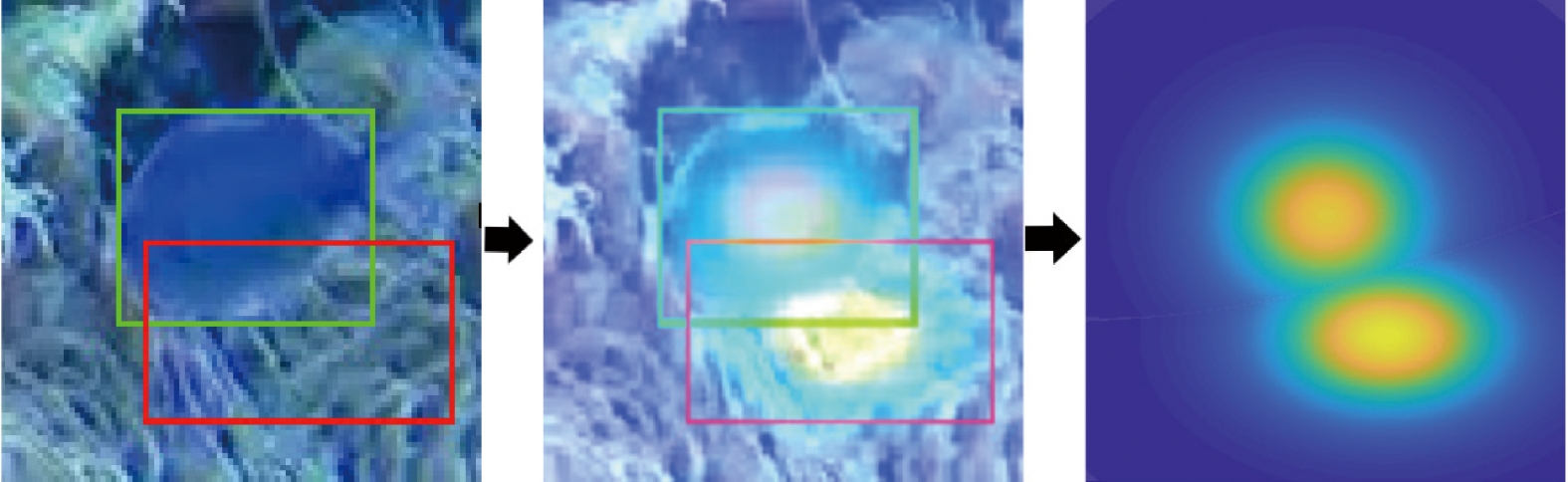

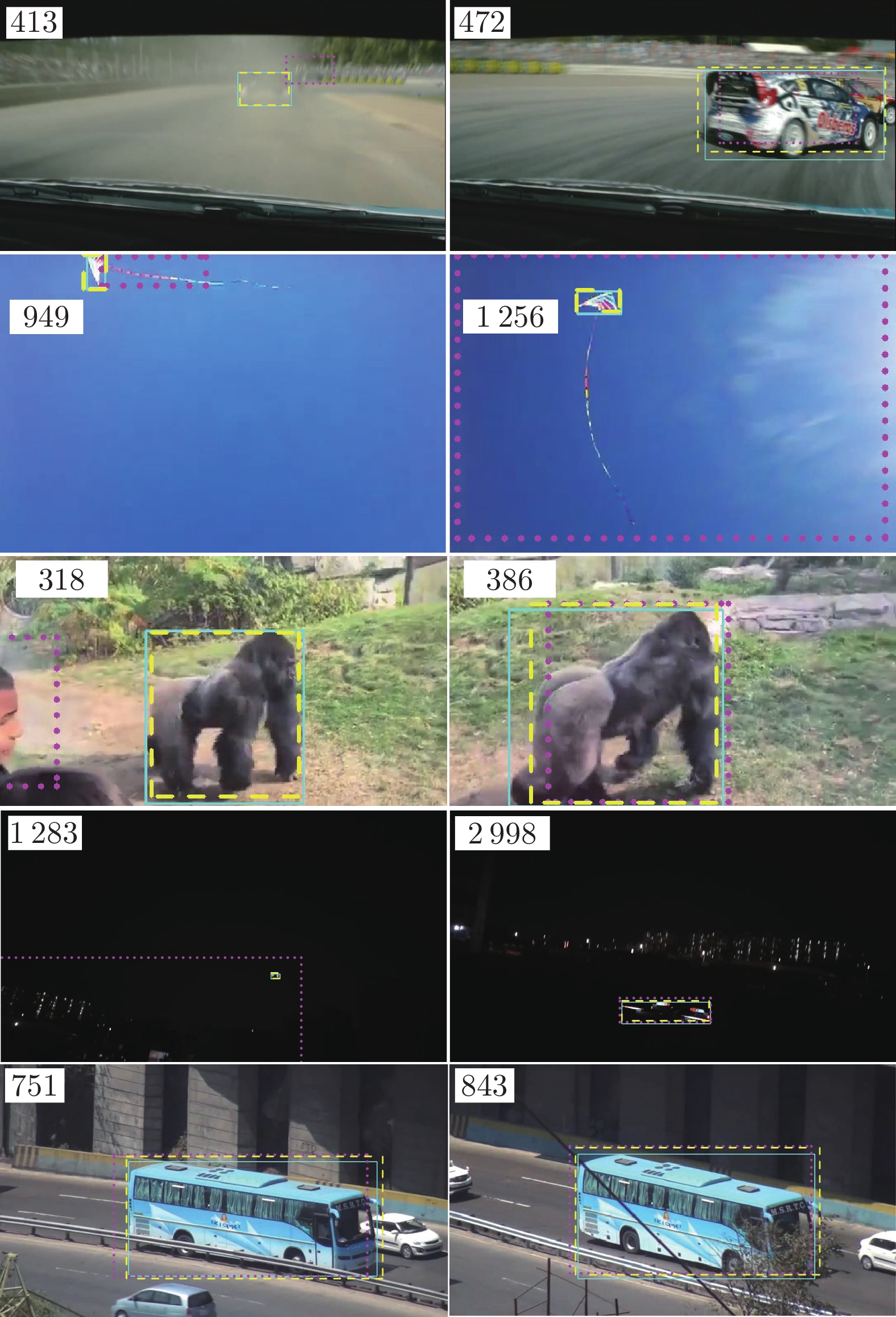

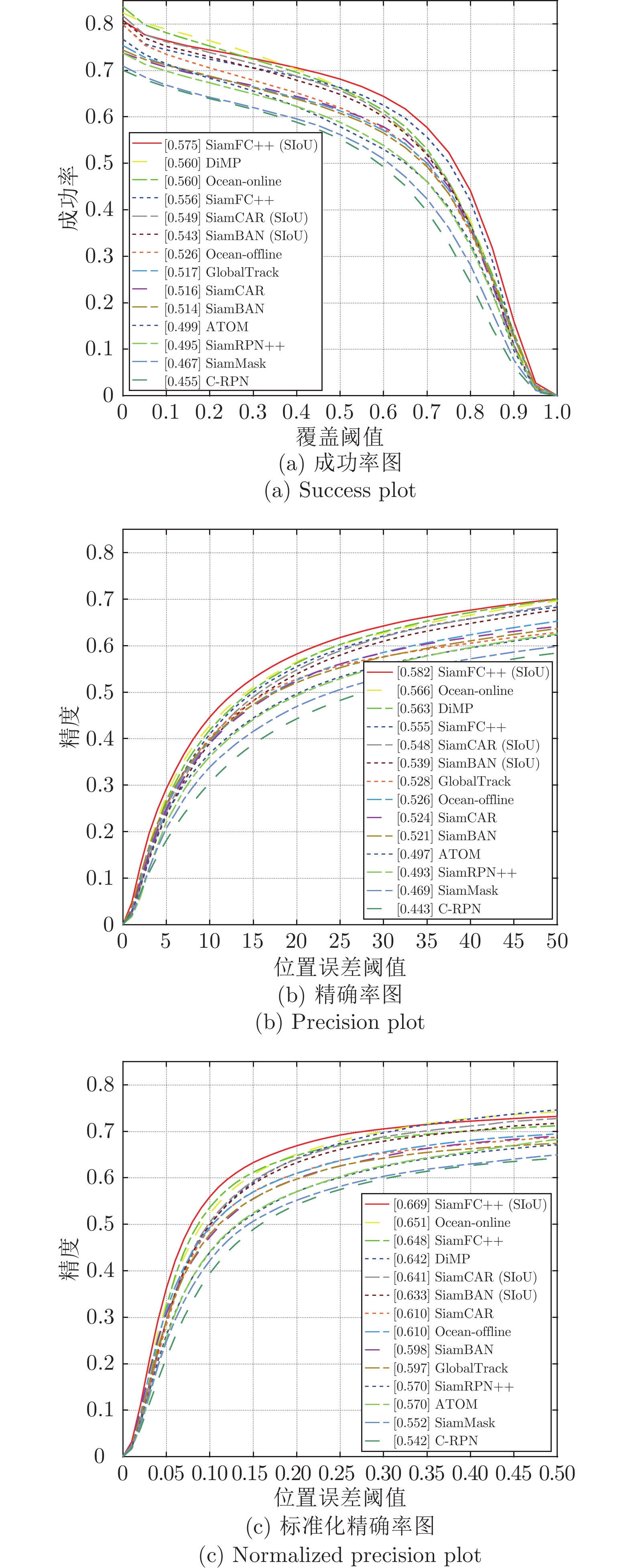

Fig. 11 Visualized tracking results on LaSOT of SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (dotted boxes) and with $\mathcal{L}_{\rm{SIoU}}$ (dashed boxes); solid boxes denote the ground truth.

Table 1 Comparison on the LaSOT test set between SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Success | Precision | Normalized precision |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 55.6 | 55.5 | 64.8 |
| $\mathcal{L}_{\rm{SIoU}}$ | 57.6 | 58.3 | 66.9 |
| Relative gain | 3.60 | 5.05 | 3.24 |
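The "Relative gain" rows appear to be computed as (SIoU score − IoU score) / IoU score × 100. This formula reproduces the Table 1 entries. A minimal sketch follows; the function name `relative_gain` is hypothetical.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Relative gain in percent of `improved` over `baseline`."""
    return (improved - baseline) / baseline * 100.0


# Table 1 (SiamFC++ on LaSOT): success, precision, normalized precision.
iou_scores = [55.6, 55.5, 64.8]
siou_scores = [57.6, 58.3, 66.9]
gains = [round(relative_gain(b, s), 2) for b, s in zip(iou_scores, siou_scores)]
print(gains)  # [3.6, 5.05, 3.24]
```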

Table 2 Comparison on the LaSOT test set between SiamBAN trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Success | Precision | Normalized precision |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 51.4 | 52.1 | 59.8 |
| $\mathcal{L}_{\rm{SIoU}}$ | 54.3 | 53.9 | 63.3 |
| Relative gain | 5.64 | 3.45 | 5.85 |

Table 3 Comparison on the LaSOT test set between SiamCAR trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Success | Precision | Normalized precision |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 51.6 | 52.4 | 61.0 |
| $\mathcal{L}_{\rm{SIoU}}$ | 54.9 | 54.8 | 63.1 |
| Relative gain | 6.39 | 4.58 | 3.44 |

Table 4 Performance comparison with state-of-the-art algorithms on LaSOT (%)

| Method | Success | Precision | Normalized precision |
| --- | --- | --- | --- |
| SiamBAN | 51.4 | 52.1 | 59.8 |
| ATOM | 51.5 | 50.5 | 57.6 |
| SiamCAR | 51.6 | 52.4 | 61.0 |
| SiamRPN++ | 49.6 | 49.1 | 56.9 |
| Ocean-online | 56.0 | 56.6 | 65.1 |
| SiamFC++ | 55.6 | 55.5 | 64.8 |
| DiMP | 56.8 | 56.4 | 64.3 |
| SiamBAN (SIoU) | 54.3 | 53.9 | 63.3 |
| SiamCAR (SIoU) | 54.2 | 53.7 | 63.1 |
| SiamFC++ (SIoU) | 57.6 | 58.3 | 66.9 |

Table 5 Comparison on the GOT-10k test set between SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | AO | $\rm{SR}_{0.50}$ | $\rm{SR}_{0.75}$ |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 59.5 | 69.5 | 47.9 |
| $\mathcal{L}_{\rm{SIoU}}$ | 61.7 | 74.7 | 46.8 |
| Relative gain | 3.69 | 7.48 | −2.29 |

Table 6 Comparison on the GOT-10k test set between SiamCAR trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | AO | $\rm{SR}_{0.50}$ | $\rm{SR}_{0.75}$ |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 58.1 | 68.3 | 44.1 |
| $\mathcal{L}_{\rm{SIoU}}$ | 60.2 | 72.6 | 46.4 |
| Relative gain | 3.61 | 6.29 | 5.22 |

Table 7 Performance comparison with state-of-the-art algorithms on GOT-10k (%)

| Method | AO | $\rm{SR}_{0.50}$ |
| --- | --- | --- |
| MDNet | 29.9 | 30.3 |
| SPM | 51.3 | 59.3 |
| ATOM | 55.6 | 63.4 |
| SiamCAR | 56.9 | 67.0 |
| SiamRPN++ | 51.7 | 61.8 |
| Ocean-online | 61.1 | 72.1 |
| D3S | 59.7 | 67.6 |
| SiamFC++ | 59.5 | 69.5 |
| DiMP-50 | 61.1 | 71.2 |
| SiamCAR (SIoU) | 60.2 | 72.6 |
| SiamFC++ (SIoU) | 61.7 | 74.7 |
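The GOT-10k tables report two metrics:

- Average overlap (AO): the mean IoU between predicted and ground-truth boxes.
- $\rm{SR}_t$: the percentage of frames whose IoU exceeds threshold $t$.

The sketch below computes both from per-frame IoUs. It pools all frames into one list, which is a simplification; the official GOT-10k toolkit may aggregate over sequences differently. The function `ao_and_sr` and the IoU values are made up for illustration.

```python
from typing import Dict, Sequence


def ao_and_sr(ious: Sequence[float], thresholds: Sequence[float] = (0.50, 0.75)) -> Dict[str, float]:
    """Average overlap and success rates (all in %) from per-frame IoUs.

    Simplified sketch: every frame is pooled into one list.
    """
    n = len(ious)
    result = {"AO": 100.0 * sum(ious) / n}
    for t in thresholds:
        # A frame counts as a success when its IoU is strictly above t.
        result[f"SR_{t:.2f}"] = 100.0 * sum(1 for v in ious if v > t) / n
    return result


# Made-up per-frame IoUs for illustration only.
frame_ious = [0.90, 0.80, 0.60, 0.40, 0.10]
scores = ao_and_sr(frame_ious)
print(scores)
```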

Table 8 Comparison on the TrackingNet test set between SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Precision | Normalized precision | Success |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 70.5 | 80.0 | 75.4 |
| $\mathcal{L}_{\rm{SIoU}}$ | 72.1 | 81.9 | 76.2 |
| Relative gain | 2.27 | 2.37 | 1.06 |

Table 9 Performance comparison with state-of-the-art algorithms on TrackingNet (%)

| Method | Success | Normalized precision |
| --- | --- | --- |
| MDNet | 60.6 | 70.5 |
| ATOM | 70.3 | 77.1 |
| DaSiamRPN | 63.8 | 73.3 |
| SiamRPN++ | 73.3 | 80.0 |
| UpdateNet | 67.7 | 75.2 |
| SPM | 71.2 | 77.8 |
| SiamFC++ | 75.4 | 80.0 |
| DiMP | 74.0 | 80.1 |
| SiamFC++ (SIoU) | 76.2 | 81.9 |

Table 10 Comparison on OTB2015 between SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Success | Normalized precision |
| --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 68.2 | 89.5 |
| $\mathcal{L}_{\rm{SIoU}}$ | 68.7 | 89.8 |
| Relative gain | 0.74 | 0.34 |

Table 11 Comparison on OTB2015 between SiamBAN trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$ (%)

| Metric | Success | Normalized precision |
| --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 69.6 | 91.0 |
| $\mathcal{L}_{\rm{SIoU}}$ | 69.9 | 91.5 |
| Relative gain | 0.43 | 0.55 |

Table 12 Comparison on VOT2018 between SiamFC++ trained with $\mathcal{L}_{\rm{IoU}}$ (original) and with $\mathcal{L}_{\rm{SIoU}}$

| Metric | Accuracy | Robustness | EAO |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{IoU}}$ | 0.586 | 0.201 | 0.427 |
| $\mathcal{L}_{\rm{SIoU}}$ | 0.582 | 0.196 | 0.400 |

Table 13 Percentage of LaSOT test frames whose IoU reaches each threshold, for models trained with SIoU and with other IoU-based losses (%)

| Model | ≥ 0.95 | ≥ 0.90 | ≥ 0.85 | ≥ 0.80 | ≥ 0.75 | ≥ 0.70 | ≥ 0.65 | ≥ 0.60 | ≥ 0.55 | ≥ 0.50 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SiamFC++ (SIoU) | 2.75 | 15.93 | 31.83 | 44.05 | 52.33 | 57.71 | 61.71 | 64.41 | 66.52 | 68.14 |
| SiamFC++ (DIoU) | 1.60 | 13.19 | 29.72 | 42.84 | 51.48 | 57.09 | 61.28 | 64.17 | 66.31 | 67.95 |
| SiamFC++ (GIoU) | 2.45 | 16.18 | 31.10 | 42.26 | 50.39 | 55.81 | 59.56 | 62.37 | 64.58 | 66.34 |
| SiamFC++ | 1.52 | 12.73 | 29.10 | 41.90 | 50.20 | 55.63 | 59.72 | 62.51 | 64.68 | 66.31 |
| SiamBAN (SIoU) | 1.18 | 10.79 | 24.86 | 36.64 | 45.50 | 51.87 | 56.45 | 60.03 | 62.77 | 64.84 |
| SiamBAN (GIoU) | 1.49 | 11.77 | 24.71 | 35.15 | 44.77 | 50.79 | 54.93 | 57.76 | 60.70 | 63.98 |
| SiamBAN | 1.98 | 12.89 | 25.40 | 35.57 | 43.46 | 49.29 | 53.38 | 56.53 | 58.92 | 60.78 |
| SiamCAR (SIoU) | 1.20 | 10.80 | 24.81 | 36.74 | 45.75 | 52.29 | 56.97 | 60.71 | 63.53 | 65.66 |
| SiamCAR (DIoU) | 1.20 | 10.91 | 25.10 | 36.62 | 45.04 | 51.47 | 56.18 | 59.89 | 62.70 | 64.83 |
| SiamCAR | 1.27 | 10.62 | 23.90 | 35.98 | 44.93 | 50.87 | 55.08 | 57.86 | 59.94 | 61.61 |
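Each column of Tables 13 and 14 is one point on a success curve: the percentage of frames whose IoU reaches a given threshold. The sketch below computes such a row from per-frame IoUs. It also approximates the area under the curve by averaging over 21 evenly spaced thresholds in [0, 1]. That threshold grid is an assumption in the style of OTB success plots, not necessarily the grid used by any benchmark toolkit. The function names and IoU values are made up for illustration.

```python
from typing import List, Sequence


def ratio_at_thresholds(ious: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """Percentage of frames whose IoU is >= each threshold (one row of Table 13/14)."""
    n = len(ious)
    return [100.0 * sum(1 for v in ious if v >= t) / n for t in thresholds]


def success_auc(ious: Sequence[float], num_thresholds: int = 21) -> float:
    """Area under the success curve, approximated by the mean success rate
    over evenly spaced thresholds in [0, 1] (assumed grid)."""
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    return sum(ratio_at_thresholds(ious, thresholds)) / num_thresholds


table_thresholds = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60, 0.55, 0.50]
frame_ious = [0.97, 0.91, 0.83, 0.72, 0.55, 0.30]  # made-up values
row = ratio_at_thresholds(frame_ious, table_thresholds)
print([round(r, 2) for r in row])
print(round(success_auc(frame_ious), 2))
```

Because the thresholds are swept from strict to loose, each row is non-decreasing from left to right, as in the tables. A loss can therefore win at high thresholds, where localization is tight, without winning at 0.50.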

Table 14 Percentage of GOT-10k test frames whose IoU reaches each threshold, for models trained with SIoU and with other IoU-based losses (%)

| Model | ≥ 0.95 | ≥ 0.90 | ≥ 0.85 | ≥ 0.80 | ≥ 0.75 | ≥ 0.70 | ≥ 0.65 | ≥ 0.60 | ≥ 0.55 | ≥ 0.50 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SiamFC++ (SIoU) | 0.94 | 8.18 | 22.01 | 35.76 | 46.83 | 55.71 | 62.16 | 67.40 | 71.39 | 74.68 |
| SiamFC++ (DIoU) | 0.72 | 7.56 | 21.10 | 35.11 | 46.86 | 55.45 | 61.68 | 66.82 | 70.74 | 73.86 |
| SiamFC++ (GIoU) | 0.97 | 7.20 | 21.80 | 34.19 | 45.85 | 54.50 | 59.24 | 63.48 | 66.02 | 69.49 |
| SiamCAR (SIoU) | 0.94 | 8.58 | 22.20 | 35.83 | 46.46 | 54.74 | 60.66 | 65.49 | 69.30 | 72.62 |
| SiamCAR (GIoU) | 1.13 | 6.72 | 19.23 | 34.78 | 45.37 | 53.73 | 59.98 | 64.96 | 68.95 | 71.96 |
| SiamCAR (DIoU) | 0.92 | 6.19 | 18.85 | 32.54 | 43.73 | 52.51 | 58.86 | 64.04 | 68.09 | 71.33 |
| SiamCAR | 0.81 | 8.02 | 20.76 | 33.88 | 44.07 | 51.87 | 57.21 | 61.35 | 64.99 | 68.31 |

Table 15 Ablation study of the regularization term and the surrogate function of $\mathcal{L}_{\rm{SIoU}}$ on GOT-10k (%)

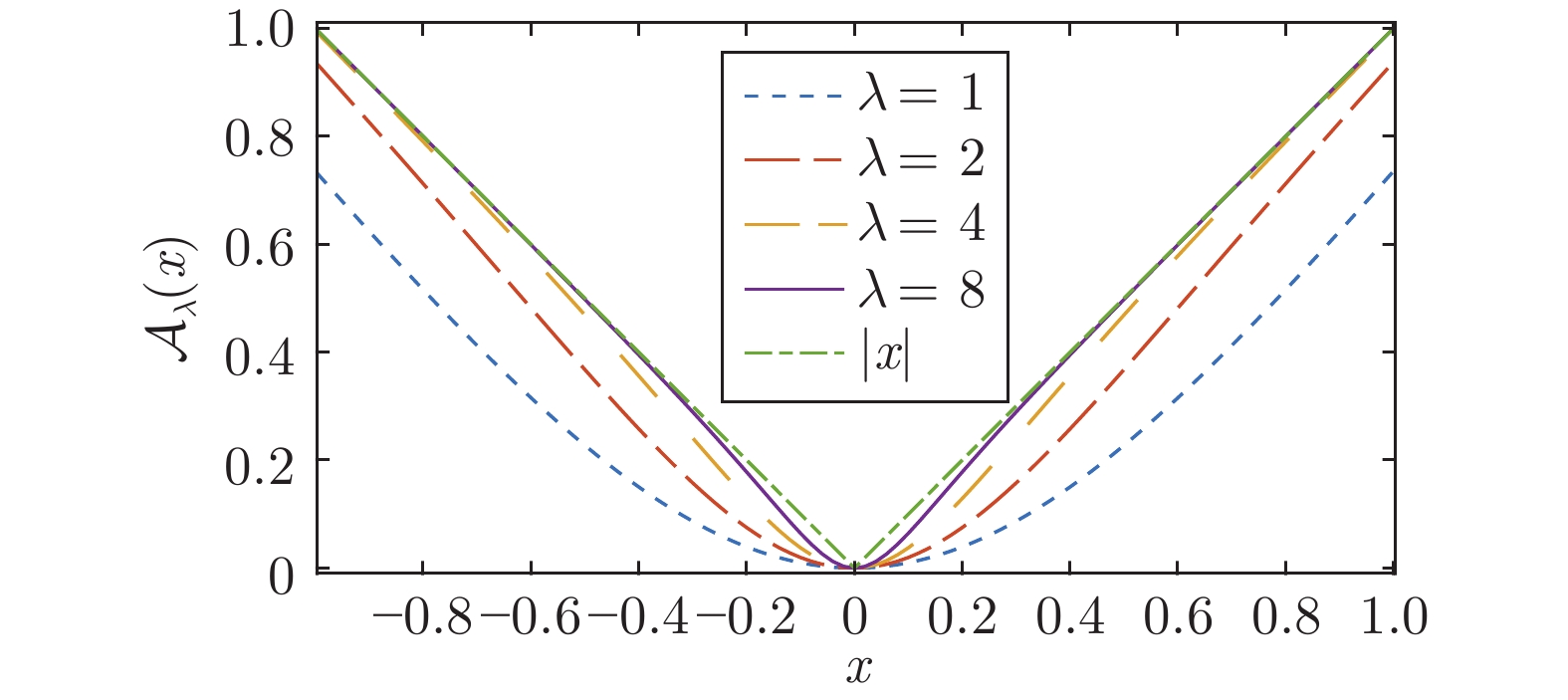

| Variant | AO | $\rm{SR}_{0.50}$ | $\rm{SR}_{0.75}$ |
| --- | --- | --- | --- |
| $\mathcal{L}_{\rm{SIoU}}$ (w/o $\mathcal{AR}$) | 59.9 | 72.1 | 46.3 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{AR}$) | 61.7 | 74.7 | 46.8 |
| Relative gain | 3.01 | 3.61 | 1.08 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{R}$) | 61.4 | 74.5 | 46.7 |
| Relative gain | 2.51 | 3.33 | 0.86 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{A}_2$) | 60.3 | 72.6 | 46.5 |
| Relative gain | 0.67 | 0.69 | 0.43 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{A}_4$) | 60.6 | 73.4 | 46.3 |
| Relative gain | 1.17 | 1.80 | 0 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{A}_8$) | 60.4 | 73.4 | 46.0 |
| Relative gain | 0.83 | 1.80 | −0.65 |
| $\mathcal{L}_{\rm{SIoU}}$ (w/ $\mathcal{A}_1$) | 58.9 | 71.3 | 43.7 |
| Relative gain | −1.17 | −1.11 | −5.62 |

