
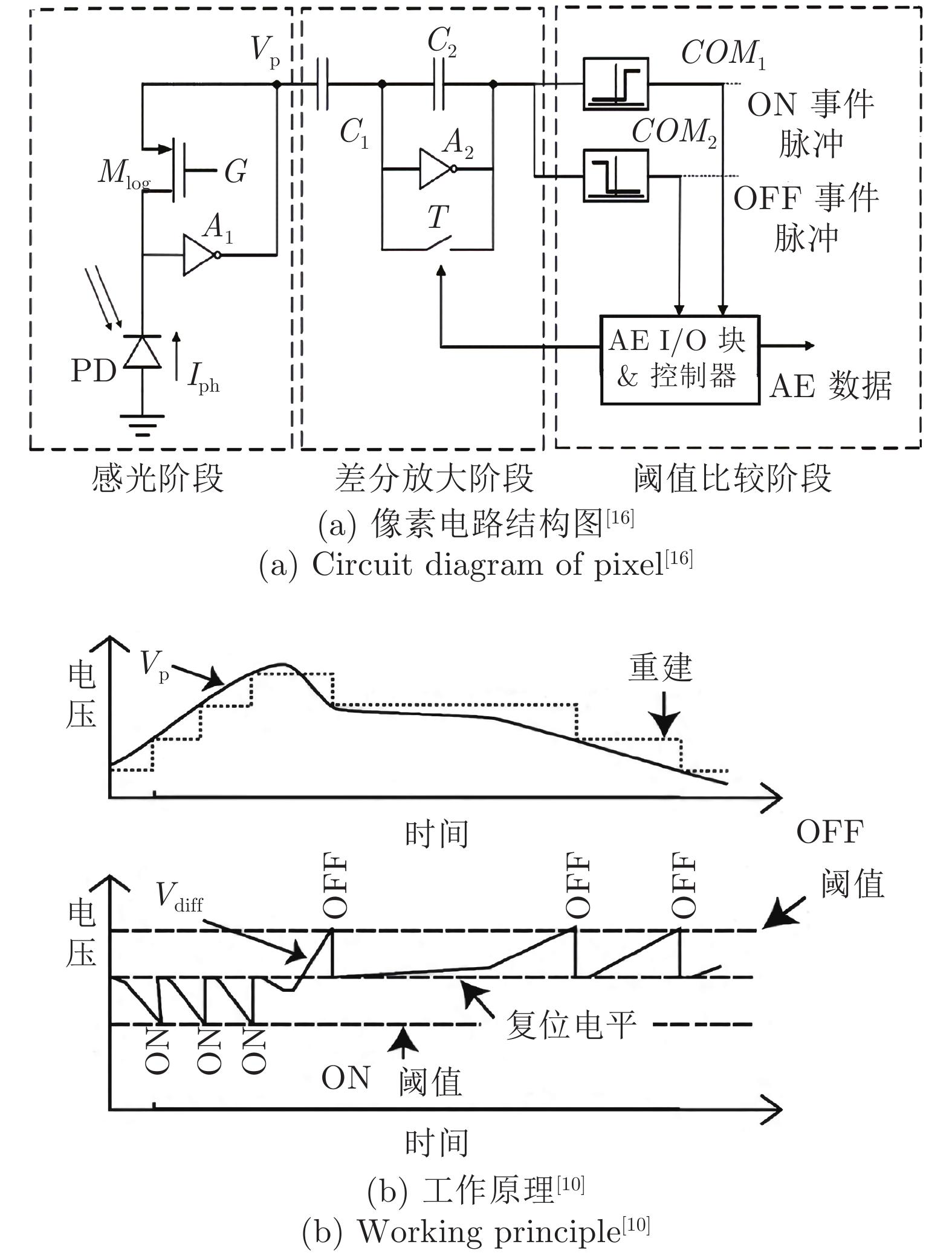

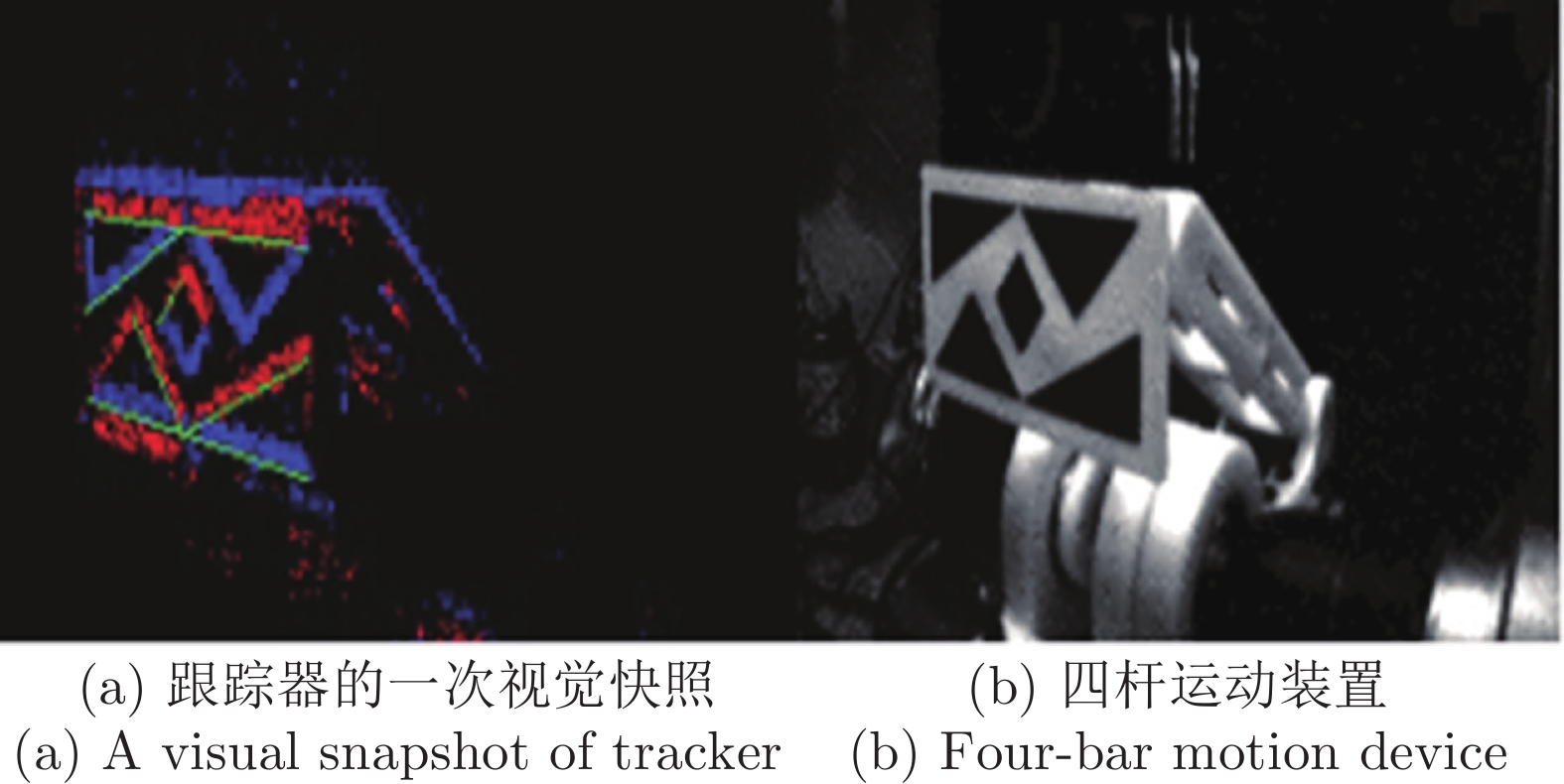

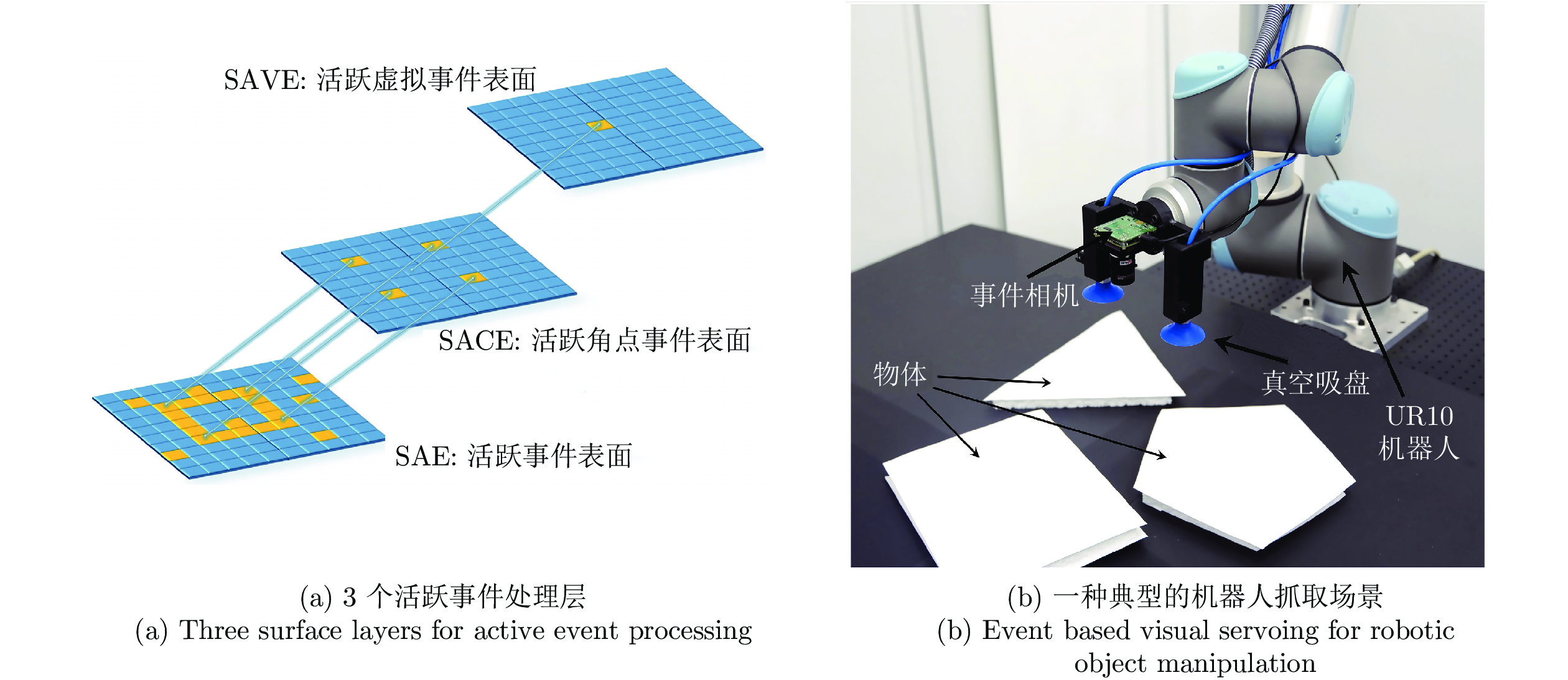

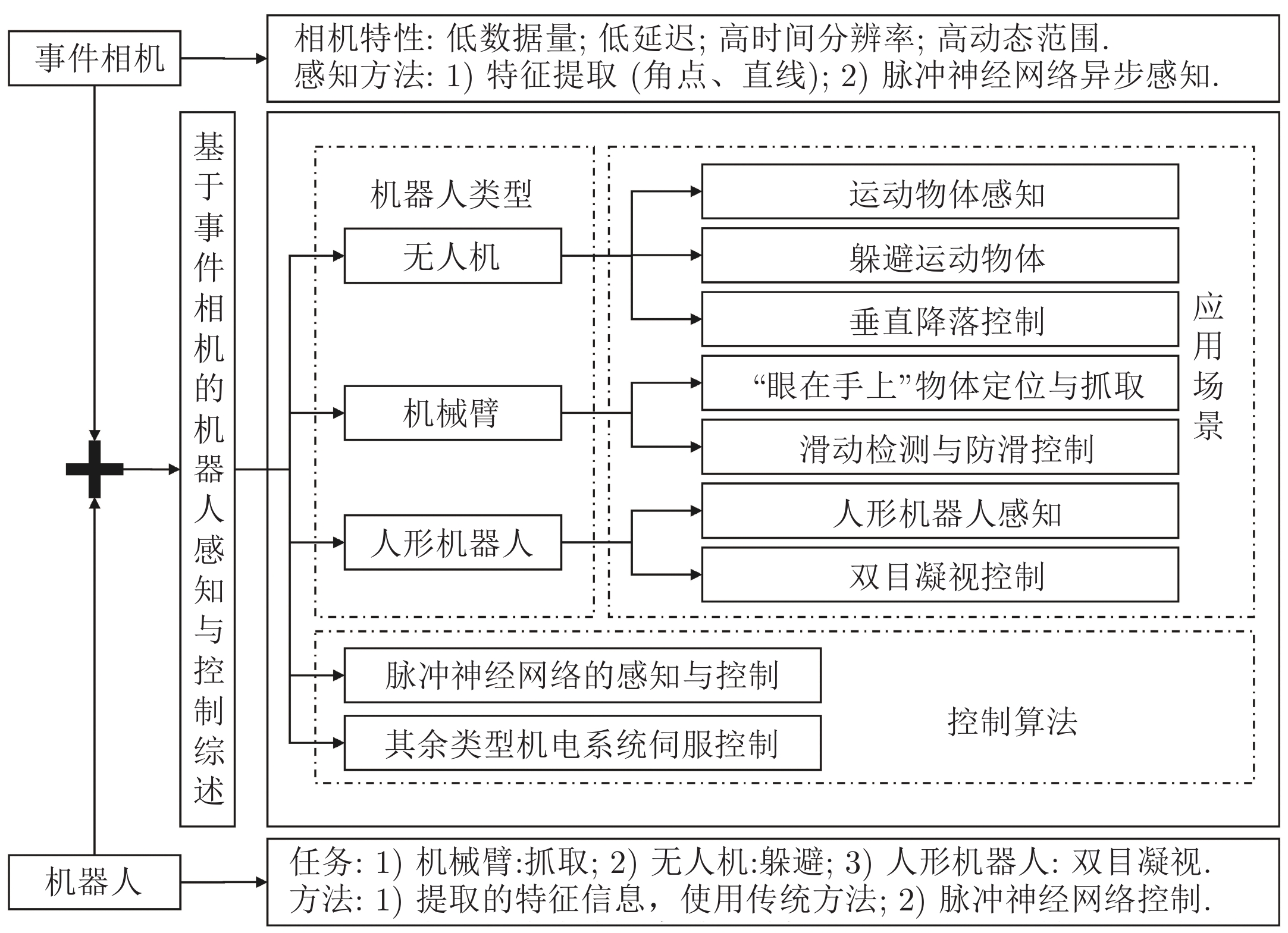

Abstract: The event camera is a new type of dynamic vision sensor in which each pixel independently detects changes in light intensity and asynchronously outputs an "event stream". Its small data volume, low latency and high dynamic range open new possibilities for robot control. This survey reviews recent research that combines event cameras with robot perception and motion control, covering unmanned aerial vehicles (UAVs), manipulators and humanoid robots. It focuses on the new control methods and principles enabled by event cameras and on the control performance they achieve, and it outlines the application prospects and development trends of event-camera-based robot control.
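The per-pixel behaviour described in the abstract is commonly modelled as follows: a pixel stores a reference log intensity and emits an event (timestamp, polarity) each time the current log intensity moves a contrast threshold away from that reference, after which the reference is updated. The sketch below simulates one idealized, noise-free pixel under that model. The threshold value, the fine sampling step used to mimic continuous time, and the function name `generate_events` are illustrative assumptions, not parameters of any particular sensor.

```python
import math
from typing import Callable, List, Tuple

def generate_events(intensity: Callable[[float], float], t_end: float,
                    dt: float = 1e-5, contrast: float = 0.2) -> List[Tuple[float, int]]:
    """Simulate one idealized event-camera pixel (assumed threshold model, no noise).

    Returns (timestamp, polarity) pairs: +1 for a brightness increase (ON event),
    -1 for a brightness decrease (OFF event).
    """
    events = []
    ref = math.log(intensity(0.0))  # reference log intensity stored at the last event
    for k in range(1, int(round(t_end / dt)) + 1):
        t = k * dt  # fine sampling stands in for the sensor's continuous-time operation
        log_i = math.log(intensity(t))
        # A large change can cross the threshold several times: one event per crossing.
        while log_i - ref >= contrast:
            ref += contrast
            events.append((t, +1))
        while ref - log_i >= contrast:
            ref -= contrast
            events.append((t, -1))
    return events

# A static scene produces no output at all; a sudden brightness step produces a short burst.
static = generate_events(lambda t: 1.0, t_end=2e-3)
step = generate_events(lambda t: 1.0 if t < 1e-3 else math.exp(1.1), t_end=2e-3)
print(len(static), step)
```

The example illustrates why the data volume is small: nothing is transmitted while the scene is unchanged, and a change is reported with the timestamp at which it happened rather than at the next frame boundary.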


Key words: event camera / low latency / robot control / perception and motion / new control algorithm


Fig. 2 Comparison of the outputs of a frame camera and an event camera in a rotating-disc scene [2-3]. The frame camera records data at every pixel in every frame, even though most of the disc carries no useful information; the event camera records only the position of the black dot on the disc, so it outputs events only for the informative motion.
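To make the data-volume argument of Fig. 2 concrete, the sketch below renders a hypothetical rotating-disc scene (a white disc filling a 64 × 64 view, with one black dot orbiting its centre) and compares the number of pixel values a frame camera reads out with the number of events an idealized event camera would emit. The resolution, geometry, contrast threshold and the simplification of at most one event per pixel per rendered frame are all assumptions, so only the order-of-magnitude gap is meaningful.

```python
import math

W = H = 64                 # hypothetical sensor resolution (pixels)
ORBIT, DOT = 20.0, 3.0     # hypothetical orbit radius and dot radius (pixels)
STEPS = 50                 # rendered frames over one revolution
CONTRAST = 0.2             # hypothetical event threshold (log-intensity units)

def render(angle: float):
    """White disc filling the view, with one black dot at the given angle."""
    cx = W / 2 + ORBIT * math.cos(angle)
    cy = H / 2 + ORBIT * math.sin(angle)
    return [[0.05 if (x + 0.5 - cx) ** 2 + (y + 0.5 - cy) ** 2 <= DOT ** 2 else 1.0
             for x in range(W)] for y in range(H)]

frames = [render(2 * math.pi * k / STEPS) for k in range(STEPS)]

# Frame camera: every pixel of every frame is read out, moving or not.
frame_values = STEPS * W * H

# Event camera (simplified): a pixel fires when its log intensity has moved at least
# CONTRAST away from the value stored at its previous event.
ref = [[math.log(v) for v in row] for row in frames[0]]
events = 0
for frame in frames[1:]:
    for y in range(H):
        for x in range(W):
            log_i = math.log(frame[y][x])
            if abs(log_i - ref[y][x]) >= CONTRAST:
                events += 1
                ref[y][x] = log_i

print(frame_values, events)
```

Only pixels at the leading and trailing edges of the moving dot ever fire, so the event count scales with the moving area rather than with the full sensor size.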

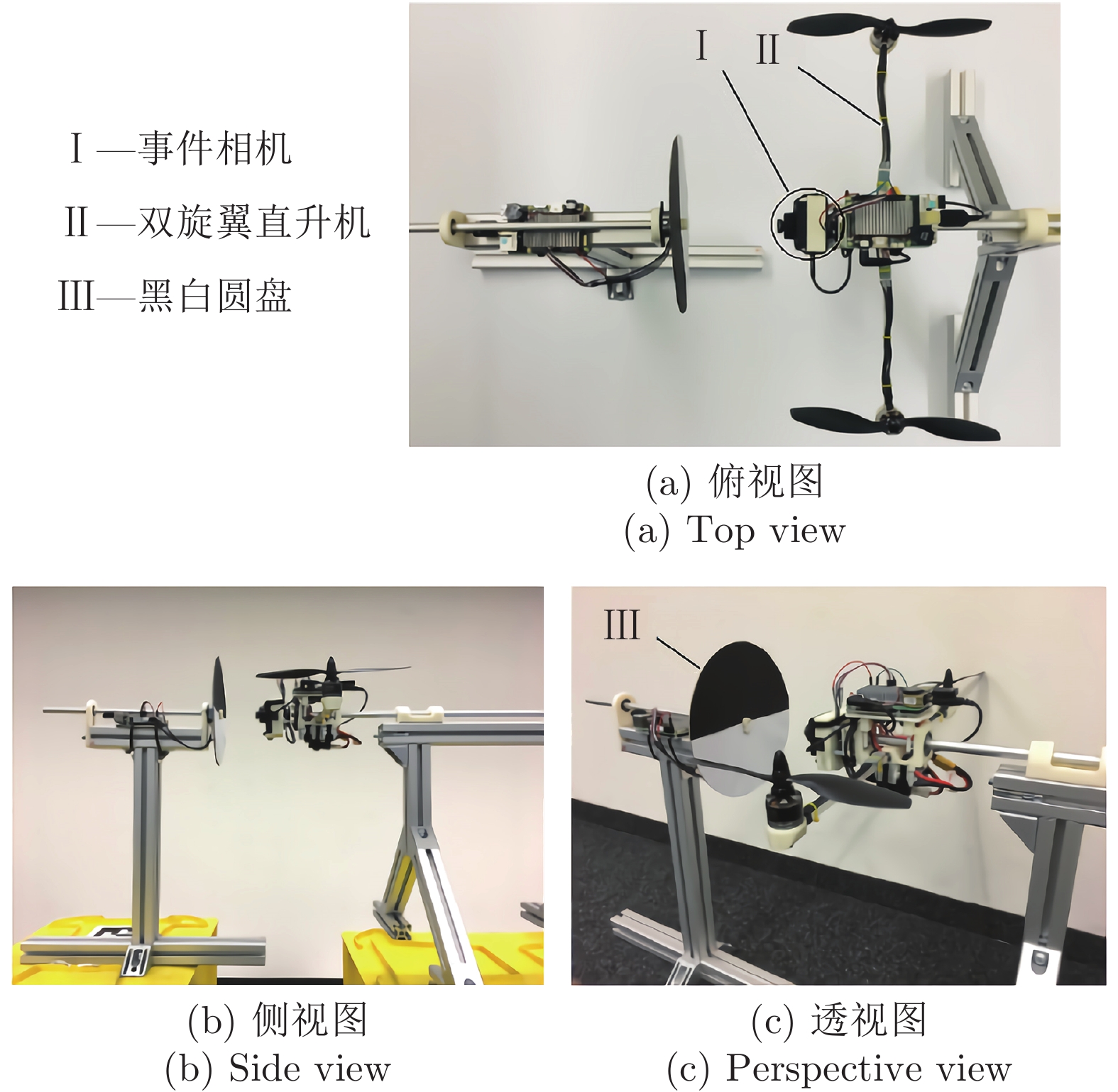

Fig. 6 Experiments on UAVs avoiding fast-moving obstacles. (a) UAV experimental platform [26]; (b) a real quadrotor using EVDodgeNet to dodge two flying obstacles: the arcs on the left and right of the UAV are the trajectories of two obstacles thrown at it simultaneously, and the middle trajectory is the UAV's dodging trajectory [27]; (c) network architecture [27]. EVDeblurNet is an event-frame denoising network that, trained only on simulated data, generalizes to real scenes without retraining or fine-tuning; EVHomographyNet is a homography estimation network that estimates the camera's ego-motion and is the first homography estimation scheme to use an event camera; EVSegFlowNet is an object segmentation and optical flow network that segments the moving objects in the scene and obtains their optical flow.
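Networks such as those in panel (c) operate on event frames rather than on individual events. One common way to build an event frame is to count, for each pixel and each polarity, the events that fall into a short time window. The sketch below shows that accumulation step; the two-channel count layout, the window length and the function name `events_to_frame` are assumptions for illustration and are not claimed to be the exact input representation of EVDodgeNet.

```python
from typing import List, Sequence, Tuple

Event = Tuple[int, int, float, int]  # (x, y, timestamp in seconds, polarity +1/-1)

def events_to_frame(events: Sequence[Event], width: int, height: int,
                    t_start: float, window: float) -> List[List[List[int]]]:
    """Accumulate events in [t_start, t_start + window) into a 2-channel count image.

    Channel 0 counts ON (+1) events and channel 1 counts OFF (-1) events;
    the result is indexed as frame[channel][y][x].
    """
    frame = [[[0] * width for _ in range(height)] for _ in range(2)]
    for x, y, t, p in events:
        if t_start <= t < t_start + window and 0 <= x < width and 0 <= y < height:
            frame[0 if p > 0 else 1][y][x] += 1
    return frame

events = [(1, 0, 0.001, +1), (1, 0, 0.002, +1), (0, 1, 0.003, -1), (2, 2, 0.020, +1)]
frame = events_to_frame(events, width=3, height=3, t_start=0.0, window=0.010)
print(frame[0][0][1], frame[1][1][0], frame[0][2][2])
```

The last event lies outside the 10 ms window and is dropped. Choosing the window length is a trade-off: a longer window collects more events per frame but smears fast-moving objects across more pixels.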

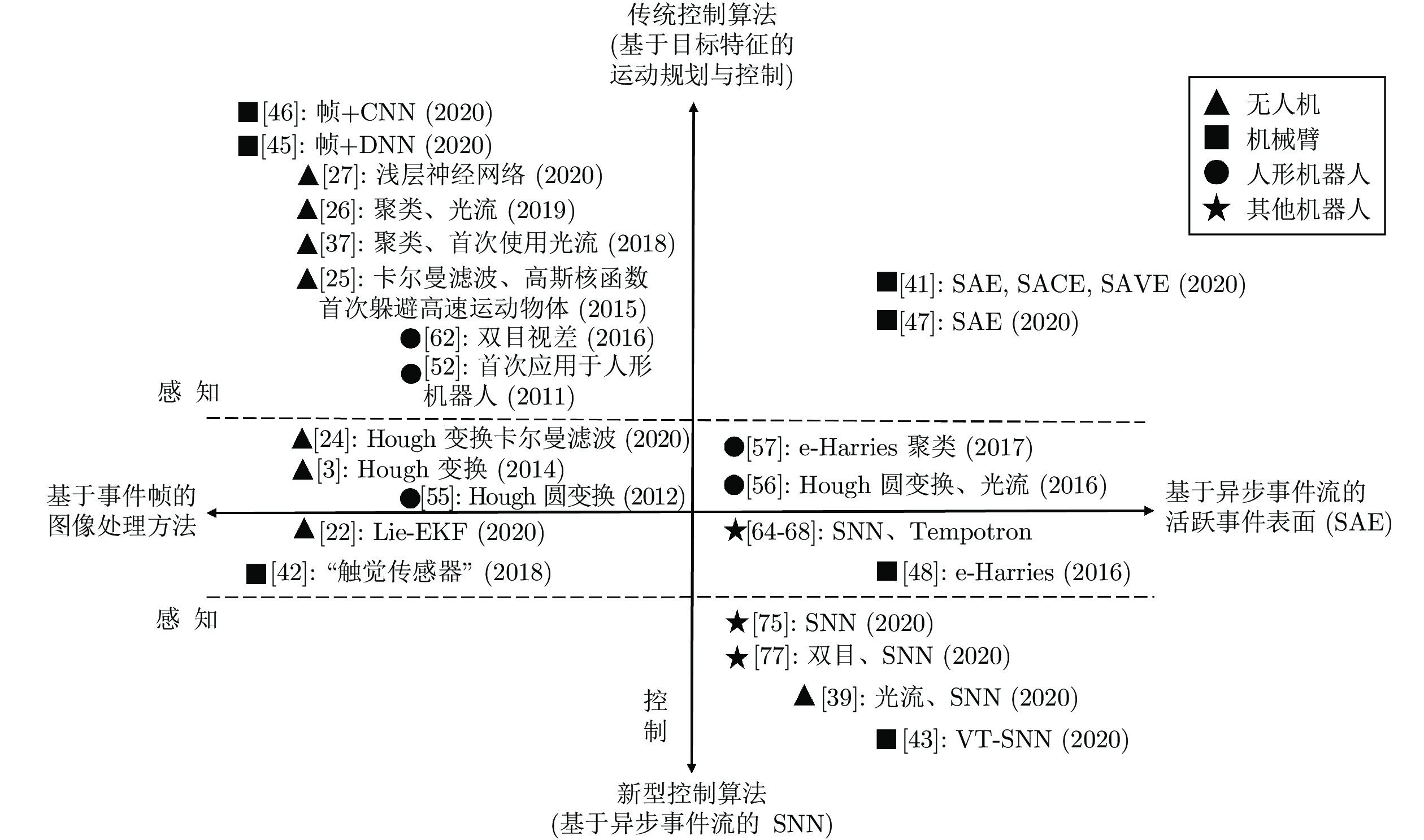

Fig. 18 Technology development map. The horizontal axis classifies perception techniques: the negative half denotes image-perception algorithms based on event frames, and the positive half denotes perception algorithms based on asynchronous event streams. The vertical axis classifies control techniques: the positive half denotes traditional motion planning and control based on object features, and the negative half denotes new control algorithms based on asynchronous event streams. Works that follow similar technical routes lie in the same quadrant; labels are grouped by robot type and arranged chronologically. Works inside the dashed line involve only event-camera-based perception.
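The two halves of the horizontal axis differ mainly in when computation happens: event-frame methods wait for a batch of events before processing, whereas event-stream methods update their state on every single event. The sketch below illustrates the latter with a hypothetical tracker that moves its target estimate a fixed fraction toward each event falling inside a gating radius. The class name, gain and radius are illustrative assumptions, not a method from the surveyed works.

```python
class EventDrivenTracker:
    """Hypothetical per-event tracker: the estimate changes with every event, no frames."""

    def __init__(self, x: float, y: float, gain: float = 0.1, radius: float = 10.0):
        self.x, self.y = x, y   # current target estimate (pixels)
        self.gain = gain        # fraction of the gap to the event closed per event
        self.radius = radius    # events farther away than this are treated as clutter
        self.last_t = None      # timestamp of the last accepted event

    def update(self, ex: int, ey: int, t: float) -> bool:
        """Process one event; return True if it was used to update the estimate."""
        if (ex - self.x) ** 2 + (ey - self.y) ** 2 > self.radius ** 2:
            return False
        self.x += self.gain * (ex - self.x)
        self.y += self.gain * (ey - self.y)
        self.last_t = t
        return True

tracker = EventDrivenTracker(0.0, 0.0)
for i in range(100):                       # a burst of events at (5, 0), 10 µs apart
    tracker.update(5, 0, i * 1e-5)
ignored = tracker.update(100, 100, 1e-3)   # a distant event is gated out
print(round(tracker.x, 3), tracker.y, ignored)
```

Because each update costs only a few arithmetic operations, the estimate is refreshed at the event rate instead of the frame rate, which is the property the event-stream quadrants of Fig. 18 exploit.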

Table 1 Performance comparison of several event cameras

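| Ref. | Event camera model | Year | Resolution (pixels) | Max. dynamic range (dB) | Power consumption (mW) | Latency (μs) | Pixel size (μm × μm) |
|---|---|---|---|---|---|---|---|
| [10] | DVS128 | 2008 | 128 × 128 | 120 | 132 ~ 231 | 12 | 40 × 40 |
| [17] | DVS1280 | 2020 | 1280 × 960 | 120 | 150 | - | 4.95 × 4.95 |
| [15] | ATIS | 2011 | 304 × 240 | 143 | 50 ~ 175 | 3 | 30 × 30 |
| [19] | DAVIS240 | 2014 | 240 × 180 | 130 | 5 ~ 14 | 12 | 18.5 × 18.5 |
| [20] | DAVIS346 | 2018 | 346 × 260 | 120 | 10 ~ 170 | 20 | 18.5 × 18.5 |
| [21] | CeleX-V | 2019 | 1280 × 800 | 120 | 390 ~ 470 | 8 | 9.8 × 9.8 |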
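The dynamic-range and pixel-size columns of Table 1 can be turned into more tangible quantities. With the usual image-sensor convention DR = 20 log10(I_max / I_min), 120 dB corresponds to an illuminance ratio of 10^6, and the 143 dB of ATIS to roughly 1.4 × 10^7. The sketch below applies this to each row and also multiplies resolution by pixel pitch (reading "40 × 40" as a 40 μm pitch) to estimate the size of each pixel array; that estimate ignores readout circuitry and is only a rough approximation.

```python
# Values transcribed from Table 1: (width px, height px, max dynamic range dB, pixel pitch μm)
CAMERAS = {
    "DVS128": (128, 128, 120, 40.0),
    "DVS1280": (1280, 960, 120, 4.95),
    "ATIS": (304, 240, 143, 30.0),
    "DAVIS240": (240, 180, 130, 18.5),
    "DAVIS346": (346, 260, 120, 18.5),
    "CeleX-V": (1280, 800, 120, 9.8),
}

def intensity_ratio(dr_db: float) -> float:
    """Max/min illuminance ratio for a dynamic range in dB (DR = 20 * log10(ratio))."""
    return 10 ** (dr_db / 20)

def array_size_mm(width_px: int, height_px: int, pitch_um: float) -> tuple:
    """Approximate pixel-array width and height in mm, ignoring peripheral circuitry."""
    return width_px * pitch_um / 1000, height_px * pitch_um / 1000

for name, (w, h, dr, pitch) in CAMERAS.items():
    aw, ah = array_size_mm(w, h, pitch)
    print(f"{name:9s} ratio ~ {intensity_ratio(dr):.1e}   array ~ {aw:.2f} x {ah:.2f} mm")
```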

Table 2 Selected UAV experimental scenarios

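| Approach | Ref. | UAV model | Event camera | Processor or controller | Event-stream processing method | Remarks |
|---|---|---|---|---|---|---|
| Event-frame based | [2] | Parrot AR.Drone 2.0 | DVS | Odroid U2 onboard computer | Hough transform | Tracking speed up to 1200°/s |
| Event-frame based | [22] | - | DVS | Standard PC | Kalman filter, Lie-EKF formulation | Tracking speed above 2.5 m/s, acceleration above 25.8 g |
| Event-frame based | [24] | Self-assembled | DAVIS 240C | Intel Upboard, Lumenier F4 AIO flight controller | Hough transform, Kalman filter | Tracking speed up to 1600°/s |
| Event-frame based | [27] | Intel Aero Ready to Fly Drone | DAVIS 240C, DAVIS 240B | NVIDIA TX2 CPU+GPU | Shallow neural network | Collision prediction time 60 ms |
| Event-frame based | [37] | Custom MavTec | DVS128 | Lisa/MX, Odroid XU4 | Clustering, optical flow | - |
| Event-stream based | [25] | Self-assembled | Stereo DVS128 | Odroid U3 quad-core computer | Gaussian kernel function, Kalman filter | Collision prediction time 250 ms |
| Event-stream based | [26] | Custom, based on DJI F330 | Insightness SEEM1 | Intel Upboard, Lumenier F4 AIO flight controller | DBSCAN clustering, optical flow | Collision prediction time 68 ms (at 2 m), 183 ms (at 3 m) |
| Event-stream based | [28] | Custom | Insightness SEEM1 | Qualcomm Snapdragon Flight, NVIDIA Jetson TX2 | DBSCAN clustering, optical flow, Kalman filter | Collision prediction time 3.5 ms |
| Event-stream based | [35] | Based on DJI F450 | DAVIS 346 | PixRacer, Khadas VIM3 | Hough transform, Kalman filter | Planning time 2.95 ms |
| Event-stream based | [39] | Parrot Bebop2 | - | Dual-core ARM Cortex-A9 processor | Spiking neural network, optical flow | Neuromorphic controller performs well |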
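Kalman filtering appears in several rows of Table 2 ([22], [24], [25], [28], [35]), typically to smooth and predict the position of a tracked object or feature between measurements. The sketch below is a minimal one-dimensional constant-velocity Kalman filter with a white-acceleration process-noise model. The model, the noise parameters `q` and `r` and the function name are illustrative assumptions and do not reproduce the cited systems (for example, [22] uses a Lie-group EKF formulation).

```python
from typing import List, Sequence, Tuple

def kalman_cv(measurements: Sequence[float], dt: float,
              q: float = 1.0, r: float = 0.5) -> List[Tuple[float, float]]:
    """1D constant-velocity Kalman filter; returns (position, velocity) after each update.

    State [x, v], transition F = [[1, dt], [0, 1]], measurement H = [1, 0].
    q: white-acceleration noise intensity, r: measurement noise variance (both assumed).
    """
    x, v = measurements[0], 0.0               # initialise from the first measurement
    p00, p01, p10, p11 = 1.0, 0.0, 0.0, 1.0   # initial covariance P = I
    out = []
    for z in measurements[1:]:
        # Predict: x <- F x, P <- F P F^T + Q
        x = x + dt * v
        n00 = p00 + dt * (p10 + p01) + dt * dt * p11 + q * dt ** 4 / 4
        n01 = p01 + dt * p11 + q * dt ** 3 / 2
        n10 = p10 + dt * p11 + q * dt ** 3 / 2
        n11 = p11 + q * dt ** 2
        # Update: K = P H^T / (H P H^T + r), state += K * innovation, P <- (I - K H) P
        s = n00 + r
        k0, k1 = n00 / s, n10 / s
        innovation = z - x
        x += k0 * innovation
        v += k1 * innovation
        p00, p01 = (1 - k0) * n00, (1 - k0) * n01
        p10, p11 = n10 - k1 * n00, n11 - k1 * n01
        out.append((x, v))
    return out

dt = 0.1
ramp = [2.0 * k * dt for k in range(200)]   # target moving at 2 units/s, noise-free
x_hat, v_hat = kalman_cv(ramp, dt, q=10.0, r=0.01)[-1]
print(round(x_hat, 3), round(v_hat, 3))
```

Although the filter starts with a zero velocity estimate, it recovers the target's velocity from position measurements alone; this predicted velocity is what allows a controller to anticipate where an obstacle will be rather than react to where it was.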

Table 3 Selected experimental equipment based on event cameras

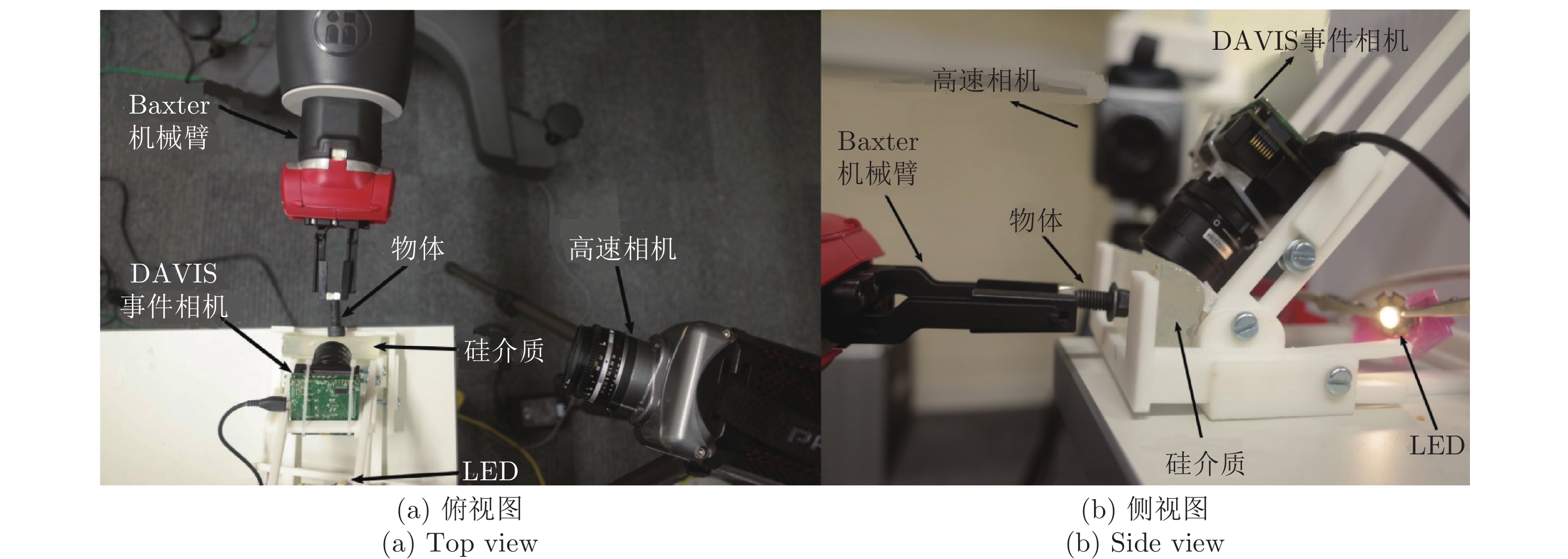

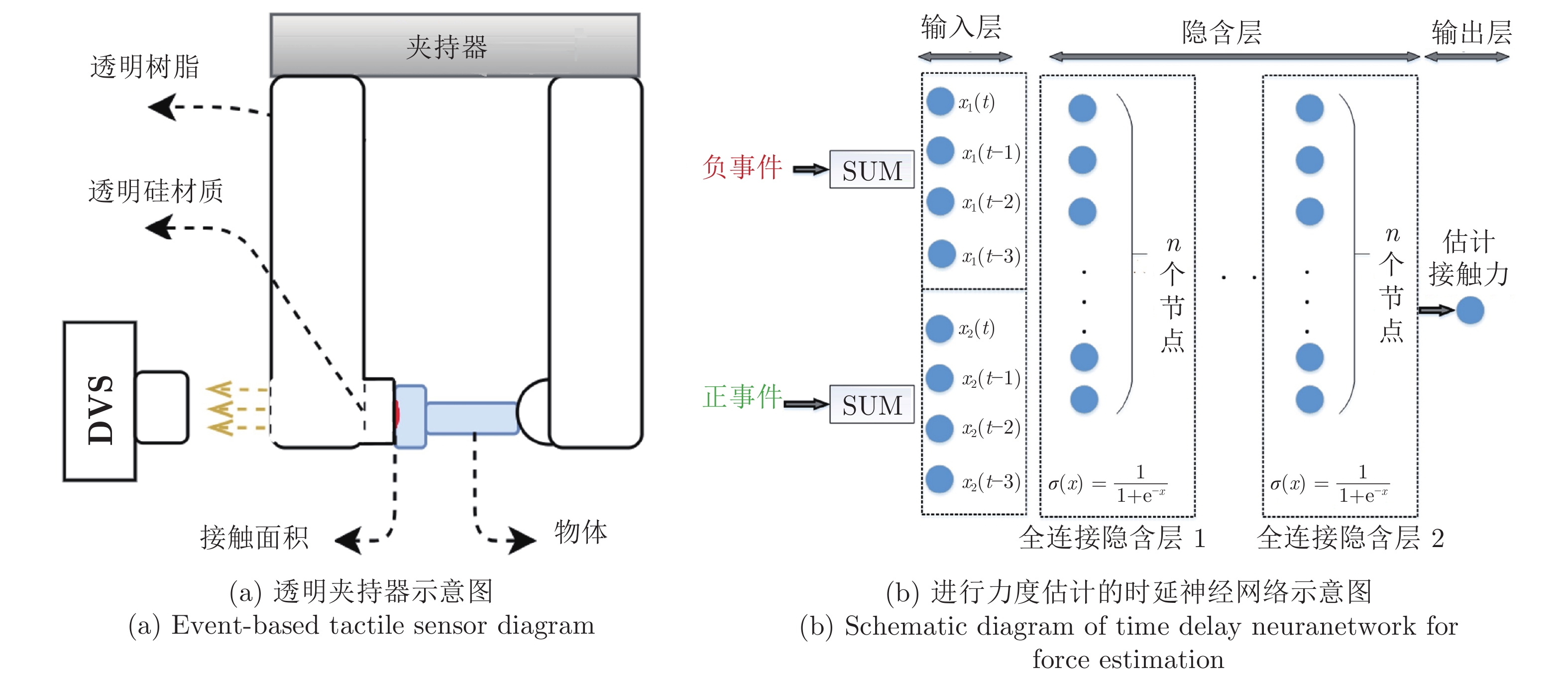

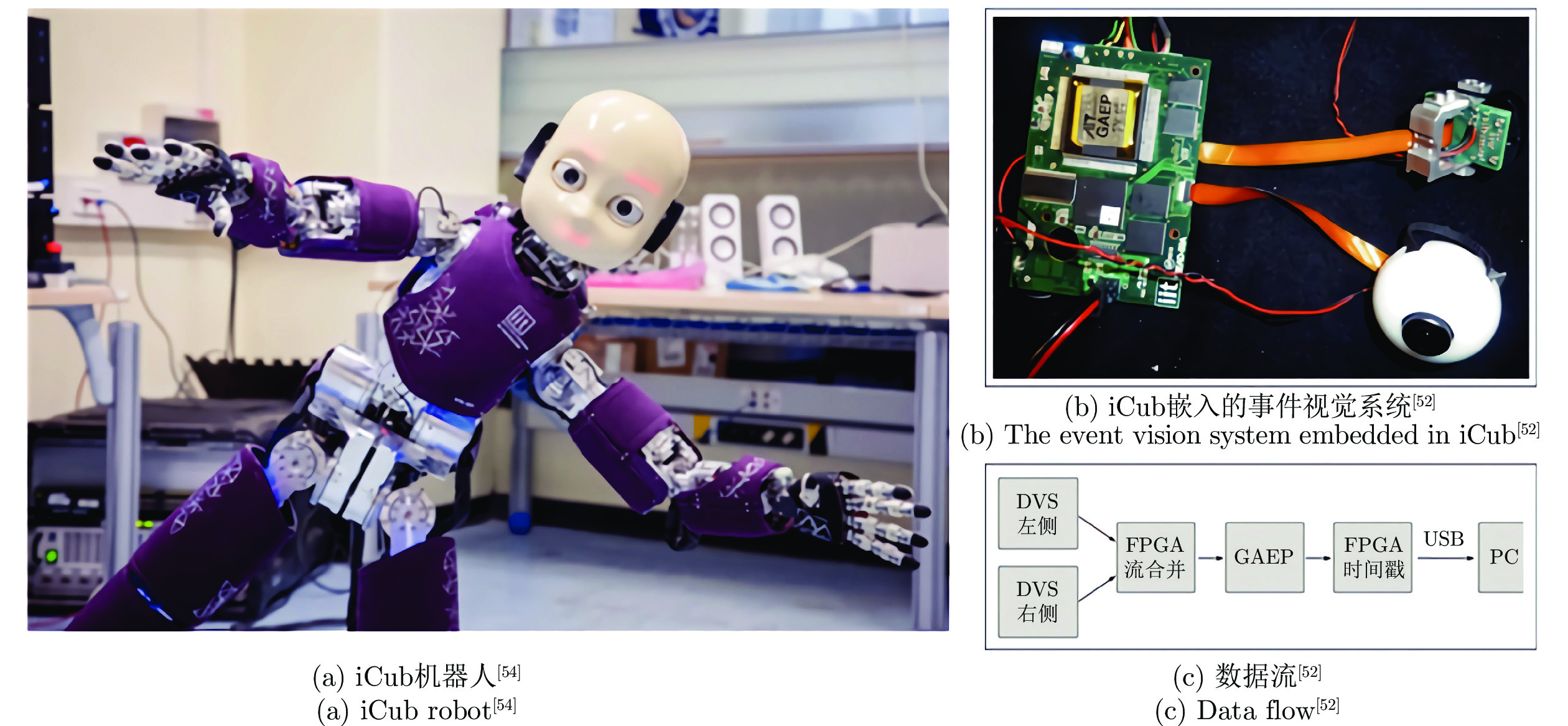

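| Ref. | Sensors | Robot arm / actuator | Method |
|---|---|---|---|
| [41] | DAVIS240C | UR10 | Event stream |
| [43] | Prophesee Gen3, NeuTouch | 7-DOF Franka Emika Panda arm | Event stream + SNN |
| [42] | DAVIS240C | Baxter robot arm | Event frame |
| [45] | DAVIS240C | AX-12A Dynamixel servo motor | Event frame + DNN |
| [46] | DAVIS240C, ATI F/T sensor (Nano17) | Baxter robot arm | Event frame + CNN |
| [47] | DAVIS240C, ATI F/T sensor (Nano17) | Baxter dual-arm robot | Event frame |
| [49] | DVS128 | - | Event frame |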
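Table 3 pairs event streams with spiking neural networks (SNNs) in [43]. The basic SNN unit, the leaky integrate-and-fire (LIF) neuron, maps naturally onto events: each input event adds charge, the membrane potential decays exponentially between events, and an output spike fires when a threshold is crossed. The sketch below is a generic single LIF neuron updated only at event times; the weight, time constant and threshold are assumed values, and it is not the network used in [43].

```python
import math
from typing import Iterable, List

def lif_neuron(input_times: Iterable[float], weight: float = 0.3,
               tau: float = 0.01, threshold: float = 1.0) -> List[float]:
    """Event-driven leaky integrate-and-fire neuron; returns output spike times (s)."""
    v, t_last, out = 0.0, None, []
    for t in sorted(input_times):
        if t_last is not None:
            v *= math.exp(-(t - t_last) / tau)  # exact leak since the previous input event
        v += weight                             # integrate the new input event
        t_last = t
        if v >= threshold:                      # fire, then reset the membrane potential
            out.append(t)
            v = 0.0
    return out

burst = [0.000, 0.001, 0.002, 0.003]   # dense input events, 1 ms apart
sparse = [0.0, 0.1, 0.2, 0.3]          # same number of events, 100 ms apart
print(lif_neuron(burst), lif_neuron(sparse))
```

With these parameters the neuron acts as a coincidence detector: a dense burst of events (for instance, many events triggered at once by a sudden contact or slip) drives it over threshold, while the same number of sparse, isolated events leaks away without producing a spike.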

[1] Posch C, Serrano-Gotarredona T, Linares-Barranco B, Delbruck T. Retinomorphic event-based vision sensors: Bioinspired cameras with spiking output. Proceedings of the IEEE, 2014, 102(10): 1470-1484 doi: 10.1109/JPROC.2014.2346153

[2] Mueggler E, Huber B, Scaramuzza D. Event-based, 6-DOF pose tracking for high-speed maneuvers. In: Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems. Chicago, USA: IEEE, 2014. 2761−2768

[3] Cho D I, Lee T J. A review of bioinspired vision sensors and their applications. Sensors and Materials, 2015, 27(6): 447-463

[4] Mahowald M. VLSI Analogs of Neuronal Visual Processing: A Synthesis of Form and Function [Ph.D. dissertation], California Institute of Technology, USA, 1992.

[5] Boahen K. A retinomorphic chip with parallel pathways: Encoding increasing, on, decreasing, and off visual signals. Analog Integrated Circuits and Signal Processing, 2002, 30(2): 121-135 doi: 10.1023/A:1013751627357

[6] Kramer J. An integrated optical transient sensor. IEEE Transactions on Circuits and Systems II: Analog and Digital Signal Processing, 2002, 49(9): 612-628 doi: 10.1109/TCSII.2002.807270

[7] Rüedi P F, Heim P, Kaess F, Grenet E, Heitger F, Burgi P Y, et al. A 128 × 128 Pixel 120-dB dynamic-range vision-sensor chip for image contrast and orientation extraction. IEEE Journal of Solid-State Circuits, 2003, 38(12): 2325-2333 doi: 10.1109/JSSC.2003.819169

[8] Luo Q, Harris J G, Chen Z J. A time-to-first spike CMOS image sensor with coarse temporal sampling. Analog Integrated Circuits and Signal Processing, 2006, 47(3): 303-313 doi: 10.1007/s10470-006-5676-5

[9] Chi Y M, Mallik U, Clapp M A, Choi E, Cauwenberghs G, Etienne-Cummings R. CMOS camera with in-pixel temporal change detection and ADC. IEEE Journal of Solid-State Circuits, 2007, 42(10): 2187-2196 doi: 10.1109/JSSC.2007.905295

[10] Lichtsteiner P, Posch C, Delbruck T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE Journal of Solid-State Circuits, 2008, 43(2): 566-576 doi: 10.1109/JSSC.2007.914337

[11] Gallego G, Delbrück T, Orchard G, Bartolozzi C, Taba B, Censi A, et al. Event-based vision: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(1): 154-180 doi: 10.1109/TPAMI.2020.3008413

[12] Amir A, Taba B, Berg D, Melano T, McKinstry J, Di Nolfo C, et al. A low power, fully event-based gesture recognition system. In: Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 7388−7397

[13] Conradt J, Cook M, Berner R, Lichtsteiner P, Douglas R J, Delbruck T. A pencil balancing robot using a pair of AER dynamic vision sensors. In: Proceedings of the 2009 IEEE International Symposium on Circuits and Systems. Taipei, China: IEEE, 2009. 781−784

[14] Xu H Z, Gao Y, Yu F, Darrell T. End-to-end learning of driving models from large-scale video datasets. In: Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 3530−3538

[15] Posch C, Matolin D, Wohlgenannt R. A QVGA 143 dB dynamic range frame-free PWM image sensor with lossless pixel-level video compression and time-domain CDS. IEEE Journal of Solid-State Circuits, 2011, 46(1): 259-275 doi: 10.1109/JSSC.2010.2085952

[16] Hu Sheng-Long. SLAM for Autonomous Vehicles Based on Stereo Vision in the City Scene with Dynamic Objects [Master thesis], Harbin Institute of Technology, China, 2020.

[17] Suh Y, Choi S, Ito M, Kim J, Lee Y, Seo J, et al. A 1280 × 960 dynamic vision sensor with a 4.95-μm pixel pitch and motion artifact minimization. In: Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS). Seville, Spain: IEEE, 2020. 1−5

[18] Posch C, Matolin D, Wohlgenannt R. An asynchronous time-based image sensor. In: Proceedings of the 2008 IEEE International Symposium on Circuits and Systems (ISCAS). Seattle, USA: IEEE, 2008. 2130−2133

[19] Brandli C, Berner R, Yang M H, Liu S C, Delbruck T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE Journal of Solid-State Circuits, 2014, 49(10): 2333-2341 doi: 10.1109/JSSC.2014.2342715

[20] Taverni G, Moeys D P, Li C H, Cavaco C, Motsnyi V, Bello D S S, Delbruck T. Front and back illuminated dynamic and active pixel vision sensors comparison. IEEE Transactions on Circuits and Systems II: Express Briefs, 2018, 65(5): 677-681

[21] Chen S S, Guo M H. Live demonstration: CeleX-V: A 1 M pixel multi-mode event-based sensor. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Long Beach, USA: IEEE, 2019. 1682−1683

[22] Chamorro Hernández W O, Andrade-Cetto J, Solà Ortega J. High-speed event camera tracking. In: Proceedings of the 31st British Machine Vision Virtual Conference (BMVC). Virtual Event, UK, 2020. 1−12

[23] Deray J, Solà J. Manif: A micro Lie theory library for state estimation in robotics applications. The Journal of Open Source Software, 2020, 5(46): 1371

[24] Dimitrova R S, Gehrig M, Brescianini D, Scaramuzza D. Towards low-latency high-bandwidth control of quadrotors using event cameras. In: Proceedings of the 2020 IEEE International Conference on Robotics and Automation. Paris, France: IEEE, 2020. 4294−4300

[25] Mueggler E, Baumli N, Fontana F, Scaramuzza D. Towards evasive maneuvers with quadrotors using dynamic vision sensors. In: Proceedings of the 2015 European Conference on Mobile Robots (ECMR). Lincoln, UK: IEEE, 2015. 1−8

[26] Falanga D, Kim S, Scaramuzza D. How fast is too fast? The role of perception latency in high-speed sense and avoid. IEEE Robotics and Automation Letters, 2019, 4(2): 1884-1891 doi: 10.1109/LRA.2019.2898117

[27] Sanket N J, Parameshwara C M, Singh C D, Kuruttukulam A V, Fermüller C, Scaramuzza D, et al. EVDodgeNet: Deep dynamic obstacle dodging with event cameras. In: Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA). Paris, France: IEEE, 2020. 10651−10657

[28] Falanga D, Kleber K, Scaramuzza D. Dynamic obstacle avoidance for quadrotors with event cameras. Science Robotics, 2020, 5(40): Article No. eaaz9712

[29] Mitrokhin A, Fermüller C, Parameshwara C, Aloimonos Y. Event-based moving object detection and tracking. In: Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 1−9

[30] Khatib O. Real-time obstacle avoidance for manipulators and mobile robots. Autonomous Robot Vehicles. New York: Springer, 1986. 396−404

[31] Lin Y, Gao F, Qin T, Gao W L, Liu T B, Wu W, et al. Autonomous aerial navigation using monocular visual-inertial fusion. Journal of Field Robotics, 2018, 35(1): 23-51 doi: 10.1002/rob.21732

[32] Oleynikova H, Honegger D, Pollefeys M. Reactive avoidance using embedded stereo vision for MAV flight. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA). Seattle, USA: IEEE, 2015. 50−56

[33] Barry A J, Florence P R, Tedrake R. High-speed autonomous obstacle avoidance with pushbroom stereo. Journal of Field Robotics, 2018, 35(1): 52-68 doi: 10.1002/rob.21741

[34] Huang A S, Bachrach A, Henry P, Krainin M, Maturana D, Fox D, et al. Visual odometry and mapping for autonomous flight using an RGB-D camera. Robotics Research. Cham: Springer, 2016. 235−252

[35] Eguíluz A G, Rodríguez-Gómez J P, Martínez-de Dios J R, Ollero A. Asynchronous event-based line tracking for time-to-contact maneuvers in UAS. In: Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Las Vegas, USA: IEEE, 2020. 5978−5985

[36] Lee D N, Bootsma R J, Land M, Regan D, Gray R. Lee's 1976 paper. Perception, 2009, 38(6): 837-858 doi: 10.1068/pmklee

[37] Pijnacker Hordijk B J, Scheper K Y W, De Croon G C H E. Vertical landing for micro air vehicles using event-based optical flow. Journal of Field Robotics, 2018, 35(1): 69-90 doi: 10.1002/rob.21764

[38] Scheper K Y W, De Croon G C H E. Evolution of robust high speed optical-flow-based landing for autonomous MAVs. Robotics and Autonomous Systems, 2020, 124: Article No. 103380

[39] Hagenaars J J, Paredes-Vallés F, Bohté S M, de Croon G C H E. Evolved neuromorphic control for high speed divergence-based landings of MAVs. IEEE Robotics and Automation Letters, 2020, 5(4): 6239-6246 doi: 10.1109/LRA.2020.3012129

[40] Tavanaei A, Ghodrati M, Kheradpisheh S R, Masquelier T, Maida A. Deep learning in spiking neural networks. Neural Networks, 2019, 111: 47-63 doi: 10.1016/j.neunet.2018.12.002

[41] Muthusamy R, Ayyad A, Halwani M, Swart D, Gan D M, Seneviratne L, et al. Neuromorphic eye-in-hand visual servoing. IEEE Access, 2021, 9: 55853-55870 doi: 10.1109/ACCESS.2021.3071261

[42] Rigi A, Baghaei Naeini F, Makris D, Zweiri Y. A novel event-based incipient slip detection using dynamic active-pixel vision sensor (DAVIS). Sensors, 2018, 18(2): Article No. 333

[43] Taunyazov T, Sng W, Lim B, See H H, Kuan J, Ansari A F, et al. Event-driven visual-tactile sensing and learning for robots. In: Proceedings of the 16th Robotics: Science and Systems 2020. Virtual Event/Corvallis, Oregon, USA, 2020.

[44] Davies M, Srinivasa N, Lin T H, Chinya G, Cao Y Q, Choday S H, et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro, 2018, 38(1): 82-99 doi: 10.1109/MM.2018.112130359

[45] Baghaei Naeini F, AlAli A M, Al-Husari R, Rigi A, Al-Sharman M K, Makris D, et al. A novel dynamic-vision-based approach for tactile sensing applications. IEEE Transactions on Instrumentation and Measurement, 2020, 69(5): 1881-1893 doi: 10.1109/TIM.2019.2919354

[46] Baghaei Naeini F, Makris D, Gan D M, Zweiri Y. Dynamic-vision-based force measurements using convolutional recurrent neural networks. Sensors, 2020, 20(16): Article No. 4469

[47] Muthusamy R, Huang X Q, Zweiri Y, Seneviratne L, Gan D M. Neuromorphic event-based slip detection and suppression in robotic grasping and manipulation. IEEE Access, 2020, 8: 153364-153384 doi: 10.1109/ACCESS.2020.3017738

[48] Vasco V, Glover A, Bartolozzi C. Fast event-based Harris corner detection exploiting the advantages of event-driven cameras. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, Korea (South): IEEE, 2016. 4144−4149

[49] Kumagai K, Shimonomura K. Event-based tactile image sensor for detecting spatio-temporal fast phenomena in contacts. In: Proceedings of the 2019 IEEE World Haptics Conference (WHC). Tokyo, Japan: IEEE, 2019. 343−348

[50] Monforte M, Arriandiaga A, Glover A, Bartolozzi C. Where and when: Event-based spatiotemporal trajectory prediction from the iCub's point-of-view. In: Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA). Paris, France: IEEE, 2020. 9521−9527

[51] Iacono M, Weber S, Glover A, Bartolozzi C. Towards event-driven object detection with off-the-shelf deep learning. In: Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 1−9

[52] Bartolozzi C, Rea F, Clercq C, Fasnacht D B, Indiveri G, Hofstatter M, et al. Embedded neuromorphic vision for humanoid robots. In: Proceedings of the 2011 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Colorado Springs, USA: IEEE, 2011. 129−135

[53] Glover A, Vasco V, Iacono M, Bartolozzi C. The event-driven software library for YARP-with algorithms and iCub applications. Frontiers in Robotics and AI, 2018, 4: Article No. 73

[54] IIT. iCub robot [Online], available: https://icub.iit.it/products/icub-robot, July 20, 2021

[55] Wiesmann G, Schraml S, Litzenberger M, Belbachir A N, Hofstätter M, Bartolozzi C. Event-driven embodied system for feature extraction and object recognition in robotic applications. In: Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. Providence, USA: IEEE, 2012. 76−82

[56] Glover A, Bartolozzi C. Event-driven ball detection and gaze fixation in clutter. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, Korea (South): IEEE, 2016. 2203−2208

[57] Vasco V, Glover A, Mueggler E, Scaramuzza D, Natale L, Bartolozzi C. Independent motion detection with event-driven cameras. In: Proceedings of the 18th International Conference on Advanced Robotics (ICAR). Hong Kong, China: IEEE, 2017. 530−536

[58] Rea F, Metta G, Bartolozzi C. Event-driven visual attention for the humanoid robot iCub. Frontiers in Neuroscience, 2013, 7: Article No. 234

[59] Iacono M, D'Angelo G, Glover A, Tikhanoff V, Niebur E, Bartolozzi C. Proto-object based saliency for event-driven cameras. In: Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems. Macau, China: IEEE, 2019. 805−812

[60] Glover A, Bartolozzi C. Robust visual tracking with a freely-moving event camera. In: Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Vancouver, Canada: IEEE, 2017. 3769−3776

[61] Gibaldi A, Canessa A, Chessa M, Sabatini S P, Solari F. A neuromorphic control module for real-time vergence eye movements on the iCub robot head. In: Proceedings of the 11th IEEE-RAS International Conference on Humanoid Robots. Bled, Slovenia: IEEE, 2011. 543−550

[62] Vasco V, Glover A, Tirupachuri Y, Solari F, Chessa M, Bartolozzi C. Vergence control with a neuromorphic iCub. In: Proceedings of the 16th IEEE-RAS International Conference on Humanoid Robots (Humanoids). Cancun, Mexico: IEEE, 2016. 732−738

[63] Akolkar H, Valeiras D R, Benosman R, Bartolozzi C. Visual-Auditory saliency detection using event-driven visual sensors. In: Proceedings of the 2015 International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP). Krakow, Poland: IEEE, 2015. 1−6

[64] Zhao B, Ding R X, Chen S S, Linares-Barranco B, Tang H J. Feedforward categorization on AER motion events using cortex-like features in a spiking neural network. IEEE Transactions on Neural Networks and Learning Systems, 2015, 26(9): 1963-1978 doi: 10.1109/TNNLS.2014.2362542

[65] Zhao B, Yu Q, Yu H, Chen S S, Tang H J. A bio-inspired feedforward system for categorization of AER motion events. In: Proceedings of the 2013 IEEE Biomedical Circuits and Systems Conference (BioCAS). Rotterdam, the Netherlands: IEEE, 2013. 9−12

[66] Zhao B, Chen S S, Tang H J. Bio-inspired categorization using event-driven feature extraction and spike-based learning. In: Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN). Beijing, China: IEEE, 2014. 3845−3852

[67] Nan Y, Xiao R, Gao S B, Yan R. An event-based hierarchy model for object recognition. In: Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI). Xiamen, China: IEEE, 2019. 2342−2347

[68] Shen J R, Zhao Y, Liu J K, Wang Y M. Recognizing scoring in basketball game from AER sequence by spiking neural networks. In: Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN). Glasgow, UK: IEEE, 2020. 1−8

[69] Massa R, Marchisio A, Martina M, Shafique M. An efficient spiking neural network for recognizing gestures with a DVS camera on the Loihi neuromorphic processor. In: Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN). Glasgow, UK: IEEE, 2020. 1−9

[70] Camuñas-Mesa L A, Domínguez-Cordero Y L, Linares-Barranco A, Serrano-Gotarredona T, Linares-Barranco B. A configurable event-driven convolutional node with rate saturation mechanism for modular ConvNet systems implementation. Frontiers in Neuroscience, 2018, 12: Article No. 63

[71] Camuñas-Mesa L A, Linares-Barranco B, Serrano-Gotarredona T. Low-power hardware implementation of SNN with decision block for recognition tasks. In: Proceedings of the 26th IEEE International Conference on Electronics, Circuits and Systems (ICECS). Genoa, Italy: IEEE, 2019. 73−76

[72] Pérez-Carrasco J A, Zhao B, Serrano C, Acha B, Serrano-Gotarredona T, Chen S C, et al. Mapping from frame-driven to frame-free event-driven vision systems by low-rate rate coding and coincidence processing - Application to feedforward convnets. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(11): 2706-2719 doi: 10.1109/TPAMI.2013.71

[73] Diehl P U, Cook M. Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Frontiers in Computational Neuroscience, 2015, 9: Article No. 99

[74] Iyer L R, Basu A. Unsupervised learning of event-based image recordings using spike-timing-dependent plasticity. In: Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN). Anchorage, USA: IEEE, 2017. 1840−1846

[75] Cheng R, Mirza K B, Nikolic K. Neuromorphic robotic platform with visual input, processor and actuator, based on spiking neural networks. Applied System Innovation, 2020, 3(2): Article No. 28

[76] Ting J, Fang Y, Lele A, Raychowdhury A. Bio-inspired gait imitation of hexapod robot using event-based vision sensor and spiking neural network. In: Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN). Glasgow, UK: IEEE, 2020. 1−7

[77] Youssef I, Mutlu M, Bayat B, Crespi A, Hauser S, Conradt J, et al. A neuro-inspired computational model for a visually guided robotic lamprey using frame and event based cameras. IEEE Robotics and Automation Letters, 2020, 5(2): 2395-2402 doi: 10.1109/LRA.2020.2972839

[78] Blum H, Dietmüller A, Milde M B, Conradt J, Indiveri G, Sandamirskaya Y. A neuromorphic controller for a robotic vehicle equipped with a dynamic vision sensor. In: Proceedings of the 13th Robotics: Science and Systems 2017. Cambridge, MA, USA, 2017. 1−9

[79] Renner A, Evanusa M, Sandamirskaya Y. Event-based attention and tracking on neuromorphic hardware. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Long Beach, USA: IEEE, 2019. 1709−1716

[80] Gehrig M, Shrestha S B, Mouritzen D, Scaramuzza D. Event-based angular velocity regression with spiking networks. In: Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA). Paris, France: IEEE, 2020. 4195−4202

[81] Conradt J, Berner R, Cook M, Delbruck T. An embedded AER dynamic vision sensor for low-latency pole balancing. In: Proceedings of the 12th International Conference on Computer Vision Workshops. Kyoto, Japan: IEEE, 2009. 780−785

[82] Moeys D P, Corradi F, Kerr E, Vance P, Das G, Neil D, et al. Steering a predator robot using a mixed frame/event-driven convolutional neural network. In: Proceedings of the 2016 Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP). Krakow, Poland: IEEE, 2016. 1−8

[83] Delbruck T, Lang M. Robotic goalie with 3 ms reaction time at 4% CPU load using event-based dynamic vision sensor. Frontiers in Neuroscience, 2013, 7: Article No. 223

[84] Delbruck T, Lichtsteiner P. Fast sensory motor control based on event-based hybrid neuromorphic-procedural system. In: Proceedings of the 2007 IEEE International Symposium on Circuits and Systems. New Orleans, USA: IEEE, 2007. 845−848

[85] Mueller E, Censi A, Frazzoli E. Low-latency heading feedback control with neuromorphic vision sensors using efficient approximated incremental inference. In: Proceedings of the 54th IEEE Conference on Decision and Control (CDC). Osaka, Japan: IEEE, 2015. 992−999

[86] Mueller E, Censi A, Frazzoli E. Efficient high speed signal estimation with neuromorphic vision sensors. In: Proceedings of the 2015 International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP). Krakow, Poland: IEEE, 2015. 1−8

[87] Delbruck T, Pfeiffer M, Juston R, Orchard G, Müggler E, Linares-Barranco A, et al. Human vs. computer slot car racing using an event and frame-based DAVIS vision sensor. In: Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS). Lisbon, Portugal: IEEE, 2015. 2409−2412

[88] Censi A. Efficient neuromorphic optomotor heading regulation. In: Proceedings of the 2015 American Control Conference (ACC). Chicago, USA: IEEE, 2015. 3854−3861

[89] Singh P, Yong S Z, Gregoire J, Censi A, Frazzoli E. Stabilization of linear continuous-time systems using neuromorphic vision sensors. In: Proceedings of the 55th IEEE Conference on Decision and Control (CDC). Las Vegas, USA: IEEE, 2016. 3030−3036

[90] Singh P, Yong S Z, Frazzoli E. Stabilization of stochastic linear continuous-time systems using noisy neuromorphic vision sensors. In: Proceedings of the 2017 American Control Conference (ACC). Seattle, USA: IEEE, 2017. 535−541

[91] Singh P, Yong S Z, Frazzoli E. Regulation of linear systems using event-based detection sensors. IEEE Transactions on Automatic Control, 2019, 64(1): 373-380 doi: 10.1109/TAC.2018.2876997

下载:

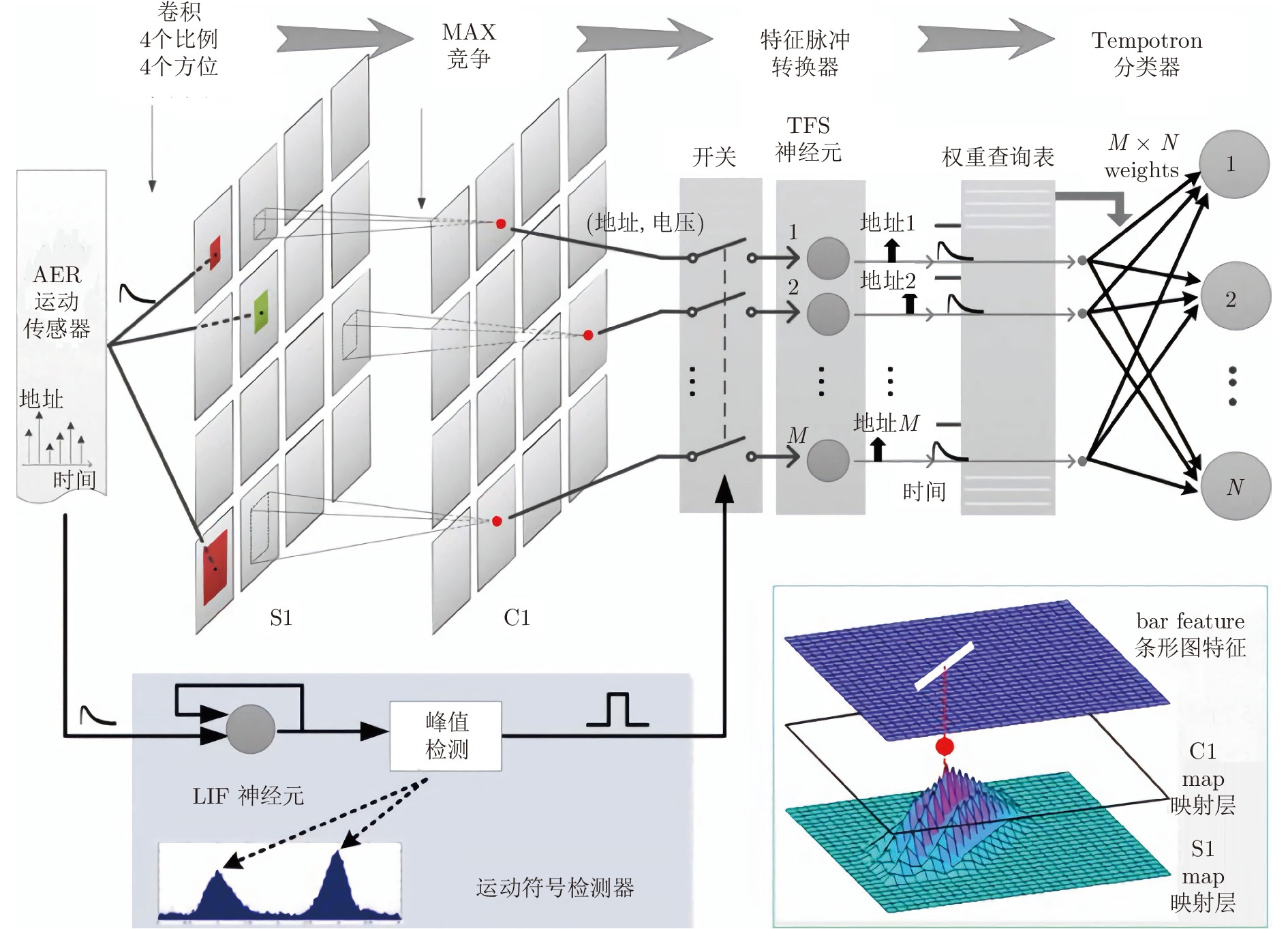

下载: