Abstract:

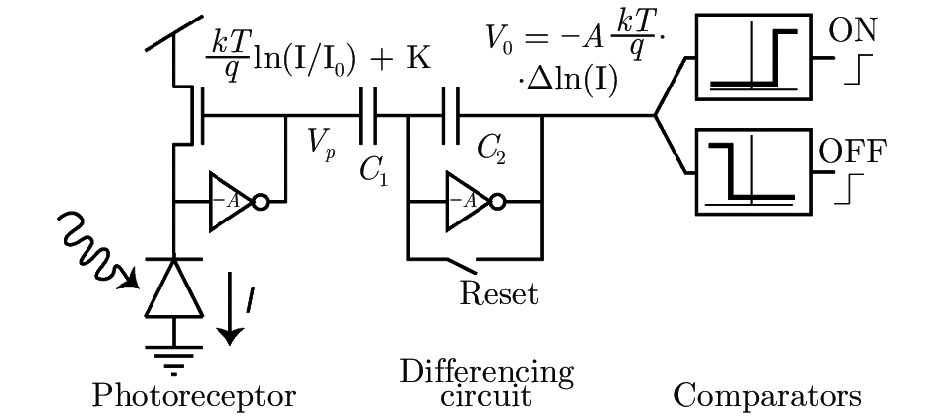

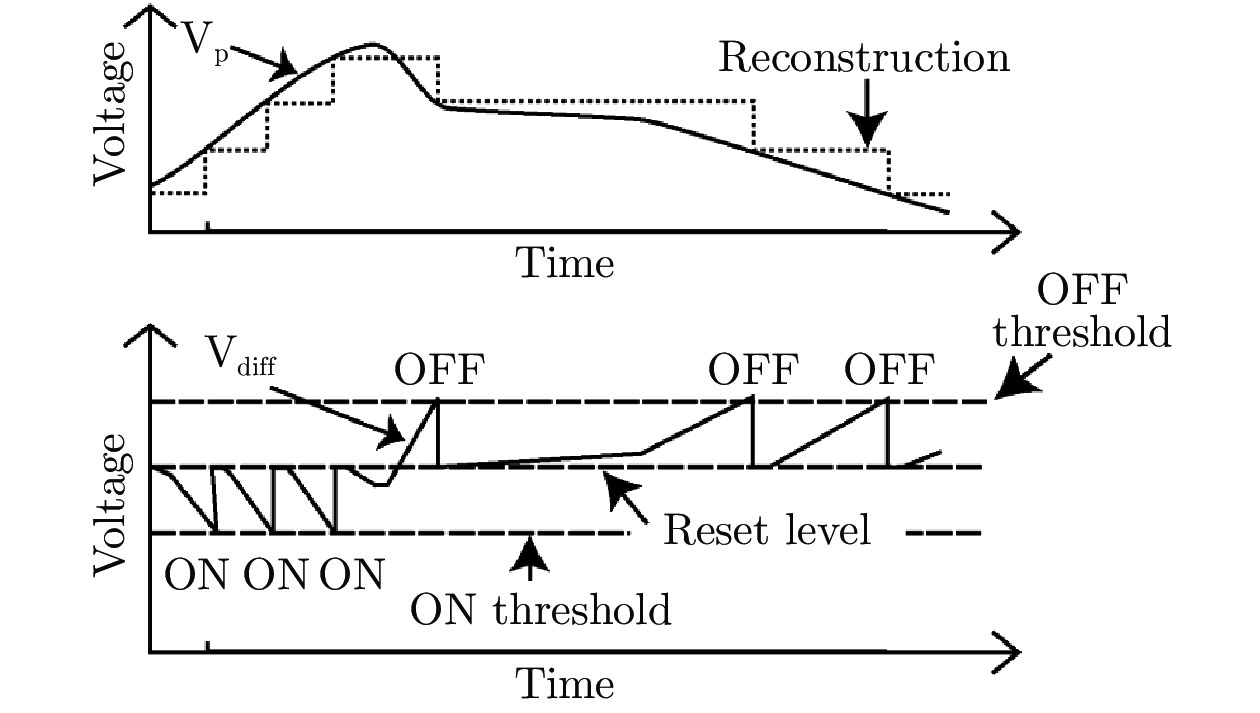

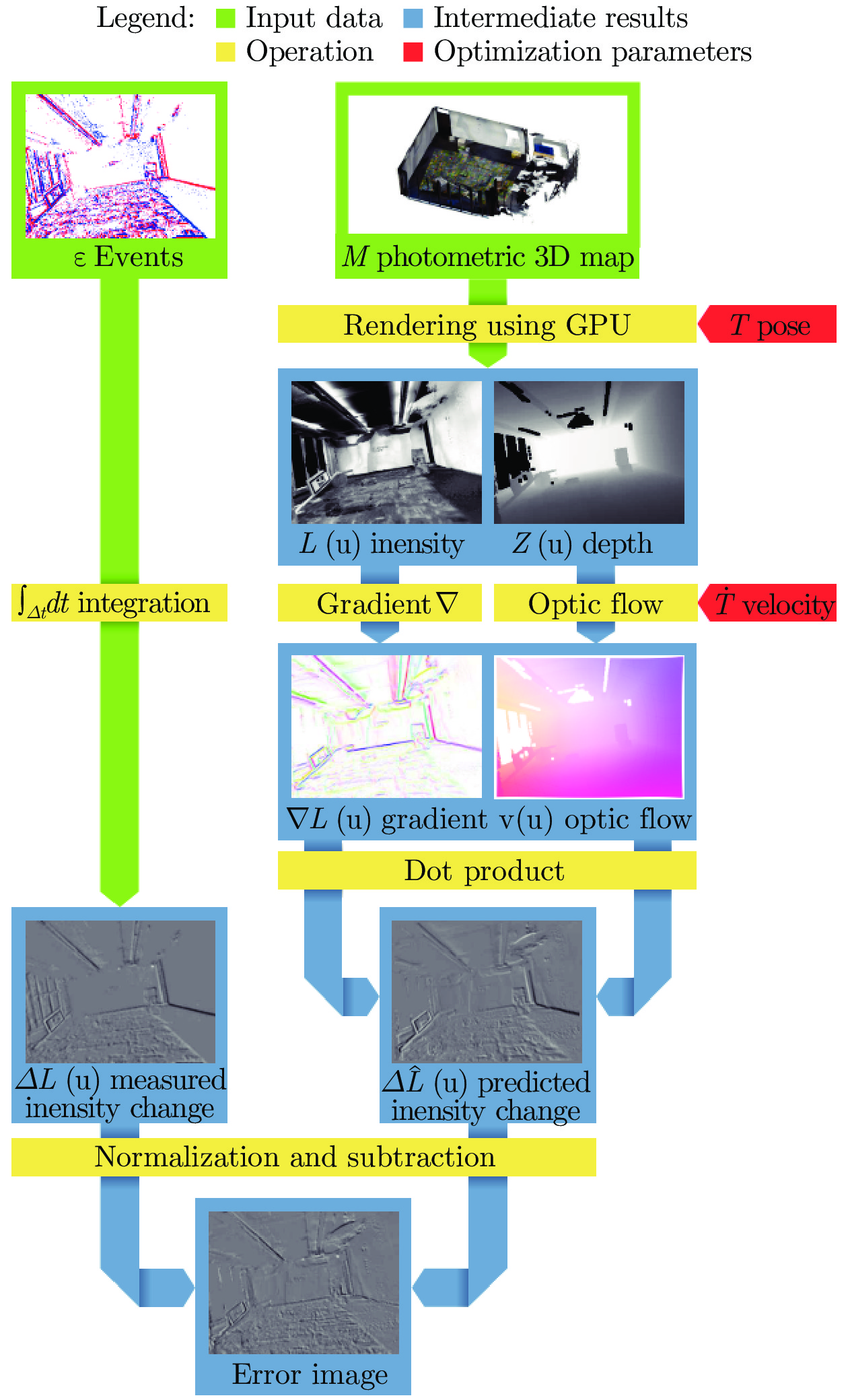

The event camera is an emerging type of visual sensor that generates "events" by detecting changes in light intensity at individual pixels. Owing to this working principle, event cameras offer properties that conventional cameras lack, such as low latency and high dynamic range. Applying event cameras to robot localization and mapping has therefore become a new research direction in visual localization and mapping (SLAM). Starting from the sensor itself, this paper introduces the working principle of event cameras, existing event-based localization and mapping algorithms, and open-source event-camera datasets. The emphasis is on a detailed introduction to existing event-based localization and mapping algorithms and an analysis of their strengths and weaknesses.
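The event-generation principle summarized in the abstract can be illustrated with a minimal sketch. It assumes the commonly used idealized model in which a pixel fires an event each time its log intensity moves by a fixed contrast threshold away from a stored reference level. The function name `generate_events`, the `Event` record, the frame-based input, and the threshold value are hypothetical choices made for illustration; they are not taken from the paper or from any particular sensor.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp of the sample that triggered the event
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def generate_events(
    frames: List[List[List[float]]],
    timestamps: List[float],
    threshold: float = 0.2,   # assumed contrast threshold (log-intensity units)
    eps: float = 1e-6,        # avoids log(0) for completely dark pixels
) -> List[Event]:
    """Idealized per-pixel event generation from a sequence of intensity frames.

    Each pixel keeps its own reference log intensity. Whenever the current
    log intensity differs from the reference by at least `threshold`, one
    event is emitted and the reference moves by one threshold step.
    """
    # Reference log intensity of every pixel, initialized from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []

    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                log_i = math.log(value + eps)
                diff = log_i - ref[y][x]
                # A large change produces several events of the same polarity.
                while abs(diff) >= threshold:
                    polarity = 1 if diff > 0 else -1
                    events.append(Event(t, x, y, polarity))
                    ref[y][x] += polarity * threshold
                    diff = log_i - ref[y][x]
    return events


if __name__ == "__main__":
    # One row, two pixels: the left pixel is static, the right one doubles in brightness.
    demo_frames = [[[1.0, 1.0]], [[1.0, 2.0]]]
    for event in generate_events(demo_frames, [0.0, 0.001]):
        print(event)
```

In this sketch the static pixel produces no output at all, while the changing pixel produces only positive-polarity events. Note that a real sensor reports events asynchronously per pixel rather than at frame timestamps; the frame-based input here is a simplification for readability.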

Key words:

- Event camera

- low latency

- pose estimation

- localization and mapping

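Table 1 Selected event-based SLAM algorithms and applications discussed in this paper

| Reference | Sensor(s) | Dimension | Algorithm type | Requires input map | Year |
| --- | --- | --- | --- | --- | --- |
| [44] | DVS | 2D | Localization | Yes | 2012 |
| [45] | DVS | 2D | Localization and mapping | No | 2013 |
| [47] | DVS | 3D | Localization | Yes | 2014 |
| [48] | DVS | 3D | Localization and mapping | No | 2016 |
| [49] | DVS | 3D | Localization and mapping | No | 2016 |
| [51] | DVS | 3D | Localization | Yes | 2019 |
| [52] | DVS, grayscale camera | 3D | Localization | No | 2014 |
| [53] | DVS, RGB-D camera | 3D | Localization and mapping | No | 2014 |
| [55] | DAVIS | 3D | Localization | No | 2016 |
| [56] | DAVIS (built-in IMU) | 3D | Localization | No | 2017 |
| [59] | DAVIS (built-in IMU) | 3D | Localization and mapping | No | 2017 |
| [64] | DAVIS (built-in IMU), RGB camera | 3D | Localization and mapping | No | 2018 |
| [65] | DAVIS (built-in IMU) | 3D | Localization | No | 2018 |

Table 2 Public event-camera (DVS) datasets

| Reference | Sensor(s) | Camera motion DOF | Data collection scene | Platform | Ground truth provided | Year |
| --- | --- | --- | --- | --- | --- | --- |
| [53] | eDVS camera, RGB-D camera | 6DOF | Indoor | Handheld | Yes | 2014 |
| [28] | DAVIS (built-in IMU) | 3DOF (pure rotation) | Indoor, simulation | Rotating base | Yes | 2016 |
| [68] | DAVIS, RGB-D camera | 4DOF | Indoor, simulation | Ground robot and pan-tilt unit | Yes | 2016 |
| [69] | DAVIS (built-in IMU) | 6DOF | Indoor, outdoor, simulation | Handheld | Indoor: yes; outdoor: no; simulation: yes | 2016 |
| [70] | DAVIS | 6DOF | Outdoor | Car | Yes | 2017 |
| [71] | 2×DAVIS (built-in IMU), 2×RGB camera (built-in IMU), 16-line LiDAR | 6DOF | Indoor, outdoor, indoor to outdoor | Quadrotor, motorcycle, car, handheld | Yes | 2018 |
| [72] | 2×DAVIS (built-in IMU), RGB-D camera | 3DOF | Indoor | 3×ground robot | Yes | 2018 |
| [73] | DAVIS | 6DOF | Indoor | Handheld | Yes | 2019 |
| [51] | DAVIS, IMU | 6DOF | Indoor, simulation | Handheld | Yes | 2019 |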

[1] Burri M, Oleynikova H, Achtelik M W, Siegwart R. Real-time visual-inertial mapping, re-localization and planning onboard MAVs in unknown environments. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Hamburg, Germany: IEEE, 2015. 1872−1878

[2] Chatila R, Laumond J P. Position referencing and consistent world modeling for mobile robots. In: Proceedings of the 1985 IEEE International Conference on Robotics and Automation. St. Louis, Missouri, USA: IEEE, 1985. Vol. 2: 138−145

[3] Chatzopoulos D, Bermejo C, Huang Z, Hui P. Mobile augmented reality survey: From where we are to where we go. IEEE Access, 2017, 5: 6917−6950 doi: 10.1109/ACCESS.2017.2698164

[4] Taketomi T, Uchiyama H, Ikeda S. Visual SLAM algorithms: A survey from 2010 to 2016. Transactions on Computer Vision and Applications, 2017, 9(1): 16 doi: 10.1186/s41074-017-0027-2

[5] Strasdat H, Montiel J M M, Davison A J. Visual SLAM: Why filter? Image and Vision Computing, 2012, 30(2): 65−77 doi: 10.1016/j.imavis.2012.02.009

[6] Younes G, Asmar D, Shammas E, Zelek J. Keyframe-based monocular SLAM: Design, survey, and future directions. Robotics and Autonomous Systems, 2017, 98: 67−88 doi: 10.1016/j.robot.2017.09.010

[7] Olson C F, Matthies L H, Schoppers M, Maimone M W. Rover navigation using stereo ego-motion. Robotics and Autonomous Systems, 2003, 43(4): 215−229 doi: 10.1016/S0921-8890(03)00004-6

[8] Zhang Z. Microsoft Kinect sensor and its effect. IEEE Multimedia, 2012, 19(2): 4−10 doi: 10.1109/MMUL.2012.24

[9] Huang A S, Bachrach A, Henry P, et al. Visual odometry and mapping for autonomous flight using an RGB-D camera. Robotics Research. Springer, Cham, 2017: 235−252

[10] Jones E S, Soatto S. Visual-inertial navigation, mapping and localization: A scalable real-time causal approach. The International Journal of Robotics Research, 2011, 30(4): 407−430 doi: 10.1177/0278364910388963

[11] Martinelli A. Vision and IMU data fusion: Closed-form solutions for attitude, speed, absolute scale, and bias determination. IEEE Transactions on Robotics, 2011, 28(1): 44−60

[12] Klein G, Murray D. Parallel tracking and mapping for small AR workspaces. In: Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality. Nara, Japan: IEEE, 2007. 1−10

[13] Mur-Artal R, Montiel J M M, Tardos J D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 2015, 31(5): 1147−1163 doi: 10.1109/TRO.2015.2463671

[14] Mur-Artal R, Tardós J D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Transactions on Robotics, 2017, 33(5): 1255−1262 doi: 10.1109/TRO.2017.2705103

[15] Forster C, Pizzoli M, Scaramuzza D. SVO: Fast semi-direct monocular visual odometry. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 15−22

[16] Engel J, Schöps T, Cremers D. LSD-SLAM: Large-scale direct monocular SLAM. In: Proceedings of the 2014 European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 834−849

[17] Engel J, Stückler J, Cremers D. Large-scale direct SLAM with stereo cameras. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Hamburg, Germany: IEEE, 2015. 1935−1942

[18] Li M, Mourikis A I. High-precision, consistent EKF-based visual-inertial odometry. The International Journal of Robotics Research, 2013, 32(6): 690−711 doi: 10.1177/0278364913481251

[19] Leutenegger S, Lynen S, Bosse M, Siegwart R, Furgale P. Keyframe-based visual inertial odometry using nonlinear optimization. The International Journal of Robotics Research, 2015, 34(3): 314−334 doi: 10.1177/0278364914554813

[20] Qin T, Li P, Shen S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Transactions on Robotics, 2018, 34(4): 1004−1020 doi: 10.1109/TRO.2018.2853729

[21] Fossum E R. CMOS image sensors: Electronic camera-on-a-chip. IEEE Transactions on Electron Devices, 1997, 44(10): 1689−1698 doi: 10.1109/16.628824

[22] Delbruck T. Neuromorphic vision sensing and processing. In: Proceedings of the 46th European Solid-State Device Research Conference (ESSDERC). Lausanne, Switzerland: IEEE, 2016. 7−14

[23] Delbruck T, Lichtsteiner P. Fast sensory motor control based on event-based hybrid neuromorphic-procedural system. In: Proceedings of the IEEE International Symposium on Circuits and Systems. New Orleans, USA: IEEE, 2007. 845−848

[24] Delbruck T, Lang M. Robotic goalie with 3 ms reaction time at 4% CPU load using event-based dynamic vision sensor. Frontiers in Neuroscience, 2013, 7: 223

[25] Glover A, Bartolozzi C. Event-driven ball detection and gaze fixation in clutter. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, Korea: IEEE, 2016. 2203−2208

[26] Benosman R, Ieng S H, Clercq C, Bartolozzi C, Srinivasan M. Asynchronous frameless event-based optical flow. Neural Networks, 2012, 27: 32−37 doi: 10.1016/j.neunet.2011.11.001

[27] Benosman R, Clercq C, Lagorce X, Ieng S H, Bartolozzi C. Event-based visual flow. IEEE Transactions on Neural Networks and Learning Systems, 2013, 25(2): 407−417

[28] Rueckauer B, Delbruck T. Evaluation of event-based algorithms for optical flow with ground-truth from inertial measurement sensor. Frontiers in Neuroscience, 2016, 10: 176

[29] Bardow P, Davison A J, Leutenegger S. Simultaneous optical flow and intensity estimation from an event camera. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 884−892

[30] Reinbacher C, Graber G, Pock T. Real-time intensity-image reconstruction for event cameras using manifold regularisation. International Journal of Computer Vision, 2018, 126(12): 1381−1393 doi: 10.1007/s11263-018-1106-2

[31] Mahowald M. VLSI analogs of neuronal visual processing: A synthesis of form and function. California Institute of Technology, 1992.

[32] Posch C, Serrano-Gotarredona T, Linares-Barranco B, Delbruck T. Retinomorphic event-based vision sensors: Bioinspired cameras with spiking output. Proceedings of the IEEE, 2014, 102(10): 1470−1484 doi: 10.1109/JPROC.2014.2346153

[33] Lichtsteiner P, Posch C, Delbruck T. A 128×128 120 dB 30 mW asynchronous vision sensor that responds to relative intensity change. In: Proceedings of the 2006 IEEE International Solid State Circuits Conference-Digest of Technical Papers. San Francisco, CA, USA: IEEE, 2006. 2060−2069

[34] Lichtsteiner P, Posch C, Delbruck T. A 128×128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE Journal of Solid-State Circuits, 2008, 43(2): 566−576 doi: 10.1109/JSSC.2007.914337

[35] Son B, Suh Y, Kim S, et al. 4.1 A 640×480 dynamic vision sensor with a 9 μm pixel and 300 Meps address-event representation. In: Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC). San Francisco, CA, USA: IEEE, 2017. 66−67

[36] Posch C, Matolin D, Wohlgenannt R. A QVGA 143 dB dynamic range frame-free PWM image sensor with lossless pixel-level video compression and time-domain CDS. IEEE Journal of Solid-State Circuits, 2010, 46(1): 259−275

[37] Posch C, Matolin D, Wohlgenannt R. A QVGA 143 dB dynamic range asynchronous address-event PWM dynamic image sensor with lossless pixel-level video compression. In: Proceedings of the 2010 IEEE International Solid-State Circuits Conference (ISSCC). San Francisco, CA, USA: IEEE, 2010. 400−401

[38] Berner R, Brandli C, Yang M, Liu S C, Delbruck T. A 240×180 120 dB 10 mW 12 μs-latency sparse output vision sensor for mobile applications. In: Proceedings of the International Image Sensors Workshop. Snowbird, Utah, USA: IEEE, 2013. 41−44

[39] Brandli C, Berner R, Yang M, Liu S C, Delbruck T. A 240×180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE Journal of Solid-State Circuits, 2014, 49(10): 2333−2341 doi: 10.1109/JSSC.2014.2342715

[40] Guo M, Huang J, Chen S. Live demonstration: A 768×640 pixels 200 Meps dynamic vision sensor. In: Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS). Baltimore, Maryland, USA: IEEE, 2017. 1−1

[41] Li C, Brandli C, Berner R, et al. Design of an RGBW color VGA rolling and global shutter dynamic and active-pixel vision sensor. In: Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS). Lisbon, Portugal: IEEE, 2015. 718−721

[42] Moeys D P, Li C, Martel J N P, et al. Color temporal contrast sensitivity in dynamic vision sensors. In: Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS). Baltimore, Maryland, USA: IEEE, 2017. 1−4

[43] Marcireau A, Ieng S H, Simon-Chane C, Benosman R B. Event-based color segmentation with a high dynamic range sensor. Frontiers in Neuroscience, 2018, 12: 135 doi: 10.3389/fnins.2018.00135

[44] Weikersdorfer D, Conradt J. Event-based particle filtering for robot self-localization. In: Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO). Guangzhou, China: IEEE, 2012. 866−870

[45] Weikersdorfer D, Hoffmann R, Conradt J. Simultaneous localization and mapping for event-based vision systems. In: Proceedings of the 2013 International Conference on Computer Vision Systems. St. Petersburg, Russia: Springer, 2013. 133−142

[46] Hoffmann R, Weikersdorfer D, Conradt J. Autonomous indoor exploration with an event-based visual SLAM system. In: Proceedings of the 2013 European Conference on Mobile Robots. Barcelona, Catalonia, Spain: IEEE, 2013. 38−43

[47] Mueggler E, Huber B, Scaramuzza D. Event-based, 6-DOF pose tracking for high-speed maneuvers. In: Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems. Chicago, USA: IEEE, 2014. 2761−2768

[48] Kim H, Leutenegger S, Davison A J. Real-time 3D reconstruction and 6-DoF tracking with an event camera. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 349−364

[49] Rebecq H, Horstschaefer T, Gallego G, Scaramuzza D. EVO: A geometric approach to event-based 6-DOF parallel tracking and mapping in real time. IEEE Robotics and Automation Letters, 2016, 2(2): 593−600

[50] Rebecq H, Gallego G, Scaramuzza D. EMVS: Event-based multi-view stereo. In: Proceedings of the 2016 British Machine Vision Conference (BMVC). York, UK: Springer, 2016.

[51] Bryner S, Gallego G, Rebecq H, Scaramuzza D. Event-based, direct camera tracking from a photometric 3D map using nonlinear optimization. In: Proceedings of the 2019 International Conference on Robotics and Automation. Montreal, Canada: IEEE, 2019. 2

[52] Censi A, Scaramuzza D. Low-latency event-based visual odometry. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 703−710

[53] Weikersdorfer D, Adrian D B, Cremers D, Conradt J. Event-based 3D SLAM with a depth-augmented dynamic vision sensor. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 359−364

[54] Tedaldi D, Gallego G, Mueggler E, Scaramuzza D. Feature detection and tracking with the dynamic and active-pixel vision sensor (DAVIS). In: Proceedings of the 2016 Second International Conference on Event-based Control, Communication, and Signal Processing (EBCCSP). Krakow, Poland: IEEE, 2016. 1−7

[55] Kueng B, Mueggler E, Gallego G, Scaramuzza D. Low-latency visual odometry using event-based feature tracks. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, Korea: IEEE, 2016. 16−23

[56] Zhu A Z, Atanasov N, Daniilidis K. Event-based visual inertial odometry. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, Hawaii, USA: IEEE, 2017. 5816−5824

[57] Zhu A Z, Atanasov N, Daniilidis K. Event-based feature tracking with probabilistic data association. In: Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA). Marina Bay, Singapore: IEEE, 2017. 4465−4470

[58] Mourikis A I, Roumeliotis S I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In: Proceedings of the 2007 IEEE International Conference on Robotics and Automation (ICRA). Roma, Italy: IEEE, 2007. 3565−3572

[59] Rebecq H, Horstschaefer T, Scaramuzza D. Real-time visual-inertial odometry for event cameras using keyframe-based nonlinear optimization. In: Proceedings of the 2017 British Machine Vision Conference (BMVC). London, UK: Springer, 2017.

[60] Gallego G, Scaramuzza D. Accurate angular velocity estimation with an event camera. IEEE Robotics and Automation Letters, 2017, 2(2): 632−639 doi: 10.1109/LRA.2016.2647639

[61] Rosten E, Drummond T. Machine learning for high-speed corner detection. In: Proceedings of the 2006 European Conference on Computer Vision. Graz, Austria: Springer, 2006. 430−443

[62] Lucas B D, Kanade T. An iterative image registration technique with an application to stereo vision. 1981. 121−130

[63] Leutenegger S, Furgale P, Rabaud V, et al. Keyframe-based visual-inertial SLAM using nonlinear optimization. In: Proceedings of the 2013 Robotics: Science and Systems (RSS). Berlin, Germany, 2013.

[64] Vidal A R, Rebecq H, Horstschaefer T, Scaramuzza D. Ultimate SLAM? Combining events, images, and IMU for robust visual SLAM in HDR and high-speed scenarios. IEEE Robotics and Automation Letters, 2018, 3(2): 994−1001 doi: 10.1109/LRA.2018.2793357

[65] Mueggler E, Gallego G, Rebecq H, Scaramuzza D. Continuous-time visual-inertial odometry for event cameras. IEEE Transactions on Robotics, 2018, 34(6): 1425−1440 doi: 10.1109/TRO.2018.2858287

[66] Mueggler E, Gallego G, Scaramuzza D. Continuous-time trajectory estimation for event-based vision sensors. In: Proceedings of Robotics: Science and Systems XI (RSS). Rome, Italy: 2015. doi: 10.15607/RSS.2015.XI.036

[67] Patron-Perez A, Lovegrove S, Sibley G. A spline-based trajectory representation for sensor fusion and rolling shutter cameras. International Journal of Computer Vision, 2015, 113(3): 208−219 doi: 10.1007/s11263-015-0811-3

[68] Barranco F, Fermuller C, Aloimonos Y, Delbruck T. A dataset for visual navigation with neuromorphic methods. Frontiers in Neuroscience, 2016, 10: 49

[69] Mueggler E, Rebecq H, Gallego G, Delbruck T, Scaramuzza D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. The International Journal of Robotics Research, 2017, 36(2): 142−149 doi: 10.1177/0278364917691115

[70] Binas J, Neil D, Liu S C, Delbruck T. DDD17: End-to-end DAVIS driving dataset. arXiv: 1711.01458, 2017

[71] Zhu A Z, Thakur D, Ozaslan T, Pfrommer B, Kumar V, Daniilidis K. The multivehicle stereo event camera dataset: An event camera dataset for 3D perception. IEEE Robotics and Automation Letters, 2018, 3(3): 2032−2039 doi: 10.1109/LRA.2018.2800793

[72] Leung S, Shamwell E J, Maxey C, Nothwang W D. Toward a large-scale multimodal event-based dataset for neuromorphic deep learning applications. In: Proceedings of the 2018 Micro-and Nanotechnology Sensors, Systems, and Applications X. International Society for Optics and Photonics. Orlando, Florida, USA: SPIE, 2018. 10639: 106391T

[73] Mitrokhin A, Ye C, Fermuller C, Aloimonos Y, Delbruck T. EV-IMO: Motion segmentation dataset and learning pipeline for event cameras. arXiv: 1903.07520, 2019
