|

[1]

|

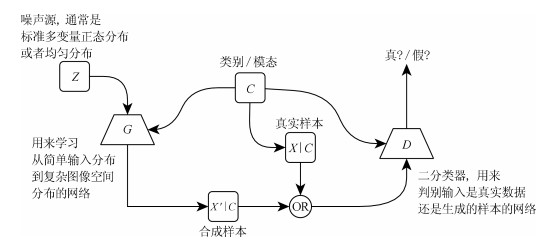

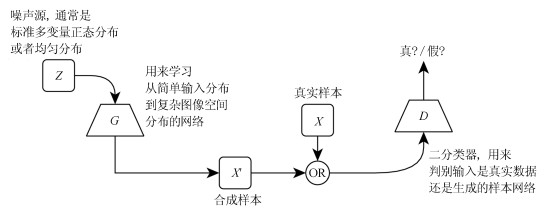

Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde- Farley D, Ozair S, et al. Generative adversarial nets. In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2014. 2672-2680

|

[2] Kurach K, Lucic M, Zhai X, et al. The GAN Landscape: Losses, Architectures, Regularization, and Normalization. arXiv preprint, arXiv: 1807.04720, 2018.

[3] Wang Kun-Feng, Gou Chao, Duan Yan-Jie, Lin Yi-Lun, Zheng Xin-Hu, Wang Fei-Yue. Generative adversarial networks: the state of the art and beyond. Acta Automatica Sinica, 2017, 43(3): 321-332 (in Chinese) doi: 10.16383/j.aas.2017.y000003

[4] Radford A, Metz L, Chintala S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint, arXiv: 1511.06434, 2015.

[5] Mirza M, Osindero S. Conditional generative adversarial nets. arXiv preprint, arXiv: 1411.1784, 2014.

[6] Arjovsky M, Bottou L. Towards principled methods for training generative adversarial networks. arXiv preprint, arXiv: 1701.04862, 2017.

[7] Arjovsky M, Chintala S, Bottou L. Wasserstein GAN. arXiv preprint, arXiv: 1701.07875, 2017.

[8] Lin Yi-Lun, Dai Xing-Yuan, Li Li, Wang Xiao, Wang Fei-Yue. The new frontier of AI research: generative adversarial networks. Acta Automatica Sinica, 2018, 44(5): 775-792 (in Chinese) doi: 10.16383/j.aas.2018.y000002

[9] LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 1998, 86(11): 2278-2324 doi: 10.1109/5.726791

[10] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: Curran Associates, Inc., 2012. 1097-1105

|

[11]

|

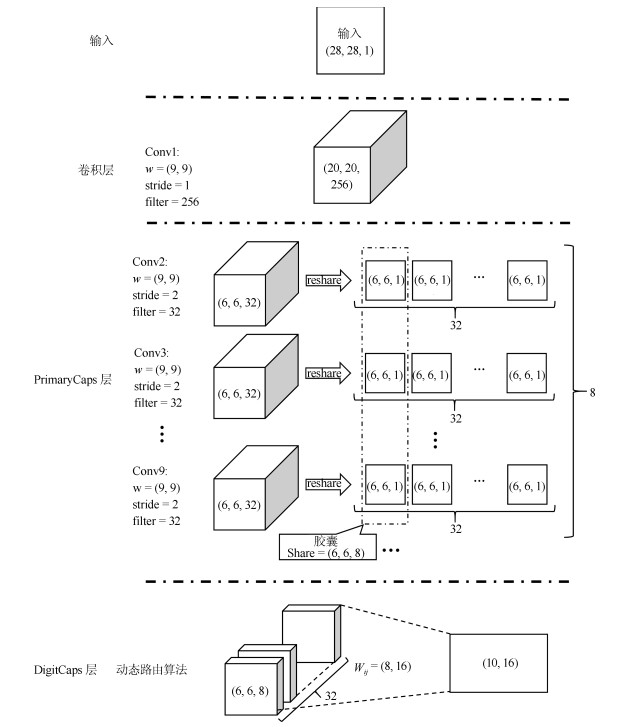

Sabour S, Frosst N, Hinton G E. Dynamic routing between capsules. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Long Beach, USA: Springer, 2017. 3856-3866

|

[12] Hinton G E, Krizhevsky A, Wang S D. Transforming auto-encoders. In: Proceedings of the 21st International Conference on Artificial Neural Networks. Espoo, Finland: Springer, 2011. 44-51

[13] Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville A. Improved training of Wasserstein GANs. arXiv preprint, arXiv: 1704.00028, 2017.

[14] Wang Kun-Feng, Zuo Wang-Meng, Tan Ying, Qin Tao, Li Li, Wang Fei-Yue. Generative adversarial networks: from generating data to creating intelligence. Acta Automatica Sinica, 2018, 44(5): 769-774 (in Chinese) doi: 10.16383/j.aas.2018.y000001

|

[15]

|

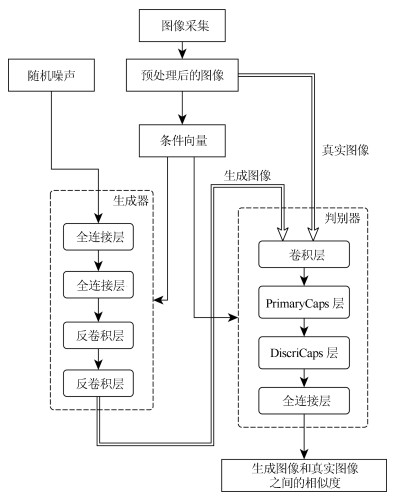

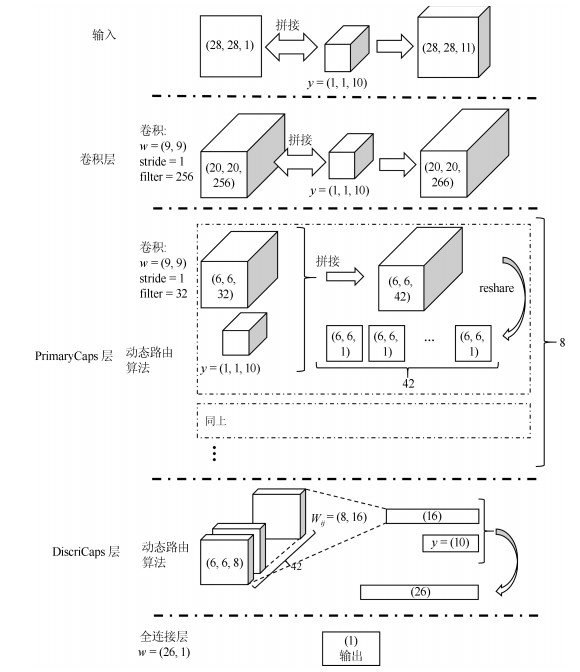

Jaiswal A, AbdAlmageed W, Natarajan P. CapsuleGAN: Generative Adversarial Capsule Network. arXiv preprint, arXiv: 1802.06167, 2018.

|

[16] Kussul E, Baidyk T. Improved method of handwritten digit recognition tested on MNIST database. Image and Vision Computing, 2004, 22(12): 971-981 doi: 10.1016/j.imavis.2004.03.008

[17] Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint, arXiv: 1502.03167, 2015.

[18] Hinton G E, Srivastava N, Krizhevsky A, Sutskever I, Salakhutdinov R R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint, arXiv: 1207.0580, 2012.
