Research Advances and Perspectives on the Cocktail Party Problem and Related Auditory Models


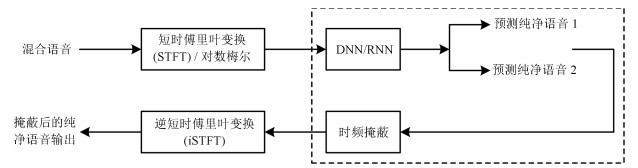

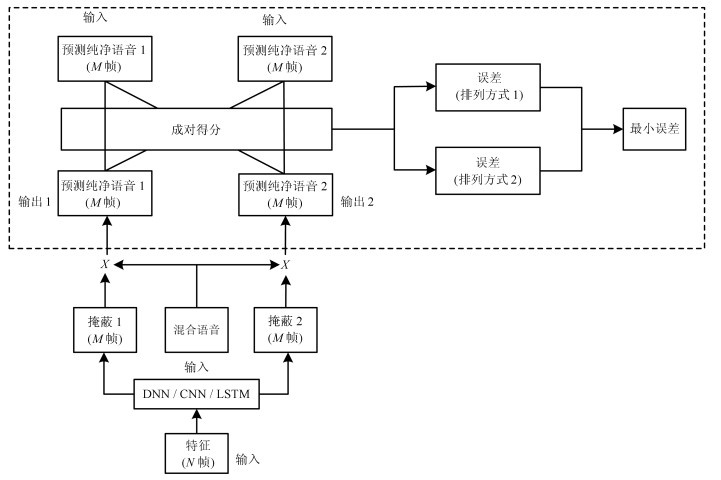

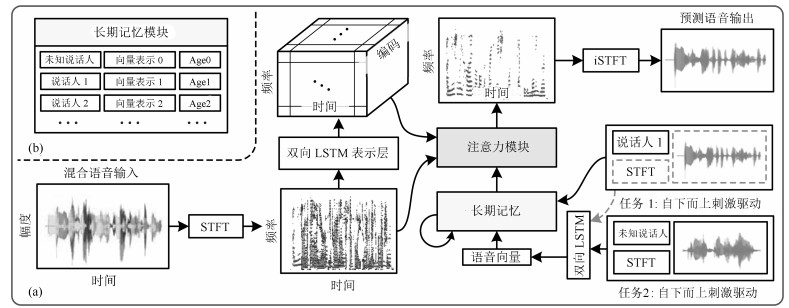

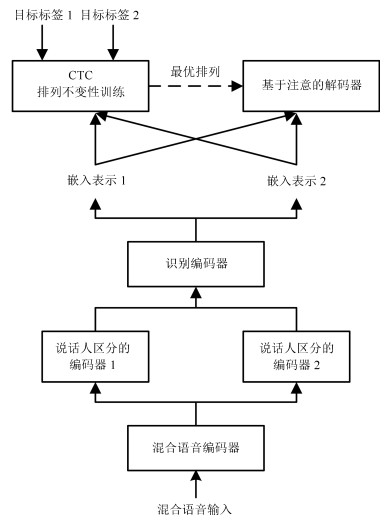

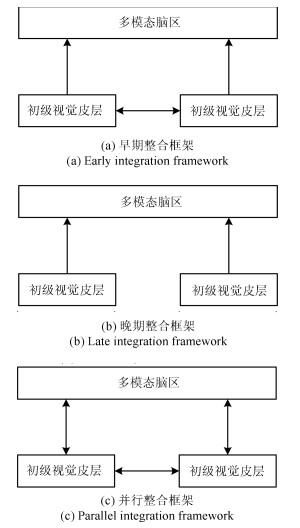

Abstract: With the rapid development of electronic devices and artificial intelligence technologies, speech-based human-machine interaction has become increasingly important in recent years. However, because of interfering sound sources, speech interaction technologies still perform far from satisfactorily in complex open environments such as cocktail parties, and developing a computational auditory system with strong adaptivity and robustness remains a very challenging task. In-depth exploration of the cocktail party problem is therefore of great research significance and practical value for a range of key tasks in intelligent speech processing, such as speaker recognition, speech recognition, and keyword spotting. This paper reviews the current state and future prospects of auditory models related to the cocktail party problem. We first briefly introduce relevant research on auditory mechanisms and summarize computational models for multi-speaker speech separation that target the cocktail party problem. We then discuss auditory attention modeling methods inspired by auditory cognition, and argue that auditory models integrating voiceprint memory and selective attention adapt better to complex auditory environments. Afterwards, we briefly review recent multi-speaker speech recognition models. Finally, we discuss the difficulties and challenges that current computational models face when dealing with the cocktail party problem, and offer some perspectives on future research.


Key words:

- Cocktail party problem
- auditory model
- speech separation
- auditory attention
- speech recognition

1) Recommended by Associate Editor DANG Jian-Wu

Table 1 A review of single-channel speech separation models addressing the cocktail party problem

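| Category | Description | Advantages | Disadvantages | Representative models or works |
|---|---|---|---|---|
| Signal-processing-based | Assumes that speech follows a given distribution and that the noise is stationary or slowly varying; estimates the noise power spectrum or the ideal Wiener filter | Good separation performance when the assumptions hold | The assumptions rarely hold in real conditions, so separation performance drops sharply | Spectral subtraction [36], Wiener filter [37-38] |
| Decomposition-based | Assumes that the sound spectrum has a low-rank structure and can therefore be represented by a relatively small set of bases | Can extract the basic spectral patterns of speech | 1) Linear model that struggles to capture the highly nonlinear structure of speech; 2) computationally expensive, with a complexity that makes real-time use difficult | 1) Shallow models: NMF [40], sparse NMF [41-43], RNMF [44-45]; 2) deep models: D-NMF [46], L-NMF [47] |
| Rule-based | Models the cocktail party problem with rules or mechanisms discovered in auditory scene analysis research | Grounded in rules from auditory research, so the models are relatively interpretable | 1) Auditory studies usually use simple stimuli, so the resulting rules may not carry over to complex auditory environments; 2) most CASA models rely heavily on grouping cues, especially accurate pitch extraction, which cannot be guaranteed in complex environments, so separation quality is unsatisfactory; 3) most CASA models aim to reproduce results from ASA experimental paradigms and are hard to apply to practical problems | 1) Bayesian-inference models: Barniv et al. [50]; 2) neural-computation models: Wang et al. [52]; 3) temporal-coherence models: Mill et al. [53] |
| Deep-learning-based | Models speech with the strong nonlinearity of deep neural networks | 1) Data-driven; 2) performs well on large datasets | Still lags human performance in real, complex auditory environments: 1) performs worse in open-set conditions (speakers unseen during training) than in closed-set conditions; 2) has difficulty distinguishing similar voices; 3) has difficulty with mixtures containing a variable number of sources | 1) Audio-only input: Huang et al. [60], Du et al. [62-63], Weninger et al. [65], DC [71-72], PIT [73], DANet [70]; 2) audio-visual input: AVDCNN [84], Gabbay et al. [76, 86], Owens et al. [88], AVSpeech [89] |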
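The signal-processing row of Table 1 can be illustrated with a minimal sketch of power spectral subtraction in the spirit of [36] and a simple per-bin Wiener gain [37-38]. The sketch assumes that short-time power spectra have already been computed (the STFT itself is omitted), that the first few frames contain noise only, and that the noise is stationary, which is precisely the assumption the table identifies as fragile in real conditions. The function names, the `floor` parameter and the toy values are illustrative and are not taken from the cited works.

```python
def estimate_noise_psd(power_frames, n_noise_frames):
    """Average the power spectra of the leading frames, assumed to be noise only."""
    n_bins = len(power_frames[0])
    noise = [0.0] * n_bins
    for frame in power_frames[:n_noise_frames]:
        for k, p in enumerate(frame):
            noise[k] += p / n_noise_frames
    return noise


def spectral_subtraction(power_frames, noise_psd, floor=0.01):
    """Power spectral subtraction: subtract the noise estimate from each bin and
    clamp results below `floor` times the noisy power to that spectral floor."""
    return [[max(p - n, floor * p) for p, n in zip(frame, noise_psd)]
            for frame in power_frames]


def wiener_gain(power_frames, noise_psd, eps=1e-12):
    """Per-bin Wiener gain G = xi / (1 + xi), where the a priori SNR xi is
    approximated by the maximum-likelihood estimate max(P / N - 1, 0)."""
    gains = []
    for frame in power_frames:
        row = []
        for p, n in zip(frame, noise_psd):
            xi = max(p / (n + eps) - 1.0, 0.0)
            row.append(xi / (1.0 + xi))
        gains.append(row)
    return gains


# Toy power spectrogram: 3 frames x 2 bins; the first two frames are noise only.
frames = [[1.0, 1.0], [1.0, 1.0], [5.0, 1.0]]
noise = estimate_noise_psd(frames, n_noise_frames=2)
cleaned = spectral_subtraction(frames, noise)
gains = wiener_gain(frames, noise)
```

With the maximum-likelihood SNR estimate used here, the Wiener gain reduces to 1 - N/P, so any bin whose power does not exceed the noise estimate is suppressed entirely (the second bin of the last frame above). Practical systems usually smooth the SNR estimate over time, for example with the decision-directed rule, to reduce musical noise.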
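For the decomposition-based row, the basic NMF of [40] factorizes a nonnegative magnitude spectrogram V (frequency × time) as V ≈ WH, where the columns of W are spectral bases and H holds their activations over time. The sketch below implements the Euclidean-distance multiplicative updates of Lee and Seung in plain Python; the `eps` guard against division by zero, the random initialization and the iteration count are assumptions of this sketch rather than details from [40]. For separation, one would typically learn a basis matrix per speaker on clean speech, concatenate the bases, estimate only H on the mixture, and rebuild each source from its own bases; that step is not shown.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def sq_error(v, w, h):
    """Squared Frobenius reconstruction error ||V - WH||^2."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, rank, n_iter=200, seed=0, eps=1e-9):
    """Multiplicative updates for min ||V - WH||^2 subject to W, H >= 0.
    Returns W, H and the reconstruction error before and after each iteration."""
    rng = random.Random(seed)
    n_rows, n_cols = len(v), len(v[0])
    w = [[rng.random() + eps for _ in range(rank)] for _ in range(n_rows)]
    h = [[rng.random() + eps for _ in range(n_cols)] for _ in range(rank)]
    errors = [sq_error(v, w, h)]
    for _ in range(n_iter):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n_cols)]
             for i in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(n_rows)]
        errors.append(sq_error(v, w, h))
    return w, h, errors


# Toy nonnegative "spectrogram" that is exactly rank 2.
w_true = [[1, 0], [0, 1], [1, 1], [2, 1]]
h_true = [[1, 2, 0, 1, 3], [0, 1, 2, 1, 1]]
v = matmul(w_true, h_true)
w, h, errors = nmf(v, rank=2)
```

Because the updates multiply by nonnegative ratios, W and H stay nonnegative without any explicit projection, and Lee and Seung show that the Euclidean error is non-increasing under these updates. This linear, low-rank model is also what limits the approach, as the table notes.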
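The deep-learning row lists PIT [73]. Its central idea is that a speaker-independent separation network emits one output stream per speaker in no fixed order, so the training error is computed under every assignment of output streams to reference speakers and only the best assignment is kept. A minimal sketch of this criterion follows, using mean squared error over whole utterances, which corresponds to the utterance-level variant of [74]. Representing signals as plain Python lists and using MSE as the pairwise error are assumptions of the sketch; a real system would compute the loss on network outputs inside an automatic-differentiation framework.

```python
from itertools import permutations


def mse(a, b):
    """Mean squared error between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def pit_loss(estimates, targets):
    """Utterance-level permutation invariant loss.

    Evaluates every assignment of estimated streams to reference speakers and
    returns the smallest average MSE together with the permutation achieving it
    (perm[i] is the index of the reference matched to estimate i)."""
    n_spk = len(targets)
    best_loss, best_perm = float("inf"), None
    for perm in permutations(range(n_spk)):
        loss = sum(mse(estimates[i], targets[p]) for i, p in enumerate(perm)) / n_spk
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm


# Toy example: the network emits the two speakers in swapped order.
targets = [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
estimates = [[0.0, 0.0, 0.1], [1.0, 2.0, 3.0]]
loss, perm = pit_loss(estimates, targets)
```

A fixed output-to-speaker order would heavily penalize this nearly perfect separation; PIT instead selects the swapped assignment (1, 0). Because the search enumerates all S! permutations, this exhaustive form is practical only for a small number of speakers S.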
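DC [71-72] trains a network to map every time-frequency bin to a unit-norm D-dimensional embedding, collected as the rows of V (N × D, where N is the number of bins), and compares the resulting pairwise affinities with the ideal ones given by the one-hot speaker-assignment matrix Y (N × C). Written directly, the loss ||VV^T - YY^T||_F^2 needs N × N matrices, but it expands to ||V^T V||_F^2 - 2||V^T Y||_F^2 + ||Y^T Y||_F^2, which involves only D × D, D × C and C × C products. The plain-Python sketch below checks that identity on a toy example; the matrix helpers and the toy values are illustrative assumptions, not code from [71].

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def frob_sq(m):
    """Squared Frobenius norm of a matrix."""
    return sum(x * x for row in m for x in row)


def dc_loss_naive(v, y):
    """||V V^T - Y Y^T||_F^2 using explicit N x N affinity matrices."""
    vvt, yyt = matmul(v, transpose(v)), matmul(y, transpose(y))
    return sum((a - b) ** 2 for ra, rb in zip(vvt, yyt) for a, b in zip(ra, rb))


def dc_loss_low_rank(v, y):
    """Same loss as ||V^T V||^2 - 2 ||V^T Y||^2 + ||Y^T Y||^2, avoiding N x N matrices."""
    vt, yt = transpose(v), transpose(y)
    return frob_sq(matmul(vt, v)) - 2 * frob_sq(matmul(vt, y)) + frob_sq(matmul(yt, y))


# Toy example: 3 time-frequency bins, 2-D embeddings, 2 speakers.
v = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # unit-norm embeddings
y = [[1, 0], [1, 0], [0, 1]]              # bins 0 and 1 belong to speaker 0

print(dc_loss_naive(v, y), dc_loss_low_rank(v, y))  # both approximately 1.6
```

The identity follows from the cyclic property of the trace. Since N is the number of time-frequency bins in an utterance, while D and C are small, the low-rank form is what makes this loss affordable to compute during training.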

References:

[1] Cherry E C. Some experiments on the recognition of speech, with one and with two ears. Journal of the Acoustical Society of America, 1953, 25(5): 975-979. doi: 10.1121/1.1907229

[2] Mesgarani N, Chang E F. Selective cortical representation of attended speaker in multi-talker speech perception. Nature, 2012, 485(7397): 233-236. doi: 10.1038/nature11020

[3] Pannese A, Grandjean D, Frühholz S. Subcortical processing in auditory communication. Hearing Research, 2015, 328: 67-77. doi: 10.1016/j.heares.2015.07.003

[4] Moore B C J. An Introduction to the Psychology of Hearing (Sixth edition). Leiden: Brill, 2013.

[5] Zhao Ling-Yun. Characteristics and Mechanisms of the Processing of Complex Time-frequency Information in Sub-cortical Auditory Pathway [Master thesis], Tsinghua University, China, 2010 (in Chinese). http://cdmd.cnki.com.cn/Article/CDMD-10003-1012036360.htm

[6] Bizley J K, Cohen Y E. The what, where and how of auditory-object perception. Nature Reviews Neuroscience, 2013, 14(10): 693-707. doi: 10.1038/nrn3565

[7] Bregman A S. Auditory Scene Analysis: the Perceptual Organization of Sound. Cambridge: MIT Press, 1990.

[8] O'Sullivan J A, Power A J, Mesgarani N, Rajaram S, Foxe J J, Shinn-Cunningham B G, et al. Attentional selection in a cocktail party environment can be decoded from single-trial EEG. Cerebral Cortex, 2014, 25(7): 1697-1706. http://europepmc.org/abstract/med/24429136

[9] Shamma S A, Elhilali M, Micheyl C. Temporal coherence and attention in auditory scene analysis. Trends in Neurosciences, 2011, 34(3): 114-123. doi: 10.1016/j.tins.2010.11.002

[10] Ayala Y A, Malmierca M S. Stimulus-specific adaptation and deviance detection in the inferior colliculus. Frontiers in Neural Circuits, 2012, 6: Article No. 89

[11] Ulanovsky N, Las L, Farkas D, Nelken I. Multiple time scales of adaptation in auditory cortex neurons. The Journal of Neuroscience, 2004, 24(46): 10440-10453. doi: 10.1523/JNEUROSCI.1905-04.2004

[12] Winkler I, Denham S L, Nelken I. Modeling the auditory scene: predictive regularity representations and perceptual objects. Trends in Cognitive Sciences, 2009, 13(12): 532-540. doi: 10.1016/j.tics.2009.09.003

[13] Leonard M K, Baud M O, Sjerps M J, Chang E F. Perceptual restoration of masked speech in human cortex. Nature Communications, 2016, 7: Article No. 13619. http://www.ncbi.nlm.nih.gov/pubmed/27996973

[14] Gregory R L. Perceptions as hypotheses. Philosophical Transactions of the Royal Society B: Biological Sciences, 1980, 290(1038): 181-197. doi: 10.1098/rstb.1980.0090

[15] Friston K. A theory of cortical responses. Philosophical Transactions of the Royal Society B: Biological Sciences, 2005, 360(1456): 815-836. doi: 10.1098/rstb.2005.1622

[16] Bar M. The proactive brain: using analogies and associations to generate predictions. Trends in Cognitive Sciences, 2007, 11(7): 280-289. doi: 10.1016/j.tics.2007.05.005

[17] Bubic A, Von Cramon D Y, Schubotz R I. Prediction, cognition and the brain. Frontiers in Human Neuroscience, 2010, 4: Article No. 25. http://www.ncbi.nlm.nih.gov/pubmed/20631856

[18] Pearce J M, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychological Review, 1980, 87(6): 532-552. doi: 10.1037/0033-295X.87.6.532

[19] Kruschke J K. Toward a unified model of attention in associative learning. Journal of Mathematical Psychology, 2001, 45(6): 812-863. doi: 10.1006/jmps.2000.1354

[20] Mackintosh N J. A theory of attention: variations in the associability of stimuli with reinforcement. Psychological Review, 1975, 82(4): 276-298. doi: 10.1037/h0076778

[21] Wills A J, Graham S, Koh Z, Mclaren I P L, Rolland M D. Effects of concurrent load on feature- and rule-based generalization in human contingency learning. Journal of Experimental Psychology: Animal Behavior Processes, 2011, 37(3): 308-316. doi: 10.1037/a0023120

[22] Koelewijn T, Bronkhorst A, Theeuwes J. Attention and the multiple stages of multisensory integration: a review of audiovisual studies. Acta Psychologica, 2010, 134(3): 372-384. doi: 10.1016/j.actpsy.2010.03.010

[23] Shimojo S, Shams L. Sensory modalities are not separate modalities: plasticity and interactions. Current Opinion in Neurobiology, 2001, 11(4): 505-509. doi: 10.1016/S0959-4388(00)00241-5

[24] McGurk H, MacDonald J. Hearing lips and seeing voices. Nature, 1976, 264(5588): 746-748. doi: 10.1038/264746a0

[25] Golumbic E Z, Cogan G B, Schroeder C E, Poeppel D. Visual input enhances selective speech envelope tracking in auditory cortex at a "cocktail party". Journal of Neuroscience, 2013, 33(4): 1417-1426. doi: 10.1523/JNEUROSCI.3675-12.2013

[26] Vroomen J, Bertelson P, De Gelder B. The ventriloquist effect does not depend on the direction of automatic visual attention. Perception & Psychophysics, 2001, 63(4): 651-659. http://www.onacademic.com/detail/journal_1000034800664310_3b26.html

[27] Schwartz J L, Berthommier F, Savariaux C. Audio-visual scene analysis: evidence for a "very-early" integration process in audio-visual speech perception. In: Proceedings of the 7th International Conference on Spoken Language Processing. Denver, USA: DBLP, 2002.

[28] Omata K, Mogi K. Fusion and combination in audiovisual integration. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 2008, 464(2090): 319-340. doi: 10.1098/rspa.2007.1910

[29] Busse L, Roberts K C, Crist R E, Weissman D H, Woldorff M G. The spread of attention across modalities and space in a multisensory object. Proceedings of the National Academy of Sciences of the United States of America, 2005, 102(51): 18751-18756. doi: 10.1073/pnas.0507704102

[30] Talsma D, Woldorff M G. Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. Journal of Cognitive Neuroscience, 2005, 17(7): 1098-1114. doi: 10.1162/0898929054475172

[31] Calvert G A, Thesen T. Multisensory integration: methodological approaches and emerging principles in the human brain. Journal of Physiology-Paris, 2004, 98(1-3): 191-205. doi: 10.1016/j.jphysparis.2004.03.018

[32] Gannot S, Vincent E, Markovich-Golan S, Ozerov A. A consolidated perspective on multimicrophone speech enhancement and source separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2017, 25(4): 692-730. doi: 10.1109/TASLP.2016.2647702

[33] Adel H, Souad M, Alaqeeli A, Hamid A. Beamforming techniques for multichannel audio signal separation. International Journal of Digital Content Technology and Its Applications, 2012, 6(20): 659-667. doi: 10.4156/jdcta

[34] Sawada H, Araki S, Mukai R, Makino S. Blind extraction of dominant target sources using ICA and time-frequency masking. IEEE Transactions on Audio, Speech, and Language Processing, 2006, 14(6): 2165-2173. doi: 10.1109/TASL.2006.872599

[35] Chen Z. Single Channel Auditory Source Separation with Neural Network [Ph. D. dissertation], Columbia University, USA, 2017. http://gradworks.umi.com/10/27/10275945.html

[36] Boll S. Suppression of acoustic noise in speech using spectral subtraction. IEEE Transactions on Acoustics, Speech, and Signal Processing, 1979, 27(2): 113-120. doi: 10.1109/TASSP.1979.1163209

[37] Chen J D, Benesty J, Huang Y T, Doclo S. New insights into the noise reduction Wiener filter. IEEE Transactions on Audio, Speech, and Language Processing, 2006, 14(4): 1218-1234. doi: 10.1109/TSA.2005.860851

[38] Loizou P C. Speech Enhancement: Theory and Practice. Boca Raton: CRC Press, Inc., 2007.

[39] Liu Wen-Ju, Nie Shuai, Liang Shan, Zhang Xue-Liang. Deep learning based speech separation technology and its developments. Acta Automatica Sinica, 2016, 42(6): 819-833 (in Chinese). http://www.aas.net.cn/CN/abstract/abstract18873.shtml

[40] Lee D D, Seung H S. Algorithms for non-negative matrix factorization. In: Proceedings of the 13th International Conference on Neural Information Processing Systems (NIPS). Denver, USA: MIT Press, 2000. 535-541

[41] Hoyer P O. Non-negative matrix factorization with sparseness constraints. Journal of Machine Learning Research, 2004, 5: 1457-1469. doi: 10.1007-11427445_19/

[42] Schmidt M N, Olsson R K. Single-channel speech separation using sparse non-negative matrix factorization. In: Proceedings of the 9th International Conference on Spoken Language Processing. Pittsburgh, USA: INTERSPEECH, 2006.

[43] Virtanen T. Monaural sound source separation by nonnegative matrix factorization with temporal continuity and sparseness criteria. IEEE Transactions on Audio, Speech, and Language Processing, 2007, 15(3): 1066-1074. doi: 10.1109/TASL.2006.885253

[44] Chen Z, Ellis D P W. Speech enhancement by sparse, low-rank, and dictionary spectrogram decomposition. In: Proceedings of the 2013 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics. New Paltz, USA: IEEE, 2013. 1-4. http://ieeexplore.ieee.org/document/6701883/

[45] Zhang L J, Chen Z G, Zheng M, He X F. Robust nonnegative matrix factorization. Frontiers of Electrical and Electronic Engineering in China, 2011, 6(2): 192-200. doi: 10.1007/s11460-011-0128-0

[46] Le Roux J, Hershey J R, Weninger F. Deep NMF for speech separation. In: Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Brisbane, Australia: IEEE, 2015. 66-70

[47] Hsu C C, Chi T S, Chien J T. Discriminative layered nonnegative matrix factorization for speech separation. In: Proceedings of the 17th Annual Conference of the International Speech Communication Association (INTERSPEECH 2016). San Francisco, USA: INTERSPEECH, 2016. 560-564

[48] Rouat J. Computational auditory scene analysis: principles, algorithms, and applications (Wang D. and Brown G.J., Eds.; 2006) [Book review]. IEEE Transactions on Neural Networks, 2008, 19(1): Article No. 199

[49] Szabó B T, Denham S L, Winkler I. Computational models of auditory scene analysis: a review. Frontiers in Neuroscience, 2016, 10: Article No. 524

[50] Barniv D, Nelken I. Auditory streaming as an online classification process with evidence accumulation. PLoS One, 2015, 10(12): Article No. e0144788

[51] Llinás R R. Intrinsic electrical properties of mammalian neurons and CNS function: a historical perspective. Frontiers in Cellular Neuroscience, 2014, 8: Article No. 320

[52] Wang D L, Chang P. An oscillatory correlation model of auditory streaming. Cognitive Neurodynamics, 2008, 2(1): 7-19. doi: 10.1007/s11571-007-9035-8

[53] Mill R W, Böhm T M, Bendixen A, Winkler I, Denham S L. Modelling the emergence and dynamics of perceptual organisation in auditory streaming. PLoS Computational Biology, 2013, 9(3): Article No. e1002925

[54] Elhilali M, Shamma S A. A cocktail party with a cortical twist: how cortical mechanisms contribute to sound segregation. Journal of the Acoustical Society of America, 2008, 124(6): 3751-3771. doi: 10.1121/1.3001672

[55] Ma L. Auditory Streaming: Behavior, Physiology, and Modeling [Ph. D. dissertation], University of Maryland, USA, 2011

[56] Krishnan L, Elhilali M, Shamma S. Segregating complex sound sources through temporal coherence. PLoS Computational Biology, 2014, 10(12): Article No. e1003985

[57] Wang Y X, Wang D L. Towards scaling up classification-based speech separation. IEEE Transactions on Audio, Speech, and Language Processing, 2013, 21(7): 1381-1390. doi: 10.1109/TASL.2013.2250961

[58] Wang Yan-Nan. Speaker-Independent Single-Channel Speech Separation Based on Deep Learning [Ph. D. dissertation], University of Science and Technology of China, China, 2017 (in Chinese). http://www.wanfangdata.com.cn/details/detail.do?_type=degree&id=Y3226643

[59] Narayanan A, Wang D L. Ideal ratio mask estimation using deep neural networks for robust speech recognition. In: Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Vancouver, Canada: IEEE, 2013. 7092-7096

[60] Huang P S, Kim M, Hasegawa-Johnson M, Smaragdis P. Deep learning for monaural speech separation. In: Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Florence, Italy: IEEE, 2014. 1562-1566

[61] Huang P S, Kim M, Hasegawa-Johnson M, Smaragdis P. Joint optimization of masks and deep recurrent neural networks for monaural source separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2015, 23(12): 2136-2147. doi: 10.1109/TASLP.2015.2468583

[62] Du J, Tu Y H, Xu Y, Dai L R, Lee C H. Speech separation of a target speaker based on deep neural networks. In: Proceedings of the 12th International Conference on Signal Processing (ICSP). Hangzhou, China: IEEE, 2014. 473-477

[63] Tu Y H, Du J, Xu Y, Dai L R, Lee C H. Speech separation based on improved deep neural networks with dual outputs of speech features for both target and interfering speakers. In: Proceedings of the 9th International Symposium on Chinese Spoken Language Processing. Singapore: IEEE, 2014. 250-254

[64] Du J, Tu Y H, Dai L R, Lee C H. A regression approach to single-channel speech separation via high-resolution deep neural networks. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2016, 24(8): 1424-1437. doi: 10.1109/TASLP.2016.2558822

[65] Weninger F, Hershey J R, Le Roux J, Schuller B. Discriminatively trained recurrent neural networks for single-channel speech separation. In: Proceedings of the 2014 IEEE Global Conference on Signal and Information Processing (GlobalSIP). Atlanta, USA: IEEE, 2014. 577-581

[66] Wang D L, Chen J T. Supervised speech separation based on deep learning: an overview. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2018, 26(10): 1702-1726. doi: 10.1109/TASLP.2018.2842159

[67] Zhang X L, Wang D L. A deep ensemble learning method for monaural speech separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2016, 24(5): 967-977. doi: 10.1109/TASLP.2016.2536478

[68] Sprechmann P, Bruna J, LeCun Y. Audio source separation with discriminative scattering networks. In: Proceedings of the 12th International Conference on Latent Variable Analysis and Signal Separation. Liberec, Czech Republic: Springer, 2015. 259-267

[69] Vincent E, Gribonval R, Févotte C. Performance measurement in blind audio source separation. IEEE Transactions on Audio, Speech, and Language Processing, 2006, 14(4): 1462-1469. doi: 10.1109/TSA.2005.858005

[70] Chen Z, Luo Y, Mesgarani N. Deep attractor network for single-microphone speaker separation. In: Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). New Orleans, USA: IEEE, 2017. 246-250

[71] Hershey J R, Chen Z, Le Roux J, Watanabe S. Deep clustering: discriminative embeddings for segmentation and separation. In: Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Shanghai, China: IEEE, 2016. 31-35

[72] Isik Y, Le Roux J, Chen Z, Watanabe S, Hershey J R. Single-channel multi-speaker separation using deep clustering. In: Proceedings of the 2016 INTERSPEECH. Broadway, USA: Mitsubishi Electric Research Laboratories, Inc., 2016. 545-549

[73] Yu D, Kolbaek M, Tan Z H, Jensen J. Permutation invariant training of deep models for speaker-independent multi-talker speech separation. In: Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). New Orleans, USA: IEEE, 2017. 241-245

[74] Kolbaek M, Yu D, Tan Z H, Jensen J. Multitalker speech separation with utterance-level permutation invariant training of deep recurrent neural networks. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2017, 25(10): 1901-1913. doi: 10.1109/TASLP.2017.2726762

[75] Kuhl P K. Human adults and human infants show a "perceptual magnet effect" for the prototypes of speech categories, monkeys do not. Perception & Psychophysics, 1991, 50(2): 93-107

[76] Gabbay A, Ephrat A, Halperin T, Peleg S. Seeing through noise: speaker separation and enhancement using visually-derived speech. arXiv preprint arXiv: 1708.06767, 2017.

[77] Rajaram S, Nefian A V, Huang T S. Bayesian separation of audio-visual speech sources. In: Proceedings of the 2004 International Conference on Acoustics, Speech, and Signal Processing (ICASSP). Montreal, Canada: IEEE, 2004. 657-660

[78] Sodoyer D, Girin L, Jutten C, Schwartz J L. Developing an audio-visual speech source separation algorithm. Speech Communication, 2004, 44(1-4): 113-125. doi: 10.1016/j.specom.2004.10.002

[79] Dansereau R M. Co-channel audiovisual speech separation using spectral matching constraints. In: Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP). Montreal, Canada: IEEE, 2004. 645-648

[80] Wang W W, Cosker D, Hicks Y, Sanei S, Chambers J. Video assisted speech source separation. In: Proceedings of the 2005 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP). Philadelphia, USA: IEEE, 2005. 425-428

[81] Rivet B, Girin L, Jutten C. Mixing audiovisual speech processing and blind source separation for the extraction of speech signals from convolutive mixtures. IEEE Transactions on Audio, Speech, and Language Processing, 2007, 15(1): 96-108. doi: 10.1109/TASL.2006.872619

[82] Barzelay Z, Schechner Y Y. Harmony in motion. In: Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition. Minneapolis, USA: IEEE, 2007. 4358-4366

[83] Casanovas A L, Monaci G, Vandergheynst P, Gribonval R. Blind audiovisual source separation based on sparse redundant representations. IEEE Transactions on Multimedia, 2010, 12(5): 358-371. doi: 10.1109/TMM.2010.2050650

[84] Hou J C, Wang S S, Lai Y H, Tsao Y, Chang H W, Wang H M. Audio-visual speech enhancement based on multimodal deep convolutional neural network. arXiv preprint arXiv: 1703.10893, 2017

[85] Ephrat A, Halperin T, Peleg S. Improved speech reconstruction from silent video. In: Proceedings of the 2017 International Conference on Computer Vision Workshops (ICCV). Venice, Italy: IEEE, 2017. 455-462

[86] Gabbay A, Shamir A, Peleg S. Visual speech enhancement. In: Proceedings of the 2018 INTERSPEECH. Hyderabad, India: INTERSPEECH, 2018. 1170-1174

[87] DeSa V R. Learning classification with unlabeled data. In: Proceedings of the 6th International Conference on Neural Information Processing Systems (NIPS). Denver, USA: Morgan Kaufmann Publishers Inc., 1993. 112-119

[88] Owens A, Efros A A. Audio-visual scene analysis with self-supervised multisensory features. arXiv preprint arXiv: 1804.03641, 2018

[89] Ephrat A, Mosseri I, Lang O, Dekel T, Wilson K W, Hassidim A, Freeman W T, Rubinstein M. Looking to listen at the cocktail party: a speaker-independent audio-visual model for speech separation. ACM Transactions on Graphics, 2018, 37(4): Article No. 112

[90] Senocak A, Oh T H, Kim J, Yang M H, Kweon I S. Learning to localize sound source in visual scenes. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 4358-4366

[91] Arandjelovic R, Zisserman A. Objects that sound. arXiv preprint arXiv: 1712.06651, 2017.

[92] Zhao H, Gan C, Rouditchenko A, Vondrick C, McDermott J, Torralba A. The sound of pixels. In: Proceedings of the 15th European Conference on Computer Vision (ECCV). Munich, Germany: Springer, 2018.

[93] Ciocca V. The auditory organization of complex sounds. Frontiers in Bioscience, 2008, 13(13): 148-169. doi: 10.2741/2666

[94] Elhilali M. Modeling the cocktail party problem. In: The Auditory System at the Cocktail Party. Cham: Springer, 2017.

[95] Xu J M, Shi J, Liu G C, Chen X Y, Xu B. Modeling attention and memory for auditory selection in a Cocktail Party environment. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI). New Orleans, USA: AAAI, 2018.

[96] Shi J, Xu J M, Liu G C, Xu B. Listen, think and listen again: capturing top-down auditory attention for speaker-independent speech separation. In: Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI). Stockholm, Sweden: IJCAI, 2018. 4353-4360

[97] Kristjansson T T, Hershey J R, Olsen P A, Rennie S J, Gopinath R. Super-human multi-talker speech recognition: the IBM 2006 speech separation challenge system. In: Proceedings of the 9th International Conference on Spoken Language Processing. Pittsburgh, USA: INTERSPEECH, 2006.

[98] Qian Y M, Chang X K, Yu D. Single-channel multi-talker speech recognition with permutation invariant training. Speech Communication, 2018, 104: 1-11. doi: 10.1016/j.specom.2018.09.003

[99] Qian Y M, Weng C, Chang X K, Wang S, Yu D. Past review, current progress, and challenges ahead on the cocktail party problem. Frontiers of Information Technology & Electronic Engineering, 2018, 19(1): 40-63. http://d.old.wanfangdata.com.cn/Periodical/zjdxxbc-e201801007

[100] Seki H, Hori T, Watanabe S, Le Roux J, Hershey J R. A purely end-to-end system for multi-speaker speech recognition. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL). Melbourne, Australia: ACL, 2018. 2620-2630

[101] Settle S, Le Roux J, Hori T, Watanabe S, Hershey J R. End-to-end multi-speaker speech recognition. In: Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Calgary, Canada: IEEE, 2018. 4819-4823

[102] Weng C, Yu D, Seltzer M L, Droppo J. Deep neural networks for single-channel multi-talker speech recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2015, 23(10): 1670-1679. doi: 10.1109/TASLP.2015.2444659

[103] Yu D, Chang X K, Qian Y M. Recognizing multi-talker speech with permutation invariant training. In: Proceedings of INTERSPEECH. Stockholm, Sweden: INTERSPEECH, 2017. 2456-2460

[104] Chang X K, Qian Y M, Yu D. Adaptive permutation invariant training with auxiliary information for monaural multi-talker speech recognition. In: Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Calgary, Canada: IEEE, 2018. 5974-5978

[105] Chen Z H, Droppo J, Li J Y, Xiong W. Progressive joint modeling in unsupervised single-channel overlapped speech recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2018, 26(1): 184-196. doi: 10.1109/TASLP.2017.2765834

[106] Tan T, Qian Y M, Yu D. Knowledge transfer in permutation invariant training for single-channel multi-talker speech recognition. In: Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Calgary, Canada: IEEE, 2018. 5714-5718

[107] Chang X K, Qian Y M, Yu D. Monaural multi-talker speech recognition with attention mechanism and gated convolutional networks. In: Proceedings of INTERSPEECH. Hyderabad, India: INTERSPEECH, 2018. 1586-1590

[108] Chang X K, Qian Y M, Yu K, Watanabe S. End-to-end monaural multi-speaker ASR system without pretraining. arXiv preprint arXiv: 1811.02062, 2018.
