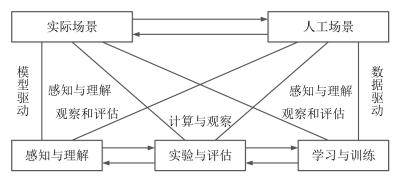

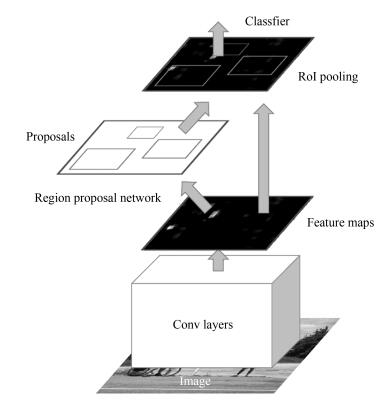

Abstract: In vision computing, an algorithm's adaptability to complex environments often determines whether it can work in the real world, and this issue has become a focus of recent research. The ACP theory, which comprises artificial societies, computational experiments, and parallel execution, plays an essential role in the modeling and control of complex systems. This paper introduces the ACP theory into the vision computing field and proposes parallel vision, together with its basic framework and key techniques. In parallel vision, photo-realistic artificial scenes are used to model and represent complex, challenging real scenes; computational experiments are used to train and evaluate a variety of visual models; and parallel execution is used to optimize the vision system online and achieve intelligent perception and understanding of complex environments. This virtual/real interactive approach to vision computing integrates computer graphics, virtual reality, machine learning, and knowledge automation, among other technologies, and offers an effective and natural path toward practical vision systems.

Key words:

- Parallel vision

- complex environments

- ACP theory

- data-driven

- virtual/real interaction

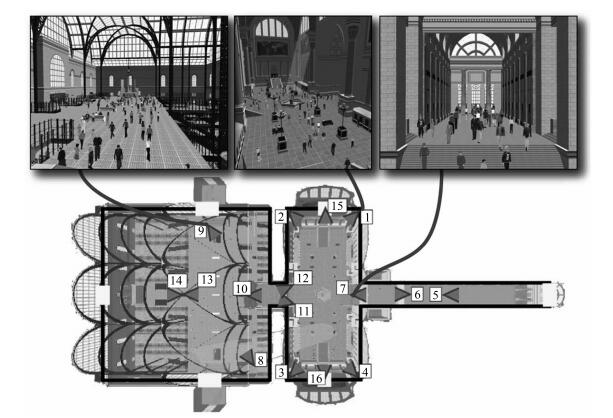

Fig. 1 Plan view of the virtual train station[26], revealing the concourses and train tracks (left), the main waiting room (middle), and the shopping arcade (right). An example camera network comprising 16 virtual cameras is illustrated.

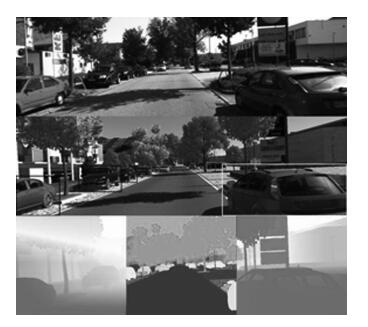

Fig. 2 The virtual KITTI dataset[32]. Top: a video frame from the KITTI multi-object tracking benchmark. Middle: the corresponding synthetic frame from the virtual KITTI dataset, overlaid with the automatically generated ground-truth bounding boxes of the tracked objects. Bottom: automatically generated ground truth for optical flow (left), semantic segmentation (middle), and depth (right).

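Table 1 Components of artificial outdoor scenes

| Scene element | Content |
| --- | --- |
| Static objects | Buildings, sky, roads, sidewalks, fences, plants, poles, traffic signs, road markings, etc. |
| Dynamic objects | Motor vehicles (cars, trucks, buses), bicycles, motorcycles, pedestrians, etc. |
| Season | Spring, summer, autumn, winter |
| Weather | Sunny, overcast, rain, snow, fog, haze, etc. |
| Light sources | Sun, street lamps, vehicle headlights, etc. |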
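The scene elements in Table 1 can be read as the axes of a scene configuration. The following is a minimal sketch, not the authors' implementation, of how the season, weather, and light-source entries might be combined to enumerate candidate artificial scenes. The names `SceneConfig` and `enumerate_scene_configs` are hypothetical, and the value lists cover only the items named explicitly in the table.

```python
from dataclasses import dataclass
from itertools import product

# Values copied from Table 1; the table ends some rows with "etc.",
# so these tuples are illustrative rather than exhaustive.
SEASONS = ("spring", "summer", "autumn", "winter")
WEATHER = ("sunny", "overcast", "rain", "snow", "fog", "haze")
LIGHT_SOURCES = ("sun", "street_lamp", "headlight")


@dataclass(frozen=True)
class SceneConfig:
    """One hypothetical combination of environmental conditions."""
    season: str
    weather: str
    light_source: str


def enumerate_scene_configs():
    """Return every season/weather/light-source combination."""
    return [
        SceneConfig(season, weather, light)
        for season, weather, light in product(SEASONS, WEATHER, LIGHT_SOURCES)
    ]


configs = enumerate_scene_configs()
print(len(configs))  # 4 seasons * 6 weather types * 3 light sources = 72
```

A full Cartesian product also yields physically implausible combinations (for example, snow in summer); a practical scene generator would presumably filter or weight them, which this sketch leaves out.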

[1] Wang Fei-Yue. Parallel system methods for management and control of complex systems. Control and Decision, 2004, 19(5):485-489, 514 http://www.cnki.com.cn/Article/CJFDTOTAL-KZYC200405001.htm

[2] Wang F Y. Parallel control and management for intelligent transportation systems: concepts, architectures, and applications. IEEE Transactions on Intelligent Transportation Systems, 2010, 11(3):630-638 doi: 10.1109/TITS.2010.2060218

[3] Wang Fei-Yue. Parallel control: a method for data-driven and computational control. Acta Automatica Sinica, 2013, 39(4):293-302 http://www.aas.net.cn/CN/Y2013/V39/I4/293

[4] Wang K F, Liu Y Q, Gou C, Wang F Y. A multi-view learning approach to foreground detection for traffic surveillance applications. IEEE Transactions on Vehicular Technology, 2016, 65(6):4144-4158 doi: 10.1109/TVT.2015.2509465

[5] Wang K F, Yao Y J. Video-based vehicle detection approach with data-driven adaptive neuro-fuzzy networks. International Journal of Pattern Recognition and Artificial Intelligence, 2015, 29(7):1555015 doi: 10.1142/S0218001415550150

[6] Gou C, Wang K F, Yao Y J, Li Z X. Vehicle license plate recognition based on extremal regions and restricted Boltzmann machines. IEEE Transactions on Intelligent Transportation Systems, 2016, 17(4):1096-1107 doi: 10.1109/TITS.2015.2496545

[7] Liu Y Q, Wang K F, Shen D Y. Visual tracking based on dynamic coupled conditional random field model. IEEE Transactions on Intelligent Transportation Systems, 2016, 17(3):822-833 doi: 10.1109/TITS.2015.2488287

[8] Goyette N, Jodoin P M, Porikli F, Konrad J, Ishwar P. A novel video dataset for change detection benchmarking. IEEE Transactions on Image Processing, 2014, 23(11):4663-4679 doi: 10.1109/TIP.2014.2346013

[9] Felzenszwalb P F, Girshick R B, McAllester D, Ramanan D. Object detection with discriminatively trained part-based models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(9):1627-1645 doi: 10.1109/TPAMI.2009.167

[10] INRIA person dataset [Online], available: http://pascal.inrialpes.fr/data/human/, September 26, 2016.

[11] Caltech pedestrian detection benchmark [Online], available: http://www.vision.caltech.edu/Image_Datasets/Caltech-Pedestrians/, September 26, 2016.

[12] The KITTI vision benchmark suite [Online], available: http://www.cvlibs.net/datasets/kitti/, September 26, 2016.

[13] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems 25 (NIPS 2012). Nevada: MIT Press, 2012.

[14] LeCun Y, Bengio Y, Hinton G. Deep learning. Nature, 2015, 521(7553):436-444 doi: 10.1038/nature14539

[15] Ren S Q, He K M, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, to be published http://es.slideshare.net/xavigiro/faster-rcnn-towards-realtime-object-detection-with-region-proposal-networks

[16] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE, 2016. 770-778

[17] ImageNet [Online], available: http://www.image-net.org/, September 26, 2016.

[18] The PASCAL visual object classes homepage [Online], available: http://host.robots.ox.ac.uk/pascal/VOC/, September 26, 2016.

[19] COCO: Common objects in context [Online], available: http://mscoco.org/, September 26, 2016.

[20] Torralba A, Efros A A. Unbiased look at dataset bias. In: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Colorado, USA: IEEE, 2011. 1521-1528

[21] Bainbridge W S. The scientific research potential of virtual worlds. Science, 2007, 317(5837):472-476 doi: 10.1126/science.1146930

[22] Miao Q H, Zhu F H, Lv Y S, Cheng C J, Chen C, Qiu X G. A game-engine-based platform for modeling and computing artificial transportation systems. IEEE Transactions on Intelligent Transportation Systems, 2011, 12(2):343-353 doi: 10.1109/TITS.2010.2103400

[23] Sewall J, van den Berg J, Lin M, Manocha D. Virtualized traffic: reconstructing traffic flows from discrete spatiotemporal data. IEEE Transactions on Visualization and Computer Graphics, 2011, 17(1):26-37 doi: 10.1109/TVCG.2010.27

[24] Prendinger H, Gajananan K, Zaki A B, Fares A, Molenaar R, Urbano D, van Lint H, Gomaa W. Tokyo Virtual Living Lab: designing smart cities based on the 3D Internet. IEEE Internet Computing, 2013, 17(6):30-38 doi: 10.1109/MIC.4236

[25] Karamouzas I, Overmars M. Simulating and evaluating the local behavior of small pedestrian groups. IEEE Transactions on Visualization and Computer Graphics, 2012, 18(3):394-406 doi: 10.1109/TVCG.2011.133

[26] Qureshi F, Terzopoulos D. Smart camera networks in virtual reality. Proceedings of the IEEE, 2008, 96(10):1640-1656 doi: 10.1109/JPROC.2008.928932

[27] Starzyk W, Qureshi F Z. Software laboratory for camera networks research. IEEE Journal on Emerging and Selected Topics in Circuits and Systems, 2013, 3(2):284-293 doi: 10.1109/JETCAS.2013.2256827

[28] Sun B C, Saenko K. From virtual to reality: fast adaptation of virtual object detectors to real domains. In: Proceedings of the 2014 British Machine Vision Conference. Jubilee Campus: BMVC, 2014.

[29] Hattori H, Boddeti V N, Kitani K, Kanade T. Learning scene-specific pedestrian detectors without real data. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, Massachusetts: IEEE, 2015. 3819-3827

[30] Vázquez D, López A M, Marín J, Ponsa D, Gerónimo D. Virtual and real world adaptation for pedestrian detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(4):797-809 doi: 10.1109/TPAMI.2013.163

[31] Xu J L, Vázquez D, López A M, Marín J, Ponsa D. Learning a part-based pedestrian detector in a virtual world. IEEE Transactions on Intelligent Transportation Systems, 2014, 15(5):2121-2131 doi: 10.1109/TITS.2014.2310138

[32] Gaidon A, Wang Q, Cabon Y, Vig E. Virtual worlds as proxy for multi-object tracking analysis. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE, 2016. 4340-4349

[33] Handa A, Pătrăucean V, Badrinarayanan V, Stent S, Cipolla R. Understanding real world indoor scenes with synthetic data. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE, 2016. 4077-4085

[34] Ros G, Sellart L, Materzynska J, Vazquez D, López A M. The SYNTHIA dataset: a large collection of synthetic images for semantic segmentation of urban scenes. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE, 2016. 3234-3243

[35] Movshovitz-Attias Y, Kanade T, Sheikh Y. How useful is photo-realistic rendering for visual learning? arXiv:1603.08152, 2016. https://www.researchgate.net/publication/301837317_How_useful_is_photo-realistic_rendering_for_visual_learning

[36] Haines T S F, Xiang T. Background subtraction with Dirichlet process mixture models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(4):670-683 doi: 10.1109/TPAMI.2013.239

[37] Sobral A, Vacavant A. A comprehensive review of background subtraction algorithms evaluated with synthetic and real videos. Computer Vision and Image Understanding, 2014, 122:4-21 doi: 10.1016/j.cviu.2013.12.005

[38] Morris B T, Trivedi M M. Trajectory learning for activity understanding: unsupervised, multilevel, and long-term adaptive approach. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2011, 33(11):2287-2301 doi: 10.1109/TPAMI.2011.64

[39] Butler D J, Wulff J, Stanley G B, Black M J. A naturalistic open source movie for optical flow evaluation. In: Proceedings of the 12th European Conference on Computer Vision (ECCV). Berlin Heidelberg: Springer-Verlag, 2012.

[40] Kaneva B, Torralba A, Freeman W T. Evaluation of image features using a photorealistic virtual world. In: Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV). Barcelona, Spain: IEEE, 2011. 2282-2289

[41] Taylor G R, Chosak A J, Brewer P C. OVVV: using virtual worlds to design and evaluate surveillance systems. In: Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Minneapolis, MN, USA: IEEE, 2007. 1-8

[42] Zitnick C L, Vedantam R, Parikh D. Adopting abstract images for semantic scene understanding. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(4):627-638 doi: 10.1109/TPAMI.2014.2366143

[43] Veeravasarapu V S R, Hota R N, Rothkopf C, Visvanathan R. Model validation for vision systems via graphics simulation. arXiv:1512.01401, 2015. http://arxiv.org/abs/1512.01401

[44] Veeravasarapu V S R, Hota R N, Rothkopf C, Visvanathan R. Simulations for validation of vision systems. arXiv:1512.01030, 2015. http://arxiv.org/abs/1512.01030?context=cs.cv

[45] Qingdao "Integrated Multi-Mode" Parallel Transportation Operation Demo Project. Notice from National Development and Reform Commission [Online], available: http://www.ndrc.gov.cn/zcfb/zcfbtz/201608/t20160805_814065.html, August 5, 2016

[46] Yuan G, Zhang X, Yao Q M, Wang K F. Hierarchical and modular surveillance systems in ITS. IEEE Intelligent Systems, 2011, 26(5):10-15 doi: 10.1109/MIS.2011.88

[47] Jones N. Computer science: the learning machines. Nature, 2014, 505(7482):146-148 doi: 10.1038/505146a

[48] Silver D, Huang A, Maddison C J, Guez A, Sifre L, van den Driessche G, Schrittwieser J, Antonoglou I, Panneershelvam V, Lanctot M, Dieleman S, Grewe D, Nham J, Kalchbrenner N, Sutskever I, Lillicrap T, Leach M, Kavukcuoglu K, Graepel T, Hassabis D. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587):484-489 doi: 10.1038/nature16961

[49] Wang F Y, Zhang J J, Zheng X H, Wang X, Yuan Y, Dai X X, Zhang J, Yang L Q. Where does AlphaGo go: from Church-Turing Thesis to AlphaGo Thesis and beyond. IEEE/CAA Journal of Automatica Sinica, 2016, 3(2):113-120 http://www.ieee-jas.org/CN/abstract/abstract145.shtml

[50] Huang W L, Wen D, Geng J, Zheng N N. Task-specific performance evaluation of UGVs: case studies at the IVFC. IEEE Transactions on Intelligent Transportation Systems, 2014, 15(5):1969-1979 doi: 10.1109/TITS.2014.2308540

[51] Li L, Huang W L, Liu Y, Zheng N N, Wang F Y. Intelligence testing for autonomous vehicles: a new approach. IEEE Transactions on Intelligent Vehicles, 2016, to be published
