-

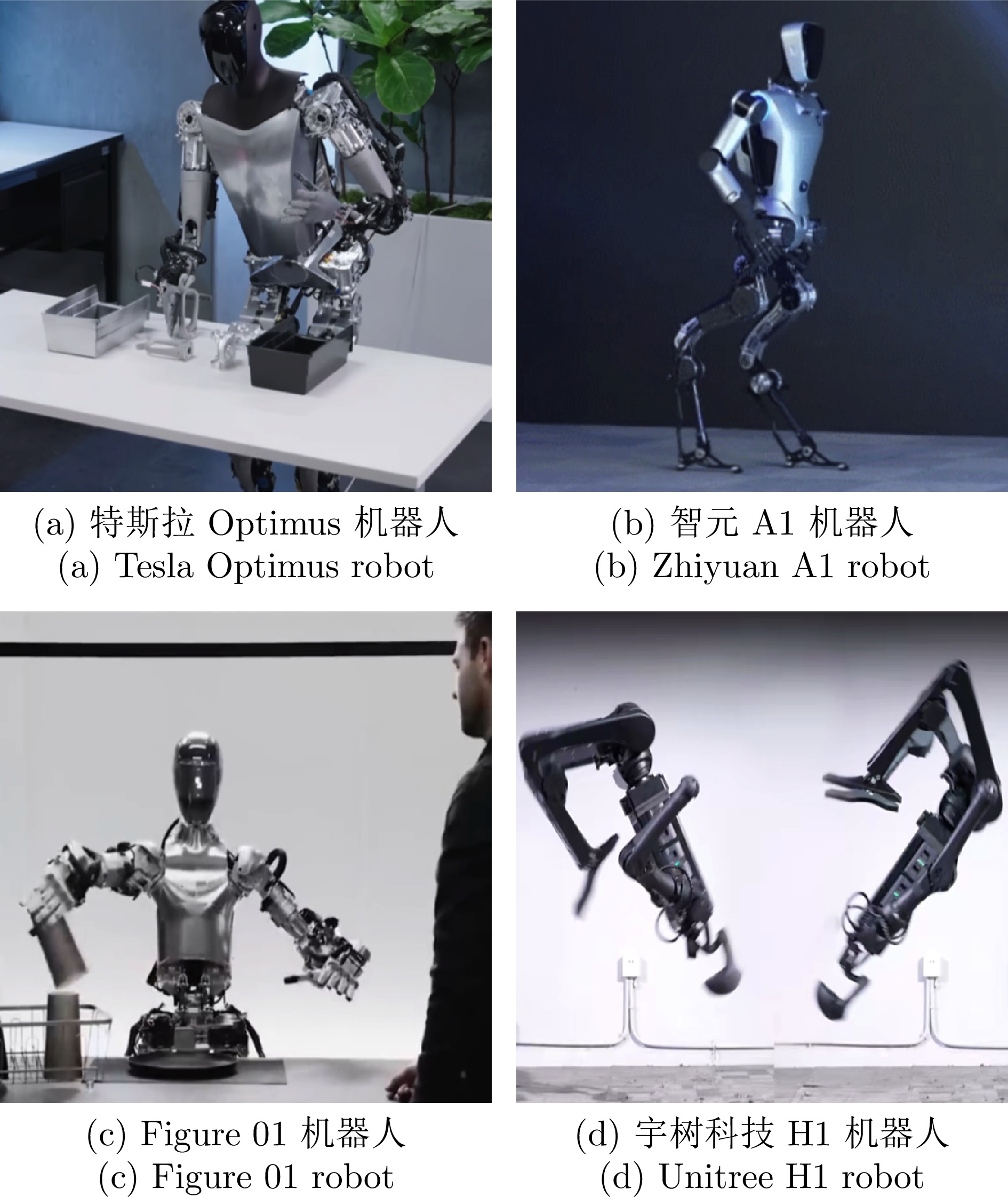

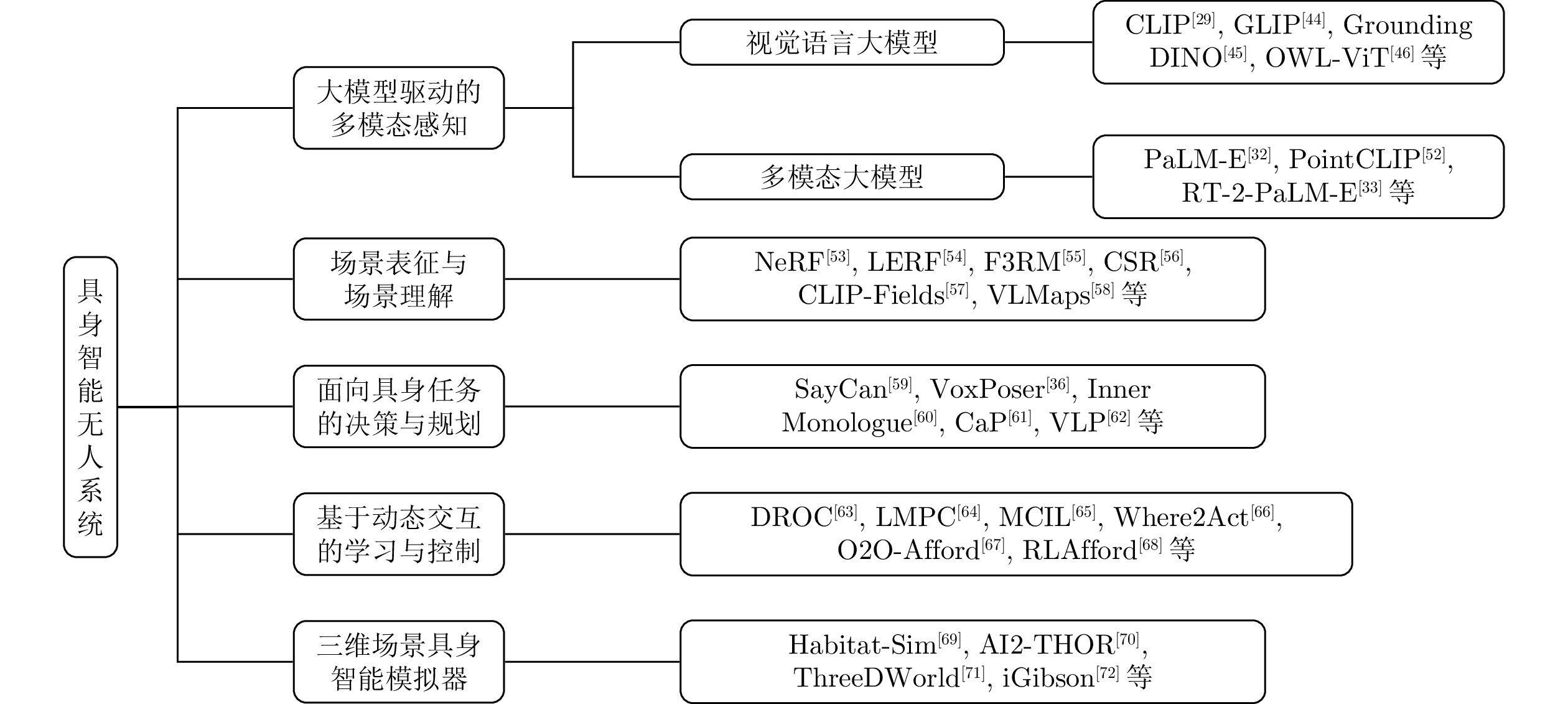

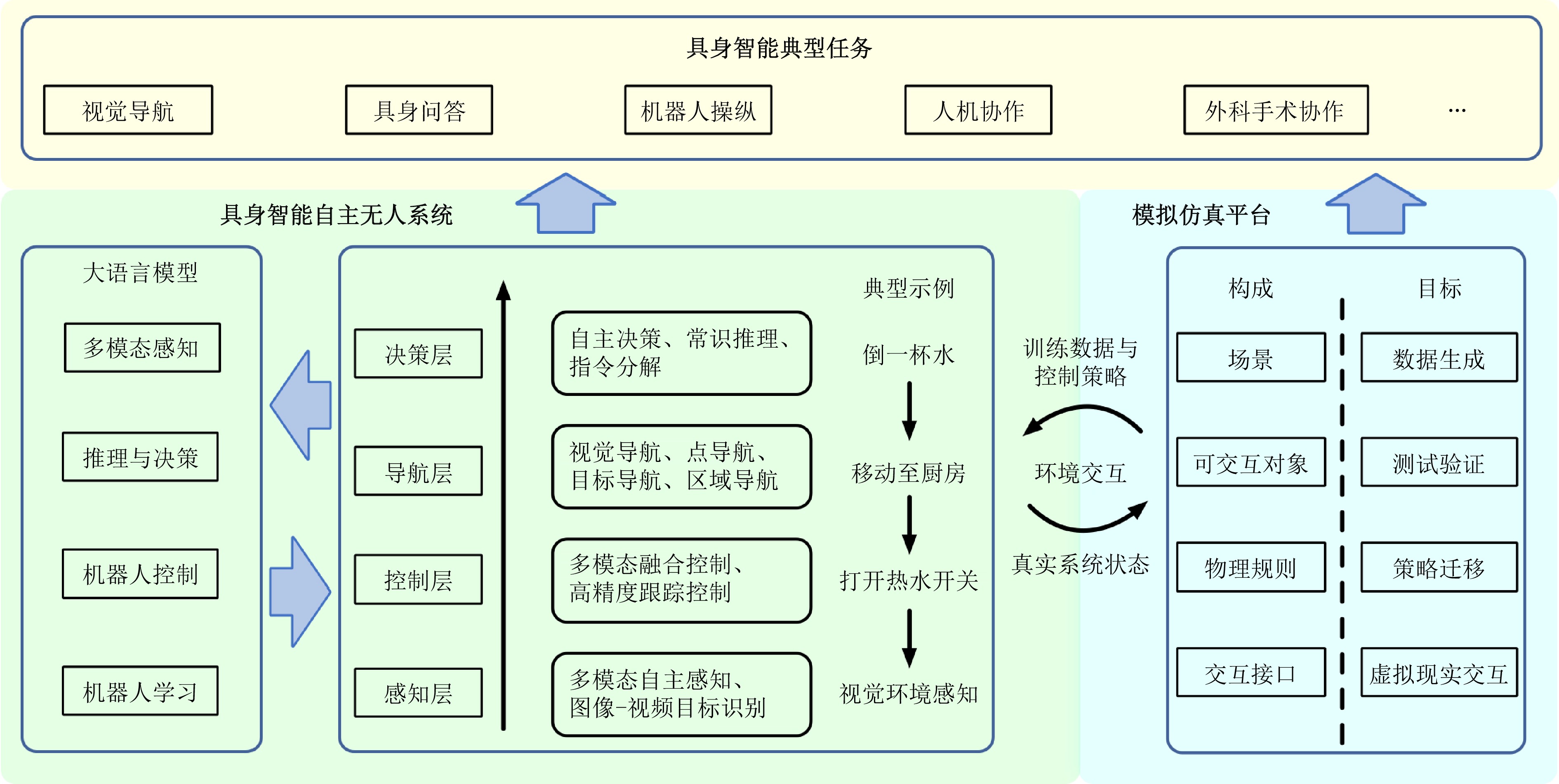

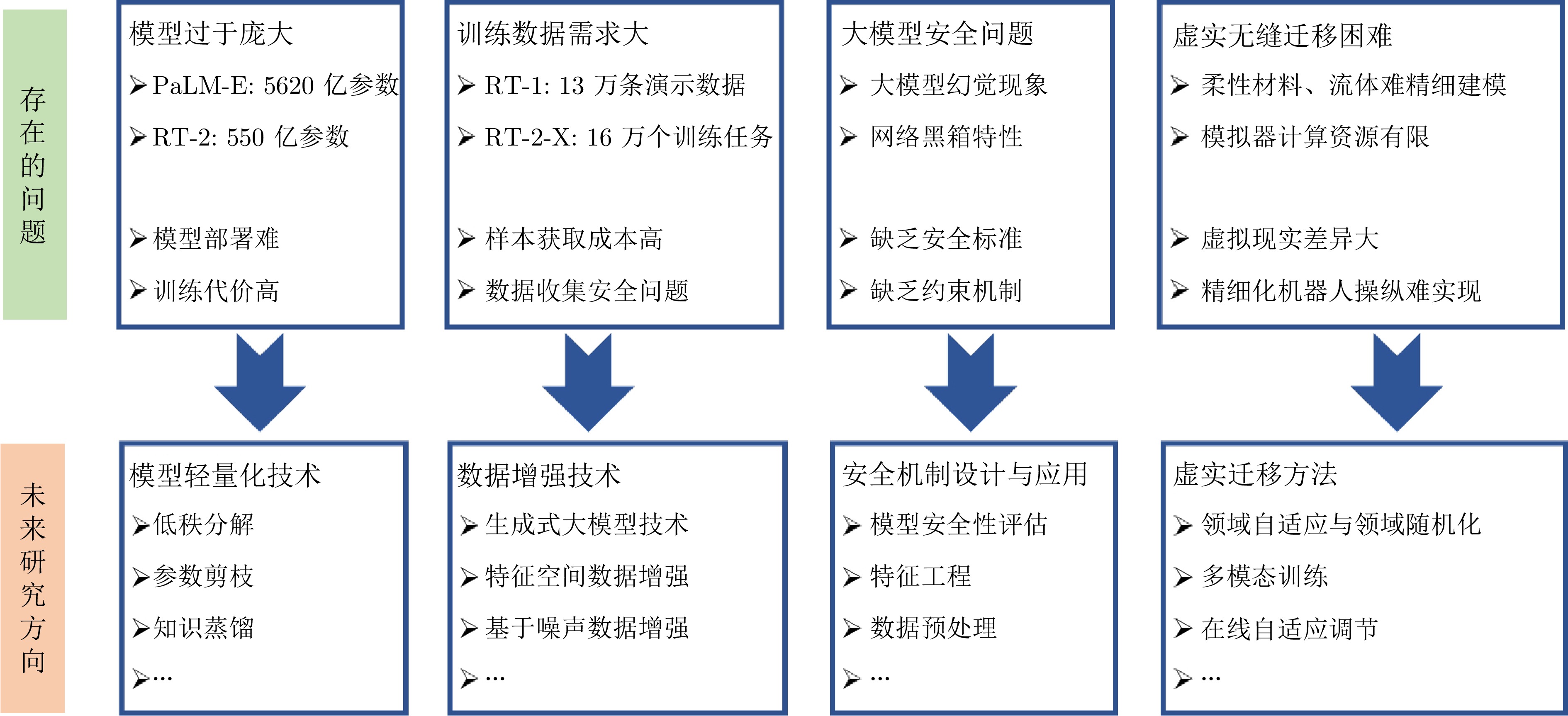

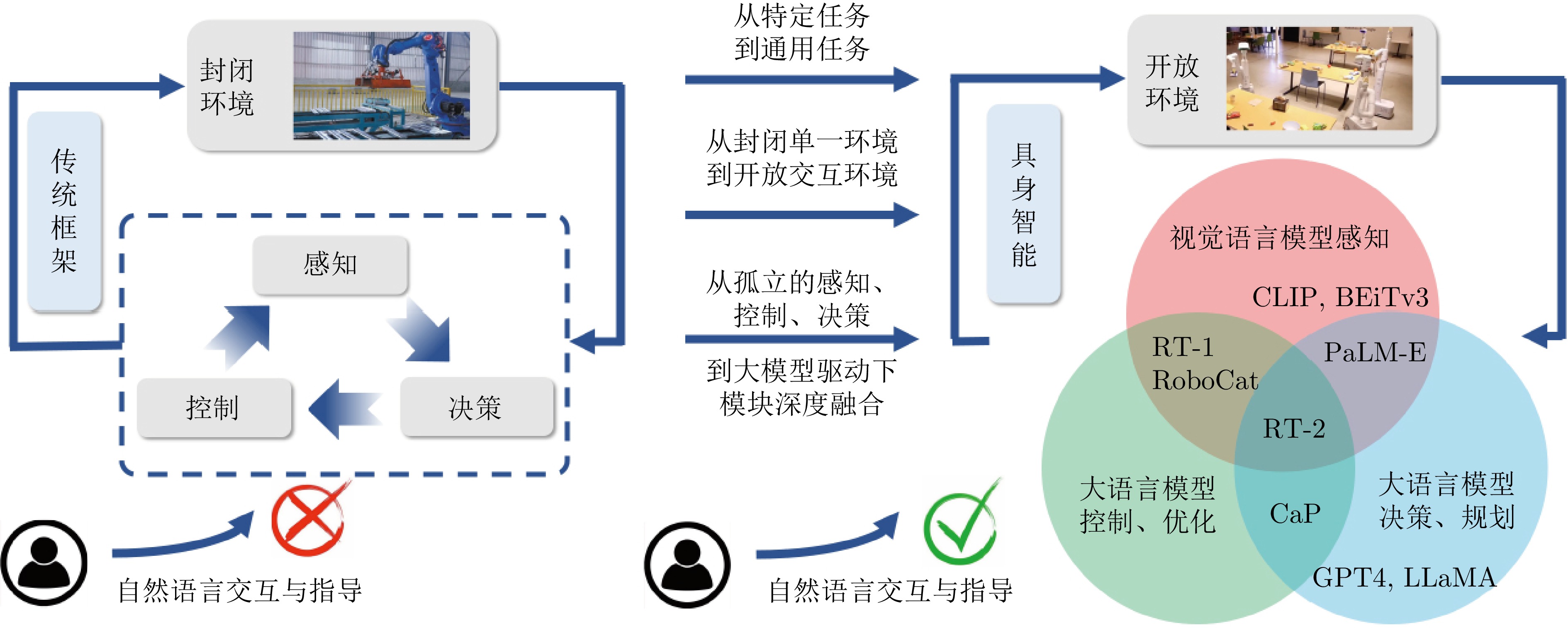

摘要: 自主无人系统是一类具有自主感知和决策能力的智能系统, 在国防安全、航空航天、高性能机器人等方面有着广泛的应用. 近年来, 基于Transformer架构的各类大模型快速革新, 极大地推动了自主无人系统的发展. 目前, 自主无人系统正迎来一场以“具身智能”为核心的新一代技术革命. 大模型需要借助无人系统的物理实体来实现“具身化”, 无人系统可以利用大模型技术来实现“智能化”. 本文阐述具身智能自主无人系统的发展现状, 详细探讨包含大模型驱动的多模态感知、面向具身任务的推理与决策、基于动态交互的机器人学习与控制、三维场景具身模拟器等具身智能领域的关键技术. 最后, 指出目前具身智能无人系统所面临的挑战, 并展望未来的研究方向.Abstract: Autonomous unmanned systems are intelligent systems with autonomous perception and decision-making capabilities, widely applied in areas such as defense security, aerospace, and high-performance robotics. In recent years, the rapid advancements of various large models based on the Transformer architecture have significantly accelerated the development of autonomous unmanned systems. Currently, these systems are undergoing a new technological revolution centered on “embodied intelligence”. Large models require the physical embodiment of unmanned systems to achieve “embodiment”, while unmanned systems can leverage large model technologies to achieve “intelligence”. This paper outlines the current state of development in embodied intelligent autonomous unmanned systems and provides a detailed discussion of key technologies in the field of embodied intelligence, including large-model-driven multimodal perception, reasoning and decision-making for embodied tasks, robot learning and control based on dynamic interaction, and 3D embodied simulators. Finally, the paper identifies existing challenges in embodied intelligence unmanned systems and explores future research directions.

-

表 1 具身智能模型架构

Table 1 Embodied intelligence model architecture

名称 模型参数 响应频率(Hz) 模型架构说明 SayCan[34] — — SayCan利用价值函数表示各个技能的可行性, 并由语言模型进行技能评分, 能够兼顾任务需求和机器人技能的可行性 RT-1[31] 350万 3 RT-1采用13万条机器人演示数据的数据集完成模仿学习训练, 能以97%的成功率执行超过700个语音指令任务 RoboCat[35] 12亿 10 ~ 20 RoboCat构建了基于目标图像的可迁移机器人操纵框架, 能够实现多个操纵任务的零样本迁移 PaLM-E[32] 5620 亿5 ~ 6 PaLM-E构建了当时最大的具身多模态大模型, 将机器人传感器模态融入语言模型, 建立了端到端的训练框架 RT-2[33] 550亿 1 ~ 3 RT-2首次构建了视觉−语言−动作的模型, 在多个具身任务上实现了多阶段的语义推理 VoxPoser[36] — — VoxPoser利用语言模型生成关于当前环境的价值地图, 并基于价值地图进行动作轨迹规划, 实现了高自由度的环境交互 RT-2-X[37] 550亿 1 ~ 3 RT-2-X构建了提供标准化数据格式、交互环境和模型的数据集, 包含527种技能和16万个任务 -

[1] Gupta A, Savarese S, Ganguli S, Li F F. Embodied intelligence via learning and evolution. Nature Communications, 2021, 12(1): Article No. 5721 doi: 10.1038/s41467-021-25874-z [2] 孙长银, 穆朝絮, 柳文章, 王晓. 自主无人系统的具身认知智能框架. 科技导报, 2024, 42(12): 157−166Sun Chang-Yin, Mu Chao-Xu, Liu Wen-Zhang, Wang Xiao. Embodied cognitive intelligence framework of unmanned autonomous systems. Science & Technology Review, 2024, 42(12): 157−166 [3] Wiener N. Cybernetics or Control and Communication in the Animal and the Machine. Cambridge: MIT Press, 1961. [4] Turing A M. Computing Machinery and Intelligence. Oxford: Oxford University Press, 1950. [5] 王耀南, 安果维, 王传成, 莫洋, 缪志强, 曾凯. 智能无人系统技术应用与发展趋势. 中国舰船研究, 2022, 17(5): 9−26Wang Yao-Nan, An Guo-Wei, Wang Chuan-Cheng, Mo Yang, Miao Zhi-Qiang, Zeng Kai. Technology application and development trend of intelligent unmanned system. Chinese Journal of Ship Research, 2022, 17(5): 9−26 [6] Kaufmann E, Bauersfeld L, Loquercio A, Müller M, Koltun V, Scaramuzza D. Champion-level drone racing using deep reinforcement learning. Nature, 2023, 620(7976): 982−987 doi: 10.1038/s41586-023-06419-4 [7] Feng S, Sun H W, Yan X T, Zhu H J, Zou Z X, Shen S Y, et al. Dense reinforcement learning for safety validation of autonomous vehicles. Nature, 2023, 615(7953): 620−627 doi: 10.1038/s41586-023-05732-2 [8] 张鹏飞, 程文铮, 米江勇, 和烨龙, 李亚文, 王力金. 反无人机蜂群关键技术研究现状及展望. 火炮发射与控制学报, DOI: 10.19323/j.issn.1673-6524.202311017Zhang Peng-Fei, Cheng Wen-Zheng, Mi Jiang-Yong, He Ye-Long, Li Ya-Wen, Wang Li-Jin. Research status and prospect of key technologies for counter UAV swarm. Journal of Gun Launch & Control, DOI: 10.19323/j.issn.1673-6524.202311017 [9] 张琳. 美军反无人机系统技术新解. 坦克装甲车辆, 2024(11): 22−29Zhang Lin. New insights into U.S. military anti-drone system technology. Tank & Armoured Vehicle, 2024(11): 22−29 [10] 董昭荣, 赵民, 姜利, 王智. 异构无人系统集群自主协同关键技术综述. 遥测遥控, 2024, 45(4): 1−11 doi: 10.12347/j.ycyk.20240314001Dong Zhao-Rong, Zhao Min, Jiang Li, Wang Zhi. Review on key technologies of autonomous collaboration in heterogeneous unmanned system cluster. Journal of Telemetry, Tracking and Command, 2024, 45(4): 1−11 doi: 10.12347/j.ycyk.20240314001 [11] 江碧涛, 温广辉, 周佳玲, 郑德智. 智能无人集群系统跨域协同技术研究现状与展望. 中国工程科学, 2024, 26(1): 117−126 doi: 10.15302/J-SSCAE-2024.01.015Jiang Bi-Tao, Wen Guang-Hui, Zhou Jia-Ling, Zheng De-Zhi. Cross-domain cooperative technology of intelligent unmanned swarm systems: Current status and prospects. Strategic Study of CAE, 2024, 26(1): 117−126 doi: 10.15302/J-SSCAE-2024.01.015 [12] Firoozi R, Tucker J, Tian S, Majumdar A, Sun J K, Liu W Y, et al. Foundation models in robotics: Applications, challenges, and the future. arXiv: 2312.07843, 2023. [13] 兰沣卜, 赵文博, 朱凯, 张涛. 基于具身智能的移动操作机器人系统发展研究. 中国工程科学, 2024, 26(1): 139−148 doi: 10.15302/J-SSCAE-2024.01.010Lan Feng-Bo, Zhao Wen-Bo, Zhu Kai, Zhang Tao. Development of mobile manipulator robot system with embodied intelligence. Strategic Study of CAE, 2024, 26(1): 139−148 doi: 10.15302/J-SSCAE-2024.01.010 [14] 刘华平, 郭迪, 孙富春, 张新钰. 基于形态的具身智能研究: 历史回顾与前沿进展. 自动化学报, 2023, 49(6): 1131−1154Liu Hua-Ping, Guo Di, Sun Fu-Chun, Zhang Xin-Yu. Morphology-based embodied intelligence: Historical retrospect and research progress. Acta Automatica Sinica, 2023, 49(6): 1131−1154 [15] 张钹, 朱军, 苏航. 迈向第三代人工智能. 中国科学: 信息科学, 2020, 50(9): 1281−1302 doi: 10.1360/SSI-2020-0204Zhang Ba, Zhu Jun, Su Hang. Toward the third generation of artificial intelligence. SCIENTIA SINICA Informationis, 2020, 50(9): 1281−1302 doi: 10.1360/SSI-2020-0204 [16] Radford A, Narasimhan K, Salimans T, Sutskever I. Improving language understanding by generative pre-training [Online], available: https://gwern.net/doc/www/s3-us-west-2.amazonaws.com/d73fdc5ffa8627bce44dcda2fc012da638ffb158.pdf, January 4, 2025 [17] Devlin J, Chang M W, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minnesota, USA: ACL, 2018. 4171−4186 [18] Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I. Language models are unsupervised multitask learners [Online], available: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf, January 4, 2025 [19] Brown T B, Mann B, Ryder N, Subbiah M, Kaplan J, Dhariwal P, et al. Language models are few-shot learners. arXiv: 2005.14165, 2020. [20] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: ACM, 2017. 6000−6010 [21] Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X H, Unterthiner T, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv: 2010.11929, 2021. [22] He K M, Chen X L, Xie S N, Li Y H, Dollár P, Girshick R, et al. Masked autoencoders are scalable vision learners. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 15979−15988 [23] Liu Z, Lin Y T, Cao Y, Hu H, Wei Y X, Zhang Z. Swin Transformer: Hierarchical vision Transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 9992−10002 [24] Touvron H, Lavril T, Izacard G, Martinet X, Lachaux M A, Lacroix T, et al. LLaMA: Open and efficient foundation language models. arXiv: 2302.13971, 2023. [25] Kim W, Son B, Kim I. ViLT: Vision-and-language Transformer without convolution or region supervision. arXiv: 2102.03334, 2021. [26] Li J N, Li D X, Xiong C M, Hoi S C H. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: ICML, 2022. 12888−12900 [27] Yu J H, Wang Z R, Vasudevan V, Yeung L, Seyedhosseini M, Wu Y H. CoCa: Contrastive captioners are image-text foundation models. arXiv: 2205.01917, 2022. [28] Bao H B, Wang W H, Dong L, Wei F R. VL-BEiT: Generative vision-language pretraining. arXiv: 2206.01127, 2022. [29] Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. arXiv: 2103.00020, 2021. [30] Ouyang L, Wu J, Jiang X, Almeida D, Wainwright C L, Mishkin P, et al. Training language models to follow instructions with human feedback. arXiv: 2203.02155, 2022. [31] Brohan A, Brown N, Carbajal J, Chebotar Y, Dabis J, Finn C, et al. RT-1: Robotics Transformer for real-world control at scale. arXiv: 2212.06817, 2022. [32] Driess D, Xia F, Sajjadi M S M, Lynch C, Chowdhery A, Ichter B, et al. PaLM-E: An embodied multimodal language model. In: Proceedings of the 40th International Conference on Machine Learning. Honolulu, USA: ICML, 2023. 8469−8488 [33] Brohan A, Brown N, Carbajal J, Chebotar Y, Chen X, Choromanski K, et al. RT-2: Vision-language-action models transfer web knowledge to robotic control. arXiv: 2307.15818, 2023. [34] Ichter B, Brohan A, Chebotar Y, Finn C, Hausman K, Herzog A, et al. Do as I can, not as I say: Grounding language in robotic affordances. In: Proceedings of the 6th Conference on Robot Learning. Auckland, New Zealand: PMLR, 2022. 287−318 [35] Bousmalis K, Vezzani G, Rao D, Devin C, Lee A X, Bauza M, et al. RoboCat: A self-improving foundation agent for robotic manipulation. arXiv: 2306.11706, 2023. [36] Huang W L, Wang C, Zhang R H, Li Y Z, Wu J J, Li F F. VoxPoser: Composable 3D value maps for robotic manipulation with language models. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023. 540−562 [37] O'Neill A, Rehman A, Gupta A, Maddukuri A, Gupta A, Padalkar A, et al. Open X-embodiment: Robotic learning datasets and RT-X models. arXiv: 2310.08864, 2024. [38] Zeng F L, Gan W S, Wang Y H, Liu N, Yu P S. Large language models for robotics: A survey. arXiv: 2311.07226, 2023. [39] Bommasani R, Hudson D A, Adeli E, Altman E, Arora S, von Arx S, et al. On the opportunities and risks of foundation models. arXiv: 2108.07258, 2021. [40] Wang W H, Bao H B, Dong L, Bjorck J, Peng Z L, Liu Q, et al. Image as a foreign language: BEiT pretraining for all vision and vision-language tasks. arXiv: 2208.10442, 2022. [41] Bao H B, Wang W H, Dong L, Liu Q, Mohammed O K, Aggarwal K, et al. VLMo: Unified vision-language pre-training with mixture-of-modality-experts. arXiv: 2111.02358, 2022. [42] Chen F L, Zhang D Z, Han M L, Chen X Y, Shi J, Xu S, et al. VLP: A survey on vision-language pre-training. Machine Intelligence Research, 2023, 20(1): 38−56 doi: 10.1007/s11633-022-1369-5 [43] Peng F, Yang X S, Xiao L H, Wang Y W, Xu C S. SgVA-CLIP: Semantic-guided visual adapting of vision-language models for few-shot image classification. IEEE Transactions on Multimedia, 2024, 26: 3469−3480 doi: 10.1109/TMM.2023.3311646 [44] Li L H, Zhang P C, Zhang H T, Yang J W, Li C Y, Zhong Y W, et al. Grounded language-image pre-training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 10955−10965 [45] Liu S L, Zeng Z Y, Ren T H, Li F, Zhang H, Yang J, et al. Grounding DINO: Marrying DINO with grounded pre-training for open-set object detection. arXiv: 2303.05499, 2023. [46] Minderer M, Gritsenko A A, Stone A, Neumann M, Weissenborn D, Dosovitskiy A, et al. Simple open-vocabulary object detection. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 728−755 [47] Xu J R, de Mello S, Liu S F, Byeon W, Breuel T, Kautz J, et al. GroupViT: Semantic segmentation emerges from text supervision. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 18113−18123 [48] Li B Y, Weinberger K Q, Belongie S J, Koltun V, Ranftl R. Language-driven semantic segmentation. arXiv: 2201.03546, 2022. [49] Ghiasi G, Gu X Y, Cui Y, Lin T Y. Scaling open-vocabulary image segmentation with image-level labels. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 540−557 [50] Zhou C, Loy C C, Dai B. Extract free dense labels from clip. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 696−712 [51] Kirillov A, Mintun E, Ravi N, Mao H Z, Rolland C, Gustafson L, et al. Segment anything. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 3992−4003 [52] Wu Z R, Song S R, Khosla A, Yu F, Zhang L G, Tang X O, et al. 3D ShapeNets: A deep representation for volumetric shapes. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 1912−1920 [53] Kerr J, Kim C M, Goldberg K, Kanazawa A, Tancik M. LERF: Language embedded radiance fields. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 19672−19682 [54] Shen W, Yang G, Yu A L, Wong J, Kaelbling L P, Isola P. Distilled feature fields enable few-shot language-guided manipulation. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023. 405−424 [55] Gadre S Y, Ehsani K, Song S R, Mottaghi R. Continuous scene representations for embodied AI. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 14829−14839 [56] Shafiullah N M, Paxton C, Pinto L, Chintala S, Szlam A. CLIP-fields: Weakly supervised semantic fields for robotic memory. arXiv: 2210.05663, 2022. [57] Huang C G, Mees O, Zeng A, Burgard W. Visual language maps for robot navigation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). London, UK: IEEE, 2023. 10608−10615 [58] Gan Z, Li L J, Li C Y, Wang L J, Liu Z C, Gao J F. Vision-language pre-training: Basics, recent advances, and future trends. Foundations and Trends® in Computer Graphics and Vision, 2022, 14(3−4): 163−352 [59] Huang W L, Xia F, Xiao T, Chan H, Liang J, Florence P, et al. Inner monologue: Embodied reasoning through planning with language models. In: Proceedings of the 6th Conference on Robot Learning. Auckland, New Zealand: PMLR, 2022. 1769−1782 [60] Sun Y W, Zhang K, Sun C Y. Model-based transfer reinforcement learning based on graphical model representations. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(2): 1035−1048 doi: 10.1109/TNNLS.2021.3107375 [61] Hao S, Gu Y, Ma H D, Hong J, Wang Z, Wang D, et al. Reasoning with language model is planning with world model. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Singapore: ACL, 2023. 8154−8173 [62] Zha L H, Cui Y C, Lin L H, Kwon M, Arenas M G, Zeng A, et al. Distilling and retrieving generalizable knowledge for robot manipulation via language corrections. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Yokohama, Japan: IEEE, 2024. 15172−15179 [63] Hassanin M, Khan S, Tahtali M. Visual affordance and function understanding: A survey. ACM Computing Surveys, 2022, 54(3): Article No. 47 [64] Luo H C, Zhai W, Zhang J, Cao Y, Tao D C. Learning visual affordance grounding from demonstration videos. IEEE Transactions on Neural Networks and Learning Systems, 2024, 35(11): 16857−16871 doi: 10.1109/TNNLS.2023.3298638 [65] Mo K C, Guibas L, Mukadam M, Gupta A, Tulsiani S. Where2Act: From pixels to actions for articulated 3D objects. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 6793−6803 [66] Geng Y R, An B S, Geng H R, Chen Y P, Yang Y D, Dong H. RLAfford: End-to-end affordance learning for robotic manipulation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). London, UK: IEEE, 2023. 5880−5886 [67] Kolve E, Mottaghi R, Han W, VanderBilt E, Weihs L, Herrasti A, et al. AI2-THOR: An interactive 3D environment for visual AI. arXiv: 1712.05474, 2017. [68] Gan C, Schwartz J, Alter S, Mrowca D, Schrimpf M, Traer J, et al. ThreeDWorld: A platform for interactive multi-modal physical simulation. arXiv: 2007.04954, 2020. [69] Deitke M, VanderBilt E, Herrasti A, Weihs L, Salvador J, Ehsani K, et al. ProcTHOR: Large-scale embodied AI using procedural generation. arXiv: 2206.06994, 2022. [70] Anderson P, Wu Q, Teney D, Bruce J, Johnson M, Sünderhauf N, et al. Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 3674−3683 [71] Wu Q, Wu C J, Zhu Y X, Joo J. Communicative learning with natural gestures for embodied navigation agents with human-in-the-scene. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Prague, Czech Republic: IEEE, 2021. 4095−4102 [72] Duan J F, Yu S, Tan H L, Zhu H Y, Tan C. A survey of embodied AI: From simulators to research tasks. IEEE Transactions on Emerging Topics in Computational Intelligence, 2022, 6(2): 230−244 doi: 10.1109/TETCI.2022.3141105 [73] Shah D, Osinski B, Levine S, Levine S. LM-Nav: Robotic navigation with large pre-trained models of language, vision, and action. In: Proceedings of the 6th Conference on Robot Learning. Auckland, New Zealand: PMLR, 2022. 492−504 [74] Gadre S Y, Wortsman M, Ilharco G, Schmidt L, Song S R. CoWs on pasture: Baselines and benchmarks for language-driven zero-shot object navigation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 23171−23181 [75] Carion N, Massa F, Synnaeve G, Usunier N, Kirillov A, Zagoruyko S. End-to-end object detection with Transformers. In: Proceedings of the 16th European Conference on Computer Vision. Glasgow, UK: Springer, 2020. 213−229 [76] Ren S Q, He K M, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. In: Proceedings of the 28th International Conference on Neural Information Processing Systems. Montreal, Canada: ACM, 2015. 91−99 [77] Jiang P Y, Ergu D, Liu F Y, Cai Y, Ma B. A review of Yolo algorithm developments. Procedia Computer Science, 2022, 199: 1066−1073 doi: 10.1016/j.procs.2022.01.135 [78] Cheng H K, Alexander G S. XMem: Long-term video object segmentation with an Atkinson-Shiffrin memory model. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 640−658 [79] Zhu X Y, Zhang R R, He B W, Guo Z Y, Zeng Z Y, Qin Z P, et al. PointCLIP V2: Prompting CLIP and GPT for powerful 3D open-world learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 2639−2650 [80] Muzahid A A M, Wan W G, Sohel F, Wu L Y, Hou L. CurveNet: Curvature-based multitask learning deep networks for 3D object recognition. IEEE/CAA Journal of Automatica Sinica, 2021, 8(6): 1177−1187 doi: 10.1109/JAS.2020.1003324 [81] Xue L, Gao M F, Xing C, Martín-Martín R, Wu J J, Xiong C M, et al. ULIP: Learning a unified representation of language, images, and point clouds for 3D understanding. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 1179−1189 [82] Qi C R, Yi L, Su H, Guibas L J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: ACM, 2017. 5105−5114 [83] Ma X, Qin C, You H X, Ran H X, Fu Y. Rethinking network design and local geometry in point cloud: A simple residual MLP framework. arXiv: 2202.07123, 2022. [84] Mildenhall B, Srinivasan P P, Tancik M, Barron J T, Ramamoorthi R, Ng R. NeRF: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 2022, 65(1): 99−106 doi: 10.1145/3503250 [85] Zeng A, Attarian M, Ichter B, Choromanski K M, Wong A, Welker S, et al. Socratic models: Composing zero-shot multimodal reasoning with language. arXiv: 2204.00598, 2022. [86] Li B Z, Nye M, Andreas J. Implicit representations of meaning in neural language models. arXiv: 2106.00737, 2021. [87] Huang W L, Abbeel P, Pathak D, Mordatch I. Language models as zero-shot planners: Extracting actionable knowledge for embodied agents. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 9118−9147 [88] Liu Y H, Ott M, Goyal N, Du J F, Joshi M, Chen D Q, et al. RoBERTa: A robustly optimized BERT pretraining approach. arXiv: 1907.11692, 2019. [89] Liang J, Huang W L, Xia F, Xu P, Hausman K, Ichter B, et al. Code as policies: Language model programs for embodied control. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). London, UK: IEEE, 2023. 9493−9500 [90] Du Y L, Yang M, Florence P, Xia F, Wahid A, Ichter B, et al. Video language planning. arXiv: 2310.10625, 2023. [91] Liang J, Xia F, Yu W H, Zeng A, Arenas M G, Attarian M, et al. Learning to learn faster from human feedback with language model predictive control. arXiv: 2402.11450, 2024. [92] Lynch C, Sermanet P. Language conditioned imitation learning over unstructured data. arXiv: 2005.07648, 2020. [93] Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Proceedings of the 18th International Conference on Medical Image Computing and Computer-assisted Intervention—MICCAI 2015. Munich, Germany: Springer, 2015. 234−241 [94] Mo K C, Qin Y Z, Xiang F B, Su H, Guibas L J. O2O-Afford: Annotation-free large-scale object-object affordance learning. In: Proceedings of the 5th Conference on Robot Learning. London, UK: PMLR, 2021. 1666−1677 [95] Savva M, Kadian A, Maksymets O, Zhao Y L, Wijmans E, Jain B, et al. Habitat: A platform for embodied AI research. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, South Korea: IEEE, 2019. 9338−9346 [96] Xia F, Shen W B, Li C S, Kasimbeg P, Tchapmi M E, Toshev A, et al. Interactive Gibson benchmark: A benchmark for interactive navigation in cluttered environments. IEEE Robotics and Automation Letters, 2020, 5(2): 713−720 doi: 10.1109/LRA.2020.2965078 [97] Anderson P, Chang A, Chaplot D S, Dosovitskiy A, Gupta S, Koltun V, et al. On evaluation of embodied navigation agents. arXiv: 1807.06757, 2018. [98] Paul S, Roy-Chowdhury A K, Cherian A. AVLEN: Audio-visual-language embodied navigation in 3D environments. arXiv: 2210.07940, 2022. [99] Tan S N, Xiang W L, Liu H P, Guo D, Sun F C. Multi-agent embodied question answering in interactive environments. In: Proceedings of the 16th European Conference on Computer Vision. Glasgow, UK: Springer, 2020. 663−678 [100] Majumdar A, Aggarwal G, Devnani B, Hoffman J, Batra D. ZSON: Zero-shot object-goal navigation using multimodal goal embeddings. arXiv: 2206.12403, 2023. [101] Zhou G Z, Hong Y C, Wu Q. NavGPT: Explicit reasoning in vision-and-language navigation with large language models. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI, 2024. 7641−7649 [102] Shah D, Eysenbach B, Kahn G, Rhinehart N, Levine S. ViNG: Learning open-world navigation with visual goals. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Xi'an, China: IEEE, 2021. 13215−13222 [103] Wen G H, Zheng W X, Wan Y. Distributed robust optimization for networked agent systems with unknown nonlinearities. IEEE Transactions on Automatic Control, 2023, 68(9): 5230−5244 doi: 10.1109/TAC.2022.3216965 -

下载:

下载: