
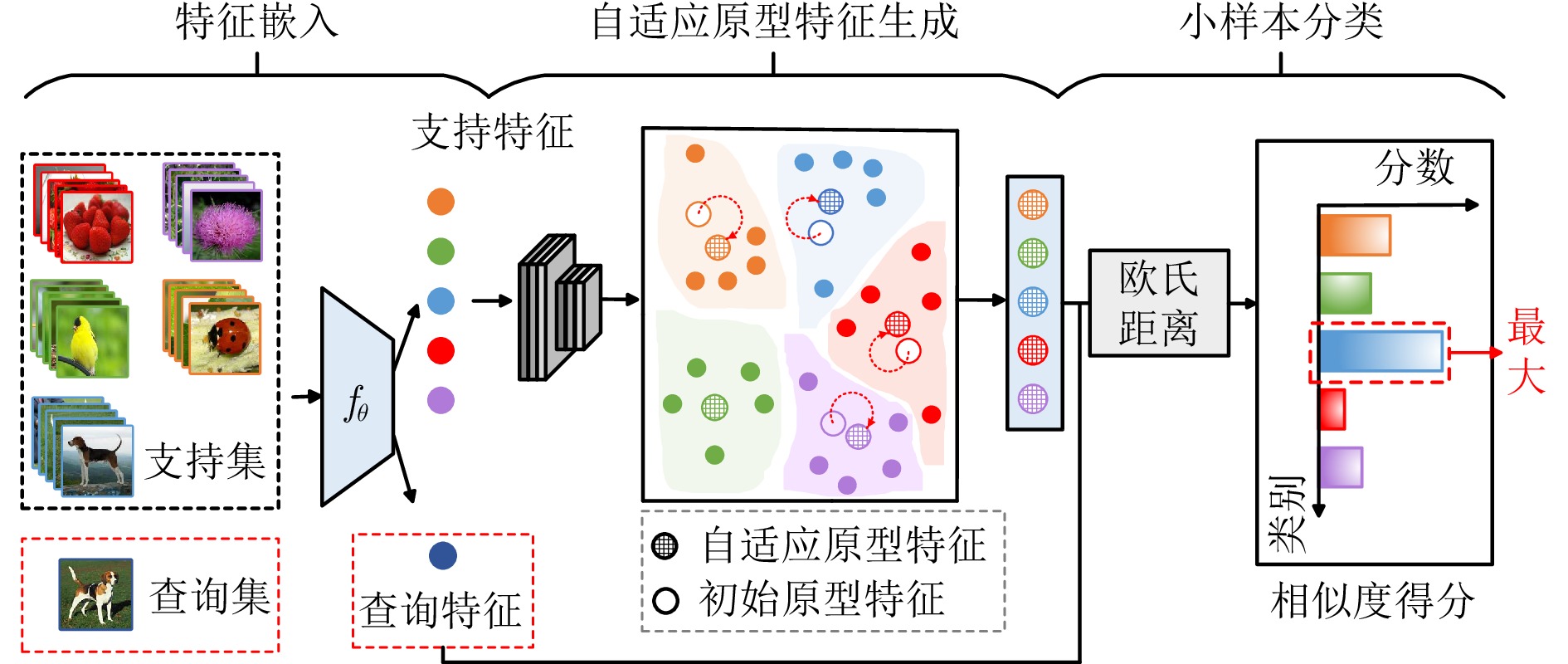

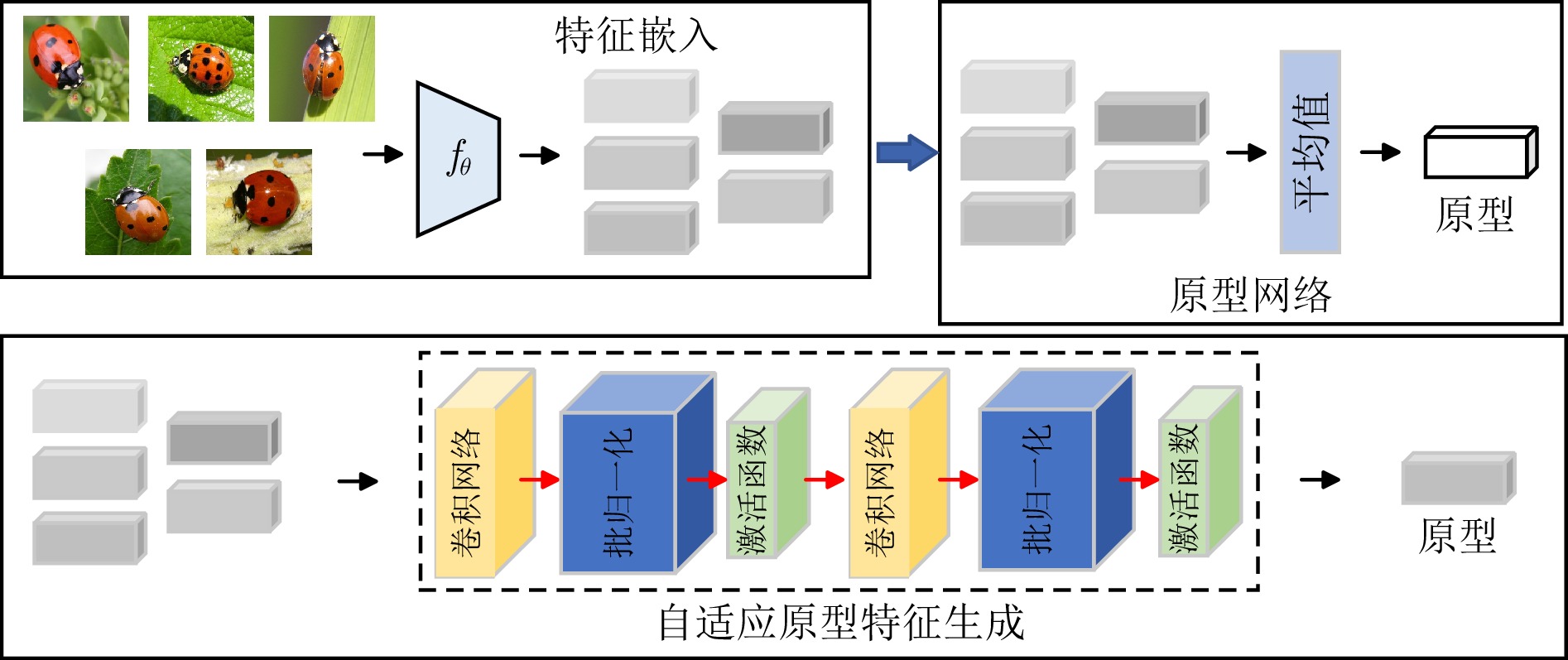

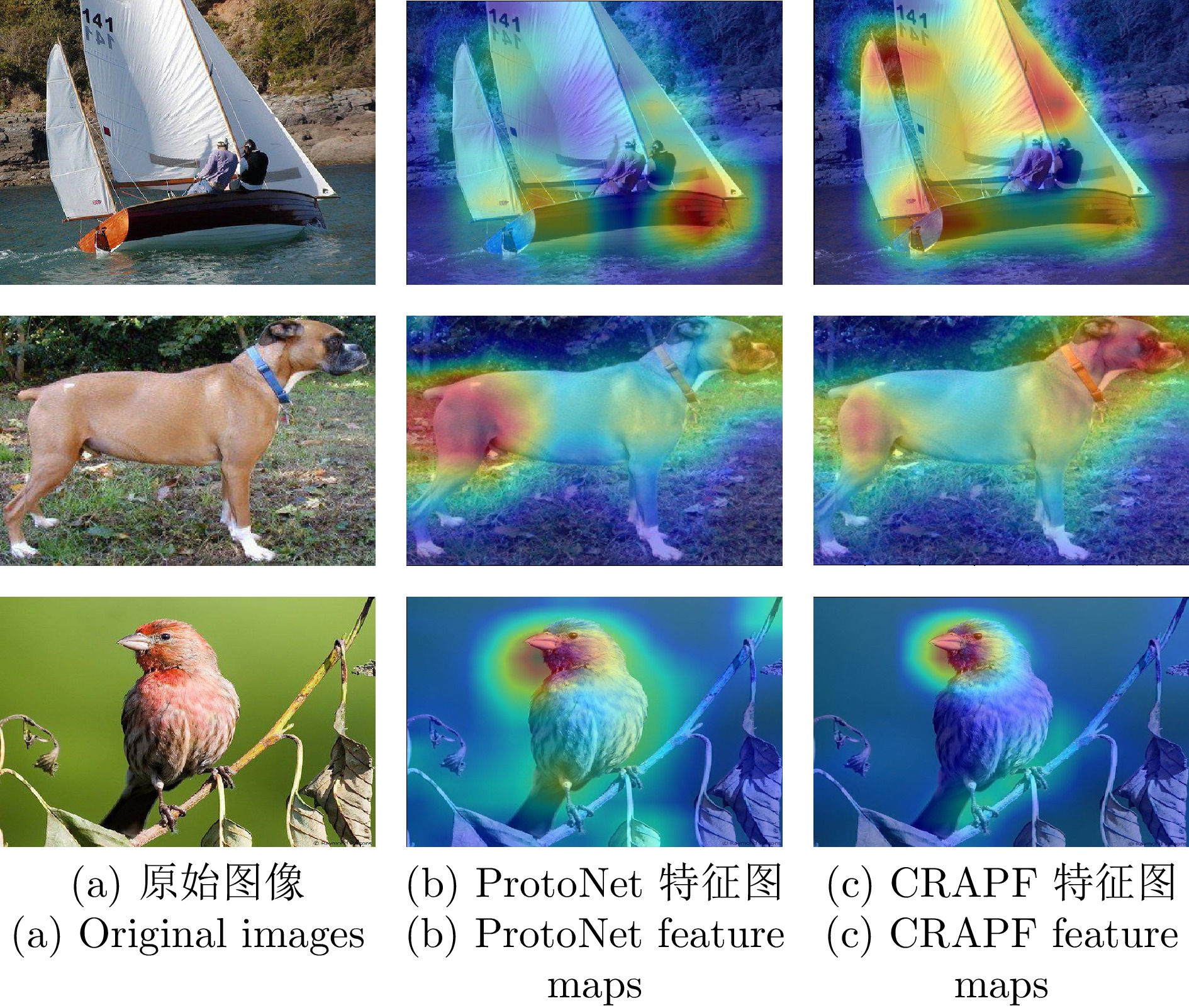

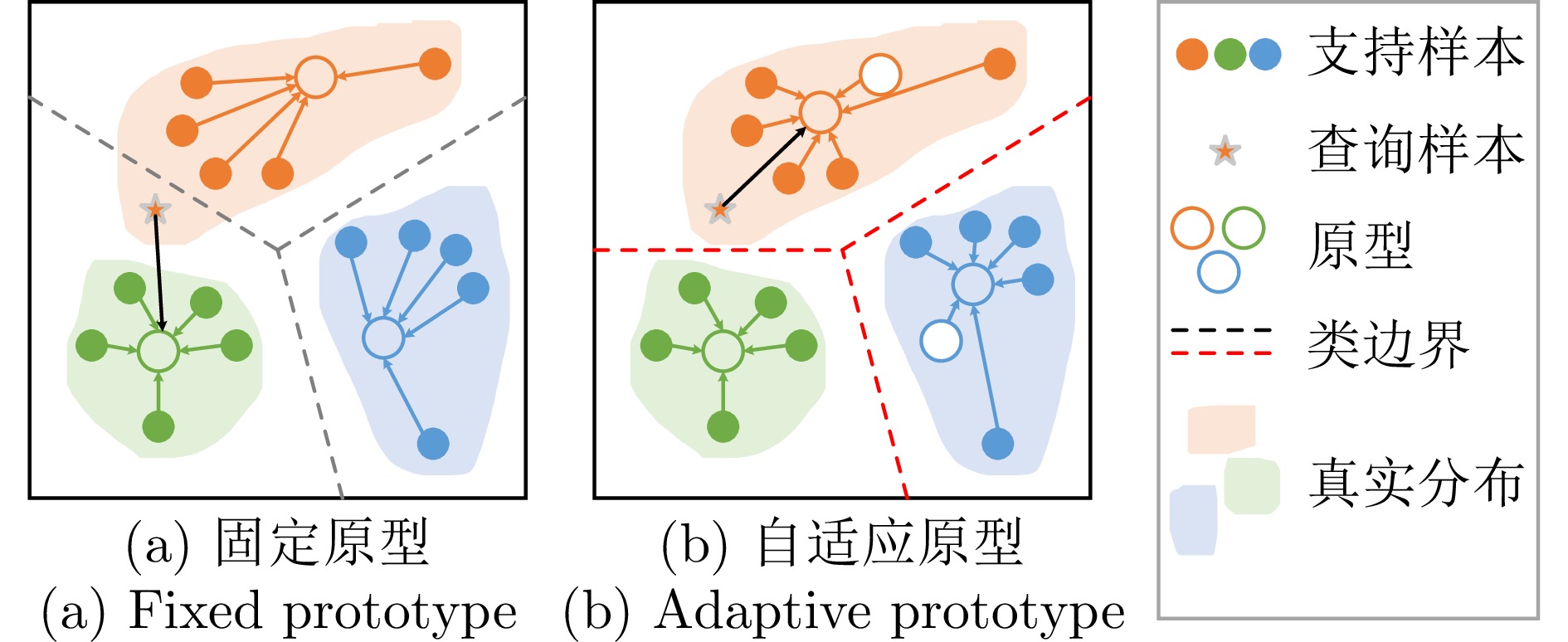

Abstract: In few-shot learning, performance degrades because only a few labelled samples are available for each class. Few-shot learning methods based on the prototypical network (ProtoNet) classify well by measuring the distance between the features of a query sample and the prototype features computed from the support samples. However, ProtoNet takes the mean of the support samples directly as the class prototype, which makes the prototype sensitive to individual samples when samples are scarce. To address this issue, we propose a few-shot learning method based on class rectification via adaptive prototype features (CRAPF). CRAPF generates prototype features adaptively, which reduces the model's over-reaction to small variations in the data, and at the same time refines the class boundaries. First, an adaptive prototype feature generation module is built with a convolutional neural network; it applies a nonlinear mapping to obtain more robust prototype features and so reduces the influence of outliers on prototype construction. Second, the prototype generation process is optimized to make the prototypes of different classes more distinguishable, which strengthens how well the prototypes represent their classes. Finally, experiments on three widely used benchmark datasets show that the method improves performance on few-shot learning tasks over the ProtoNet baseline (for example, 5-way 5-shot accuracy with a ResNet12 backbone rises from 72.08% to 77.08% on MiniImageNet and from 75.68% to 85.11% on CIFAR-FS).
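The sensitivity of mean prototypes described in the abstract can be made concrete with a toy example. The sketch below, in plain Python with 2-D vectors standing in for CNN embeddings (an assumption for illustration), builds ProtoNet prototypes as support-set means and classifies a query by its nearest prototype under squared Euclidean distance, as in Snell et al. [7]. It then replaces one sample of a 3-shot class with an outlier to show how the mean prototype drifts. The `reweighted_prototype` function is a hypothetical, hand-crafted stand-in for robust prototype construction (a softmax reweighting by distance to the mean, with an assumed temperature `tau`); it is not the CNN-based CRAPF module, whose architecture is not specified here.

```python
import math

def mean_prototype(support):
    """ProtoNet prototype: element-wise mean of one class's support embeddings."""
    n, dim = len(support), len(support[0])
    return [sum(x[d] for x in support) / n for d in range(dim)]

def sq_dist(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def reweighted_prototype(support, tau=1.0):
    """Hypothetical stand-in (NOT the CRAPF module): weight each support embedding
    by softmax(-distance_to_mean / tau), so samples far from the mean count less."""
    m = mean_prototype(support)
    scores = [-sq_dist(x, m) / tau for x in support]
    top = max(scores)  # subtract the max for numerical stability
    w = [math.exp(s - top) for s in scores]
    z = sum(w)
    dim = len(support[0])
    return [sum(wi * x[d] for wi, x in zip(w, support)) / z for d in range(dim)]

def predict(query, prototypes):
    """Index of the nearest prototype (same argmax as ProtoNet's softmax over -distance)."""
    dists = [sq_dist(query, p) for p in prototypes]
    return dists.index(min(dists))

# Toy 2-way 3-shot task with 2-D "embeddings".
class_a = [[0.0, 0.0], [0.2, 0.0], [0.0, 0.2]]
class_b = [[2.0, 2.0], [2.2, 2.0], [2.0, 2.2]]
query = [0.1, 0.1]  # clearly belongs to class A (index 0)

clean = predict(query, [mean_prototype(class_a), mean_prototype(class_b)])

# Replace one class-A support sample with an outlier.
class_a_noisy = [[0.0, 0.0], [0.2, 0.0], [8.0, 8.0]]
noisy_mean = predict(query, [mean_prototype(class_a_noisy), mean_prototype(class_b)])
noisy_reweighted = predict(
    query, [reweighted_prototype(class_a_noisy), reweighted_prototype(class_b)]
)

print(clean, noisy_mean, noisy_reweighted)  # 0 1 0
```

With a single outlier, the mean prototype of class A moves far enough toward class B that the query is misclassified, while the reweighted prototype stays near the two clean samples. The fixed `tau` only suits this toy scale; a learned mapping, as CRAPF proposes, would not need such a hand-set parameter.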


Table 1 Basic information of datasets

| Dataset | Samples | Classes (train/val/test) | Image size (pixels) |
|---|---|---|---|
| MiniImageNet | 60000 | 64/16/20 | $84 \times 84$ |
| CIFAR-FS | 60000 | 64/16/20 | $32 \times 32$ |
| FC100 | 60000 | 60/20/20 | $32 \times 32$ |
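Tables 3 to 5 report accuracy in the 5-way 1-shot and 5-way 5-shot settings, in which each test episode draws 5 classes from the test split and gives the model K labelled support samples per class. A minimal episode sampler might look like the following; the dictionary layout and the 15 query samples per class are assumptions, not details taken from this paper. The 600 images per class follow from Table 1: each dataset has 60000 images spread over 100 classes (64 + 16 + 20 or 60 + 20 + 20).

```python
import random

def sample_episode(class_to_items, n_way=5, k_shot=1, n_query=15, rng=random):
    """Draw one N-way K-shot episode from a class split.

    class_to_items: hypothetical mapping {class_id: [sample ids]}.
    Returns (support, query), each a list of (sample_id, episode_label)
    pairs, with episode labels re-indexed to 0..n_way-1.
    """
    classes = rng.sample(sorted(class_to_items), n_way)
    support, query = [], []
    for label, c in enumerate(classes):
        # Support and query samples of one class are drawn without overlap.
        picked = rng.sample(class_to_items[c], k_shot + n_query)
        support += [(s, label) for s in picked[:k_shot]]
        query += [(s, label) for s in picked[k_shot:]]
    return support, query

# Toy stand-in for a 20-class test split with 600 placeholder ids per class.
test_split = {c: [f"class{c}_img{i}" for i in range(600)] for c in range(20)}
rng = random.Random(0)
support, query = sample_episode(test_split, n_way=5, k_shot=5, rng=rng)
print(len(support), len(query))  # 25 75
```

Re-indexing labels per episode means the classifier never sees global class ids, which is what lets a prototype-based model handle test classes unseen during training.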

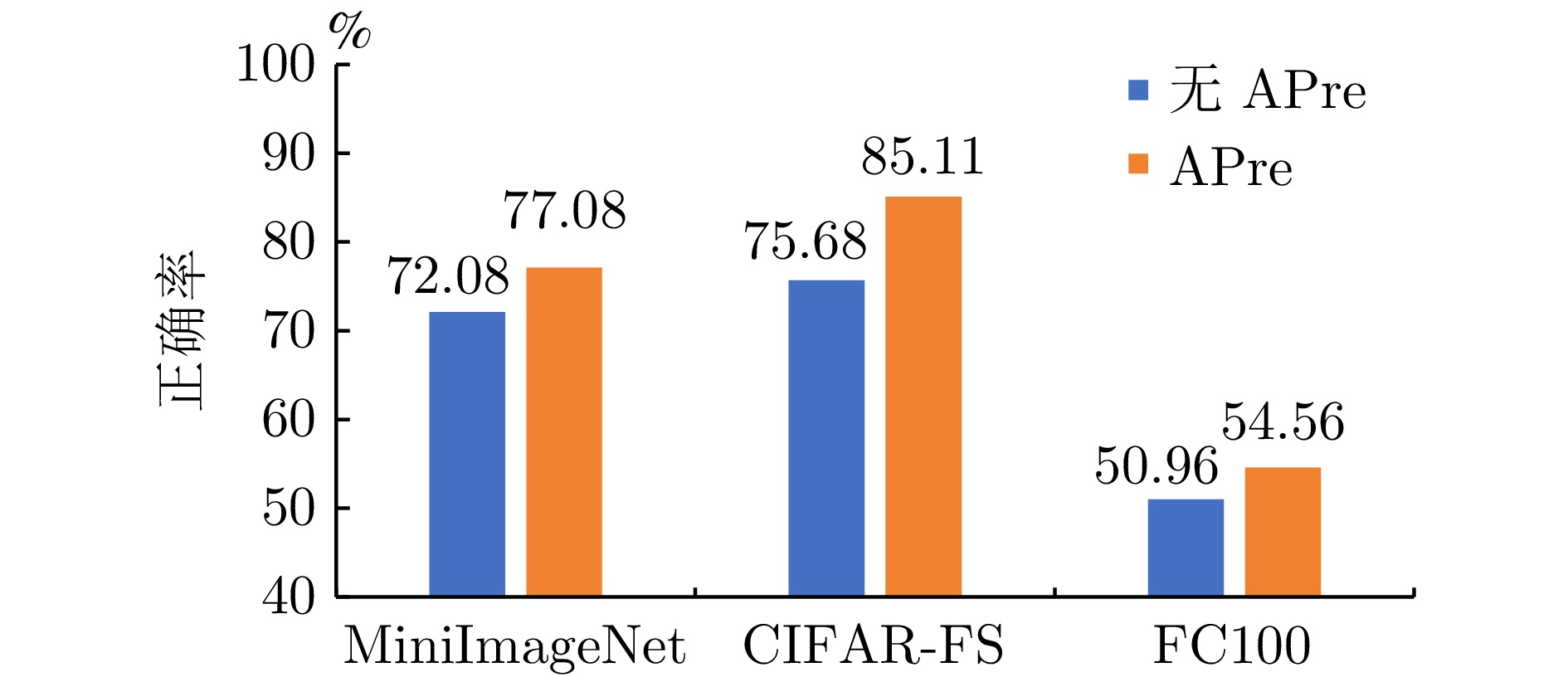

Table 2 Experimental results using different networks (%)

| Network | Dataset | ProtoNet | CRAPF |
|---|---|---|---|
| ResNet12 | MiniImageNet | 72.08 | 77.08 |
| ResNet12 | CIFAR-FS | 75.68 | 85.11 |
| ResNet12 | FC100 | 50.96 | 54.56 |
| ResNet18 | MiniImageNet | 73.68 | 74.40 |
| ResNet18 | CIFAR-FS | 72.83 | 84.93 |
| ResNet18 | FC100 | 47.50 | 53.33 |
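The size of CRAPF's improvement over ProtoNet in Table 2 depends on the backbone and the dataset. The short script below only restates the numbers in Table 2 and computes the gain in percentage points for each setting.

```python
# Accuracy (%) from Table 2: (backbone, dataset) -> (ProtoNet, CRAPF)
table2 = {
    ("ResNet12", "MiniImageNet"): (72.08, 77.08),
    ("ResNet12", "CIFAR-FS"): (75.68, 85.11),
    ("ResNet12", "FC100"): (50.96, 54.56),
    ("ResNet18", "MiniImageNet"): (73.68, 74.40),
    ("ResNet18", "CIFAR-FS"): (72.83, 84.93),
    ("ResNet18", "FC100"): (47.50, 53.33),
}

# Gain of CRAPF over ProtoNet in percentage points, rounded to 2 decimals.
gains = {key: round(crapf - proto, 2) for key, (proto, crapf) in table2.items()}

for (backbone, dataset), gain in gains.items():
    print(f"{backbone:<9}{dataset:<13}+{gain:.2f}")
```

The gains range from 0.72 points (ResNet18 on MiniImageNet) to 12.10 points (ResNet18 on CIFAR-FS); with both backbones the largest gain is on CIFAR-FS.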

Table 3 Comparative experimental results on the MiniImageNet dataset (%)

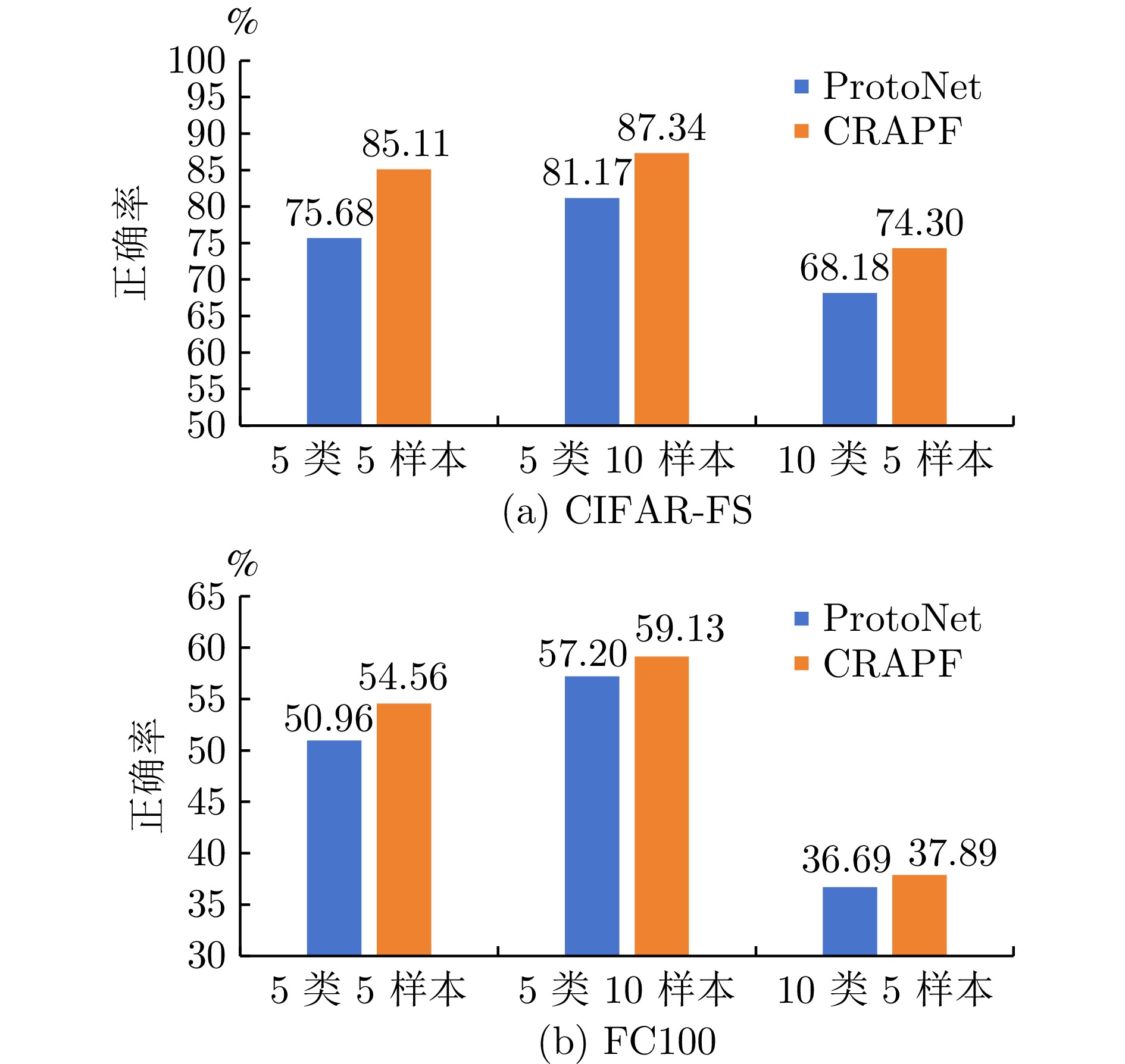

| Method | Network | 5-way 1-shot | 5-way 5-shot |
|---|---|---|---|
| TEAM | ResNet18 | 60.07 | 75.90 |
| TransCNAPS | ResNet18 | 55.60 | 73.10 |
| MTUNet | ResNet18 | 58.13 | 75.02 |
| IPN | ResNet10 | 56.18 | 74.60 |
| ProtoNet* | ResNet12 | 53.42 | 72.08 |
| TADAM | ResNet12 | 58.50 | 76.70 |
| SSR | ResNet12 | 68.10 | 76.90 |
| MDM-Net | ResNet12 | 59.88 | 76.60 |
| CRAPF* | ResNet12 | 59.38 | 77.08 |

Table 4 Comparative experimental results on the CIFAR-FS dataset (%)

方法 网络 5类1样本 5类5样本 ProtoNet* ResNet12 56.86 75.68 Shot-Free ResNet12 69.20 84.70 TEAM ResNet12 70.40 80.30 DeepEMD ResNet12 46.50 63.20 DSN ResNet12 72.30 85.10 MTL ResNet12 69.50 84.10 DSMNet ResNet12 60.66 79.26 MTUNet ResNet18 67.43 82.81 CRAPF* ResNet12 72.34 85.11 表 5 FC100数据集上的对比实验结果 (%)

Table 5 Comparative experimental results on the FC100 dataset (%)

方法 网络 5类1样本 5类5样本 ProtoNet* ResNet12 37.50 50.96 SimpleShot ResNet10 40.13 53.63 Baseline2020 ResNet12 36.82 49.72 TADAM ResNet12 40.10 56.10 CRAPF* ResNet12 40.44 54.56 -

[1] Jiang H, Diao Z, Shi T, Zhou Y, Wang F, Hu W, et al. A review of deep learning-based multiple-lesion recognition from medical images: Classification, detection and segmentation. Computers in Biology and Medicine, 2023, 157: Article No. 106726

[2] Zhao Kai-Lin, Jin Xiao-Long, Wang Yuan-Zhuo. Survey on few-shot learning. Journal of Software, 2021, 32(2): 349−369 (in Chinese)

[3] Zhao Yi-Ming, Wang Pei-Jin, Diao Wen-Hui, Sun Xian, Deng Bo. Few-shot SAR aircraft image classification method based on channel attention mechanism. Journal of Nanjing University (Natural Science), 2024, 60(3): 464−476 (in Chinese)

[4] Li F F, Fergus R, Perona P. A Bayesian approach to unsupervised one-shot learning of object categories. In: Proceedings of the IEEE International Conference on Computer Vision. Nice, France: IEEE, 2003. 1134−1141

[5] Liu Ying, Lei Yan-Bo, Fan Jiu-Lun, Wang Fu-Ping, Gong Yan-Chao, Tian Qi. Survey on image classification technology based on small sample learning. Acta Automatica Sinica, 2021, 47(2): 297−315 (in Chinese)

[6] Li X, Yang X, Ma Z, Xue J. Deep metric learning for few-shot image classification: A review of recent developments. Pattern Recognition, 2023, 138: Article No. 109381

[7] Snell J, Swersky K, Zemel R. Prototypical networks for few-shot learning. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: 2017. 4077−4087

[8] Qiang W, Li J, Su B, Fu J, Xiong H, Wen J. Meta attention-generation network for cross-granularity few-shot learning. International Journal of Computer Vision, 2023, 131(5): 1211−1233 doi: 10.1007/s11263-023-01760-7

[9] Li W, Wang L, Xu J, Huo J, Gao Y, Luo J. Revisiting local descriptor based image-to-class measure for few-shot learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 7260−7268

[10] Xu W, Xu Y, Wang H, Tu Z. Attentional constellation nets for few-shot learning. In: Proceedings of the International Conference on Learning Representations. Vienna, Austria: ICLR, 2021. 1−12

[11] Huang H, Zhang J, Yu L, Zhang J, Wu Q, Xu C. TOAN: Target-oriented alignment network for fine-grained image categorization with few labeled samples. IEEE Transactions on Circuits and Systems for Video Technology, 2021, 32(2): 853−866

[12] Xie J, Long F, Lv J, Wang Q, Li P. Joint distribution matters: Deep Brownian distance covariance for few-shot classification. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. New Orleans, USA: IEEE, 2022. 7972−7981

[13] Luo X, Wei L, Wen L, Yang J, Xie L, Xu Z, et al. Rectifying the shortcut learning of background for few-shot learning. In: Proceedings of the 35th International Conference on Neural Information Processing Systems. Virtual Event: 2021. 13073−13085

[14] Kwon H, Jeong S, Kim S, Sohn K. Dual prototypical contrastive learning for few-shot semantic segmentation. arXiv preprint arXiv: 2111.04982, 2021.

[15] Li J, Liu G. Few-shot image classification via contrastive self-supervised learning. arXiv preprint arXiv: 2008.09942, 2020.

[16] Sung F, Yang Y, Zhang L, Xiang T, Torr P H, Hospedales T M. Learning to compare: Relation network for few-shot learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 1199−1208

[17] Hao F, He F, Cheng J, Wang L, Cao J, Tao D. Collect and select: Semantic alignment metric learning for few-shot learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 8460−8469

[18] Li W, Xu J, Huo J, Wang L, Gao Y, Luo J. Distribution consistency based covariance metric networks for few-shot learning. In: Proceedings of the AAAI Conference on Artificial Intelligence. Honolulu, USA: 2019. 8642−8649

[19] Zhu W, Li W, Liao H, Luo J. Temperature network for few-shot learning with distribution-aware large margin metric. Pattern Recognition, 2021, 112: Article No. 109381

[20] Allen K, Shelhamer E, Shin H, Tenenbaum J B. Infinite mixture prototypes for few-shot learning. In: Proceedings of the International Conference on Machine Learning. Long Beach, USA: ICML, 2019. 232−241

[21] Liu J, Song L, Qin Y. Prototype rectification for few-shot learning. In: Proceedings of the European Conference on Computer Vision. Glasgow, UK: Springer, 2020. 741−756

[22] Li X, Li Y, Zheng Y, Zhu R, Ma Z, Xue J, et al. ReNAP: Relation network with adaptive prototypical learning for few-shot classification. Neurocomputing, 2023, 520: 356−364 doi: 10.1016/j.neucom.2022.11.082

[23] Vinyals O, Blundell C, Lillicrap T, Kavukcuoglu K, Wierstra D. Matching networks for one shot learning. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: 2016. 3637−3645

[24] Bertinetto L, Henriques J, Torr P, Vedaldi A. Meta-learning with differentiable closed-form solvers. In: Proceedings of the International Conference on Learning Representations. New Orleans, USA: ICLR, 2019. 1−13

[25] Oreshkin B, Rodríguez López P, Lacoste A. TADAM: Task dependent adaptive metric for improved few-shot learning. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: 2018. 719−729

[26] Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet large scale visual recognition challenge. International Journal of Computer Vision, 2015, 115: 211−252 doi: 10.1007/s11263-015-0816-y

[27] Ravi S, Larochelle H. Optimization as a model for few-shot learning. In: Proceedings of the International Conference on Learning Representations. Toulon, France: ICLR, 2017. 1−11

[28] Krizhevsky A. Learning multiple layers of features from tiny images [Master thesis]. University of Toronto, Canada, 2009.

[29] Bertinetto L, Henriques J F, Valmadre J, Torr P H S, Vedaldi A. Learning feed-forward one-shot learners. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: 2016. 523−531

[30] Qiao L, Shi Y, Li J, Wang Y, Huang T, Tian Y. Transductive episodic-wise adaptive metric for few-shot learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 3603−3612

[31] Bateni P, Barber J, van de Meent J W, Wood F. Enhancing few-shot image classification with unlabelled examples. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. Waikoloa, USA: IEEE, 2022. 2796−2805

[32] Wang B, Li L, Verma M, Nakashima Y, Kawasaki R, Nagahara H. Match them up: Visually explainable few-shot image classification. Applied Intelligence, 2023, 53: 10956−10977 doi: 10.1007/s10489-022-04072-4

[33] Ji Z, Chai X, Yu Y, Pang Y, Zhang Z. Improved prototypical networks for few-shot learning. Pattern Recognition Letters, 2020, 140: 81−87 doi: 10.1016/j.patrec.2020.07.015

[34] Shen X, Xiao Y, Hu S X, Sbai O, Aubry M. Re-ranking for image retrieval and transductive few-shot classification. In: Proceedings of the 35th International Conference on Neural Information Processing Systems. Virtual Event: 2021. 25932−25943

[35] Gao F, Cai L, Yang Z, Song S, Wu C. Multi-distance metric network for few-shot learning. International Journal of Machine Learning and Cybernetics, 2022, 13(9): 2495−2506 doi: 10.1007/s13042-022-01539-1

[36] Ravichandran A, Bhotika R, Soatto S. Few-shot learning with embedded class models and shot-free meta training. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 331−339

[37] Zhang C, Cai Y, Lin G, Shen C. DeepEMD: Few-shot image classification with differentiable earth mover's distance and structured classifiers. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: IEEE, 2020. 12203−12213

[38] Simon C, Koniusz P, Nock R, Harandi M. Adaptive subspaces for few-shot learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: IEEE, 2020. 4136−4145

[39] Wang H, Zhao H, Li B. Bridging multi-task learning and meta-learning: Towards efficient training and effective adaptation. In: Proceedings of the International Conference on Machine Learning. Vienna, Austria: ICML, 2021. 10991−11002

[40] Yan L, Li F, Zhang L, Zheng X. Discriminant space metric network for few-shot image classification. Applied Intelligence, 2023, 53: 17444−17459 doi: 10.1007/s10489-022-04413-3

[41] Wang Y, Chao W L, Weinberger K Q, van der Maaten L. SimpleShot: Revisiting nearest-neighbor classification for few-shot learning. arXiv preprint arXiv: 1911.04623, 2019.

[42] Selvaraju R R, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 618−626

[43] Dhillon G S, Chaudhari P, Ravichandran A, Soatto S. A baseline for few-shot image classification. In: Proceedings of the International Conference on Learning Representations. Addis Ababa, Ethiopia: ICLR, 2020. 1−12
