-

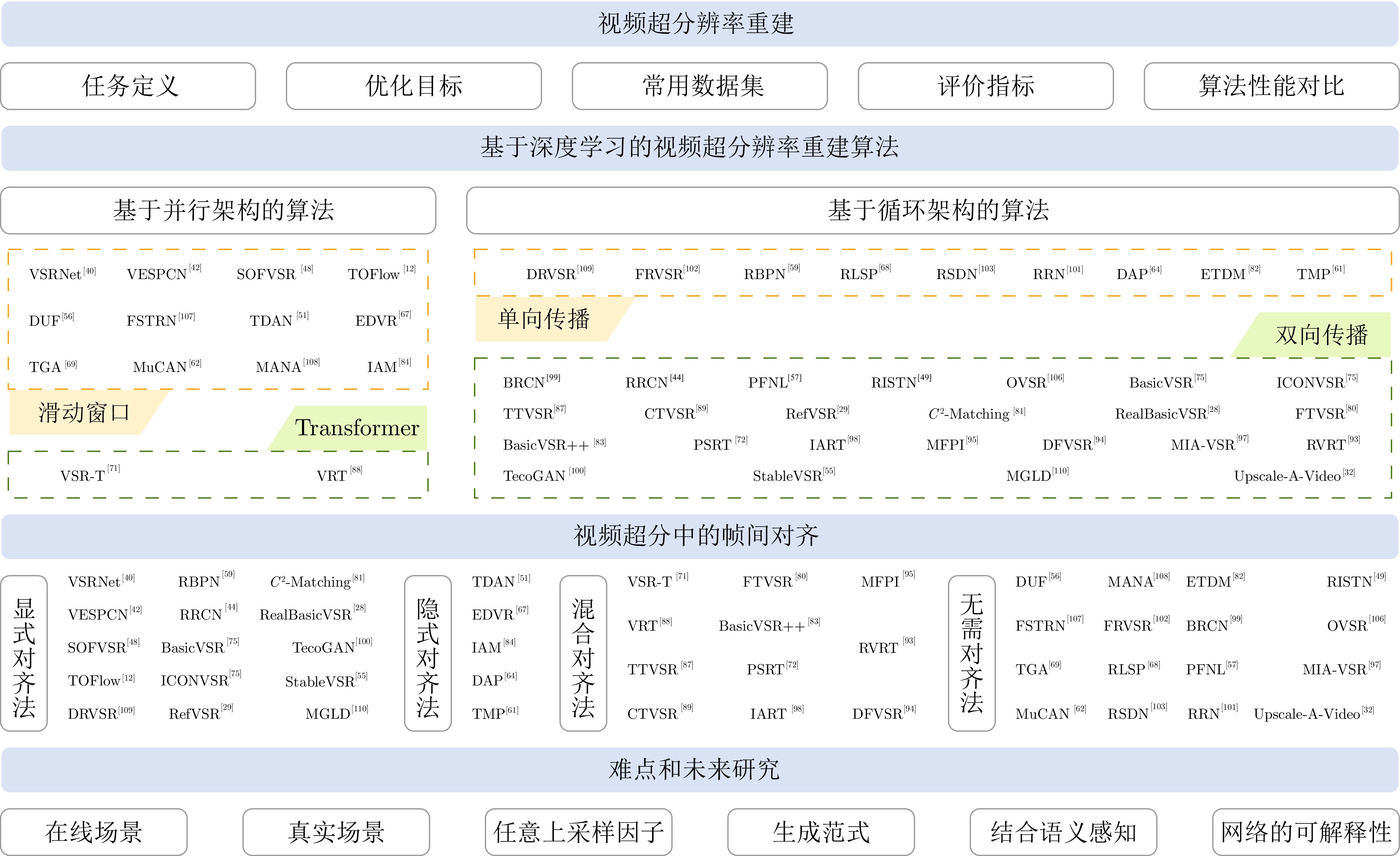

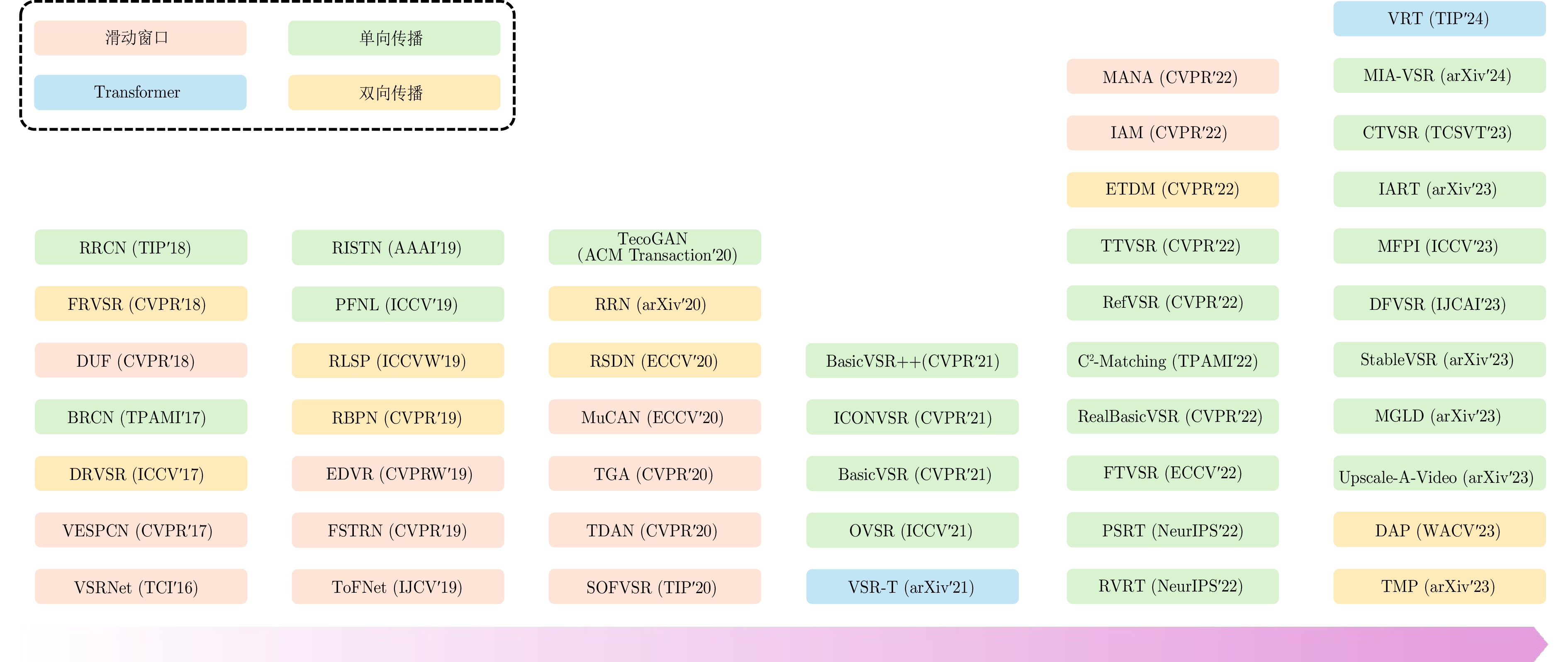

摘要: 视频超分辨率重建是底层计算机视觉任务中的一个重要研究方向, 旨在利用低分辨率视频的帧内和帧间信息, 重建具有更多细节和内容一致的高分辨率视频, 有助于提升下游任务性能和改善用户观感体验. 近年来, 基于深度学习的视频超分辨率重建算法大量涌现, 在帧间对齐、信息传播等方面取得突破性的进展. 首先, 在简述视频超分辨率重建任务的基础上, 梳理现有的视频超分辨率重建的公共数据集及相关算法; 接着, 详细综述基于深度学习的视频超分辨率重建算法的创新性工作进展情况; 最后, 总结视频超分辨率重建算法面临的挑战及未来的发展趋势.Abstract: Video super-resolution (VSR) is an essential research realm within low-level vision tasks. It aims to reconstruct high-resolution video with realistic details and coherent content by utilizing intra-frame and inter-frame information of low-resolution video, which positively impacts the performance of downstream tasks and the improvement of user's perception experience. In recent years, VSR base on deep learning has emerged abundantly, make breakthrough progress in inter-frame alignment, information propagation, and other aspects. On the basis of briefly describing the task of VSR, the existing VSR public datasets and related algorithms are combed. Subsequently, the innovative work progress of deep-learning-based VSR are reviewed in detail. Finally, the challenges and future development trends of VSR algorithms are outlined.

-

表 1 基于深度学习的视频超分辨率重建数据集

Table 1 Datasets of video super-resolution based on deep learning

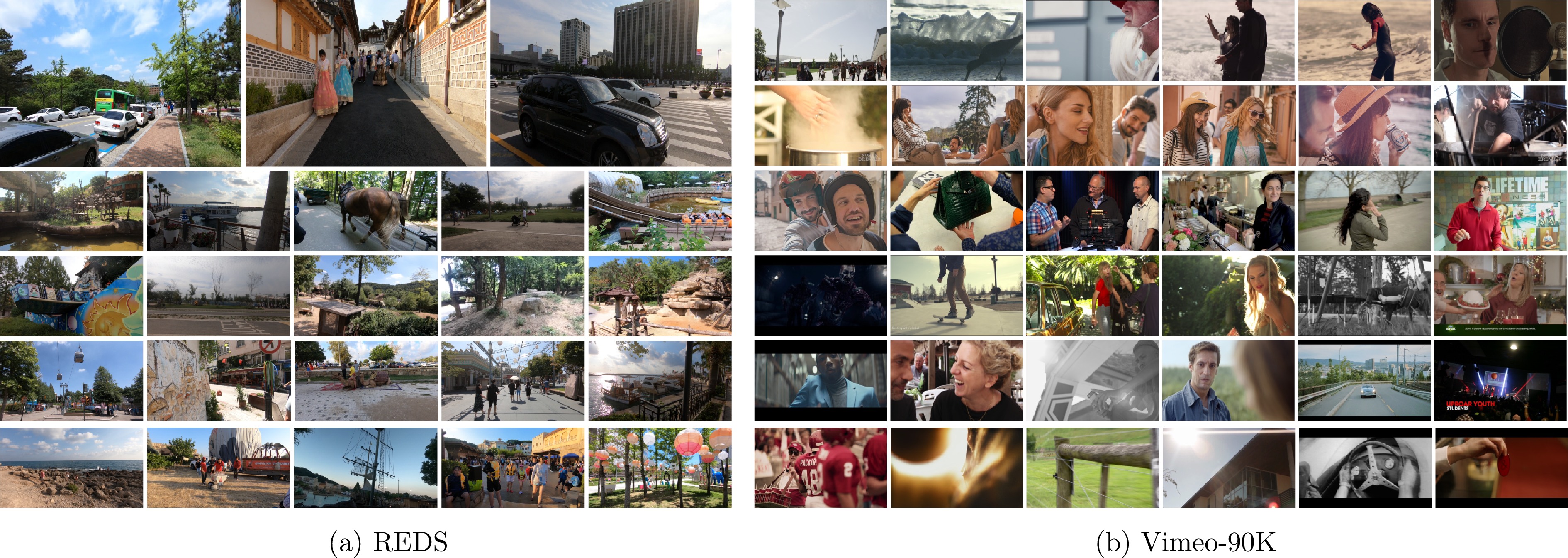

数据集 类型 视频数量 帧数 分辨率 (像素) 颜色空间 合成数据集 YUV25[15] 训练集 25 — 386 × 288 YUV TDTFF[16] Turbine 测试集 5 — 648 × 528 YUV Dancing 950 × 530 Treadmill 700 × 600 Flag 1000 × 580Fan 990 × 740 Vid4[13] Foliage 测试集 4 49 720 × 480 RGB Walk 47 720 × 480 Calendar 41 720 × 576 City 34 704 × 576 YUV21[17] 测试集 21 100 352 × 288 YUV Venice[18] 训练集 1 1 077 3 840 × 2 160 RGB Myanmar[19] 训练集 1 527 3 840 × 2 160 RGB CDVL[20] 训练集 100 30 1 920 × 1 080 RGB UVGD[21] 测试集 16 — 3 840 × 2 160 YUV LMT[22] 训练集 26 — 1 920 × 1 080 YCbCr SPMCS[23] 训练集和测试集 975 31 960 × 540 RGB MM542[24] 训练集 542 32 1 280 × 720 RGB UDM10[25] 测试集 10 32 1 272 × 720 RGB Vimeo-90K[12] 训练集和测试集 91 701 7 448 × 256 RGB REDS[14] 训练集和测试集 270 100 1 280 × 720 RGB Parkour[26] 测试集 14 — 960 × 540 RGB 真实数据集 RealVSR[27] 训练集和测试集 500 50 1 024 × 512 RGB/YCbCr VideoLQ[28] 测试集 50 100 1 024 × 512 RGB RealMCVSR[29] 训练集和测试集 161 — 1 920 × 1 080 RGB MVSR4$ \times $[30] 训练集和测试集 300 100 1 920 × 1 080 RGB DTVIT[31] 训练集和测试集 196 100 1 920 × 1 080 RGB YouHQ[32] 训练集和测试集 38 616 32 1 920 × 1 080 RGB 表 2 对双三次插值下采样后的视频进行VSR的性能(PSNR/SSIM)对比结果

Table 2 Performance (PSNR/SSIM) comparison of video super-resolution algorithm with bicubic downsampling

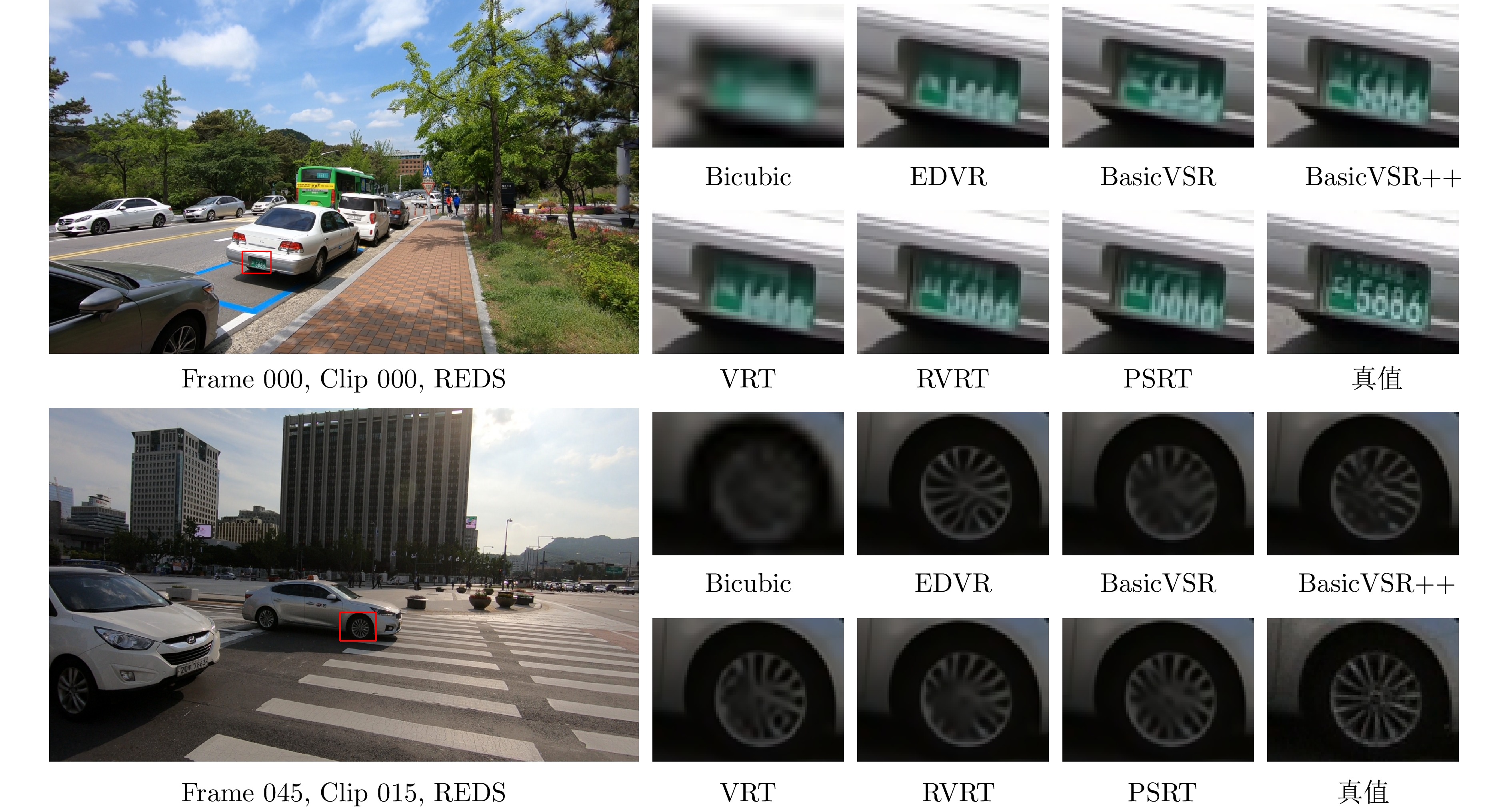

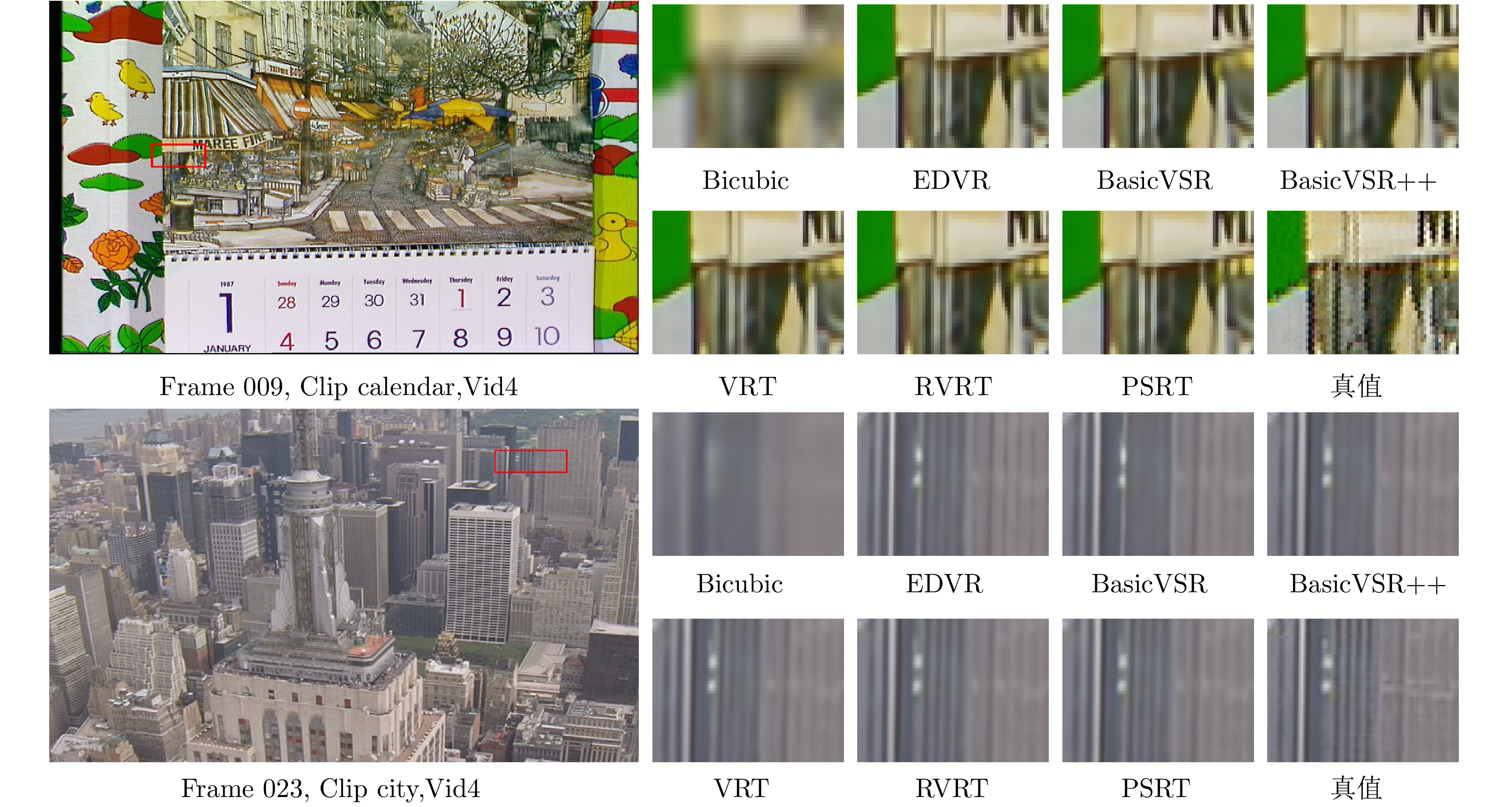

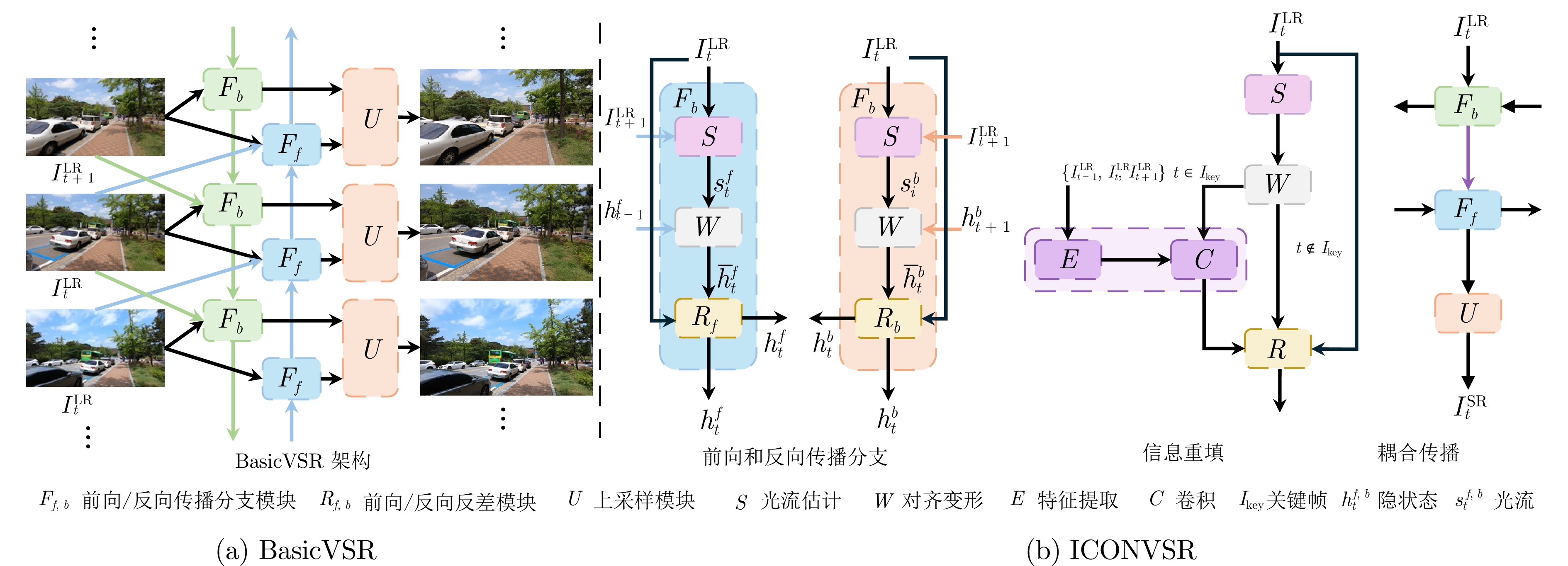

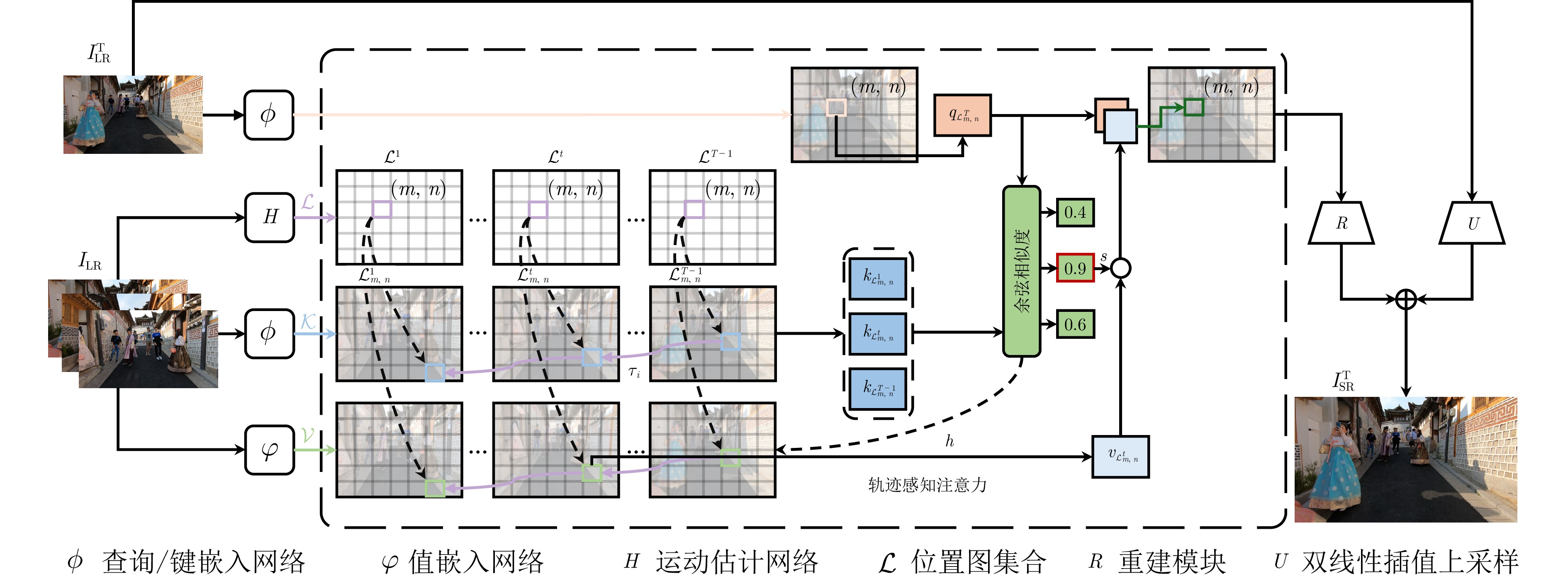

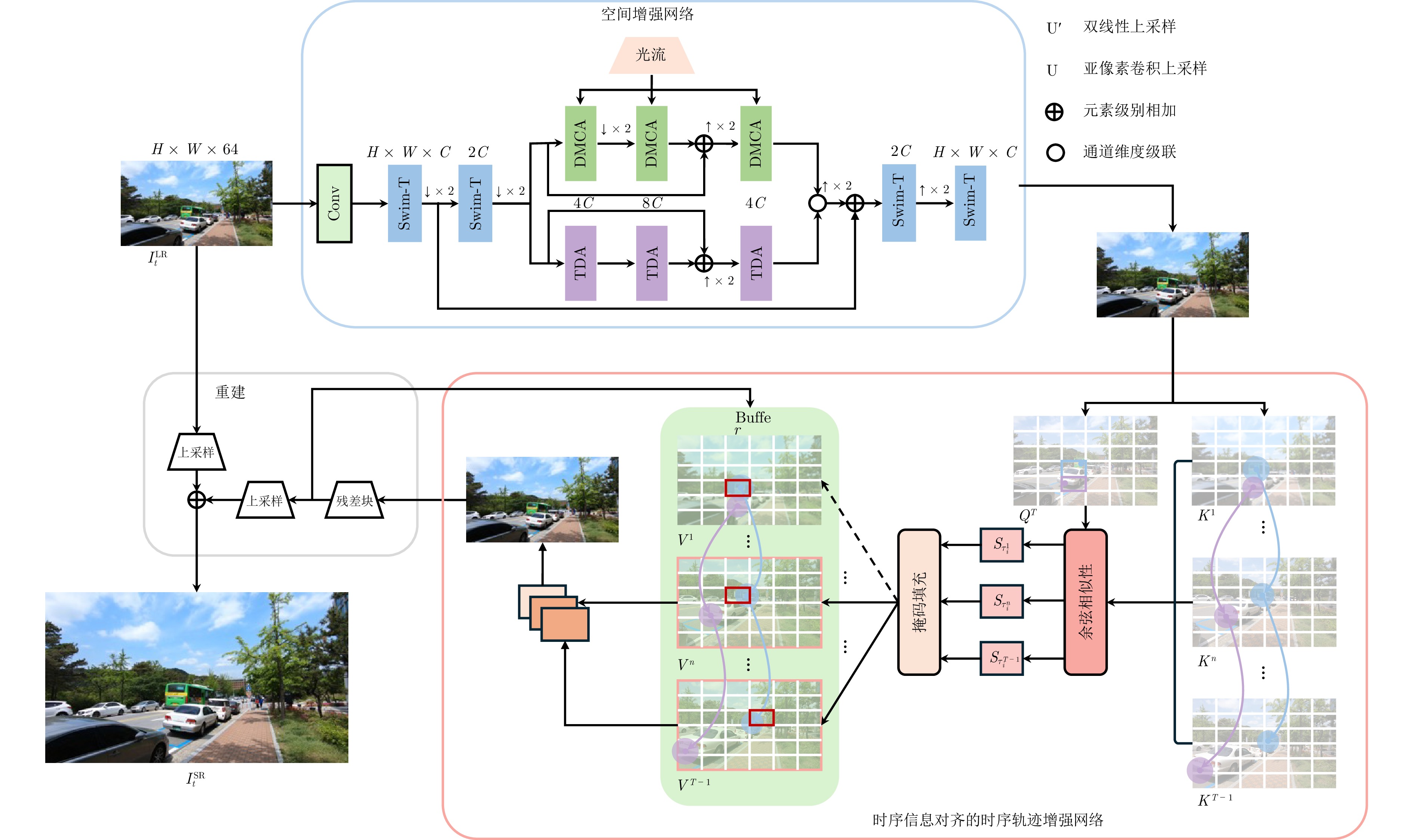

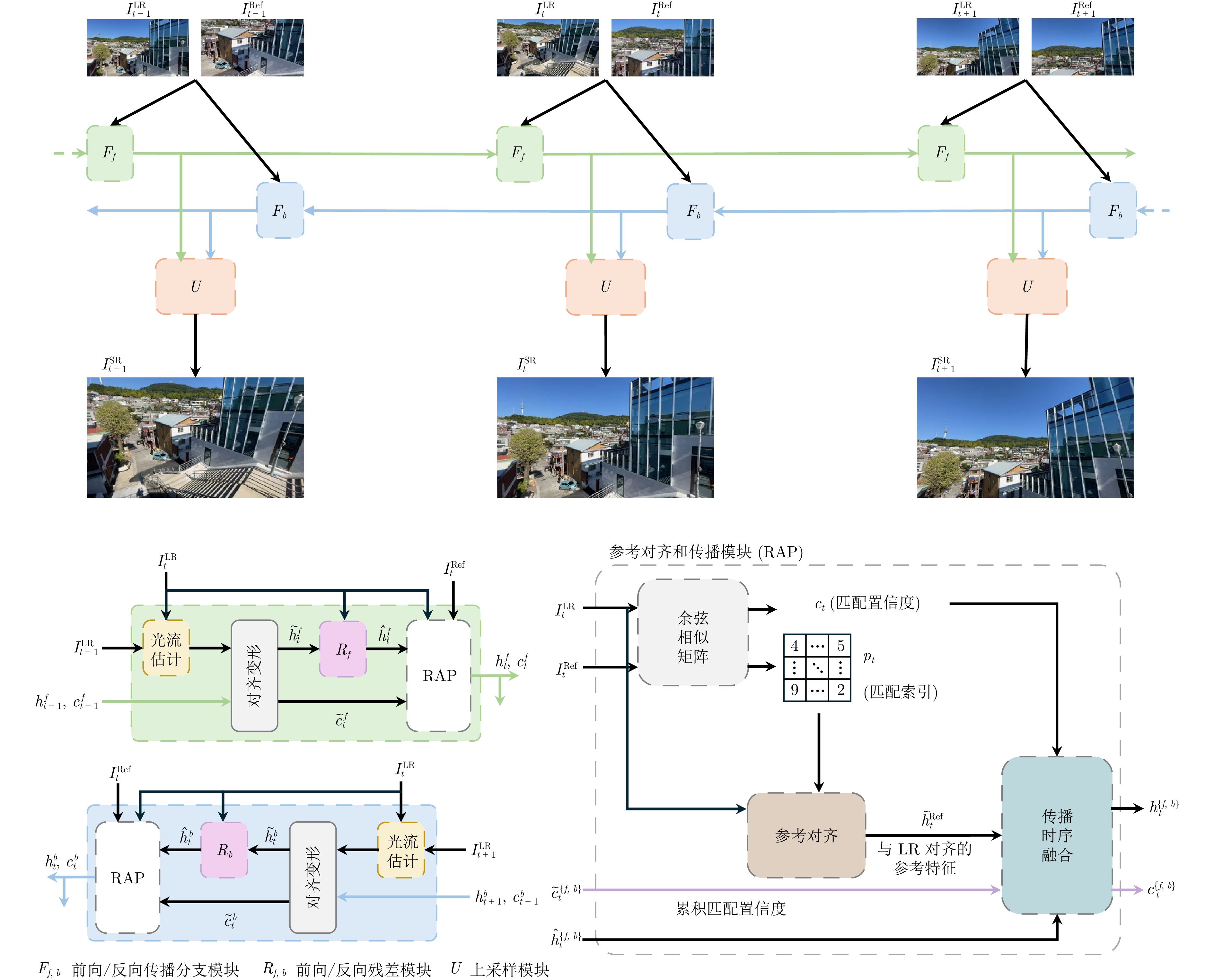

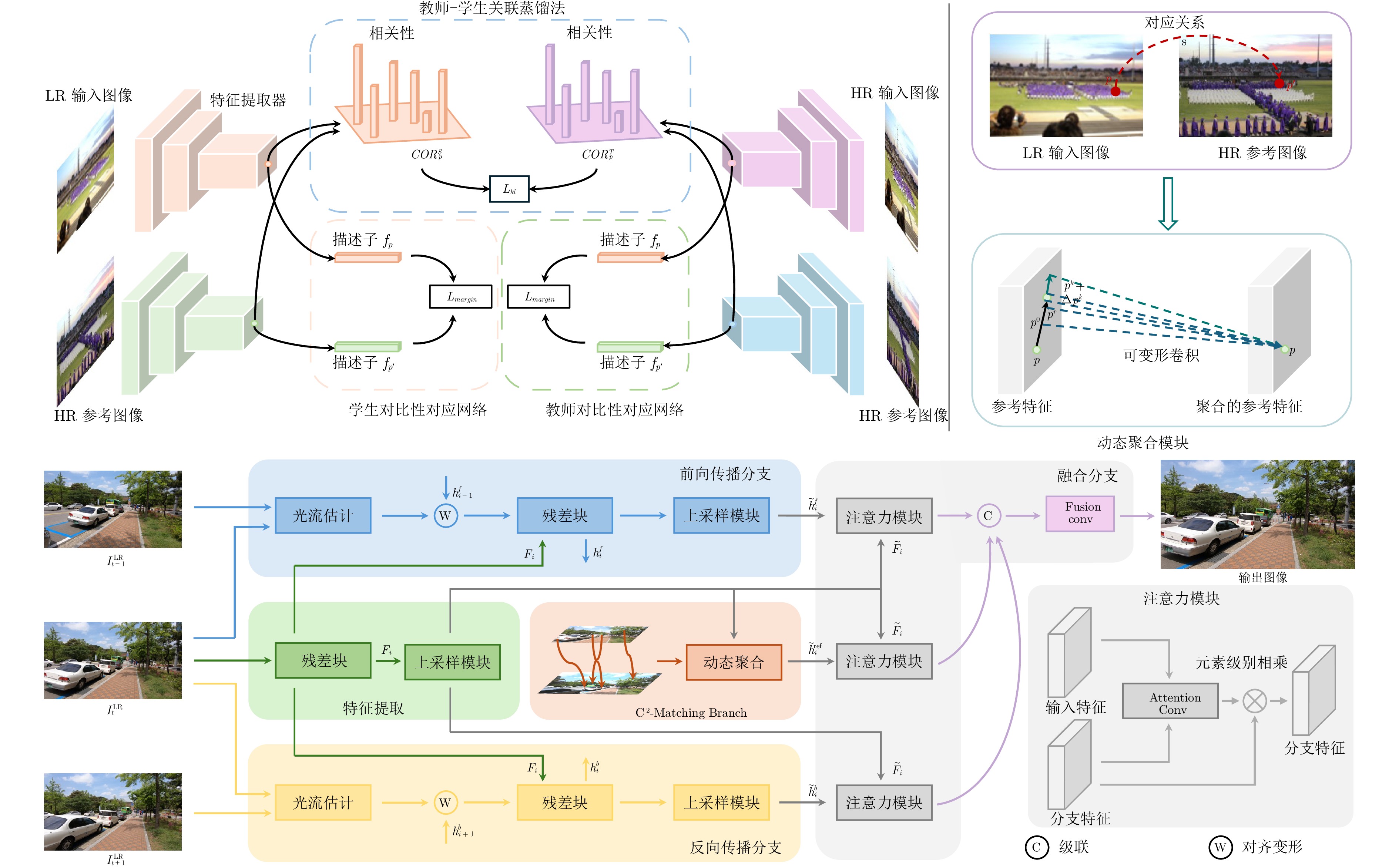

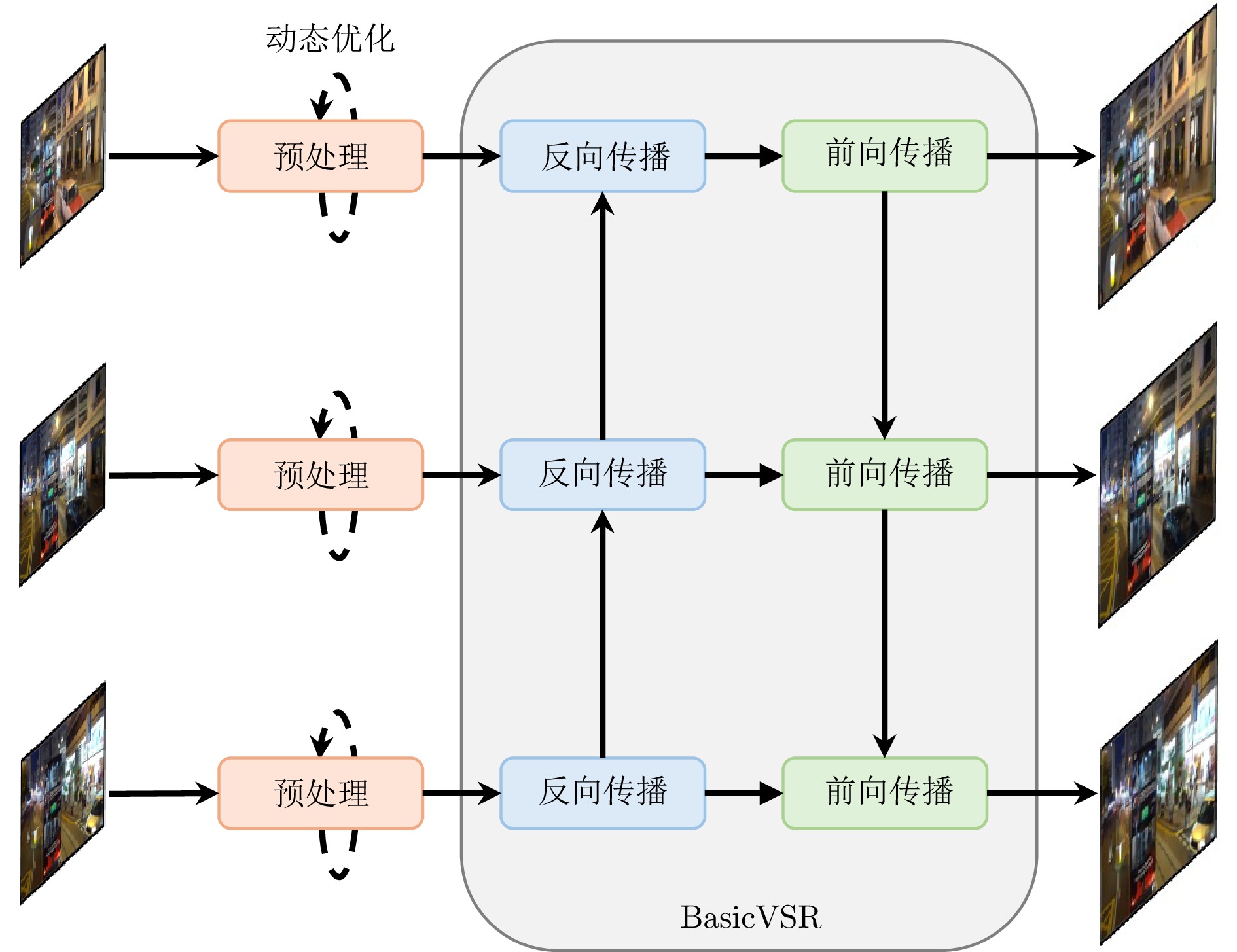

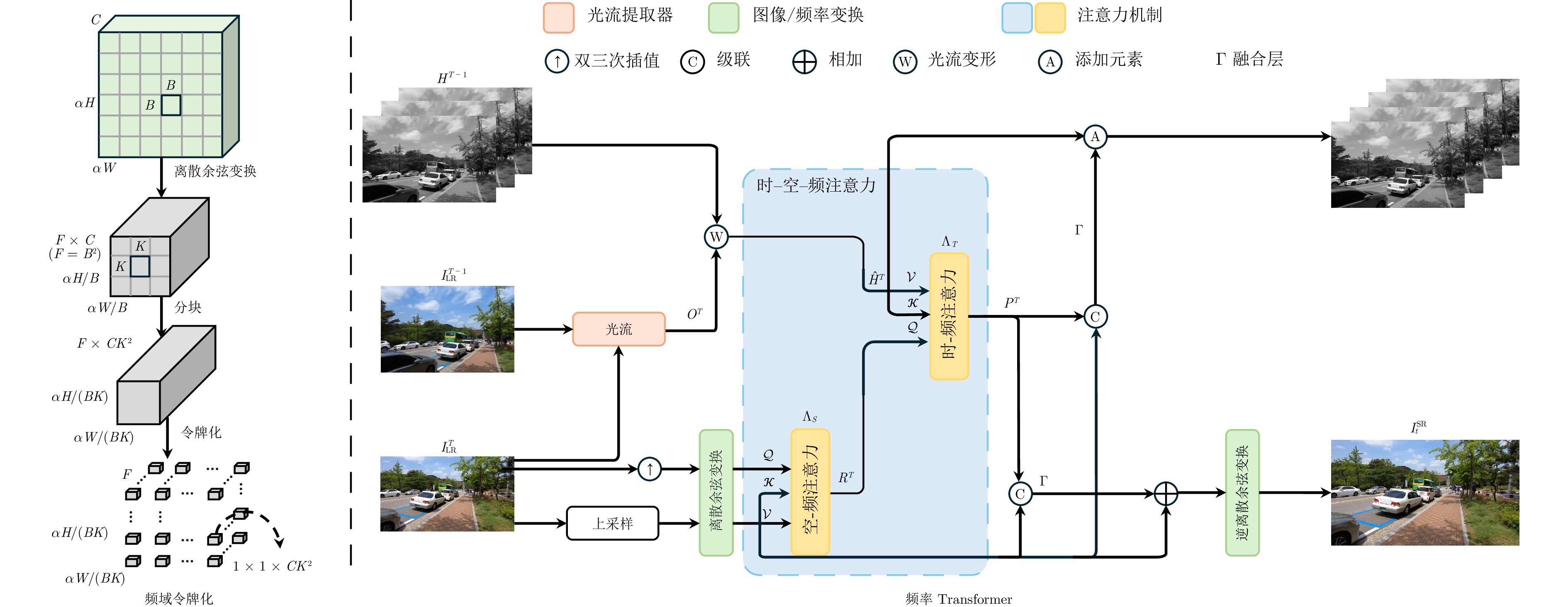

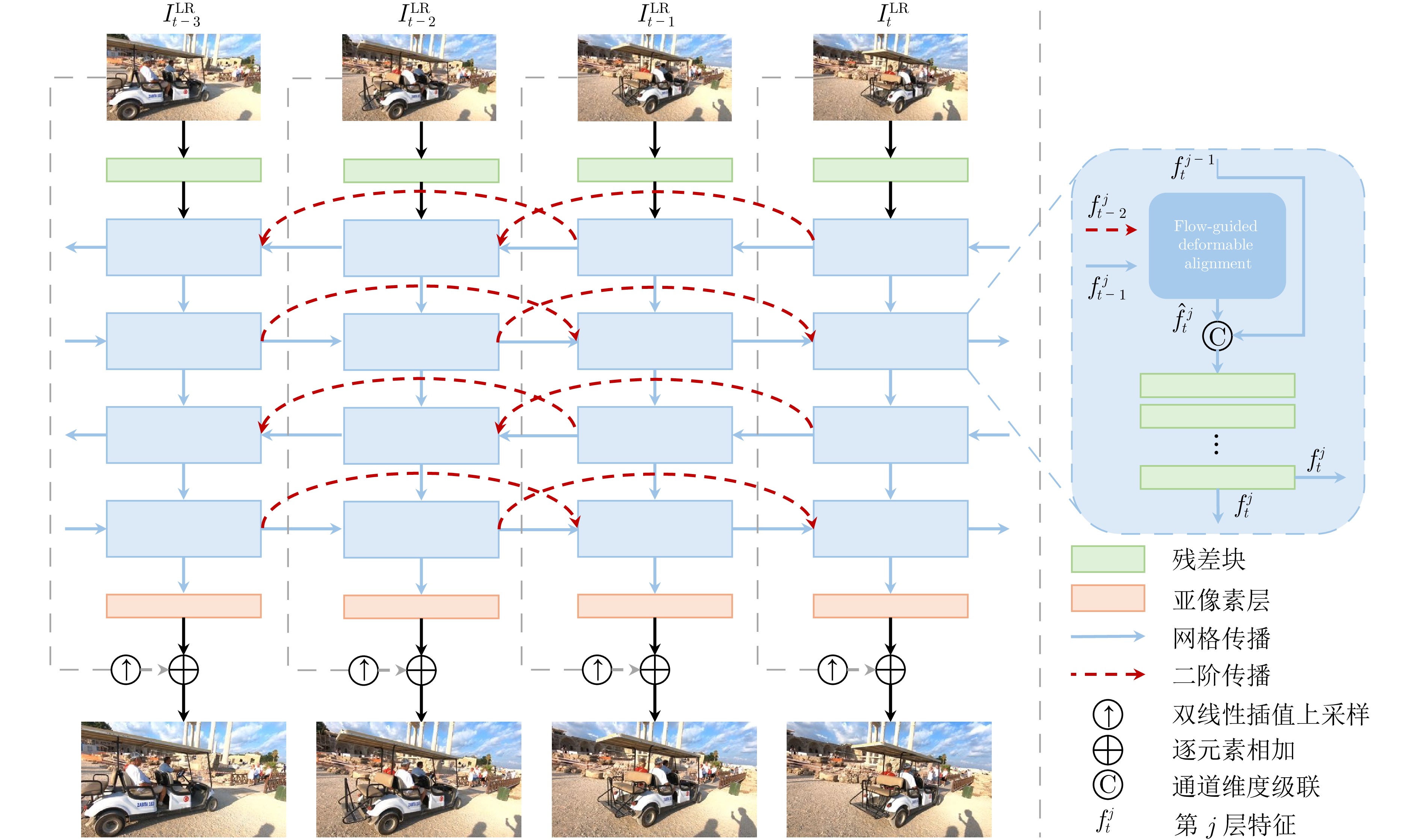

对比方法 训练帧数 参数量(M) 双三次插值下采样 REDS (RGB通道) Vimeo-90K (Y通道) Vid4 (Y通道) Bicubic — — 26.14/ 0.7292 31.32/ 0.8684 23.78/ 0.6347 VSRNet[40] — 0.27 −/− −/− 22.81/ 0.6500 VSRResFeatGAN[41] — — −/− −/− 24.50/ 0.7023 VESPCN[42] — — −/− −/− 25.35/ 0.7577 VSRResNet[41] — — −/− −/− 25.51/ 0.7530 SPMC[23] — 2.17 −/− −/− 25.52/ 0.7600 3DSRNet[43] — — −/− −/− 25.71/ 0.7588 RRCN[44] — — −/− −/− 25.86/ 0.7591 TOFlow[12] 5/7 1.41 27.98/ 0.7990 33.08/ 0.9054 25.89/ 0.7651 STARNet[45] — 111.61 −/− 30.83/ 0.9290 −/− MEMC-Net[46] — — −/− 33.47/ 0.9470 24.37/ 0.8380 STMN[47] — — −/− −/− 25.90/ 0.7878 SOFVSR[48] — 1.71 −/− −/− 26.01/ 0.7710 RISTN[49] — 3.67 −/− −/− 26.13/ 0.7920 MMCNN[24] — 10.58 −/− −/− 26.28/ 0.7844 RTVSR[50] — 15.00 −/− −/− 26.36/ 0.7900 TDAN[51] — 1.97 −/− −/− 26.42/ 0.7890 D3DNet[52] −/7 2.58 −/− 35.65/ 0.9330 26.52/ 0.7990 FFCVSR[53] — — −/− −/− 26.97/ 0.8300 EVSRNet[54] — — 27.85/ 0.8000 −/− −/− StableVSR[55] — — 27.97/ 0.8000 −/− −/− DUF[56] 7/7 5.8 28.63/ 0.8251 −/− 27.33/ 0.8319 PFNL[57] 7/7 3 29.63/ 0.8502 36.14/ 0.9363 26.73/ 0.8029 DNSTNet[58] — — −/− 36.86/ 0.9387 27.21/ 0.8220 RBPN[59] 7/7 12.2 30.09/ 0.8590 37.07/ 0.9435 27.12/ 0.8180 DSMC[60] — 11.58 30.29/ 0.8381 −/− 27.29/ 0.8403 Boosted EDVR[31] — — 30.53/ 0.8699 −/− −/− TMP[61] — 3.1 30.67/ 0.8710 −/− 27.10/ 0.8167 MuCAN[62] 5/7 — 30.88/ 0.8750 37.32/ 0.9465 −/− MSFFN[63] — — −/− 37.33/ 0.9467 27.23/ 0.8218 DAP[64] 15/5 — 30.59/ 0.8703 −/− −/− MultiBoot VSR[65] — 60.86 31.00/ 0.8822 −/− −/− SSL-bi[66] 15/14 1.0 31.06/ 0.8933 36.82/ 0.9419 27.15/ 0.8208 EDVR[67] 5/7 20.6 31.09/ 0.8800 37.61/ 0.9489 27.35/ 0.8264 RLSP[68] — 4.2 −/− 37.39/ 0.9470 27.15/ 0.8202 TGA[69] — 5.8 −/− 37.43/ 0.9480 27.19/ 0.8213 KSNet-bi[70] — 3.0 31.14/ 0.8862 37.54/ 0.9503 27.22/ 0.8245 VSR-T[71] 5/7 32.6 31.19/ 0.8815 37.71/ 0.9494 27.36/ 0.8258 PSRT-sliding[72] 5/− 14.8 31.32/ 0.8834 −/− −/− SeeClear[73] 5/5 229.23 31.32/ 0.8856 37.64/ 0.9503 27.80/ 0.840 4 DPR[74] — 6.3 31.38/ 0.8907 37.11/ 0.9446 27.19/ 0.824 3 BasicVSR[75] 15/14 6.3 31.42/ 0.8909 37.18/ 0.9450 27.24/ 0.825 1 Boosted BasicVSR[31] — — 31.42/ 0.8917 −/− −/− SATeCo[76] 6/6 — 31.62/ 0.8932 −/− 27.44/ 0.842 0 IconVSR[75] 15/14 8.7 31.67/ 0.8948 37.47/ 0.9476 27.39/ 0.8279 ICNet[77] — 18.34 31.71/ 0.8963 37.72/ 0.9477 27.43/ 0.8287 MSHPFNL[78] — 7.77 −/− 36.75/ 0.9406 27.70/ 0.8472 PA[79] 5/7 38.2 32.05/ 0.8941 −/− 28.02/ 0.8373 FTVSR[80] — 10.8 31.82/ 0.8960 −/− −/− $ {\mathrm{C}}^2 $-Matching[81] — — 32.05/ 0.9010 −/− 28.87/0.896 0 ETDM[82] — 8.4 32.15/ 0.9024 −/− −/− BasicVSR++[83] 30/14 7.3 32.39/ 0.9069 37.79/ 0.9500 27.79/ 0.8400 RTA[84] 5/7 17 31.30/ 0.8850 37.84/ 0.9498 27.90/ 0.8380 Semantic Lens[85] 5/− — 31.42/ 0.8881 −/− −/− TCNet[86] — 9.6 31.82/ 0.9002 37.94/ 0.9514 27.48/ 0.8380 TTVSR[87] 50/− 6.8 32.12/ 0.9021 −/− −/− VRT[88] 16/7 35.6 32.19/ 0.9006 38.20/ 0.9530 27.93/ 0.8425 CTVSR[89] 16/14 34.5 32.28/ 0.9047 −/− 28.03/ 0.8487 FTVSR++[90] — 10.8 32.42/ 0.9070 −/− −/− LGDFNet-BPP[91] — 9.0 32.53/ 0.9007 −/− 27.99/ 0.8409 PP-MSVSR-L[92] — 7.4 32.53/ 0.9083 −/− −/− CFD-BasicVSR++[127] 30/7 7.5 32.51/ 0.9083 37.90/ 0.9504 27.84/ 0.8406 RVRT[93] 30/14 10.8 32.75/ 0.9113 38.15/ 0.9527 27.99/ 0.8426 DFVSR[94] — 7.1 32.76/ 0.9081 38.25/0.955 6 27.92/ 0.8427 PSRT-recurrent[72] 16/14 13.4 32.72/ 0.9106 38.27/ 0.9536 28.07/ 0.8485 MFPI[95] −/− 7.3 32.81/ 0.9106 38.28/ 0.9534 28.11/ 0.8481 EvTexture[96] 15/− 8.9 32.79/ 0.9174 38.23/ 0.9544 29.51/ 0.8909 MIA-VSR[97] 16/14 16.5 32.78/0.922 0 38.22/ 0.9532 28.20/ 0.8507 CFD-PSRT[127] 30/7 13.6 32.83/ 0.9140 38.33/ 0.9548 28.18/ 0.8503 IART[98] 16/7 13.4 32.90/ 0.9138 38.14/ 0.9528 28.26/ 0.8517 EvTexture+[96] 15/− 10.1 32.93/0.9195 38.32/0.9558 29.78/0.8983 注: 加粗、下划线字体分别表示各性能的最优、次优结果. 表 3 对高斯模糊下采样后的视频进行VSR的性能(PSNR/SSIM)对比结果

Table 3 Performance (PSNR/SSIM) comparison of video super-resolution algorithm with Gaussian blur downsampling

对比方法 训练帧数 参数量(M) 高斯模糊下采样 UDM10 (Y通道) Vimeo-90K (Y通道) Vid4 (Y通道) Bicubic — — 28.47/ 0.8253 31.30/ 0.8687 21.80/ 0.5246 BRCN[99] — — −/− −/− 24.43/ 0.6334 ToFNet[12] 5/7 1.41 36.26/ 0.9438 34.62/ 0.9212 25.85/ 0.7659 TecoGAN[100] — 3.00 −/− −/− 25.89/− SOFVSR[48] — 1.71 −/− −/− 26.19/ 0.7850 RRN[101] — 3.4 38.96/ 0.9644 −/− 27.69/ 0.8488 TDAN[51] — 1.97 −/− −/− 26.86/ 0.8140 FRVSR[102] — 5.1 −/− −/− 26.69/ 0.8220 DUF[56] 7/7 5.8 38.48/ 0.9605 36.87/ 0.9447 27.38/ 0.8329 RLSP[68] — 4.2 38.48/ 0.9606 36.49/ 0.9403 27.48/ 0.8388 PFNL[57] 7/7 3 38.74/ 0.9627 −/− 27.16/ 0.8355 RBPN[59] 7/7 12.2 38.66/ 0.9596 37.20/ 0.9458 27.17/ 0.8205 TMP[61] — 3.1 −/− 37.33/ 0.9481 27.61/ 0.8428 TGA[69] — 5.8 38.74/ 0.9627 37.59/ 0.9516 27.63/ 0.8423 SSL-bi[66] 15/14 1.0 39.35/ 0.9665 37.06/ 0.9458 27.56/ 0.8431 RSDN[103] — 6.19 −/− 37.23/ 0.9471 27.02/ 0.8505 DAP[64] 15/5 — 39.50/ 0.9664 37.25/ 0.9472 −/− SeeClear[73] 5/5 229.23 39.72/ 0.9675 −/− −/− EDVR[67] 5/7 20.6 39.89/ 0.9686 37.81/ 0.9523 27.85/ 0.8503 DPR[74] — 6.3 39.72/ 0.9684 37.24/ 0.9461 27.89/ 0.8539 BasicVSR[75] 15/14 6.3 39.96/ 0.9694 37.53/ 0.9498 27.96/ 0.8553 IconVSR[75] 15/14 8.7 40.03/ 0.9694 37.84/ 0.9524 28.04/ 0.8570 R2D2[104] — 8.25 39.53/ 0.9670 −/− 28.13/0.9244 FTVSR[80] — 10.8 −/− −/− 28.31/ 0.8600 FDAN[105] — — 39.91/ 0.9686 37.75/ 0.9522 27.88/ 0.8508 PP-MSVSR[92] — 1.45 40.06/ 0.9699 37.54/ 0.9499 28.13/ 0.8604 GOVSR[106] — — 40.14/ 0.9713 37.63/ 0.9503 28.41/ 0.8724 ETDM[82] — 8.4 40.11/ 0.9707 −/− 28.81/ 0.8725 TTVSR[87] 50/− 6.8 40.41/ 0.9712 37.92/ 0.9526 28.40/ 0.8643 BasicVSR++[83] 30/14 7.3 40.72/ 0.9722 38.21/ 0.9550 29.04/ 0.8753 CFD-BasicVSR++[127] 30/7 7.5 40.77/ 0.9726 38.36/ 0.9557 29.14/ 0.8760 TCNet[86] — 9.6 −/− −/− 28.44/ 0.8730 VRT[88] 16/7 35.6 41.05/ 0.9737 38.72/0.9584 29.42/ 0.8795 CTVSR[89] 16/14 34.5 41.20/ 0.9740 38.83/0.9580 29.28/ 0.8811 FTVSR++[90] — 10.8 −/− −/− 28.80/ 0.8680 LGDFNet-BPP[91] — 9.0 40.81/0.9756 −/− 29.39/ 0.8798 RVRT[93] 30/14 10.8 40.90/ 0.9729 38.59/ 0.9576 29.54/ 0.8810 DFVSR[94] — 7.1 40.97/ 0.9733 38.51/ 0.9571 29.56/0.898 3 MFPI[95] −/− 7.3 41.08/0.974 1 38.70/ 0.9579 29.34/ 0.8781 表 4 真实场景下的VSR性能对比结果

Table 4 Performance comparison of real-world video super-resolution algorithm

对比方法 推理帧数 RealVSR MVSR $ 4\times $ PSNR (dB)/SSIM/LPIPS PSNR (dB)/SSIM/LPIPS RSDN[103] 之前帧 23.91/ 0.7743 /0.22423.15/ 0.7533 /0.279FSTRN[107] 7 23.36/ 0.7683 /0.24022.66/ 0.7433 /0.315TOF[12] 7 23.62/ 0.7739 /0.22022.80/ 0.7502 /0.279TDAN[51] 7 23.71/ 0.7737 /0.22923.07/ 0.7492 /0.282EDVR[67] 7 23.96/ 0.7781 /0.21623.51/ 0.7611 /0.268BasicVSR[75] 所有帧 24.00/ 0.7801 /0.20923.38/ 0.7594 /0.270MANA[108] 所有帧 23.89/ 0.7781 /0.22423.15/ 0.7513 /0.285TTVSR[87] 所有帧 24.08/ 0.7837 /0.21323.60/ 0.7686 /0.277ETDM[82] 所有帧 24.13/0.789 6/0.206 23.61/ 0.7662 /0.260BasicVSR++[83] 所有帧 24.24/ 0.7933 /0.21623.70/0.771 3/0.263 RealBasicVSR[28] 所有帧 23.74/ 0.7676 /0.17423.15/ 0.7603 /0.202EAVSR[30] 所有帧 24.20/ 0.7862 /0.20823.61/ 0.7618 /0.264EAVSR+[30] 所有帧 24.41/0.7953/0.212 23.94/0.772 6/0.259 EAVSRGAN+[30] 所有帧 23.99/ 0.7726 /0.17023.35/ 0.7611 /0.199表 5 不同帧间对齐方式的性能和参数比较

Table 5 Performance and parameter comparisons of different inter-frame alignment

对齐方式 参数量(M) 插值方法 光流(%) GT SpyNet 显式对齐(光流) 1.35 最近邻插值 31.84 31.78 双线性插值 31.92 31.85 双三次插值 31.93 31.89 混合对齐(光流引导 1.60 双线性插值 32.08 31.98 的可变形卷积) 混合对齐(光流引导 1.56 双线性插值 32.03 31.94 的可变形注意力) 混合对齐(光流引导 1.35 最近邻插值 31.81 31.82 的图像块对齐) 混合对齐(光流引导 1.36 基于注意力的 32.14 32.05 的隐式对齐) 隐式插值 表 6 GeForce RTX 3090平台下VSR的性能(PSNR/SSIM)/推理时间对比结果

Table 6 Performance (PSNR/SSIM) and inference time comparisons of VSR algorithm on GeForce RTX 3090 platform

对比方法 参数量

(M)推理时间

(ms)对齐

方式双三次插值下采样 高斯模糊下采样 REDS

(RGB通道)Vimeo-90K

(Y通道)Vid4

(Y通道)Vimeo-90K

(Y通道)Vid4

(Y通道)UDM10

(Y通道)Bicubic — <1 — 26.23/ 0.7319 31.32/ 0.8684 23.78/ 0.6374 31.30/ 0.8687 21.80/ 0.5346 28.47/ 0.8253 TOFlow[12] 1.41 250 显式 27.96/ 0.7981 33.08/ 0.9054 25.89/ 0.7651 34.62/ 0.9212 25.85/ 0.7659 36.26/ 0.9438 DUF[56] 5.8 737.5 无需 28.63/ 0.8251 −/− 27.33/ 0.8319 36.87/ 0.9447 27.38/ 0.8329 38.48/ 0.9605 EDVR[67] 20.6 188.2 隐式 31.09/ 0.8800 37.61/ 0.9489 27.35/ 0.8264 37.81/ 0.9523 27.85/ 0.8503 39.89/ 0.9686 TMP[61] 3.1 31.5 隐式 30.67/ 0.8710 −/− 27.10/ 0.8167 37.33/ 0.9481 27.61/ 0.8428 −/− BasicVSR[75] 6.3 45.4 显式 31.42/ 0.8909 37.18/ 0.9450 27.24/ 0.8251 37.53/ 0.9498 27.96/ 0.8553 39.96/ 0.9694 ICONVSR[75] 8.7 58.4 显式 31.67/ 0.8948 37.47/ 0.9476 27.39/ 0.8279 37.84/ 0.9524 28.04/ 0.8570 40.03/ 0.9694 TTVSR[87] 6.8 123.3 混合 32.12/ 0.9021 −/− −/− 37.92/ 0.9526 28.40/ 0.8643 40.41/ 0.9712 VRT[88] 35.6 1679 混合 32.17/ 0.9002 38.20/ 0.9530 27.93/ 0.8425 38.72/ 0.9584 29.37/ 0.8792 41.04/ 0.9737 BasicVSR++[83] 7.3 60.2 混合 32.39/ 0.9069 37.79/ 0.9500 27.79/ 0.8400 38.21/ 0.9550 29.04/ 0.8753 40.72/ 0.9722 PSRT[72] 13.4 1280.2 混合 32.72/ 0.9106 38.27/ 0.9536 28.07/ 0.8485 −/− −/− −/− MIA-VSR[97] 16.5 1194.6 无需 32.78 0.9220 38.22/ 0.9532 28.20/ 0.8507 −/− −/− −/− -

[1] Wan Z Y, Zhang B, Chen D D, Liao J. Bringing old films back to life. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17673−17682 [2] Li G, Ji J, Qin M H, Niu W, Ren B, Afghah F, et al. Towards high-quality and efficient video super-resolution via spatial-temporal data overfitting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 10259−10269 [3] Zhu H G, Wei Y C, Liang X D, Zhang C J, Zhao Y. CTP: Towards vision-language continual pretraining via compatible momentum contrast and topology preservation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 22200−22210 [4] Jiao S Y, Wei Y C, Wang Y W, Zhao Y, Shi H. Learning mask-aware CLIP representations for zero-shot segmentation. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1549 [5] Liu C, Sun D Q. On Bayesian adaptive video super resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(2): 346−360 doi: 10.1109/TPAMI.2013.127 [6] Ma Z Y, Liao R J, Tao X, Xu L, Jia J Y, Wu E H. Handling motion blur in multi-frame super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 5224−5232 [7] Wu Y X, Li F, Bai H H, Lin W S, Cong R M, Zhao Y. Bridging component learning with degradation modelling for blind image super-resolution. IEEE Transactions on Multimedia, DOI: 10.1109/TMM.2022.3216115 [8] 张帅勇, 刘美琴, 姚超, 林春雨, 赵耀. 分级特征反馈融合的深度图像超分辨率重建. 自动化学报, 2022, 48(4): 992−1003Zhang Shuai-Yong, Liu Mei-Qin, Yao Chao, Lin Chun-Yu, Zhao Yao. Hierarchical feature feedback network for depth super-resolution reconstruction. Acta Automatica Sinica, 2022, 48(4): 992−1003 [9] Charbonnier P, Blanc-Feraud L, Aubert G, Barlaud M. Two deterministic half-quadratic regularization algorithms for computed imaging. In: Proceedings of the 1st International Conference on Image Processing (ICIP). Austin, USA: IEEE, 1994. 168−172 [10] Lai W S, Huang J B, Ahuja N, Yang M H. Fast and accurate image super-resolution with deep Laplacian pyramid networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019, 41(11): 2599−2613 doi: 10.1109/TPAMI.2018.2865304 [11] Zha L, Yang Y, Lai Z C, Zhang Z W, Wen J. A lightweight dense connected approach with attention on single image super-resolution. Electronics, 2021, 10(11): Article No. 1234 doi: 10.3390/electronics10111234 [12] Xue T F, Chen B A, Wu J J, Wei D L, Freeman W T. Video enhancement with task-oriented flow. International Journal of Computer Vision, 2019, 127(8): 1106−1125 doi: 10.1007/s11263-018-01144-2 [13] Liu C, Sun D Q. A Bayesian approach to adaptive video super resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Colorado Springs, USA: IEEE, 2011. 209−216 [14] Nah S, Baik S, Hong S, Moon G, Son S, Timofte R, et al. NTIRE 2019 challenge on video deblurring and super-resolution: Dataset and study. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Long Beach, USA: IEEE, 2019. 1996−2005 [15] Protter M, Elad M, Takeda H, Milanfar P. Generalizing the nonlocal-means to super-resolution reconstruction. IEEE Transactions on Image Processing, 2009, 18(1): 36−51 doi: 10.1109/TIP.2008.2008067 [16] Shahar O, Faktor A, Irani M. Space-time super-resolution from a single video. In: Proceedings of the IEEE Conference Computer Vision and Pattern Recognition (CVPR). Colorado Springs, USA: IEEE, 2011. 3353−3360 [17] Li D Y, Wang Z F. Video superresolution via motion compensation and deep residual learning. IEEE Transactions on Computational Imaging, 2017, 3(4): 749−762 doi: 10.1109/TCI.2017.2671360 [18] Fautier T. 4K and ultra high definition video services explained [Online], available: https://www.harmonicinc.com/free-4k-demo-footage/, March 22, 2021 [19] Myanmar 60p, Harmonic Inc. [Online], available: http://www.harmonicinc.com/resources/videos/4kvideo-clip-center, May 1, 2017Myanmar 60p, Harmonic Inc. [Online], available: http://www.harmonicinc.com/resources/videos/4kvideo-clip-center, May 1, 2017 [20] ITS. The consumer digital video library [Online], available: https://www.cdvl.org, March 20, 2024 [21] Mercat A, Viitanen M, Vanne J. UVG dataset: 50/120 fps 4K sequences for video codec analysis and development. In: Proceedings of the 11th ACM Multimedia Systems Conference. Istanbul, Turkey: ACM, 2020. 297−302 [22] Liu D, Wang Z W, Fan Y C, Liu X M, Wang Z Y, Chang S Y, et al. Robust video super-resolution with learned temporal dynamics. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017. 2526−2534 [23] Tao X, Gao H Y, Liao R J, Wang J, Jia J Y. Detail-revealing deep video super-resolution. In: Proceedings of the IEEE Inter national Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017. 4482−4490 [24] Wang Z Y, Yi P, Jiang K, Jiang J J, Han Z, Lu T, et al. Multi-memory convolutional neural network for video super-resolution. IEEE Transactions on Image Processing, 2019, 28(5): 2530−2544 doi: 10.1109/TIP.2018.2887017 [25] Yi P, Wang Z Y, Jiang K, Jiang J J, Ma J Y. Progressive fusion video super-resolution network via exploiting non-local spatio-temporal correlations. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, South Korea: IEEE, 2019. 3106−3115 [26] Yu J Y, Liu J E, Bo L F, Mei T. Memory-augmented non-local attention for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17813−17822 [27] Yang X, Xiang W M, Zeng H, Zhang L. Real-world video super-resolution: A benchmark dataset and a decomposition based learning scheme. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 4761−4770 [28] Chan K C K, Zhou S C, Xu X Y, Loy C C. Investigating tradeoffs in real-world video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 5952−5961 [29] Lee J, Lee M, Cho S, Lee S. Reference-based video super-resolution using multi-camera video triplets. In: Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17803−17812 [30] Wang R H, Liu X H, Zhang Z L, Wu X H, Feng C M, Zhang L, et al. Benchmark dataset and effective inter-frame alignment for real-world video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 1168−1177 [31] Huang Y H, Dong H, Pan J S, Zhu C, Liang B Y, Guo Y, et al. Boosting video super resolution with patch-based temporal redundancy optimization. In: Proceedings of the 32nd International Conference on Artificial Neural Networks on Artificial Neural Networks and Machine Learning. Heraklion, Greece: Springer, 2023. 362−375 [32] Zhou S C, Yang P Q, Wang J Y, Luo Y H, Loy C C. Upscale-a-video: Temporal-consistent diffusion model for real-world video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2023. 2535−2545 [33] Wang X T, Xie L B, Dong C, Shan Y. Real-ESRGAN: Training real-world blind super-resolution with pure synthetic data. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). Montreal, Canada: IEEE, 2021. 1905−1914 [34] Singh A, Singh J. Survey on single image based super-resolution——Implementation challenges and solutions. Multimedia Tools and Applications, 2020, 79(3−5): 1641−1672 [35] You Z Y, Li Z Y, Gu J J, Yin Z F, Xue T F, Dong C. Depicting beyond scores: Advancing image quality assessment through multi-modal language models. In: Proceedings of the 18th European Conference. Milan, Italy: Springer, 2024. 259−276 [36] You Z Y, Gu J J, Li Z Y, Cai X, Zhu K W, Xue T F, et al. Descriptive image quality assessment in the wild. arXiv preprint arXiv: 2405.18842, 2024. [37] Xie L B, Wang X T, Zhang H L, Dong C, Shan Y. VFHQ: A high-quality dataset and benchmark for video face super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 656−665 [38] Zhou F, Sheng W, Lu Z T, Qiu G P. A database and model for the visual quality assessment of super-resolution videos. IEEE Transactions on Broadcasting, 2024, 70(2): 516−532 doi: 10.1109/TBC.2024.3382949 [39] Jin J, Zhang X X, Fu X, Zhang H, Lin W S, Lou J, et al. Just noticeable difference for deep machine vision. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(6): 3452−3461 doi: 10.1109/TCSVT.2021.3113572 [40] Kappeler A, Yoo S, Dai Q Q, Katsaggelos A K. Video super-resolution with convolutional neural networks. IEEE Transactions on Computational Imaging, 2016, 2(2): 109−122 doi: 10.1109/TCI.2016.2532323 [41] Lucas A, Lopez-Tapia S, Molina R, Katsaggelos A K. Generative adversarial networks and perceptual losses for video super-resolution. IEEE Transactions on Image Processing, 2019, 28(7): 3312−3327 doi: 10.1109/TIP.2019.2895768 [42] Caballero J, Ledig C, Aitken A, Acosta A, Totz J, Wang Z H, et al. Real-time video super-resolution with spatio-temporal networks and motion compensation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 2848−2857 [43] Kim S Y, Lim J, Na T, Kim M. 3DSRNet: Video super-resolution using 3D convolutional neural networks. arXiv preprint arXiv: 1812.09079, 2018. [44] Li D Y, Liu Y, Wang Z F. Video super-resolution using non-simultaneous fully recurrent convolutional network. IEEE Transactions on Image Processing, 2019, 28(3): 1342−1355 doi: 10.1109/TIP.2018.2877334 [45] Haris M, Shakhnarovich G, Ukita N. Space-time-aware multi-resolution video enhancement. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 2856−2865 [46] Bao W B, Lai W S, Zhang X Y, Gao Z Y, Yang M H. MEMC-Net: Motion estimation and motion compensation driven neural network for video interpolation and enhancement. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(3): 933−948 doi: 10.1109/TPAMI.2019.2941941 [47] Zhu X B, Li Z Z, Lou J G, Shen Q. Video super-resolution based on a spatio-temporal matching network. Pattern Recognition, 2021, 110: Article No. 107619 doi: 10.1016/j.patcog.2020.107619 [48] Wang L G, Guo Y L, Liu L, Lin Z P, Deng X P, An W. Deep video super-resolution using HR optical flow estimation. IEEE Transactions on Image Processing, 2020, 29: 4323−4336 doi: 10.1109/TIP.2020.2967596 [49] Zhu X B, Li Z Z, Zhang X Y, Li C S, Liu Y Q, Xue Z Y. Residual invertible spatiotemporal network for video super-resolution. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI). Honolulu, USA: AAAI, 2019. 5981−5988 [50] Bare B, Yan B, Ma C X, Li K. Real-time video super-resolution via motion convolution kernel estimation. Neurocomputing, 2019, 367: 236−245 doi: 10.1016/j.neucom.2019.07.089 [51] Tian Y P, Zhang Y L, Fu Y, Xu C L. TDAN: Temporally-deformable alignment network for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 3357−3366 [52] Ying X Y, Wang L G, Wang Y Q, Sheng W D, An W, Guo Y L. Deformable 3D convolution for video super-resolution. IEEE Signal Processing Letters, 2020, 27: 1500−1504 doi: 10.1109/LSP.2020.3013518 [53] Yan B, Lin C M, Tan W M. Frame and feature-context video super-resolution. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI). Honolulu, USA: AAAI, 2019. 5597−5604 [54] Liu S L, Zheng C J, Lu K D, Gao S, Wang N, Wang B F, et al. EVSRNet: Efficient video super-resolution with neural architecture search. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 2480−2485 [55] Rota C, Buzzelli M, van de Weijer J. Enhancing perceptual quality in video super-resolution through temporally-consistent detail synthesis using diffusion models. In: Proceedings of the 18th European Conference on Computer Vision. Milan, Italy: Springer, 2024. 36−53 [56] Jo Y, Oh S W, Kang J, Kim S J. Deep video super-resolution network using dynamic upsampling filters without explicit motion compensation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 3224−3232 [57] Yi P, Wang Z Y, Jiang K, Jiang J J, Lu T, Ma J Y. A progressive fusion generative adversarial network for realistic and consistent video super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 44(5): 2264−2280Yi P, Wang Z Y, Jiang K, Jiang J J, Lu T, Ma J Y. A progressive fusion generative adversarial network for realistic and consistent video super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 44(5): 2264−2280 [58] Sun W, Sun J Q, Zhu Y, Zhang Y N. Video super-resolution via dense non-local spatial-temporal convolutional network. Neurocomputing, 2020, 403: 1−12 doi: 10.1016/j.neucom.2020.04.039 [59] Haris M, Shakhnarovich G, Ukita N. Recurrent back-projection network for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 3892−3901 [60] Liu H Y, Zhao P, Ruan Z B, Shang F H, Liu Y Y. Large motion video super-resolution with dual subnet and multi-stage communicated upsampling. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI). Virtual Event: AAAI, 2021. 2127−2135Liu H Y, Zhao P, Ruan Z B, Shang F H, Liu Y Y. Large motion video super-resolution with dual subnet and multi-stage communicated upsampling. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI). Virtual Event: AAAI, 2021. 2127−2135 [61] Zhang Z Q, Li R H, Guo S, Cao Y, Zhang L. TMP: Temporal motion propagation for online video super-resolution. IEEE Transactions on Image Processing, 2024, 33: 5014−5028 doi: 10.1109/TIP.2024.3453048 [62] Li W B, Tao X, Guo T A, Qi L, Lu J B, Jia J Y. MuCAN: Multi-correspondence aggregation network for video super-resolution. In: Proceedings of the 16th European Conference on Computer Vision (ECCV). Glasgow, UK: Springer, 2020. 335−351 [63] Song H H, Xu W J, Liu D, Liu B, Liu Q S, Metaxas D N. Multi-stage feature fusion network for video super-resolution. IEEE Transactions on Image Processing, 2021, 30: 2923−2934 doi: 10.1109/TIP.2021.3056868 [64] Fuoli D, Danelljan M, Timofte R, van Gool L. Fast online video super-resolution with deformable attention pyramid. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). Waikoloa, USA: IEEE, 2023. 1735−1744 [65] Kalarot R, Porikli F. MultiBoot Vsr: Multi-stage multi-reference bootstrapping for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Long Beach, USA: IEEE, 2019. 2060−2069 [66] Xia B, He J W, Zhang Y L, Wang Y T, Tian Y P, Yang W M, et al. Structured sparsity learning for efficient video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 22638−22647 [67] Wang X T, Chan K C K, Yu K, Dong C, Loy C C. EDVR: Video restoration with enhanced deformable convolutional networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Long Beach, USA: IEEE, 2019. 1954−1963 [68] Fuoli D, Gu S H, Timofte R. Efficient video super-resolution through recurrent latent space propagation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW). Seoul, South Korea: IEEE, 2019. 3476−3485 [69] Isobe T, Li S J, Jia X, Yuan S X, Slabaugh G, Xu C J, et al. Video super-resolution with temporal group attention. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 8005−8014 [70] Jin S, Liu M Q, Yao C, Lin C Y, Zhao Y. Kernel dimension matters: To activate available kernels for real-time video super-resolution. In: Proceedings of the 31st ACM International Conference on Multimedia (ACM MM). Ottawa, Canada: ACM, 2023. 8617−8625 [71] Cao J Z, Li Y W, Zhang K, van Gool L. Video super-resolution transformer. arXiv preprint arXiv: 2106.06847, 2021. [72] Shi S W, Gu J J, Xie L B, Wang X T, Yang Y J, Dong C. Rethinking alignment in video super-resolution transformers. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 2615 [73] Tang Q, Zhao Y, Liu M Q, Yao C. SeeClear: Semantic distillation enhances pixel condensation for video super-resolution. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: 2024.Tang Q, Zhao Y, Liu M Q, Yao C. SeeClear: Semantic distillation enhances pixel condensation for video super-resolution. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: 2024. [74] Huang C, Li J H, Chu L, Liu D, Lu Y. Disentangle propagation and restoration for efficient video recovery. In: Proceedings of the 31st ACM International Conference on Multimedia (ACM MM). Ottawa, Canada: ACM, 2023. 8336−8345 [75] Chan K C K, Wang X T, Yu K, Dong C, Loy C C. BasicVSR: The search for essential components in video super-resolution and beyond. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 4945−4954 [76] Chen Z K, Long F C, Qiu Z F, Yao T, Zhou W G, Luo J B, et al. Learning spatial adaptation and temporal coherence in diffusion models for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 9232−9241 [77] Leng J X, Wang J, Gao X B, Hu B, Gan J, Gao C Q. ICNet: Joint alignment and reconstruction via iterative collaboration for video super-resolution. In: Proceedings of the 30th ACM International Conference on Multimedia (ACM MM). Lisboa, Portugal: ACM, 2022. 6675−6684 [78] Yi P, Wang Z Y, Jiang K, Jiang J J, Lu T, Ma J Y. A progressive fusion generative adversarial network for realistic and consistent video super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(5): 2264−2280 [79] Zhang F, Chen G G, Wang H, Li J J, Zhang C M. Multi-scale video super-resolution transformer with polynomial approximation. IEEE Transactions on Circuits and Systems for Video Technology, 2023, 33(9): 4496−4506 doi: 10.1109/TCSVT.2023.3278131 [80] Qiu Z W, Yang H, Fu J L, Fu D M. Learning spatiotemporal frequency-transformer for compressed video super-resolution. In: Proceedings of the 17th European Conference on Computer Vision (ECCV). Tel Aviv, Israel: Springer, 2022. 257−273 [81] Jiang Y M, Chan K C K, Wang X T, Loy C C, Liu Z W. Reference-based image and video super-resolution via C.2-matching. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 45(7): 8874−8887 [82] Isobe T, Jia X, Tao X, Li C L, Li R H, Shi Y J, et al. Look back and forth: Video super-resolution with explicit temporal difference modeling. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17390−17399 [83] Chan K C K, Zhou S C, Xu X Y, Chen C L. Basicvsr++: Improving video super-resolution with enhanced propagation and alignment. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 5962−5971Chan K C K, Zhou S C, Xu X Y, Chen C L. Basicvsr++: Improving video super-resolution with enhanced propagation and alignment. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 5962−5971 [84] Zhou K, Li W B, Lu L Y, Han X G, Lu J B. Revisiting temporal alignment for video restoration. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 6043−6052 [85] Tang Q, Zhao Y, Liu M Q, Jin J, Yao C. Semantic lens: Instance-centric semantic alignment for video super-resolution. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI). Vancouver, Canada: AAAI, 2024. 5154−5161 [86] Liu M Q, Jin S, Yao C, Lin C Y, Zhao Y. Temporal consistency learning of inter-frames for video super-resolution. IEEE Transactions on Circuits and Systems for Video Technology, 2023, 33(4): 1507−1520 doi: 10.1109/TCSVT.2022.3214538 [87] Liu C X, Yang H, Fu J L, Qian X M. Learning trajectory-aware transformer for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 5677−5686 [88] Liang J Y, Cao J Z, Fan Y C, Zhang K, Ranjan R, Li Y W, et al. VRT: A video restoration transformer. IEEE Transactions on Image Processing, 2024, 33: 2171−2182 doi: 10.1109/TIP.2024.3372454 [89] Tang J, Lu C Y, Liu Z X, Li J L, Dai H, Ding Y. CTVSR: Collaborative spatial-temporal transformer for video super-resolution. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(6): 5018−5032 doi: 10.1109/TCSVT.2023.3340439 [90] Qiu Z W, Yang H, Fu J L, Liu D C, Xu C, Fu D M. Learning degradation-robust spatiotemporal frequency-transformer for video super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(12): 14888−14904 doi: 10.1109/TPAMI.2023.3312166 [91] Zhang C P, Wang X T, Xiong R Q, Fan X P, Zhao D B. Local-global dynamic filtering network for video super-resolution. IEEE Transactions on Computational Imaging, 2023, 9: 963−976 doi: 10.1109/TCI.2023.3321980 [92] Jiang L L, Wang N, Dang Q Q, Liu R, Lai B H. PP-MSVSR: Multi-stage video super-resolution. arXiv preprint arXiv: 2112.02828, 2021. [93] Liang J Y, Fan Y C, Xiang X Y, Ranjan R, Ilg E, Green S, et al. Recurrent video restoration transformer with guided deformable attention. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 28 [94] Dong S T, Lu F, Wu Z, Yuan C. DFVSR: Directional frequency video super-resolution via asymmetric and enhancement alignment network. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence (IJCAI). Macao, China: IJCAI.org, 2023. 681−689 [95] Li F, Zhang L F, Liu Z K, Lei J, Li Z B. Multi-frequency representation enhancement with privilege information for video super-resolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 12768−12779 [96] Kai D C, Lu J Y, Zhang Y Y, Sun X Y. EvTexture: Event-driven texture enhancement for video super-resolution. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 917 [97] Zhou X Y, Zhang L H, Zhao X R, Wang K Z, Li L D, Gu S H. Video super-resolution transformer with masked inter&intra-frame attention. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 25399−25408 [98] Xu K, Yu Z W, Wang X, Mi M B, Yao A. An implicit alignment for video super-resolution. arXiv preprint arXiv: 2305.00163, 2023. [99] Huang Y, Wang W, Wang L. Video super-resolution via bidirectional recurrent convolutional networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(4): 1015−1028 doi: 10.1109/TPAMI.2017.2701380 [100] Chu M Y, Xie Y, Mayer J, Leal-Taixé L, Thuerey N. Learning temporal coherence via self-supervision for GAN-based video generation. ACM Transactions on Graphics, 2020, 39(4): Article No.75 [101] Isobe T, Zhu F, Jia X, Wang S J. Revisiting temporal modeling for video super-resolution. arXiv preprint arXiv: 2008.05765, 2020. [102] Sajjadi M S M, Vemulapalli R, Brown M. Frame-recurrent video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 6626−6634 [103] Isobe T, Jia X, Gu S H, Li S J, Wang S J, Tian Q. Video super-resolution with recurrent structure-detail network. In: Proceedings of the 16th European Conference on Computer Vision (ECCV). Glasgow, UK: Springer, 2020. 645−660 [104] Baniya A A, Lee T K, Eklund P W, Aryal S, Robles-Kelly A. Online video super-resolution using information replenishing unidirectional recurrent model. Neurocomputing, 2023, 546: Article No. 126355 doi: 10.1016/j.neucom.2023.126355 [105] Lin J Y, Huang Y, Wang L. FDAN: Flow-guided deformable alignment network for video super-resolution. arXiv preprint arXiv: 2105.05640, 2021. [106] Yi P, Wang Z Y, Jiang K, Jiang J J, Lu T, Tian X, et al. Omniscient video super-resolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 4409−4418 [107] Li S, He F X, Du B, Zhang L F, Xu Y H, Tao D C. Fast spatio-temporal residual network for video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 10514−10523 [108] Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B. High-resolution image synthesis with latent diffusion models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 10684−10695Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B. High-resolution image synthesis with latent diffusion models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 10684−10695 [109] Gupta A, Yu L, Sohn K, Gu X Y, Hahn M, Li F F, et al. Photorealistic video generation with diffusion models. In: Proceedings of the European Conference on Computer Vision (ECCV). Milan, Italy: Springer, 2024. 393−411 [110] Yang X, He C H, Ma J Q, Zhang L. Motion-guided latent diffusion for temporally consistent real-world video super-resolution. In: Proceedings of the 18th European Conference on Computer Vision. Milan, Italy: Springer, 2024. 224−242 [111] Liu H Y, Ruan Z B, Zhao P, Dong C, Shang F H, Liu Y Y, et al. Video super-resolution based on deep learning: A comprehensive survey. Artificial Intelligence Review, 2022, 55(8): 5981−6035 doi: 10.1007/s10462-022-10147-y [112] Tu Z G, Li H Y, Xie W, Liu Y Z, Zhang S F, Li B X, et al. Optical flow for video super-resolution: A survey. Artificial Intelligence Review, 2022, 55(8): 6505−6546 doi: 10.1007/s10462-022-10159-8 [113] Baniya A A, Lee T K, Eklund P W, Aryal S. A methodical study of deep learning based video super-resolution. Authorea Preprints, DOI: 10.36227/techrxiv.23896986.v1 [114] 江俊君, 程豪, 李震宇, 刘贤明, 王中元. 深度学习视频超分辨率技术概述. 中国图象图形学报, 2023, 28(7): 1927−1964 doi: 10.11834/jig.220130Jiang Jun-Jun, Cheng Hao, Li Zhen-Yu, Liu Xian-Ming, Wang Zhong-Yuan. Deep learning based video-related super-resolution technique: A survey. Journal of Image and Graphics, 2023, 28(7): 1927−1964 doi: 10.11834/jig.220130 [115] Dong C, Loy C C, He K M, Tang X O. Image super-resolution using deep convolutional networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(2): 295−307 doi: 10.1109/TPAMI.2015.2439281 [116] Drulea M, Nedevschi S. Total variation regularization of local-global optical flow. In: Proceedings of the 14th International IEEE Conference on Intelligent Transportation Systems (ITSC). Washington, USA: IEEE, 2011. 318−323 [117] Haris M, Shakhnarovich G, Ukita N. Deep back-projection networks for super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 1664−1673 [118] Dai J F, Qi H Z, Xiong Y W, Li Y, Zhang G D, Hu H, et al. Deformable convolutional networks. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017. 764−773 [119] Zhu X Z, Hu H, Lin S, Dai J F. Deformable ConvNets V2: More deformable, better results. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 9300−9308 [120] Chan K C K, Wang X T, Yu K, Dong C, Loy C C. Understanding deformable alignment in video super-resolution. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI). Virtual Event: AAAI, 2021. 973−981Chan K C K, Wang X T, Yu K, Dong C, Loy C C. Understanding deformable alignment in video super-resolution. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI). Virtual Event: AAAI, 2021. 973−981 [121] Butler D J, Wulff J, Stanley G B, Black M J. A naturalistic open source movie for optical flow evaluation. In: Proceedings of the 12th European Conference on Computer Vision (ECCV). Florence, Italy: Springer, 2012. 611−625 [122] Lian W Y, Lian W J. Sliding window recurrent network for efficient video super-resolution. In: Proceedings of the European Conference on Computer Vision Workshops (ECCVW). Tel Aviv, Israel: Springer, 2022. 591−601 [123] Xiao J, Jiang X Y, Zheng N X, Yang H, Yang Y F, Yang Y Q, et al. Online video super-resolution with convolutional kernel bypass grafts. IEEE Transactions on Multimedia, 2023, 25: 8972−8987 doi: 10.1109/TMM.2023.3243615 [124] Li D S, Shi X Y, Zhang Y, Cheung K C, See S, Wang X G, et al. A simple baseline for video restoration with grouped spatial-temporal shift. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 9822−9832 [125] Geng Z C, Liang L M, Ding T Y, Zharkov I. RSTT: Real-time spatial temporal transformer for space-time video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17420−17430 [126] Lin L J, Wang X T, Qi Z G, Shan Y. Accelerating the training of video super-resolution models. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence (AAAI). Washington, USA: AAAI, 2023. 1595−1603 [127] Li H, Chen X, Dong J X, Tang J H, Pan J S. Collaborative feedback discriminative propagation for video super-resolution. arXiv preprint arXiv: 2404.04745, 2024. [128] Hu M S, Jiang K, Wang Z, Bai X, Hu R M. CycMuNet+: Cycle-projected mutual learning for spatial-temporal video super-resolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(11): 13376−13392 [129] Xiao Y, Yuan Q Q, Jiang K, Jin X Y, He J, Zhang L P, et al. Local-global temporal difference learning for satellite video super-resolution. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(4): 2789−2802 doi: 10.1109/TCSVT.2023.3312321 [130] Hui Y X, Liu Y, Liu Y F, Jia F, Pan J S, Chan R, et al. VJT: A video transformer on joint tasks of deblurring, low-light enhancement and denoising. arXiv preprint arXiv: 2401.14754, 2024. [131] Song Y X, Wang M L, Yang Z J, Xian X Y, Shi Y K. NegVSR: Augmenting negatives for generalized noise modeling in real-world video super-resolution. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI). Vancouver, Canada: AAAI, 2024. 10705−10713Song Y X, Wang M L, Yang Z J, Xian X Y, Shi Y K. NegVSR: Augmenting negatives for generalized noise modeling in real-world video super-resolution. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI). Vancouver, Canada: AAAI, 2024. 10705−10713 [132] Wang Y W, Isobe T, Jia X, Tao X, Lu H C, Tai Y W. Compression-aware video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 2012−2021 [133] Youk G, Oh J, Kim M. FMA-Net: Flow-guided dynamic filtering and iterative feature refinement with multi-attention for joint video super-resolution and deblurring. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 44−55 [134] Zhang Y H, Yao A. RealViformer: Investigating attention for real-world video super-resolution. In: Proceedings of the 18th European Conference on Computer Vision. Milan, Italy: Springer, 2024. 412−428 [135] Xiang X Y, Tian Y P, Zhang Y L, Fu Y, Allebach J P, Xu C L. Zooming Slow-Mo: Fast and accurate one-stage space-time video super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 3367−3376 [136] Jeelani M, Sadbhawna, Cheema N, Illgner-Fehns K, Slusallek P, Jaiswal S. Expanding synthetic real-world degradations for blind video super resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Vancouver, Canada: IEEE, 2023. 1199−1208 [137] Bai H R, Pan J S. Self-supervised deep blind video superresolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(7): 4641−4653 doi: 10.1109/TPAMI.2024.3361168 [138] Pan J S, Bai H R, Dong J X, Zhang J W, Tang J H. Deep blind video super-resolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 4791−4800 [139] Chen H Y, Li W B, Gu J J, Ren J J, Sun H Z, Zou X Y, et al. Low-res leads the way: Improving generalization for super-resolution by self-supervised learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 25857−25867 [140] Yuan J, Ma J, Wang B, Hu W M. Content-decoupled contrastive learning-based implicit degradation modeling for blind image super-resolution. arXiv preprint arXiv: 2408.05440, 2024. [141] Chen Y H, Chen S C, Chen Y H, Lin Y Y, Peng W H. MoTIF: Learning motion trajectories with local implicit neural functions for continuous space-time video super-resolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 23074−23084 [142] Huang C, Li J H, Chu L, Liu D, Lu Y. Arbitrary-scale video super-resolution guided by dynamic context. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI). Vancouver, Canada: AAAI, 2024. 2294−2302 [143] Li Z K, Liu H Y, Shang F H, Liu Y Y, Wan L, Feng W. SAVSR: Arbitrary-scale video super-resolution via a learned scale-adaptive network. In: Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI). Vancouver, Canada: AAAI, 2024. 3288−3296 [144] Huang Z W, Huang A L, Hu X T, Hu C, Xu J, Zhou S C. Scale-adaptive feature aggregation for efficient space-time video super-resolution. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). Waikoloa, USA: IEEE, 2024. 4216−4227 [145] Xu Y R, Park T, Zhang R, Zhou Y, Shechtman E, Liu F, et al. VideoGigaGAN: Towards detail-rich video super-resolution. arXiv preprint arXiv: 2404.12388, 2024. [146] He Q X, Wang S, Liu T, Liu C, Liu X Q. Enhancing measurement precision for rotor vibration displacement via a progressive video super resolution network. IEEE Transactions on Instrumentation and Measurement, 2024, 73: Article No. 6005113 [147] Chang J H, Zhao Z H, Jia C M, Wang S Q, Yang L B, Mao Q, et al. Conceptual compression via deep structure and texture synthesis. IEEE Transactions on Image Processing, 2022, 31: 2809−2823 doi: 10.1109/TIP.2022.3159477 [148] Chang J H, Zhang J, Li J G, Wang S Q, Mao Q, Jia C M, et al. Semantic-aware visual decomposition for image coding. International Journal of Computer Vision, 2023, 131(9): 2333−2355 doi: 10.1007/s11263-023-01809-7 [149] Ren B, Li Y W, Liang J Y, Ranjan R, Liu M Y, Cucchiara R, et al. Sharing key semantics in transformer makes efficient image restoration. arXiv preprint arXiv: 2405.20008, 2024. [150] Wu R Y, Sun L C, Ma Z Y, Zhang L. One-step effective diffusion network for real-world image super-resolution. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: 2024.Wu R Y, Sun L C, Ma Z Y, Zhang L. One-step effective diffusion network for real-world image super-resolution. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: 2024. [151] Sun H Z, Li W B, Liu J Z, Chen H Y, Pei R J, Zou X Y, et al. CoSER: Bridging image and language for cognitive super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 25868−25878 [152] Wu R Y, Yang T, Sun L C, Zhang Z Q, Li S, Zhang L. SeeSR: Towards semantics-aware real-world image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 25456−25467 [153] Zhang Y H, Zhang H S, Chai X N, Xie R, Song L, Zhang W J. MRIR: Integrating multimodal insights for diffusion-based realistic image restoration. arXiv preprint arXiv: 2407.03635, 2024. [154] Zhang Y H, Zhang H S, Chai X N, Cheng Z X, Xie R, Song L, et al. Diff-restorer: Unleashing visual prompts for diffusion-based universal image restoration. arXiv preprint arXiv: 2407.03636, 2024. [155] Ouyang H, Wang Q Y, Xiao Y X, Bai Q Y, Zhang J T, Zheng K C, et al. CoDeF: Content deformation fields for temporally consistent video processing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 8089−8099 [156] Hu J F, Gu J J, Yu S Y, Yu F H, Li Z Y, You Z Y, et al. Interpreting low-level vision models with causal effect maps. arXiv preprint arXiv: 2407.19789, 2024. [157] Gu J J, Dong C. Interpreting super-resolution networks with local attribution maps. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 9195−9204 [158] Cao J Z, Liang J Y, Zhang K, Wang W G, Wang Q, Zhang Y L, et al. Towards interpretable video super-resolution via alternating optimization. In: Proceedings of the 17th European Conference on Computer Vision (ECCV). Tel Aviv, Israel: Springer, 2022. 393−411 -

下载:

下载: