The Classification, Applications, and Prospects of Prompt Learning in Computer Vision

-

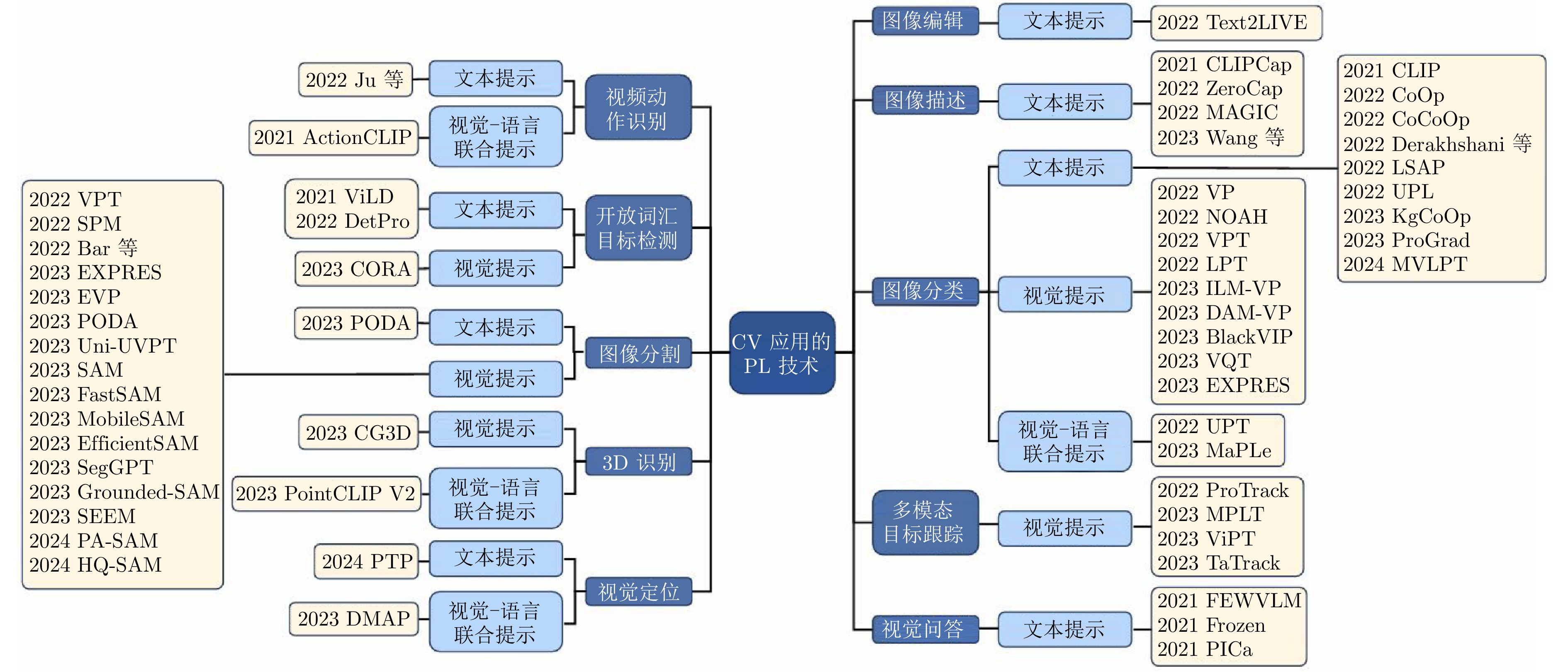

摘要: 随着计算机视觉(CV)的快速发展, 人们对于提高视觉任务的性能和泛化能力的需求不断增长, 导致模型的复杂度与对各种资源的需求进一步提高. 提示学习(PL)作为一种能有效地提升模型性能和泛化能力、重用预训练模型和降低计算量的方法, 在一系列下游视觉任务中受到广泛的关注与研究. 然而, 现有的PL综述缺乏对PL方法全面的分类和讨论, 也缺乏对现有实验结果进行深入的研究以评估现有方法的优缺点. 因此, 本文对PL在CV领域的分类、应用和性能进行全面的概述. 首先, 介绍PL的研究背景和定义, 并简要回顾CV领域中PL研究的最新进展. 其次, 对目前CV领域中的PL方法进行分类, 包括文本提示、视觉提示和视觉−语言联合提示, 对每类PL方法进行详细阐述并探讨其优缺点. 接着, 综述PL在十个常见下游视觉任务中的最新进展. 此外, 提供三个CV应用的实验结果并进行总结和分析, 全面讨论不同PL方法在CV领域的表现. 最后, 基于上述讨论对PL在CV领域面临的挑战和机遇进行分析, 为进一步推动PL在CV领域的发展提供前瞻性的思考.Abstract: With the rapid development of computer vision (CV), the growing demand for improving the performance and generalization of visual tasks has led to a further increase in model complexity and the need for various resources. Prompt learning (PL), as a method to effectively enhance model performance and generalization, reuse pre-trained models, and reduce computational costs, has gained extensive attention and research in a series of downstream visual tasks. However, existing PL surveys lack comprehensive classification and discussion of PL methods, as well as in-depth analysis of existing experimental results to evaluate the strengths and weaknesses of current methods. Therefore, this paper provides a comprehensive overview of the classification, application, and performance of PL in the field of CV. Firstly, the research background and definition of PL are introduced, followed by a brief review of recent PL progress in CV. Secondly, PL methods in CV are categorized into text prompt, visual prompt, and vision-language joint prompt, with each category elaborated in detail and its strengths and weaknesses discussed. Next, recent advances of PL in ten common downstream visual tasks are reviewed. Additionally, experimental results from three CV applications are provided, summarized, and analyzed to comprehensively discuss the performance of different PL methods in CV. Finally, based on the above discussions, the challenges and opportunities faced by PL in CV are analyzed, offering forward-looking insights to further advance the development of PL in the CV domain.

-

Key words:

- Computer vision /

- prompt learning /

- vision-language large model /

- pre-trained model

-

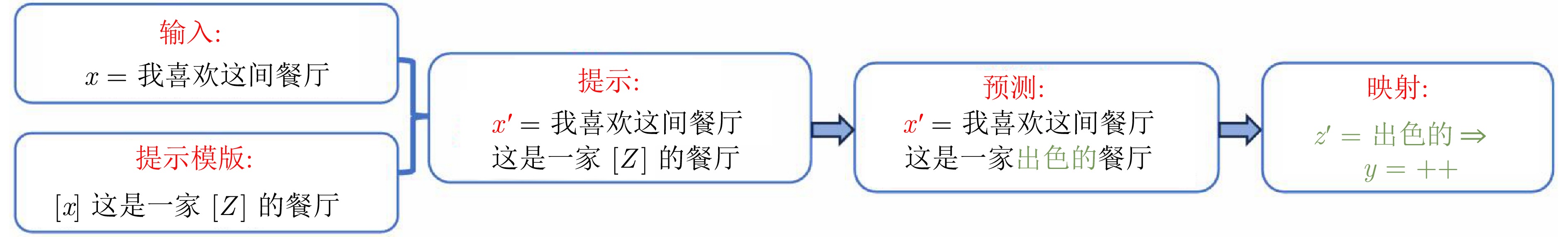

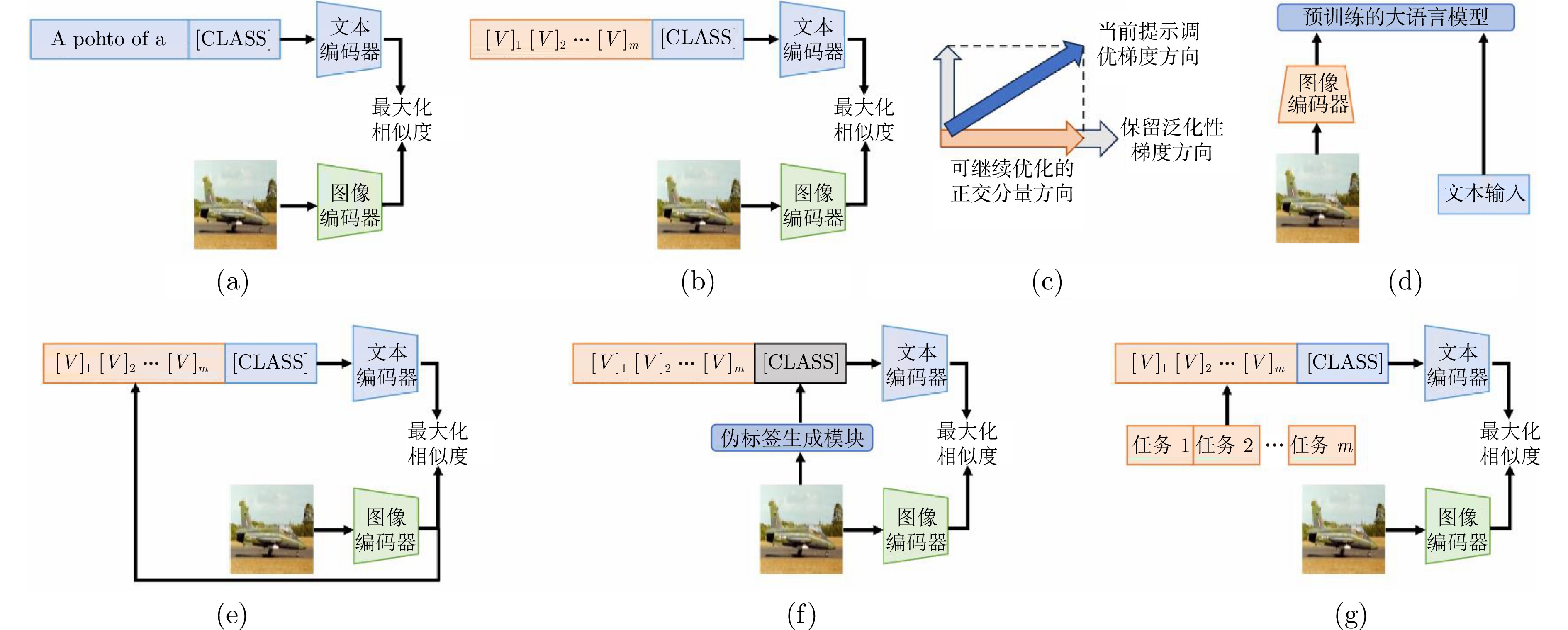

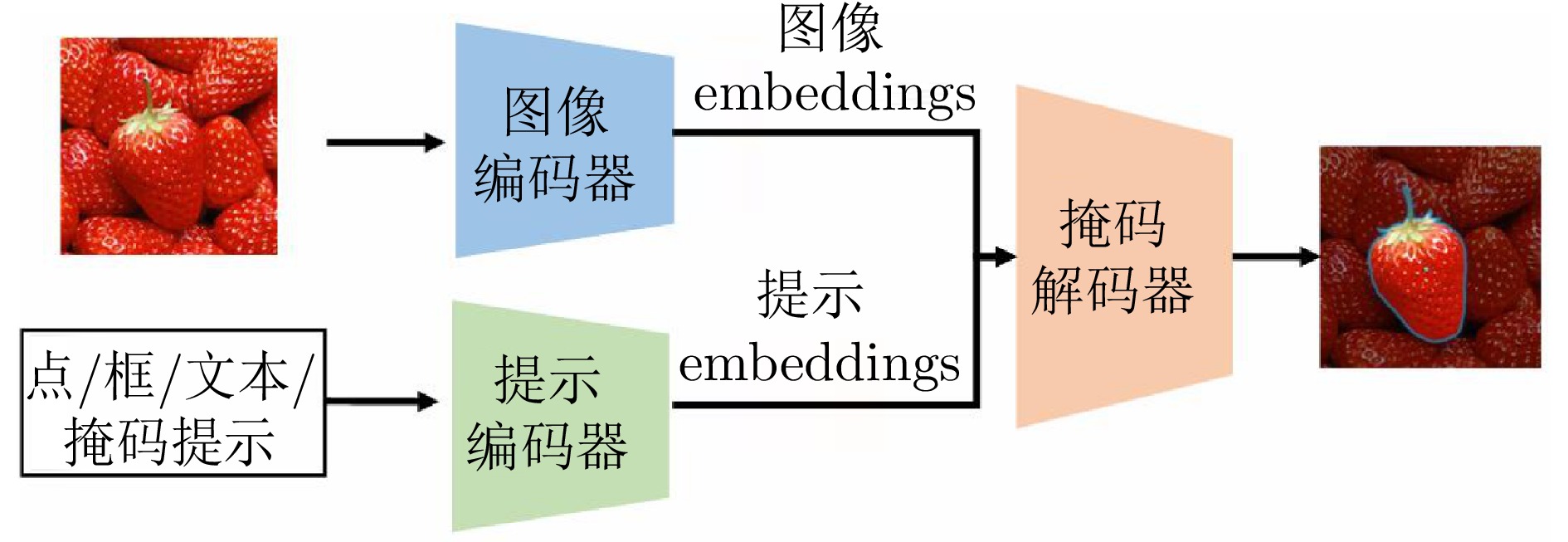

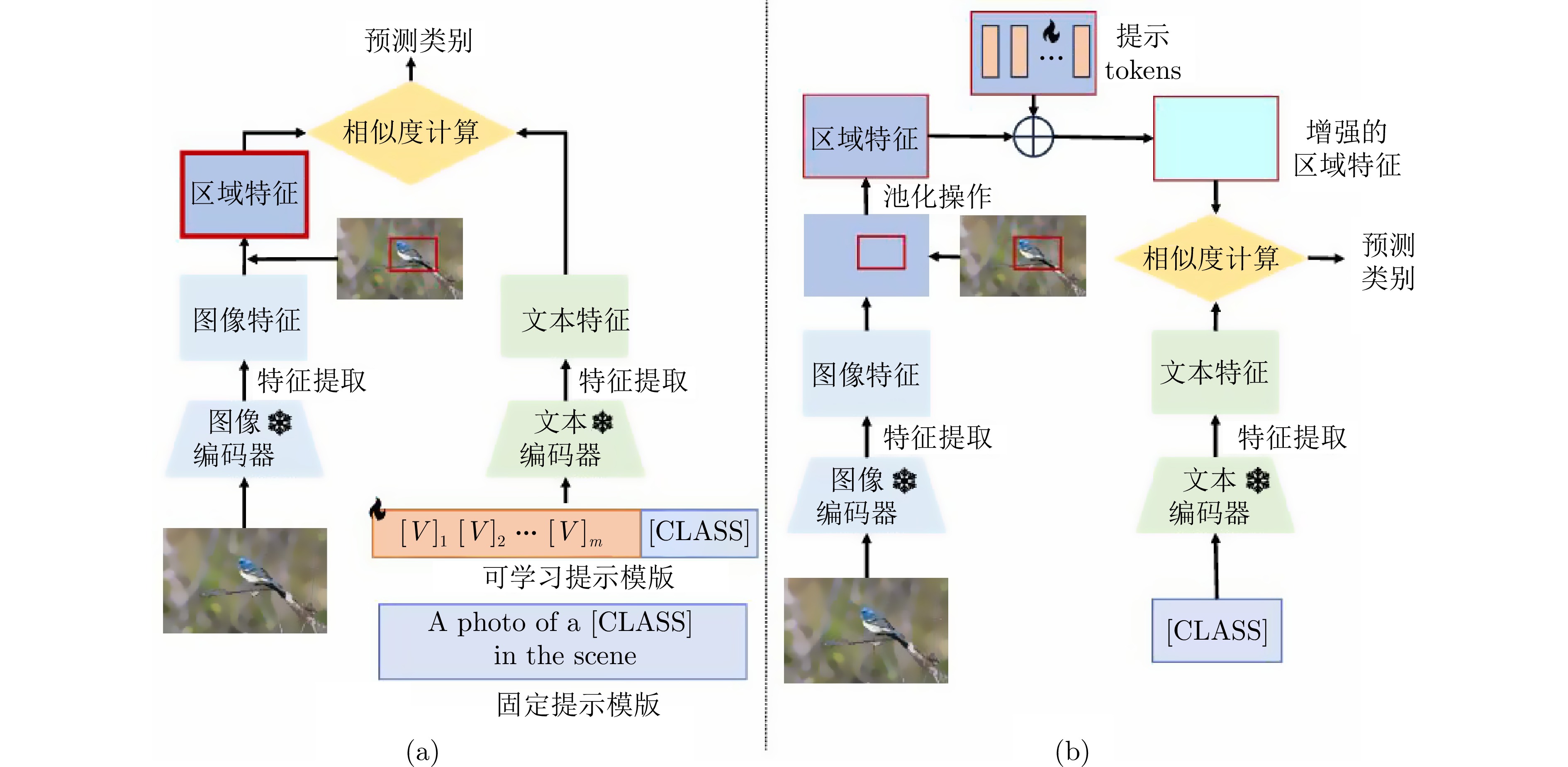

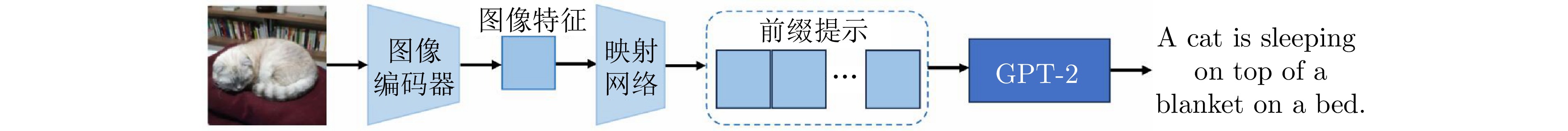

图 3 文本提示((a)基于手工设计的文本提示; (b)连续提示; (c)基于梯度引导的文本提示; (d)基于视觉映射到语言空间的提示; (e)基于图像引导的文本提示; (f)基于伪标签的文本提示; (g)基于多任务的文本提示)

Fig. 3 Text prompts ((a) Text prompt based on hand-crafted; (b) Continuous prompt; (c) Text prompt based on gradient guidance; (d) Prompt based on the mapping from vision to the language space; (e) Text prompt based on image guidance; (f) Text prompt based on pseudo-labels; (g) Text prompt based on multi-task)

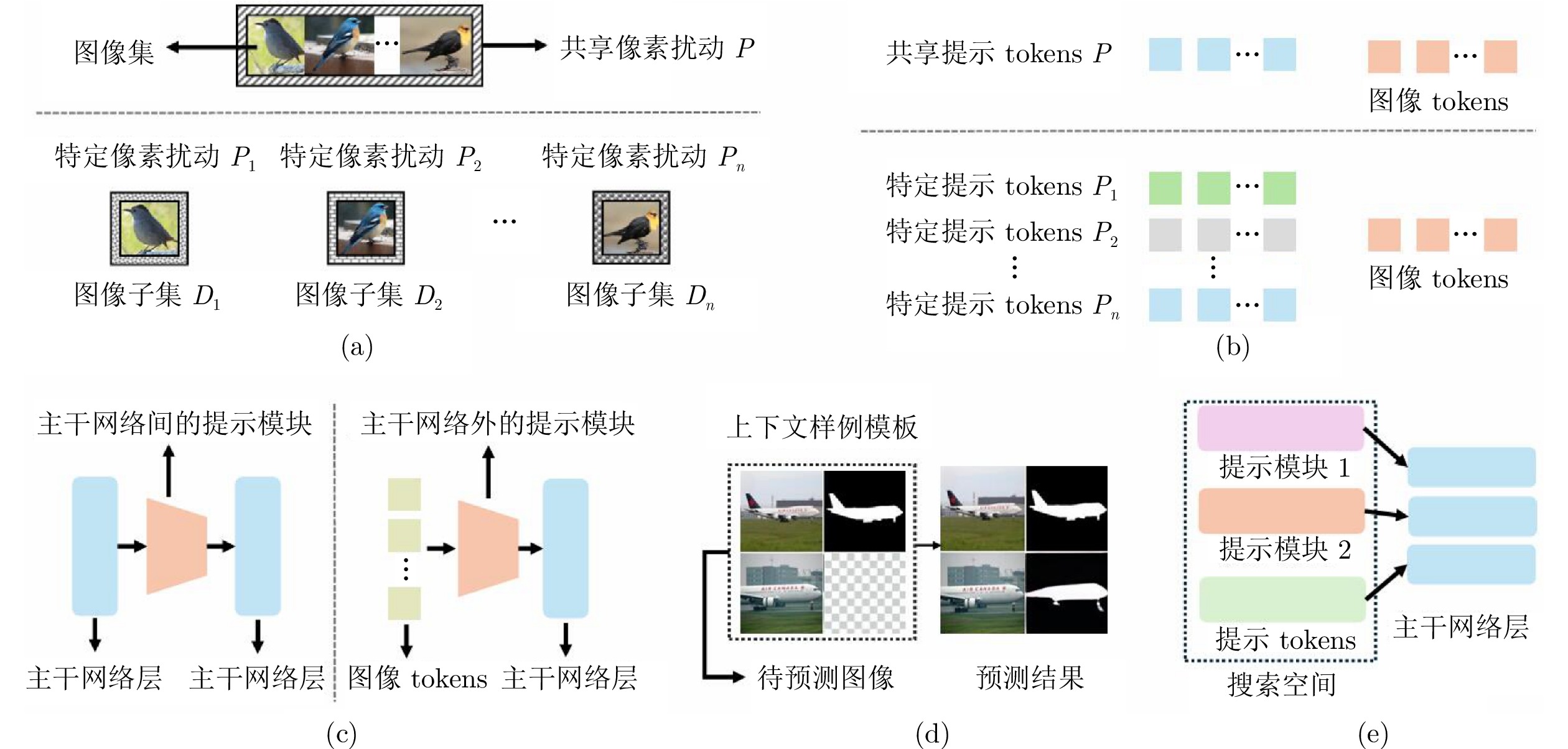

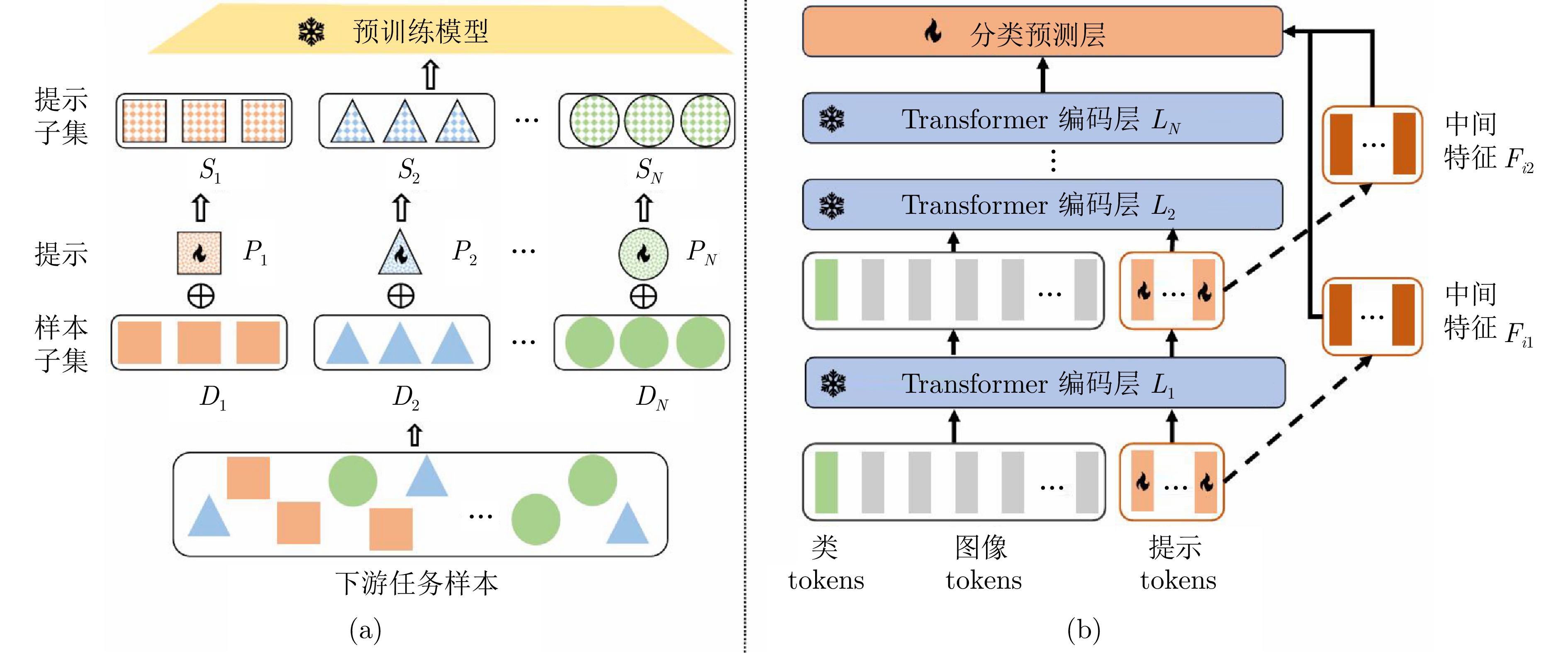

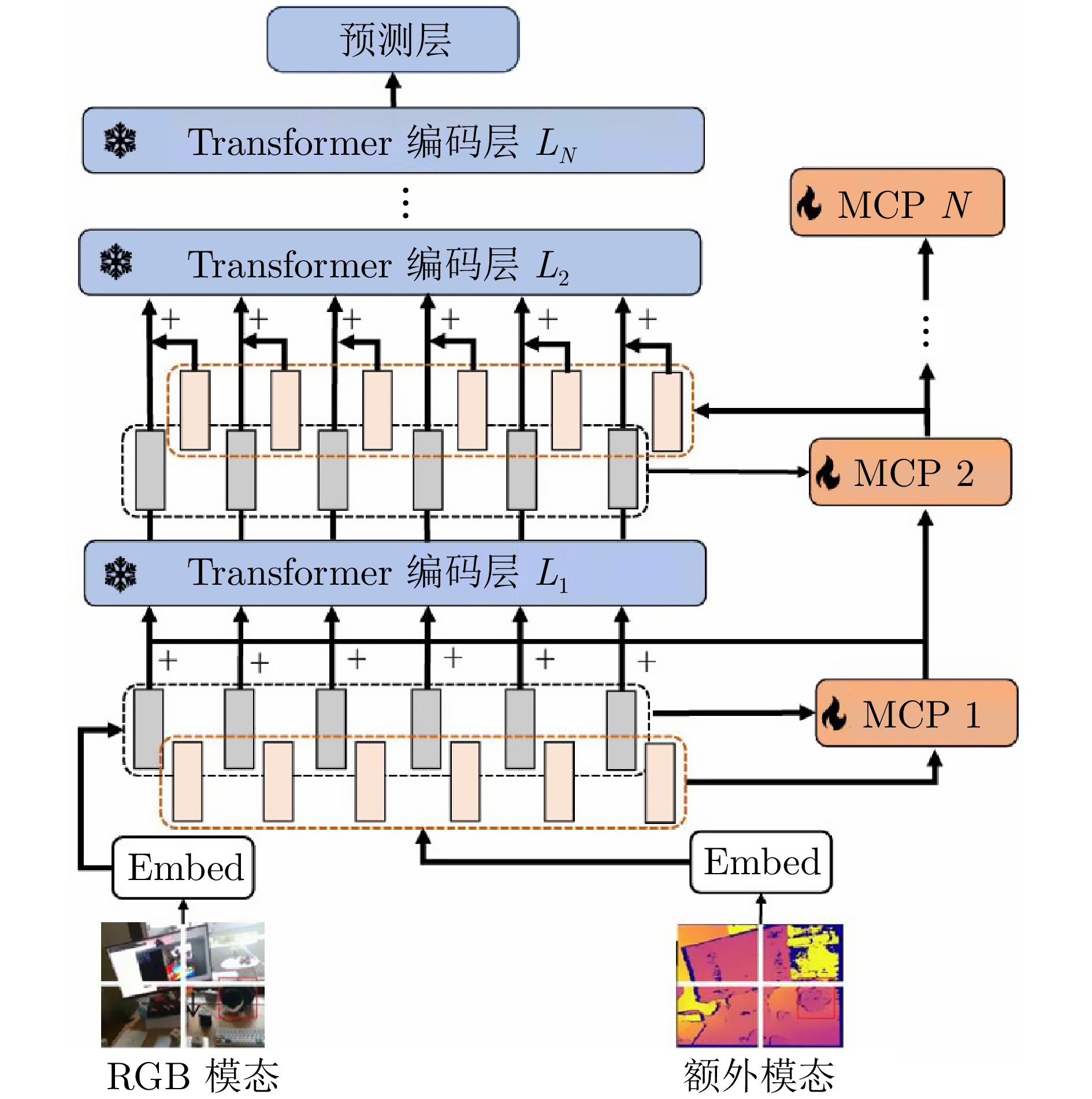

图 4 视觉提示((a)基于像素扰动的视觉提示; (b)基于提示tokens的视觉提示; (c)基于提示模块的视觉提示; (d)基于上下文样例模板的视觉提示; (e)基于网络结构搜索的视觉提示)

Fig. 4 Visual prompts ((a) Pixel perturbation-based visual prompt; (b) Prompt tokens-based visual prompt; (c) Prompt module-based visual prompt; (d) Contextual example template-based visual prompt; (e) Network architecture search-based visual prompt)

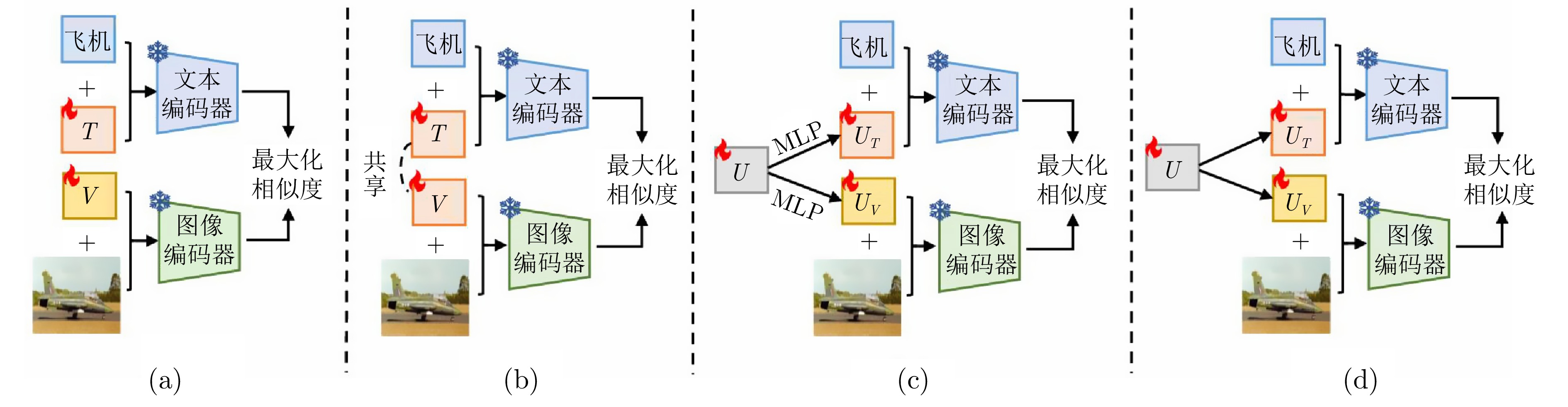

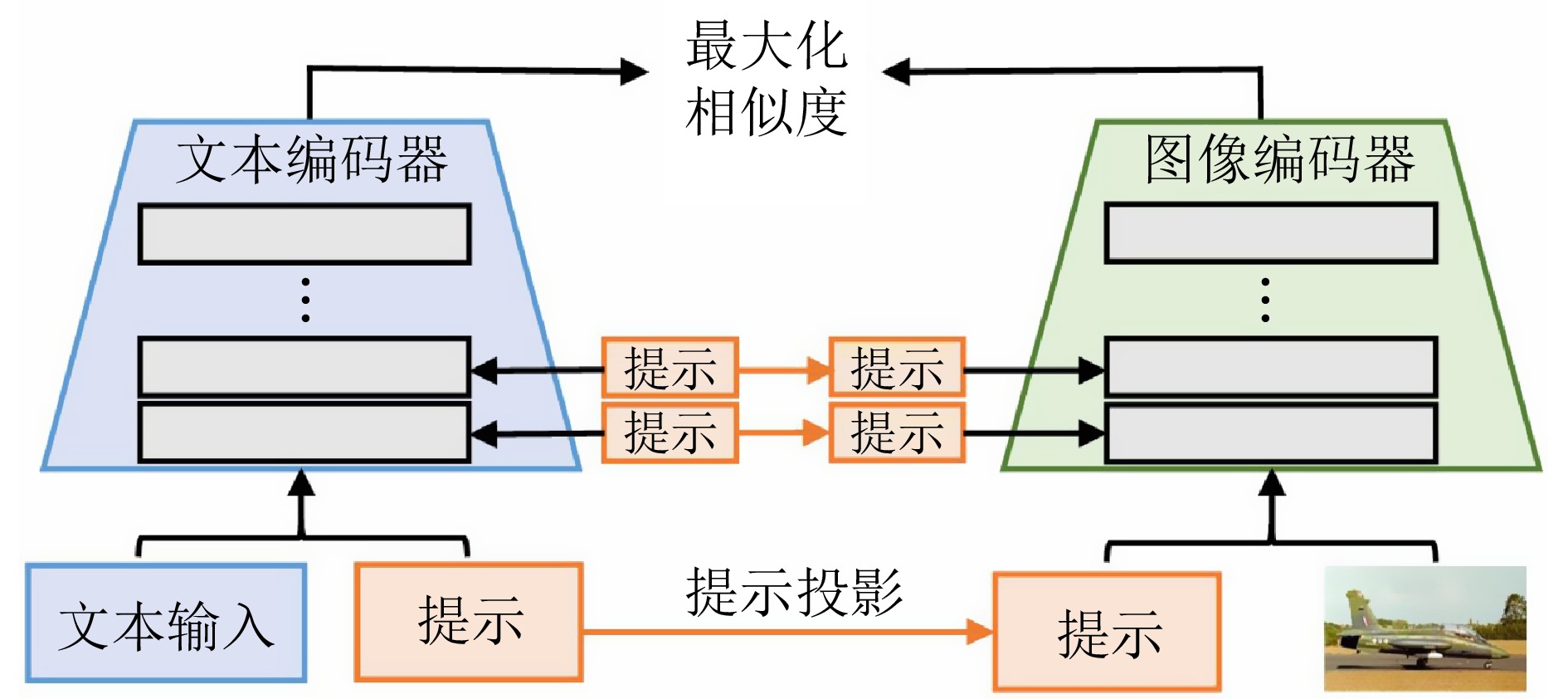

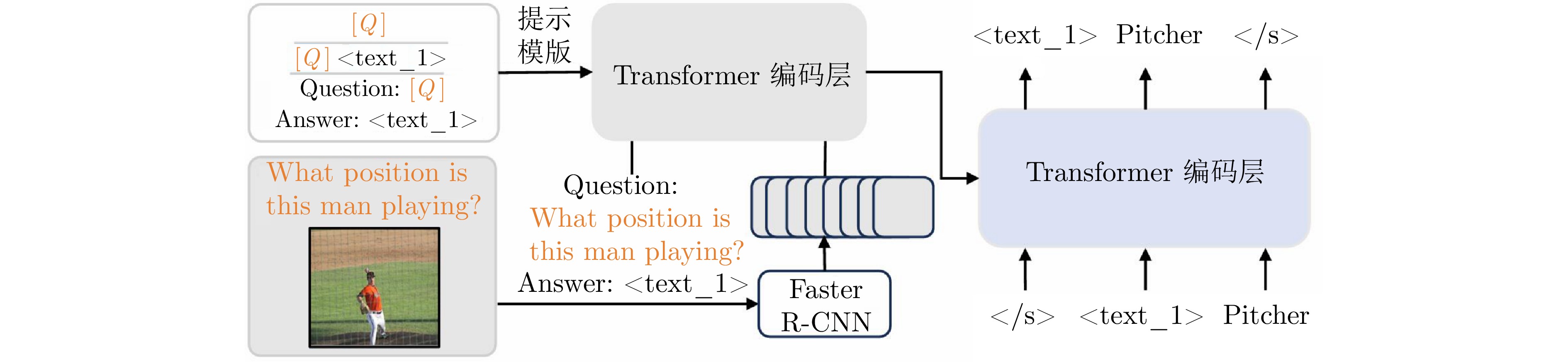

图 5 在视觉−语言模型上引入视觉−语言联合提示的四种方法对比((a)独立训练两种模态的提示; (b)共享地训练两种模态的提示; (c)使用两个MLP层来生成提示; (d)使用一个轻量级的自注意力网络来生成提示)

Fig. 5 Comparison of four methods for introducing vision-language joint prompts in vision-language models ((a) Independently train the prompts of the two modalities; (b) Train the prompts of two modalities in a shared manner; (c) Utilizing two MLP layers to generate prompts; (d) Employing a lightweight self-attention network to generate prompts)

表 1 CV领域视觉与多模态基础大模型及其参数量

Table 1 Vision and multimodal foundational large models in CV with their parameter size

视觉模型 多模态模型 DERT Vision Transformer DINOv2 LVM CLIP SAM MiniGPT-4 LLaVA Yi-VL 年份 2020 2021 2023 2023 2021 2023 2023 2023 2024 参数量 40 M 86 M ~ 632 M 1.1 B 300 M ~ 3 B 400 M ~ 1.6 B 1 B 13 B 7 B ~ 13 B 6 B ~ 34 B 表 2 图像分类任务中PL方法和非PL示方法的性能对比(加粗表示性能最优, 下划线表示性能次优) (%)

Table 2 In the task of image classification, a comparison of the performance between prompted and unprompted methods is presented (Bold indicates the best performance and underline indicates the second-best performance) (%)

ViT-B-22K Swin-B-22K 非PL方法 PL方法 非PL方法 PL方法 全面微调 线性探测 VP VPT DAM-VP 全面微调 线性探测 VP VPT DAM-VP CIFAR-10 97.4 96.3 94.2 96.8 97.3 98.3 96.3 94.8 96.9 97.3 CIFAR-100 68.9 63.4 78.7 78.8 88.1 73.3 61.6 80.6 80.5 88.1 Food-101 84.9 84.4 80.5 83.3 86.9 91.7 88.2 83.4 90.1 90.5 DTD 64.3 63.2 59.5 65.8 73.1 72.4 73.6 75.1 78.5 80.0 SVHN 87.4 36.6 87.6 78.1 87.9 91.2 43.5 80.3 87.8 81.7 CUB-200 87.3 85.3 84.6 88.5 87.5 89.7 88.6 86.5 90.0 90.4 Stanford Dogs 89.4 86.2 84.5 90.2 92.3 86.2 85.9 81.3 84.8 88.5 Flowers102 98.8 97.9 97.7 99.0 99.2 98.3 99.4 98.6 99.3 99.6 表 3 从基类到新类的泛化设置下CLIP、CoOp、CoCoOp和MaPLe的对比(HM代表对基类和新类的准确率取调和平均值, 加粗表示性能最优) (%)

Table 3 Comparison of CLIP, CoOp, CoCoOp and MaPLe under the generalization setting from base class to new class (HM denotes the harmonic mean of the accuracies on both base and new classes, bold indicates the best performance) (%)

数据集 CLIP CoOp CoCoOp MaPLe Base New HM Base New HM Base New HM Base New HM ImageNet 72.43 68.14 70.22 76.47 67.88 71.92 75.98 70.43 73.10 76.66 70.54 73.47 Caltech101 96.84 94.00 95.40 98.00 89.81 93.73 97.96 93.81 95.84 97.74 94.36 96.02 OxfordPets 91.17 97.26 94.12 93.67 95.29 94.47 95.20 97.69 96.43 95.43 97.76 96.58 StanfordCars 63.37 74.89 68.65 78.12 60.40 68.13 70.49 73.59 72.01 72.94 74.00 73.47 Flowers102 72.08 77.80 74.83 97.60 59.67 74.06 94.87 71.75 81.71 95.92 72.46 82.56 Food-101 90.10 91.22 90.66 88.33 82.26 85.19 90.70 91.29 90.99 90.71 92.05 91.38 FGVCAircraft 27.19 36.29 31.09 40.44 22.30 28.75 33.41 23.71 27.74 37.44 35.61 36.50 SUN397 69.36 75.35 72.23 80.60 65.89 72.51 79.74 76.86 78.27 80.82 78.70 79.75 DTD 53.24 59.90 56.37 79.44 41.18 54.24 77.01 56.00 64.85 80.36 59.18 68.16 EuroSAT 56.48 64.05 60.03 92.19 54.74 68.69 87.49 60.04 71.21 94.07 73.23 82.35 UCF101 70.53 77.50 73.85 84.69 56.05 67.46 82.33 73.45 77.64 83.00 78.66 80.77 平均值 69.34 74.22 71.10 82.69 63.22 71.66 80.47 71.69 75.83 82.28 75.14 78.55 表 4 ADE20K数据集上PL方法和非PL方法的语义分割性能对比

Table 4 Comparison of semantic segmentation performance on the ADE20K dataset between prompted and unprompted methods

参数量(M) mIoU (%) PL方法 SPM 14.90 45.05 VPT 13.39 42.11 AdaptFormer 16.31 44.00 SAM — 53.00 EfficientSAM — 51.80 非PL方法 fully tuning 317.29 47.53 head tuning 13.14 37.77 表 5 COCO数据集上PL方法和非PL方法的实例分割性能对比

Table 5 Comparison of instance segmentation performance on the COCO dataset between prompted and unprompted methods

mAP (%) PL方法 SAM 46.8 EfficientSAM 44.4 HQ-SAM 49.5 PA-SAM 49.9 非PL方法 Mask2Former 43.7 OneFormer 45.6 表 6 多模态跟踪任务中PL方法和非PL方法的性能对比 (%)

Table 6 Performance comparison between prompted and unprompted methods in multimodal tracking tasks (%)

RGBT234 LasHeR precision success precision success PL

方法TaTrack 87.2 64.4 85.3 61.8 MPLT 88.4 65.7 72.0 57.1 ViPT 83.5 61.7 65.1 52.5 ProTrack 79.5 59.9 53.8 42.0 非PL

方法OsTrack 72.9 54.9 51.5 41.2 FANet 78.7 55.3 44.1 30.9 SGT 72.0 47.2 36.5 25.1 -

[1] Xu M W, Yin W S, Cai D Q, Yi R J, Xu D L, Wang Q P, et al. A survey of resource-efficient LLM and multimodal foundation models. arXiv preprint arXiv: 2401.08092, 2024. [2] Zhou J H, Chen Y Y, Hong Z C, Chen W H, Yu Y, Zhang T, et al. Training and serving system of foundation models: A comprehensive survey. IEEE Open Journal of the Computer Society, 2024, 5: 107−119 doi: 10.1109/OJCS.2024.3380828 [3] Liu Z M, Yu X T, Fang Y, Zhang X M. GraphPrompt: Unifying pre-training and downstream tasks for graph neural networks. In: Proceedings of the ACM Web Conference. Austin, USA: ACM, 2023. 417−428 [4] Liu P F, Yuan W Z, Fu J L, Jiang Z B, Hayashi H, Neubig G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 2023, 55(9): Article No. 195 [5] Oquab M, Darcet T, Moutakanni T, Vo H V, Szafraniec M, Khalidov V, et al. DINOv2: Learning robust visual features without supervision. arXiv preprint arXiv: 2304.07193, 2023. [6] Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. In: Proceedings of the 38th International Conference on Machine Learning. Virtual Event: PMLR, 2021. 8748−8763Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. In: Proceedings of the 38th International Conference on Machine Learning. Virtual Event: PMLR, 2021. 8748−8763 [7] Kirillov A, Mintun E, Ravi N, Mao H, Rolland C, Gustafson L, et al. Segment anything. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 3992−4003 [8] 廖宁, 曹敏, 严骏驰. 视觉提示学习综述. 计算机学报, 2024, 47(4): 790−820 doi: 10.11897/SP.J.1016.2024.00790Liao Ning, Cao Min, Yan Jun-Chi. Visual prompt learning: A survey. Chinese Journal of Computers, 2024, 47(4): 790−820 doi: 10.11897/SP.J.1016.2024.00790 [9] Zang Y H, Li W, Zhou K Y, Huang C, Loy C C. Unified vision and language prompt learning. arXiv preprint arXiv: 2210.07225, 2022. [10] Khattak M U, Rasheed H, Maaz M, Khan S, Khan F S. MaPLe: Multi-modal prompt learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 19113−19122 [11] Chen S F, Ge C J, Tong Z, Wang J L, Song Y B, Wang J, et al. AdaptFormer: Adapting vision Transformers for scalable visual recognition. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 1212 [12] Deng J, Dong W, Socher R, Li L J, Li K, Li F F. ImageNet: A large-scale hierarchical image database. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Miami, USA: IEEE, 2009. 248−255 [13] Zhou K Y, Yang J K, Loy C C, Liu Z W. Learning to prompt for vision-language models. International Journal of Computer Vision, 2022, 130(9): 2337−2348 doi: 10.1007/s11263-022-01653-1 [14] Zhou K Y, Yang J K, Loy C C, Liu Z W. Conditional prompt learning for vision-language models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 16795−16804 [15] Derakhshani M M, Sanchez E, Bulat A, da Costa V G, Snoek C G M, Tzimiropoulos G, et al. Bayesian prompt learning for image-language model generalization. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 15191−15200 [16] Yao H T, Zhang R, Xu C S. Visual-language prompt tuning with knowledge-guided context optimization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 6757−6767 [17] Bulat A, Tzimiropoulos G. LASP: Text-to-text optimization for language-aware soft prompting of vision & language models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 23232−23241 [18] Zhu B E, Niu Y L, Han Y C, Wu Y, Zhang H W. Prompt-aligned gradient for prompt tuning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 15613−15623 [19] Huang T, Chu J, Wei F Y. Unsupervised prompt learning for vision-language models. arXiv preprint arXiv: 2204.03649, 2022. [20] Shen S, Yang S J, Zhang T J, Zhai B H, Gonzalez J E, Keutzer K, et al. Multitask vision-language prompt tuning. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). Waikoloa, USA: IEEE, 2024. 5644−5655 [21] Bahng H, Jahanian A, Sankaranarayanan S, Isola P. Exploring visual prompts for adapting large-scale models. arXiv preprint arXiv: 2203.17274, 2022. [22] Chen A C, Yao Y G, Chen P Y, Zhang Y H, Liu S J. Understanding and improving visual prompting: A label-mapping perspective. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 19133−19143 [23] Oh C, Hwang H, Lee H Y, Lim Y, Jung G, Jung J, et al. BlackVIP: Black-box visual prompting for robust transfer learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 24224−24235 [24] Huang Q D, Dong X Y, Chen D D, Zhang W M, Wang F F, Hua G, et al. Diversity-aware meta visual prompting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 10878−10887 [25] Jia M L, Tang L M, Chen B C, Cardie C, Belongie S, Hariharan B, et al. Visual prompt tuning. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 709−727 [26] Tu C H, Mai Z D, Chao W L. Visual query tuning: Towards effective usage of intermediate representations for parameter and memory efficient transfer learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 7725−7735 [27] Das R, Dukler Y, Ravichandran A, Swaminathan A. Learning expressive prompting with residuals for vision Transformers. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 3366−3377 [28] Dong B W, Zhou P, Yan S C, Zuo W M. LPT: Long-tailed prompt tuning for image classification. In: Proceedings of the Eleventh International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023. 1−20 [29] Zhang Y H, Zhou K Y, Liu Z W. Neural prompt search. IEEE Transactions on Pattern Analysis and Machine Intelligence, DOI: 10.1109/TPAMI.2024.3435939 [30] Hu E J, Shen Y, Wallis P, Allen-Zhu Z, Li Y Z, Wang S A, et al. LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv: 2106.09685, 2021.Hu E J, Shen Y, Wallis P, Allen-Zhu Z, Li Y Z, Wang S A, et al. LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv: 2106.09685, 2021. [31] Houlsby N, Giurgiu A, Jastrzebski S, Morrone B, de Laroussilhe Q, Gesmundo A, et al. Parameter-efficient transfer learning for NLP. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 2790−2799 [32] Nilsback M E, Zisserman A. Automated flower classification over a large number of classes. In: Proceedings of the Sixth Indian Conference on Computer Vision, Graphics & Image Processing. Bhubaneswar, India: IEEE, 2008. 722−729 [33] Helber P, Bischke B, Dengel A, Borth D. EuroSAT: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2019, 12(7): 2217−2226 doi: 10.1109/JSTARS.2019.2918242 [34] Fahes M, Vu T H, Bursuc A, Pérez P, de Charette R. PØDA: Prompt-driven zero-shot domain adaptation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 18577−18587 [35] Liu L B, Chang J L, Yu B X B, Lin L, Tian Q, Chen C W. Prompt-matched semantic segmentation. arXiv preprint arXiv: 2208.10159, 2022. [36] Liu W H, Shen X, Pun C M, Cun X D. Explicit visual prompting for low-level structure segmentations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 19434−19445 [37] Bar A, Gandelsman Y, Darrell T, Globerson A, Efros A A. Visual prompting via image inpainting. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 1813 [38] Ma X H, Wang Y M, Liu H, Guo T Y, Wang Y H. When visual prompt tuning meets source-free domain adaptive semantic segmentation. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 293 [39] Zhao X, Ding W C, An Y Q, Du Y L, Yu T, Li M, et al. Fast segment anything. arXiv preprint arXiv: 2306.12156, 2023. [40] Zhang C N, Han D S, Qiao Y, Kim J U, Bae S H, Lee S, et al. Faster segment anything: Towards lightweight SAM for mobile applications. arXiv preprint arXiv: 2306.14289, 2023. [41] Xiong Y Y, Varadarajan B, Wu L M, Xiang X Y, Xiao F Y, Zhu C C, et al. EfficientSAM: Leveraged masked image pretraining for efficient segment anything. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024. 16111−16121 [42] Ke L, Ye M Q, Danelljan M, Liu Y F, Tai Y W, Tang C K, et al. Segment anything in high quality. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1303 [43] Xie Z Z, Guan B C, Jiang W H, Yi M Y, Ding Y, Lu H T, et al. PA-SAM: Prompt adapter SAM for high-quality image segmentation. In: Proceedings of the IEEE International Conference on Multimedia and Expo (ICME). Niagara Falls, Canada: IEEE, 2024. 1−6 [44] Wang X L, Zhang X S, Cao Y, Wang W, Shen C H, Huang T J. SegGPT: Segmenting everything in context. arXiv preprint arXiv: 2304.03284, 2023. [45] Ren T H, Liu S L, Zeng A L, Lin J, Li K C, Cao H, et al. Grounded SAM: Assembling open-world models for diverse visual tasks. arXiv preprint arXiv: 2401.14159, 2024. [46] Zou X Y, Yang J W, Zhang H, Li F, Li L J, Wang J F, et al. Segment everything everywhere all at once. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 868 [47] Gu X Y, Lin T Y, Kuo W C, Cui Y. Open-vocabulary object detection via vision and language knowledge distillation. arXiv preprint arXiv: 2104.13921, 2021.Gu X Y, Lin T Y, Kuo W C, Cui Y. Open-vocabulary object detection via vision and language knowledge distillation. arXiv preprint arXiv: 2104.13921, 2021. [48] Du Y, Wei F Y, Zhang Z H, Shi M J, Gao Y, Li G Q. Learning to prompt for open-vocabulary object detection with vision-language model. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 14064−14073 [49] Wu X S, Zhu F, Zhao R, Li H S. CORA: Adapting CLIP for open-vocabulary detection with region prompting and anchor pre-matching. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 7031−7040 [50] Ju C, Han T D, Zheng K H, Zhang Y, Xie W D. Prompting visual-language models for efficient video understanding. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 105−124 [51] Wang M M, Xing J Z, Liu Y. ActionCLIP: A new paradigm for video action recognition. arXiv preprint arXiv: 2109.08472, 2021. [52] Mokady R, Hertz A, Bermano A H. ClipCap: CLIP prefix for image captioning. arXiv preprint arXiv: 2111.09734, 2021. [53] Tewel Y, Shalev Y, Schwartz I, Wolf L. ZeroCap: Zero-shot image-to-text generation for visual-semantic arithmetic. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 17897−17907 [54] Su Y X, Lan T, Liu Y H, Liu F Y, Yogatama D, Wang Y, et al. Language models can see: Plugging visual controls in text generation. arXiv preprint arXiv: 2205.02655, 2022. [55] Wang N, Xie J H, Wu J H, Jia M B, Li L L. Controllable image captioning via prompting. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI Press, 2023. 2617−2625 [56] Yang J Y, Li Z, Zheng F, Leonardis A, Song J K. Prompting for multi-modal tracking. In: Proceedings of the 30th ACM International Conference on Multimedia. Lisbon, Portugal: Association for Computing Machinery, 2022. 3492−3500 [57] Zhu J W, Lai S M, Chen X, Wang D, Lu H C. Visual prompt multi-modal tracking. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 9516−9526 [58] He K J, Zhang C L, Xie S, Li Z X, Wang Z W. Target-aware tracking with long-term context attention. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI Press, 2023. 773−780 [59] Luo Y, Guo X Q, Feng H, Ao L. RGB-T tracking via multi-modal mutual prompt learning. arXiv preprint arXiv: 2308.16386, 2023. [60] Tsimpoukelli M, Menick J, Cabi S, Eslami S M A, Vinyals O, Hill F. Multimodal few-shot learning with frozen language models. arXiv preprint arXiv: 2106.13884, 2021.Tsimpoukelli M, Menick J, Cabi S, Eslami S M A, Vinyals O, Hill F. Multimodal few-shot learning with frozen language models. arXiv preprint arXiv: 2106.13884, 2021. [61] Yang Z Y, Gan Z, Wang J F, Hu X W, Lu Y M, Liu Z C, et al. An empirical study of GPT-3 for few-shot knowledge-based VQA. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI Press, 2022. 3081−3089Yang Z Y, Gan Z, Wang J F, Hu X W, Lu Y M, Liu Z C, et al. An empirical study of GPT-3 for few-shot knowledge-based VQA. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI Press, 2022. 3081−3089 [62] Jin W, Cheng Y, Shen Y L, Chen W Z, Ren X. A good prompt is worth millions of parameters: Low-resource prompt-based learning for vision-language models. In: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Dublin, Ireland: ACL, 2022. 2763−2775 [63] Wang A J, Zhou P, Shou M Z, Yan S C. Enhancing visual grounding in vision-language pre-training with position-guided text prompts. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(5): 3406−3421 doi: 10.1109/TPAMI.2023.3343736 [64] Wu W S, Liu T, Wang Y K, Xu K, Yin Q J, Hu Y. Dynamic multi-modal prompting for efficient visual grounding. In: Proceedings of the 6th Chinese Conference on Pattern Recognition and Computer Vision. Xiamen, China: Springer, 2023. 359−371 [65] Hegde D, Valanarasu J M J, Patel V M. CLIP goes 3D: Leveraging prompt tuning for language grounded 3D recognition. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). Paris, France: IEEE, 2023. 2020−2030 [66] Zhu X Y, Zhang R R, He B W, Guo Z Y, Zeng Z Y, Qin Z P, et al. PointCLIP V2: Prompting clip and GPT for powerful 3D open-world learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023. 2639−2650 [67] Bar-Tal O, Ofri-Amar D, Fridman R, Kasten Y, Dekel T. Text2LIVE: Text-driven layered image and video editing. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 707−723 [68] Krizhevsky A. Learning Multiple Layers of Features From Tiny Images, Technical Report TR-2009, University of Toronto, Canada, 2009. [69] Bossard L, Guillaumin M, van Gool L. Food-101: Mining discriminative components with random forests. In: Proceedings of the 13th European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 446−461 [70] Cimpoi M, Maji S, Kokkinos I, Mohamed S, Vedaldi A. Describing textures in the wild. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 3606−3613 [71] Netzer Y, Wang T, Coates A, Bissacco A, Wu B, Ng A Y. Reading digits in natural images with unsupervised feature learning. In: Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning. Granada, Spain: NIPS, 2011. Article No. 4 [72] Wah C, Branson S, Welinder P, Perona P, Belongie S. The Caltech-UCSD Birds-200-2011 Dataset, Technical Report CNS-TR-2010-001, California Institute of Technology, USA, 2010. [73] Khosla A, Jayadevaprakash N, Yao B P, Li F F. Novel dataset for fine-grained image categorization. In: Proceedings of the First Workshop on Fine-grained Visual Categorization (FGVC), IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Colorado Springs, USA: IEEE, 2011. [74] Li F F, Fergus R, Perona P. Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories. In: Proceedings of the Conference on Computer Vision and Pattern Recognition Workshop. Washington, USA: IEEE, 2004. 178 [75] Parkhi O M, Vedaldi A, Zisserman A, Jawahar C V. Cats and dogs. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE, 2012. 3498−3505 [76] Krause J, Stark M, Deng J, Li F F. 3D object representations for fine-grained categorization. In: Proceedings of the IEEE International Conference on Computer Vision Workshops. Sydney, Australia: IEEE, 2013. 554−561 [77] Maji S, Rahtu E, Kannala J, Blaschko M, Vedaldi A. Fine-grained visual classification of aircraft. arXiv preprint arXiv: 1306.5151, 2013. [78] Xiao J X, Hays J, Ehinger K A, Oliva A, Torralba A. SUN database: Large-scale scene recognition from abbey to zoo. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Francisco, USA: IEEE, 2010. 3485−3492 [79] Soomro K, Zamir A R, Shah M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv: 1212.0402, 2012. [80] Cheng B W, Misra I, Schwing A G, Kirillov A, Girdhar R. Masked-attention mask Transformer for universal image segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 1280−1289 [81] Jain J, Li J C, Chiu M, Hassani A, Orlov N, Shi H. OneFormer: One Transformer to rule universal image segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 2989−2998 [82] Zhou B L, Zhao H, Puig X, Fidler S, Barriuso A, Torralba A. Scene parsing through ADE20K dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 5122−5130 [83] Lin T Y, Maire M, Belongie S, Hays J, Perona P, Ramanan D, et al. Microsoft COCO: Common objects in context. In: Proceedings of the 13th European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 740−755 [84] Xiao Y, Yang M M, Li C L, Liu L, Tang J. Attribute-based progressive fusion network for RGBT tracking. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI Press, 2022. 2831−2838Xiao Y, Yang M M, Li C L, Liu L, Tang J. Attribute-based progressive fusion network for RGBT tracking. In: Proceedings of the 36th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI Press, 2022. 2831−2838 [85] Li C L, Xue W L, Jia Y Q, Qu Z C, Luo B, Tang J, et al. LasHeR: A large-scale high-diversity benchmark for RGBT tracking. IEEE Transactions on Image Processing, 2022, 31: 392−404 doi: 10.1109/TIP.2021.3130533 -

下载:

下载: