
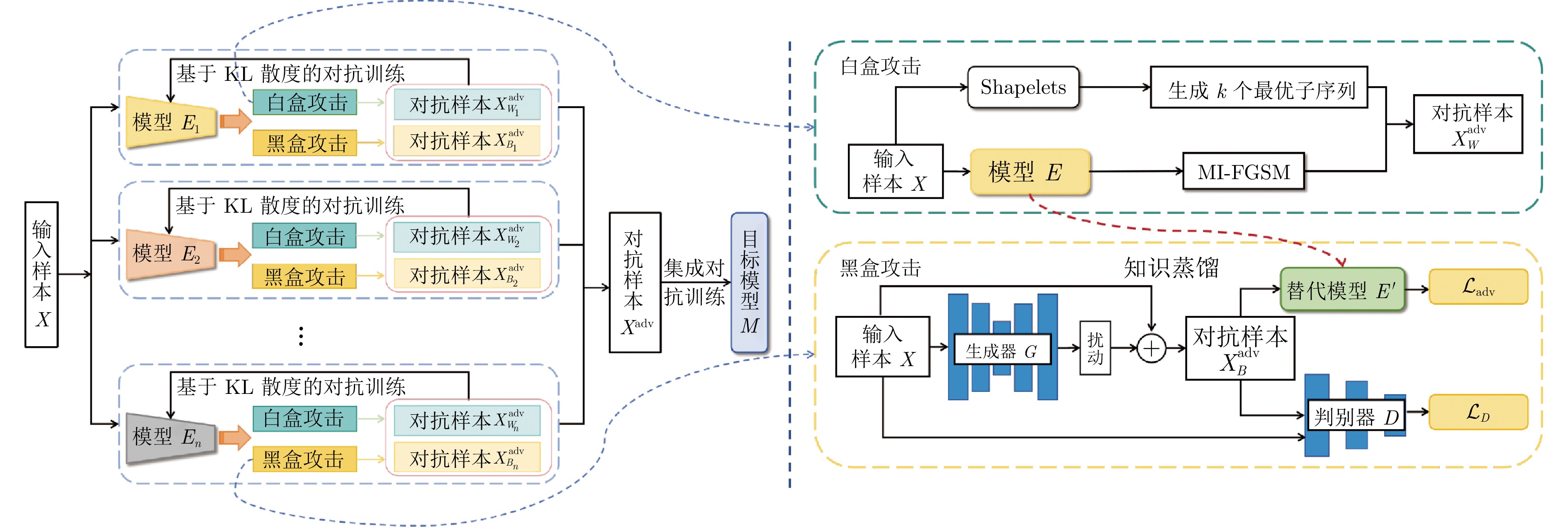

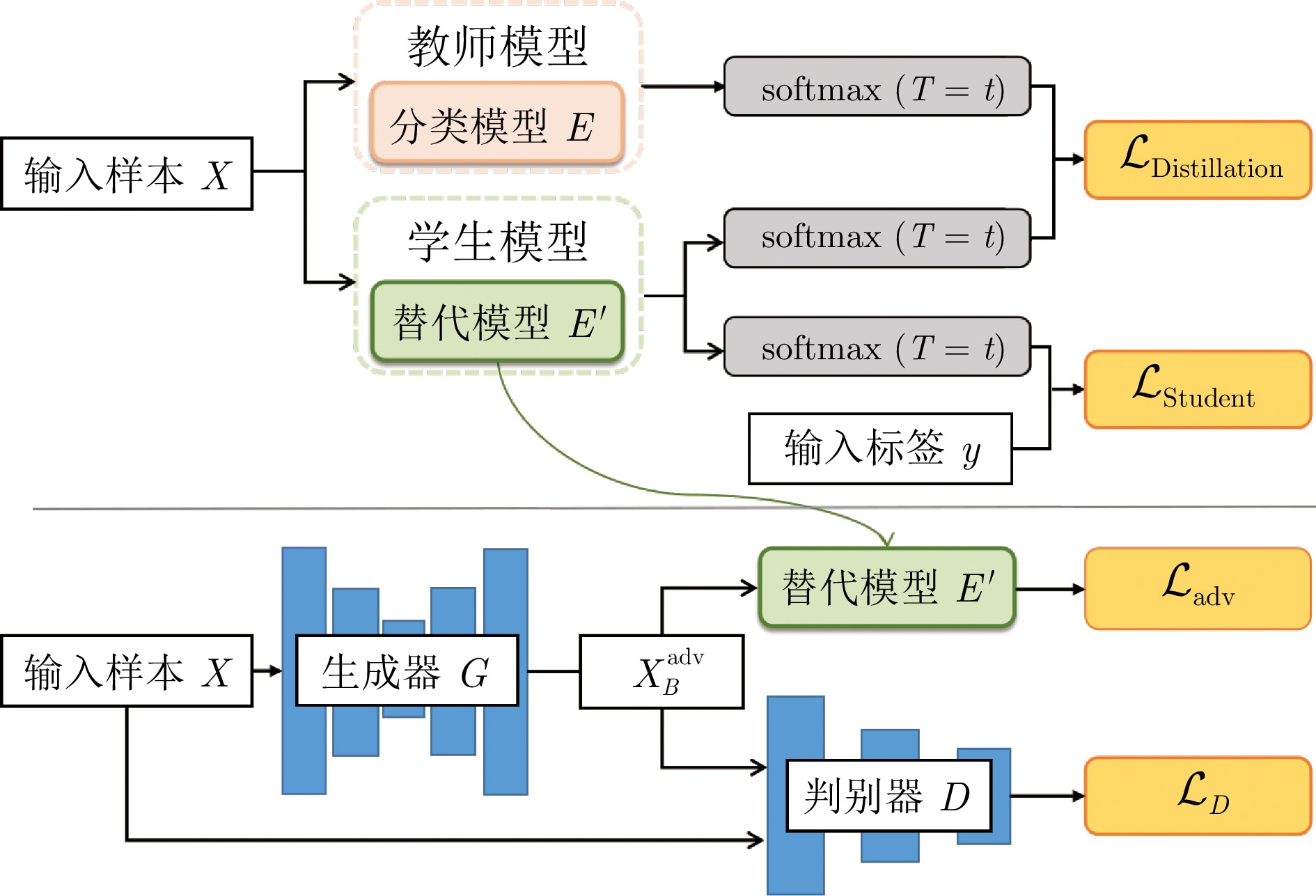

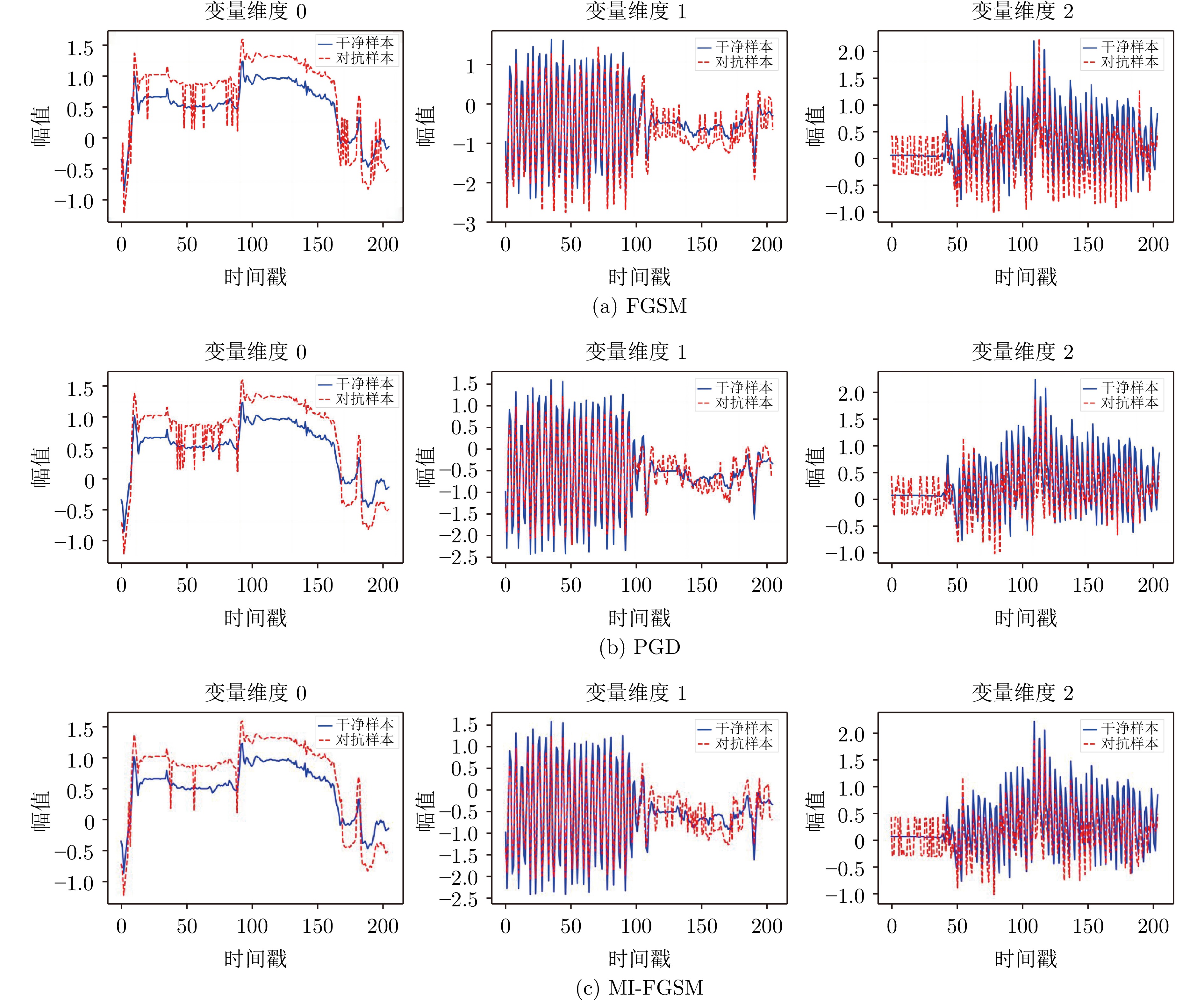

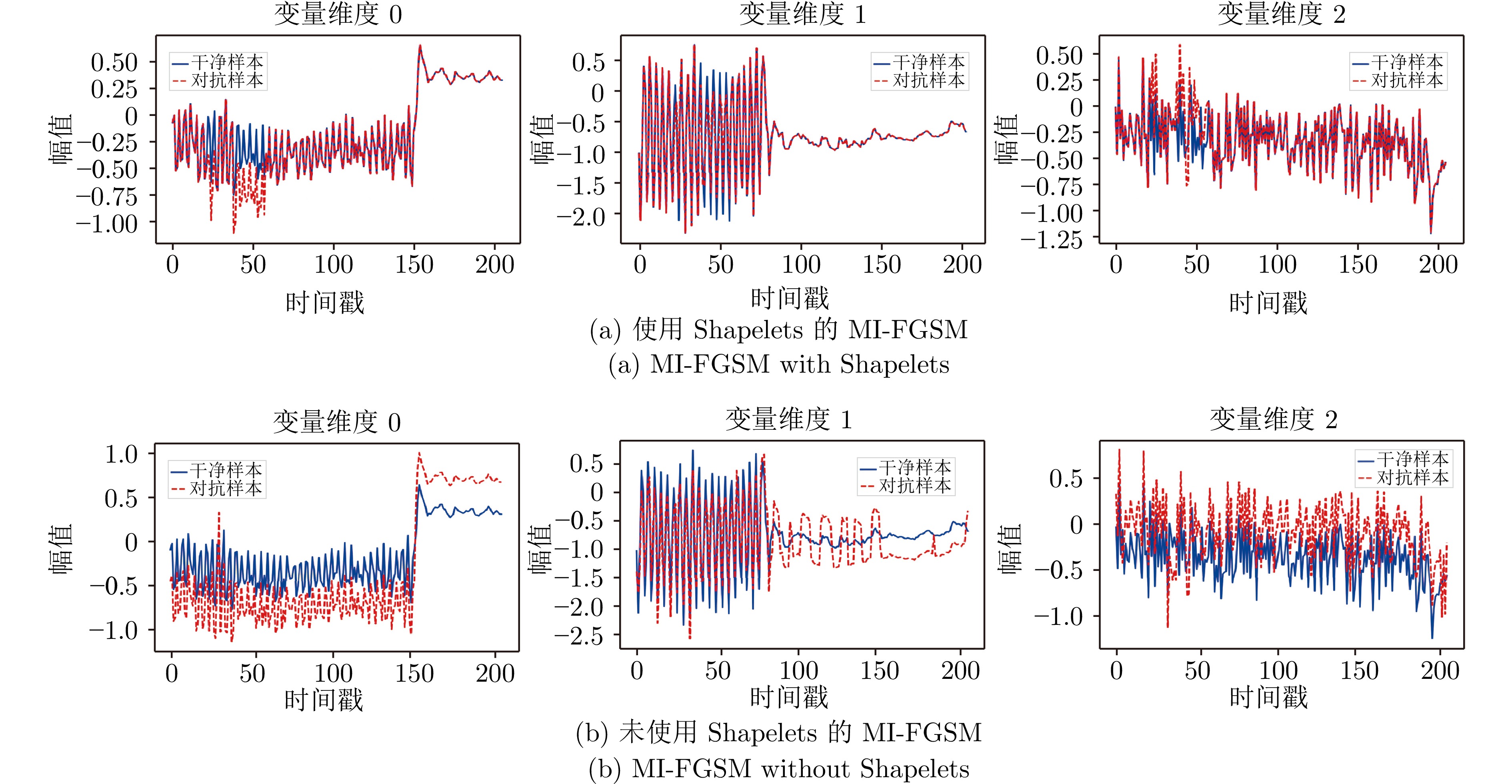

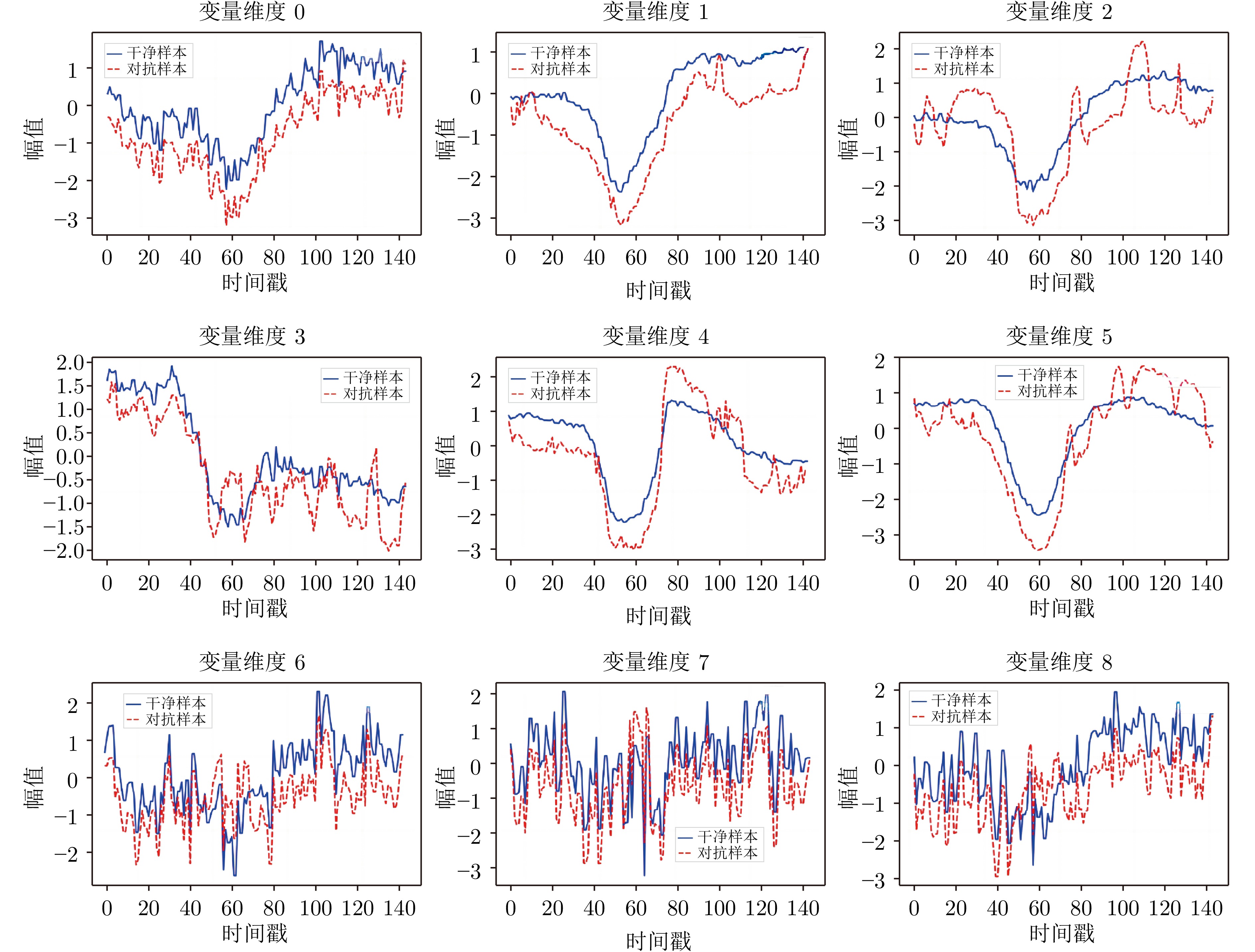

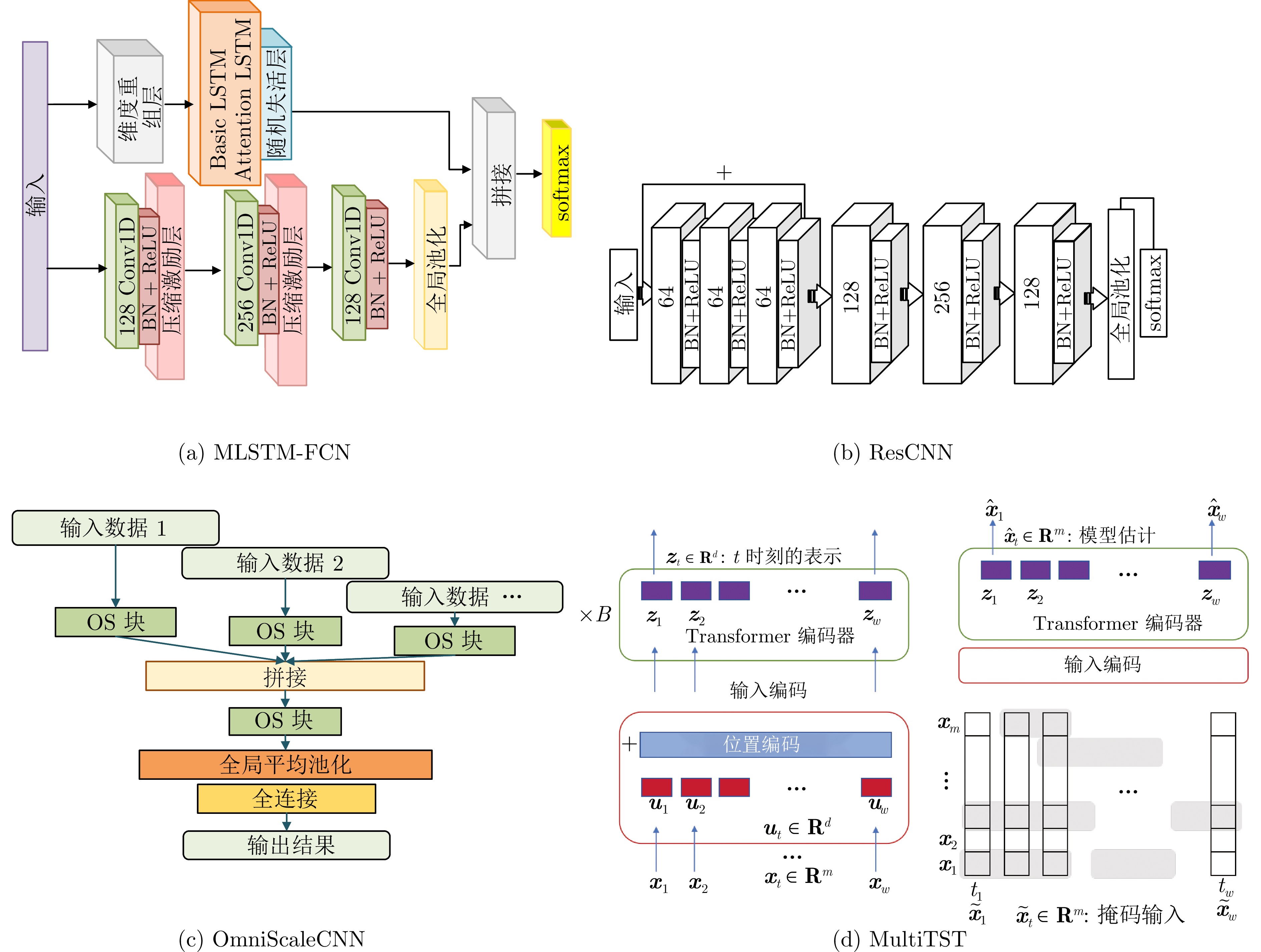

Abstract: Deep learning is one of the main approaches to time series classification (TSC). However, deep-learning-based TSC models are vulnerable to adversarial examples, which can sharply reduce their classification accuracy. This paper studies defenses against adversarial attacks on TSC models and proposes an ensemble adversarial training (AT) defense method. First, an ensemble adversarial training framework for TSC models is designed: adversarial examples are generated with multiple TSC models and attack methods and are then used to train the target model. Second, for generating adversarial examples, a Shapelet-based local perturbation algorithm is designed and combined with the momentum iterative fast gradient sign method (MI-FGSM) to achieve effective white-box attacks. In addition, knowledge distillation (KD) and a Wasserstein generative adversarial network (WGAN) are used to build a black-box adversarial attack on a surrogate model, which remains effective when the attacker has no knowledge of the target model. On this basis, a Kullback-Leibler (KL) divergence constraint is added to the adversarial training loss function to further improve model robustness. Finally, the effectiveness of the proposed method is validated on the UEA multivariate time series classification archive.
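The abstract combines a Shapelet-based local perturbation with MI-FGSM. A minimal sketch of that combination follows, under stated assumptions: a toy logistic-regression classifier stands in for the TSC network (so the input gradient has a closed form), the series is flattened to one vector, and a hypothetical binary `mask` marks the time steps covered by a matched Shapelet. How the paper discovers Shapelets is not reproduced here. The update itself is the standard MI-FGSM rule: accumulate the L1-normalised gradient with decay `mu`, step by `alpha = eps / steps` in the sign direction, and project back into the L-infinity ball of radius `eps`.

```python
import math
from typing import List


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def sign(v: float) -> float:
    return float((v > 0) - (v < 0))


def input_gradient(x: List[float], w: List[float], b: float, y: int) -> List[float]:
    """d/dx of binary cross-entropy for the toy model p = sigmoid(w . x + b)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def mi_fgsm_local(x: List[float], y: int, w: List[float], b: float, mask: List[int],
                  eps: float = 0.1, steps: int = 10, mu: float = 1.0) -> List[float]:
    """MI-FGSM whose update is zeroed outside `mask` (1 = time step may be perturbed)."""
    alpha = eps / steps                          # per-iteration step size
    x_adv = list(x)
    g = [0.0] * len(x)                           # accumulated (momentum) gradient
    for _ in range(steps):
        grad = input_gradient(x_adv, w, b, y)
        l1 = sum(abs(v) for v in grad) or 1.0    # L1 normalisation used by MI-FGSM
        g = [mu * gi + vi / l1 for gi, vi in zip(g, grad)]
        for i in range(len(x)):
            step = alpha * mask[i] * sign(g[i])  # no change where mask[i] == 0
            # project back into the L-infinity ball of radius eps around x
            x_adv[i] = min(max(x_adv[i] + step, x[i] - eps), x[i] + eps)
    return x_adv
```

With a real network, `input_gradient` would be replaced by automatic differentiation; the masking and projection steps are unchanged.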

Key words:

- Time series
- Adversarial example
- Adversarial training (AT)
- Model robustness


Table 1 Training parameter settings of the classification models

| Parameter | MultiTST | ResCNN | MLSTM-FCN | OmniScaleCNN |
|---|---|---|---|---|
| Optimizer | SGD | SGD | SGD | SGD |
| Learning rate | 0.01 | 0.01 | 0.001 | 0.001 |
| Learning-rate decay | 0.1 every 30 epochs | 0.1 every 30 epochs | None | None |
| Momentum | 0.9 | 0.9 | 0.9 | 0.9 |
| Weight decay | $2 \times 10^{-4}$ | $2 \times 10^{-4}$ | $2 \times 10^{-4}$ | $2 \times 10^{-4}$ |
| Batch size | 128 | 128 | 256 | 256 |
| Training epochs | 100 | 100 | 300 | 300 |
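Table 1 lists a step decay (learning rate multiplied by 0.1 every 30 epochs) for MultiTST and ResCNN and a constant learning rate for MLSTM-FCN and OmniScaleCNN. A minimal sketch of that schedule, assuming 0-indexed epochs with the decay applied at each 30-epoch boundary (the behaviour of PyTorch's `StepLR` with `step_size=30, gamma=0.1`); the `CONFIGS` dictionary and its key names are hypothetical encodings of the table.

```python
from typing import Optional

# Hypothetical encoding of Table 1 (key names are illustrative only).
CONFIGS = {
    "MultiTST":     {"lr": 0.01,  "decay": 0.1,  "batch": 128, "epochs": 100},
    "ResCNN":       {"lr": 0.01,  "decay": 0.1,  "batch": 128, "epochs": 100},
    "MLSTM-FCN":    {"lr": 0.001, "decay": None, "batch": 256, "epochs": 300},
    "OmniScaleCNN": {"lr": 0.001, "decay": None, "batch": 256, "epochs": 300},
}
SGD_MOMENTUM = 0.9   # shared by all four models
WEIGHT_DECAY = 2e-4  # shared by all four models


def step_lr(epoch: int, base_lr: float, gamma: Optional[float], step_size: int = 30) -> float:
    """Learning rate at a 0-indexed epoch: multiply by gamma every step_size epochs."""
    if gamma is None:  # "no decay" rows of Table 1
        return base_lr
    return base_lr * gamma ** (epoch // step_size)


# ResCNN: 0.01 for epochs 0-29, 0.001 for 30-59, 1e-4 for 60-89, 1e-5 for 90-99.
cfg = CONFIGS["ResCNN"]
schedule = [step_lr(e, cfg["lr"], cfg["decay"]) for e in range(cfg["epochs"])]
```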

Table 2 Classification accuracy of the models

| Sub-dataset | MultiTST | ResCNN | MLSTM-FCN | OmniScaleCNN |
|---|---|---|---|---|
| ArticularyWordRecognition | 0.980 | 0.973 | 0.983 | 0.983 |
| AtrialFibrillation | 0.333 | 0.200 | 0.333 | 0.267 |
| BasicMotions | 0.700 | 1.000 | 1.000 | 1.000 |
| CharacterTrajectories | 0.897 | 0.760 | 0.596 | 0.811 |
| Cricket | 0.750 | 0.986 | 0.986 | 1.000 |
| EigenWorms | 0.573 | 0.847 | 0.687 | – |
| Epilepsy | 0.804 | 0.971 | 0.848 | 0.783 |
| ERing | 0.904 | 0.848 | 0.919 | 0.889 |
| EthanolConcentration | 0.251 | 0.304 | 0.255 | – |
| FaceDetection | 0.550 | 0.527 | 0.540 | 0.518 |
| FingerMovements | 0.510 | 0.520 | 0.490 | 0.500 |
| HandMovementDirection | 0.635 | 0.297 | 0.405 | 0.189 |
| Handwriting | 0.279 | 0.224 | 0.492 | 0.626 |
| Heartbeat | 0.293 | 0.756 | 0.707 | 0.712 |
| InsectWingbeat | 0.588 | 0.100 | 0.100 | – |
| JapaneseVowels | 0.138 | 0.084 | 0.084 | 0.084 |
| Libras | 0.189 | 0.867 | 0.128 | 0.094 |
| LSST | 0.218 | 0.680 | 0.219 | 0.475 |
| MotorImagery | 0.460 | 0.530 | 0.510 | – |
| NATOPS | 0.656 | 0.928 | 0.728 | 0.639 |
| PEMS-SF | 0.168 | 0.723 | 0.127 | 0.127 |
| PenDigits | 0.121 | 0.986 | 0.196 | 0.106 |
| PhonemeSpectra | 0.023 | 0.308 | 0.051 | 0.056 |
| RacketSports | 0.651 | 0.842 | 0.572 | 0.770 |
| SelfRegulationSCP1 | 0.693 | 0.823 | 0.195 | 0.563 |
| SelfRegulationSCP2 | 0.528 | 0.494 | 0.506 | 0.500 |
| SpokenArabicDigits | 0.343 | 0.100 | 0.100 | 0.100 |
| StandWalkJump | 0.467 | 0.400 | 0.333 | 0.333 |
| UWaveGestureLibrary | 0.803 | 0.794 | 0.887 | 0.912 |
| DuckDuckGeese | 0.320 | 0.620 | – | – |

Table 3 Adversarial attack success rates of MI-FGSM compared with FGSM and PGD

| Sub-dataset | FGSM | PGD | MI-FGSM |
|---|---|---|---|
| ArticularyWordRecognition | $0.910 \pm 0.011$ | $0.973 \pm 0.015$ | $0.983 \pm 0.008$ |
| BasicMotions | $0.680 \pm 0.010$ | $0.820 \pm 0.019$ | $0.870 \pm 0.017$ |
| CharacterTrajectories | $0.596 \pm 0.063$ | $0.616 \pm 0.118$ | $0.622 \pm 0.046$ |
| Cricket | $0.810 \pm 0.073$ | $0.910 \pm 0.023$ | $0.950 \pm 0.024$ |
| EigenWorms | $0.710 \pm 0.023$ | $0.840 \pm 0.011$ | $0.790 \pm 0.054$ |
| Epilepsy | $0.783 \pm 0.065$ | $0.804 \pm 0.083$ | $0.848 \pm 0.054$ |
| ERing | $0.660 \pm 0.037$ | $0.670 \pm 0.101$ | $0.679 \pm 0.006$ |
| EthanolConcentration | $0.180 \pm 0.100$ | $0.250 \pm 0.064$ | $0.270 \pm 0.005$ |
| FaceDetection | $0.500 \pm 0.093$ | $0.540 \pm 0.106$ | $0.540 \pm 0.025$ |
| FingerMovements | $0.490 \pm 0.017$ | $0.500 \pm 0.003$ | $0.570 \pm 0.094$ |
| HandMovementDirection | $0.297 \pm 0.099$ | $0.405 \pm 0.082$ | $0.589 \pm 0.112$ |
| Handwriting | $0.179 \pm 0.107$ | $0.224 \pm 0.073$ | $0.312 \pm 0.088$ |
| Heartbeat | $0.293 \pm 0.091$ | $0.326 \pm 0.117$ | $0.342 \pm 0.061$ |
| Libras | $0.697 \pm 0.037$ | $0.867 \pm 0.060$ | $0.910 \pm 0.115$ |
| LSST | $0.720 \pm 0.024$ | $0.860 \pm 0.019$ | $0.820 \pm 0.084$ |
| MotorImagery | $0.110 \pm 0.048$ | $0.140 \pm 0.016$ | $0.140 \pm 0.030$ |
| NATOPS | $0.630 \pm 0.118$ | $0.910 \pm 0.023$ | $0.952 \pm 0.073$ |
| PEMS-SF | $0.668 \pm 0.089$ | $0.723 \pm 0.128$ | $0.757 \pm 0.117$ |
| PenDigits | $0.686 \pm 0.012$ | $0.696 \pm 0.059$ | $0.776 \pm 0.020$ |
| PhonemeSpectra | $0.123 \pm 0.046$ | $0.208 \pm 0.001$ | $0.256 \pm 0.039$ |
| RacketSports | $0.551 \pm 0.070$ | $0.642 \pm 0.021$ | $0.672 \pm 0.051$ |
| SelfRegulationSCP1 | $0.790 \pm 0.063$ | $0.832 \pm 0.008$ | $0.890 \pm 0.033$ |
| SelfRegulationSCP2 | $0.814 \pm 0.001$ | $0.861 \pm 0.071$ | $0.840 \pm 0.067$ |
| StandWalkJump | $0.330 \pm 0.111$ | $0.500 \pm 0.063$ | $0.500 \pm 0.096$ |
| UWaveGestureLibrary | $0.862 \pm 0.086$ | $0.871 \pm 0.082$ | $0.918 \pm 0.010$ |
| DuckDuckGeese | $0.420 \pm 0.062$ | $0.490 \pm 0.002$ | $0.631 \pm 0.043$ |
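The success rates in Table 3 are reported as mean ± standard deviation over repeated runs. The text here does not define either quantity precisely, so the sketch below makes two labelled assumptions: a sample counts as a successful attack when its post-attack prediction differs from the true label, optionally restricted to samples that were classified correctly before the attack (`only_correct`); and the spread is the sample standard deviation. Both functions are hypothetical helpers, not the paper's evaluation code.

```python
import statistics
from typing import Sequence


def attack_success_rate(y_true: Sequence[int], y_clean: Sequence[int],
                        y_adv: Sequence[int], only_correct: bool = True) -> float:
    """Share of attacked samples that end up misclassified.

    With only_correct=True, samples the model already got wrong before the
    attack are excluded (an assumed convention, not confirmed by the paper).
    """
    rows = list(zip(y_true, y_clean, y_adv))
    if only_correct:
        rows = [r for r in rows if r[0] == r[1]]
    if not rows:
        return 0.0
    return sum(1 for t, _, a in rows if a != t) / len(rows)


def mean_pm_std(rates: Sequence[float]) -> str:
    """Summarise repeated runs as 'mean ± std' using the sample standard deviation."""
    return f"{statistics.mean(rates):.3f} ± {statistics.stdev(rates):.3f}"
```

The choice of `only_correct` matters: on a weak model the unconditional rate is inflated by samples that were misclassified before any perturbation was applied.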

Table 4 Experimental results of the Shapelet-based local perturbation attack

| Sub-dataset | MI-FGSM: success rate | MI-FGSM: perturbation (MAE) | Local perturbation: success rate | Local perturbation: perturbation (MAE) |
|---|---|---|---|---|
| ArticularyWordRecognition | $0.983 \pm 0.007$ | $0.425 \pm 0.022$ | $0.923 \pm 0.025$ | $0.088 \pm 0.007$ |
| BasicMotions | $0.870 \pm 0.052$ | $2.638 \pm 0.151$ | $0.790 \pm 0.024$ | $0.447 \pm 0.019$ |
| CharacterTrajectories | $0.622 \pm 0.025$ | $0.040 \pm 0.003$ | $0.572 \pm 0.015$ | $0.011 \pm 0.001$ |
| Cricket | $0.950 \pm 0.034$ | $0.947 \pm 0.020$ | $0.895 \pm 0.040$ | $0.247 \pm 0.016$ |
| EigenWorms | $0.790 \pm 0.053$ | $18.292 \pm 1.090$ | $0.720 \pm 0.056$ | $1.941 \pm 0.101$ |
| Epilepsy | $0.848 \pm 0.030$ | $0.359 \pm 0.020$ | $0.808 \pm 0.022$ | $0.098 \pm 0.006$ |
| ERing | $0.679 \pm 0.021$ | $0.490 \pm 0.035$ | $0.600 \pm 0.019$ | $0.220 \pm 0.013$ |
| EthanolConcentration | $0.270 \pm 0.012$ | $0.530 \pm 0.038$ | $0.210 \pm 0.011$ | $0.073 \pm 0.003$ |
| FaceDetection | $0.540 \pm 0.027$ | $2.411 \pm 0.126$ | $0.490 \pm 0.020$ | $0.824 \pm 0.050$ |
| FingerMovements | $0.570 \pm 0.043$ | $15.281 \pm 1.136$ | $0.520 \pm 0.027$ | $4.372 \pm 0.283$ |
| HandMovementDirection | $0.589 \pm 0.041$ | $9.308 \pm 0.703$ | $0.518 \pm 0.035$ | $4.721 \pm 0.308$ |
| Handwriting | $0.312 \pm 0.020$ | $1.019 \pm 0.070$ | $0.297 \pm 0.024$ | $0.241 \pm 0.012$ |
| Heartbeat | $0.342 \pm 0.027$ | $1.695 \pm 0.097$ | $0.314 \pm 0.025$ | $0.755 \pm 0.050$ |
| Libras | $0.910 \pm 0.029$ | $0.050 \pm 0.004$ | $0.780 \pm 0.014$ | $0.021 \pm 0.002$ |
| LSST | $0.820 \pm 0.044$ | $6.831 \pm 0.547$ | $0.650 \pm 0.032$ | $1.928 \pm 0.154$ |
| MotorImagery | $0.140 \pm 0.009$ | $25.570 \pm 1.946$ | $0.080 \pm 0.005$ | $7.880 \pm 0.596$ |
| NATOPS | $0.952 \pm 0.057$ | $0.321 \pm 0.021$ | $0.910 \pm 0.025$ | $0.754 \pm 0.050$ |
| PEMS-SF | $0.757 \pm 0.031$ | $0.050 \pm 0.004$ | $0.742 \pm 0.019$ | $0.025 \pm 0.002$ |
| PenDigits | $0.776 \pm 0.029$ | $4.940 \pm 0.303$ | $0.658 \pm 0.036$ | $2.132 \pm 0.135$ |
| PhonemeSpectra | $0.256 \pm 0.015$ | $8.019 \pm 0.623$ | $0.256 \pm 0.020$ | $3.894 \pm 0.215$ |
| RacketSports | $0.672 \pm 0.054$ | $3.294 \pm 0.208$ | $0.647 \pm 0.025$ | $1.944 \pm 0.131$ |
| SelfRegulationSCP1 | $0.890 \pm 0.062$ | $7.573 \pm 0.506$ | $0.740 \pm 0.056$ | $3.491 \pm 0.216$ |
| SelfRegulationSCP2 | $0.840 \pm 0.049$ | $4.885 \pm 0.293$ | $0.790 \pm 0.032$ | $1.994 \pm 0.160$ |
| StandWalkJump | $0.500 \pm 0.029$ | $0.579 \pm 0.041$ | $0.500 \pm 0.022$ | $0.384 \pm 0.031$ |
| UWaveGestureLibrary | $0.918 \pm 0.030$ | $0.383 \pm 0.018$ | $0.722 \pm 0.028$ | $0.175 \pm 0.009$ |
| DuckDuckGeese | $0.631 \pm 0.050$ | $14.750 \pm 1.089$ | $0.597 \pm 0.046$ | $4.579 \pm 0.302$ |
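Table 4 measures perturbation size as the mean absolute error (MAE) between the clean and adversarial series. A minimal sketch of that metric for a multivariate series stored as a list of channels, followed by a toy illustration (hypothetical numbers, not taken from the table) of why restricting the perturbation to a short window, such as a Shapelet match, lowers the MAE at the same per-point budget: only the perturbed fraction of points contributes.

```python
from typing import List

Series = List[List[float]]  # channels x time steps


def perturbation_mae(x: Series, x_adv: Series) -> float:
    """Mean absolute difference over every channel and time step."""
    if len(x) != len(x_adv) or any(len(a) != len(b) for a, b in zip(x, x_adv)):
        raise ValueError("x and x_adv must have the same shape")
    diffs = [abs(a - b) for ch, ch_adv in zip(x, x_adv) for a, b in zip(ch, ch_adv)]
    if not diffs:
        raise ValueError("empty series")
    return sum(diffs) / len(diffs)


# Toy illustration: 2 channels x 10 steps, per-point budget 0.1.
clean = [[0.0] * 10 for _ in range(2)]
global_adv = [[0.1] * 10 for _ in range(2)]                   # every step perturbed
local_adv = [[0.1 if 3 <= t < 6 else 0.0 for t in range(10)]  # only a 3-step window
             for _ in range(2)]
print(perturbation_mae(clean, global_adv))  # about 0.1
print(perturbation_mae(clean, local_adv))   # about 0.03
```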

Table 5 Experimental results of the black-box adversarial attack on the surrogate model

| Sub-dataset | KD: original model accuracy | KD: surrogate model accuracy | Black-box attack: success rate | Black-box attack: perturbation size |
|---|---|---|---|---|
| ArticularyWordRecognition | $0.973 \pm 0.014$ | $0.966 \pm 0.016$ | $0.923 \pm 0.025$ | $0.352 \pm 0.026$ |
| BasicMotions | $1.000 \pm 0.000$ | $1.000 \pm 0.000$ | $0.740 \pm 0.059$ | $1.722 \pm 0.114$ |
| CharacterTrajectories | $0.760 \pm 0.036$ | $0.720 \pm 0.027$ | $0.544 \pm 0.029$ | $0.075 \pm 0.003$ |
| Cricket | $0.986 \pm 0.011$ | $0.937 \pm 0.018$ | $0.825 \pm 0.033$ | $1.474 \pm 0.112$ |
| EigenWorms | $0.847 \pm 0.038$ | $0.810 \pm 0.042$ | $0.690 \pm 0.048$ | $20.120 \pm 1.201$ |
| Epilepsy | $0.971 \pm 0.011$ | $0.359 \pm 0.012$ | $0.790 \pm 0.037$ | $0.419 \pm 0.021$ |
| ERing | $0.848 \pm 0.033$ | $0.794 \pm 0.031$ | $0.600 \pm 0.048$ | $0.575 \pm 0.021$ |
| FaceDetection | $0.527 \pm 0.028$ | $0.518 \pm 0.020$ | $0.500 \pm 0.038$ | $2.411 \pm 0.171$ |
| FingerMovements | $0.520 \pm 0.028$ | $0.507 \pm 0.035$ | $0.670 \pm 0.032$ | $15.281 \pm 1.176$ |
| Heartbeat | $0.756 \pm 0.033$ | $0.749 \pm 0.049$ | $0.411 \pm 0.025$ | $3.117 \pm 0.147$ |
| Libras | $0.867 \pm 0.051$ | $0.822 \pm 0.058$ | $0.740 \pm 0.042$ | $0.054 \pm 0.003$ |
| LSST | $0.680 \pm 0.041$ | $0.660 \pm 0.031$ | $0.630 \pm 0.046$ | $7.447 \pm 0.437$ |
| MotorImagery | $0.530 \pm 0.019$ | $0.440 \pm 0.033$ | $0.120 \pm 0.008$ | $27.430 \pm 1.302$ |
| NATOPS | $0.928 \pm 0.039$ | $0.733 \pm 0.041$ | $0.850 \pm 0.061$ | $2.120 \pm 0.093$ |
| PEMS-SF | $0.723 \pm 0.023$ | $0.711 \pm 0.028$ | $0.714 \pm 0.041$ | $0.072 \pm 0.002$ |
| PenDigits | $0.986 \pm 0.004$ | $0.981 \pm 0.004$ | $0.410 \pm 0.024$ | $5.131 \pm 0.381$ |
| PhonemeSpectra | $0.308 \pm 0.023$ | $0.308 \pm 0.021$ | $0.211 \pm 0.020$ | $7.914 \pm 0.513$ |
| RacketSports | $0.842 \pm 0.041$ | $0.790 \pm 0.057$ | $0.600 \pm 0.048$ | $3.721 \pm 0.191$ |
| SelfRegulationSCP1 | $0.823 \pm 0.045$ | $0.819 \pm 0.057$ | $0.670 \pm 0.037$ | $8.780 \pm 0.512$ |
| SelfRegulationSCP2 | $0.494 \pm 0.037$ | $0.317 \pm 0.018$ | $0.660 \pm 0.021$ | $5.130 \pm 0.391$ |
| StandWalkJump | $0.400 \pm 0.033$ | $0.320 \pm 0.029$ | $0.500 \pm 0.027$ | $0.584 \pm 0.023$ |
| UWaveGestureLibrary | $0.794 \pm 0.039$ | $0.788 \pm 0.034$ | $0.625 \pm 0.041$ | $0.693 \pm 0.045$ |
| DuckDuckGeese | $0.620 \pm 0.041$ | $0.570 \pm 0.048$ | $0.490 \pm 0.035$ | $12.750 \pm 0.821$ |
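Table 5 reports how closely the distilled surrogate matches the original model before the black-box attack is run on it. The paper's exact distillation objective is not given here; the sketch below assumes the common Hinton-style form, in which the student (surrogate) matches the teacher's temperature-softened class probabilities via KL divergence (scaled by `T * T`) and is also trained on the hard label. The temperature `T = 4.0` and weight `alpha = 0.7` are hypothetical defaults.

```python
import math
from typing import List


def softmax(logits: List[float], T: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_div(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, T: float = 4.0, alpha: float = 0.7) -> float:
    """Soft-target KL (teacher || student) at temperature T plus hard-label cross-entropy."""
    soft = kl_div(softmax(teacher_logits, T), softmax(student_logits, T)) * T * T
    hard = -math.log(softmax(student_logits)[label] + 1e-12)
    return alpha * soft + (1.0 - alpha) * hard
```

The `T * T` factor keeps the soft-target gradients on a comparable scale as `T` changes, which is why it appears in most KD implementations.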

Table 6 Defense results of ensembling different attacks under the C&W attack

| Sub-dataset | Original ResCNN | White-box AT | Black-box AT | White-box + black-box AT |
|---|---|---|---|---|
| ArticularyWordRecognition | 0.427 | 0.426 | 0.517 | 0.683 |
| AtrialFibrillation | 0.000 | 0.200 | 0.200 | 0.222 |
| BasicMotions | 0.250 | 0.550 | 0.573 | 0.625 |
| CharacterTrajectories | 0.060 | 0.417 | 0.448 | 0.537 |
| Cricket | 0.264 | 0.528 | 0.598 | 0.778 |
| DuckDuckGeese | 0.210 | 0.320 | 0.352 | 0.375 |
| EigenWorms | 0.111 | 0.222 | 0.222 | 0.256 |
| Epilepsy | 0.029 | 0.406 | 0.500 | 0.565 |
| ERing | 0.159 | 0.559 | 0.600 | 0.674 |
| EthanolConcentration | 0.002 | 0.100 | 0.150 | 0.200 |
| FaceDetection | 0.500 | 0.523 | 0.523 | 0.523 |
| FingerMovements | 0.470 | 0.490 | 0.490 | 0.490 |
| HandMovementDirection | 0.203 | 0.216 | 0.216 | 0.216 |
| Handwriting | 0.034 | 0.095 | 0.095 | 0.149 |
| Heartbeat | 0.210 | 0.332 | 0.486 | 0.567 |
| InsectWingbeat | 0.100 | 0.100 | 0.100 | 0.100 |
| JapaneseVowels | 0.073 | 0.352 | 0.483 | 0.576 |
| Libras | 0.083 | 0.217 | 0.413 | 0.667 |
| LSST | 0.003 | 0.050 | 0.050 | 0.050 |
| MotorImagery | 0.111 | 0.244 | 0.244 | 0.256 |
| NATOPS | 0.050 | 0.083 | 0.100 | 0.150 |
| PEMS-SF | 0.050 | 0.100 | 0.100 | 0.100 |
| PenDigits | 0.100 | 0.300 | 0.300 | 0.400 |
| PhonemeSpectra | 0.003 | 0.020 | 0.020 | 0.050 |
| RacketSports | 0.083 | 0.200 | 0.200 | 0.300 |
| SelfRegulationSCP1 | 0.111 | 0.200 | 0.200 | 0.222 |
| SelfRegulationSCP2 | 0.050 | 0.100 | 0.100 | 0.100 |
| SpokenArabicDigits | 0.020 | 0.030 | 0.030 | 0.050 |
| StandWalkJump | 0.050 | 0.100 | 0.100 | 0.100 |
| UWaveGestureLibrary | 0.083 | 0.200 | 0.200 | 0.300 |
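Table 6 compares adversarial training on white-box examples, on black-box examples, and on both. One simple way to realise the combined setting, in the spirit of ensemble adversarial training (Tramèr et al.), is to draw the attack source for each training example at random from a pool. The sketch below assumes each attack is a callable mapping a (series, label) pair to an adversarial series; `white_box_stub` and `black_box_stub` are hypothetical placeholders for the paper's MI-FGSM-based and KD/WGAN-based generators, and keeping the full clean batch alongside one adversarial copy per example is an assumed design choice.

```python
import random
from typing import Callable, List, Sequence, Tuple

Series = List[float]
Example = Tuple[Series, int]
Attack = Callable[[Series, int], Series]  # (clean series, true label) -> adversarial series


def build_ensemble_batch(batch: Sequence[Example], attacks: Sequence[Attack],
                         rng: random.Random) -> List[Example]:
    """Return the clean batch plus one adversarial copy per example.

    Each copy comes from an attack drawn uniformly from `attacks`, so over many
    batches the model sees perturbations from every source in the pool.
    """
    adversarial = []
    for x, y in batch:
        attack = rng.choice(attacks)
        adversarial.append((attack(x, y), y))  # adversarial copy keeps the true label
    return list(batch) + adversarial


# Hypothetical stand-ins for the white-box and black-box sources in Table 6.
def white_box_stub(x: Series, y: int) -> Series:
    return [v + 0.1 for v in x]


def black_box_stub(x: Series, y: int) -> Series:
    return [v - 0.1 for v in x]


clean_batch = [([0.0, 1.0], 0), ([2.0, 3.0], 1)]
mixed = build_ensemble_batch(clean_batch, [white_box_stub, black_box_stub], random.Random(0))
```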

Table 7 Defense results of ensembling different numbers of classification models under the C&W attack

| Sub-dataset | Single model | Two-model ensemble | Three-model ensemble | Ensemble defense | Ensemble defense + KL divergence | LSTM-FWED |
|---|---|---|---|---|---|---|
| ArticularyWordRecognition | 0.683 | 0.837 | 0.913 | 0.931 | 0.977 | 0.931 |
| AtrialFibrillation | 0.222 | 0.333 | 0.333 | 0.333 | 0.333 | 0.333 |
| BasicMotions | 0.625 | 0.715 | 0.753 | 0.796 | 0.815 | 0.875 |
| CharacterTrajectories | 0.537 | 0.567 | 0.747 | 0.751 | 0.751 | 0.543 |
| Cricket | 0.778 | 0.811 | 0.861 | 0.880 | 0.880 | 0.628 |
| DuckDuckGeese | 0.375 | 0.350 | 0.410 | 0.540 | 0.560 | 0.480 |
| EigenWorms | 0.256 | 0.256 | 0.433 | 0.472 | 0.498 | 0.378 |
| Epilepsy | 0.565 | 0.657 | 0.696 | 0.746 | 0.746 | 0.622 |
| ERing | 0.674 | 0.696 | 0.763 | 0.815 | 0.825 | 0.714 |
| EthanolConcentration | 0.200 | 0.180 | 0.200 | 0.300 | 0.300 | 0.200 |
| FaceDetection | 0.523 | 0.523 | 0.523 | 0.523 | 0.523 | 0.545 |
| FingerMovements | 0.490 | 0.490 | 0.490 | 0.510 | 0.510 | 0.520 |
| HandMovementDirection | 0.216 | 0.216 | 0.216 | 0.267 | 0.311 | 0.247 |
| Handwriting | 0.149 | 0.155 | 0.171 | 0.171 | 0.171 | 0.095 |
| Heartbeat | 0.567 | 0.558 | 0.722 | 0.730 | 0.730 | 0.756 |
| InsectWingbeat | 0.100 | 0.100 | 0.100 | 0.100 | 0.100 | 0.100 |
| JapaneseVowels | 0.576 | 0.759 | 0.844 | 0.900 | 0.900 | 0.844 |
| Libras | 0.667 | 0.598 | 0.733 | 0.746 | 0.746 | 0.568 |
| LSST | 0.050 | 0.050 | 0.050 | 0.169 | 0.169 | 0.350 |
| MotorImagery | 0.256 | 0.244 | 0.300 | 0.311 | 0.311 | 0.433 |
| NATOPS | 0.517 | 0.517 | 0.722 | 0.783 | 0.783 | 0.560 |
| PEMS-SF | 0.301 | 0.202 | 0.579 | 0.588 | 0.611 | 0.473 |
| PenDigits | 0.590 | 0.699 | 0.865 | 0.913 | 0.930 | 0.753 |
| PhonemeSpectra | 0.059 | 0.059 | 0.059 | 0.044 | 0.044 | 0.059 |
| RacketSports | 0.737 | 0.724 | 0.757 | 0.803 | 0.803 | 0.837 |
| SelfRegulationSCP1 | 0.357 | 0.416 | 0.498 | 0.536 | 0.536 | 0.618 |
| SelfRegulationSCP2 | 0.311 | 0.299 | 0.311 | 0.539 | 0.562 | 0.493 |
| SpokenArabicDigits | 0.100 | 0.100 | 0.100 | 0.100 | 0.100 | 0.100 |
| StandWalkJump | 0.400 | 0.375 | 0.533 | 0.53 | 0.533 | 0.533 |
| UWaveGestureLibrary | 0.612 | 0.712 | 0.806 | 0.838 | 0.855 | 0.622 |
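The "Ensemble defense + KL divergence" column in Table 7 corresponds to adding a KL divergence term to the adversarial training loss. The exact form used in the paper is not stated in this section; the sketch below assumes the TRADES form (Zhang et al., 2019): cross-entropy on the clean prediction plus `beta` times KL(p(x) || p(x_adv)), which penalises any shift in the predicted distribution caused by the perturbation. The weight `beta = 1.0` is a hypothetical default, and per-sample logits stand in for a network's outputs.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Softmax shifted by the max logit for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_regularized_loss(clean_logits: List[float], adv_logits: List[float],
                        label: int, beta: float = 1.0, eps: float = 1e-12) -> float:
    """TRADES-style loss: CE(p(x), y) + beta * KL(p(x) || p(x_adv))."""
    p_clean = softmax(clean_logits)
    p_adv = softmax(adv_logits)
    ce = -math.log(max(p_clean[label], eps))
    # eps guards against log(0) when a probability underflows
    kl = sum(p * math.log(p / max(q, eps)) for p, q in zip(p_clean, p_adv) if p > 0)
    return ce + beta * kl
```

When the adversarial logits equal the clean ones the KL term vanishes, so `beta` only trades clean accuracy against robustness for inputs whose prediction the perturbation actually moves.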

Table A1 Sub-datasets of the UEA multivariate time series classification archive

| Sub-dataset | Training samples | Test samples | Dimensions | Series length | Classes |
|---|---|---|---|---|---|
| ArticularyWordRecognition | 275 | 300 | 9 | 144 | 25 |
| AtrialFibrillation | 15 | 15 | 2 | 640 | 3 |
| BasicMotions | 40 | 40 | 6 | 100 | 4 |
| CharacterTrajectories | 1422 | 1436 | 3 | 182 | 20 |
| Cricket | 108 | 72 | 6 | 1197 | 12 |
| DuckDuckGeese | 50 | 50 | 1345 | 270 | 5 |
| EigenWorms | 128 | 131 | 6 | 17984 | 5 |
| Epilepsy | 137 | 138 | 3 | 206 | 4 |
| EthanolConcentration | 261 | 263 | 3 | 1751 | 4 |
| ERing | 30 | 270 | 4 | 65 | 6 |
| FaceDetection | 5890 | 3524 | 144 | 62 | 2 |
| FingerMovements | 316 | 100 | 28 | 50 | 2 |
| HandMovementDirection | 160 | 74 | 10 | 400 | 4 |
| Handwriting | 150 | 850 | 3 | 152 | 26 |
| Heartbeat | 204 | 205 | 61 | 405 | 2 |
| InsectWingbeat | 30000 | 20000 | 200 | 30 | 10 |
| JapaneseVowels | 270 | 370 | 12 | 29 | 9 |
| Libras | 180 | 180 | 2 | 45 | 15 |
| LSST | 2459 | 2466 | 6 | 36 | 14 |
| MotorImagery | 278 | 100 | 64 | 3000 | 2 |
| NATOPS | 180 | 180 | 24 | 51 | 6 |
| PenDigits | 7494 | 3498 | 2 | 8 | 10 |
| PEMS-SF | 267 | 173 | 963 | 144 | 7 |
| PhonemeSpectra | 3315 | 3353 | 11 | 217 | 39 |
| RacketSports | 151 | 152 | 6 | 30 | 4 |
| SelfRegulationSCP1 | 268 | 293 | 6 | 896 | 2 |
| SelfRegulationSCP2 | 200 | 180 | 7 | 1152 | 2 |
| SpokenArabicDigits | 6599 | 2199 | 13 | 93 | 10 |
| StandWalkJump | 12 | 15 | 4 | 2500 | 3 |
| UWaveGestureLibrary | 120 | 320 | 3 | 315 | 8 |

