Virtual Tube Visual Obstacle Avoidance for UAV Based on Deep Reinforcement Learning

-

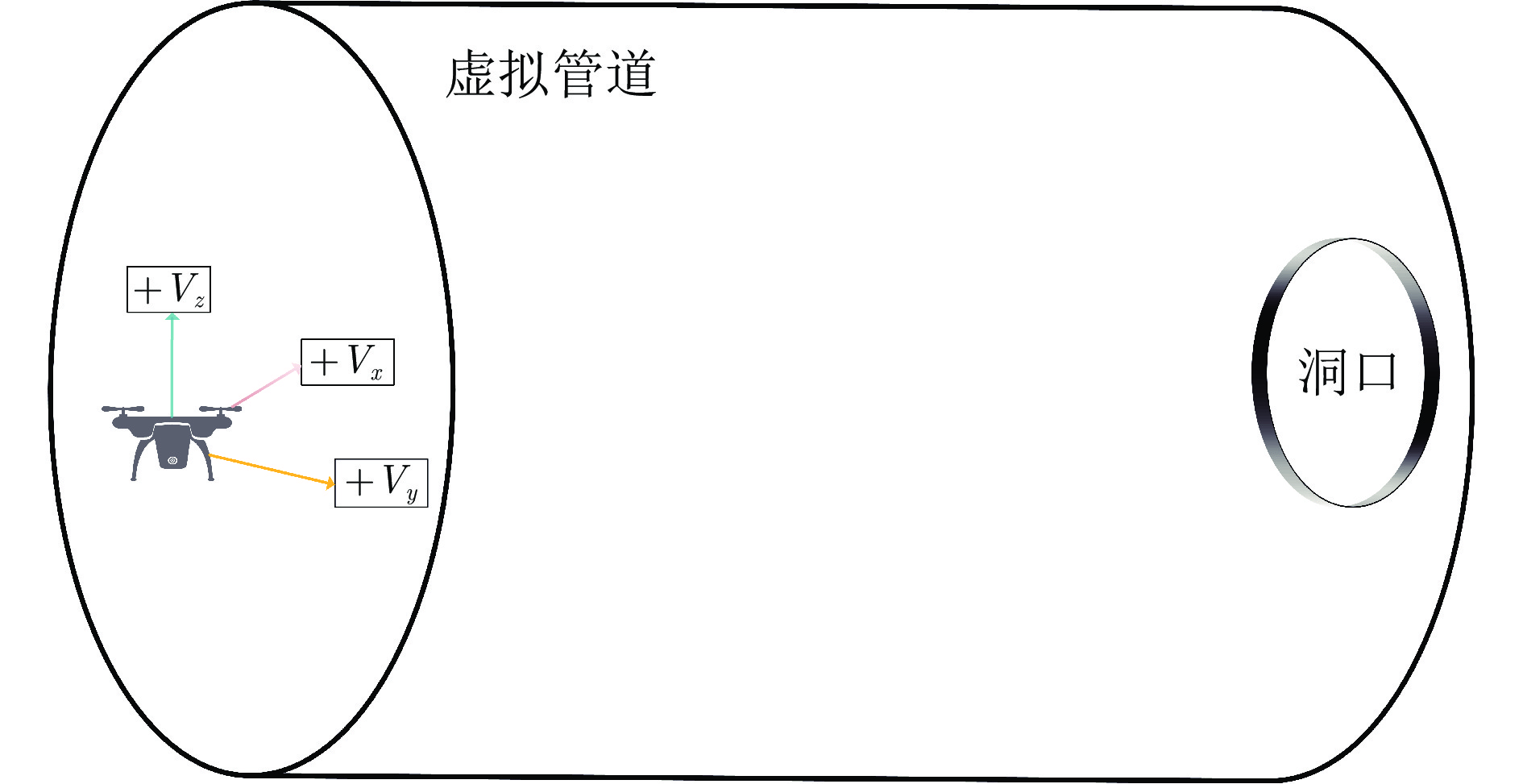

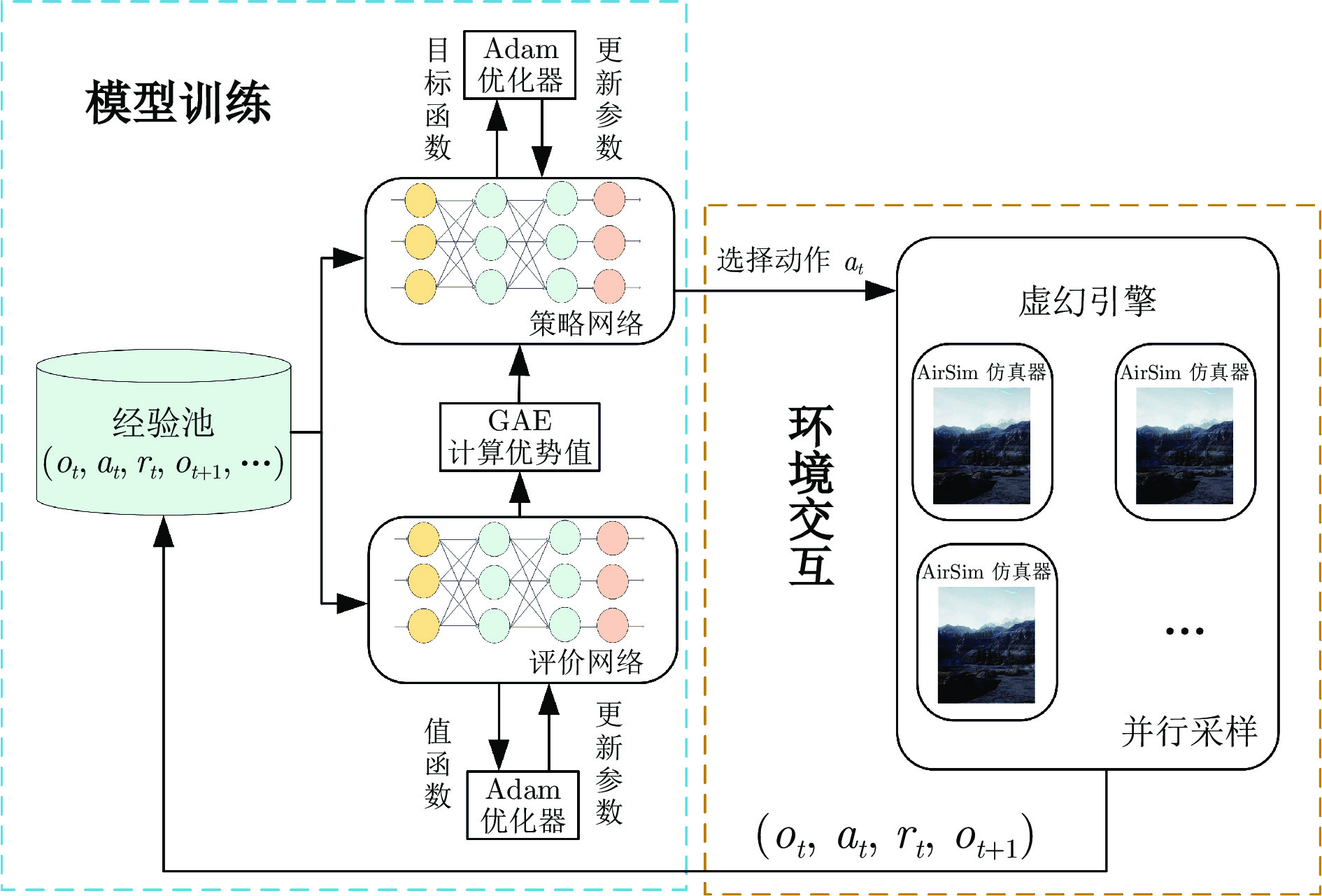

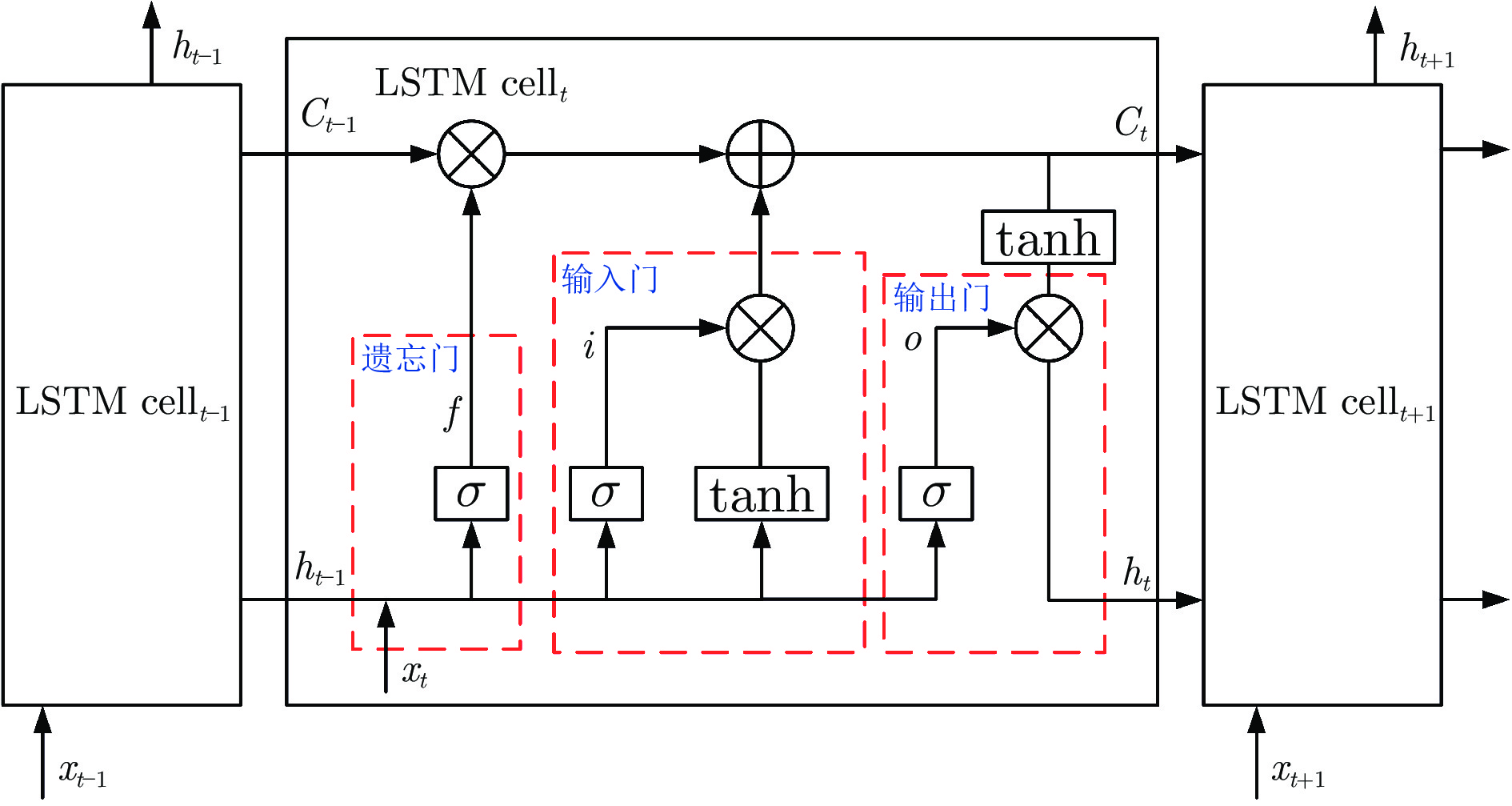

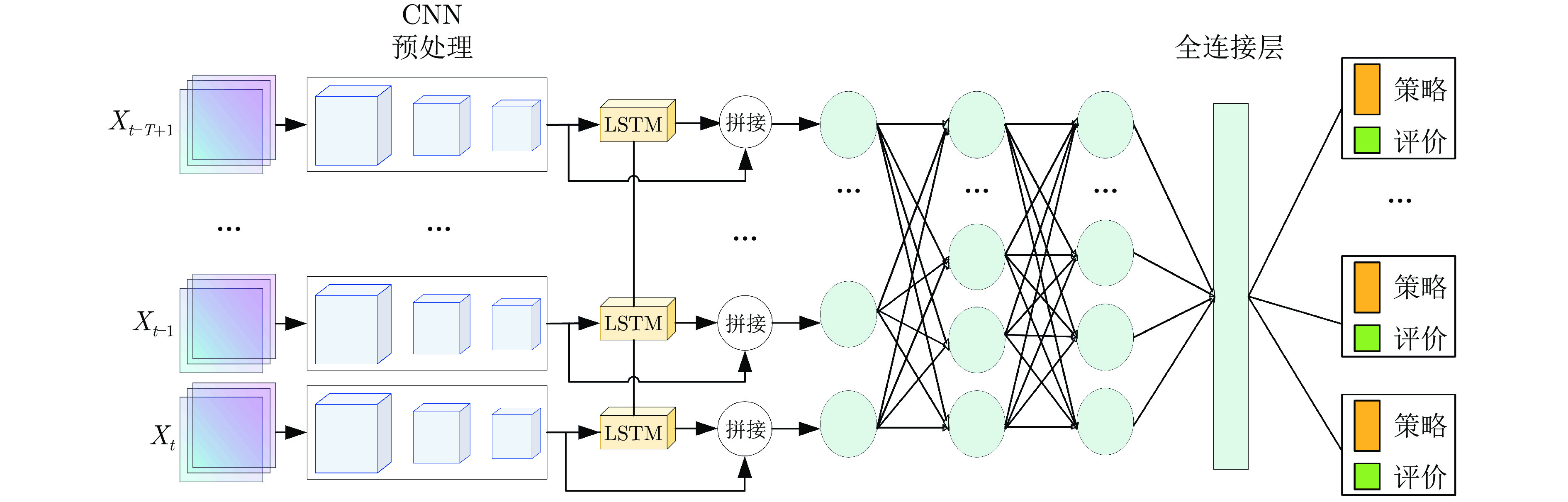

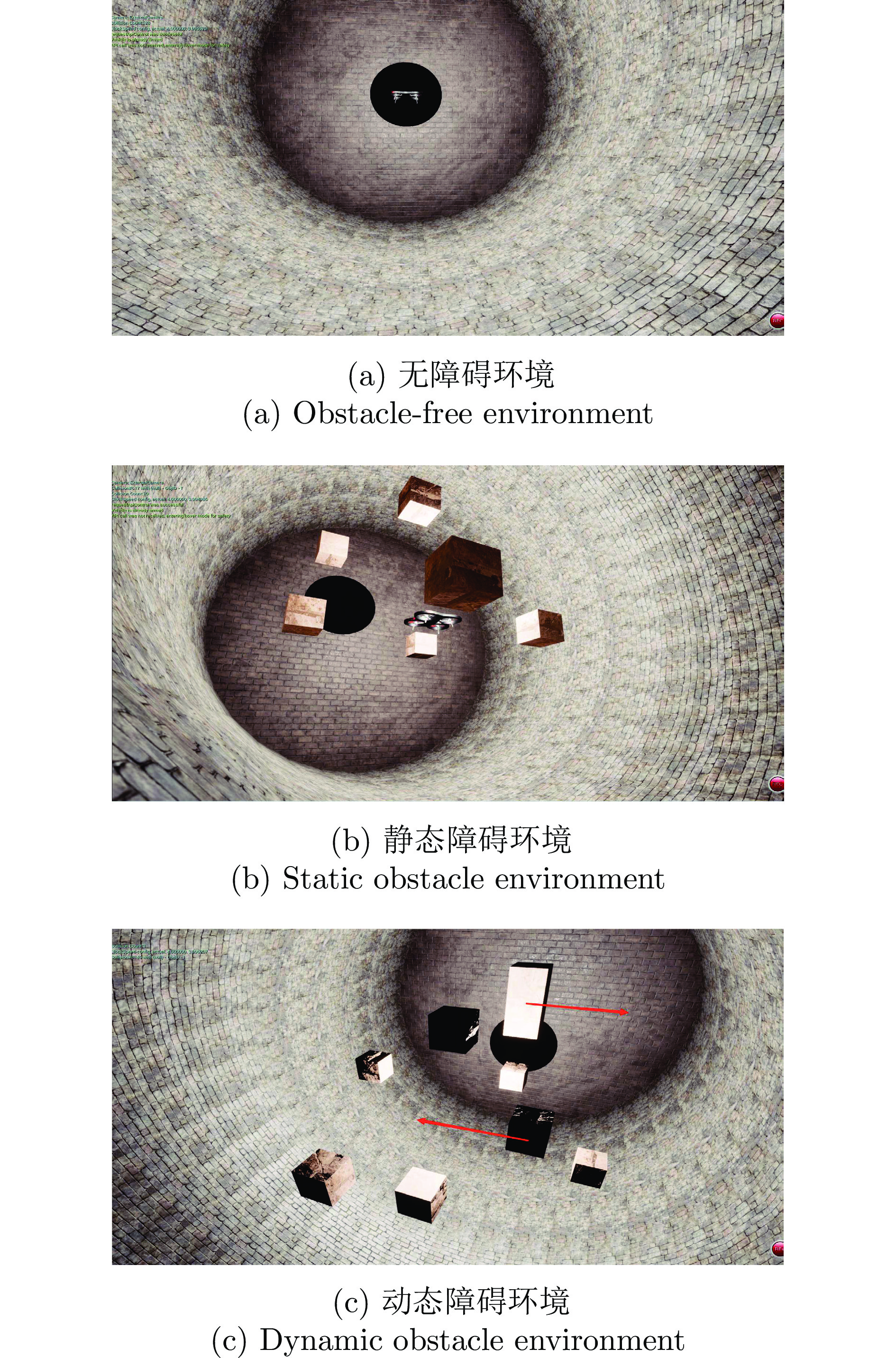

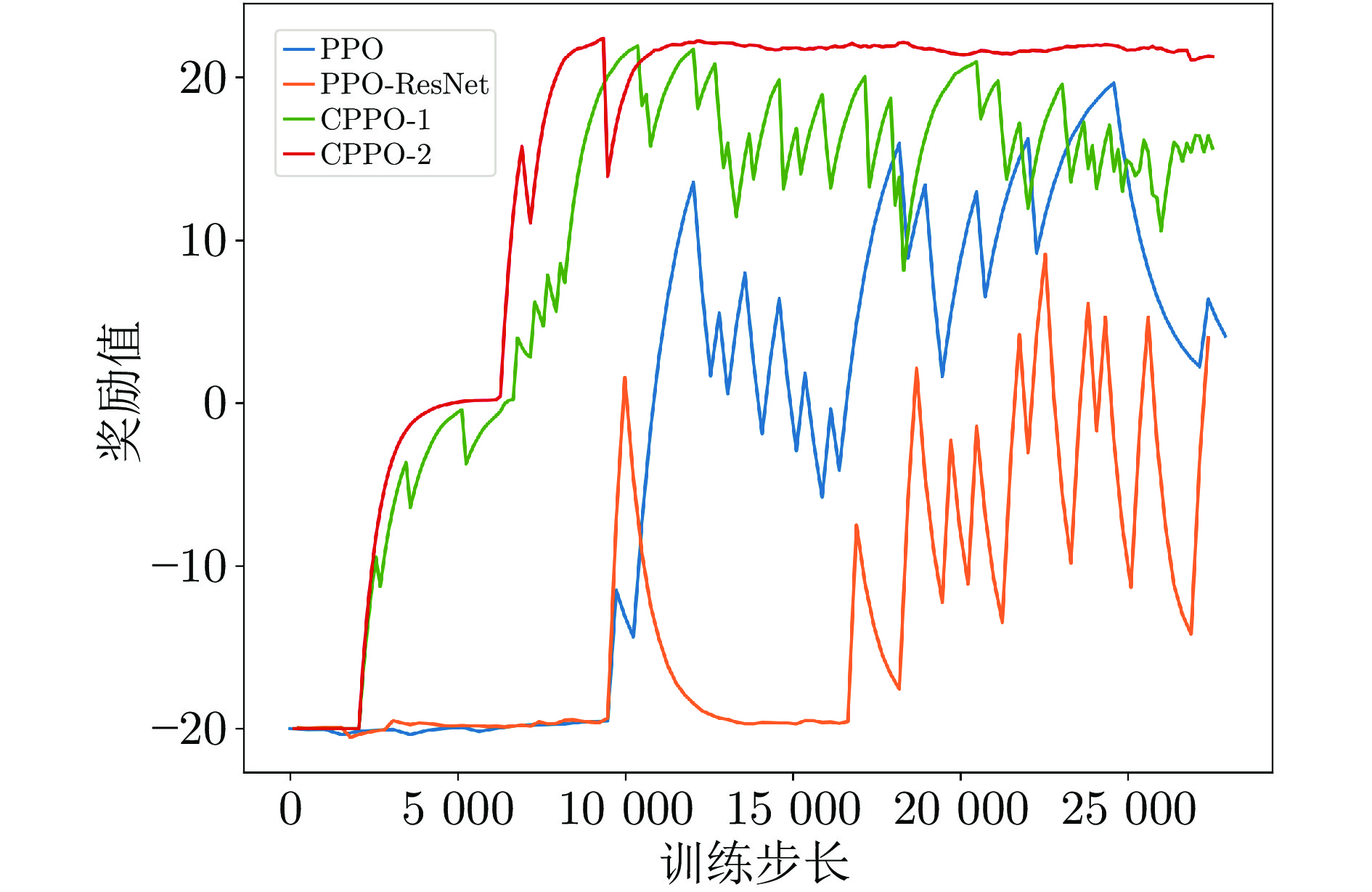

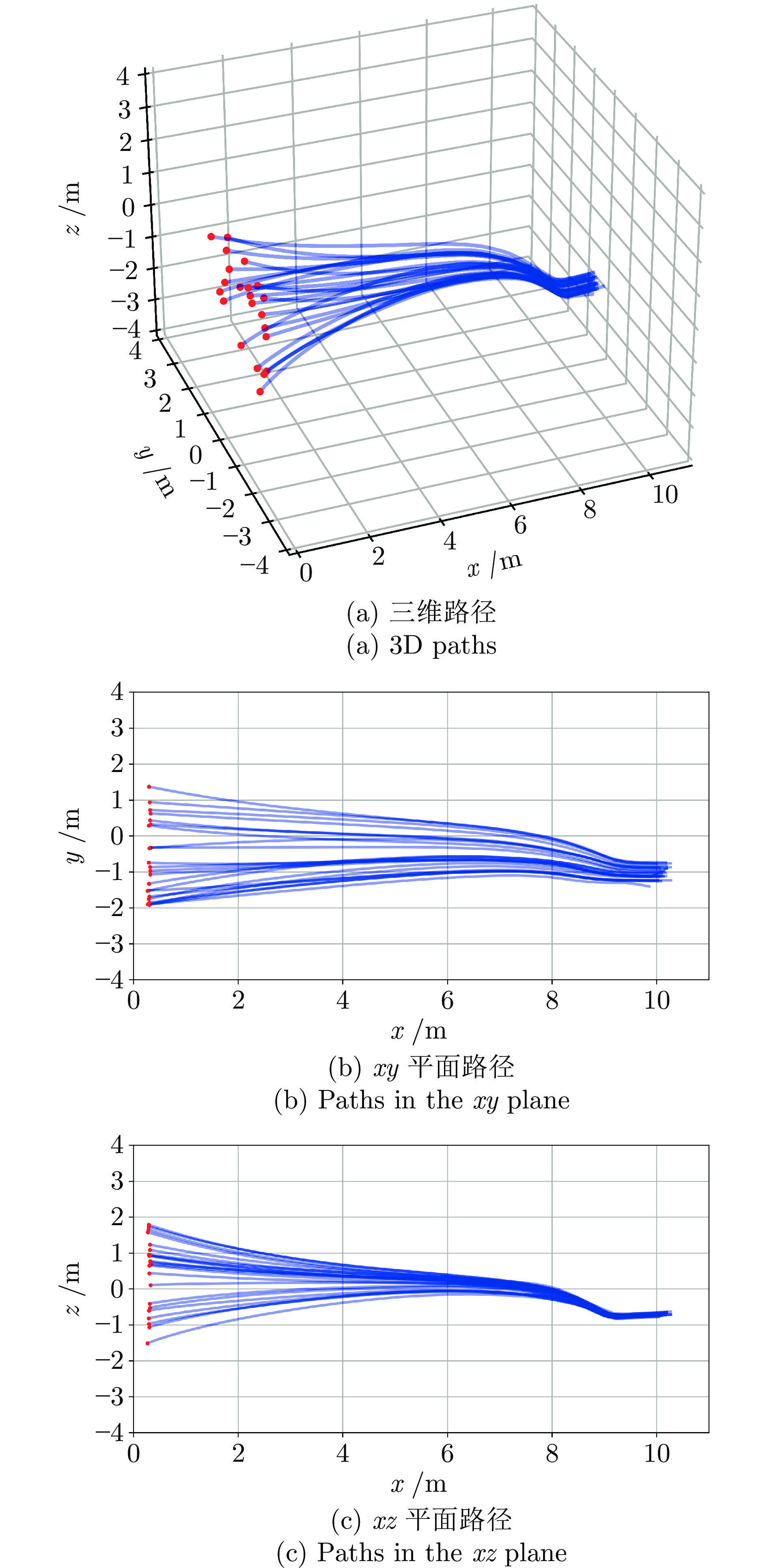

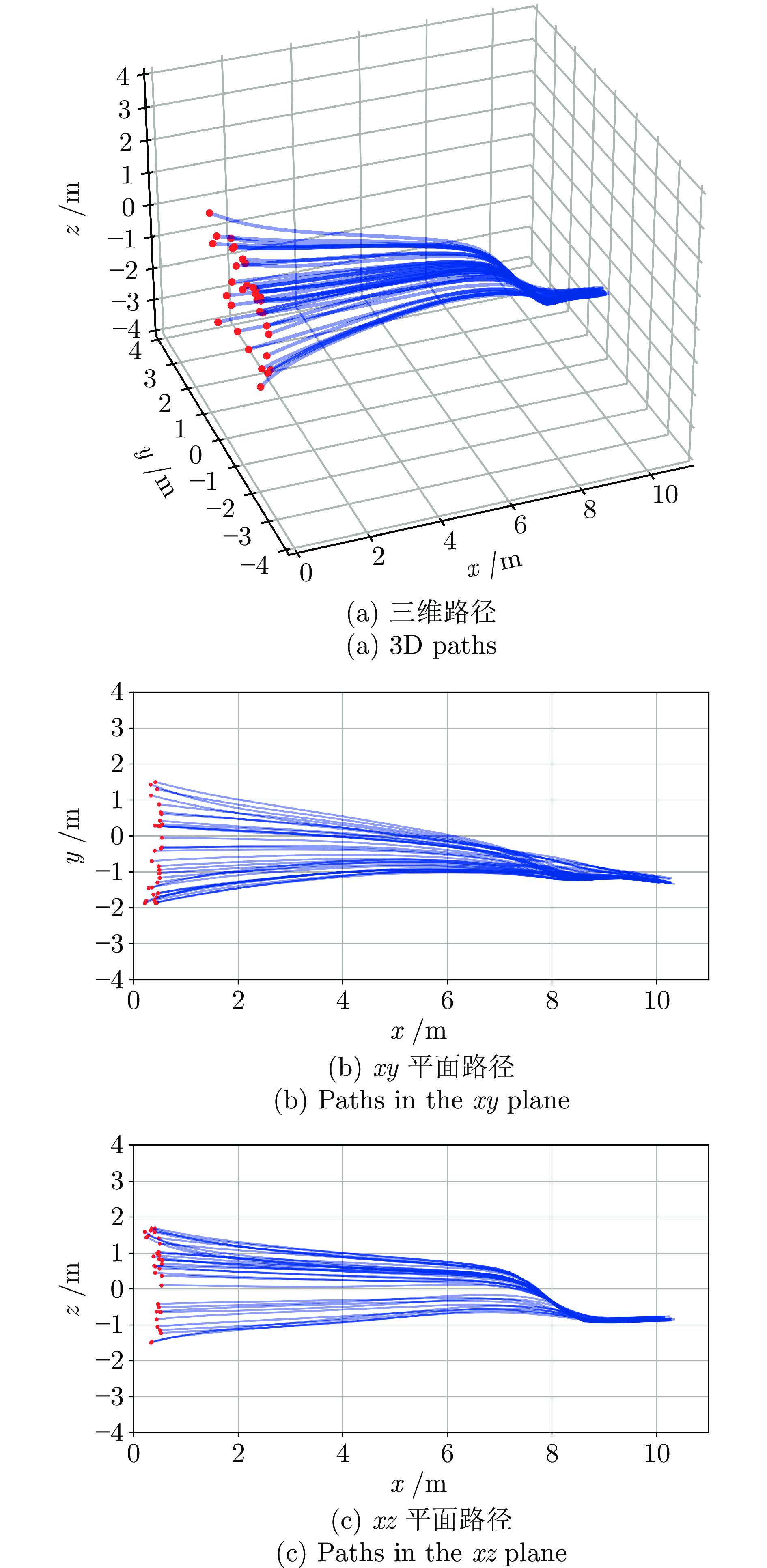

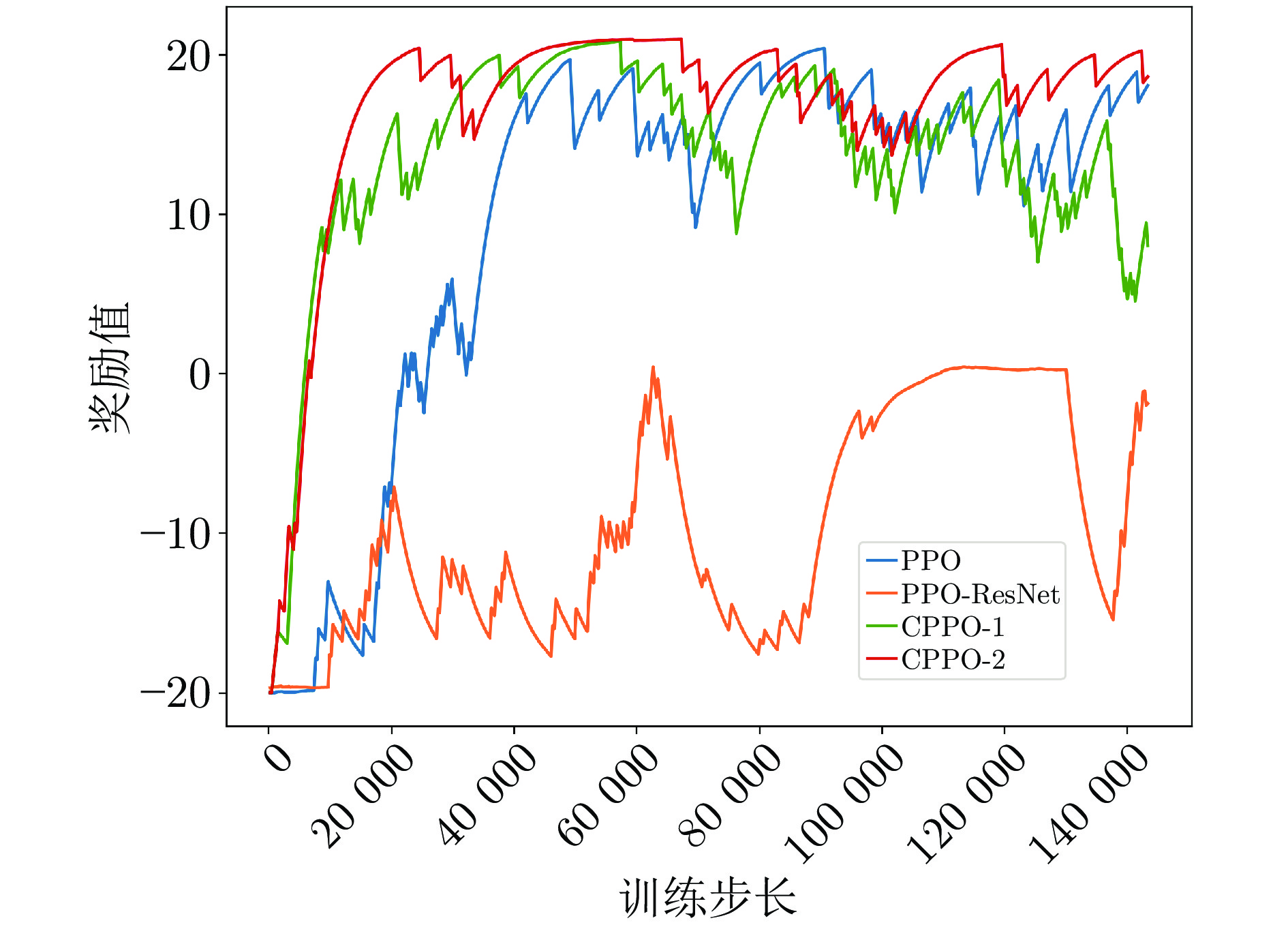

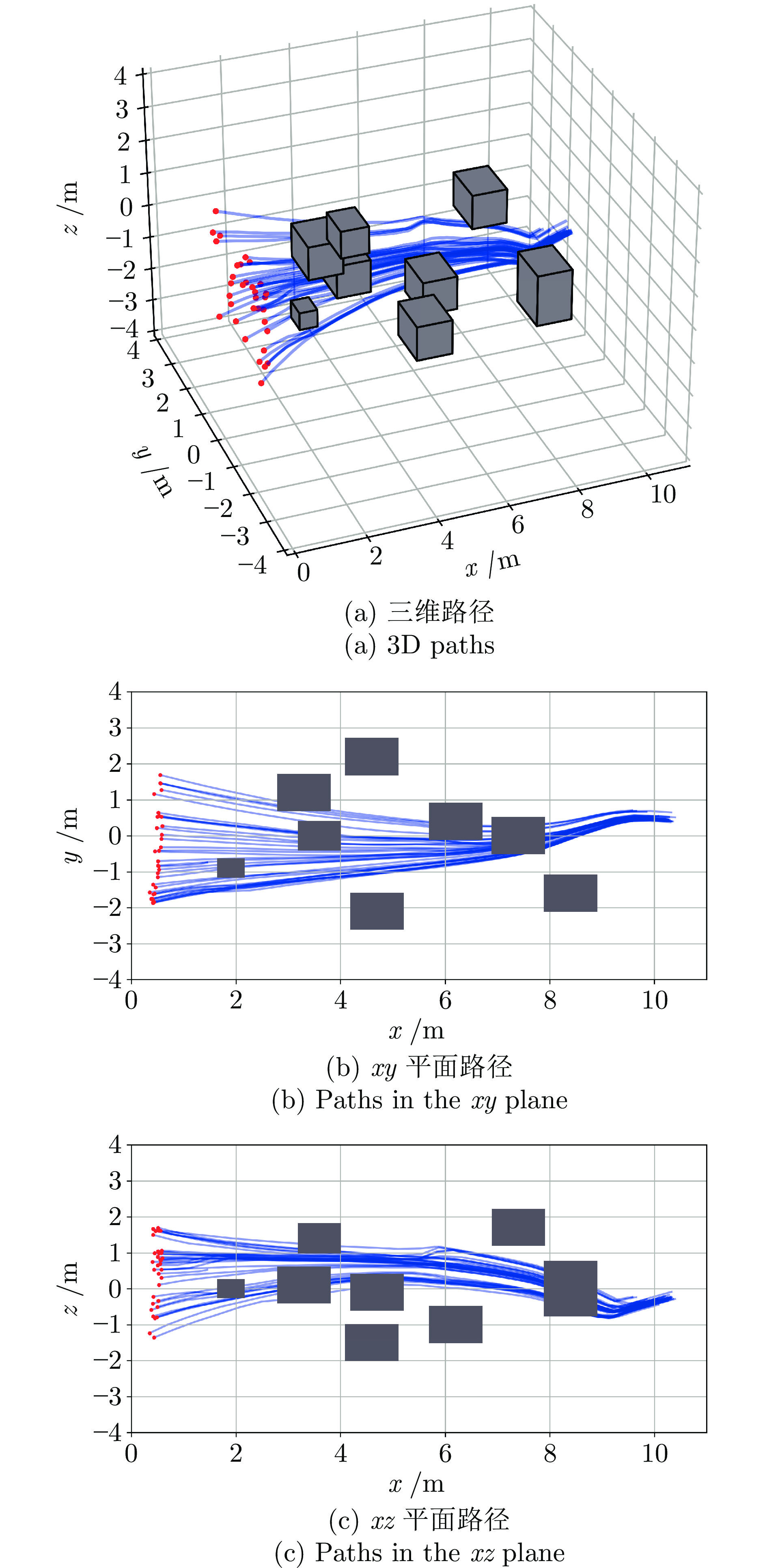

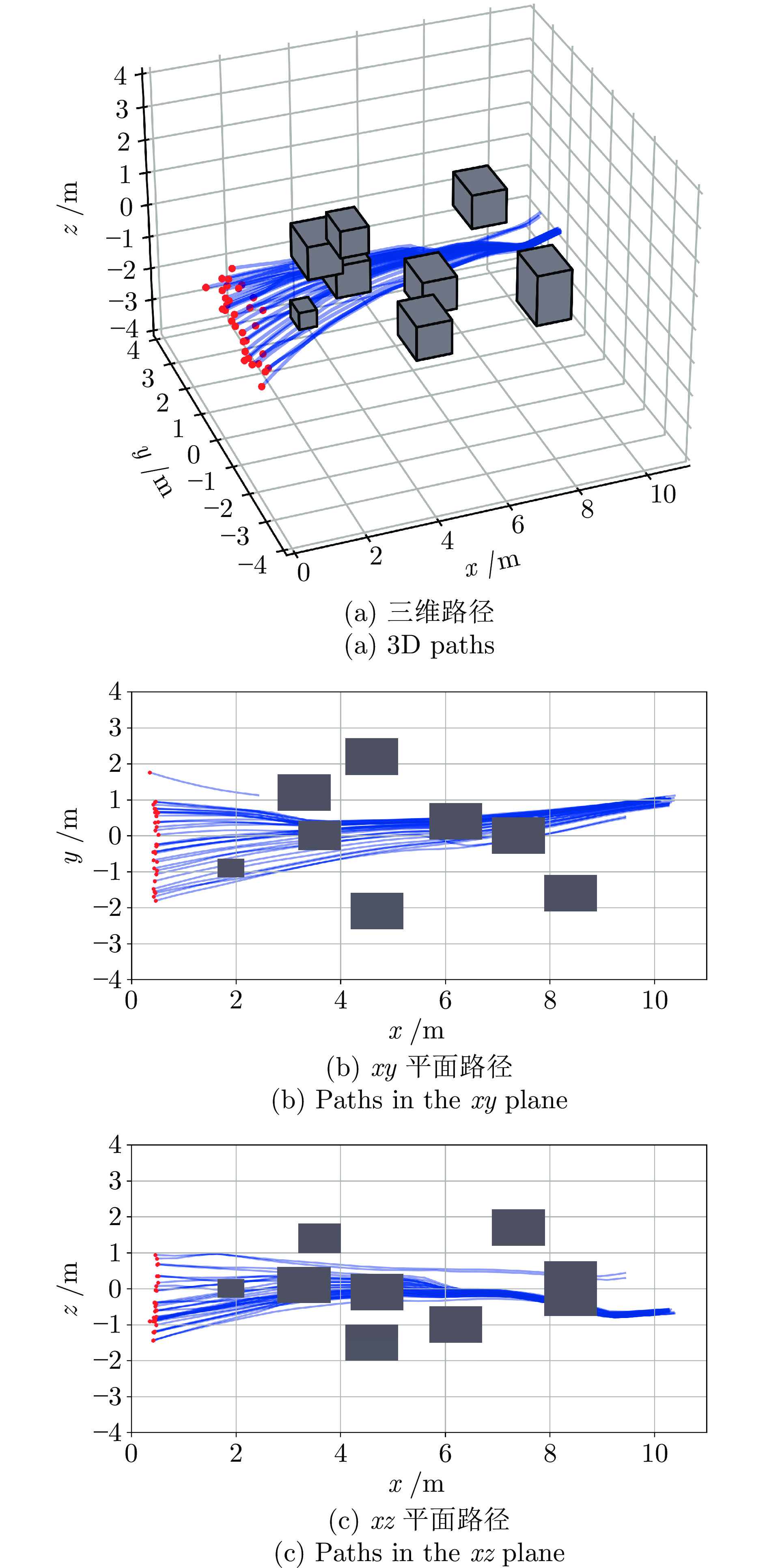

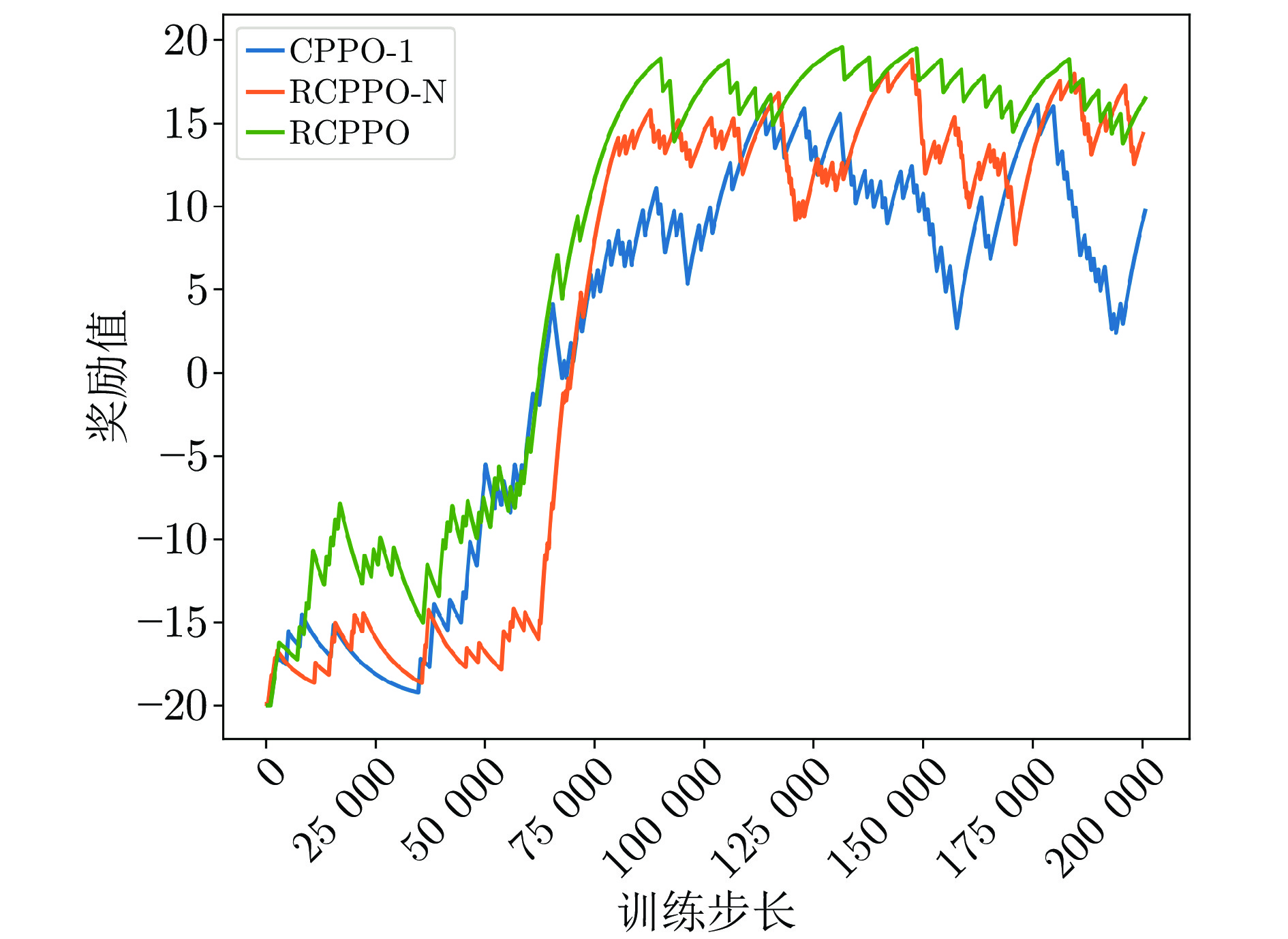

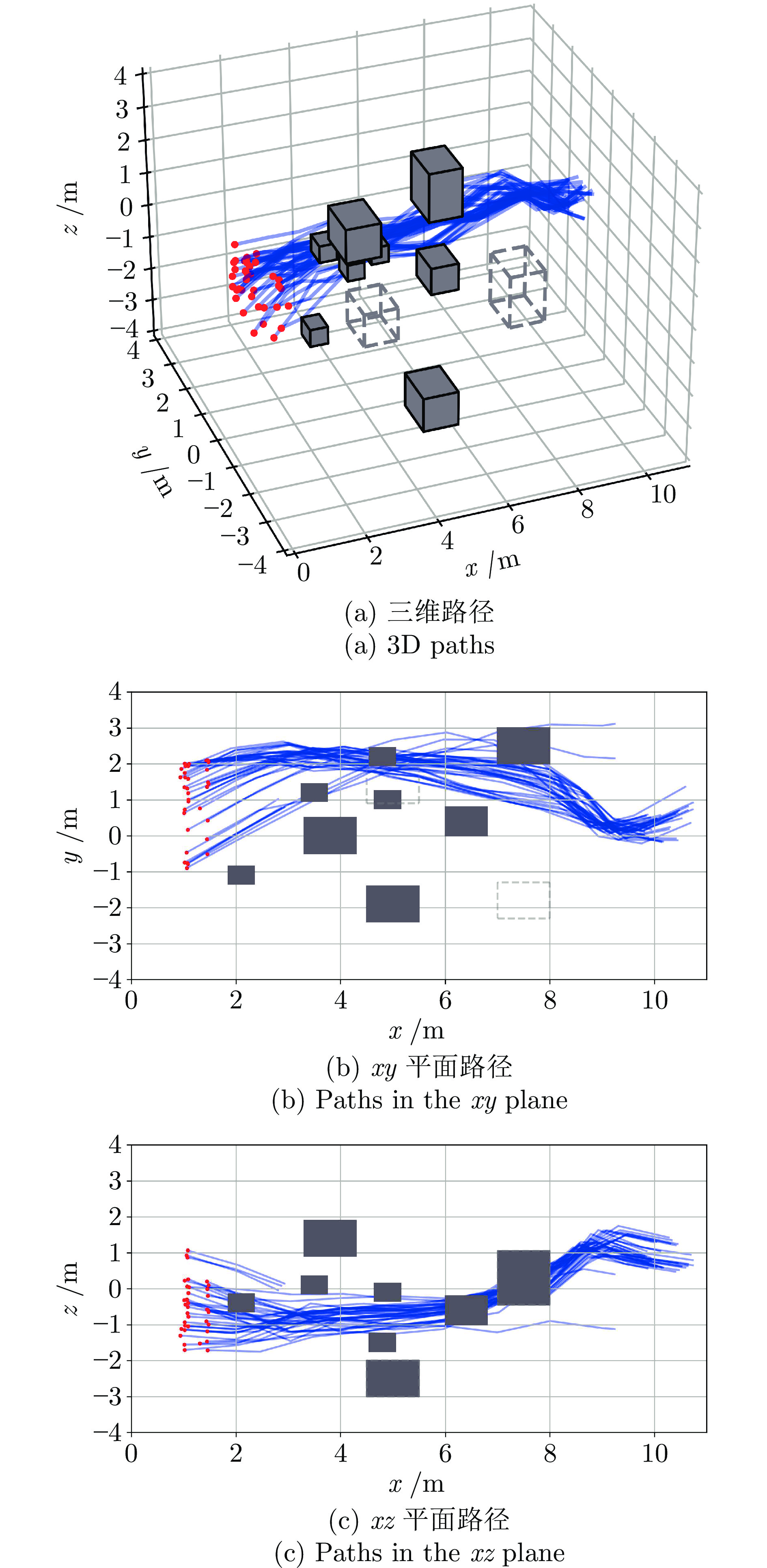

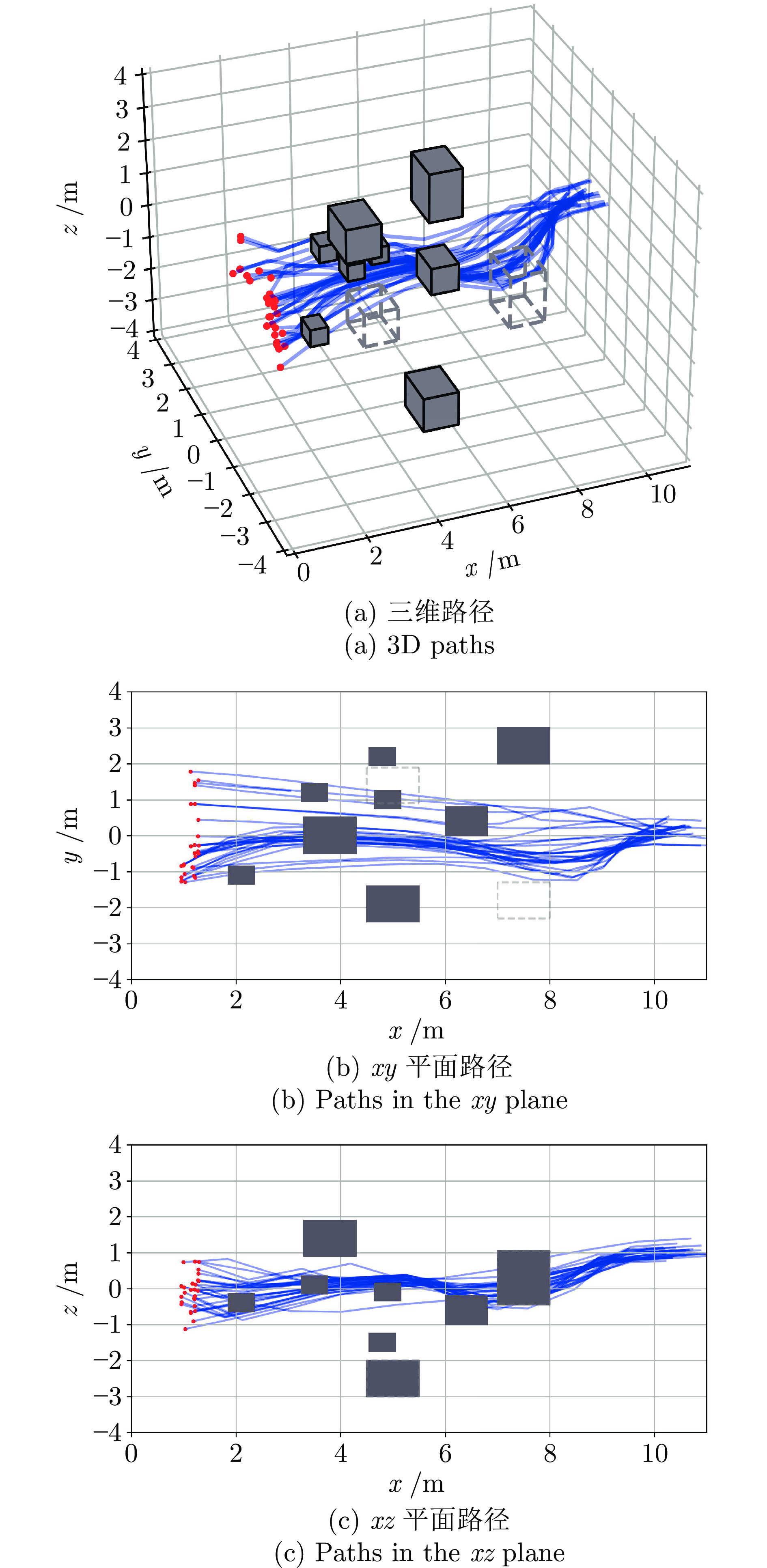

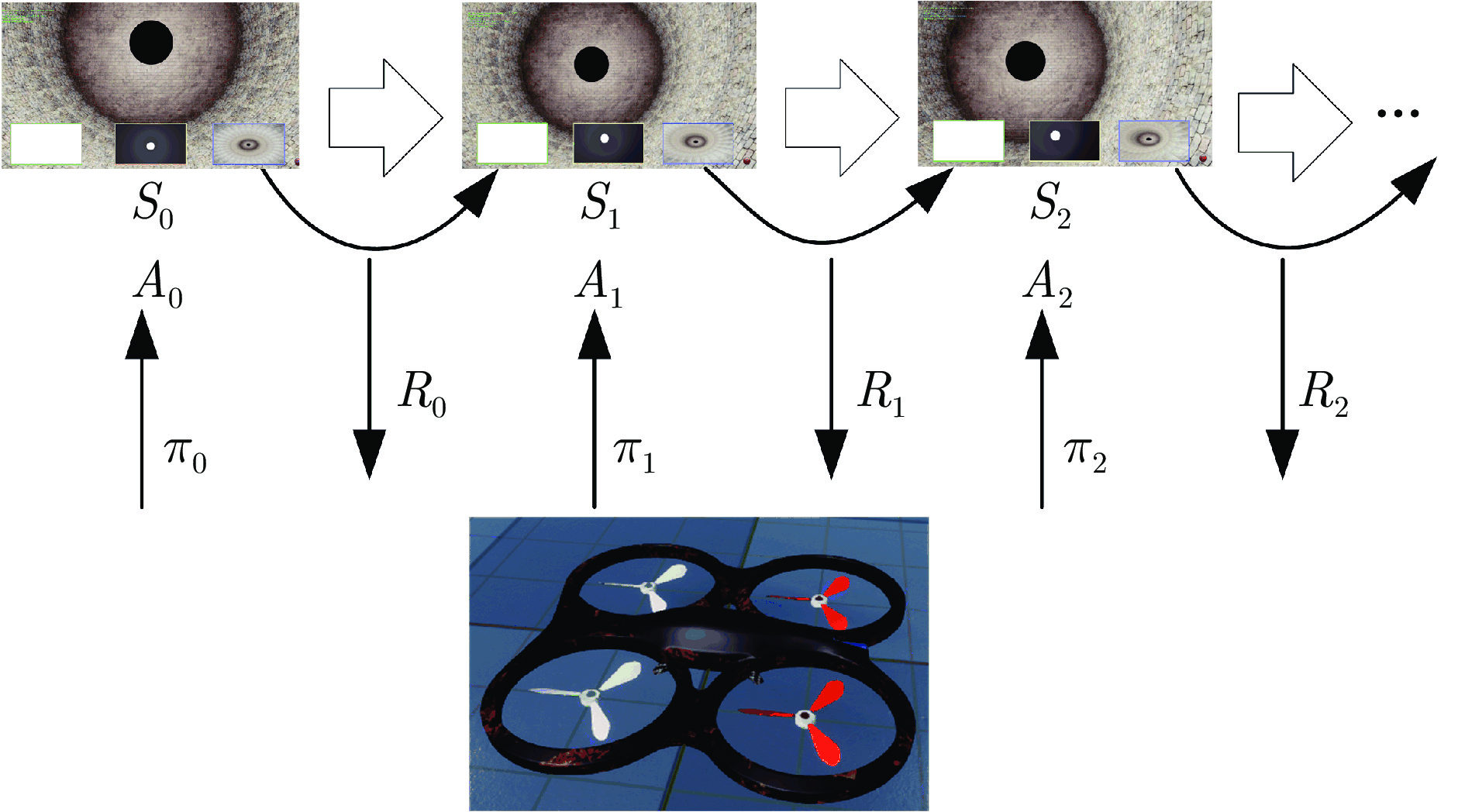

摘要: 针对虚拟管道下的无人机 (Unmanned aerial vehicle, UAV)自主避障问题, 提出一种基于视觉传感器的自主学习架构. 通过引入新颖的奖励函数, 设计了一种端到端的深度强化学习(Deep reinforcement learning, DRL)控制策略. 融合卷积神经网络 (Convolutional neural network, CNN)和循环神经网络 (Recurrent neural network, RNN)的优点构建双网络, 降低了网络复杂度, 对无人机深度图像进行有效处理. 进一步通过AirSim模拟器搭建三维实验环境, 采用连续动作空间优化无人机飞行轨迹的平滑性. 仿真结果表明, 与现有的方法对比, 该模型在面对静态和动态障碍时, 训练收敛速度快, 平均奖励高, 任务完成率分别增加9.4%和19.98%, 有效实现无人机的精细化避障和自主安全导航.Abstract: In order to solve the problem of autonomous obstacle avoidance of unmanned aerial vehicle (UAV) under virtual tube, this paper proposes an autonomous learning architecture based on visual sensors, in which a novel reward function is introduced, and an end-to-end deep reinforcement learning (DRL) control strategy is designed. By integrating convolutional neural network (CNN) and recurrent neural network (RNN), a dual-network architecture is constructed, reducing network complexity and enabling effective processing of UAV depth images. Furthermore, using the AirSim simulator, a three-dimensional experimental environment is created to optimize the smoothness of UAV flight trajectories in a continuous action space. Compared with the existing methods when confronting both static and dynamic obstacles, the simulation results indicate that this model achieves faster training convergence and higher average rewards. The task completion rates in the two scenarios are also increased by 9.4% and 19.98%, respectively, which can effectively achieve precise obstacle avoidance and autonomous safe navigation of drones.

-

表 1 CNN结构

Table 1 CNN structure

网络层 输入维度 卷积核尺寸 卷积核个数 步长 激活函数 输出维度 CNN1 84 × 84 × 1 8 × 8 32 4 ReLU 20 × 20 × 32 MaxPooling1 20 × 20 × 32 2 × 2 — 2 — 10 × 10 × 32 CNN2 10 × 10 × 32 3 × 3 64 1 ReLU 8 × 8 × 64 MaxPooling2 8 × 8 × 64 2 × 2 — 2 — 4 × 4 × 64 表 2 参数设定

Table 2 Parameter settings

参数 取值 学习率 0.0001 优化器 Adam 折扣因子 0.99 剪切值 0.2 批量大小 128 熵权重 0.02 GAE权重 0.95 表 3 无障碍环境中的测试成功率

Table 3 Test success rate in obstacle-free environment

算法类型 平均得分 得分标准差 成功率(%) CPPO-1 21.31 7.29 97.00 CPPO-1 (高噪声) 20.71 8.98 96.67 CPPO-2 22.65 0.21 100.00 CPPO-2 (高噪声) 22.64 0.21 100.00 表 4 静态障碍环境中的测试成功率

Table 4 Test success rate in static obstacle environment

算法类型 平均得分 得分标准差 成功率(%) CPPO-1 13.96 17.09 81.08 CPPO-1 (高噪声) 12.53 18.16 78.60 CPPO-2 20.26 9.32 90.52 CPPO-2 (高噪声) 17.84 13.76 88.93 表 5 动态障碍环境中的测试成功率

Table 5 Test success rate in dynamic obstacle environment

算法类型 平均得分 得分标准差 成功率(%) CPPO-1 7.52 19.70 65.34 RCPPO-N 12.34 17.34 78.47 RCPPO-N (高动态) 11.02 18.06 74.73 RCPPO 15.61 14.56 85.32 RCPPO (高动态) 15.02 16.02 82.63 表 6 RCPPO泛化性测试成功率

Table 6 Test success rate of RCPPO generalization

仿真环境 平均得分 得分标准差 成功率(%) 无障碍 22.61 0.28 100.00 静态障碍 17.70 13.07 89.36 动态障碍 15.61 14.56 85.32 -

[1] Zhou T, Chen M, Zou J. Reinforcement learning based data fusion method for multi-sensors. IEEE/CAA Journal of Automatica Sinica, 2020, 7(6): 1489−1497 doi: 10.1109/JAS.2020.1003180 [2] Yasin J N, Mohamed S A S, Haghbayan M H, Heikkonen J, Tenhunen H, Plosila J. Low-cost ultrasonic based object detection and collision avoidance method for autonomous robots. International Journal of Information Technology, 2021, 13: 97−107 doi: 10.1007/s41870-020-00513-w [3] Ravankar A, Ravankar A A, Rawankar A, Hoshino Y. Autonomous and safe navigation of mobile robots in vineyard with smooth collision avoidance. Agriculture, 2021, 11(10): 954−970 doi: 10.3390/agriculture11100954 [4] Fan J, Lei L, Cai S, Shen G, Cao P, Zhang L. Area surveillance with low detection probability using UAV swarms. IEEE Transactions on Vehicular Technology, 2024, 73(2): 1736−1752 doi: 10.1109/TVT.2023.3318641 [5] Mao P, Quan Q. Making robotics swarm flow more smoothly: A regular virtual tube model. In: Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems. Kyoto, Japan: IEEE, 2022. 4498−4504 [6] Lv S, Gao Y, Che J, Quan Q. Autonomous drone racing: Time-optimal spatial iterative learning control within a virtual tube. In: Proceedings of the 2023 IEEE International Conference on Robotics and Automation. London, United Kingdom: IEEE, 2023. 3197−3203 [7] 岳敬轩, 王红茹, 朱东琴, ALEKSANDR Chupalov. 基于改进粒子滤波的无人机编队协同导航算法. 航空学报, 2023, 44(14): 251−262Yue Jing-Xuan, Wang Hong-Ru, Zhu Dong-Qin, Aleksandr Chupalov. UAV formation cooperative navigation algorithm based on improved particle filter. Acta Aeronautica et Astronautica Sinica, 2023, 44(14): 251−262 [8] 吴健发, 王宏伦, 王延祥, 刘一恒. 无人机反应式扰动流体路径规划. 自动化学报, 2023, 49(2): 272−287Wu Jian-Fa, Wang Hong-Lun, Wang Yan-Xiang, Liu Yi-Heng. UAV reactive interfered fluid path planning. Acta Automatica Sinica, 2023, 49(2): 272−287 [9] Opromolla R, Fasano G. Visual-based obstacle detection and tracking, and conflict detection for small UAS sense and avoid. Aerospace Science and Technology, 2021, 119: 107167−107186 doi: 10.1016/j.ast.2021.107167 [10] Yao P, Sui X, Liu Y, Zhao Z. Vision-based environment perception and autonomous obstacle avoidance for unmanned underwater vehicle. Applied Ocean Research, 2023, 134: 103510−103527 doi: 10.1016/j.apor.2023.103510 [11] Xu Z, Xiu Y, Zhan X, Chen B, Shimada K. Vision-aided UAV navigation and dynamic obstacle avoidance using gradient-based b-spline trajectory optimization. In: Proceedings of the 2023 IEEE International Conference on Robotics and Automation. London, United Kingdom: IEEE, 2023. 1214−1220 [12] Rezaei N, Darabi S. Mobile robot monocular vision-based obstacle avoidance algorithm using a deep neural network. Evolutionary Intelligence, 2023, 16(6): 1999−2014 doi: 10.1007/s12065-023-00829-z [13] Abd Elaziz M, Dahou A, Abualigah L, Yu L, Alshinwan M, Khasawneh A M, et al. Advanced metaheuristic optimization techniques in applications of deep neural networks: A review. Neural Computing and Applications, 2021, 33(21): 14079−14099 doi: 10.1007/s00521-021-05960-5 [14] Roghair J, Niaraki A, Ko K, Jannesari A. A vision based deep reinforcement learning algorithm for UAV obstacle avoidance. In: Proceedings of the 2021 Intelligent Systems Conference. Cham, Switzerland: Springer, 2022. 115−128 [15] 周治国, 余思雨, 于家宝, 段俊伟, 陈龙, 陈俊龙. 面向无人艇的T-DQN智能避障算法研究. 自动化学报, 2023, 49(8): 1645−1655Zhou Zhi-Guo, Yu Si-Yu, Yu Jia-Bao, Duan Jun-Wei, Chen Long, Chen Jun-Long. Research on T-DQN intelligent obstacle avoidance algorithm of unmanned surface vehicle. Acta Automatica Sinica, 2023, 49(8): 1645−1655 [16] Kalidas A P, Joshua C J, Md A Q, Basheer S, Mohan S, Sakri S. Deep reinforcement learning for vision-based navigation of UAVs in avoiding stationary and mobile obstacles. Drones, 2023, 7(4): 245−267 doi: 10.3390/drones7040245 [17] Liang C, Liu L, Liu C. Multi-UAV autonomous collision avoidance based on PPO-GIC algorithm with CNN-LSTM fusion network. Neural Networks, 2023, 162: 21−33 doi: 10.1016/j.neunet.2023.02.027 [18] Zhao X, Yang R, Zhang Y, Yan M, Yue L. Deep reinforcement learning for intelligent dual-UAV reconnaissance mission planning. Electronics, 2022, 11(13): 2031−2048 doi: 10.3390/electronics11132031 [19] 施伟, 冯旸赫, 程光权, 黄红蓝, 黄金才, 刘忠, 等. 基于深度强化学习的多机协同空战方法研究. 自动化学报, 2021, 47(7): 1610−1623Shi Wei, Feng Yang-He, Cheng Guang-Quan, Huang Hong-Lan, Huang Jin-Cai, Liu Zhong, et al. Research on multi-aircraft cooperative air combat method based on deep reinforcement learning. Acta Automatica Sinica, 2021, 47(7): 1610−1623 [20] Kurniawati H. Partially observable Markov decision processes and robotics. Annual Review of Control, Robotics, and Autonomous Systems, 2022, 5: 253−277 doi: 10.1146/annurev-control-042920-092451 [21] Fang W, Chen Y, Xue Q. Survey on research of RNN-based spatio-temporal sequence prediction algorithms. Journal on Big Data, 2021, 3(3): 97−110 doi: 10.32604/jbd.2021.016993 [22] Schulman J, Moritz P, Levine S, Jordan M, Abbeel P. High-dimensional continuous control using generalized advantage estimation. arXiv preprint arXiv: 1506.02438, 2015. [23] Zhang X, Zheng K, Wang C, Chen J, Qi H. A novel deep reinforcement learning for POMDP-based autonomous ship collision decision-making. Neural Computing and Applications, 2023: 1−15 [24] 姚鹏, 解则晓. 基于修正导航向量场的AUV自主避障方法. 自动化学报, 2020, 46(8): 1670−1680Yao Peng, Xie Ze-Xiao. Autonomous obstacle avoidance for AUV based on modified guidance vector field. Acta Automatica Sinica, 2020, 46(8): 1670−1680 [25] Khetarpal K, Riemer M, Rish I, Precup D. Towards continual reinforcement learning: A review and perspectives. Journal of Artificial Intelligence Research, 2022, 75: 1401−1476 doi: 10.1613/jair.1.13673 [26] Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. Proximal policy optimization algorithms. arXiv preprint arXiv: 1707.06347, 2017. [27] Hou Y, Liu L, Wei Q, Xu X, Chen C. A novel DDPG method with prioritized experience replay. In: Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics. Banff, Canada: IEEE, 2017. 316−321 [28] Shah S, Dey D, Lovett C, Kapoor A. AirSim: High-fidelity visual and physical simulation for autonomous vehicles. In: Proceedings of the Field and Service Robotics: Results of the 11th International Conference. Zurich, Switzerland: Springer, 2018. 621−635 -

下载:

下载: