
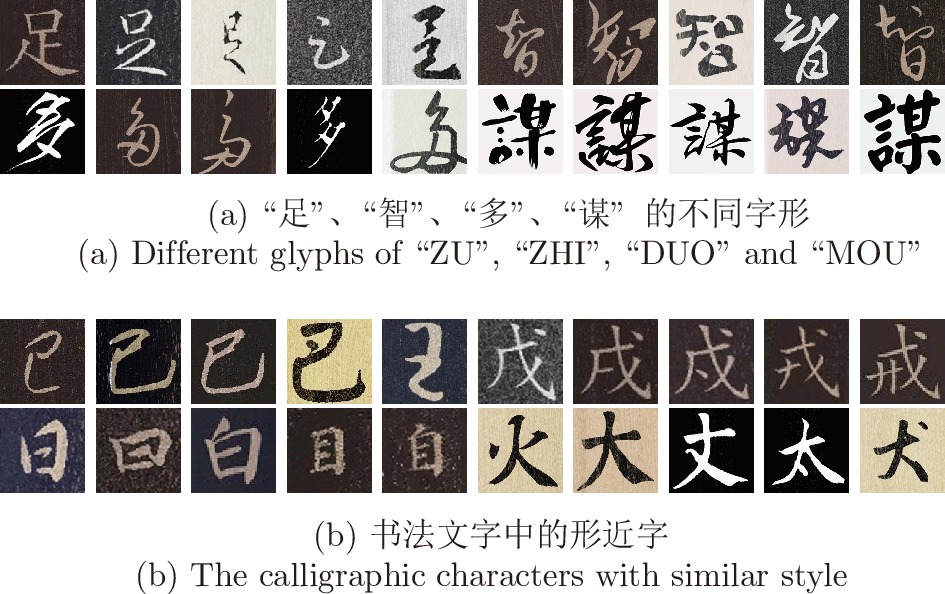

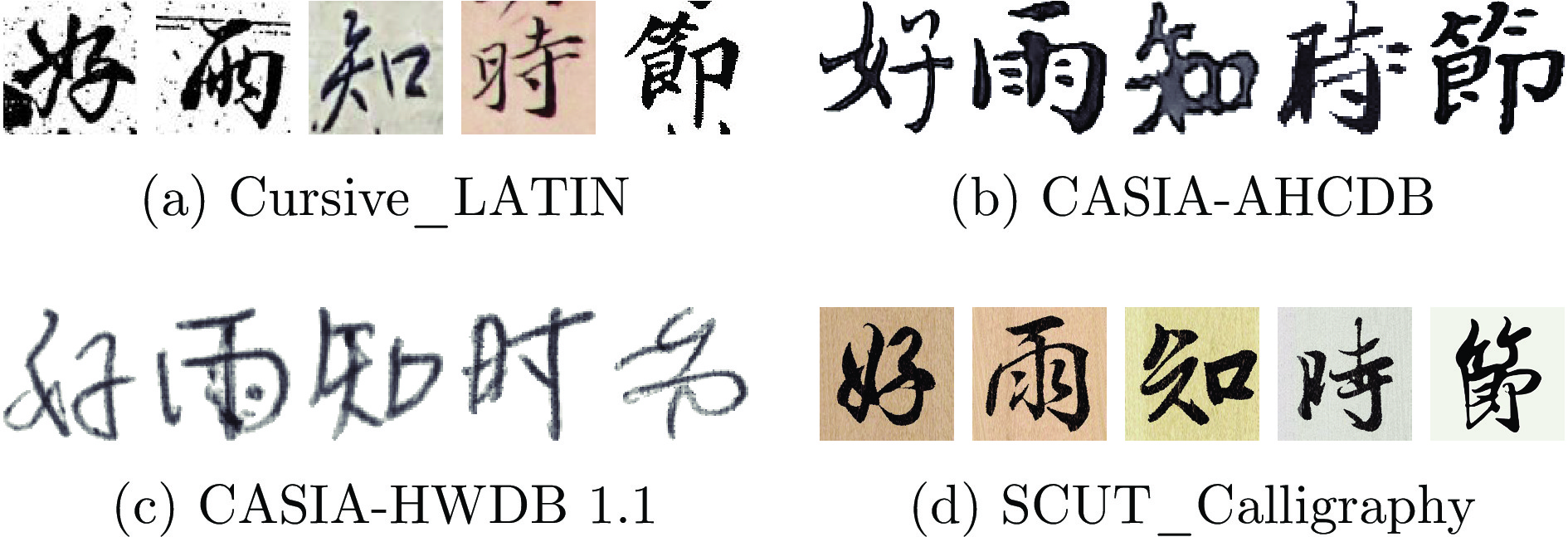

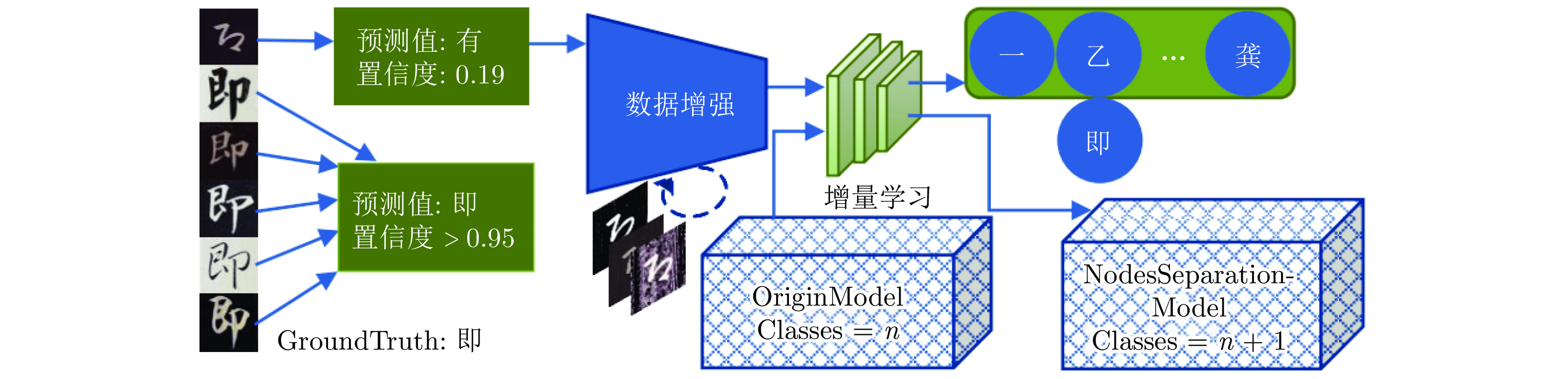

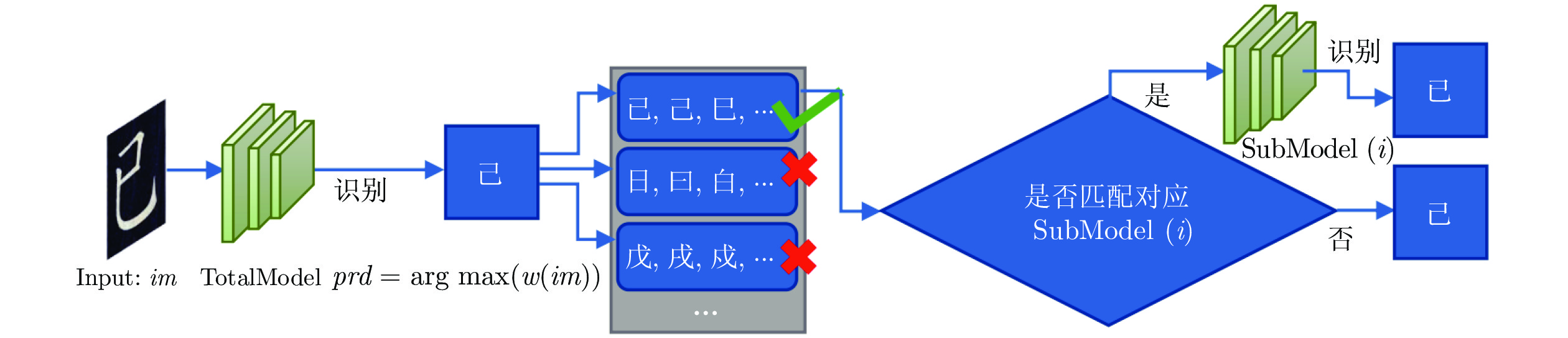

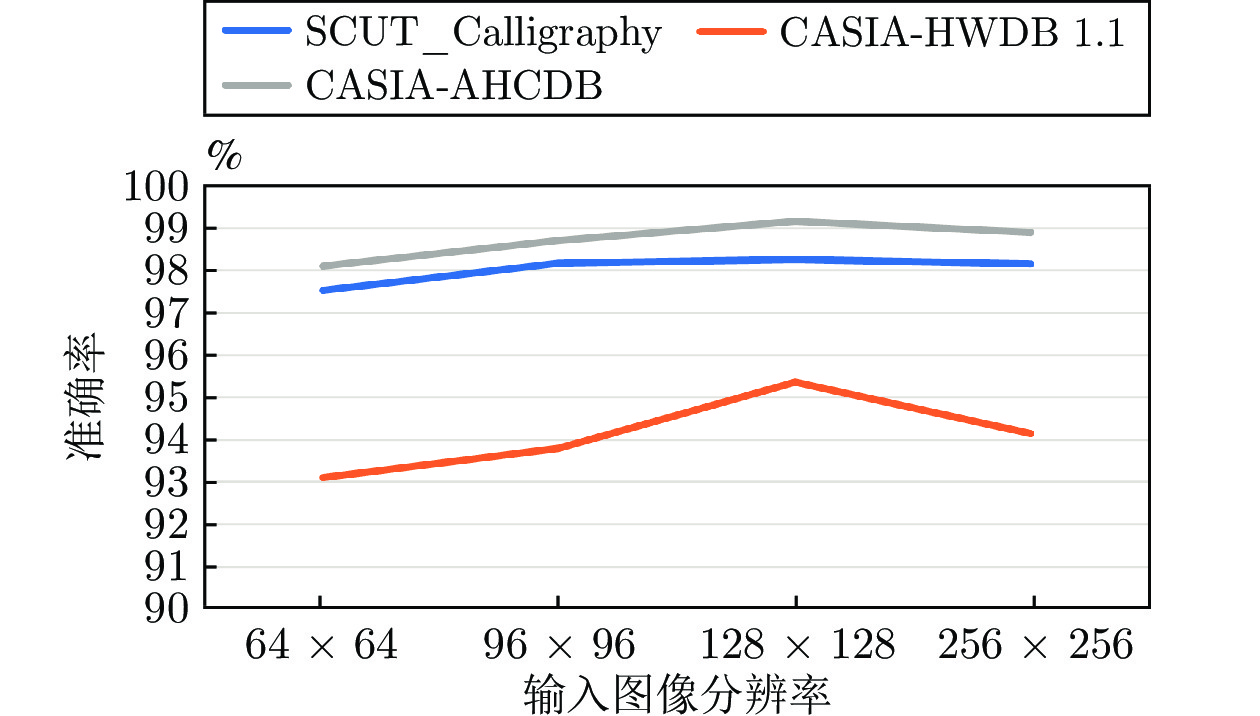

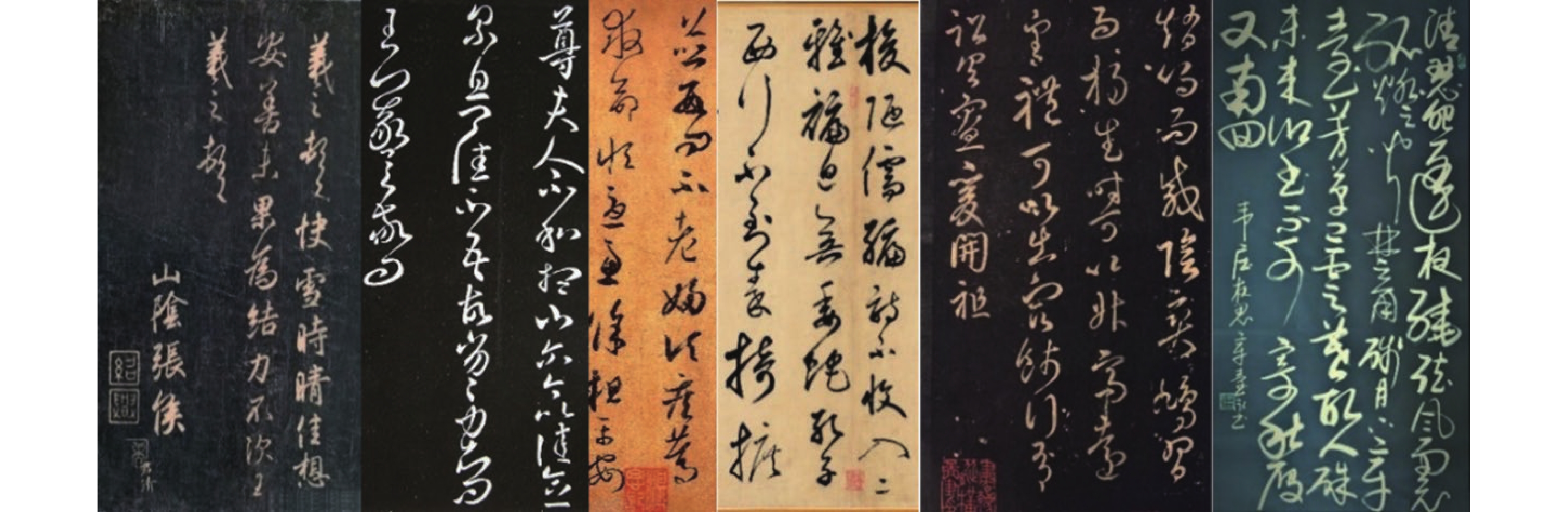

Abstract: Calligraphy character recognition based on two-dimensional images uses computer vision to recognize images of single calligraphy characters, and it has important applications in ancient-book research and cultural dissemination. The technique has made considerable progress but still faces many challenges: complex and highly variable glyph shapes cause recognition errors, Chinese contains many visually similar characters, and the number of Chinese character categories is far larger than in other scripts. As a result, calligraphy character images generally show large intra-class differences and small inter-class differences. To address these issues, we propose a stacked-model driven character recognition method (SDCR) for calligraphy. SDCR improves an existing single classification model through data preprocessing, a node separation strategy, and a stacked model, and it further subdivides characters of the same class written in different font styles according to their font category. To address small inter-class differences, visually similar characters are divided into subsets according to the recognition confidence on the training-set images, nested models are trained on these subsets for enhancement, and at test time the stacked model performs a second recognition pass on visually similar characters to improve their recognition accuracy.

To verify the robustness of the proposed method, we train and test on the self-built SCUT_Calligraphy dataset and on the public CASIA-HWDB 1.1 and CASIA-AHCDB datasets. Experimental results show that the method substantially improves recognition accuracy on all of these datasets, reaching test accuracies of 96.33%, 99.51%, and 99.90% on CASIA-HWDB 1.1, CASIA-AHCDB, and SCUT_Calligraphy, respectively, which demonstrates its effectiveness.
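The abstract names two mechanisms without spelling them out: node separation, which splits each character class into font-style sub-nodes, and stacked recognition, in which nested models trained on subsets of visually similar characters re-recognize the base model's output. The Python sketch below is one possible reading of that pipeline, not the authors' implementation. Everything in it is an assumption for illustration: the function names (`build_style_nodes`, `merge_nodes`, `confusable_subsets`, `stacked_predict`), the `rival_threshold` value, summing style-node probabilities back into a character score, the style labels `"kai"` and `"li"`, and forming subsets as union-find connected components of characters that compete on training images. Models are plain callables so the control flow runs without a deep-learning framework.

```python
from collections import defaultdict


def build_style_nodes(labels):
    """Node separation (assumed form): one classifier node per (character, font style) pair."""
    nodes = {}
    for char, style in labels:
        nodes.setdefault((char, style), len(nodes))
    node_to_char = {idx: char for (char, _style), idx in nodes.items()}
    return nodes, node_to_char


def merge_nodes(node_probs, node_to_char):
    """Fold style-node probabilities back into per-character scores by summing them."""
    char_probs = defaultdict(float)
    for idx, p in node_probs.items():
        char_probs[node_to_char[idx]] += p
    return dict(char_probs)


def confusable_subsets(train_outputs, rival_threshold=0.2):
    """Group characters that the base model confuses on the training set.

    train_outputs: (true_char, char_probs) pairs from the base model. When the
    strongest wrong class of a sample reaches rival_threshold, the true and rival
    characters are linked; connected components become similar-character subsets.
    """
    parent = {}

    def find(c):
        parent.setdefault(c, c)
        while parent[c] != c:
            parent[c] = parent[parent[c]]  # path halving
            c = parent[c]
        return c

    for true_char, probs in train_outputs:
        rivals = {c: p for c, p in probs.items() if c != true_char}
        if not rivals:
            continue
        rival = max(rivals, key=rivals.get)
        if rivals[rival] >= rival_threshold:
            parent[find(true_char)] = find(rival)  # union the two groups

    groups = defaultdict(set)
    for c in list(parent):
        groups[find(c)].add(c)
    return [frozenset(g) for g in groups.values() if len(g) > 1]


def stacked_predict(image, base_model, node_to_char, subsets, subset_models):
    """Stage 1: base model over style nodes; stage 2: nested model of the matching subset."""
    char_probs = merge_nodes(base_model(image), node_to_char)
    first = max(char_probs, key=char_probs.get)
    for subset in subsets:
        if first in subset:
            return subset_models[subset](image)  # re-recognize within the subset only
    return first


# Toy usage: the models below are stand-in callables, not trained networks.
nodes, node_to_char = build_style_nodes([("王", "kai"), ("王", "li"), ("工", "kai")])
train = [("王", {"王": 0.6, "工": 0.4}), ("巾", {"巾": 0.95, "力": 0.05})]
subsets = confusable_subsets(train)  # one subset containing 王 and 工


def base(img):
    return {0: 0.2, 1: 0.2, 2: 0.6}  # toy base model that leans towards 工


nested = {subsets[0]: lambda img: "王"}  # toy nested model that separates 王 from 工
print(stacked_predict("img", base, node_to_char, subsets, nested))  # 王
```

Routing on the top-1 character keeps the second stage cheap, because a nested model is consulted only when the first-stage answer falls in a known confusable group. Union-find always yields disjoint groups, whereas the subsets listed in Table 4 overlap (白 and 自 appear in two of them), so the authors' subset partitioning evidently differs from this sketch in that respect.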


Key words: Calligraphy character recognition / model driven / node separation / stacked model / precision learning


Table 1 Detailed properties of experimental datasets

| Dataset | Number of classes | Training set size | Test set size |
|---|---|---|---|
| CASIA-AHCDB Style-1 BC | 2 353 | 828 969 | 253 990 |
| CASIA-AHCDB Style-1 EC | 3 201 | 88 870 | 36 143 |
| CASIA-AHCDB Style-2 BC | 2 353 | 725 240 | 202 404 |
| CASIA-AHCDB Style-2 EC | 740 | 66 690 | 17 741 |
| CASIA-HWDB 1.1 | 3 755 | 847 466 | 223 991 |
| SCUT_Calligraphy | 3 767 | 251 664 | 26 106 |

Table 2 Ablation results of stacked-model driven calligraphy character recognition

| Test dataset | Data preprocessing | Node separation | Stacked-model driven | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| CASIA-HWDB 1.1 | × | × | × | 89.64 | 88.95 | 89.29 |
| | ✓ | × | × | 90.34 | 89.35 | 89.84 |
| | ✓ | ✓ | × | 91.26 | 89.56 | 90.40 |
| | ✓ | ✓ | ✓ | 96.33 | 92.10 | 94.16 |
| CASIA-AHCDB (Style-1 BC) | × | × | × | 94.50 | 95.10 | 94.79 |
| | ✓ | × | × | 98.92 | 98.34 | 98.62 |
| | ✓ | ✓ | × | 99.19 | 99.14 | 99.16 |
| | ✓ | ✓ | ✓ | 99.51 | 99.21 | 99.35 |
| SCUT_Calligraphy | × | × | × | 91.33 | 90.45 | 90.88 |
| | ✓ | × | × | 98.38 | 98.22 | 98.30 |
| | ✓ | ✓ | × | 98.85 | 98.36 | 98.60 |
| | ✓ | ✓ | ✓ | 99.90 | 98.96 | 99.42 |
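Table 2 reports precision P, recall R and F1 for each ablation step, where each row adds one component to the row above it. If F1 is the harmonic mean 2PR/(P + R), it can be re-derived from the two listed columns. The short check below does this, with the rows copied from Table 2 and the configuration labels (`"baseline"`, `"+preprocessing"`, and so on) introduced here only for readability. Every reported F1 agrees with the recomputed value to within about 0.01 percentage points; the source does not say whether F1 was computed from the aggregate P and R or averaged per class, so those small gaps are not conclusive either way.

```python
# Precision, recall and reported F1 (all in %) copied from Table 2.
# Configuration labels are cumulative: each step keeps the components above it.
ROWS = [
    ("CASIA-HWDB 1.1", "baseline", 89.64, 88.95, 89.29),
    ("CASIA-HWDB 1.1", "+preprocessing", 90.34, 89.35, 89.84),
    ("CASIA-HWDB 1.1", "+node separation", 91.26, 89.56, 90.40),
    ("CASIA-HWDB 1.1", "+stacked model", 96.33, 92.10, 94.16),
    ("CASIA-AHCDB (Style-1 BC)", "baseline", 94.50, 95.10, 94.79),
    ("CASIA-AHCDB (Style-1 BC)", "+preprocessing", 98.92, 98.34, 98.62),
    ("CASIA-AHCDB (Style-1 BC)", "+node separation", 99.19, 99.14, 99.16),
    ("CASIA-AHCDB (Style-1 BC)", "+stacked model", 99.51, 99.21, 99.35),
    ("SCUT_Calligraphy", "baseline", 91.33, 90.45, 90.88),
    ("SCUT_Calligraphy", "+preprocessing", 98.38, 98.22, 98.30),
    ("SCUT_Calligraphy", "+node separation", 98.85, 98.36, 98.60),
    ("SCUT_Calligraphy", "+stacked model", 99.90, 98.96, 99.42),
]


def f1_score(precision, recall):
    """Harmonic mean of precision and recall, in the same unit as the inputs."""
    return 2 * precision * recall / (precision + recall)


diffs = []
for dataset, config, p, r, reported in ROWS:
    recomputed = f1_score(p, r)
    diffs.append(abs(recomputed - reported))
    print(f"{dataset:26s} {config:18s} F1={recomputed:.4f} reported={reported:.2f}")

print(f"largest gap: {max(diffs):.4f} percentage points")
```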

Table 3 Visual comparison of recognition results of the single model and the stacked precision neural network model

| Label | Single-model prediction | Stacked-model prediction |
|---|---|---|
| 自 | 白 | 自 |
| 巾 | 力 | 力 |
| 王 | 工 | 王 |
| 勿 | 句 | 勿 |
| 右 | 古 | 右 |
| 右 | 芯 | 芯 |
| 王 | 己 | 己 |
| 布 | 希 | 布 |
| 布 | 常 | 常 |

Table 4 Comparison of recognition results on different calligraphy character image subsets using the single model and the stacked model

| Characters in subset | Subset size | Single-model errors | Stacked-model errors | Accuracy gain (%) |
|---|---|---|---|---|
| 日目白自向冶治囚曰沼 | 74 | 11 | 3 | 10.81 |
| 大己已木犬片斤火本巳 | 83 | 5 | 3 | 2.40 |
| 力工巾王勿古右布句希 | 76 | 9 | 4 | 6.57 |
| 巨予主矛母吉臣吝圭毋 | 86 | 7 | 3 | 4.65 |
| 夫云去央尘尖伏伐亥矢 | 69 | 7 | 2 | 7.24 |
| 士土千比午北白自血皿 | 76 | 7 | 4 | 3.94 |
| 去式戒赤坊束辰来妨展 | 68 | 7 | 2 | 7.35 |
| 助忍驳玩抵忽振玖肋骏 | 64 | 7 | 4 | 4.68 |
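The last column of Table 4 is consistent with defining the accuracy gain as the error reduction divided by the subset size, (e_single - e_stacked) / n × 100. This definition is inferred from the numbers rather than stated in the source. Recomputing it with exact integer arithmetic shows that all eight reported values are truncated, not rounded, to two decimals: for example 5/76 = 6.578...% is listed as 6.57, and 3/64 = 4.6875% as 4.68. The helper name `gain_hundredths` is introduced here for illustration.

```python
# (subset size, single-model errors, stacked-model errors, reported gain %) from Table 4.
ROWS = [
    (74, 11, 3, 10.81),
    (83, 5, 3, 2.40),
    (76, 9, 4, 6.57),
    (86, 7, 3, 4.65),
    (69, 7, 2, 7.24),
    (76, 7, 4, 3.94),
    (68, 7, 2, 7.35),
    (64, 7, 4, 4.68),
]


def gain_hundredths(size, err_single, err_stacked):
    """Accuracy gain in hundredths of a percent, truncated, using exact integer arithmetic."""
    return (err_single - err_stacked) * 10000 // size


for size, e1, e2, reported in ROWS:
    exact = (e1 - e2) / size * 100
    truncated = gain_hundredths(size, e1, e2) / 100
    print(f"n={size:3d} errors {e1:2d}->{e2} exact={exact:.4f} "
          f"truncated={truncated:.2f} reported={reported:.2f}")
```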

Table 5 Comparison of different methods on the CASIA-AHCDB, CASIA-HWDB 1.1 and SCUT_Calligraphy datasets (%)

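| Method | CASIA-AHCDB Style-1 BC | CASIA-AHCDB Style-1 BC&EC | CASIA-AHCDB Style-2 BC | CASIA-AHCDB Style-2 BC&EC | CASIA-AHCDB Style-1 BC (train) / Style-2 BC (test) | CASIA-HWDB 1.1 | SCUT_Calligraphy |
|---|---|---|---|---|---|---|---|
| LW-ViT[34] | — | — | — | — | — | 95.80 | — |
| CPN[35] | 98.50 | 96.95 | 94.42 | 91.99 | 74.74 | 95.45 | 98.70 |
| RAN[36] | 82.39 | — | 69.61 | — | — | — | — |
| RPN | 83.65 | — | 69.63 | — | — | — | — |
| RAN + CRA[36] | 85.54 | — | 71.02 | — | — | — | — |
| RPN + CRA[37] | 86.91 | — | 72.06 | — | — | — | — |
| SDCR + JD | 99.51 | 98.23 | 98.74 | 97.01 | 86.15 | 96.33 | 99.90 |

Note: SDCR + JD denotes the combined use of the stacked-model driven and node separation training strategies.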

[1] Zhang H N, Dong B, Zheng Q H, Feng B Q, Xu B, Wu H Y. All-content text recognition method for financial ticket images. Multimedia Tools and Applications, 2022, 81(20): 28327–28346 doi: 10.1007/s11042-022-12741-2

[2] Kabiraj A, Pal D, Ganguly D, Chatterjee K, Roy S. Number plate recognition from enhanced super-resolution using generative adversarial network. Multimedia Tools and Applications, 2023, 82(9): 13837–13853 doi: 10.1007/s11042-022-14018-0

[3] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, USA: IEEE, 2016. 770–778

[4] Bhunia A K, Ghose S, Kumar A, Chowdhury P N, Sain A, Song Y Z. MetaHTR: Towards writer-adaptive handwritten text recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 15825–15834

[5] Wang X H, Wu K, Zhang Y, Xiao Y, Xu P F. A GAN-based denoising method for Chinese stele and rubbing calligraphic image. The Visual Computer, 2023, 39(4): 1351–1362

[6] Fang S C, Xie H T, Wang Y X, Mao Z D, Zhang Y D. Read like humans: Autonomous, bidirectional and iterative language modeling for scene text recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 7094–7103

[7] Cireşan D, Meier U. Multi-column deep neural networks for offline handwritten Chinese character classification. In: Proceedings of the International Joint Conference on Neural Networks (IJCNN). Killarney, Ireland: IEEE, 2015. 1–6

[8] Yin F, Wang Q F, Zhang X Y, Liu C L. ICDAR 2013 Chinese handwriting recognition competition. In: Proceedings of the 12th International Conference on Document Analysis and Recognition (ICDAR). Washington, USA: IEEE, 2013. 1464–1470

[9] Zhong Z Y, Jin L W, Xie Z C. High performance offline handwritten Chinese character recognition using GoogLeNet and directional feature maps. In: Proceedings of the 13th International Conference on Document Analysis and Recognition (ICDAR). Tunis, Tunisia: IEEE, 2015. 846–850

[10] Chen L, Wang S, Fan W, Sun J, Naoi S. Beyond human recognition: A CNN-based framework for handwritten character recognition. In: Proceedings of the 3rd IAPR Asian Conference on Pattern Recognition (ACPR). Kuala Lumpur, Malaysia: IEEE, 2015. 695–699

[11] Zhong Z, Zhang X Y, Yin F, Liu C L. Handwritten Chinese character recognition with spatial transformer and deep residual networks. In: Proceedings of the 23rd International Conference on Pattern Recognition (ICPR). Cancun, Mexico: IEEE, 2016. 3440–3445

[12] Li Z Y, Teng N J, Jin M, Lu H X. Building efficient CNN architecture for offline handwritten Chinese character recognition. International Journal on Document Analysis and Recognition (IJDAR), 2018, 21(4): 233–240 doi: 10.1007/s10032-018-0311-4

[13] Bi N, Chen J H, Tan J. The handwritten Chinese character recognition uses convolutional neural networks with the GoogLeNet. International Journal of Pattern Recognition and Artificial Intelligence, 2019, 33(11): Article No. 1940016 doi: 10.1142/S0218001419400160

[14] Zhang X Y, Liu C L. Evaluation of weighted Fisher criteria for large category dimensionality reduction in application to Chinese handwriting recognition. Pattern Recognition, 2013, 46(9): 2599–2611 doi: 10.1016/j.patcog.2013.01.036

[15] Dan Y P, Zhu Z N, Jin W S, Li Z. PF-VIT: Parallel and fast vision transformer for offline handwritten Chinese character recognition. Computational Intelligence and Neuroscience, 2022, 2022: Article No. 8255763

[16] Cao Z, Lu J, Cui S, Zhang C S. Zero-shot handwritten Chinese character recognition with hierarchical decomposition embedding. Pattern Recognition, 2020, 107: Article No. 107488 doi: 10.1016/j.patcog.2020.107488

[17] Diao X L, Shi D Q, Tang H, Shen Q, Li Y Z, Wu L, et al. RZCR: Zero-shot character recognition via radical-based reasoning. In: Proceedings of the 32nd International Joint Conference on Artificial Intelligence (IJCAI). Macao, China: ijcai.org, 2023. 654–662

[18] Wang T W, Xie Z C, Li Z, Jin L W, Chen X L. Radical aggregation network for few-shot offline handwritten Chinese character recognition. Pattern Recognition Letters, 2019, 125: 821–827 doi: 10.1016/j.patrec.2019.08.005

[19] Wang W C, Zhang J S, Du J, Wang Z R, Zhu Y X. DenseRAN for offline handwritten Chinese character recognition. In: Proceedings of the 16th International Conference on Frontiers in Handwriting Recognition (ICFHR). Niagara Falls, USA: IEEE, 2018. 104–109

[20] Chen J Y, Li B, Xue X Y. Zero-shot Chinese character recognition with stroke-level decomposition. In: Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI). Montreal, Canada: ijcai.org, 2021. 615–621

[21] Liu C, Yang C, Qin H B, Zhu X B, Liu C L, Yin X C. Towards open-set text recognition via label-to-prototype learning. Pattern Recognition, 2023, 134: Article No. 109109 doi: 10.1016/j.patcog.2022.109109

[22] Huang Y H, Jin L W, Peng D Z. Zero-shot Chinese text recognition via matching class embedding. In: Proceedings of the 16th International Conference on Document Analysis and Recognition (ICDAR). Lausanne, Switzerland: Springer, 2021. 127–141

[23] Jalali A, Kavuri S, Lee M. Low-shot transfer with attention for highly imbalanced cursive character recognition. Neural Networks, 2021, 143: 489–499 doi: 10.1016/j.neunet.2021.07.003

[24] Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, USA: IEEE, 2016. 2818–2826

[25] Huang J D, Cheng G J, Zhang J H, Miao W. Recognition method for stone carved calligraphy characters based on a convolutional neural network. Neural Computing and Applications, 2023, 35(12): 8723–8732

[26] Dan Y P, Li Z. Particle swarm optimization-based convolutional neural network for handwritten Chinese character recognition. Journal of Advanced Computational Intelligence and Intelligent Informatics, 2023, 27(2): 165–172 doi: 10.20965/jaciii.2023.p0165

[27] Liu C L, Yin F, Wang D H, Wang Q F. Online and offline handwritten Chinese character recognition: Benchmarking on new databases. Pattern Recognition, 2013, 46(1): 155–162 doi: 10.1016/j.patcog.2012.06.021

[28] Peng D Z, Jin L W, Liu Y L, Luo C J, Lai S X. PageNet: Towards end-to-end weakly supervised page-level handwritten Chinese text recognition. International Journal of Computer Vision, 2022, 130(11): 2623–2645 doi: 10.1007/s11263-022-01654-0

[29] Xu Y, Yin F, Wang D H, Zhang X Y, Zhang Z X, Liu C L. CASIA-AHCDB: A large-scale Chinese ancient handwritten characters database. In: Proceedings of the International Conference on Document Analysis and Recognition (ICDAR). Sydney, Australia: IEEE, 2019. 793–798

[30] Qu X W, Wang W Q, Lu K, Zhou J S. Data augmentation and directional feature maps extraction for in-air handwritten Chinese character recognition based on convolutional neural network. Pattern Recognition Letters, 2018, 111: 9–15 doi: 10.1016/j.patrec.2018.04.001

[31] Su T H, Pan W, Yu L J. HITHCD-2018: Handwritten Chinese character database of 21K-category. In: Proceedings of the International Conference on Document Analysis and Recognition (ICDAR). Sydney, Australia: IEEE, 2019. 1378–1383

[32] Luo C J, Zhu Y Z, Jin L W, Li Z, Peng D Z. SLOGAN: Handwriting style synthesis for arbitrary-length and out-of-vocabulary text. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(11): 8503–8515 doi: 10.1109/TNNLS.2022.3151477

[33] Wang P C, Xiong H, He H X. Bearing fault diagnosis under various conditions using an incremental learning-based multi-task shared classifier. Knowledge-Based Systems, 2023, 266: Article No. 110395 doi: 10.1016/j.knosys.2023.110395

[34] Geng S Y, Zhu Z N, Wang Z D, Dan Y P, Li H Y. LW-VIT: The lightweight vision transformer model applied in offline handwritten Chinese character recognition. Electronics, 2023, 12(7): Article No. 1693 doi: 10.3390/electronics12071693

[35] Yang H M, Zhang X Y, Yin F, Liu C L. Robust classification with convolutional prototype learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 3474–3482

[36] Zhang J S, Du J, Dai L R. Radical analysis network for learning hierarchies of Chinese characters. Pattern Recognition, 2020, 103: Article No. 107305 doi: 10.1016/j.patcog.2020.107305

[37] Luo G F, Yin H Y, Wang D H, Zhang X Y, Zhu S Z. Critical radical analysis network for Chinese character recognition. In: Proceedings of the 26th International Conference on Pattern Recognition (ICPR). Montreal, Canada: IEEE, 2022. 2878–2884
