-

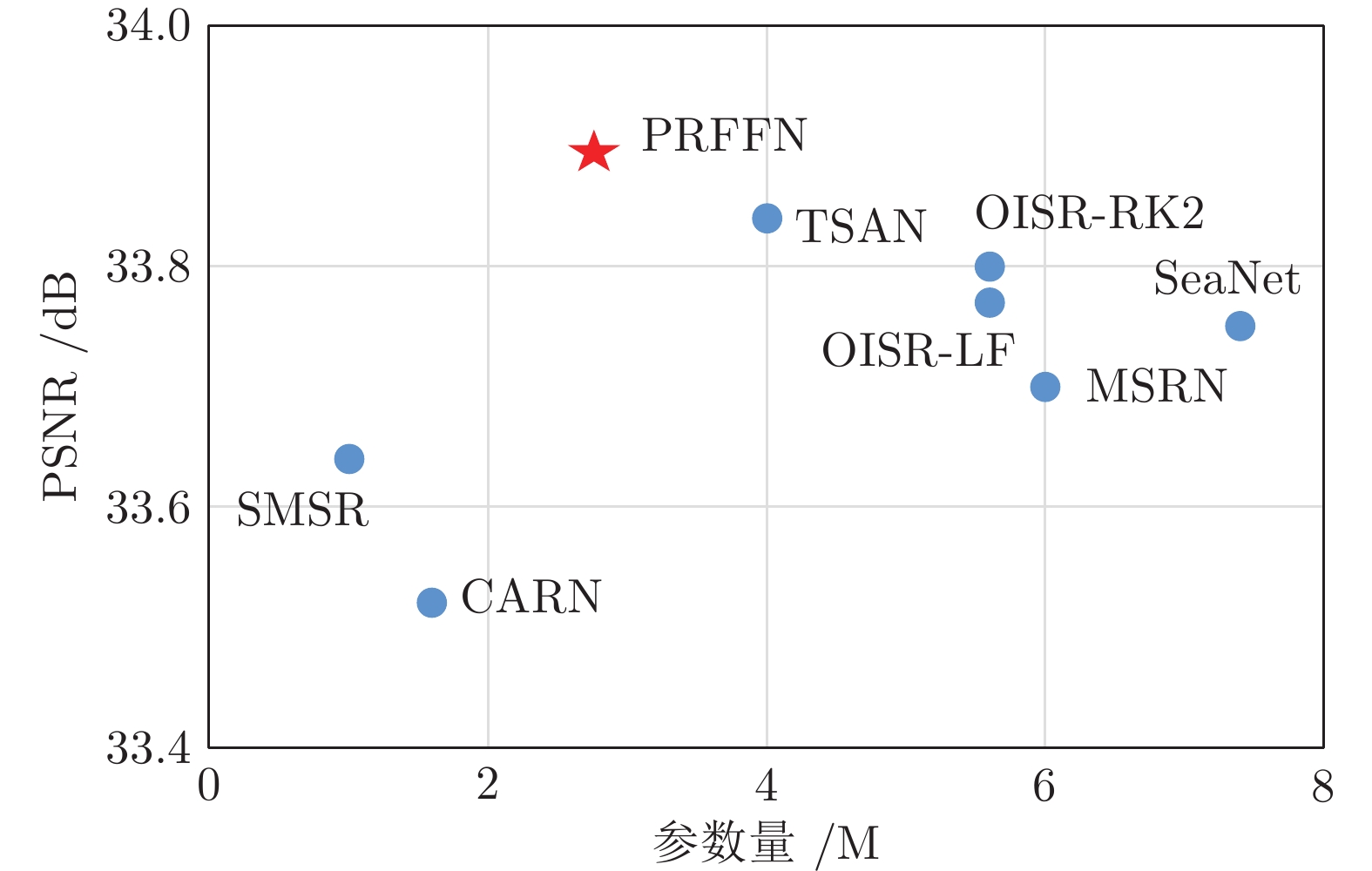

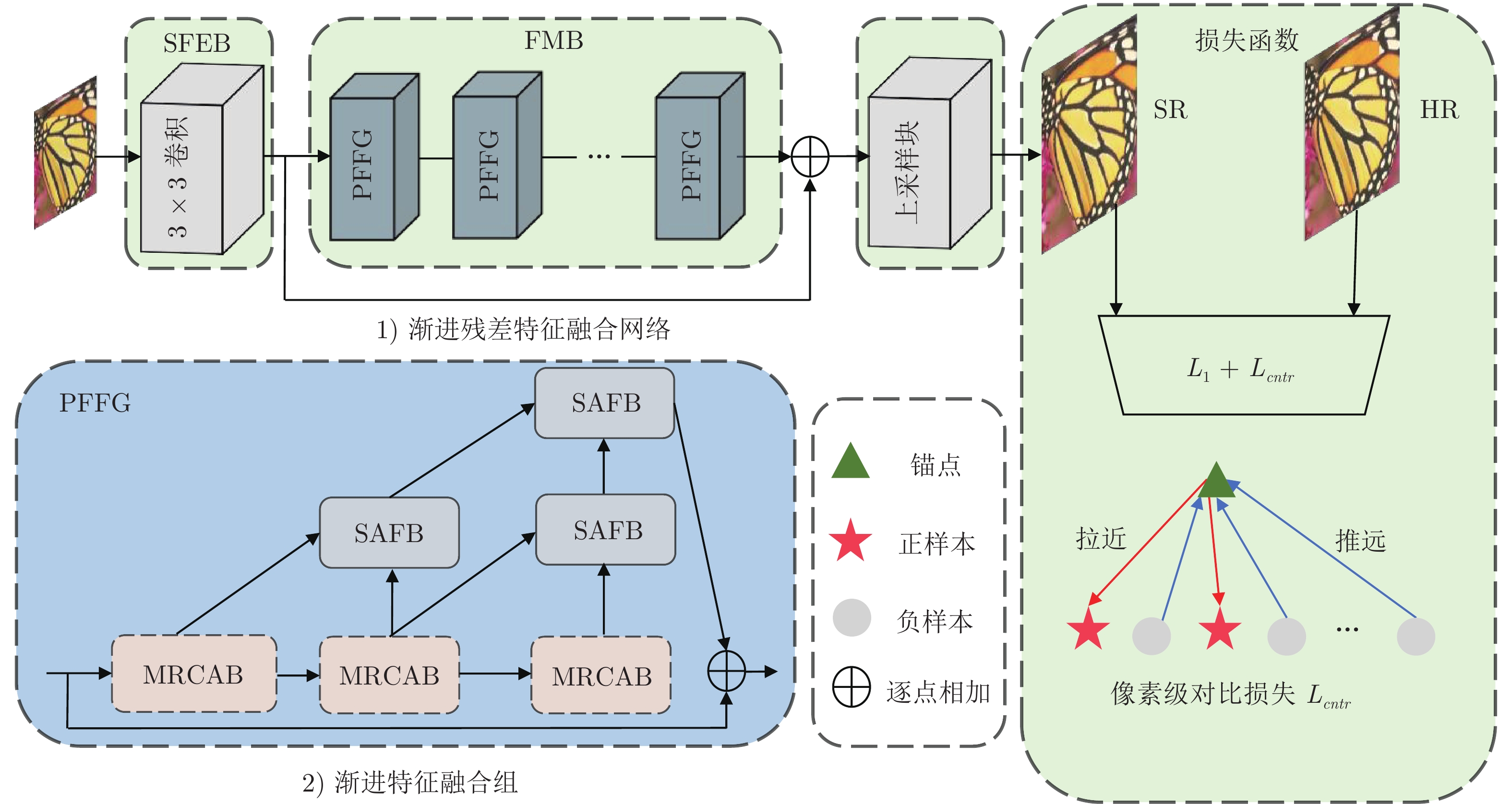

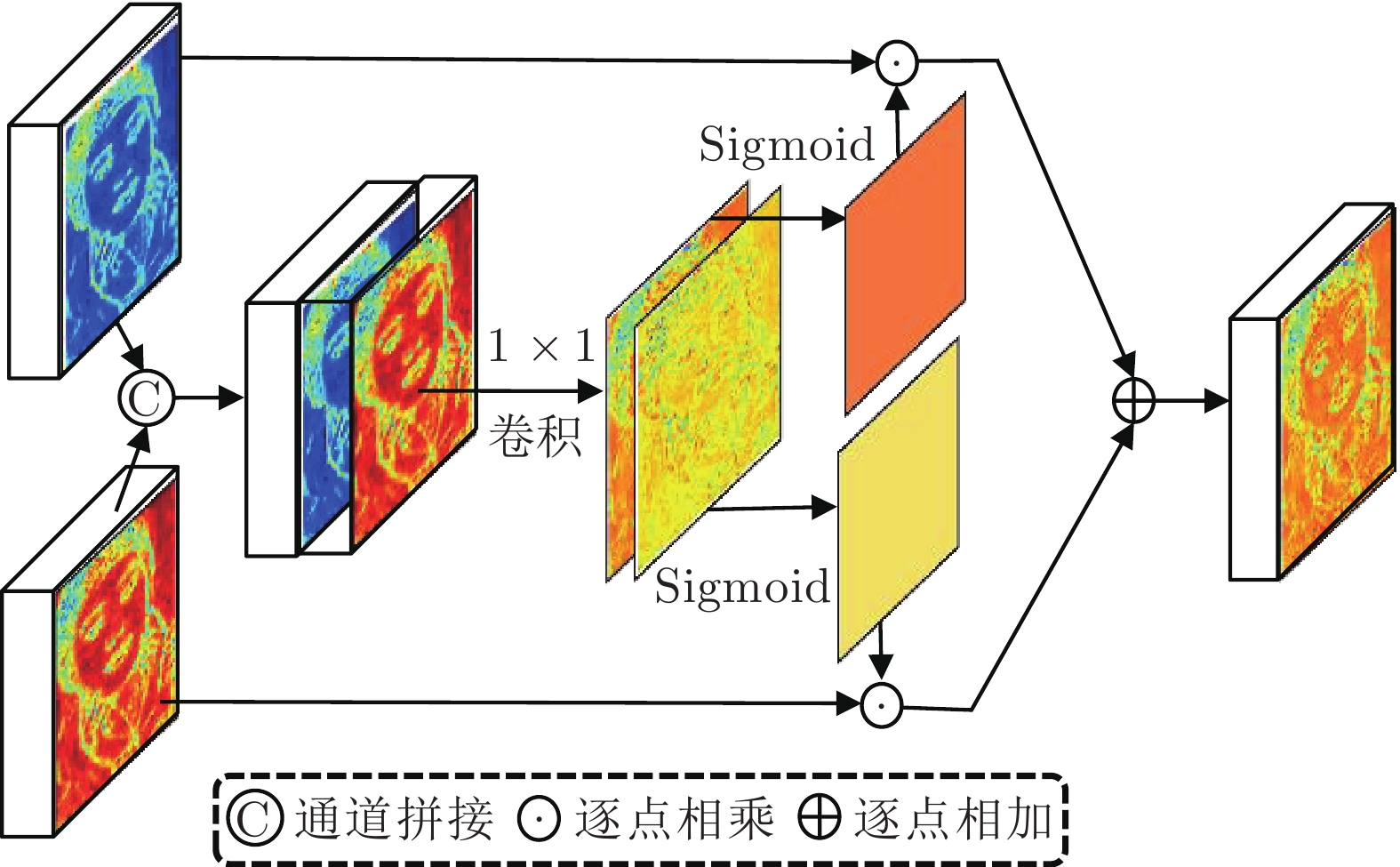

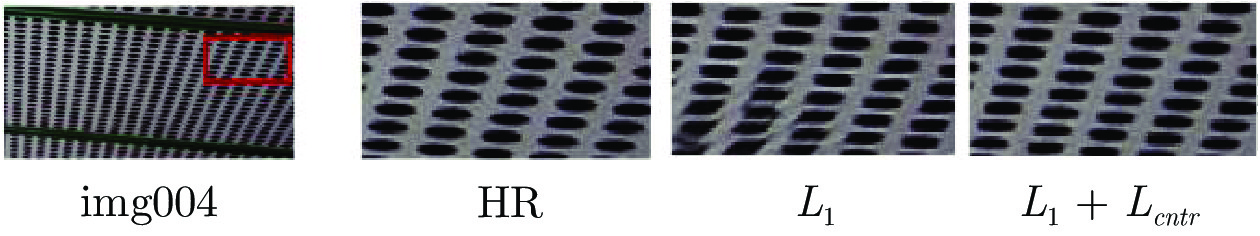

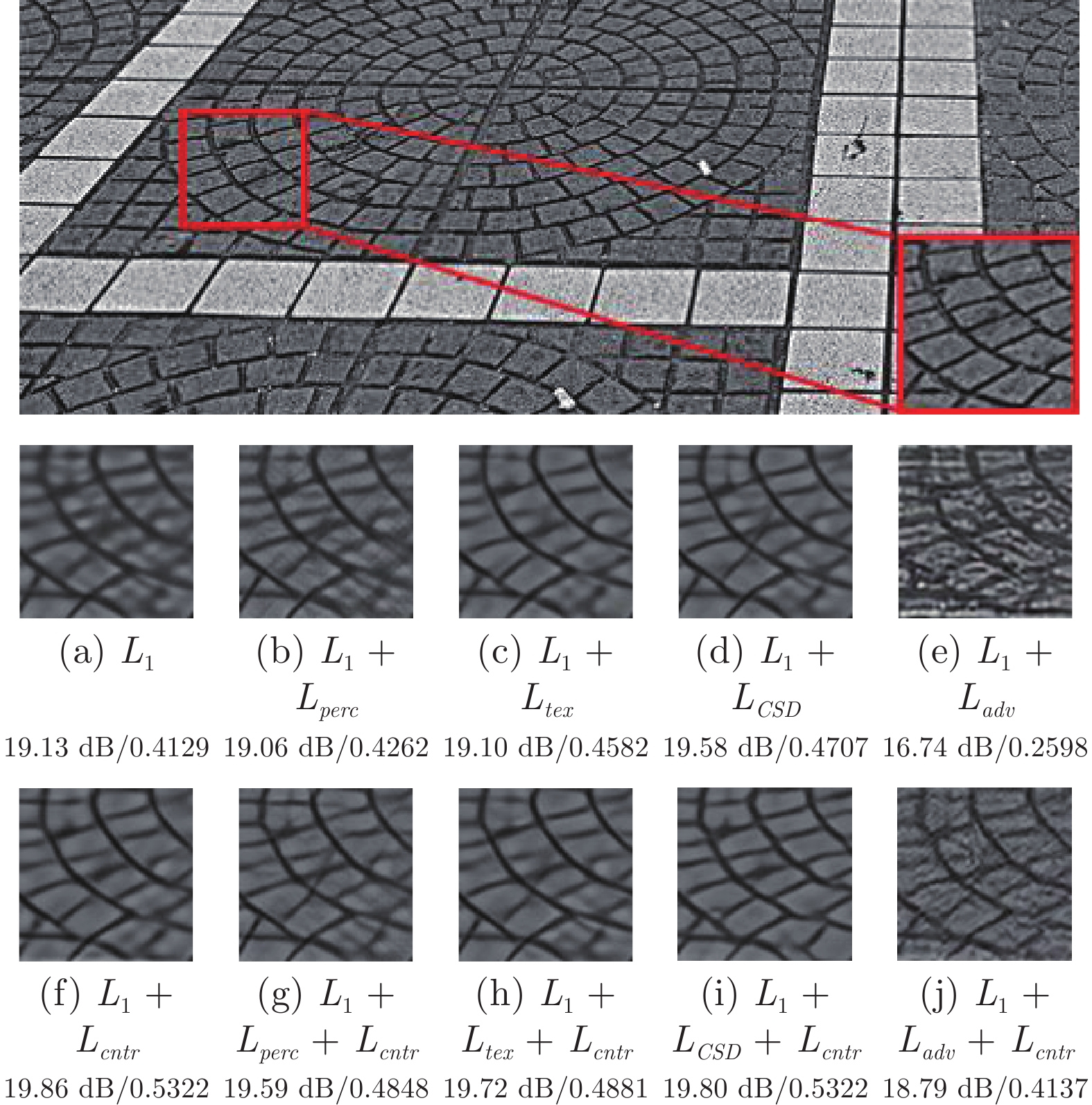

摘要: 目前, 深度卷积神经网络(Convolutional neural network, CNN)已主导了单图像超分辨率(Single image super-resolution, SISR)技术的研究, 并取得了很大进展. 但是, SISR仍是一个开放性问题, 重建的超分辨率(Super-resolution, SR)图像往往会出现模糊、纹理细节丢失和失真等问题. 提出一个新的逐像素对比损失, 在一个局部区域中, 使SR图像的像素尽可能靠近对应的原高分辨率(High-resolution, HR)图像的像素, 并远离局部区域中的其他像素, 可改进SR图像的保真度和视觉质量. 提出一个组合对比损失的渐进残差特征融合网络(Progressive residual feature fusion network, PRFFN). 主要贡献有: 1)提出一个通用的基于对比学习的逐像素损失函数, 能够改进SR图像的保真度和视觉质量; 2)提出一个轻量的多尺度残差通道注意力块(Multi-scale residual channel attention block, MRCAB), 可以更好地提取和利用多尺度特征信息; 3)提出一个空间注意力融合块(Spatial attention fuse block, SAFB), 可以更好地利用邻近空间特征的相关性. 实验结果表明, PRFFN显著优于其他代表性方法.Abstract: Deep convolutional neural network (CNN) has achieved great success in single image super-resolution (SISR). However, SISR is still an open issue, and reconstructed super-resolution (SR) images often suffer from blurring, loss of texture details and distortion. In this paper, a new pixel-wise contrastive loss is proposed to improve the fidelity and visual quality of SR images by making the pixels of SR images as close as possible to the corresponding pixels of the original high-resolution (HR) images and away from the other pixels in the local region. We also propose a progressive residual feature fusion network (PRFFN) with combined contrastive loss, and the main contributions include: 1) A general pixel-wise loss function based on contrastive learning is proposed, which can improve the fidelity and visual quality of SR images; 2) A lightweight multi-scale residual channel attention block (MRCAB) is proposed, which can better extract and utilize multi-scale feature information; 3) A spatial attention fusion block (SAFB) is proposed, which can better utilize the correlation of neighboring spatial features. The experimental results demonstrate that PRFFN significantly outperforms other representative methods.

-

表 1 DIV2K_val5验证集上, 不同模型, 3倍SR的平均PSNR和参数量

Table 1 The average PSNRs and parameter counts of 3 times SR for different models on the DIV2K_val5 validation data set

模型 $L_{1}$ $L_{cntr}$ 参数量(K) PSNR (dB) PRFFN0 $\checkmark$ — 2975 32.259 PRFFN1 $\checkmark$ — 3222 32.307 PRFFN2 $\checkmark$ — 3167 32.342 PRFFN3 $\checkmark$ — 3167 32.364 PRFFN $\checkmark$ $\checkmark$ 3167 32.451 表 2 DIV2K_val5验证集上, 不同损失函数及其组合, 3倍SR的平均PSNR和LPIPS结果

Table 2 The average PSNRs and LPIPSs of 3 times SR for different losses and their combinations on the DIV2K_val5 validation data set

$L_{1}$ $L_{perc}$ $L_{CSD}$ $L_{cntr}$ PSNR (dB) LPIPS $\checkmark$ — — — 32.364 0.0978 $\checkmark$ — — $\checkmark$ 32.451 0.0969 $\checkmark$ $\checkmark$ — — 32.236 0.0672 $\checkmark$ $\checkmark$ — $\checkmark$ 32.305 0.0656 $\checkmark$ — $\checkmark$ — 32.387 0.0624 $\checkmark$ — $\checkmark$ $\checkmark$ 32.432 0.0613 表 3 DIV2K_val5验证集上, 不同$ \lambda_{C} $, 3倍SR的平均PSNR结果

Table 3 The average PSNRs of 3 times SR for different $ \lambda_{C} $on the DIV2K_val5 validation data set

$\lambda_{C}$ PSNR (dB) $10^{-2}$ 32.386 $10^{-1}$ 32.451 $1$ 32.381 $10$ 32.372 表 4 DIV2K_val5验证集上, 不同模型包含与不包含$ L_{cntr} $损失, 3倍SR的平均PSNR和SSIM结果

Table 4 The average PSNRs and SSIMs of 3 times SR for different models with and without $ L_{cntr} $ loss on the DIV2K_val5 validation data set

模型 $L_{cntr}$ PSNR (dB) SSIM EDSR — 32.273 0.9057 $ \checkmark$ 32.334 ($\uparrow 0.061$) 0.9067 ($\uparrow 0.0010$) SwinIR-light — 32.442 0.9062 $ \checkmark$ 32.489 ($\uparrow 0.047$) 0.9069 ($\uparrow 0.0007$) RCAN — 32.564 0.9088 $ \checkmark$ 32.628 ($\uparrow 0.064$) 0.9096 ($\uparrow 0.0008$) 表 5 DIV2K_val5验证集上, 不同大小局部区域, 3倍SR的平均PSNR结果

Table 5 The average PSNRs of 3 times SR for different size local regions on the DIV2K_val5 validation data set

$S$ PSNR (dB) $16$ 32.425 $64$ 32.451 $256$ 32.458 表 6 3倍SR训练10个迭代周期, 训练占用的内存和使用的训练时间

Table 6 For 3 times SR, 10 epochs, comparing the memory and time used by training

$L_{cntr}$ $S$ 内存(MB) 时间(s) — — 4126 1962 $\checkmark$ 16 4836 2081 $\checkmark$ 64 5216 2219 $\checkmark$ 256 7541 2893 表 7 DIV2K_val5验证集上, MRCAB不同分支和不同扩张率组合, 3倍SR的平均PSNR结果

Table 7 The average PSNRs of 3 times SR for the different branches of MRCAB with different dilation rate combinations on the DIV2K_val5 validation data set

不同的扩张率卷积组合 PSNR (dB) $1$ 32.375 $1, 2$ 32.370 $1, 2, 3$ 32.392 $1, 2, 4$ 32.451 $1, 2, 5$ 32.415 表 8 5个标准测试数据集上, 不同SISR方法的2倍、3倍和4倍SR的平均PSNR和SSIM结果

Table 8 The average PSNRs and SSIMs of 2 times, 3 times, and 4 times SR for different SISR methods on five standard test data sets

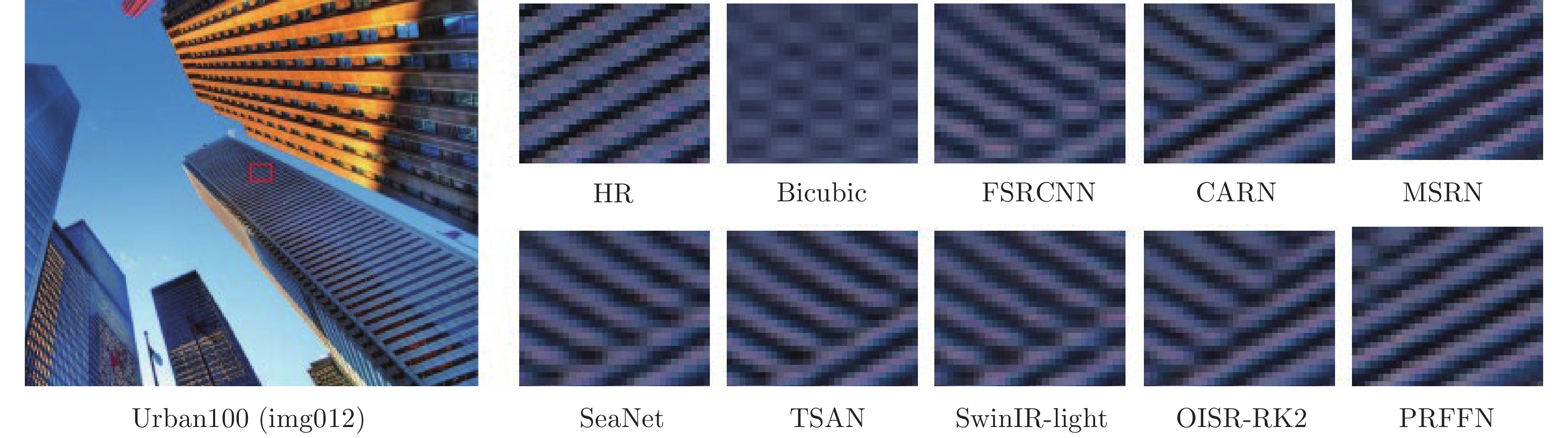

放大倍数 方法 参数量(K) 计算量(G) 推理时间(ms) Set5 PSNR/SSIM Set14 PSNR/SSIM B100 PSNR/SSIM Urban100 PSNR/SSIM Manga109 PSNR/SSIM 2 FSRCNN 13 6.0 26 37.00/0.9558 32.63/0.9088 31.53/0.8920 29.88/0.9020 36.67/0.9694 SMSR 985 224.1 536 38.00/0.9601 33.64/0.9179 32.17/0.8990 32.19/0.9284 38.76/0.9771 ACAN 800 2108.0 — 38.10/0.9608 33.60/0.9177 32.21/0.9001 32.29/0.9297 38.81/0.9773 AWSRN 1397 320.5 506 38.11/0.9608 33.78/0.9189 32.26/0.9006 32.49/0.9316 38.87/0.9776 DRCN 1774 17974.0 — 37.63/0.9588 33.04/0.9118 31.85/0.8942 30.75/0.9133 37.55/0.9732 CARN 1592 222.8 352 37.76/0.9590 33.52/0.9166 32.09/0.8978 31.92/0.9256 38.36/0.9765 OISR-RK2 4971 1145.7 715 38.12/0.9609 33.80/0.9193 32.26/0.9007 32.48/0.9317 38.79/0.9773 OISR-LF 4971 1145.7 722 38.12/0.9609 33.78/0.9196 32.26/0.9007 32.52/0.9320 38.80/0.9774 MSRN 6078 1356.8 810 38.08/0.9607 33.70/0.9186 32.23/0.9002 32.29/0.9303 38.69/0.9772 SeaNet 7471 3709.1 1920 38.08/0.9609 33.75/0.9190 32.27/0.9008 32.50/0.9318 38.76/0.9774 TSAN 3989 1013.1 1183 38.22/0.9619 33.84/0.9218 32.32/0.9015 32.77/0.9345 —/— PRFFN 2988 656.4 792 38.18/0.9611 33.90/0.9207 32.30/0.9012 32.75/0.9337 39.02/0.9777 3 FSRCNN 13 4.6 14 33.16/0.9140 29.43/0.8242 28.53/0.7910 26.43/0.8080 30.98/0.9212 SMSR 993 100.5 281 34.40/0.9270 30.33/0.8412 29.10/0.8050 28.25/0.8536 33.68/0.9445 ACAN 1115 1051.7 — 34.46/0.9277 30.39/0.8435 29.11/0.8065 28.28/0.8550 33.61/09447 AWSRN 1476 150.6 263 34.52/0.9281 30.38/0.8426 29.16/0.8069 28.42/0.8580 33.85/0.9463 DRCN 1774 17974.0 — 33.85/0.9215 29.89/0.8304 28.81/0.7954 27.16/0.8311 32.31/0.9328 CARN 1592 118.8 177 34.29/0.9255 30.29/0.8407 29.06/0.8034 27.38/0.8493 33.50/0.9440 OISR-RK2 5640 578.6 366 34.55/0.9282 30.46/0.8443 29.18/0.8075 28.50/0.8597 33.80/0.9442 OISR-LF 5640 578.6 367 34.56/0.9284 30.46/0.8450 29.20/0.8077 28.56/0.8606 33.78/0.9441 MSRN 6078 621.2 476 34.38/0.9262 30.34/0.8395 29.08/0.8041 28.08/0.8554 33.44/0.9427 SeaNet 7397 3233.2 994 34.55/0.9282 30.42/0.8444 29.17/0.8071 28.50/0.8594 33.73/0.9463 TSAN 4174 565.6 650 34.64/0.9282 30.52/0.8454 29.20/0.8080 28.55/0.8602 —/— PRFFN 3167 312.5 441 34.67/0.9288 30.54/0.8460 29.23/0.8084 28.65/0.8621 34.03/0.9473 4 FSRCNN 13 4.6 8 30.71/0.8657 27.59/0.7535 26.98/0.7150 24.62/0.7280 27.90/0.8517 SMSR 1006 57.2 192 32.12/0.8932 28.55/0.7808 27.55/0.7351 26.11/0.7868 30.54/0.9085 ACAN 1556 616.5 — 32.24/0.8955 28.62/0.7824 27.59/0.7379 26.31/0.7922 30.53/0.9086 AWSRN 1587 91.1 188 32.27/0.8960 28.69/0.7843 27.64/0.7385 26.29/0.7930 30.72/0.9109 DRCN 1774 17974.0 — 31.56/0.8810 28.15/0.7627 27.23/0.7150 25.14/0.7510 28.98/0.8816 CARN 1592 90.9 121 32.13/0.8937 28.60/0.7806 27.58/0.7349 26.07/0.7837 30.47/0.9084 OISR-RK2 5500 412.2 241 32.32/0.8965 28.72/0.7843 27.66/0.7390 26.37/0.7953 30.75/0.9082 OISR-LF 5500 412.2 239 32.33/0.8968 28.73/0.7845 27.66/0.7389 26.38/0.7953 30.76/0.9080 MSRN 6078 365.1 352 32.07/0.8903 28.60/0.7751 27.52/0.7273 26.04/0.7896 30.17/0.9034 SeaNet 7397 3065.6 704 32.33/0.8981 28.72/0.7855 27.65/0.7388 26.32/0.7942 30.74/0.9129 TSAN 4137 415.1 452 32.40/0.8975 28.73/0.7847 27.67/0.7398 26.39/0.7955 —/— PRFFN 3131 200.1 316 32.43/0.8983 28.75/0.7857 27.70/0.7398 26.55/0.7974 30.93/0.9133 -

[1] Freeman W T, Pasztor E C, Carmichael O T. Learning low-level vision. International Journal of Computer Vision, 2000, 40(1): 25–47 doi: 10.1023/A:1026501619075 [2] Thornton M W, Atkinson P M, Holland D A. Subpixel mapping of rural land cover objects from fine spatial resolution satellite sensor imagery using super-resolution pixel-swapping. International Journal of Remote Sensing, 2006, 27(3): 473–491 doi: 10.1080/01431160500207088 [3] Zou W, Yuen P C. Very low resolution face recognition problem. IEEE Transactions on Image Processing, 2011, 21(1): 327–340 [4] Shi W, Caballero J, Ledig C, Zhuang X, Bai W, Bhatia K, et al. Cardiac image super-resolution with global correspondence using multi-atlas patch-match. In: Proceedings of the International Conference on Medical Image Computing and Computer-assisted Intervention. Nagoya, Japan: 2013. 9–16 [5] Chang H, Yeung D Y, Xiong Y M. Super-resolution through neighbor embedding. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Washington DC, USA: IEEE, 2004. 1−8 [6] Zeyde R, Elad M, Protter M. On single image scale-up using sparse-representations. In: Proceedings of the International Conference on Curves and Surfaces. Avignon, France: 2010. 711–730 [7] Timofte R, De Smet V, Van Gool L. Anchored neighborhood regression for fast example-based super-resolution. In: Proceedings of the IEEE International Conference on Computer Vision. Sydney, Australia: 2013. 1920–1927 [8] Dong C, Loy C C, He K M, Tang X O. Image super-resolution using deep convolutional networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 38(2): 295–307 [9] Kim J, Lee J K, Lee K M. Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: 2016. 1646–1654 [10] Lim B, Son S, Kim H, Nah S, Lee K M. Enhanced deep residual networks for single image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Hawaii, USA: 2017. 136–144 [11] Zhang Y L, Li K P, Li K, Wang L C, Zhong B N, Fu Y. Image super-resolution using very deep residual channel attention networks. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: 2018. 286–301 [12] Tong T, Li G, Liu X J, Gao Q Q. Image super-resolution using dense skip connections. In: Proceedings of the IEEE International Conference on Computer Vision. Venice, Italy: 2017. 4799– 4807 [13] Zhang Y L, Li K P, Li K, Zhong B N, Fu Y. Residual non-local attention networks for image restoration. In: Proceedings of the International Conference on Learning Representations. New Orleans, USA: 2019. 1−18 [14] Fan Y C, Shi H H, Yu J H, Liu D, Han W, Yu H C, et al. Balanced two-stage residual networks for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Hawaii, USA: 2017. 161–168 [15] Johnson J, Alahi A, Li F F. Perceptual losses for real-time style transfer and super-resolution. In: Proceedings of the European Conference on Computer Vision. Amsterdam, Netherlands: 2016. 694–711 [16] Karen S, Andrew Z. Very deep convolutional networks for large-scale image recognition. In: Proceedings of the 3rd International Conference on Learning Representations, Conference Track Proceedings. San Diego, USA: 2015. 7−9 [17] Wang Y, Lin S, Qu Y, Wu H, Zhang Z, Xie Y, et al. Towards compact single image super-resolution via contrastive self-distillation. In: Proceedings of the 30th International Joint Conference on Artificial Intelligence. Montreal, Canada: 2021. 1122– 1128 [18] Wang Z, Bovik A C, Sheikh H R, Simoncelli E P. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 2004, 13(4): 600–612 doi: 10.1109/TIP.2003.819861 [19] Sajjadi M S, Scholkopf B, Hirsch M. EnhanceNet: Single image super-resolution through automated texture synthesis. In: Proceedings of the IEEE International Conference on Computer Vision. Venice, Italy: 2017. 4491–4500 [20] Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, et al. Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Hawaii, USA: 2017. 4681–4690 [21] Fisher Y, Vladlen K. Multi-scale context aggregation by dilated convolutions. In: Proceedings of the International Conference on Learning Representations. San Juan, Puerto Rico: 2016. 1−13 [22] Woo S, Park J, Lee J Y, Kweon I S. CBAM: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: 2018. 3–19 [23] Zhang Y L, Tian Y P, Kong Y, Zhong B N, Fu Y. Residual dense network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: 2018. 2472–2481 [24] Niu B, Wen W L, Ren W Q, Zhang X D, Yang L P, Wang S Z, et al. Single image super-resolution via a holistic attention network. In: Proceedings of the European Conference on Computer Vision. Glasgow, UK: 2020. 191–207 [25] Li J C, Fang F M, Mei K F, Zhang G X. Multi-scale residual network for image super-resolution. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: 2018. 517–532 [26] Liang J Y, Cao J Z, Sun G L, Zhang K, Van Gool L, Timofte R. SwinIR: Image restoration using swin transformer. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Montreal, Canada: 2021. 1833–1844 [27] Liu Z, Lin Y T, Cao Y, Hu H, Wei Y X, Zhang Z, et al. Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Montreal, Canada: 2021. 10012–10022 [28] Gao D D, Zhou D W. A very lightweight and efficient image super-resolution network. Expert Systems with Applications, 2023, 213: Article No.118898 doi: 10.1016/j.eswa.2022.118898 [29] He K M, Fan H Q, Wu Y X, Xie S N, Girshick R. Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: 2020. 9729–9738 [30] Sermanet P, Lynch C, Chebotar Y, Hsu J, Jang E, Schaal S, et al. Time-contrastive networks: Self-supervised learning from video. In: Proceedings of the IEEE International Conference on Robotics and Automation. Queensland, Australia: IEEE, 2018. 1134–1141 [31] Wu G, Jiang J J, Liu X M, Ma J Y. A practical contrastive learning framework for single image super-resolution. arXiv preprint arXiv: 2111.13924, 2021. [32] Wu H Y, Qu Y Y, Lin S H, Zhou J, Qiao R Z, Zhang Z Z, et al. Contrastive learning for compact single image dehazing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Virtual Event: 2021. 10551–10560 [33] Wang L G, Wang Y Q, Dong X Y, Xu Q Y, Yang J G, An W, et al. Unsupervised degradation representation learning for blind super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Virtual Event: 2021. 10581–10590 [34] Shi W Z, Caballero J, Huszár F, Totz J, Aitken A P, Bishop B, et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: 2016. 1874–1883 [35] Liu J, Zhang W J, Tang Y, Tang J, Wu G S. Residual feature aggregation network for image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: 2020. 2359–2368 [36] Chen T, Kornblith S, Norouzi M, Hinton G. A simple framework for contrastive learning of visual representations. In: Proceedings of the International Conference on Machine Learning. Virtual Event: 2020. 1597–1607 [37] Xie Z D, Lin Y T, Zhang Z, Cao Y, Lin S, Hu H. Propagate yourself: Exploring pixel-level consistency for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Virtual Event: 2021. 16684–16693 [38] Oord A V D, Li Y Z, Vinyals O. Representation learning with contrastive predictive coding. arXiv preprint arXiv: 1807.03748, 2018. [39] Timofte R, Agustsson E, Van Gool L, Yang M H, Zhang L. Ntire 2017 challenge on single image super-resolution: Methods and results. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Hawaii, USA: 2017. 114–125 [40] Bevilacqua M, Roumy A, Guillemot C, Alberi-Morel M. Low-complexity single-image super-resolution based on non-negative neighbor embedding. In: Proceedings of the British Machine Vision Conference. Surrey, UK: 2012. 1–10 [41] Martin D, Fowlkes C, Tal D, Malik J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings of the 8th IEEE International Conference on Computer Vision. Vancouver, Canada: IEEE, 2001. 416–423 [42] Huang J B, Singh A, Ahuja N. Single image super-resolution from transformed self-exemplars. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: 2015. 5197–5206 [43] Yusuke M, Ito K, Aramaki Y, Fujimoto A, Ogawa T, Yamasaki T, et al. Sketch-based manga retrieval using manga109 dataset. Multimedia Tools and Applications, 2017, 76(20): 21811–21838 doi: 10.1007/s11042-016-4020-z [44] Zhang R, Isola P, Efros A A, Shechtman E, Wang O. The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: 2018. 586− 595 [45] Dong C, Loy C C, Tang X O. Accelerating the super-resolution convolutional neural network. In: Proceedings of the European Conference on Computer Vision. Amsterdam, Netherlands: 2016. 391–407 [46] Wang L G, Dong X Y, Wang Y Q, Ying X Y, Lin Z P, An W, et al. Exploring sparsity in image super-resolution for efficient inference. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Virtual Event: 2021. 4917–4926 [47] Zhou D W, Chen Y M, Li W B, Li J X. Image super-resolution based on adaptive cascading attention network. Expert Systems with Applications, 2021, 186: Article No.115815 doi: 10.1016/j.eswa.2021.115815 [48] Li Z, Wang C F, Wang J, Ying S H, Shi J. Lightweight adaptive weighted network for single image super-resolution. Computer Vision and Image Understanding, 2021, 211: 103-254 [49] Kim J, Lee J K, Lee K M. Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: 2016. 1637–1645 [50] Ahn N, Kang B, Sohn K A. Fast, accurate, lightweight super-resolution with cascading residual network. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: 2018. 252–268 [51] He X Y, Mo Z T, Wang P S, Liu Y, Yang M Y, Cheng J. Ode-inspired network design for single image super-resolution. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: 2019. 1732–1741 [52] Fang F M, Li J C, Zeng T Y. Soft-edge assisted network for single image super-resolution. IEEE Transactions on Image Processing, 2020, 29: 4656–4668 doi: 10.1109/TIP.2020.2973769 [53] Zhang J Q, Long C J, Wang Y X, Piao H Y, Mei H Y, Yang X, et al. A two-stage attentive network for single image super-resolution. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(3): 1020–1033 doi: 10.1109/TCSVT.2021.3071191 -

下载:

下载: