
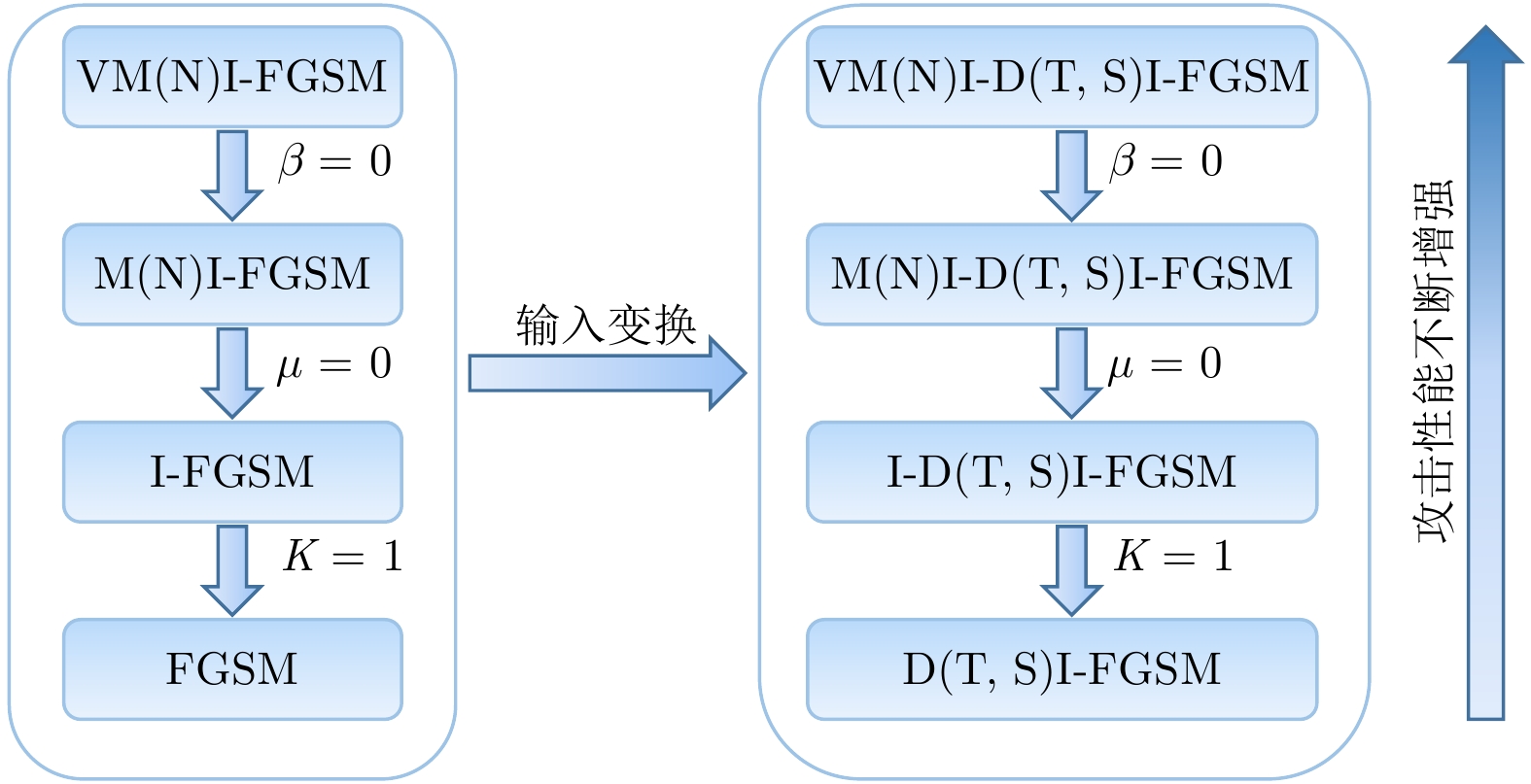

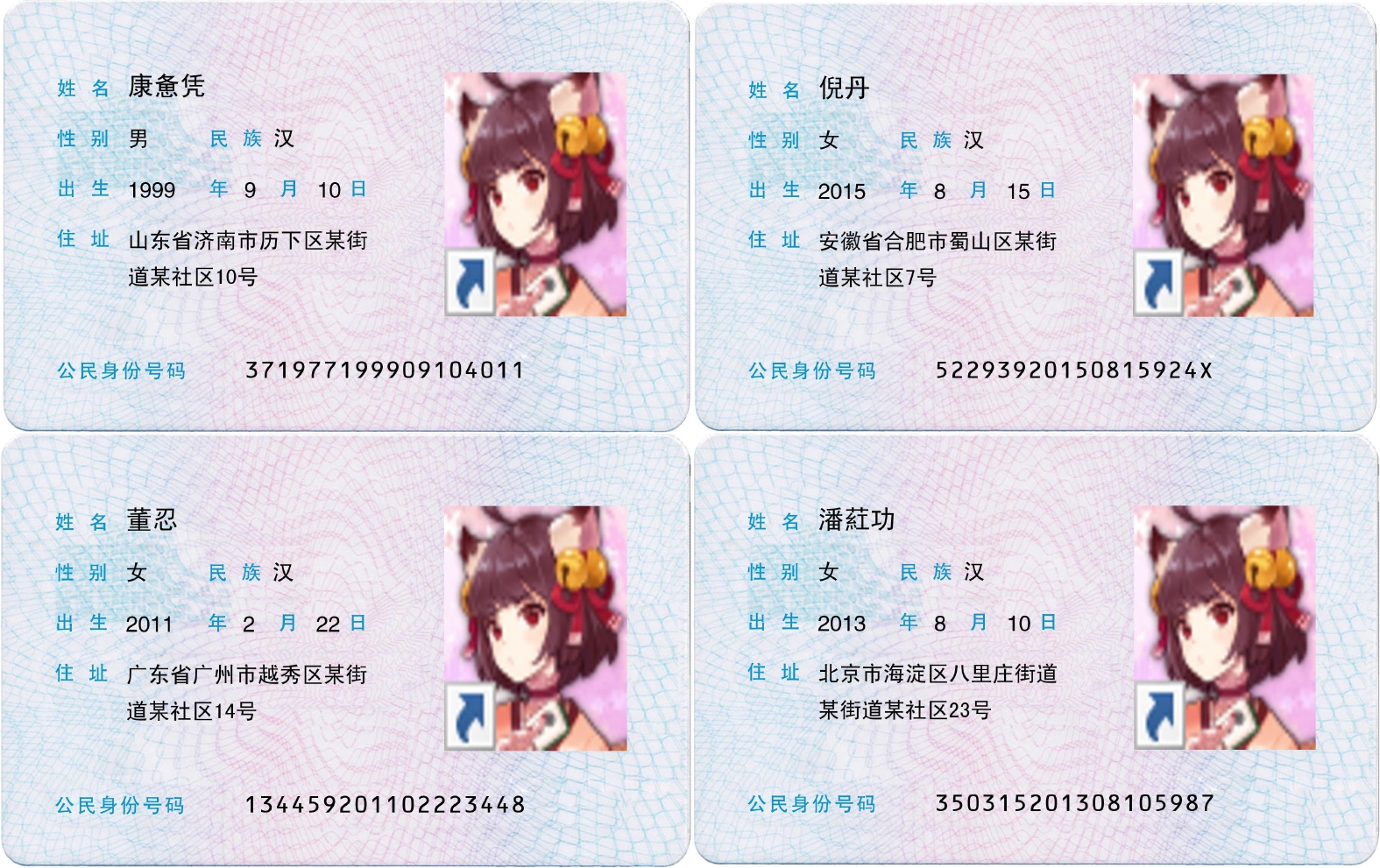

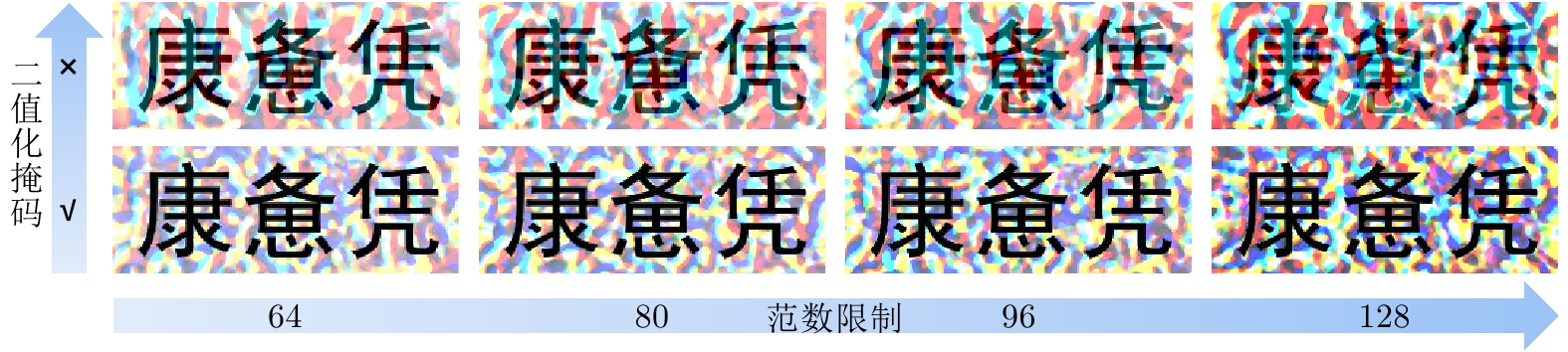

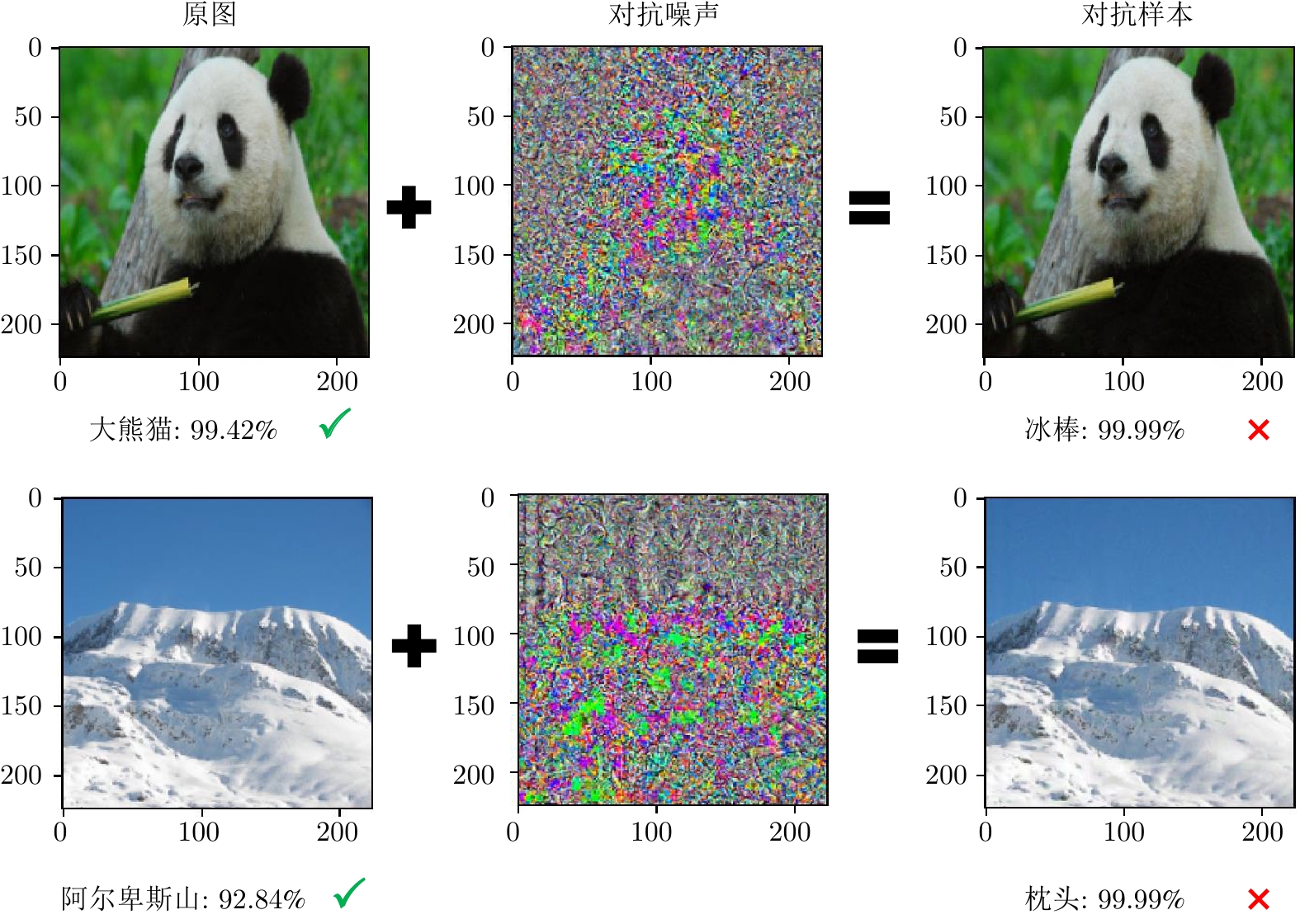

Abstract: ID card authentication scenarios commonly rely on text recognition models to extract and recognize the fields in ID card images and to verify identity, which poses a serious risk of privacy leakage. Moreover, most existing adversarial attack algorithms against text recognition models consider only data with simple backgrounds (such as printed text) and white-box conditions. They rarely achieve the desired attack effect in the physical world and are ill-suited to complex backgrounds, complex data, and black-box conditions. To alleviate these problems, this paper proposes a black-box attack algorithm against ID card text recognition models that accounts for complex image backgrounds, stricter black-box conditions, and attack performance in the physical world. Building on transfer-based black-box attacks, the algorithm introduces a binarization mask and spatial transformation, which improve the visual quality of the adversarial examples and their robustness in the physical world while maintaining the attack success rate. By exploring the performance upper bound of transfer-based black-box attacks under different norm constraints and the influence of key hyperparameters, the proposed algorithm achieves a 100% attack success rate against the Baidu ID card recognition model. The ID card dataset will be made publicly available.
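The abstract names spatial transformation as the component that improves physical-world robustness but does not detail it here. A common way to realize this idea is Expectation over Transformation (EOT, Athalye et al. [44]): the gradient of the white-box surrogate model is averaged over randomly transformed copies of the image, so the perturbation that is later transferred to the black-box target does not depend on one exact pixel alignment. The NumPy sketch below assumes that the transformation family is random integer translation (`np.roll`) and that `grad_fn` returns the gradient of a differentiable surrogate loss; the helper name `eot_gradient` and these choices are illustrative, not the authors' implementation.

```python
import numpy as np


def eot_gradient(x, grad_fn, n_samples=8, max_shift=2, rng=None):
    """Average surrogate gradients over random translations (EOT-style sketch).

    x         : 2-D image array
    grad_fn   : callable returning dL/dz at an input z of the same shape (assumed)
    max_shift : largest translation, in pixels, along each axis
    """
    rng = np.random.default_rng() if rng is None else rng
    total = np.zeros(x.shape, dtype=np.float64)
    for _ in range(n_samples):
        dy, dx = (int(s) for s in rng.integers(-max_shift, max_shift + 1, size=2))
        shifted = np.roll(x, (dy, dx), axis=(0, 1))
        # Chain rule for T(x) = roll(x, s): dL(T(x))/dx = roll(grad_L(T(x)), -s)
        total += np.roll(grad_fn(shifted), (-dy, -dx), axis=(0, 1))
    return total / n_samples


# Toy linear surrogate L(z) = sum(w * z), whose gradient is w for every z.
w = np.arange(16, dtype=np.float64).reshape(4, 4)
x = np.zeros((4, 4))
g = eot_gradient(x, lambda z: w, n_samples=4, rng=np.random.default_rng(0))
print(g.shape)  # (4, 4)
```

In an iterative attack, this averaged gradient would simply replace the single surrogate gradient before the momentum and sign steps. Print-and-capture pipelines usually also sample rotation, scaling, blur, and brightness changes, which require an autodiff framework to back-propagate through.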


Key words:

- Adversarial examples

- black-box attack

- ID card text recognition

- physical world

- binarization mask


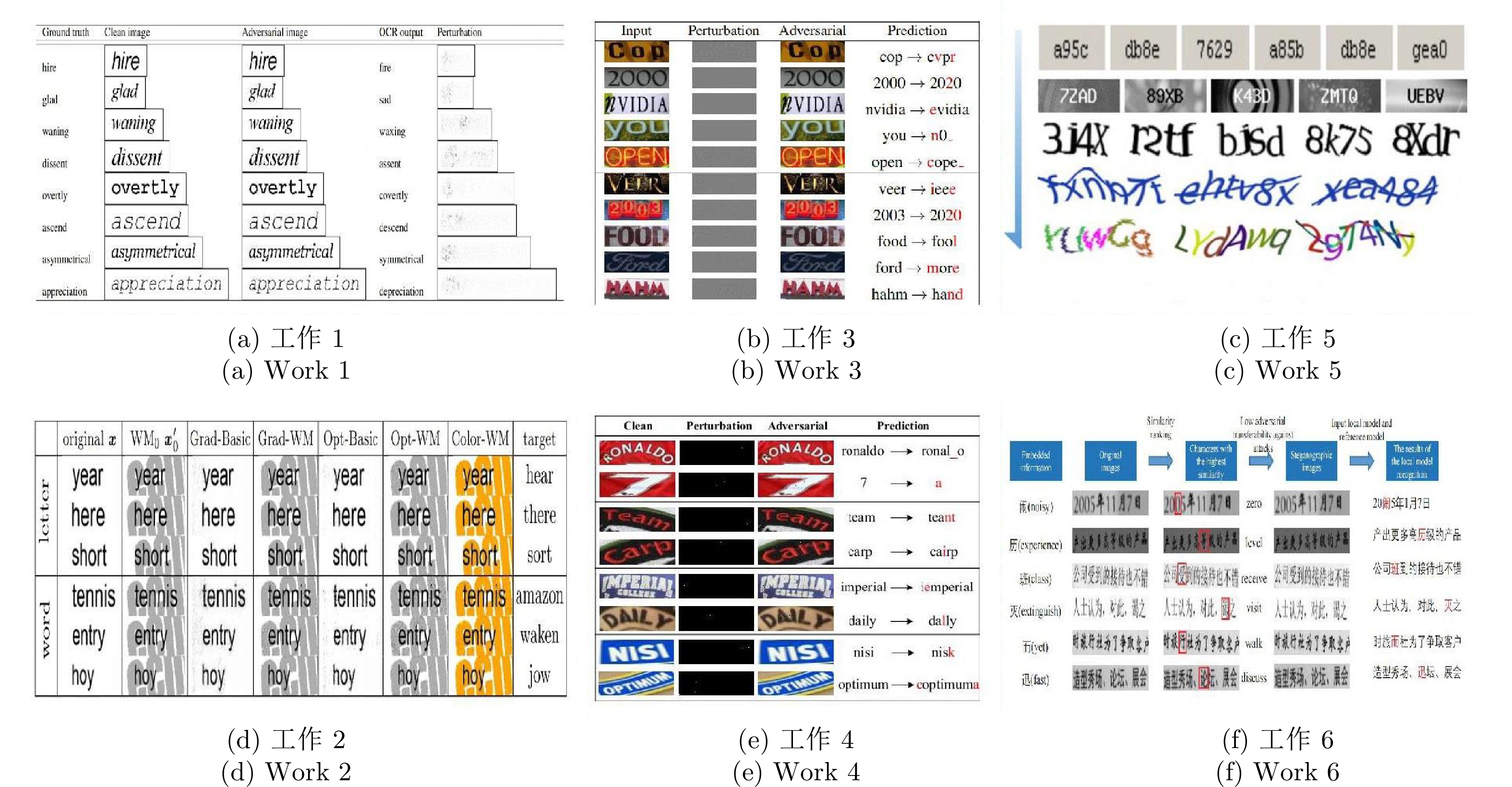

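Table 1 Comparison of this work with existing work

| Work | Attack condition | Image data | Attack method | Commercial model | Physical world |
|---|---|---|---|---|---|
| 1 | White-box | White background | Optimization-based | $\times$ | $\times$ |
| 2 | White-box | White background | Gradient-based + watermark | $\times$ | $\times$ |
| 3 | White-box | Color background | Gradient-based + optimization | $\checkmark$ | $\times$ |
| 4 | Black-box | Color background | Query-based | $\checkmark$ | $\times$ |
| 5 | White-box | White background | Ensemble of multiple methods | $\times$ | $\times$ |
| 6 | White-box | Gray background | Gradient-based | $\times$ | $\times$ |
| 7 | White-box | Color background | Optimization-based | $\times$ | $\times$ |
| 8 | White-box | Gray background | Generation-based | $\times$ | $\times$ |
| 9 | White-box | Gray background | Optimization-based | $\checkmark$ | $\times$ |
| This work | Black-box | Color background | Transfer-based + binarization mask | $\checkmark$ | $\checkmark$ |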

Table 2 Generation standard for text images of the key ID card fields

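| Category | Dictionary size | Field | Length | Frequency | Training set | Test set |
|---|---|---|---|---|---|---|
| Characters | 6270 | Name | [2, 4] | 50 | 110875 | 11088 |
| Characters | 6270 | Address | [1, 11] | 150 | 156750 | 15675 |
| Digits | 11 | Date of birth | [1, 4] | 5000 | 20000 | 2000 |
| Digits | 11 | ID number | 18 | 18000 | 10000 | 1000 |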
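Table 2 fixes, for each key field, the dictionary it draws from and the admissible label length. A minimal sketch of sampling label strings under those constraints follows. It assumes uniform sampling, uses a tiny hypothetical character set in place of the 6270-character dictionary, and assumes the 11-symbol digit dictionary is `0` to `9` plus `X`. The real ID number format (region code, date of birth, check character), the Frequency column, and rendering onto ID card backgrounds are not modeled.

```python
import random

# Hypothetical stand-in for the 6270-character Chinese dictionary.
CHAR_DICT = list("张王李赵刘陈杨黄北京市海淀区中关村路号")
# Assumed 11-symbol digit dictionary: ten digits plus the check character "X".
DIGIT_DICT = list("0123456789X")

# field -> (dictionary, min length, max length); lengths taken from Table 2
FIELD_SPECS = {
    "name": (CHAR_DICT, 2, 4),
    "address": (CHAR_DICT, 1, 11),
    "birth_date": (DIGIT_DICT[:10], 1, 4),  # assumption: no "X" in dates
    "id_number": (DIGIT_DICT, 18, 18),
}


def sample_label(field, rng):
    """Draw one label string for `field` uniformly at random (assumption)."""
    chars, min_len, max_len = FIELD_SPECS[field]
    length = rng.randint(min_len, max_len)  # both bounds inclusive
    return "".join(rng.choice(chars) for _ in range(length))


rng = random.Random(0)
labels = {field: sample_label(field, rng) for field in FIELD_SPECS}
print(labels)
```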

Table 3 White-box attack results against the surrogate model res34 in the first set of experiments

| Metric | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | TMI-FGSM | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | SI-NI-TMI | 88.24 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 98.32 |
| | DMI-FGSM | 96.47 | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 99.33 |
| | SI-NI-DMI | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | DI-TIM | 90.59 | 92.94 | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 97.48 |
| | VNI-SI-DI-TIM | 92.94 | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 98.82 |
| Average edit distance | MI-FGSM | 0.4612 | 0.6120 | 0.8203 | 0.9382 | 0.9705 | 0.9910 | 0.9939 | 0.83 |
| | TMI-FGSM | 0.4328 | 0.6399 | 0.8345 | 0.9190 | 0.9519 | 0.9169 | 0.9903 | 0.81 |
| | SI-NI-TMI | 0.3156 | 0.4200 | 0.5679 | 0.6580 | 0.7536 | 0.8083 | 0.8454 | 0.62 |
| | DMI-FGSM | 0.4148 | 0.5757 | 0.7379 | 0.8907 | 0.9375 | 0.9693 | 0.9869 | 0.79 |
| | SI-NI-DMI | 0.5038 | 0.6676 | 0.7998 | 0.8889 | 0.9716 | 0.9764 | 0.9887 | 0.83 |
| | DI-TIM | 0.3279 | 0.4115 | 0.5432 | 0.6689 | 0.7093 | 0.7685 | 0.8007 | 0.60 |
| | VNI-SI-DI-TIM | 0.4046 | 0.5194 | 0.6116 | 0.6876 | 0.7506 | 0.7730 | 0.8378 | 0.65 |
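Tables 3 to 8 report two metrics per norm constraint plus their mean. Their exact definitions are not given in this section, so the sketch below makes two labeled assumptions: an attack counts as successful when the recognized string differs from the ground-truth label, and the edit distance is the Levenshtein distance divided by the label length. Unlike dividing by the longer string, this normalization can exceed 1, as the 1.0099 entry in Table 8 does. The final lines check that the Mean column is the arithmetic mean over the norm constraints, using the SI-NI-TMI success-rate row of Table 3. The example strings are synthetic.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = cur
    return prev[-1]


def attack_metrics(preds, labels):
    """Return (attack success rate in %, average normalized edit distance)."""
    n = len(labels)
    successes = sum(p != t for p, t in zip(preds, labels))
    # Assumed normalization: divide by the ground-truth label length.
    ned = sum(levenshtein(p, t) / max(len(t), 1) for p, t in zip(preds, labels))
    return 100.0 * successes / n, ned / n


labels = ["张三", "123456789012345678"]
preds = ["张三", "123456789012345670"]   # second field altered by one character
asr, aed = attack_metrics(preds, labels)
print(asr, round(aed, 4))  # 50.0 0.0278

# Mean column: arithmetic mean over the seven norm constraints (Table 3, SI-NI-TMI).
row = [88.24, 100.00, 100.00, 100.00, 100.00, 100.00, 100.00]
print(round(sum(row) / len(row), 2))  # 98.32
```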

Table 4 White-box attack results against the surrogate model mbv3 in the second set of experiments

| Metric | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | TMI-FGSM | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | SI-NI-TMI | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 99.83 |
| | DMI-FGSM | 95.29 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 99.33 |
| | SI-NI-DMI | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | DI-TIM | 90.59 | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 98.49 |
| | VNI-SI-DI-TIM | 90.59 | 95.29 | 98.82 | 100.00 | 100.00 | 100.00 | 100.00 | 97.81 |
| Average edit distance | MI-FGSM | 0.6540 | 0.6911 | 0.8557 | 0.9594 | 0.9895 | 0.9945 | 0.9975 | 0.88 |
| | TMI-FGSM | 0.6683 | 0.6930 | 0.8901 | 0.9652 | 0.9982 | 0.9991 | 0.9996 | 0.89 |
| | SI-NI-TMI | 0.5756 | 0.6348 | 0.7950 | 0.9263 | 0.9876 | 0.9927 | 0.9956 | 0.84 |
| | DMI-FGSM | 0.5227 | 0.6420 | 0.7592 | 0.9395 | 0.9701 | 0.9813 | 0.9859 | 0.83 |
| | SI-NI-DMI | 0.6478 | 0.7881 | 0.8962 | 0.9833 | 0.9941 | 0.9965 | 0.9990 | 0.90 |
| | DI-TIM | 0.5294 | 0.6257 | 0.7360 | 0.8430 | 0.8756 | 0.9136 | 0.9507 | 0.78 |
| | VNI-SI-DI-TIM | 0.4619 | 0.5740 | 0.6240 | 0.7392 | 0.8217 | 0.8887 | 0.9176 | 0.72 |
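DMI-FGSM, SI-NI-DMI and the DI component of VNI-SI-DI-TIM rely on input diversity (Xie et al. [27]): before each surrogate forward pass the image is, with probability `p`, randomly resized and randomly padded. The NumPy sketch below shows only this transformation, assuming nearest-neighbour resizing, zero padding, and a 2-D grayscale input. In an actual attack it would be written in an autodiff framework so that the surrogate gradient flows back through the resize and pad. `input_diversity`, `max_h` and `max_w` are hypothetical names.

```python
import numpy as np


def input_diversity(x, max_h, max_w, p=0.5, rng=None):
    """Randomly enlarge a 2-D image (nearest neighbour) and zero-pad it to (max_h, max_w).

    With probability 1 - p the input is returned unchanged, as in the DI method.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = x.shape
    if max_h < h or max_w < w:
        raise ValueError("output size must be at least the input size")
    if rng.random() >= p:
        return x
    new_h = int(rng.integers(h, max_h + 1))
    new_w = int(rng.integers(w, max_w + 1))
    # Nearest-neighbour resize through integer index maps.
    rows = np.arange(new_h) * h // new_h
    cols = np.arange(new_w) * w // new_w
    resized = x[rows[:, None], cols[None, :]]
    # Place the resized image at a random offset inside a zero canvas.
    top = int(rng.integers(0, max_h - new_h + 1))
    left = int(rng.integers(0, max_w - new_w + 1))
    out = np.zeros((max_h, max_w), dtype=x.dtype)
    out[top:top + new_h, left:left + new_w] = resized
    return out


x = np.ones((32, 100))   # toy text-line image
y = input_diversity(x, 36, 110, p=1.0, rng=np.random.default_rng(0))
print(y.shape)  # (36, 110)
```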

Table 5 Black-box attack results against the black-box model mbv3 in the first set of experiments

| Metric | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 7.06 | 7.06 | 18.82 | 41.18 | 62.35 | 87.06 | 90.59 | 44.87 |
| | TMI-FGSM | 7.06 | 7.06 | 12.94 | 35.29 | 47.06 | 71.76 | 90.59 | 38.82 |
| | SI-NI-TMI | 7.06 | 7.06 | 17.65 | 36.47 | 67.06 | 84.71 | 89.41 | 44.20 |
| | DMI-FGSM | 7.06 | 7.06 | 12.94 | 49.41 | 88.24 | 94.12 | 98.82 | 51.09 |
| | SI-NI-DMI | 7.06 | 9.41 | 16.47 | 63.53 | 87.06 | 95.29 | 100.00 | 54.12 |
| | DI-TIM | 7.06 | 9.41 | 21.18 | 37.65 | 64.71 | 88.24 | 89.41 | 45.38 |
| | VNI-SI-DI-TIM | 7.06 | 9.41 | 16.47 | 75.29 | 92.94 | 96.67 | 100.00 | 56.83 |
| Average edit distance | MI-FGSM | 0.0316 | 0.0316 | 0.0446 | 0.1386 | 0.2062 | 0.3148 | 0.4156 | 0.17 |
| | TMI-FGSM | 0.0316 | 0.0316 | 0.0398 | 0.1152 | 0.1535 | 0.2815 | 0.3921 | 0.15 |
| | SI-NI-TMI | 0.0316 | 0.0316 | 0.0526 | 0.1161 | 0.2330 | 0.3153 | 0.4265 | 0.17 |
| | DMI-FGSM | 0.0316 | 0.0316 | 0.0631 | 0.1545 | 0.3620 | 0.4191 | 0.5942 | 0.24 |
| | SI-NI-DMI | 0.0316 | 0.0402 | 0.0616 | 0.2252 | 0.3862 | 0.4943 | 0.5695 | 0.26 |
| | DI-TIM | 0.0316 | 0.0350 | 0.0577 | 0.1390 | 0.2179 | 0.3289 | 0.4331 | 0.18 |
| | VNI-SI-DI-TIM | 0.0316 | 0.0378 | 0.0519 | 0.2609 | 0.4450 | 0.5263 | 0.6202 | 0.28 |

Table 6 Black-box attack results against the black-box model res34 in the second set of experiments

| Metric | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 7.06 | 7.06 | 27.06 | 58.82 | 89.41 | 91.76 | 98.82 | 54.28 |
| | TMI-FGSM | 7.06 | 9.41 | 21.18 | 55.29 | 83.53 | 89.41 | 95.29 | 51.60 |
| | SI-NI-TMI | 7.06 | 9.41 | 23.53 | 62.35 | 88.24 | 89.41 | 97.65 | 53.95 |
| | DMI-FGSM | 7.06 | 8.24 | 30.59 | 83.24 | 91.29 | 95.46 | 100.00 | 59.41 |
| | SI-NI-DMI | 5.88 | 7.06 | 36.47 | 87.06 | 94.12 | 100.00 | 100.00 | 61.51 |
| | DI-TIM | 7.06 | 9.41 | 23.53 | 62.35 | 88.24 | 90.59 | 100.00 | 54.45 |
| | VNI-SI-DI-TIM | 7.06 | 4.71 | 40.00 | 89.41 | 97.65 | 100.00 | 100.00 | 62.69 |
| Average edit distance | MI-FGSM | 0.0182 | 0.0201 | 0.0810 | 0.2821 | 0.4629 | 0.5447 | 0.6466 | 0.29 |
| | TMI-FGSM | 0.0182 | 0.0210 | 0.0726 | 0.2441 | 0.4271 | 0.4797 | 0.5173 | 0.25 |
| | SI-NI-TMI | 0.0182 | 0.0254 | 0.0654 | 0.2833 | 0.4435 | 0.5106 | 0.5690 | 0.27 |
| | DMI-FGSM | 0.0182 | 0.0192 | 0.0815 | 0.4807 | 0.6509 | 0.7377 | 0.8433 | 0.40 |
| | SI-NI-DMI | 0.0173 | 0.0185 | 0.1024 | 0.4961 | 0.6931 | 0.7505 | 0.8771 | 0.42 |
| | DI-TIM | 0.0182 | 0.0232 | 0.0908 | 0.3027 | 0.4506 | 0.5138 | 0.6211 | 0.29 |
| | VNI-SI-DI-TIM | 0.0182 | 0.0169 | 0.1021 | 0.4745 | 0.6471 | 0.8197 | 0.9620 | 0.43 |
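SI-NI-TMI, SI-NI-DMI and VNI-SI-DI-TIM include the SI-NI components of Lin et al. [26]: the gradient is taken at a Nesterov look-ahead point and averaged over scaled copies x / 2^i of the input. One iteration is sketched below in NumPy. The interpretation of the norm constraint as an $L_\infty$ budget on the 0 to 255 pixel scale, the default `m=5` scale copies, and the toy surrogate gradient `grad_fn` are assumptions for illustration; `si_ni_step` is a hypothetical helper.

```python
import numpy as np


def si_ni_step(x_adv, x_clean, g, grad_fn, eps, alpha, mu=1.0, m=5):
    """One SI-NI-FGSM iteration under an assumed L-infinity budget eps (0-255 scale)."""
    x_nes = x_adv + alpha * mu * g                    # Nesterov look-ahead point
    grad = np.zeros(x_adv.shape, dtype=np.float64)
    for i in range(m):
        scale = 1.0 / 2 ** i
        # Chain rule: d/dx L(scale * x) = scale * grad_L(scale * x)
        grad += scale * grad_fn(scale * x_nes)
    grad /= m
    g = mu * g + grad / (np.abs(grad).sum() + 1e-12)  # momentum on L1-normalized gradient
    x_adv = x_adv + alpha * np.sign(g)
    x_adv = np.clip(x_adv, x_clean - eps, x_clean + eps)  # project onto the eps-ball
    return np.clip(x_adv, 0.0, 255.0), g


w = np.array([[1.0, -1.0], [2.0, -2.0]])  # toy linear surrogate: grad_L(z) = w
x_clean = np.full((2, 2), 128.0)
x_adv, g = x_clean.copy(), np.zeros_like(x_clean)
for _ in range(10):
    x_adv, g = si_ni_step(x_adv, x_clean, g, lambda z: w, eps=8.0, alpha=2.0)
print(x_adv)  # each pixel ends at the budget boundary, 128 + 8 or 128 - 8
```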

Table 7 Black-box attack results against the black-box model res34-att in the third set of experiments

| Metric | Algorithm / norm constraint | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 65.17 | 74.76 | 84.13 | 94.57 | 99.07 | 100.00 | 86.28 |
| | TMI-FGSM | 65.67 | 69.62 | 80.83 | 99.04 | 100.00 | 100.00 | 83.03 |
| | SI-NI-TMI | 70.82 | 70.56 | 85.03 | 95.74 | 100.00 | 100.00 | 87.02 |
| | DMI-FGSM | 65.60 | 75.52 | 80.39 | 99.45 | 99.81 | 100.00 | 86.80 |
| | SI-NI-DMI | 65.00 | 75.92 | 69.64 | 90.10 | 99.42 | 100.00 | 83.35 |
| | DI-TIM | 74.72 | 76.47 | 82.44 | 94.01 | 98.66 | 98.96 | 87.55 |
| | VNI-SI-DI-TIM | 70.34 | 65.74 | 89.82 | 100.00 | 100.00 | 100.00 | 87.65 |
| Average edit distance | MI-FGSM | 0.7700 | 0.7800 | 0.7800 | 0.8000 | 0.8500 | 0.9600 | 0.8200 |
| | TMI-FGSM | 0.7693 | 0.7743 | 0.7842 | 0.8025 | 0.8618 | 0.9715 | 0.8270 |
| | SI-NI-TMI | 0.7701 | 0.7750 | 0.7823 | 0.8068 | 0.8741 | 0.9445 | 0.8257 |
| | DMI-FGSM | 0.7699 | 0.7745 | 0.7801 | 0.7972 | 0.8645 | 0.9639 | 0.8250 |
| | SI-NI-DMI | 0.7698 | 0.7745 | 0.7814 | 0.8000 | 0.8622 | 0.9652 | 0.8260 |
| | DI-TIM | 0.6847 | 0.8035 | 0.7840 | 0.7796 | 0.8458 | 0.9909 | 0.8148 |
| | VNI-SI-DI-TIM | 0.7690 | 0.7769 | 0.7841 | 0.8100 | 0.8756 | 0.9900 | 0.8340 |
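The VNI component of VNI-SI-DI-TIM comes from variance tuning (Wang and He [29]): the current gradient is corrected by the difference between the mean gradient at N random neighbours of the previous iterate and the gradient at that iterate. The sketch shows the momentum form (VMI-FGSM); VNI-FGSM additionally evaluates the current gradient at the Nesterov look-ahead point `x_adv + alpha * mu * g`. The neighbourhood factor `beta=1.5`, the sample count `n=20`, the toy surrogate `grad_fn`, and the helper names are assumptions for illustration.

```python
import numpy as np


def variance_term(x, grad_fn, eps, beta=1.5, n=20, rng=None):
    """V(x) = mean_i grad_L(x + r_i) - grad_L(x), with r_i ~ U[-beta*eps, beta*eps]."""
    rng = np.random.default_rng() if rng is None else rng
    total = np.zeros(x.shape, dtype=np.float64)
    for _ in range(n):
        r = rng.uniform(-beta * eps, beta * eps, size=x.shape)
        total += grad_fn(x + r)
    return total / n - grad_fn(x)


def vmi_step(x_adv, x_clean, g, v, grad_fn, eps, alpha, mu=1.0, rng=None):
    """One VMI-FGSM iteration; v is the variance term computed at the previous iterate."""
    tuned = grad_fn(x_adv) + v
    g = mu * g + tuned / (np.abs(tuned).sum() + 1e-12)  # L1-normalized momentum
    v = variance_term(x_adv, grad_fn, eps, rng=rng)     # used at the next iteration
    x_adv = np.clip(x_adv + alpha * np.sign(g), x_clean - eps, x_clean + eps)
    return np.clip(x_adv, 0.0, 255.0), g, v


def grad_fn(z):
    """Toy nonlinear surrogate L(z) = sum(sin(z / 50)), so grad_L(z) = cos(z / 50) / 50."""
    return np.cos(z / 50.0) / 50.0


x_clean = np.full((4, 4), 120.0)
x_adv, g, v = x_clean.copy(), np.zeros((4, 4)), np.zeros((4, 4))
rng = np.random.default_rng(0)
for _ in range(5):
    x_adv, g, v = vmi_step(x_adv, x_clean, g, v, grad_fn, eps=8.0, alpha=2.0, rng=rng)
```

For a surrogate whose gradient is constant, the variance term is exactly zero and VMI-FGSM reduces to MI-FGSM.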

Table 8 Black-box attack results against the black-box model NRTR in the third set of experiments

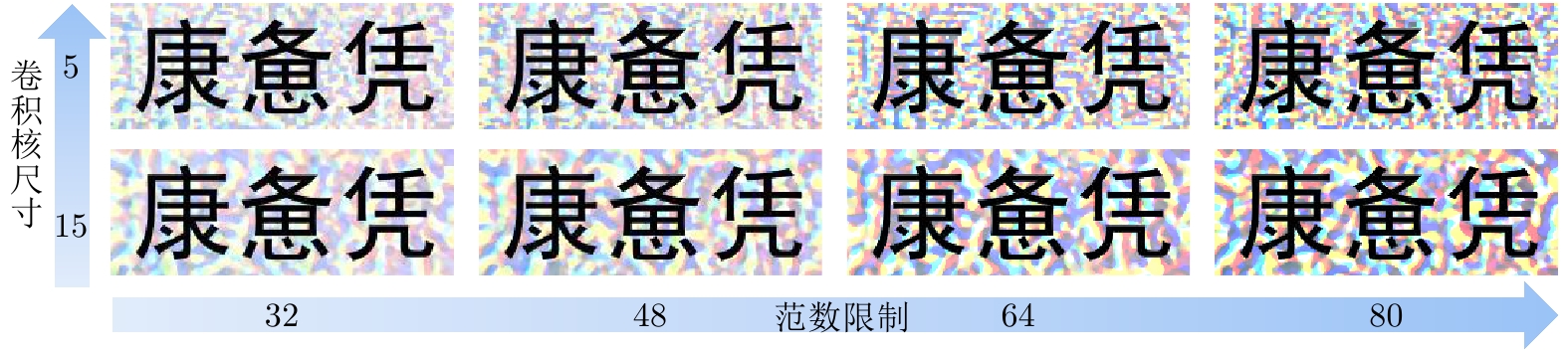

| Metric | Algorithm / norm constraint | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | MI-FGSM | 59.29 | 74.93 | 84.05 | 94.59 | 99.08 | 100.00 | 85.32 |
| | TMI-FGSM | 65.11 | 69.33 | 79.45 | 99.32 | 99.65 | 99.62 | 85.41 |
| | SI-NI-TMI | 69.19 | 69.77 | 84.08 | 94.51 | 98.96 | 99.06 | 85.93 |
| | DMI-FGSM | 64.10 | 74.95 | 79.39 | 100.00 | 99.08 | 99.61 | 86.19 |
| | SI-NI-DMI | 64.39 | 74.37 | 69.06 | 88.89 | 99.01 | 99.27 | 82.50 |
| | DI-TIM | 74.76 | 76.73 | 80.57 | 93.26 | 96.98 | 98.51 | 86.80 |
| | VNI-SI-DI-TIM | 69.46 | 65.08 | 88.63 | 99.49 | 99.60 | 100.00 | 87.04 |
| Average edit distance | MI-FGSM | 0.7493 | 0.7414 | 0.7270 | 0.7765 | 0.8302 | 0.9357 | 0.7933 |
| | TMI-FGSM | 0.7533 | 0.7602 | 0.7955 | 0.7506 | 0.8673 | 1.0099 | 0.8228 |
| | SI-NI-TMI | 0.7805 | 0.7618 | 0.8034 | 0.7646 | 0.8952 | 0.9310 | 0.8228 |
| | DMI-FGSM | 0.7612 | 0.7475 | 0.7929 | 0.7523 | 0.8245 | 0.9529 | 0.8052 |
| | SI-NI-DMI | 0.7781 | 0.7453 | 0.7928 | 0.8143 | 0.8072 | 0.9282 | 0.8110 |
| | DI-TIM | 0.7037 | 0.7494 | 0.7428 | 0.7576 | 0.8373 | 0.9509 | 0.8076 |
| | VNI-SI-DI-TIM | 0.7828 | 0.8020 | 0.7653 | 0.8293 | 0.8307 | 0.9357 | 0.8243 |

Table 9 Convolution kernel size ablation: attack results against the black-box model mbv3 in the first set of experiments

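| Metric | Kernel | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | $5\times5$ | TMI | 7.06 | 7.06 | 8.21 | 23.97 | 31.34 | 55.49 | 77.98 | 30.16 |
| | $5\times5$ | VNI-SI-DI-TIM | 7.06 | 8.11 | 13.56 | 66.37 | 80.71 | 87.67 | 93.38 | 50.98 |
| | $15\times15$ | TMI | 7.06 | 7.06 | 12.94 | 35.29 | 47.06 | 71.76 | 90.59 | 38.82 |
| | $15\times15$ | VNI-SI-DI-TIM | 7.06 | 9.41 | 16.47 | 75.29 | 92.94 | 96.67 | 100.00 | 56.83 |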
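Tables 9 and 10 vary the kernel size of the translation-invariant (TI) component (Dong et al. [28]), in which the surrogate gradient is convolved with a Gaussian kernel before the momentum and sign steps. The sketch below assumes a kernel that spans ±3 standard deviations whatever its size, so a 15×15 kernel smooths over a much wider neighbourhood than a 5×5 one. `gaussian_kernel` and `smooth_gradient` are illustrative helpers that use zero padding; they are not the authors' code.

```python
import numpy as np


def gaussian_kernel(size, nsig=3.0):
    """size x size Gaussian kernel spanning +/- nsig standard deviations, summing to 1."""
    t = np.linspace(-nsig, nsig, size)
    k1 = np.exp(-0.5 * t ** 2)
    k2 = np.outer(k1, k1)
    return k2 / k2.sum()


def smooth_gradient(grad, kernel):
    """Zero-padded 'same'-size 2-D filtering of a gradient map (odd kernel sizes)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(grad, ((ph, ph), (pw, pw)))  # constant (zero) padding by default
    out = np.zeros(grad.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + grad.shape[0], j:j + grad.shape[1]]
    return out


k5, k15 = gaussian_kernel(5), gaussian_kernel(15)
spike = np.zeros((32, 64))
spike[16, 32] = 1.0                       # a single-pixel gradient spike
s5, s15 = smooth_gradient(spike, k5), smooth_gradient(spike, k15)
print(s5.max() > s15.max())  # True: the larger kernel spreads the spike more widely
```

The TI paper motivates this smoothing as making the perturbation less sensitive to the discriminative regions of the particular surrogate model.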

Table 10 Convolution kernel size ablation: attack results against the black-box model res34 in the second set of experiments

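| Metric | Kernel | Algorithm / norm constraint | 4 | 8 | 16 | 32 | 48 | 64 | 80 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | $5\times5$ | TMI | 5.88 | 7.06 | 15.67 | 44.31 | 72.15 | 79.76 | 84.81 | 44.23 |
| | $5\times5$ | VNI-SI-DI-TIM | 5.88 | 7.06 | 29.86 | 78.03 | 85.69 | 88.96 | 92.11 | 55.37 |
| | $15\times15$ | TMI | 7.06 | 9.41 | 21.18 | 55.29 | 83.53 | 89.41 | 95.29 | 51.60 |
| | $15\times15$ | VNI-SI-DI-TIM | 7.06 | 4.71 | 40.00 | 89.41 | 97.65 | 100.00 | 100.00 | 62.69 |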

Table 11 Attack results against the Baidu ID card recognition model

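| Metric | Algorithm | Binarization mask | Field / norm constraint | 48 | 64 | 80 | 96 | 128 | Mean |
|---|---|---|---|---|---|---|---|---|---|
| Attack success rate (%) | VNI-SI-DI-TIM | $\checkmark$ | Name | 1 | 12 | 45 | 84 | 100 | 48.4 |
| | | $\checkmark$ | ID number | 2 | 9 | 44 | 80 | 100 | 47.0 |
| | | $\times$ | Name | 2 | 15 | 46 | 86 | 100 | 49.8 |
| | | $\times$ | ID number | 2 | 11 | 46 | 82 | 100 | 48.2 |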
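Table 11 compares VNI-SI-DI-TIM with and without the binarization mask. This section does not define how the mask is built. One plausible reading, assumed in the sketch below, is that the grayscale card image is thresholded so that only dark text-stroke pixels may be perturbed, which keeps the background visually clean. The fixed threshold of 128, the 0 to 255 pixel scale, and the helpers `binarization_mask` and `masked_update` are hypothetical.

```python
import numpy as np


def binarization_mask(gray, threshold=128):
    """1 where a pixel is darker than `threshold` (assumed text stroke), else 0."""
    return (gray < threshold).astype(np.float64)


def masked_update(x_adv, x_clean, g, mask, eps, alpha):
    """Sign step restricted to masked pixels, followed by L-infinity projection."""
    x_adv = x_adv + alpha * np.sign(g) * mask
    x_adv = np.clip(x_adv, x_clean - eps, x_clean + eps)
    return np.clip(x_adv, 0.0, 255.0)


gray = np.array([[250.0, 30.0], [40.0, 240.0]])  # toy crop: two dark "stroke" pixels
mask = binarization_mask(gray)
x_adv = masked_update(gray.copy(), gray, np.ones_like(gray), mask, eps=16.0, alpha=4.0)
print(x_adv)  # only the two stroke pixels change, each by +4
```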

[1] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: Curran Associates Inc., 2012. 1097−1105

[2] Liu Z, Mao H Z, Wu C Y, Feichtenhofer C, Darrell T, Xie S N. A ConvNet for the 2020s. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 11966−11976

[3] Bahdanau D, Chorowski J, Serdyuk D, Brakel P, Bengio Y. End-to-end attention-based large vocabulary speech recognition. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Shanghai, China: IEEE, 2016. 4945−4949

[4] Afkanpour A, Adeel S, Bassani H, Epshteyn A, Fan H B, Jones I, et al. BERT for long documents: A case study of automated ICD coding. In: Proceedings of the 13th International Workshop on Health Text Mining and Information Analysis (LOUHI). Abu Dhabi, United Arab Emirates: Association for Computational Linguistics, 2022. 100−107

[5] Ouyang L, Wu J, Jiang X, Almeida D, Wainwright C L, Mishkin P, et al. Training language models to follow instructions with human feedback. In: Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS). 2022. 27730−27744

[6] Silver D, Huang A, Maddison C J, Guez A, Sifre L, van den Driessche G, et al. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587): 484−489 doi: 10.1038/nature16961

[7] Jumper J, Evans R, Pritzel A, Green T, Figurnov M, Ronneberger O, et al. Highly accurate protein structure prediction with AlphaFold. Nature, 2021, 596(7873): 583−589 doi: 10.1038/s41586-021-03819-2

[8] Sallam M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare, 2023, 11(6): Article No. 887 doi: 10.3390/healthcare11060887

[9] Wang J K, Yin Z X, Hu P F, Liu A S, Tao R S, Qin H T, et al. Defensive patches for robust recognition in the physical world. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 2446−2455

[10] Yuan X Y, He P, Zhu Q L, Li X L. Adversarial examples: Attacks and defenses for deep learning. IEEE Transactions on Neural Networks and Learning Systems, 2019, 30(9): 2805−2824 doi: 10.1109/TNNLS.2018.2886017

[11] Wang B H, Li Y Q, Zhou P. Bandits for structure perturbation-based black-box attacks to graph neural networks with theoretical guarantees. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 13369−13377

[12] Jia X J, Zhang Y, Wu B Y, Ma K, Wang J, Cao X C. LAS-AT: Adversarial training with learnable attack strategy. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 13388−13398

[13] Li T, Wu Y W, Chen S Z, Fang K, Huang X L. Subspace adversarial training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 13399−13408

[14] Xu C K, Zhang C J, Yang Y W, Yang H Z, Bo Y J, Li D Y, et al. Accelerate adversarial training with loss guided propagation for robust image classification. Information Processing & Management, 2023, 60(1): Article No. 103143

[15] Chen Z Y, Li B, Xu J H, Wu S, Ding S H, Zhang W Q. Towards practical certifiable patch defense with vision transformer. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 15127−15137

[16] Suryanto N, Kim Y, Kang H, Larasati H T, Yun Y, Le T T H, et al. DTA: Physical camouflage attacks using differentiable transformation network. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 15284−15293

[17] Zhong Y Q, Liu X M, Zhai D M, Jiang J J, Ji X Y. Shadows can be dangerous: Stealthy and effective physical-world adversarial attack by natural phenomenon. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 15324−15333

[18] Chen P Y, Zhang H, Sharma Y, Yi J F, Hsieh C J. ZOO: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security. Dallas, USA: ACM, 2017. 15−26

[19] Ilyas A, Engstrom L, Athalye A, Lin J. Black-box adversarial attacks with limited queries and information. In: Proceedings of the 35th International Conference on Machine Learning (ICML). Stockholm, Sweden: PMLR, 2018. 2137−2146

[20] Uesato J, O'Donoghue B, Kohli P, van den Oord A. Adversarial risk and the dangers of evaluating against weak attacks. In: Proceedings of the 35th International Conference on Machine Learning (ICML). Stockholm, Sweden: PMLR, 2018. 5025−5034

[21] Li Y D, Li L J, Wang L Q, Zhang T, Gong B Q. NATTACK: Learning the distributions of adversarial examples for an improved black-box attack on deep neural networks. In: Proceedings of the 36th International Conference on Machine Learning (ICML). Long Beach, USA: PMLR, 2019. 3866−3876

[22] Brendel W, Rauber J, Bethge M. Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. In: Proceedings of the 6th International Conference on Learning Representations (ICLR). Vancouver, Canada: OpenReview.net, 2018.

[23] Dong Y P, Su H, Wu B Y, Li Z F, Liu W, Zhang T, et al. Efficient decision-based black-box adversarial attacks on face recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 7706−7714

[24] Rahmati A, Moosavi-Dezfooli S M, Frossard P, Dai H Y. GeoDA: A geometric framework for black-box adversarial attacks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 8443−8452

[25] Dong Y P, Liao F Z, Pang T Y, Su H, Zhu J, Hu X L, et al. Boosting adversarial attacks with momentum. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 9185−9193

[26] Lin J D, Song C B, He K, Wang L W, Hopcroft J E. Nesterov accelerated gradient and scale invariance for adversarial attacks. In: Proceedings of the 8th International Conference on Learning Representations (ICLR). Addis Ababa, Ethiopia: OpenReview.net, 2020.

[27] Xie C H, Zhang Z S, Zhou Y Y, Bai S, Wang J Y, Ren Z, et al. Improving transferability of adversarial examples with input diversity. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 2725−2734

[28] Dong Y P, Pang T Y, Su H, Zhu J. Evading defenses to transferable adversarial examples by translation-invariant attacks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 4307−4316

[29] Wang X S, He K. Enhancing the transferability of adversarial attacks through variance tuning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 1924−1933

[30] Shi B G, Bai X, Yao C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(11): 2298−2304 doi: 10.1109/TPAMI.2016.2646371

[31] Graves A, Fernández S, Gomez F, Schmidhuber J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In: Proceedings of the 23rd International Conference on Machine Learning. Pittsburgh, USA: ACM, 2006. 369−376

[32] Song C Z, Shmatikov V. Fooling OCR systems with adversarial text images. arXiv preprint arXiv:1802.05385, 2018.

[33] Jiang H, Yang J T, Hua G, Li L X, Wang Y, Tu S H, et al. FAWA: Fast adversarial watermark attack. IEEE Transactions on Computers, doi: 10.1109/TC.2021.3065172

[34] Xu X, Chen J F, Xiao J H, Gao L L, Shen F M, Shen H T. What machines see is not what they get: Fooling scene text recognition models with adversarial text images. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 12301−12311

[35] Xu Y K, Dai P W, Li Z K, Wang H J, Cao X C. The best protection is attack: Fooling scene text recognition with minimal pixels. IEEE Transactions on Information Forensics and Security, 2023, 18: 1580−1595 doi: 10.1109/TIFS.2023.3245984

[36] Zhang J M, Sang J T, Xu K Y, Wu S X, Zhao X, Sun Y F, et al. Robust CAPTCHAs towards malicious OCR. IEEE Transactions on Multimedia, 2021, 23: 2575−2587 doi: 10.1109/TMM.2020.3013376

[37] Ding K Y, Hu T, Niu W N, Liu X L, He J P, Yin M Y, et al. A novel steganography method for character-level text image based on adversarial attacks. Sensors, 2022, 22(17): Article No. 6497 doi: 10.3390/s22176497

[38] Yang M K, Zheng H T, Bai X, Luo J B. Cost-effective adversarial attacks against scene text recognition. In: Proceedings of the 25th International Conference on Pattern Recognition (ICPR). Milan, Italy: IEEE, 2021. 2368−2374

[39] Chen L, Xu W. Attacking optical character recognition (OCR) systems with adversarial watermarks. arXiv preprint arXiv:2002.03095, 2020.

[40] Xu Chang-Kai. Research on Adversarial Example Defense and Generation Algorithm Based on Deep Learning [Master thesis], Beijing Jiaotong University, China, 2023.

[41] Hu Z H, Huang S Y, Zhu X P, Sun F C, Zhang B, Hu X L. Adversarial texture for fooling person detectors in the physical world. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 13297−13306

[42] Hu Y C T, Chen J C, Kung B H, Hua K L, Tan D S. Naturalistic physical adversarial patch for object detectors. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 7828−7837

[43] Huang L F, Gao C Y, Zhou Y Y, Xie C H, Yuille A L, Zou X Q, et al. Universal physical camouflage attacks on object detectors. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 717−726

[44] Athalye A, Engstrom L, Ilyas A, Kwok K. Synthesizing robust adversarial examples. In: Proceedings of the 35th International Conference on Machine Learning (ICML). Stockholm, Sweden: PMLR, 2018. 284−293
