Shrink, Separate and Aggregate: A Feature Balancing Method for Long-tailed Visual Recognition


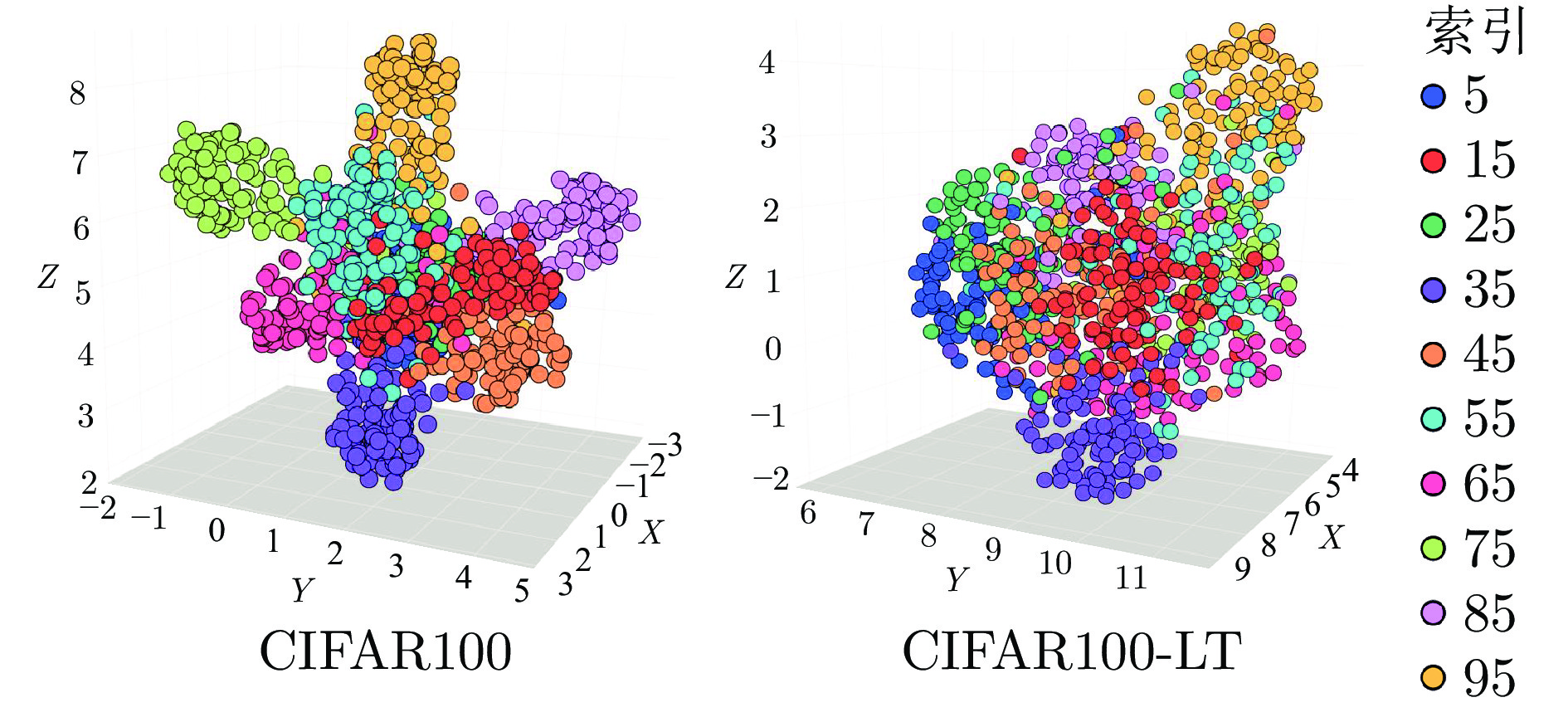

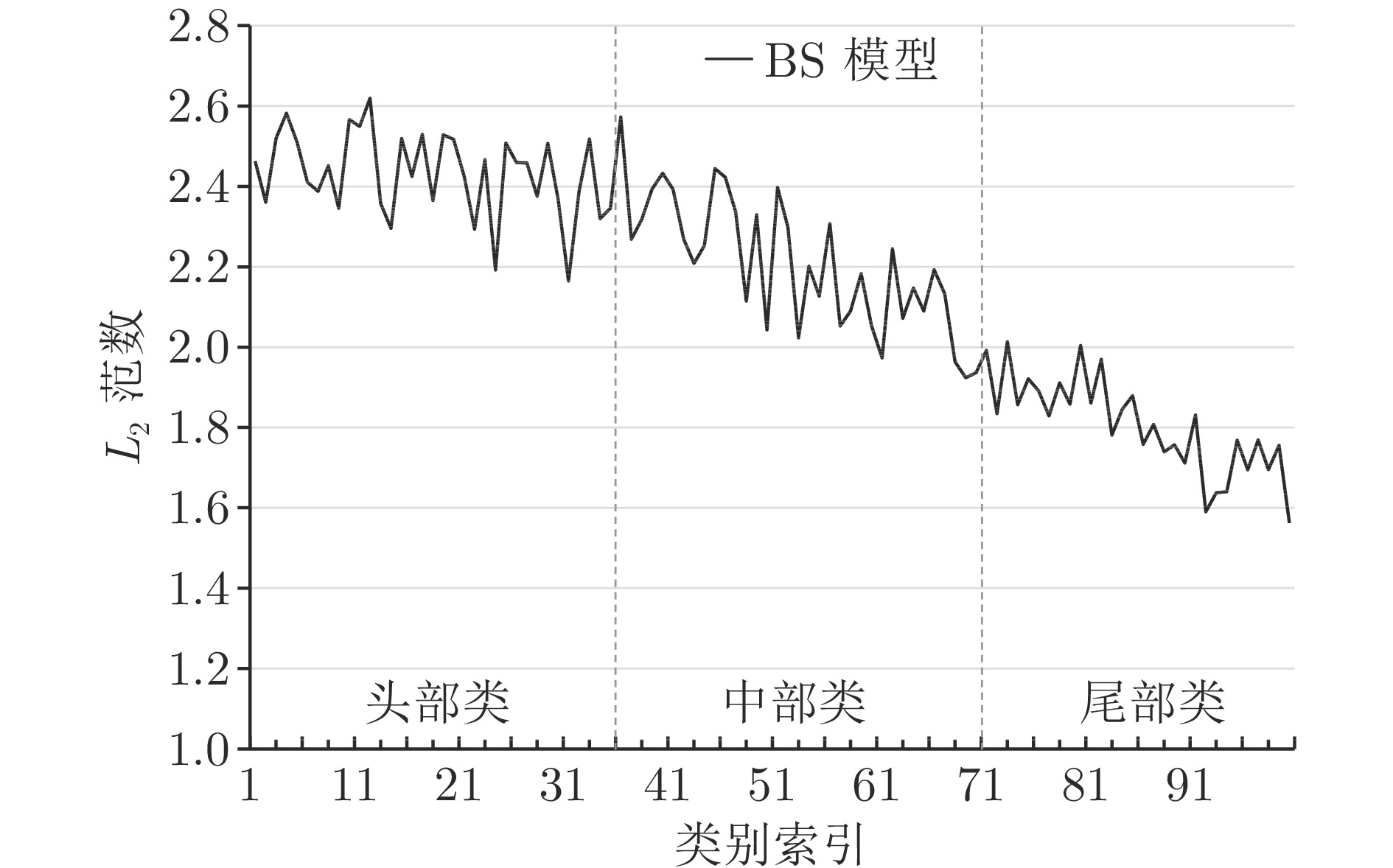

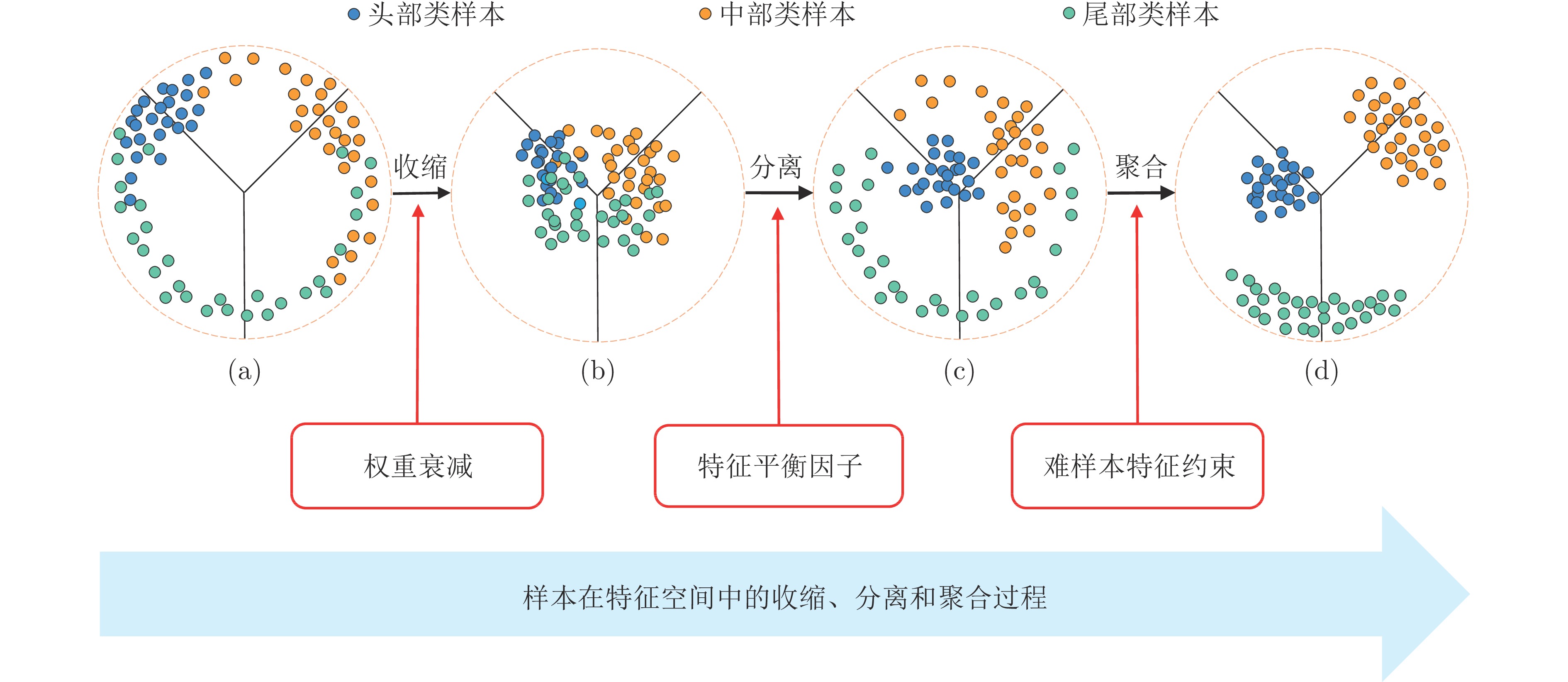

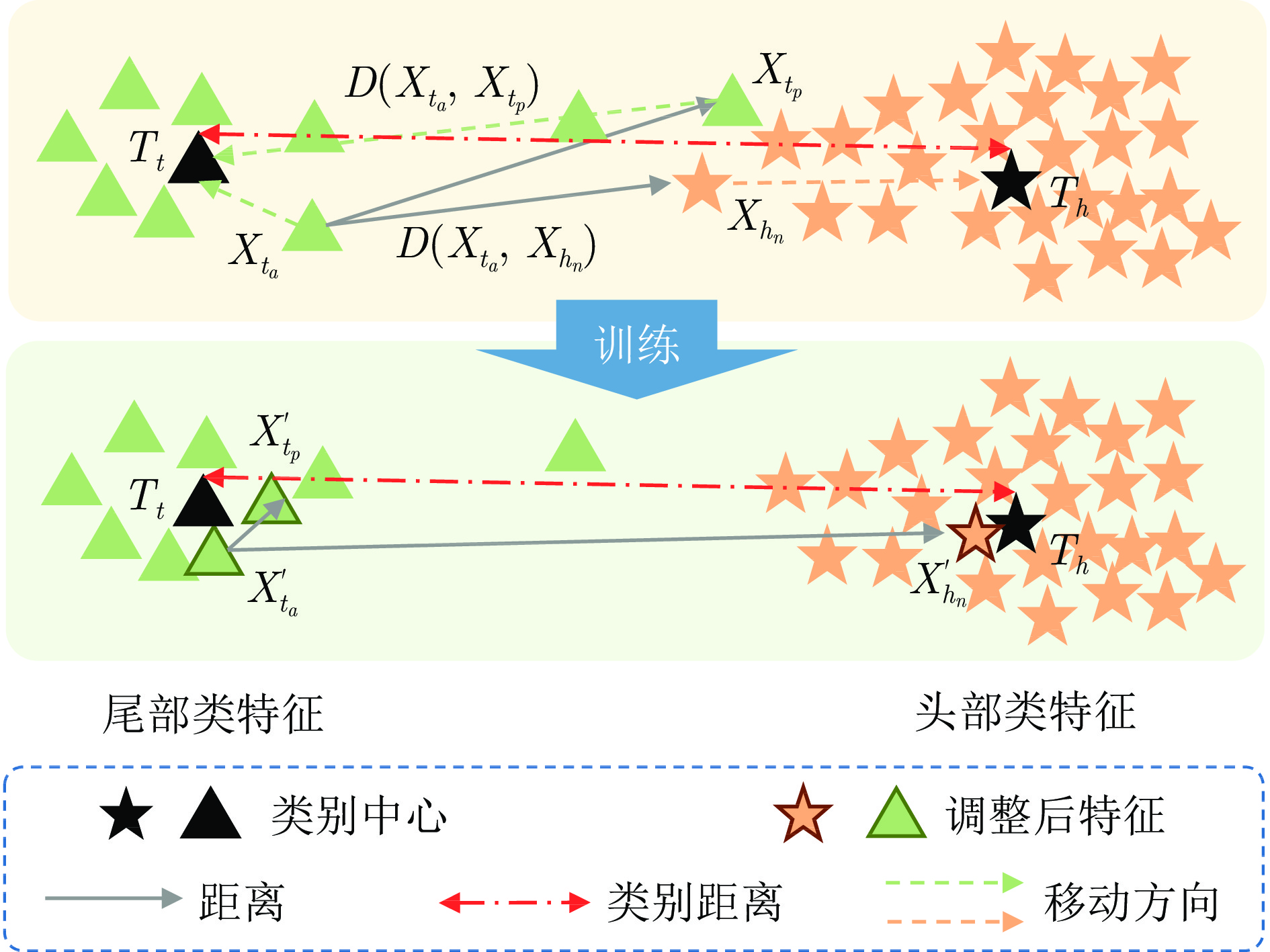

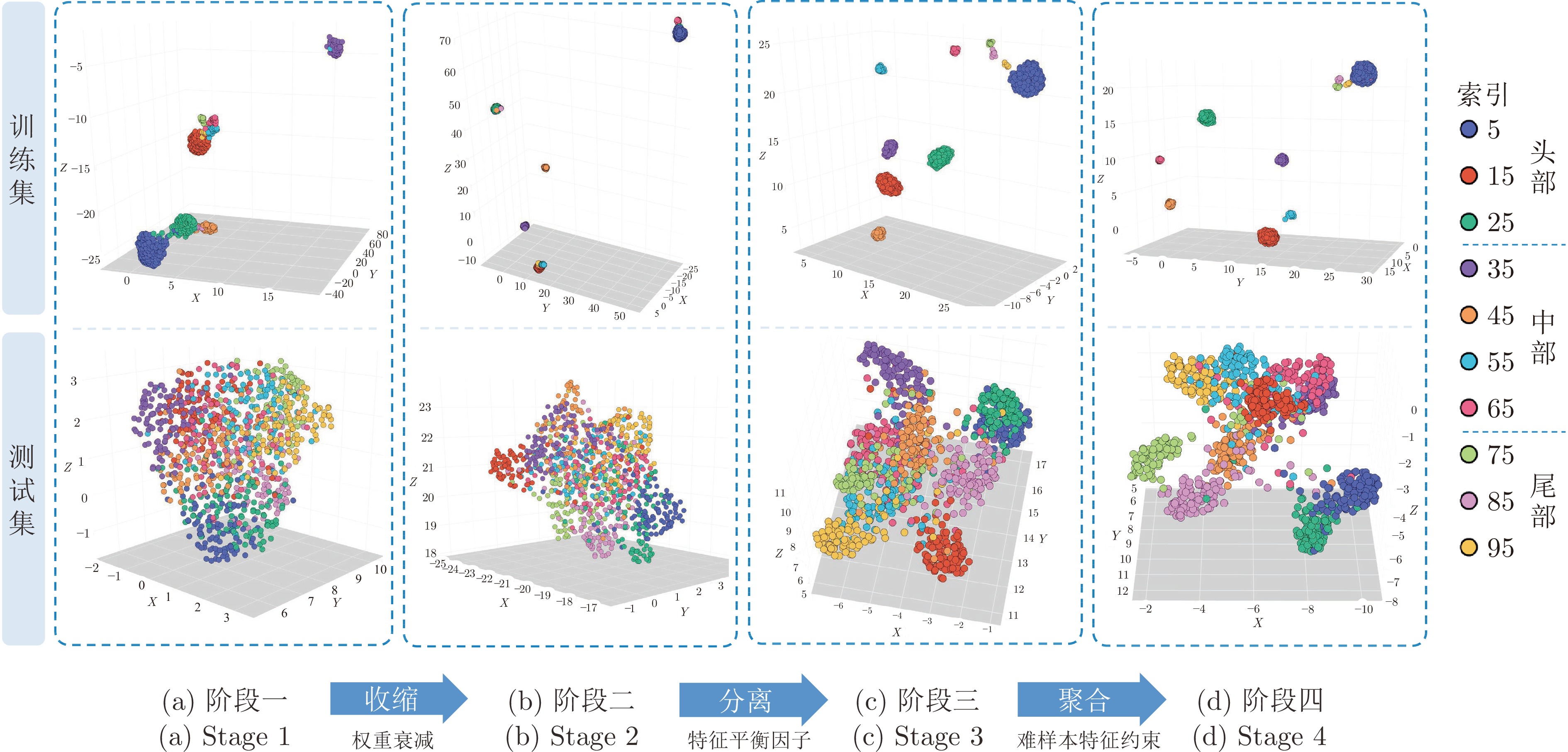

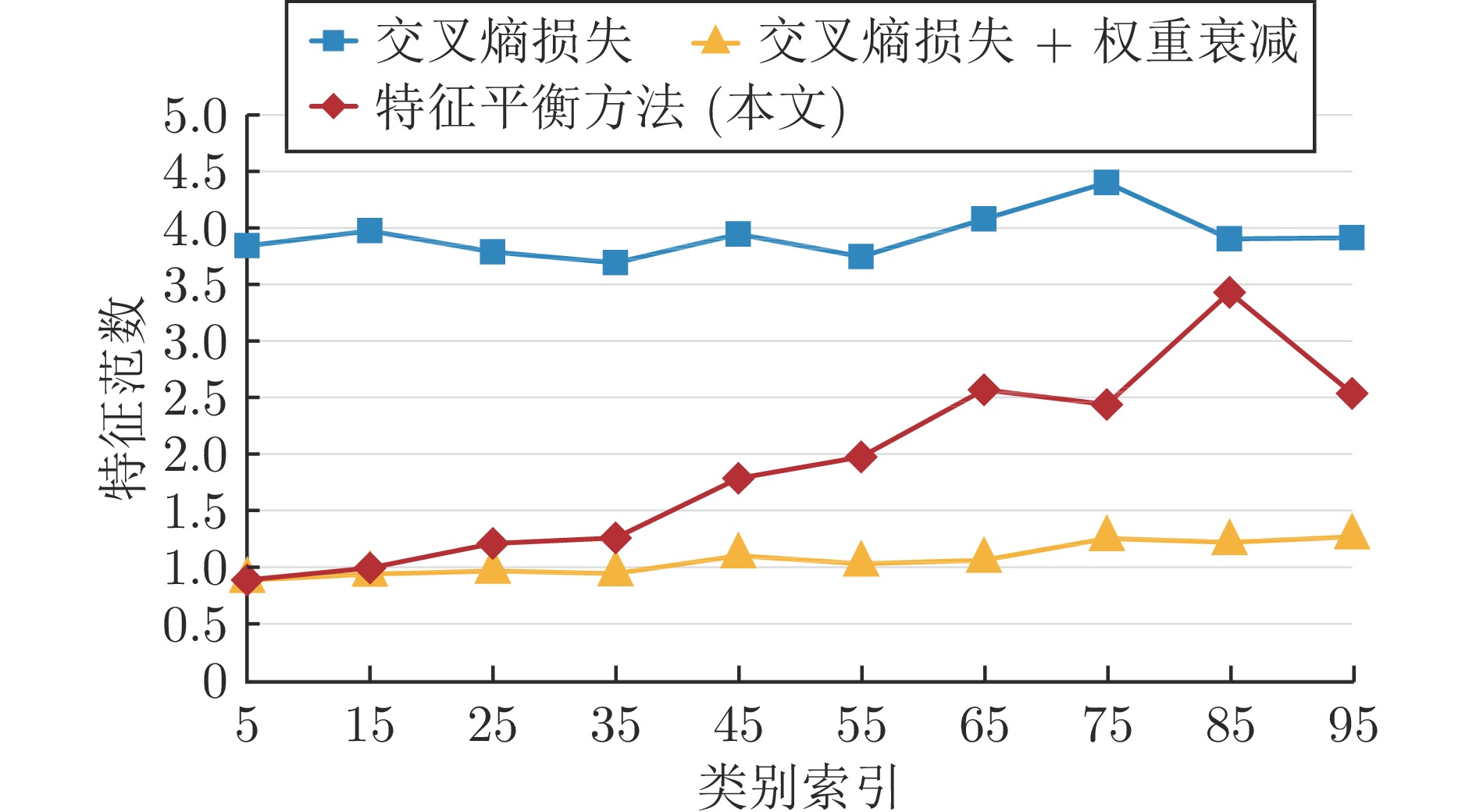

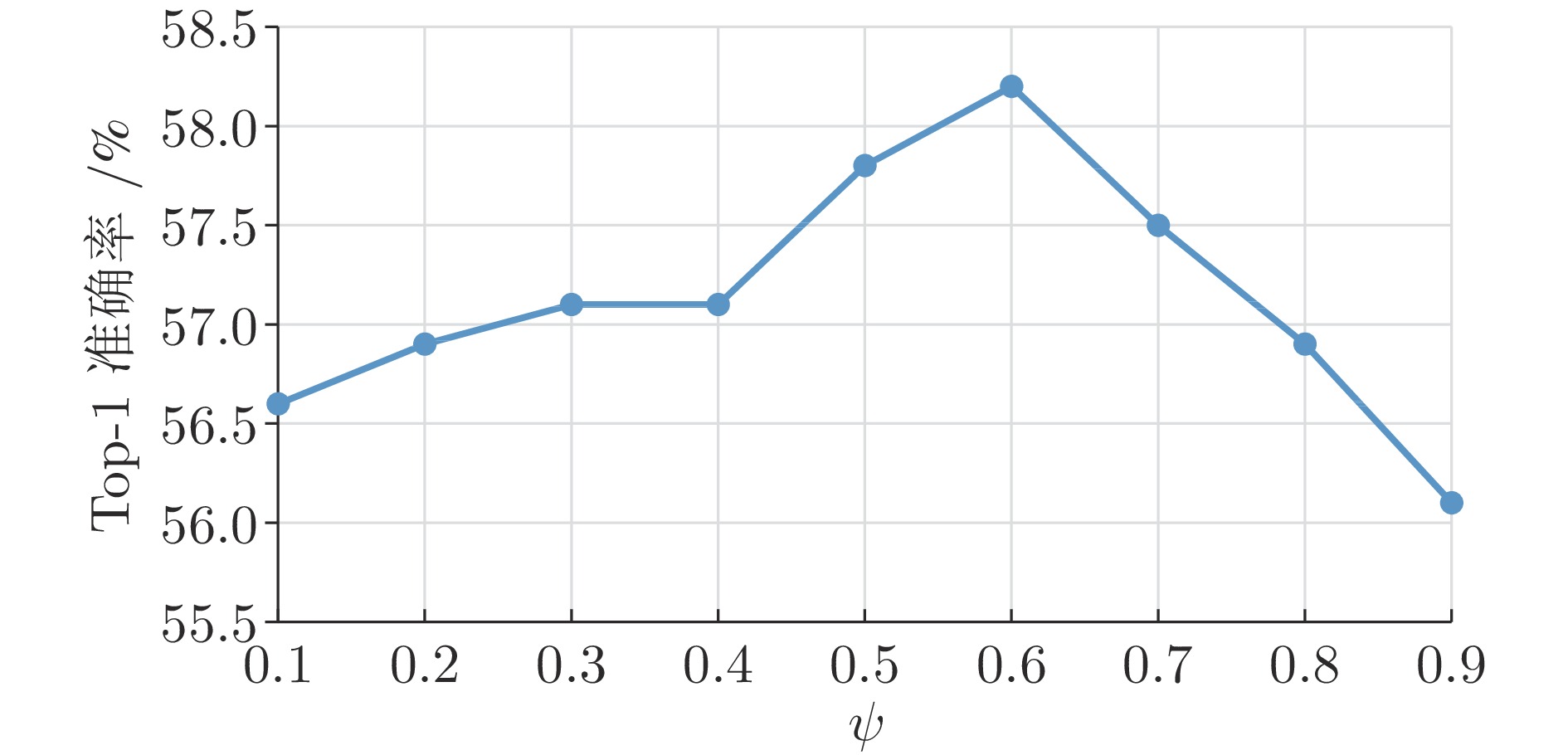

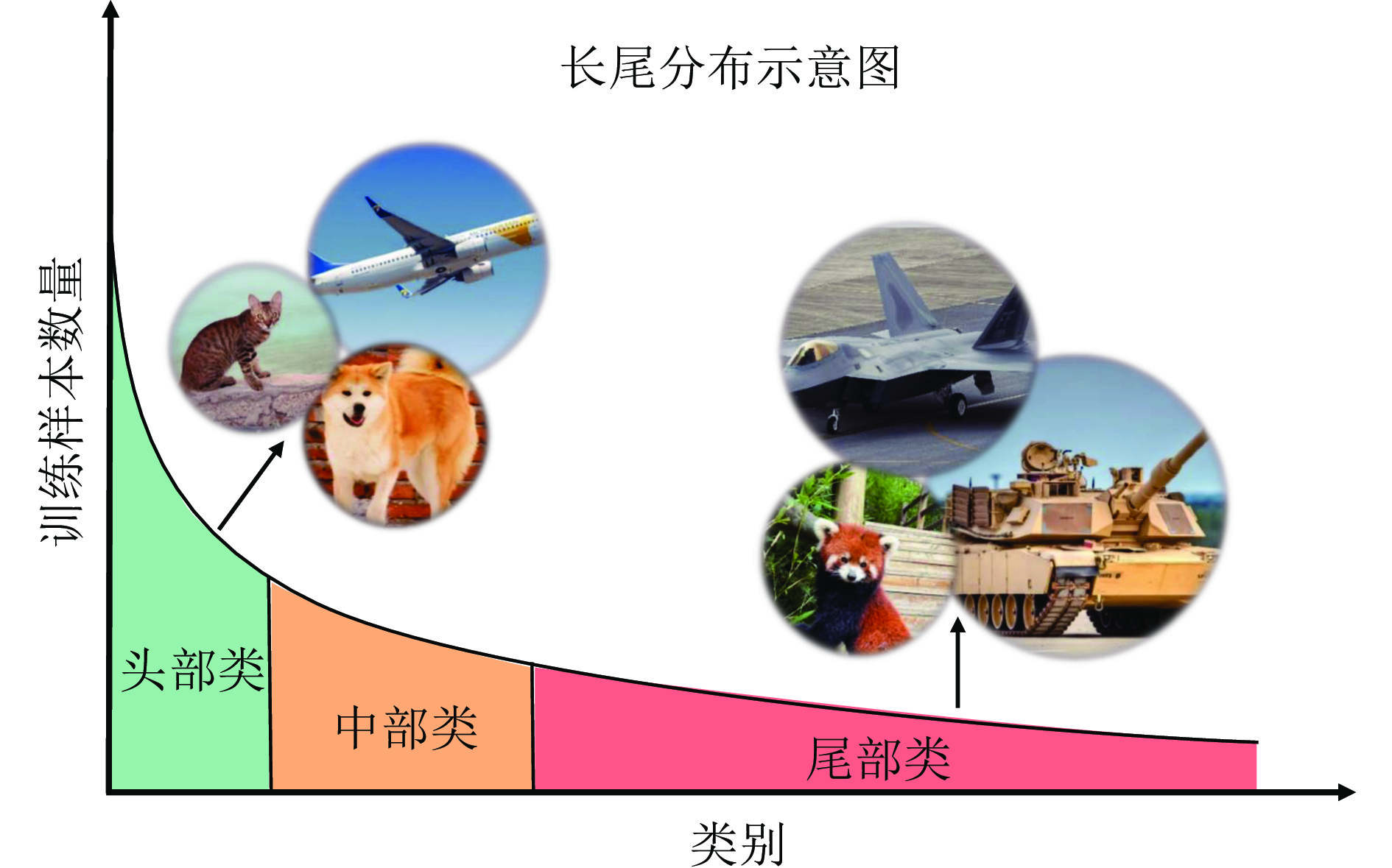

Abstract: Real-world data often follows a long-tailed distribution: a few classes have a large number of samples, while most classes have only a few. A model trained on such imbalanced data tends to overfit the tail classes, which have few samples. To address this problem, we propose a feature balancing method for long-tailed visual recognition that strengthens the model's ability to recognize hard samples by shrinking, separating, and aggregating samples in the feature space. The method consists of two modules: a feature balance factor and a hard sample feature constraint. The feature balance factor uses the number of samples per class to adjust the model's output probability distribution, which makes the feature distances between classes more balanced and improves classification accuracy. The hard sample feature constraint performs clustering analysis on the sample features and increases the boundary distance between classes, so that the model can find a more reasonable decision boundary. Experiments on several common long-tailed benchmark datasets show that the proposed method improves the overall classification accuracy on long-tailed data and significantly improves recognition of tail classes. Compared with the baseline method BS, the proposed method improves Top-1 accuracy by 7.40, 6.60, and 2.89 percentage points on CIFAR100-LT, ImageNet-LT, and iNaturalist 2018, respectively.

Key words: long-tailed recognition / loss design / feature balance / feature constraint
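The abstract says the feature balance factor adjusts the output probability distribution using per-class sample counts, but this section does not give its formula. As a point of reference only, the sketch below shows how the baseline BS (Balanced Softmax [12]) uses class counts: it adds the log of each class's training count to the logit before the softmax. The function names and the three-class toy data are illustrative assumptions, and this is the baseline's adjustment, not the proposed feature balance factor.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def balanced_softmax(logits, class_counts):
    """Balanced Softmax (baseline BS): shift each logit by log(class count)."""
    shifted = [z + math.log(n) for z, n in zip(logits, class_counts)]
    return softmax(shifted)

def balanced_softmax_loss(logits, class_counts, target):
    """Negative log-likelihood of the target under the count-shifted distribution."""
    return -math.log(balanced_softmax(logits, class_counts)[target])

# Hypothetical toy setup: one head class and two progressively rarer classes.
counts = [500, 50, 5]
logits = [1.0, 1.0, 1.0]  # the network itself is undecided

plain = softmax(logits)                    # uniform over the three classes
shifted = balanced_softmax(logits, counts) # proportional to the class counts

loss_head = balanced_softmax_loss(logits, counts, target=0)
loss_tail = balanced_softmax_loss(logits, counts, target=2)
```

With equal raw logits, the shifted distribution follows the class frequencies, so a tail-class target incurs a larger training loss than a head-class target. Minimizing that loss pushes the raw tail logits up, which is the sense in which class counts rebalance the output distribution.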

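Table 1 Basic information of the datasets

| Dataset | Number of classes | Training samples (images) | Test samples (images) | Imbalance factor $IF$ |
| --- | --- | --- | --- | --- |
| CIFAR100-LT | 100 | 10847 | 10000 | 100 |
| ImageNet-LT | 1000 | 115846 | 500000 | 256 |
| iNaturalist 2018 | 8142 | 437513 | 24426 | 435 |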
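The $IF$ column in Table 1 is conventionally the ratio between the largest and smallest per-class training counts. The sketch below computes that ratio and, as an assumption, generates an exponentially decaying class-count profile of the kind commonly used to build CIFAR-style long-tailed splits. This section does not state how CIFAR100-LT was constructed, so `exponential_profile` and its arguments are illustrative, not the authors' procedure.

```python
def imbalance_factor(class_counts):
    """Ratio of the most frequent class count to the least frequent one."""
    return max(class_counts) / min(class_counts)

def exponential_profile(num_classes, max_count, imb_factor):
    """Per-class counts decaying exponentially from max_count
    down to roughly max_count / imb_factor (assumed construction)."""
    ratio = 1.0 / imb_factor
    return [
        int(max_count * ratio ** (i / (num_classes - 1.0)))
        for i in range(num_classes)
    ]

# Hypothetical profile: 100 classes, 500 images in the largest class, IF = 100.
counts = exponential_profile(num_classes=100, max_count=500, imb_factor=100)
total_images = sum(counts)
```

The largest class keeps all 500 images and each subsequent class keeps a constant fraction of the previous one, so a handful of classes hold most of the training data.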

Table 2 Basic settings of the model

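| Dataset | CIFAR100-LT | ImageNet-LT | iNaturalist 2018 |
| --- | --- | --- | --- |
| Backbone | ResNet-32 | ResNet-50 | ResNeXt-50 |
| Batch size | 64 | 256 | 512 |
| Weight decay | 0.0040 | 0.0002 | 0.0002 |
| Initial learning rate | 0.1 | 0.2 | 0.2 |
| Learning rate schedule | warmup | cosine | cosine |
| Momentum | 0.9 | 0.9 | 0.9 |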
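The settings in Table 2 can be written down as a plain configuration, together with the two schedule shapes it names. This is a minimal sketch: the dictionary keys, `cosine_lr`, and `linear_warmup_lr` are hypothetical names, and the total number of steps, the warmup length, and whatever follows the warmup on CIFAR100-LT are not given in this section, so they appear only as free parameters.

```python
import math

# Values copied from Table 2; the key names are illustrative.
SETTINGS = {
    "CIFAR100-LT": {"backbone": "ResNet-32", "batch_size": 64,
                    "weight_decay": 0.0040, "lr": 0.1,
                    "schedule": "warmup", "momentum": 0.9},
    "ImageNet-LT": {"backbone": "ResNet-50", "batch_size": 256,
                    "weight_decay": 0.0002, "lr": 0.2,
                    "schedule": "cosine", "momentum": 0.9},
    "iNaturalist 2018": {"backbone": "ResNeXt-50", "batch_size": 512,
                         "weight_decay": 0.0002, "lr": 0.2,
                         "schedule": "cosine", "momentum": 0.9},
}

def cosine_lr(step, total_steps, base_lr):
    """Cosine annealing from base_lr at step 0 down to 0 at total_steps."""
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * step / total_steps))

def linear_warmup_lr(step, warmup_steps, base_lr):
    """Linear ramp that reaches base_lr after warmup_steps steps."""
    return base_lr * min(1.0, (step + 1) / warmup_steps)

# Example with an assumed 100-step run on the ImageNet-LT settings.
cfg = SETTINGS["ImageNet-LT"]
lrs = [cosine_lr(s, 100, cfg["lr"]) for s in range(101)]
```

The cosine schedule starts at the initial learning rate from the table and decays smoothly to zero, while the warmup ramp avoids large updates in the first few steps.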

Table 3 Top-1 accuracy on CIFAR100-LT (%)

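| Method | Venue | Year | $IF$ = 10 | $IF$ = 50 | $IF$ = 100 |
| --- | --- | --- | --- | --- | --- |
| CE[41] | - | - | 55.7 | 43.9 | 38.6 |
| CE-DRW[41] | NeurIPS | 2022 | 57.9 | 47.9 | 41.1 |
| LDAM-DRW[37] | NeurIPS | 2019 | 58.7 | 46.3 | 42.0 |
| Causal Norm[42] | NeurIPS | 2020 | 59.6 | 50.3 | 44.1 |
| BS[12] | NeurIPS | 2020 | 63.0 | - | 50.8 |
| Remix[43] | ECCV | 2020 | 59.2 | 49.5 | 45.8 |
| RIDE(3E)[14] | ICLR | 2020 | - | - | 48.0 |
| MiSLAS[44] | CVPR | 2021 | 63.2 | 52.3 | 47.0 |
| TSC[11] | CVPR | 2022 | 59.0 | 47.4 | 43.8 |
| WD[29] | CVPR | 2022 | 68.7 | 57.7 | 53.6 |
| KPS[9] | PAMI | 2023 | - | 49.2 | 45.0 |
| PC[40] | IJCAI | 2023 | 69.1 | 57.8 | 53.4 |
| SuperDisco[39] | CVPR | 2023 | 69.3 | 58.3 | 53.8 |
| SHIKE[38] | CVPR | 2023 | - | 59.8 | 56.3 |
| Feature balancing method | This paper | - | 73.3 | 63.0 | 58.2 |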

Table 4 Top-1 accuracy on ImageNet-LT (%)

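| Method | Venue | Year | Backbone | Head classes | Medium classes | Tail classes | Overall |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CE[41] | - | - | ResNet-50 | 64.0 | 33.8 | 5.8 | 41.60 |
| CE-DRW[41] | NeurIPS | 2022 | ResNet-50 | 61.7 | 47.3 | 28.8 | 50.10 |
| LDAM-DRW[37] | NeurIPS | 2019 | ResNet-50 | 60.4 | 46.9 | 30.7 | 49.80 |
| Causal Norm[42] | NeurIPS | 2020 | ResNeXt-50 | 62.7 | 48.8 | 31.6 | 51.80 |
| BS[12] | NeurIPS | 2020 | ResNet-50 | 60.9 | 48.8 | 32.1 | 51.00 |
| Remix[43] | ECCV | 2020 | ResNet-18 | 60.4 | 46.9 | 30.7 | 48.60 |
| RIDE(3E)[14] | ICLR | 2020 | ResNeXt-50 | 66.2 | 51.7 | 34.9 | 55.40 |
| MiSLAS[44] | CVPR | 2021 | ResNet-50 | 61.7 | 51.3 | 35.8 | 52.70 |
| CMO[41] | CVPR | 2022 | ResNet-50 | 66.4 | 53.9 | 35.6 | 56.20 |
| TSC[11] | CVPR | 2022 | ResNet-50 | 63.5 | 49.7 | 30.4 | 52.40 |
| WD[29] | CVPR | 2022 | ResNeXt-50 | 62.5 | 50.4 | 41.5 | 53.90 |
| KPS[9] | PAMI | 2023 | ResNet-50 | - | - | - | 51.28 |
| PC[40] | IJCAI | 2023 | ResNeXt-50 | 63.5 | 50.8 | 42.7 | 54.90 |
| SuperDisco[39] | CVPR | 2023 | ResNeXt-50 | 66.1 | 53.3 | 37.1 | 57.10 |
| SHIKE[38] | CVPR | 2023 | ResNet-50 | - | - | - | 59.70 |
| Feature balancing method | This paper | - | ResNet-50 | 67.9 | 54.3 | 40.1 | 57.60 |
| Feature balancing method | This paper | - | ResNeXt-50 | 67.6 | 55.3 | 41.7 | 58.19 |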
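The head, medium, and tail columns report accuracy over groups of classes split by training-set frequency. The sketch below shows one way to compute such a breakdown. The thresholds (more than 100 training images for head, fewer than 20 for tail) follow a convention that is common in the long-tailed literature and are an assumption here, since this section does not state the split; `group_accuracy` and the toy labels are hypothetical.

```python
def group_accuracy(labels, preds, train_counts, many_thr=100, few_thr=20):
    """Top-1 accuracy (%) per frequency group.

    train_counts maps each class id to its number of training images.
    Thresholds are assumed, not taken from the paper.
    """
    hits = {"head": 0, "medium": 0, "tail": 0}
    totals = {"head": 0, "medium": 0, "tail": 0}
    for y, p in zip(labels, preds):
        n = train_counts[y]
        if n > many_thr:
            group = "head"
        elif n < few_thr:
            group = "tail"
        else:
            group = "medium"
        totals[group] += 1
        hits[group] += int(y == p)
    return {
        g: (100.0 * hits[g] / totals[g] if totals[g] else float("nan"))
        for g in hits
    }

# Toy example: class 0 is a head class, class 1 medium, class 2 tail.
train_counts = {0: 500, 1: 50, 2: 5}
labels = [0, 0, 1, 1, 2, 2]
preds = [0, 0, 1, 0, 0, 2]
acc = group_accuracy(labels, preds, train_counts)
```

Grouping by the true label's training frequency, rather than by the predicted label, keeps each test image in exactly one group regardless of what the model outputs.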

Table 5 Top-1 accuracy on iNaturalist 2018 (%)

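| Method | Venue | Year | Backbone | Head classes | Medium classes | Tail classes | Overall |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CE[41] | - | - | ResNet-50 | 73.9 | 63.5 | 55.5 | 61.00 |
| LDAM-DRW[37] | NeurIPS | 2019 | ResNet-50 | - | - | - | 66.10 |
| BS[12] | NeurIPS | 2020 | ResNet-50 | 70.0 | 70.2 | 69.9 | 70.00 |
| Remix[43] | ECCV | 2020 | ResNet-50 | - | - | - | 70.50 |
| RIDE(3E)[14] | ICLR | 2020 | ResNet-50 | 70.2 | 72.2 | 72.7 | 72.20 |
| MiSLAS[44] | CVPR | 2021 | ResNet-50 | 73.2 | 72.4 | 70.4 | 71.60 |
| CMO[41] | CVPR | 2022 | ResNet-50 | 68.7 | 72.6 | 73.1 | 72.80 |
| TSC[11] | CVPR | 2022 | ResNet-50 | 72.6 | 70.6 | 67.8 | 69.70 |
| WD[29] | CVPR | 2022 | ResNet-50 | 71.2 | 70.4 | 69.7 | 70.20 |
| KPS[9] | PAMI | 2023 | ResNet-50 | - | - | - | 70.35 |
| PC[40] | IJCAI | 2023 | ResNet-50 | 71.6 | 70.6 | 70.2 | 70.60 |
| SuperDisco[39] | CVPR | 2023 | ResNet-50 | 72.3 | 72.9 | 71.3 | 73.60 |
| SHIKE[38] | CVPR | 2023 | ResNet-50 | - | - | - | 74.50 |
| Feature balancing method | This paper | - | ResNet-50 | 74.9 | 72.2 | 73.2 | 72.89 |
| Feature balancing method | This paper | - | ResNeXt-50 | 74.6 | 72.3 | 72.2 | 72.53 |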
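The gains over BS quoted in the abstract can be checked directly against Tables 3 to 5. The short calculation below uses the CIFAR100-LT result at $IF$ = 100 and the ResNet-50 overall results on the other two datasets; the variable names are illustrative.

```python
# Overall Top-1 accuracy (%) copied from Tables 3, 4 and 5.
bs = {"CIFAR100-LT": 50.8, "ImageNet-LT": 51.0, "iNaturalist 2018": 70.0}
ours = {"CIFAR100-LT": 58.2, "ImageNet-LT": 57.6, "iNaturalist 2018": 72.89}

# Absolute improvement in percentage points, rounded to two decimals.
gains = {name: round(ours[name] - bs[name], 2) for name in ours}

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} points over BS")
```

The differences are absolute gains in Top-1 accuracy (percentage points), not relative improvements.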

Table 6 Ablation experiments of the module

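| Data augmentation | Weight decay | Feature balance factor | Hard sample feature constraint | CE head | CE medium | CE tail | CE overall | BS head | BS medium | BS tail | BS overall |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
|  |  |  |  | - | - | - | 38.6 | - | - | - | 50.8 |
| √ |  |  |  | 76.0 | 45.6 | 16.9 | 47.6 | 72.0 | 51.5 | 28.0 | 51.6 |
| √ | √ |  |  | 82.2 | 52.3 | 13.3 | 51.0 | 78.2 | 55.3 | 31.6 | 56.2 |
| √ | √ | √ |  | 79.9 | 56.5 | 16.1 | 52.6 | 76.1 | 56.8 | 36.8 | 57.6 |
| √ | √ | √ | √ | 80.1 | 57.6 | 17.1 | 53.3 | 76.6 | 57.9 | 37.4 | 58.2 |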

[1] Zoph B, Vasudevan V, Shlens J, Le Q V. Learning transferable architectures for scalable image recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8697−8710

[2] Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, USA: IEEE, 2016. 779−788

[3] Deng J, Dong W, Socher R, Li L J, Li K, Li F F. ImageNet: A large-scale hierarchical image database. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Miami, USA: IEEE, 2009. 248−255

[4] Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet large scale visual recognition challenge. International Journal of Computer Vision, 2015, 115: 211−252 doi: 10.1007/s11263-015-0816-y

[5] Lin T Y, Maire M, Belongie S, Hays J, Perona P, Ramanan D, et al. Microsoft COCO: Common objects in context. In: Proceedings of the 13th European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 740−755

[6] Zhou B L, Lapedriza A, Khosla A, Oliva A, Torralba A. Places: A 10 million image database for scene recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(6): 1452−1464 doi: 10.1109/TPAMI.2017.2723009

[7] Anwar S M, Majid M, Qayyum A, Awais M, Alnowami M, Khan M K. Medical image analysis using convolutional neural networks: A review. Journal of Medical Systems, 2018, 42: Article No. 226 doi: 10.1007/s10916-018-1088-1

[8] Liu Z W, Miao Z Q, Zhan X H, Wang J Y, Gong B Q, Yu S X. Large-scale long-tailed recognition in an open world. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2537−2546

[9] Li M K, Cheung Y M, Hu Z K. Key point sensitive loss for long-tailed visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(4): 4812−4825

[10] Tian C Y, Wang W H, Zhu X Z, Dai J F, Qiao Y. VL-LTR: Learning class-wise visual-linguistic representation for long-tailed visual recognition. In: Proceedings of the 17th European Conference on Computer Vision. Tel Aviv, Israel: Springer, 2022. 73−91

[11] Li T H, Cao P, Yuan Y, Fan L J, Yang Y Z, Feris R, et al. Targeted supervised contrastive learning for long-tailed recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 6908−6918

[12] Ren J W, Yu C J, Sheng S A, Ma X, Zhao H Y, Yi S, et al. Balanced meta-softmax for long-tailed visual recognition. Advances in Neural Information Processing Systems, 2020, 33: 4175−4186

[13] Hong Y, Han S, Choi K, Seo S, Kim B, Chang B. Disentangling label distribution for long-tailed visual recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 6622−6632

[14] Wang X D, Lian L, Miao Z Q, Liu Z W, Yu S X. Long-tailed recognition by routing diverse distribution-aware experts. arXiv preprint arXiv: 2010.01809, 2020.

[15] Li T H, Wang L M, Wu G S. Self supervision to distillation for long-tailed visual recognition. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 610−619

[16] Wang Y R, Gan W H, Yang J, Wu W, Yan J J. Dynamic curriculum learning for imbalanced data classification. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, South Korea: IEEE, 2019. 5016−5025

[17] Zang Y H, Huang C, Loy C C. FASA: Feature augmentation and sampling adaptation for long-tailed instance segmentation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 3457−3466

[18] Cui Y, Jia M L, Lin T Y, Song Y, Belongie S. Class-balanced loss based on effective number of samples. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 9268−9277

[19] Lin T Y, Goyal P, Girshick R, He K M, Dollár P. Focal loss for dense object detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(2): 318−327 doi: 10.1109/TPAMI.2018.2858826

[20] Tan J R, Wang C B, Li B Y, Li Q Q, Ouyang W L, Yin C Q, et al. Equalization loss for long-tailed object recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 11659−11668

[21] Wang J Q, Zhang W W, Zang Y H, Cao Y H, Pang J M, Gong T, et al. Seesaw loss for long-tailed instance segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 9690−9699

[22] Zhao Y, Chen W C, Tan X, Huang K, Zhu J H. Adaptive logit adjustment loss for long-tailed visual recognition. arXiv preprint arXiv: 2104.06094, 2021.

[23] Guo H, Wang S. Long-tailed multi-label visual recognition by collaborative training on uniform and re-balanced samplings. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 15084−15093

[24] Zhou B Y, Cui Q, Wei X S, Chen Z M. BBN: Bilateral-branch network with cumulative learning for long-tailed visual recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 9716−9725

[25] Li Y, Wang T, Kang B Y, Tang S, Wang C F, Li J T, et al. Overcoming classifier imbalance for long-tail object detection with balanced group softmax. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 10988−10997

[26] Cui J Q, Liu S, Tian Z T, Zhong Z S, Jia J Y. ResLT: Residual learning for long-tailed recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(3): 3695−3706

[27] Li J, Tan Z C, Wan J, Lei Z, Guo G D. Nested collaborative learning for long-tailed visual recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 6939−6948

[28] Cui J Q, Zhong Z S, Liu S, Yu B, Jia J Y. Parametric contrastive learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021. 695−704

[29] Alshammari S, Wang Y X, Ramanan D, Kong S. Long-tailed recognition via weight balancing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 6887−6897

[30] Li M K, Cheung Y M, Jiang J Y. Feature-balanced loss for long-tailed visual recognition. In: Proceedings of the IEEE International Conference on Multimedia and Expo (ICME). Taiwan, China: IEEE, 2022. 1−6

[31] Goodfellow I, Bengio Y, Courville A. Deep Learning. Massachusetts: MIT Press, 2016.

[32] Schroff F, Kalenichenko D, Philbin J. FaceNet: A unified embedding for face recognition and clustering. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 815−823

[33] Krizhevsky A. Learning Multiple Layers of Features From Tiny Images [Master thesis], University of Toronto, Canada, 2009.

[34] Horn G V, Aodha O M, Song Y, Cui Y, Sun C, Shepard A, et al. The iNaturalist species classification and detection dataset. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 8769−8778

[35] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, USA: IEEE, 2016. 770−778

[36] Xie S N, Girshick R, Dollár P, Tu Z W, He K M. Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 5987−5995

[37] Cao K D, Wei C, Gaidon A, Arechiga N, Ma T Y. Learning imbalanced datasets with label-distribution-aware margin loss. In: Proceedings of the 33rd International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2019. 1567−1578

[38] Jin Y, Li M K, Lu Y, Cheung Y M, Wang H Z. Long-tailed visual recognition via self-heterogeneous integration with knowledge excavation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 23695−23704

[39] Du Y J, Shen J Y, Zhen X T, Snoek C G M. SuperDisco: Super-class discovery improves visual recognition for the long-tail. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 19944−19954

[40] Sharma S, Xian Y Q, Yu N, Singh A. Learning prototype classifiers for long-tailed recognition. In: Proceedings of the Thirty-second International Joint Conference on Artificial Intelligence. Macao, China: ACM, 2023. 1360−1368

[41] Park S, Hong Y, Heo B, Yun S, Choi J Y. The majority can help the minority: Context-rich minority oversampling for long-tailed classification. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022. 6877−6886

[42] Tang K H, Huang J Q, Zhang H W. Long-tailed classification by keeping the good and removing the bad momentum causal effect. arXiv preprint arXiv: 2009.12991, 2020.

[43] Chou H P, Chang S C, Pan J Y, Wei W, Juan D C. Remix: Rebalanced mixup. In: Proceedings of the European Conference on Computer Vision. Glasgow, UK: Springer, 2020. 95−110

[44] Zhong Z S, Cui J Q, Liu S, Jia J Y. Improving calibration for long-tailed recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021. 16484−16493

[45] McInnes L, Healy J, Melville J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv: 1802.03426, 2020.
