A Machine Reading Comprehension Approach Based on Reading Skill Recognition and Dual Channel Fusion Mechanism

-

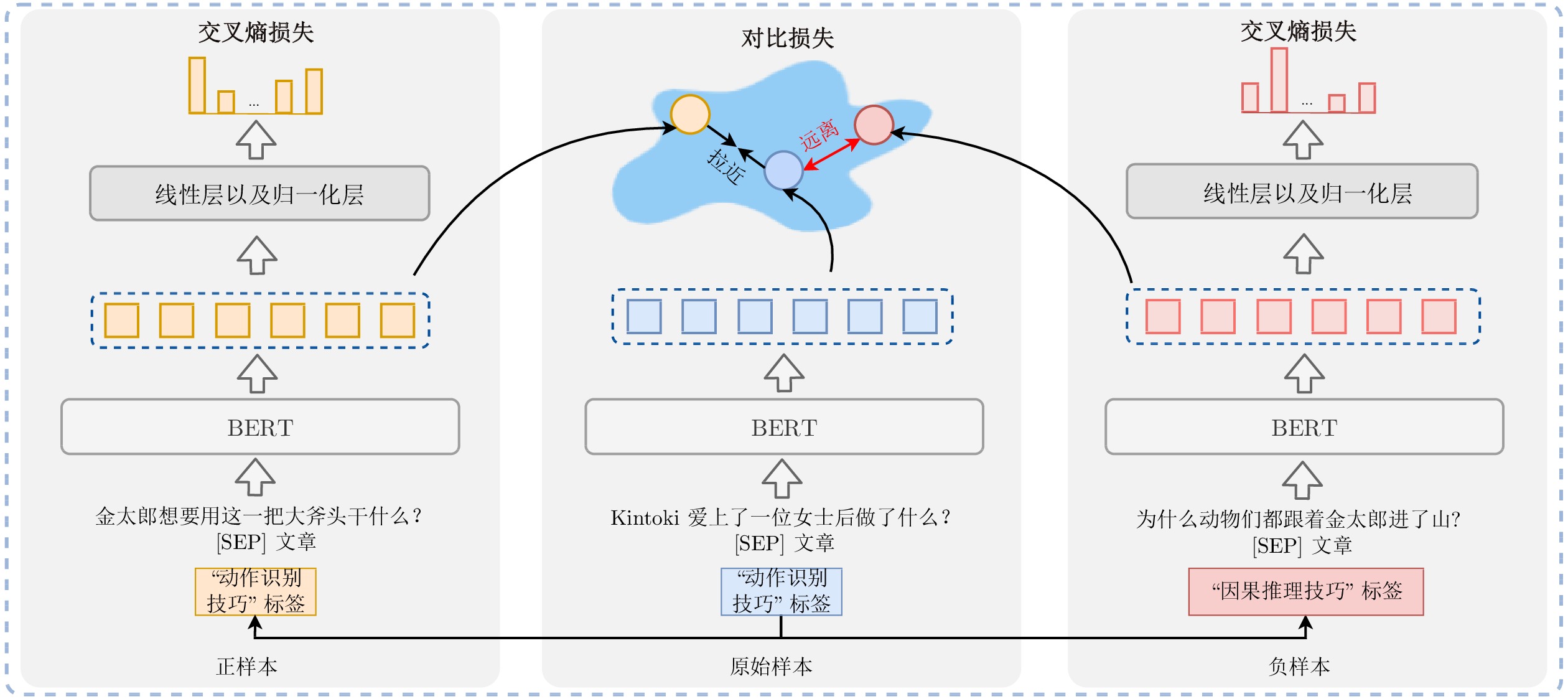

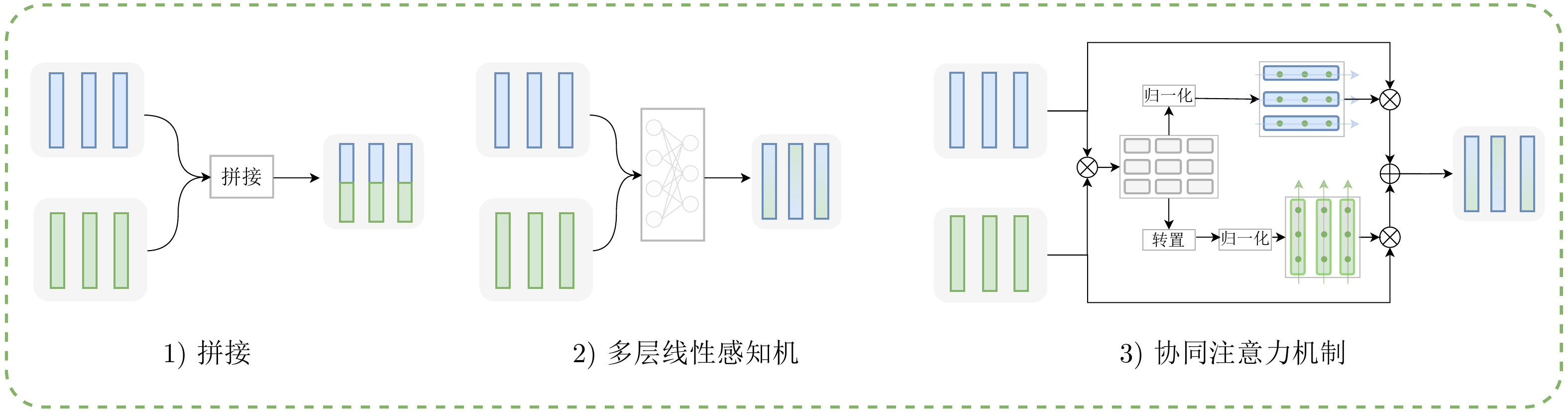

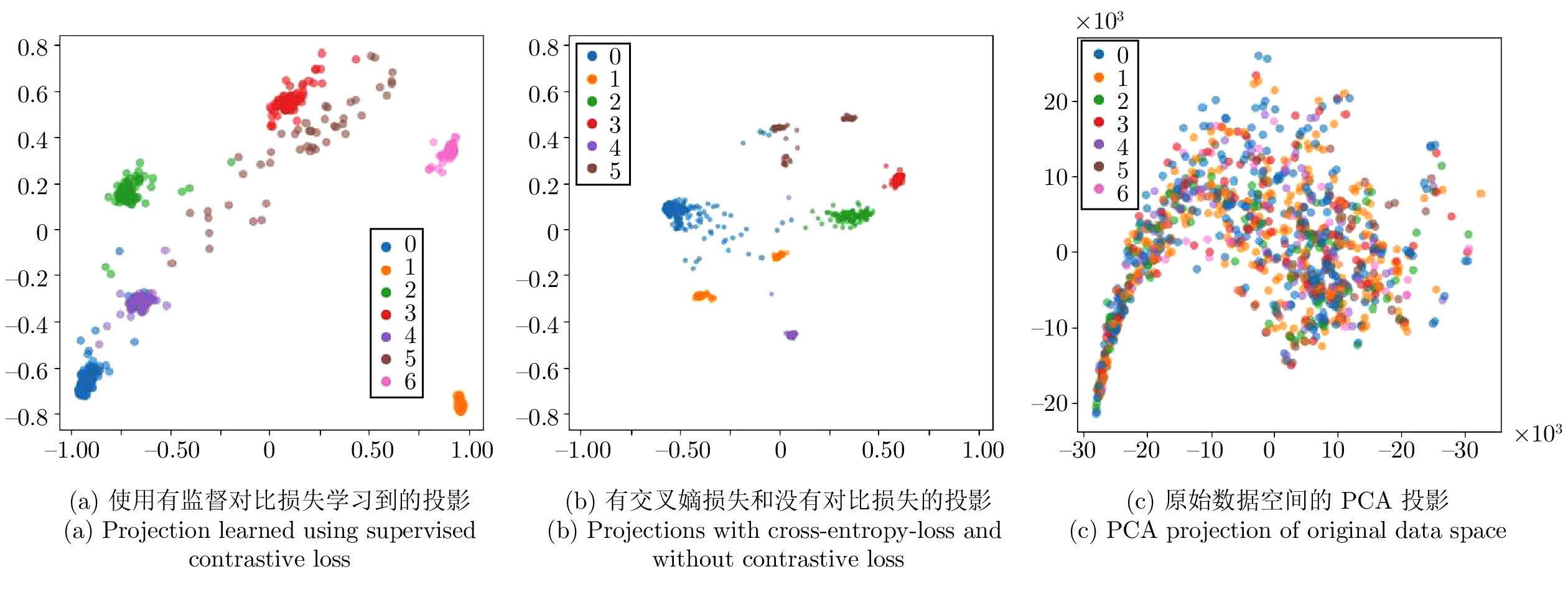

摘要: 机器阅读理解任务旨在要求系统对给定文章进行理解, 然后对给定问题进行回答. 先前的工作重点聚焦在问题和文章间的交互信息, 忽略了对问题进行更加细粒度的分析(如问题所考察的阅读技巧是什么?). 受先前研究的启发, 人类对于问题的理解是一个多维度的过程. 首先, 人类需要理解问题的上下文信息; 然后, 针对不同类型问题, 识别其需要使用的阅读技巧; 最后, 通过与文章交互回答出问题答案. 针对这些问题, 提出一种基于阅读技巧识别和双通道融合的机器阅读理解方法, 对问题进行更加细致的分析, 从而提高模型回答问题的准确性. 阅读技巧识别器通过对比学习的方法, 能够显式地捕获阅读技巧的语义信息. 双通道融合机制将问题与文章的交互信息和阅读技巧的语义信息进行深层次的融合, 从而达到辅助系统理解问题和文章的目的. 为了验证该模型的效果, 在FairytaleQA数据集上进行实验, 实验结果表明, 该方法实现了在机器阅读理解任务和阅读技巧识别任务上的最好效果.Abstract: Machine reading comprehension task aims to require the system to understand a given passage and then answer a question. Previous researches focus on the interaction between questions and passages. However, they neglect to make a more granular analysis of the questions, e.g., what is the reading skill examined by the questions? Inspired by the previous reading comprehension literature, the understanding of questions is a multi-dimensional process where humans first need to understand the context semantics of the question, then identify the reading skills they need to use for different types of questions, and finally answer the question. In the end, we propose a machine reading comprehension method based on reading skill recognition and dual channel fusion mechanism to make a comprehensive analysis of questions, so as to improve the accuracy of the model in answering questions. Specifically, the reading skill recognizer can capture the semantic representations of reading skills through contrastive learning. The dual channel fusion mechanism deeply integrates the contextual information and the semantic representations of reading skills, so as to help the system understand the question and passage. To verify the effectivenesss of the model, we conduct experiments on the FairytaleQA dataset. The experimental results show that the proposed method achieves the state-of-the-art performance on machine reading comprehension task and reading skill recognition task.

-

表 1 FairytaleQA数据集的主要统计数据

Table 1 Core statistics of the FairytaleQA dataset

项目 均值 标准偏差 最小值 最大值 每个故事章节数 15.6 9.8 2 60 每个故事单词数 2305.4 1480.8 228 7577 每个章节单词数 147.7 60.0 12 447 每个故事问题数 41.7 29.1 5 161 每个章节问题数 2.9 2.4 0 18 每个问题单词数 10.5 3.2 3 27 每个答案单词数 7.2 5.8 1 70 表 2 FairytaleQA数据集中验证集和测试集上的性能对比 (%)

Table 2 Performance comparison on the validation and the test set in FairytaleQA dataset (%)

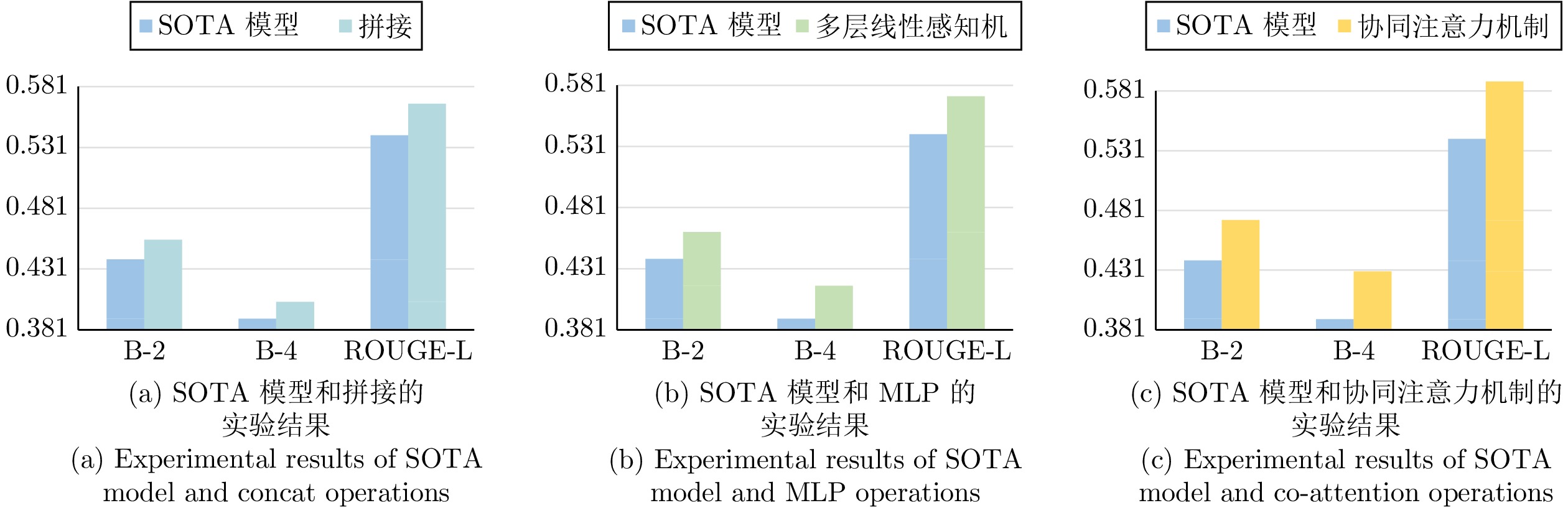

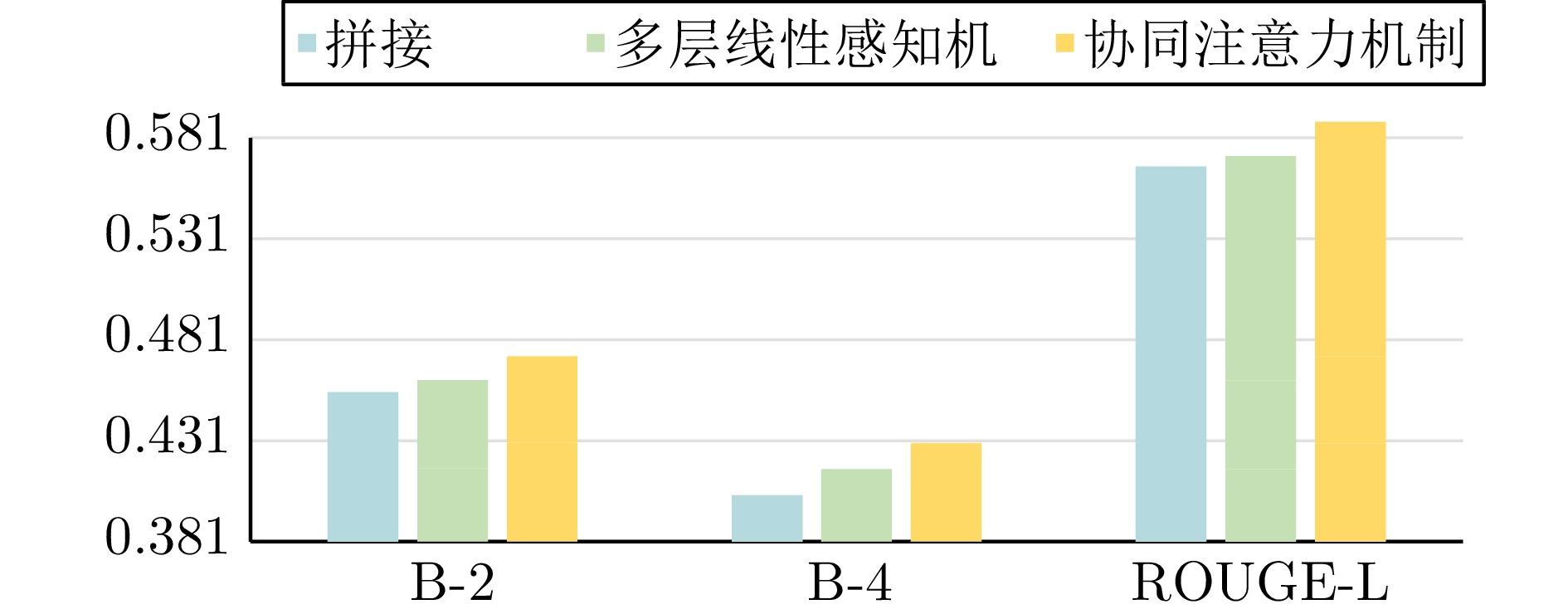

模型名称 验证集 测试集 B-1 B-2 B-3 B-4 ROUGE-L METEOR B-1 B-2 B-3 B-4 ROUGE-L METEOR 轻量化模型 Seq2Seq 25.12 6.67 2.01 0.81 13.61 6.94 26.33 6.72 2.17 0.81 14.55 7.34 CAQA-LSTM 28.05 8.24 3.66 1.57 16.15 8.11 30.04 8.85 4.17 1.98 17.33 8.60 Transformer 21.87 4.94 1.53 0.59 10.32 6.01 21.72 5.21 1.74 0.67 10.27 6.22 预训练语言模型 DistilBERT — — — — 9.70 — — — — — 8.20 — BERT — — — — 10.40 — — — — — 9.70 — BART 19.13 7.92 3.42 2.14 12.25 6.51 21.05 8.93 3.90 2.52 12.66 6.70 微调模型 BART-Question-types — — — — — — — — — — 49.10 — CAQA-BART 52.59 44.17 42.76 40.07 53.20 28.31 55.73 47.00 43.68 40.45 55.13 28.80 BART-NarrativeQA 45.34 39.17 36.33 34.10 47.39 24.65 48.13 41.50 38.26 36.97 49.16 26.93 BART-FairytaleQA$ \dagger $ 51.74 43.30 41.23 38.29 53.88 27.09 54.04 45.98 42.08 39.46 53.64 27.45 BART-FairytaleQA ‡ 51.28 43.96 41.51 39.05 54.11 26.86 54.82 46.37 43.02 39.71 54.44 27.82 本文模型 54.21 47.38 44.65 43.02 58.99 29.70 57.36 49.55 46.23 42.91 58.48 30.93 人类表现 — — — — 65.10 — — — — — 64.40 — 表 3 FairytaleQA数据集中验证集和测试集上的各组件消融实验结果 (%)

Table 3 The performance of ablation study on each component in our model on the validation set and the test set of the FairytaleQA dataset (%)

模型设置 验证集 测试集 B-1 B-2 B-3 B-4 ROUGE-L METEOR B-1 B-2 B-3 B-4 ROUGE-L METEOR SOTA 模型 51.28 43.96 41.51 39.05 54.11 26.86 54.82 46.37 43.02 39.71 54.44 27.82 去除阅读技巧识别器 52.15 44.47 42.11 40.73 55.38 27.45 54.90 47.16 43.55 40.67 56.48 29.31 去除对比学习损失 53.20 45.07 42.88 41.94 56.75 28.15 55.22 47.98 44.13 41.42 57.34 30.20 去除双通道融合机制 52.58 45.38 43.15 41.62 57.22 27.75 55.79 48.20 44.96 41.28 57.12 29.88 本文模型 54.21 47.38 44.65 43.02 58.99 29.70 57.36 49.55 46.23 42.91 58.48 30.93 表 4 基于交叉熵损失的方法和基于有监督对比学习的方法在2个任务上的效果 (%)

Table 4 The performance of cross-entropy-loss-based method and supervised contrastive learning method on the two tasks (%)

实验设置 准确率 B-4 ROUGE-L METEOR 基于交叉熵损失的方法 91.40 41.42 57.34 30.20 本文基于有监督对比

学习损失的方法93.77 42.91 58.48 30.93 表 5 不同输入下的阅读技巧识别器的识别准确率 (%)

Table 5 The recognition accuracy of reading skill recognizer under different inputs (%)

实验设置 验证集 测试集 只输入问题 85.31 82.56 输入问题和文章 92.24 93.77 -

[1] Hermann K M, Kociský T, Grefenstette E, Espeholt L, Kay W, Suleyman M, et al. Teaching machines to read and comprehend. In: Proceedings of the Neural Information Processing Systems. Montreal, Canada: 2015. 1693–1701 [2] Seo M J, Kembhavi A, Farhadi A, Hajishirzi H. Bidirectional attention flow for machine comprehension. arXiv preprint arXiv: 1611.01603, 2016. [3] Tay Y, Wang S, Luu A T, Fu J, Phan M C, Yuan X, et al. Simple and effective curriculum pointer-generator networks for reading comprehension over long narratives. In: Proceedings of the Conference of the Association for Computational Linguistics. Florence, Italy: 2019. 4922–4931 [4] Peng W, Hu Y, Yu J, Xing L X, Xie Y Q. APER: Adaptive evidence-driven reasoning network for machine reading comprehension with unanswerable questions. Knowledge-Based Systems, 2021, 229: Article No. 107364 doi: 10.1016/j.knosys.2021.107364 [5] Perevalov A, Both A, Diefenbach D, Ngomo A N. Can machine translation be a reasonable alternative for multilingual question answering systems over knowledge graphs? In: Proceedings of the ACM Web Conference. Lyon, France: 2022. 977–986 [6] Xu Y, Wang D, Yu M, Ritchie D, Yao B, Wu T, et al. Fantastic questions and where to find them: FairytaleQA——An authentic dataset for narrative comprehension. In: Proceedings of the Conference of the Association for Computational Linguistics. Dublin, Ireland: 2022. 447–460 [7] Liu S, Zhang X, Zhang S, Wang H, Zhang W. Neural machine reading comprehension: Methods and trends. arXiv preprint arXiv: 1907.01118, 2019. [8] Yan M, Xia J, Wu C, Bi B, Zhao Z, Zhang J, et al. A deep cascade model for multi-document reading comprehension. In: Proceedings of the Conference on Artificial Intelligence. Honolulu, USA: 2019. 7354–7361 [9] Liao J, Zhao X, Li X, Tang J, Ge B. Contrastive heterogeneous graphs learning for multi-hop machine reading comprehension. World Wide Web, 2022, 25(3): 1469−1487 doi: 10.1007/s11280-021-00980-6 [10] Lehnert W G. Human and computational question answering. Cognitive Science, 1977, 1(1): 47−73 doi: 10.1207/s15516709cog0101_3 [11] Kim Y. Why the simple view of reading is not simplistic: Unpacking component skills of reading using a direct and indirect effect model of reading. Scientific Studies of Reading, 2017, 21(4): 310−333 doi: 10.1080/10888438.2017.1291643 [12] Sugawara S, Yokono H, Aizawa A. Prerequisite skills for reading comprehension: Multi-perspective analysis of MCTest datasets and systems. In: Proceedings of the Conference on Artificial Intelligence. San Francisco, USA: 2017. 3089–3096 [13] Weston J, Bordes A, Chopra S, Mikolov T. Towards AI-complete question answering: A set of prerequisite toy tasks. arXiv preprint arXiv: 1502.05698, 2015. [14] Purves A C, Söter A, Takala S, Vähäpassi A. Towards a domain-referenced system for classifying composition assignments. Research in the Teaching of English, 1984: 385−416 [15] Vähäpassi A. On the specification of the domain of school writing. Afinlan Vuosikirja, 1981: 85−107 [16] Chen D, Bolton J, Manning C D. A thorough examination of the CNN/daily mail reading comprehension task. arXiv preprint arXiv: 1606.02858, 2016. [17] Rajpurkar P, Zhang J, Lopyrev K, Liang P. Squad: 100,000 + questions for machine comprehension of text. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Austin, USA: 2016. 2383–2392 [18] Richardson M, Renshaw E. MCTest: A challenge dataset for the open-domain machine comprehension of text. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Washington, USA: 2013. 193–203 [19] Kocisk'y T, Schwarz J, Blunsom P, Dyer C, Hermann K M, Melis G. The narrativeqa reading comprehension challenge. Transactions of the Association for Computational Linguistics, 2018, 6: 317−328 doi: 10.1162/tacl_a_00023 [20] Yang B, Mitchell T M. Leveraging knowledge bases in LSTMs for improving machine reading. In: Proceedings of the Conference of the Association for Computational Linguistics. Vancou-ver, Canada: 2017. 1436–1446 [21] Zhang Z, Wu Y, Zhou J, Duan S, Zhao H, Wang R. SG-Net: Syntax-guided machine reading comprehension. In: Proceedings of the Conference on Artificial Intelligence. New York, USA: 2020. 9636–9643 [22] Kao K Y, Chang C H. Applying information extraction to storybook question and answer generation. In: Proceedings of the Conference on Computational Linguistics and Speech Process-ing. Taipei, China: 2022. 289–298 [23] Lu J, Sun X, Li B, Bo L, Zhang T. BEAT: Considering question types for bug question answering via templates. Knowledge-Based Systems, 2021, 225: Article No. 107098 [24] Yang C, Jiang M, Jiang B, Zhou W, Li K. Co-attention network with question type for visual question answering. IEEE Access, 2019, (7): 40771−40781 doi: 10.1109/ACCESS.2019.2908035 [25] Wu Z, Xiong Y, Yu S X, Lin D. Unsupervised feature learning via non-parametric instance discrimination. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE Computer Society, 2018. 3733– 3742 [26] Chen X, He K. Exploring simple siamese representation learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Virtual Event: IEEE, 2021. 15750– 15758 [27] Yang J, Duan J, Tran S, Xu Y, Chanda S, Li Q C, et al. Vision-language pre-training with triple contrastive learning. arXiv preprint arXiv: 2202.10401, 2022. [28] Chopra S, Hadsell R, LeCun Y. Learning a similarity metric discriminatively, with application to face verification. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Diego, USA: IEEE Computer Society, 2005. 539–546 [29] Weinberger K Q, Saul L K. Distance metric learning for large margin nearest neighbor classification. Journal of Machine Lear-ning Research, 2009, 10(2): 207−244 doi: 10.5555/1577069.1577078 [30] Gao T, Yao X, Chen D. SimCSE: Simple contrastive learning of sentence embeddings. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Virtual Event: 2021. 6894–6910 [31] Giorgi J M, Nitski O, Wang B, Bader G D. DeCLUTR: Deep contrastive learning for unsupervised textual representations. In: Proceedings of the Conference of the Association for Computational Linguistics. Virtual Event: 2021. 879–895 [32] Khosla P, Teterwak P, Wang C, Sarna A, Tian Y, Krishnan D, et al. Supervised contrastive learning. In: Proceedings of the Neural Information Processing Systems. Virtual Event: 2020. 18661–18673 [33] Li S, Hu X, Lin L, Wen L. Pair-level supervised contrastive learning for natural language inference. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. Virtual Event: IEEE, 2022. 8237–8241 [34] Devlin J, Chang M, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of North American Chapter of the Association for Computational Linguistics. Minneapolis, USA: 2019. 4171– 4186 [35] Papineni K, Roukos S, Ward T, Zhu W. BLEU: A method for automatic evaluation of machine translation. In: Proceedings of the Conference of the Association for Computational Linguistics. Philadelphia, USA: 2002. 311–318 [36] Lin C Y. ROUGE: A package for automatic evaluation of summaries. Text Summarization Branches Out, 2004: 74−81 [37] Banerjee S, Lavie A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In: Proceedings of the Conference of the Association for Computational Linguistics. Ann Arbor, USA: 2005. 65–72 [38] Lewis M, Liu Y, Goyal N, Ghazvininejad M, Mohamed A, Levy O, et al. BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In: Proceedings of the Association for Computational Linguistics. Virtual Event: 2020. 7871–7880 [39] Loshchilov I, Hutter F. Fixing weight decay regularization in adam. arXiv preprint arXiv: 1711.05101, 2017. [40] Cho K, Merrienboer B, Bengio Y, Gulcehre C, Bahdanau D, Bougares F, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of the Empirical Methods in Natural Language Processing. Doha, Qatar: 2014. 1724–1734 [41] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A, et al. Attention is all you need. In: Proceedings of the Neural Information Processing Systems. Long Beach, USA: 2017. 5998–6008 [42] Sanh V, Debut L, Chaumond J, Wolf T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv preprint arXiv: 1910.01108, 2019. [43] Mou X, Yang C, Yu M, Yao B, Guo X, Potdar S. Narrative question answering with cutting-edge open-domain QA techni-ques: A comprehensive study. Transactions of the Association for Computational Linguistics, 2021, 9: 1032−1046 doi: 10.1162/tacl_a_00411 -

下载:

下载: