
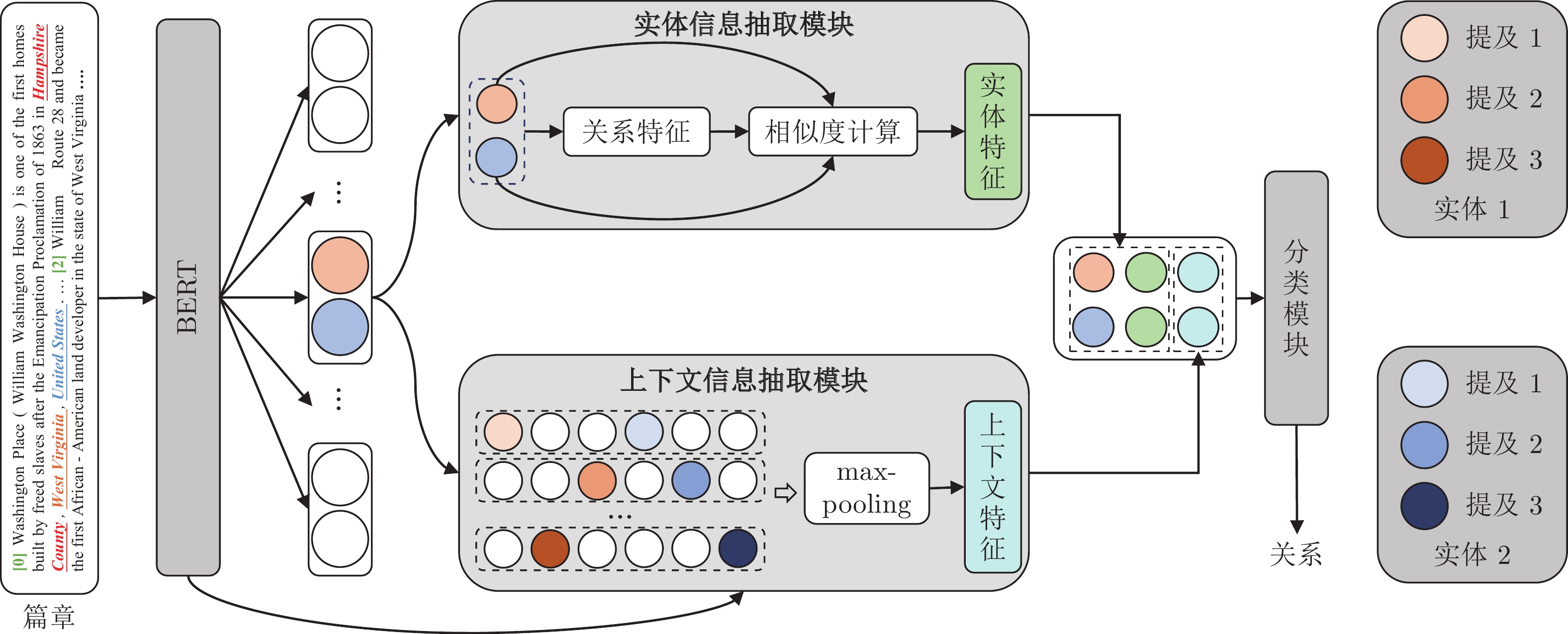

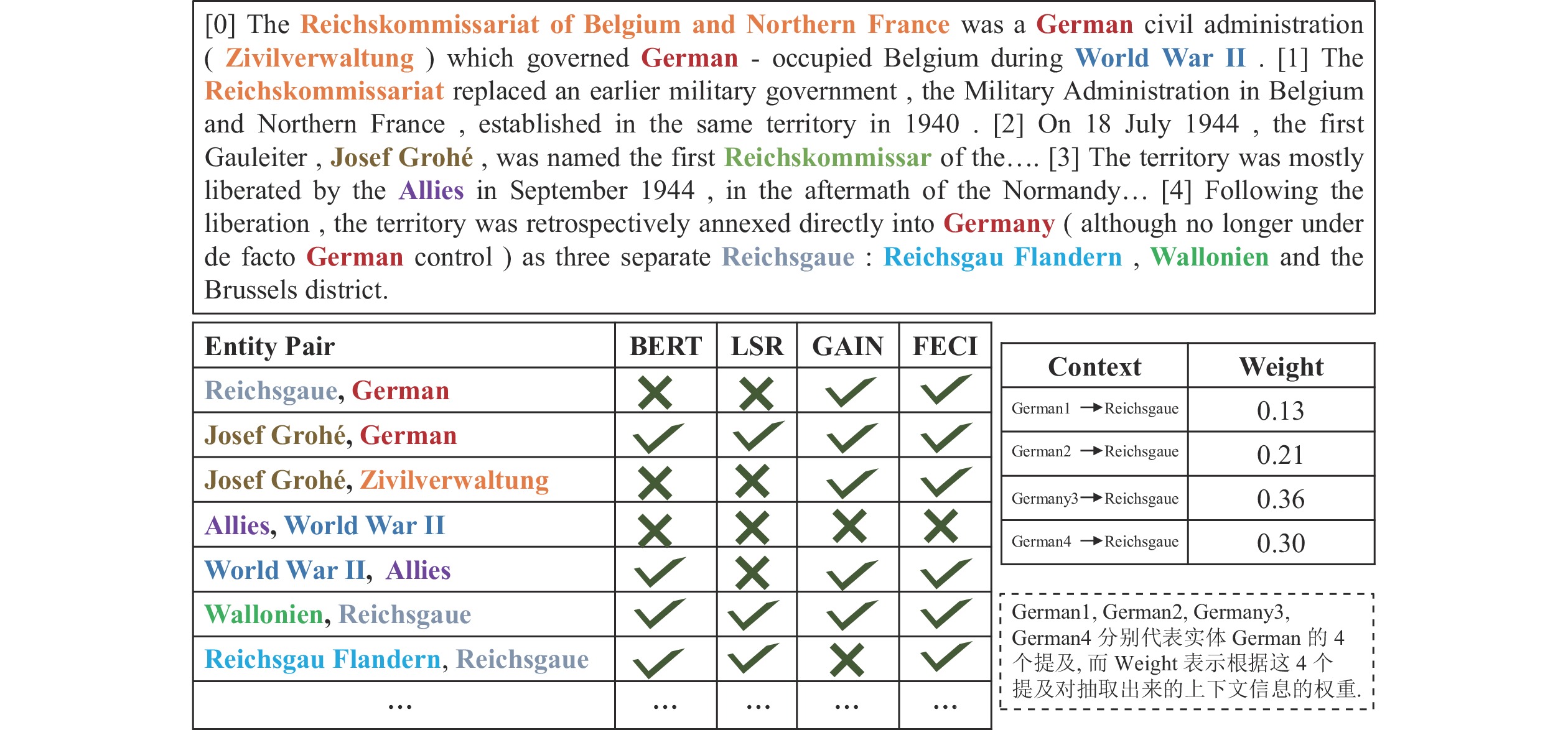

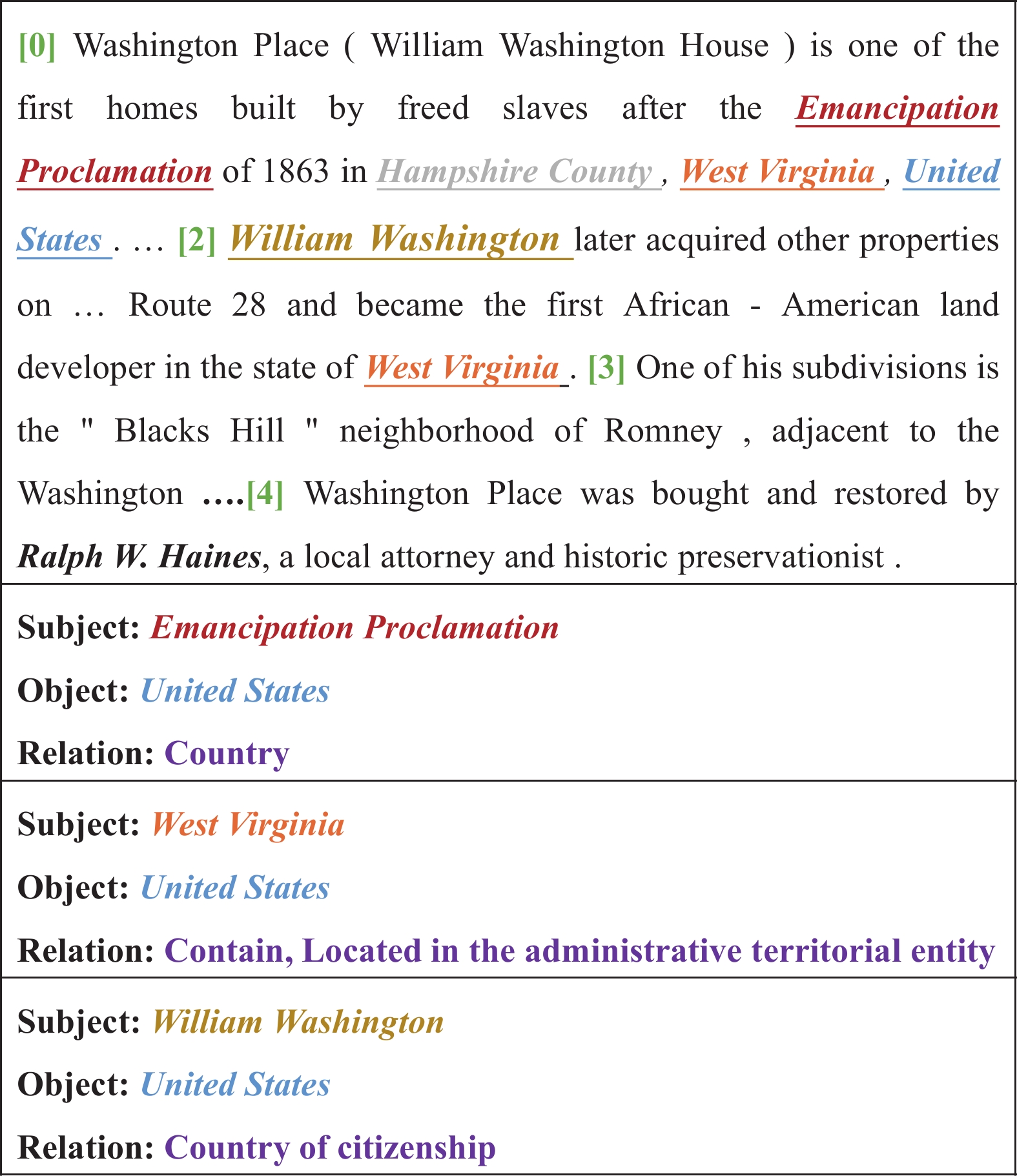

Abstract: Document-level relation extraction aims to identify the relations between entity pairs in a document. Compared with traditional sentence-level relation extraction, document-level relation extraction is closer to real-world applications, but it poses new challenges, such as cross-sentence reasoning over entity pairs and awareness of contextual information. In this paper, we propose a document-level relation extraction method that fuses entity and context information (FECI). It contains two modules: an entity information extraction module and a context information extraction module. The entity information extraction module automatically extracts, from the two entities, features that represent the relation between them. The context information extraction module extracts different contextual relation features from the document according to the positions of the entity pair's mentions. Experiments on three document-level relation extraction datasets show that the method achieves significant improvements.
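The abstract describes the two modules only at a high level. The following is a minimal sketch of one plausible realization, assuming (not taken from the paper) design choices in the style of ATLOP (Zhou et al., 2020): the entity module pools an entity's mention embeddings with logsumexp, and the context module weights document tokens by the product of the head and tail mentions' attention over them. The function names (`logsumexp_pool`, `mean_attention`, `pair_context`, `pair_features`), the toy data, and the plain concatenation used for fusion are all hypothetical illustrations, not FECI's actual layers.

```python
import math
from typing import List

Vector = List[float]


def logsumexp_pool(mentions: List[Vector]) -> Vector:
    """Entity embedding: a smooth maximum over the entity's mention embeddings."""
    pooled = []
    for d in range(len(mentions[0])):
        values = [m[d] for m in mentions]
        top = max(values)  # shift by the max for numerical stability
        pooled.append(top + math.log(sum(math.exp(v - top) for v in values)))
    return pooled


def mean_attention(rows: List[Vector]) -> Vector:
    """Average the token-attention distributions of an entity's mentions."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def pair_context(tokens: List[Vector], head_attn: List[Vector], tail_attn: List[Vector]) -> Vector:
    """Context vector built from tokens that both the head and the tail mentions attend to."""
    joint = [h * t for h, t in zip(mean_attention(head_attn), mean_attention(tail_attn))]
    total = sum(joint)
    # Fall back to uniform weights if the two attention maps never overlap.
    weights = [w / total for w in joint] if total > 0 else [1.0 / len(tokens)] * len(tokens)
    return [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(len(tokens[0]))]


def pair_features(head_mentions, tail_mentions, tokens, head_attn, tail_attn) -> Vector:
    """Hypothetical fusion: concatenate [head entity; tail entity; pair context]."""
    return (logsumexp_pool(head_mentions)
            + logsumexp_pool(tail_mentions)
            + pair_context(tokens, head_attn, tail_attn))


# Toy document with three tokens and 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
head_mentions = [[0.5, 0.1], [0.2, 0.4]]        # two mentions of the head entity
tail_mentions = [[0.3, 0.3]]                    # one mention of the tail entity
head_attn = [[0.6, 0.2, 0.2], [0.2, 0.2, 0.6]]  # each head mention's attention over tokens
tail_attn = [[0.1, 0.1, 0.8]]
features = pair_features(head_mentions, tail_mentions, tokens, head_attn, tail_attn)
print(len(features))  # 6: 2 (head) + 2 (tail) + 2 (context)
```

The product of the two attention maps is one simple way to make the context depend on where both entities' mentions occur: tokens attended to by only one entity receive little weight.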


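Table 1 Statistics of the datasets

| Statistic | DocRED | CDR | GDA |
| --- | --- | --- | --- |
| Training set (documents) | 3053 | 500 | 23353 |
| Development set (documents) | 1000 | 500 | 5839 |
| Test set (documents) | 1000 | 500 | 1000 |
| Relation types | 97 | 2 | 2 |
| Relations per document (avg.) | 19.5 | 7.6 | 5.4 |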

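Table 2 Hyper-parameters of the model

| Hyper-parameter | DocRED | CDR | GDA |
| --- | --- | --- | --- |
| Batch size | 4 | 4 | 4 |
| Training epochs | 30 | 30 | 10 |
| Learning rate (encoder) | $5\times 10^{-5}$ | $5\times 10^{-5}$ | $5\times 10^{-5}$ |
| Learning rate (classifier) | $1\times 10^{-4}$ | $1\times 10^{-4}$ | $1\times 10^{-4}$ |
| Group size | 64 | 64 | 64 |
| Dropout | 0.1 | 0.1 | 0.1 |
| Gradient clipping | 1.0 | 1.0 | 1.0 |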
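Table 2 lists a group size of 64 without defining it. A common reading, which we assume here rather than take from the paper, is the grouped bilinear classifier of ATLOP (Zhou et al., 2020): the head and tail vectors of hidden size $d$ are split into $d/k$ groups of size $k$, and a bilinear interaction is computed only within each group. This yields $d \cdot k$ features instead of $d^2$; for example, if $d = 768$ (the hidden size of BERT-base) and $k = 64$, that is 49152 features instead of 589824. The sketch below is a hypothetical pure-Python illustration; `grouped_bilinear_features` and `relation_logits` are illustrative names.

```python
from typing import List

Vector = List[float]


def grouped_bilinear_features(head: Vector, tail: Vector, group_size: int) -> Vector:
    """Outer product of head and tail restricted to matching groups of size group_size.

    With hidden size d and group size k this returns (d // k) * k * k = d * k features.
    """
    if len(head) != len(tail) or len(head) % group_size != 0:
        raise ValueError("head and tail must have equal size divisible by group_size")
    feats: Vector = []
    for start in range(0, len(head), group_size):
        h_group = head[start:start + group_size]
        t_group = tail[start:start + group_size]
        for h in h_group:          # every pairwise product inside this group
            for t in t_group:
                feats.append(h * t)
    return feats


def relation_logits(feats: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Linear layer mapping the grouped bilinear features to one score per relation."""
    return [sum(w * f for w, f in zip(row, feats)) + b for row, b in zip(weights, bias)]


# Toy example: d = 4, group size k = 2, so 4 * 2 = 8 features.
feats = grouped_bilinear_features([1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 1.0, -1.0], group_size=2)
print(feats)  # [0.5, 0.5, 1.0, 1.0, 3.0, -3.0, 4.0, -4.0]
print(relation_logits(feats, weights=[[1.0] * 8], bias=[0.0]))  # [3.0]
```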

Table 3 Experimental results on the DocRED development and test sets (%)

| Model | Dev Ign F1 | Dev F1 | Test Ign F1 | Test F1 |
| --- | --- | --- | --- | --- |
| CNN | 41.58 | 43.45 | 40.33 | 42.26 |
| LSTM | 48.44 | 50.68 | 47.71 | 50.07 |
| Bi-LSTM | 48.87 | 50.94 | 48.78 | 51.06 |
| Context-Aware | 48.94 | 51.09 | 48.40 | 50.70 |
| HIN-GloVe | 51.06 | 52.95 | 51.15 | 53.30 |
| GAT-GloVe | 45.17 | 51.44 | 47.36 | 49.51 |
| GCNN-GloVe | 46.22 | 51.52 | 49.59 | 51.62 |
| EoG-GloVe | 45.94 | 52.15 | 49.48 | 51.82 |
| AGGCN-GloVe | 46.29 | 52.47 | 48.89 | 51.45 |
| LSR-GloVe | 48.82 | 55.17 | 52.15 | 54.18 |
| BERT-RE$_{\rm BASE}$ | - | 54.16 | - | 53.20 |
| RoBERTa$_{\rm BASE}$ | 53.85 | 56.05 | 53.52 | 55.77 |
| BERT-Two-Step$_{\rm BASE}$ | - | 54.42 | - | 53.92 |
| HIN-BERT$_{\rm BASE}$ | 54.29 | 56.31 | 53.70 | 55.60 |
| CorefBERT$_{\rm BASE}$ | 55.32 | 57.51 | 54.54 | 56.96 |
| LSR-BERT$_{\rm BASE}$ | 52.43 | 59.00 | 56.97 | 59.05 |
| BERT-E$_{\rm BASE}$ | 56.51 | 58.52 | - | - |
| GAIN$_{\rm BASE}$ | 59.14 | 61.22 | 59.00 | 61.24 |
| FECI$_{\rm BASE}$ | 59.74 | 61.38 | 59.81 | 61.22 |
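Table 3 reports both F1 and Ign F1. In DocRED, Ign F1 is the F1 score computed while ignoring relational facts that are shared between the training set and the development or test set. The sketch below shows how the two scores relate, following our understanding of the official DocRED evaluation script: correct predictions whose fact also appears in the training set are removed from both the numerator and the denominator of precision, while recall is kept as in plain F1. As a simplifying assumption, facts are plain `(head, tail, relation)` tuples, whereas the official script matches training facts by entity mention names; `docred_scores` and the toy data are hypothetical.

```python
from typing import Set, Tuple

Fact = Tuple[str, str, str]  # (head entity, tail entity, relation)


def f1(p: float, r: float) -> float:
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


def docred_scores(pred: Set[Fact], gold: Set[Fact], train_facts: Set[Fact]) -> Tuple[float, float]:
    """Return (F1, Ign F1) for a set of predicted relational facts."""
    correct = pred & gold
    # Correct predictions that could simply have been memorized from training data.
    in_train = {fact for fact in correct if fact in train_facts}
    recall = len(correct) / len(gold) if gold else 0.0
    precision = len(correct) / len(pred) if pred else 0.0
    ign_denominator = len(pred) - len(in_train)
    ign_precision = (len(correct) - len(in_train)) / ign_denominator if ign_denominator else 0.0
    return f1(precision, recall), f1(ign_precision, recall)


gold = {("A", "B", "r1"), ("A", "C", "r2"), ("B", "C", "r3"), ("C", "D", "r1")}
pred = {("A", "B", "r1"), ("A", "C", "r2"), ("B", "D", "r1")}
train_facts = {("A", "B", "r1")}
f1_score, ign_f1 = docred_scores(pred, gold, train_facts)
print(round(f1_score, 4), round(ign_f1, 4))  # 0.5714 0.5
```

Because a correct prediction that also occurs in the training set is discounted, Ign F1 is normally lower than F1, which matches the pattern in Table 3.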

Table 4 Test-set F1 scores on the CDR and GDA datasets (%)

| Model | CDR | GDA |
| --- | --- | --- |
| BRAN | 62.1 | - |
| CNN | 62.3 | - |
| EoG | 63.6 | 81.5 |
| LSR-BERT | 64.8 | 82.2 |
| SciBERT$_{\rm BASE}$ | 65.1 | 82.5 |
| SciBERT-E$_{\rm BASE}$ | 65.9 | 83.3 |
| FECI$_{\rm BASE}$ | 69.2 | 83.7 |

Table 5 Ablation study results of FECI$_{\rm BASE}$ on the development set

| Model | Dev Ign F1 (%) | Dev F1 (%) | Params P (M) | Training time T (s) |
| --- | --- | --- | --- | --- |
| FECI$_{\rm BASE}$ | 59.74 | 61.38 | 133.4 | 2962.4 |
| w/o Entity | 58.16 | 60.07 | 132.2 | 2831.7 |
| w/o Context | 58.67 | 60.89 | 130.5 | 482.3 |
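Reading Table 5 as differences from the full FECI$_{\rm BASE}$ model makes the trade-off explicit. The figures below are simple arithmetic on the reported numbers (F1 drops in percentage points; $T$ and $P$ taken from the table's time and parameter columns):

$$
\begin{aligned}
\Delta F_1^{\text{w/o Entity}} &= 61.38 - 60.07 = 1.31, &\qquad \Delta\,\text{Ign}\,F_1^{\text{w/o Entity}} &= 59.74 - 58.16 = 1.58,\\
\Delta F_1^{\text{w/o Context}} &= 61.38 - 60.89 = 0.49, &\qquad \Delta\,\text{Ign}\,F_1^{\text{w/o Context}} &= 59.74 - 58.67 = 1.07,\\
\frac{T_{\text{FECI}}}{T_{\text{w/o Context}}} &= \frac{2962.4}{482.3} \approx 6.14, &\qquad P_{\text{FECI}} - P_{\text{w/o Context}} &= 133.4 - 130.5 = 2.9\ \text{M}.
\end{aligned}
$$

Removing the entity module costs more F1 than removing the context module, while the context module adds only about 2.9 M parameters but accounts for most of the time: the full model takes roughly 6.1 times as long as the variant without it.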

Table 6 Results of FECI$_{\rm BASE}$ with noisy entities and noisy context on the development set (%)

| Model | Dev Ign F1 | Dev F1 |
| --- | --- | --- |
| FECI$_{\rm BASE}$ | 59.74 | 61.38 |
| Head entity | 58.42 | 60.14 |
| Tail entity | 57.97 | 60.08 |
| Entity pair | 58.91 | 60.85 |
| Tradition | 57.42 | 59.72 |
| Co-occurrence | 58.27 | 61.01 |
| Non-co-occurrence | 56.72 | 58.86 |

Table 7 Results of FECI$_{\rm BASE}$ with different context information on the development set (%)

| Model | Dev Ign F1 | Dev F1 |
| --- | --- | --- |
| FECI$_{\rm BASE}$ | 59.74 | 61.38 |
| Random | 58.47 | 60.61 |
| Mean | 59.56 | 60.94 |
| Tradition | 58.19 | 60.06 |

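Table 8 Efficiency of different methods on the development set

| Model | Params P (M) | Training time (s) | Decoding time (s) |
| --- | --- | --- | --- |
| LSR-BERT$_{\rm BASE}$ | 112.1 | 282.9 | 38.8 |
| GAIN$_{\rm BASE}$ | 217.0 | 2271.6 | 817.2 |
| FECI$_{\rm BASE}$ | 133.4 | 2962.4 | 829.0 |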

