
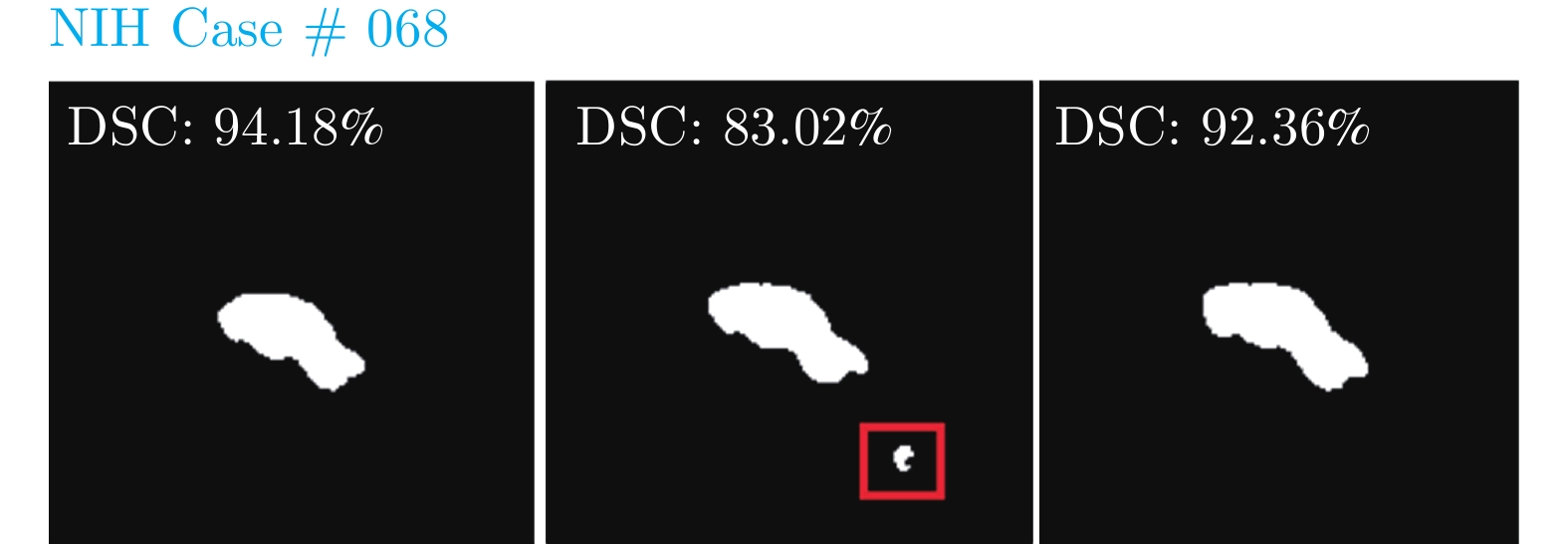

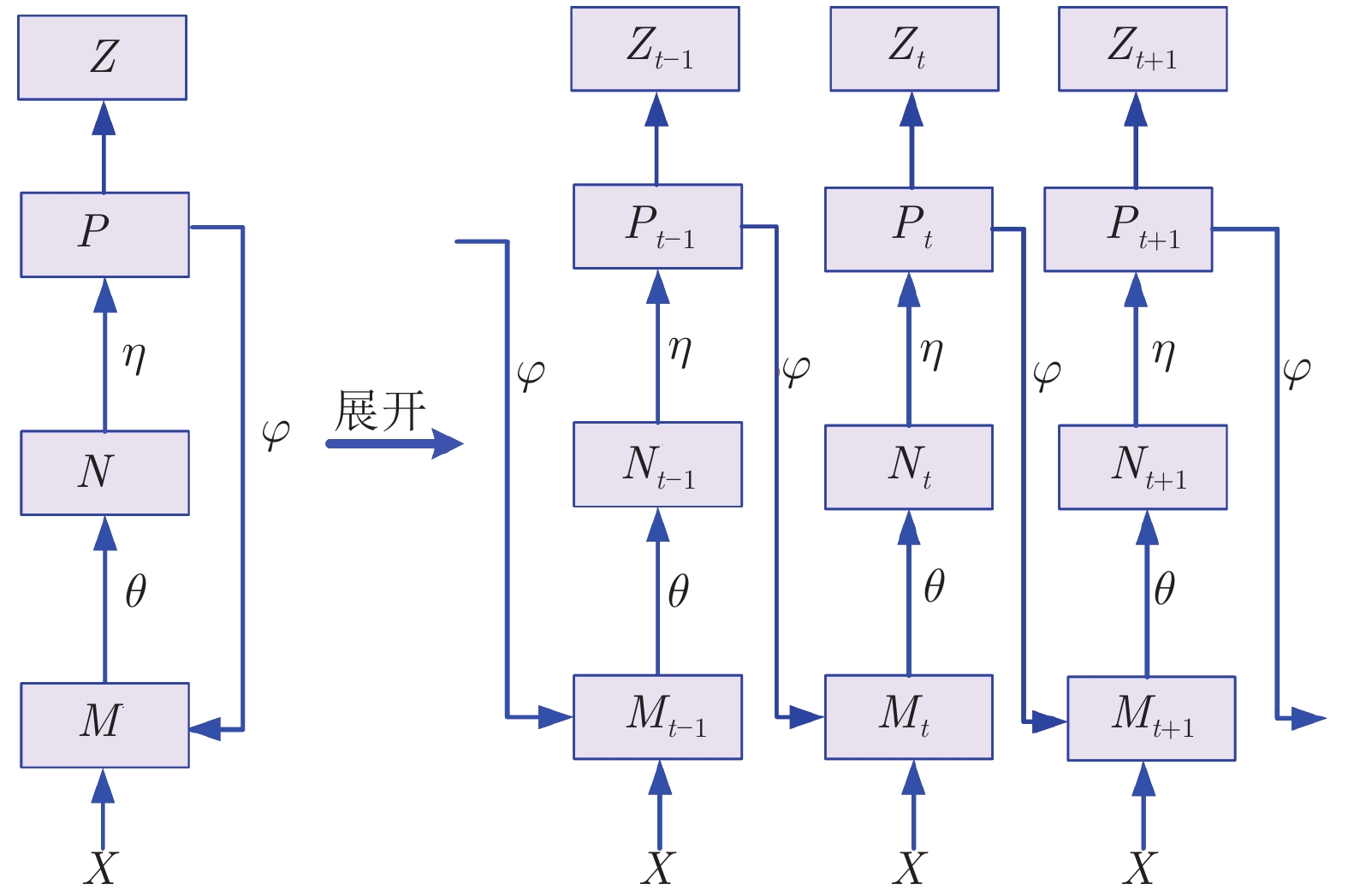

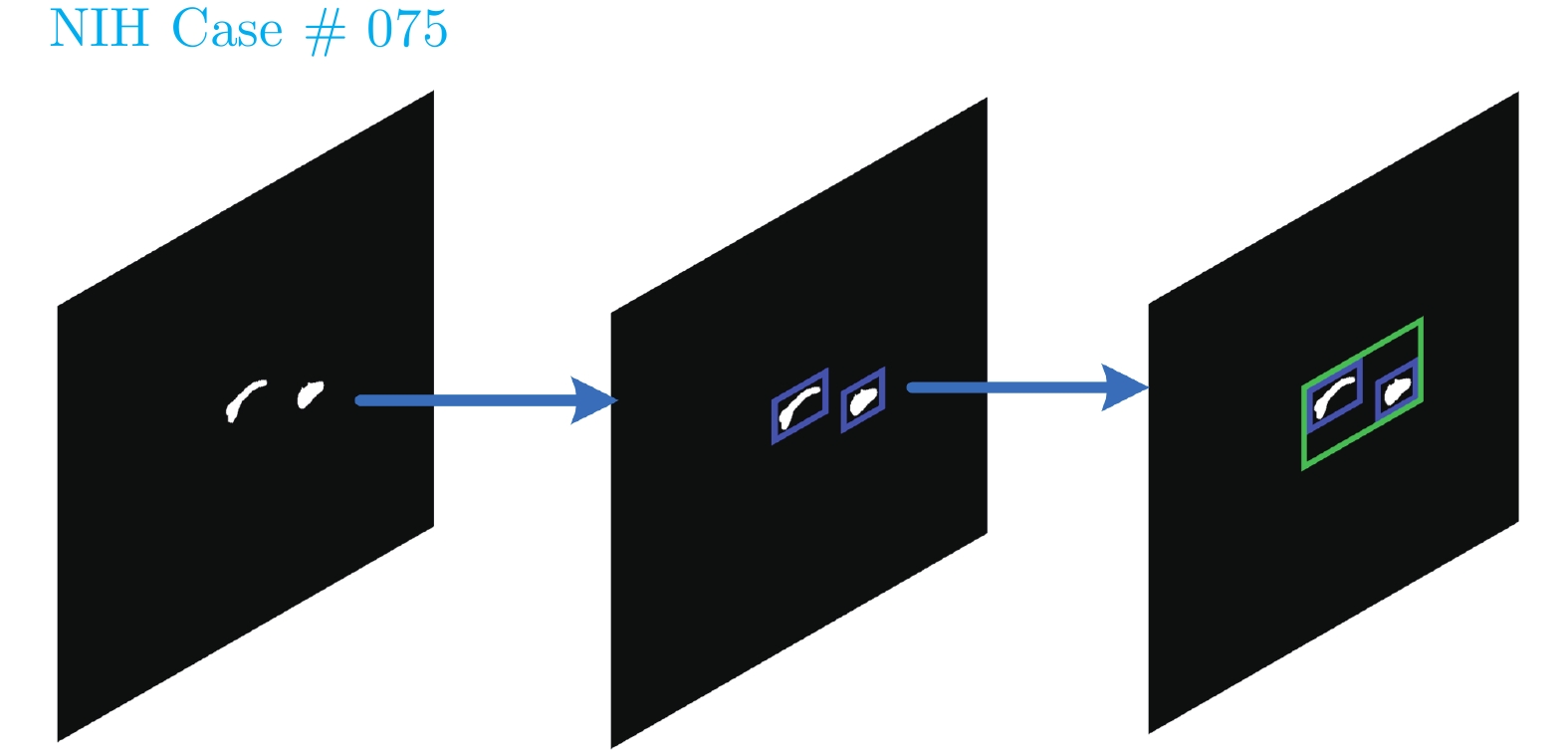

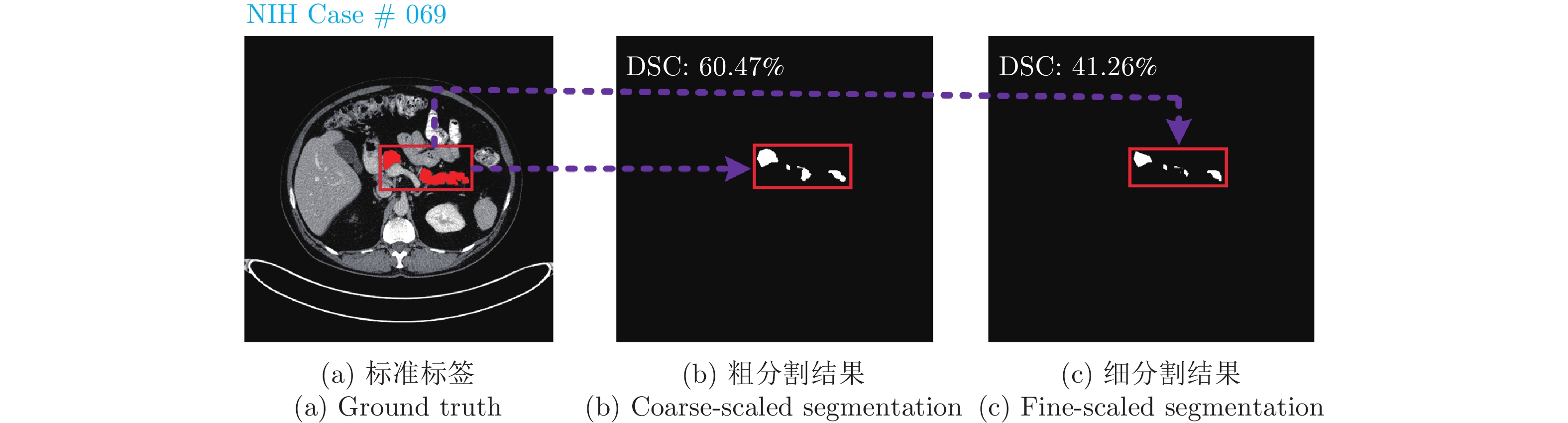

Abstract: Accurate segmentation of the pancreas is essential for the identification and analysis of pancreatic cancer. Coarse-to-fine methods use the location of the first-stage coarse segmentation mask to shrink the input of the second-stage fine segmentation network. Although this greatly improves segmentation accuracy, such methods exploit context information poorly in two respects: 1) The coarse and fine stages are trained separately, so the fine stage receives no information from the coarse-stage mask. This blocks the flow of context information between stages, and in some cases the fine-stage result is no more accurate than the coarse-stage result. 2) In both stages, adjacent predicted masks within a single batch do not supervise each other, so inter-slice context information is lost and the risk of false segmentation increases. To address these problems, a pancreas segmentation method based on a recurrent saliency calibration network is proposed. The pancreas mask output by the previous stage is recurrently used as a spatial weight on the input of the current stage, and the two stages are trained jointly, so that context information between stages is used effectively. In addition, a convolutional self-attention calibration module is proposed that performs cross-order mutual supervision of inter-slice context among the predicted pancreas masks, which markedly reduces false segmentation in adjacent slices. The proposed method is validated on public datasets, and the experimental results show that it reduces false segmentation while improving the average segmentation accuracy.
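The recurrent use of the previous stage's mask as a spatial weight can be sketched as an inference loop. This is a minimal NumPy illustration, not the authors' implementation: `coarse_net`, `fine_net`, and `toy_net` are hypothetical stand-ins for the segmentation networks, the weighting form `x * (1 + p)` is an assumption (the abstract only says the mask acts as a spatial weight on the current stage's input), and the stopping tolerance `tol` is illustrative. Joint training, which would backpropagate through all iterations, is not shown.

```python
import numpy as np
from typing import Callable, List


def saliency_weight(image: np.ndarray, prob: np.ndarray) -> np.ndarray:
    """Reweight the input with the previous probability map (assumed form: x * (1 + p))."""
    return image * (1.0 + prob)


def recurrent_coarse_to_fine(
    image: np.ndarray,
    coarse_net: Callable[[np.ndarray], np.ndarray],
    fine_net: Callable[[np.ndarray], np.ndarray],
    max_iters: int = 6,
    tol: float = 1e-3,
) -> List[np.ndarray]:
    """Return the probability maps of iteration 0 (coarse) and each refinement."""
    probs = [coarse_net(image)]                  # iteration 0: coarse segmentation
    for _ in range(max_iters):
        weighted = saliency_weight(image, probs[-1])
        new_prob = fine_net(weighted)            # refine on the reweighted input
        change = float(np.mean(np.abs(new_prob - probs[-1])))
        probs.append(new_prob)
        if change < tol:                         # stop once the map has settled
            break
    return probs


def toy_net(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a CNN: a per-pixel sigmoid on intensity."""
    return 1.0 / (1.0 + np.exp(-10.0 * (x - 0.5)))


rng = np.random.default_rng(0)
image = rng.random((64, 64))                     # fake CT slice with values in [0, 1)
probs = recurrent_coarse_to_fine(image, toy_net, toy_net)
print(f"{len(probs) - 1} refinement iteration(s); final foreground fraction "
      f"{(probs[-1] > 0.5).mean():.3f}")
```

Iteration 0 plays the role of the coarse segmentation; Table 2 reports results up to iteration 6, which is why `max_iters` defaults to 6 in this sketch.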

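The abstract describes the convolutional self-attention calibration module only at a high level: cross-order mutual supervision between the predicted masks of adjacent slices. The NumPy sketch below shows one plausible reading under assumptions that are ours, not the paper's: queries, keys, and values come from 1x1 convolutions (`conv1x1`), each slice is treated as one token, and every slice attends to every other slice in the window, so information flows in both slice directions at once instead of step by step along the sequence as in a recurrent CLSTM. All function and weight names are hypothetical.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution. x: (T, C_in, H, W); w: (C_out, C_in) -> (T, C_out, H, W)."""
    return np.einsum("oc,tchw->tohw", w, x)


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)      # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def slice_self_attention(feats, wq, wk, wv):
    """Calibrate each slice with attention over all T adjacent slices.

    feats: (T, C, H, W) features (or mask logits) of T adjacent slices.
    wq, wk: (D, C) query/key projections; wv: (C, C) value projection.
    Returns the calibrated features (same shape) and the (T, T) attention matrix.
    """
    t = feats.shape[0]
    q = conv1x1(feats, wq).reshape(t, -1)        # one query vector per slice
    k = conv1x1(feats, wk).reshape(t, -1)        # one key vector per slice
    v = conv1x1(feats, wv)                       # values keep the spatial layout
    scores = q @ k.T / np.sqrt(q.shape[1])       # (T, T) slice-to-slice similarity
    attn = softmax(scores, axis=1)               # row i: how much slice i draws on each slice
    calibrated = feats + np.einsum("ts,schw->tchw", attn, v)  # residual update
    return calibrated, attn


rng = np.random.default_rng(0)
T, C, H, W = 3, 4, 16, 16                        # e.g. 3 adjacent input slices
feats = rng.standard_normal((T, C, H, W))
wq = 0.1 * rng.standard_normal((2, C))
wk = 0.1 * rng.standard_normal((2, C))
wv = 0.1 * rng.standard_normal((C, C))
out, attn = slice_self_attention(feats, wq, wk, wv)
print(np.round(attn, 3))
```

In this sketch the window size `T` would correspond to the number of network input slices compared in Tables 7 and 8 (3, 5, or 7); how the paper actually arranges those slices is not stated in this excerpt.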

Table 1 Segmentation results of coarse-to-fine separate training, joint training, and recurrent saliency joint training

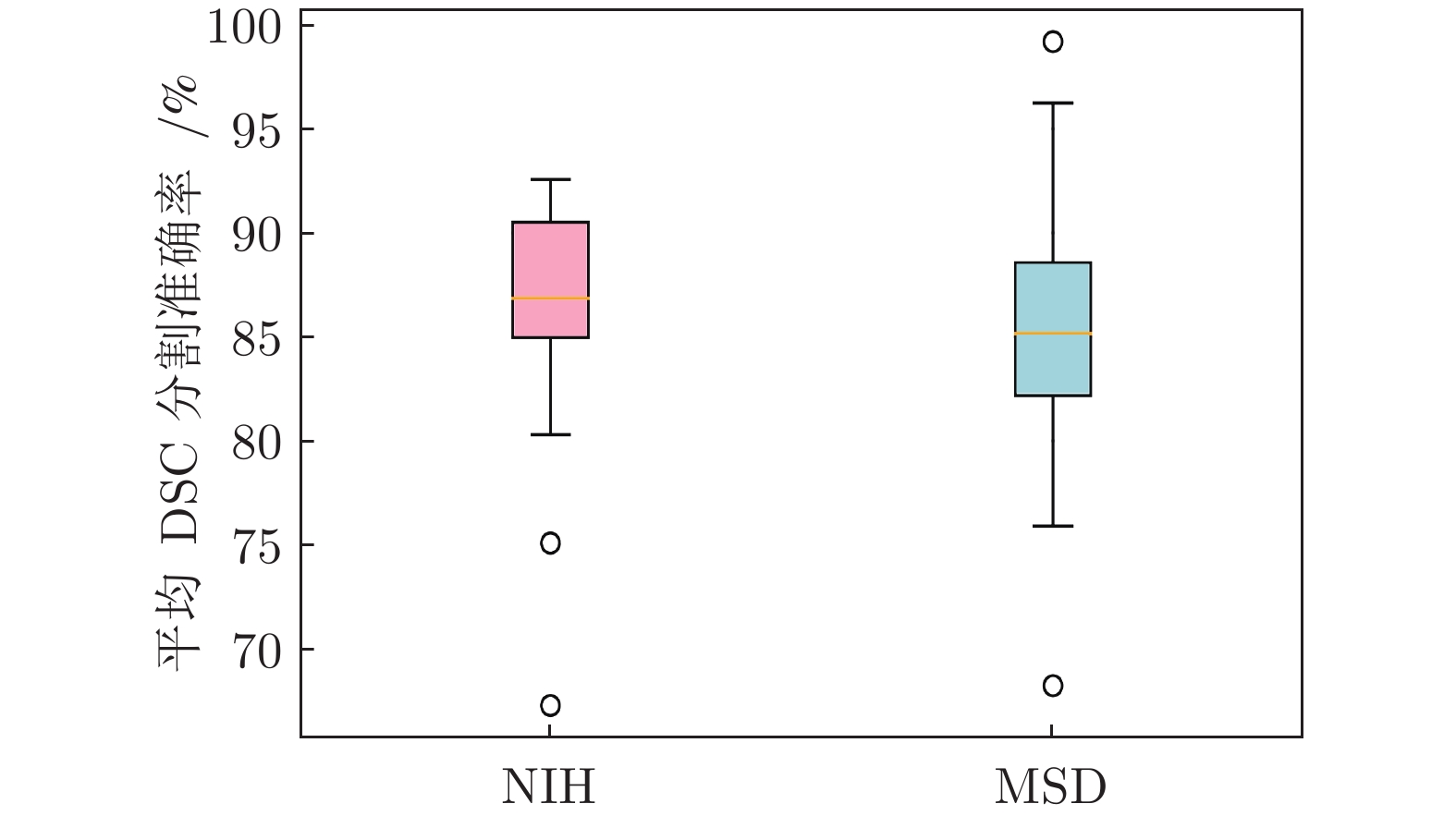

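| Method | Mean DSC ± Std (%), NIH | Mean DSC ± Std (%), MSD | Max DSC (%), NIH | Max DSC (%), MSD | Min DSC (%), NIH | Min DSC (%), MSD |
|---|---|---|---|---|---|---|
| Coarse-to-fine, separate training | $81.96 \pm 5.79$ | $78.92 \pm 9.61$ | 89.58 | 89.91 | 48.39 | 51.23 |
| Coarse-to-fine, joint training | $83.08 \pm 5.47$ | $80.80 \pm 8.79$ | 90.58 | 91.13 | 49.94 | 52.79 |
| Recurrent saliency network, joint training | $85.56 \pm 4.79$ | $83.24 \pm 5.93$ | 91.14 | 92.80 | 62.82 | 64.47 |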

Table 2 Test results of the recurrent saliency network

| Iteration | Mean DSC ± Std (%), NIH | Mean DSC ± Std (%), MSD | Max DSC (%), NIH | Max DSC (%), MSD | Min DSC (%), NIH | Min DSC (%), MSD |
|---|---|---|---|---|---|---|
| 0 (coarse segmentation) | $76.81 \pm 9.68$ | $73.46 \pm 11.73$ | 87.94 | 88.67 | 40.12 | 47.76 |
| 1 | $84.89 \pm 5.14$ | $81.67 \pm 8.05$ | 91.02 | 91.89 | 50.36 | 52.90 |
| 2 | $83.34 \pm 5.07$ | $82.23 \pm 7.57$ | 90.96 | 91.94 | 53.73 | 56.81 |
| 3 | $85.63 \pm 4.96$ | $82.78 \pm 6.83$ | 91.08 | 92.32 | 57.96 | 58.04 |
| 4 | $85.79 \pm 4.83$ | $82.94 \pm 6.46$ | 91.15 | 92.56 | 62.97 | 63.73 |
| 5 | $85.82 \pm 4.82$ | $83.15 \pm 6.04$ | 91.20 | 92.77 | 62.85 | 63.99 |
| 6 | $85.86 \pm 4.79$ | $83.24 \pm 5.93$ | 91.14 | 92.80 | 62.82 | 64.47 |

Table 3 Results with and without the calibration module

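| Method | Mean DSC ± Std (%), NIH | Mean DSC ± Std (%), MSD | Max DSC (%), NIH | Max DSC (%), MSD | Min DSC (%), NIH | Min DSC (%), MSD |
|---|---|---|---|---|---|---|
| Coarse-to-fine joint training, without calibration module | $83.08 \pm 5.47$ | $80.80 \pm 8.79$ | 90.58 | 91.13 | 49.94 | 52.79 |
| Coarse-to-fine joint training, with calibration module | $84.72 \pm 5.07$ | $82.09 \pm 7.91$ | 90.98 | 92.90 | 50.27 | 53.35 |
| Recurrent saliency network, without calibration module | $85.86 \pm 4.79$ | $83.24 \pm 5.93$ | 91.14 | 92.80 | 62.82 | 64.47 |
| Recurrent saliency network, with calibration module | $87.11 \pm 4.02$ | $85.13 \pm 5.17$ | 92.57 | 94.48 | 67.30 | 68.24 |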

Table 4 Comparison of CLSTM-based and self-attention-based calibration modules for pancreas segmentation

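| Method | Mean DSC ± Std (%), NIH | Mean DSC ± Std (%), MSD | Max DSC (%), NIH | Max DSC (%), MSD | Min DSC (%), NIH | Min DSC (%), MSD |
|---|---|---|---|---|---|---|
| CLSTM-based calibration module | $86.13 \pm 4.54$ | $84.21 \pm 5.80$ | 91.20 | 93.47 | 63.18 | 64.76 |
| ConvGRU-based calibration module | $86.34 \pm 4.21$ | $84.41 \pm 5.62$ | 92.31 | 94.05 | 65.73 | 66.02 |
| TrajGRU-based calibration module | $86.96 \pm 4.14$ | $84.87 \pm 5.22$ | 92.49 | 94.32 | 67.20 | 67.93 |
| Convolutional self-attention calibration module | $87.11 \pm 4.02$ | $85.13 \pm 5.17$ | 92.57 | 94.48 | 67.30 | 68.24 |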

Table 5 Comparison of different segmentation methods on the NIH dataset (“—” indicates that the value is not reported in the original paper)

| Method | Dimension | Mean DSC ± Std (%) | Max DSC (%) | Min DSC (%) |
|---|---|---|---|---|
| Ref. [22] | 2D | $71.80 \pm 10.70$ | 86.90 | 25.00 |
| Ref. [23] | 2D | $81.27 \pm 6.27$ | 88.96 | 50.69 |
| Ref. [36] | 2D | $82.40 \pm 6.70$ | 90.10 | 60.00 |
| Ref. [3] | 2D | $82.37 \pm 5.68$ | 90.85 | 62.43 |
| Ref. [37] | 3D | $84.59 \pm 4.86$ | 91.45 | 69.62 |
| Ref. [10] | 3D | $85.99 \pm 4.51$ | 91.20 | 57.20 |
| Ref. [5] | 3D | $85.93 \pm 3.42$ | 91.48 | 75.01 |
| Ref. [29] | 3D | $82.47 \pm 5.50$ | 91.17 | 62.36 |
| Ref. [20] | 2D | $82.87 \pm 1.00$ | 87.67 | 81.18 |
| Ref. [19] | 2D | $84.90 \pm$ — | 91.46 | 61.82 |
| Ref. [26] | 2D | $85.35 \pm 4.13$ | 91.05 | 71.36 |
| Ref. [21] | 2D | $85.40 \pm 1.60$ | — | — |
| Ref. [30] | 3D | $86.19 \pm$ — | 91.90 | 69.17 |
| Proposed method | 2D | $87.11 \pm 4.02$ | 92.57 | 67.30 |

Table 6 Comparison of different segmentation methods on the MSD dataset

Table 7 Segmentation results with different numbers of network input slices on the NIH dataset

| Input slices | Dimension | Mean DSC ± Std (%) | Max DSC (%) | Min DSC (%) |
|---|---|---|---|---|
| 3 | 2D | $87.11 \pm 4.02$ | 92.57 | 67.30 |
| 5 | 2D | $87.53 \pm 3.74$ | 92.69 | 69.32 |
| 7 | 2D | $87.96 \pm 3.25$ | 92.94 | 71.91 |

Table 8 Segmentation results with different numbers of network input slices on the MSD dataset

| Input slices | Dimension | Mean DSC ± Std (%) | Max DSC (%) | Min DSC (%) |
|---|---|---|---|---|
| 3 | 2D | $85.13 \pm 5.17$ | 94.48 | 68.24 |
| 5 | 2D | $85.86 \pm 5.01$ | 94.75 | 70.31 |
| 7 | 2D | $86.29 \pm 4.80$ | 95.01 | 73.07 |

Table 9 Comparison of the number of parameters of different segmentation methods

Table 10 Comparison of time consumption of different segmentation methods (“—” indicates that the value is not reported in the original paper)

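The DSC result tables above (Tables 1 to 8) summarize the Dice similarity coefficient over the test cases as mean ± standard deviation, maximum, and minimum. This excerpt does not define the metric, state whether it is computed per 3D volume or per slice, or name the standard-deviation estimator, so the sketch below assumes one binary mask or volume per test case and the population standard deviation (`ddof=0`). The helper names `dice_percent` and `summarize` are hypothetical.

```python
import numpy as np


def dice_percent(pred: np.ndarray, gt: np.ndarray) -> float:
    """DSC = 2|P ∩ G| / (|P| + |G|), in percent, for binary masks or volumes."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    denom = int(pred.sum()) + int(gt.sum())
    if denom == 0:
        return 100.0                             # both empty: counted as perfect (a convention)
    inter = int(np.logical_and(pred, gt).sum())
    return 200.0 * inter / denom


def summarize(scores) -> dict:
    """Mean, standard deviation (ddof=0, assumed), maximum and minimum over test cases."""
    s = np.asarray(scores, dtype=float)
    return {"mean": s.mean(), "std": s.std(), "max": s.max(), "min": s.min()}


# Two toy "cases": one partial overlap, one perfect match.
gt = np.zeros((4, 4), dtype=bool)
gt[:2, :3] = True                                # 6 foreground pixels
pred = np.zeros((4, 4), dtype=bool)
pred[:2, :2] = True                              # 4 pixels, all inside gt
scores = [dice_percent(pred, gt), dice_percent(gt, gt)]
stats = summarize(scores)
print(f"{stats['mean']:.2f} ± {stats['std']:.2f}, max {stats['max']:.2f}, min {stats['min']:.2f}")
# prints: 90.00 ± 10.00, max 100.00, min 80.00
```

Replacing `s.std()` with `s.std(ddof=1)` gives the sample standard deviation instead; which convention the paper uses is not stated here.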

[1] Siegel R, Miller K, Fuchs H, Jemal A. Cancer statistics, 2021. CA: A Cancer Journal for Clinicians, 2021, 71(1): 7−33. doi: 10.3322/caac.21654

[2] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 3431−3440

[3] Zhou Y, Xie L, Shen W, Wang Y, Fishman E K, Yuille A L. A fixed-point model for pancreas segmentation in abdominal CT scans. In: Proceedings of the 2017 International Conference on Medical Image Computing and Computer-Assisted Intervention. Quebec, Canada: Springer, 2017. 693−701

[4] Zhang Y, Wu J, Liu Y, Chen Y F, Chen W, Wu E X, et al. A deep learning framework for pancreas segmentation with multi-atlas registration and 3D level-set. Medical Image Analysis, 2021, 68(2): 101884−101889

[5] Wang W, Song Q, Feng R, Chen T, Chen J, Chen D Z, et al. A fully 3D cascaded framework for pancreas segmentation. In: Proceedings of the 2020 IEEE International Symposium on Biomedical Imaging (ISBI). Iowa City, USA: IEEE, 2020. 207−211

[6] Ma Chao, Liu Ya-Shu, Luo Gong-Ning, Wang Kuan-Quan. Combining concatenated random forests and active contour for the 3D MR images segmentation. Acta Automatica Sinica, 2019, 45(5): 1004−1014

[7] Shi X, Chen Z, Wang H, Yeung D Y, Wong W K, Woo W. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In: Proceedings of the 2015 Advances in Neural Information Processing Systems. Quebec, Canada: MIT, 2015. 802−810

[8] Yang Z, Zhang L, Zhang M, Feng J, Wu Z, Ren F, et al. Pancreas segmentation in abdominal CT scans using inter-/intra-slice contextual information with a cascade neural network. In: Proceedings of the 2019 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). Berlin, Germany: IEEE, 2019. 5937−5940

[9] Li H, Li J, Lin X, Qian X. A model-driven stack-based fully convolutional network for pancreas segmentation. In: Proceedings of the 5th International Conference on Communication, Image and Signal Processing (CCISP). Chengdu, China: IEEE, 2020. 288−293

[10] Zhao N, Tong N, Ruan D, Sheng K. Fully automated pancreas segmentation with two-stage 3D convolutional neural networks. In: Proceedings of the 2019 International Conference on Medical Image Computing and Computer-Assisted Intervention. Shenzhen, China: Springer, 2019. 201−209

[11] Ballas N, Yao L, Pal C, Courville A. Delving deeper into convolutional networks for learning video representations. arXiv preprint arXiv: 1511.06432, 2015

[12] Farag A, Lu L, Turkbey E, Liu J, Summers R M. A bottom-up approach for automatic pancreas segmentation in abdominal CT scans. In: Proceedings of the 2014 International Conference on Medical Image Computing and Computer-Assisted Intervention Workshop on Computational and Clinical Challenges in Abdominal Imaging. Cambridge, USA: Springer, 2014. 103−113

[13] Kitasaka T, Oda M, Nimura Y, Hayashi Y, Fujiwara M, Misawa K, et al. Structure specific atlas generation and its application to pancreas segmentation from contrasted abdominal CT volumes. In: Proceedings of the 2015 International Conference on Medical Image Computing and Computer-Assisted Intervention Workshop on Medical Computer Vision. Munich, Germany: Springer, 2015. 47−56

[14] Deng G, Wu Z, Wang C, Xu M, Zhong Y. CCANet: Class-constraint coarse-to-fine attentional deep network for subdecimeter aerial image semantic segmentation. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60(1): 1−20

[15] Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. In: Proceedings of the 2015 International Conference on Medical Image Computing and Computer-Assisted Intervention. Munich, Germany: Springer, 2015. 234−241

[16] Li Yang, Zhao Yu-Qian, Liao Miao, Liao Sheng-Hui, Yang Zhen. Automatic liver segmentation from CT volumes based on level set and shape descriptor. Acta Automatica Sinica, 2021, 47(2): 327−337

[17] Xia Ping, Shi Yu, Lei Bang-Jun, Gong Guo-Qiang, Hu Rong, Shi Dong-Xia. Ultrasound medical image segmentation based on hybrid probabilistic graphical model in complex-wavelet domain. Acta Automatica Sinica, 2021, 47(1): 185−196

[18] Feng Bao, Chen Ye-Hang, Liu Zhuang-Sheng, Li Zhi, Song Rong, Long Wan-Sheng. Segmentation of breast cancer on DCE-MRI images with MRF energy and fuzzy speed function. Acta Automatica Sinica, 2020, 46(6): 1188−1199

[19] Zhang D, Zhang J, Zhang Q, Han J, Zhang S, Han J. Automatic pancreas segmentation based on lightweight DCNN modules and spatial prior propagation. Pattern Recognition, 2021, 114(6): Article No. 107762

[20] Huang M L, Wu Z Y. Semantic segmentation of pancreatic medical images by using convolutional neural network. Biomedical Signal Processing and Control, 2022, 73(3): 103458−103470

[21] Liu Z, Su J, Wang R H, Jiang R, Song Y Q, Zhang D Y, et al. Pancreas co-segmentation based on dynamic ROI extraction and VGGU-Net. Expert Systems With Applications, 2022, 192(6): 116444−116453

[22] Roth H R, Lu L, Farag A, Shin H C, Liu J M, Turkbey E B, et al. DeepOrgan: Multi-level deep convolutional networks for automated pancreas segmentation. In: Proceedings of the 2015 International Conference on Medical Image Computing and Computer-Assisted Intervention. Munich, Germany: Springer, 2015. 556−564

[23] Roth H R, Lu L, Lay N, Harrison A P, Farag A, Sohn A, et al. Spatial aggregation of holistically-nested convolutional neural networks for automated pancreas localization and segmentation. Medical Image Analysis, 2018, 45(3): 94−107

[24] Karasawa K, Oda M, Kitasaka T, Misawa K, Fujiwara M, Chu C, et al. Multi-atlas pancreas segmentation: Atlas selection based on vessel structure. Medical Image Analysis, 2017, 39(5): 18−28

[25] Man Y, Huang Y, Feng J, Li X, Wu F. Deep Q learning driven CT pancreas segmentation with geometry-aware U-Net. IEEE Transactions on Medical Imaging, 2019, 38(8): 1971−1980. doi: 10.1109/TMI.2019.2911588

[26] Li J, Lin X, Che H, Li H, Qian X. Pancreas segmentation with probabilistic map guided bi-directional recurrent UNet. Physics in Medicine and Biology, 2021, 66(11): 115010−115026. doi: 10.1088/1361-6560/abfce3

[27] Khosravan N, Mortazi A, Wallace M, Bagci U. PAN: Projective adversarial network for medical image segmentation. In: Proceedings of the 2019 International Conference on Medical Image Computing and Computer-Assisted Intervention. Shenzhen, China: Springer, 2019. 68−76

[28] Fang C, Li G, Pan C, Li Y, Yu Y. Globally guided progressive fusion network for 3D pancreas segmentation. In: Proceedings of the 2019 International Conference on Medical Image Computing and Computer-Assisted Intervention. Shenzhen, China: Springer, 2019. 210−218

[29] Mo J, Zhang L, Wang Y, Huang H. Iterative 3D feature enhancement network for pancreas segmentation from CT images. Neural Computing and Applications, 2020, 32(16): 12535−12546. doi: 10.1007/s00521-020-04710-3

[30] Wang Y, Zhang J, Cui H, Zhang Y, Xia Y. View adaptive learning for pancreas segmentation. Biomedical Signal Processing and Control, 2021, 66(4): 102347−102361

[31] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the 2017 Advances in Neural Information Processing Systems. California, USA: MIT, 2017. 5998−6008

[32] Simpson A L, Antonelli M, Bakas S, Bilello M, Farahani K, van Ginneken B, et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. arXiv preprint arXiv: 1902.09063, 2019

[33] Glorot X, Bordes A, Bengio Y. Deep sparse rectifier neural networks. In: Proceedings of the 14th International Conference on Artificial Intelligence and Statistics. Florida, USA: ML Research, 2011. 315−323

[34] Kingma D P, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv: 1412.6980, 2014

[35] Shi X J, Gao Z H, Lausen L, Wang H, Yeung D Y, Wong W K, et al. Deep learning for precipitation nowcasting: A benchmark and a new model. In: Proceedings of the 2017 Advances in Neural Information Processing Systems. California, USA: MIT, 2017. 5617−5627

[36] Cai J, Lu L, Xie Y, Xing F, Yang L. Improving deep pancreas segmentation in CT and MRI images via recurrent neural contextual learning and direct loss function. arXiv preprint arXiv: 1707.04912, 2017

[37] Zhu Z, Xia Y, Shen W, Fishman E, Yuille A. A 3D coarse-to-fine framework for volumetric medical image segmentation. In: Proceedings of the 2018 International Conference on 3D Vision (3DV). Verona, Italy: IEEE, 2018. 682−690

[38] Roth H R, Shen C, Oda H, Oda M, Hayashi Y, Misawa K, et al. Deep learning and its application to medical image segmentation. Medical Imaging Technology, 2018, 36(2): 63−71

[39] Roth H R, Oda H, Zhou X, Shimizu N, Yang Y, Hayashi Y, et al. An application of cascaded 3D fully convolutional networks for medical image segmentation. Computerized Medical Imaging and Graphics, 2018, 66(4): 90−99

[40] Li W, Wu X, Hu Y, Wang L, He Z, Du J. High-resolution recurrent gated fusion network for 3D pancreas segmentation. In: Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN). Shenzhen, China: IEEE, 2021. 1−7

[41] Cicek O, Abdulkadir A, Lienkamp S S, Brox T, Ronneberger O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In: Proceedings of the 2016 International Conference on Medical Image Computing and Computer-Assisted Intervention. Athens, Greece: Springer, 2016. 424−432

[42] Oktay O, Schlemper J, Folgoc L L, Lee M, Heinrich M, Misawa K, et al. Attention U-Net: Learning where to look for the pancreas. arXiv preprint arXiv: 1804.03999, 2018

[43] Zhou Z, Siddiquee M M R, Tajbakhsh N, Liang J. UNet++: A nested U-Net architecture for medical image segmentation. In: Proceedings of the 2018 Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Granada, Spain: Springer, 2018. 3−11

[44] Roth H, Lu L, Farag A, Sohn A, Summers R. Spatial aggregation of holistically-nested networks for automated pancreas segmentation. In: Proceedings of the 2016 International Conference on Medical Image Computing and Computer-Assisted Intervention. Athens, Greece: Springer, 2016. 451−459
