
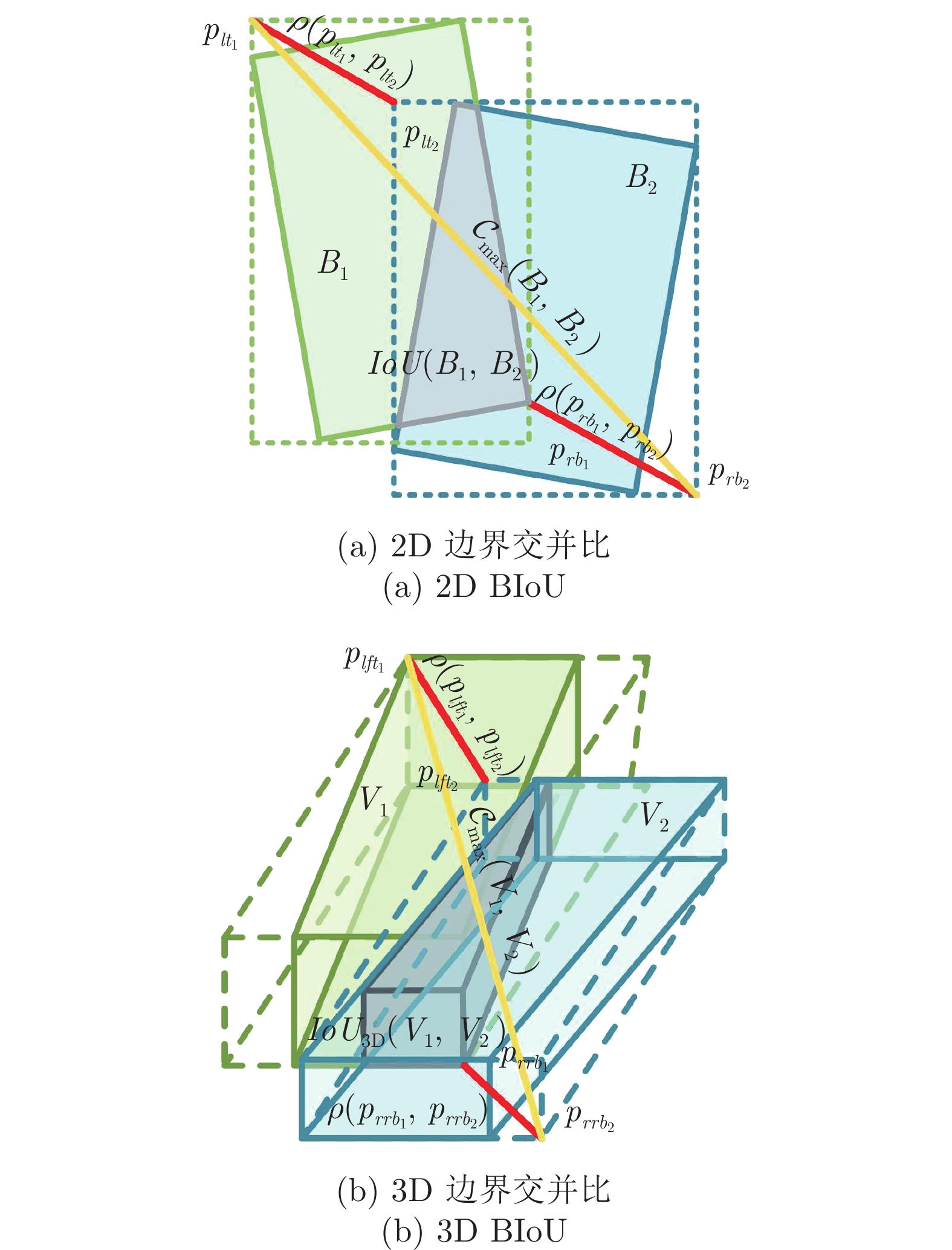

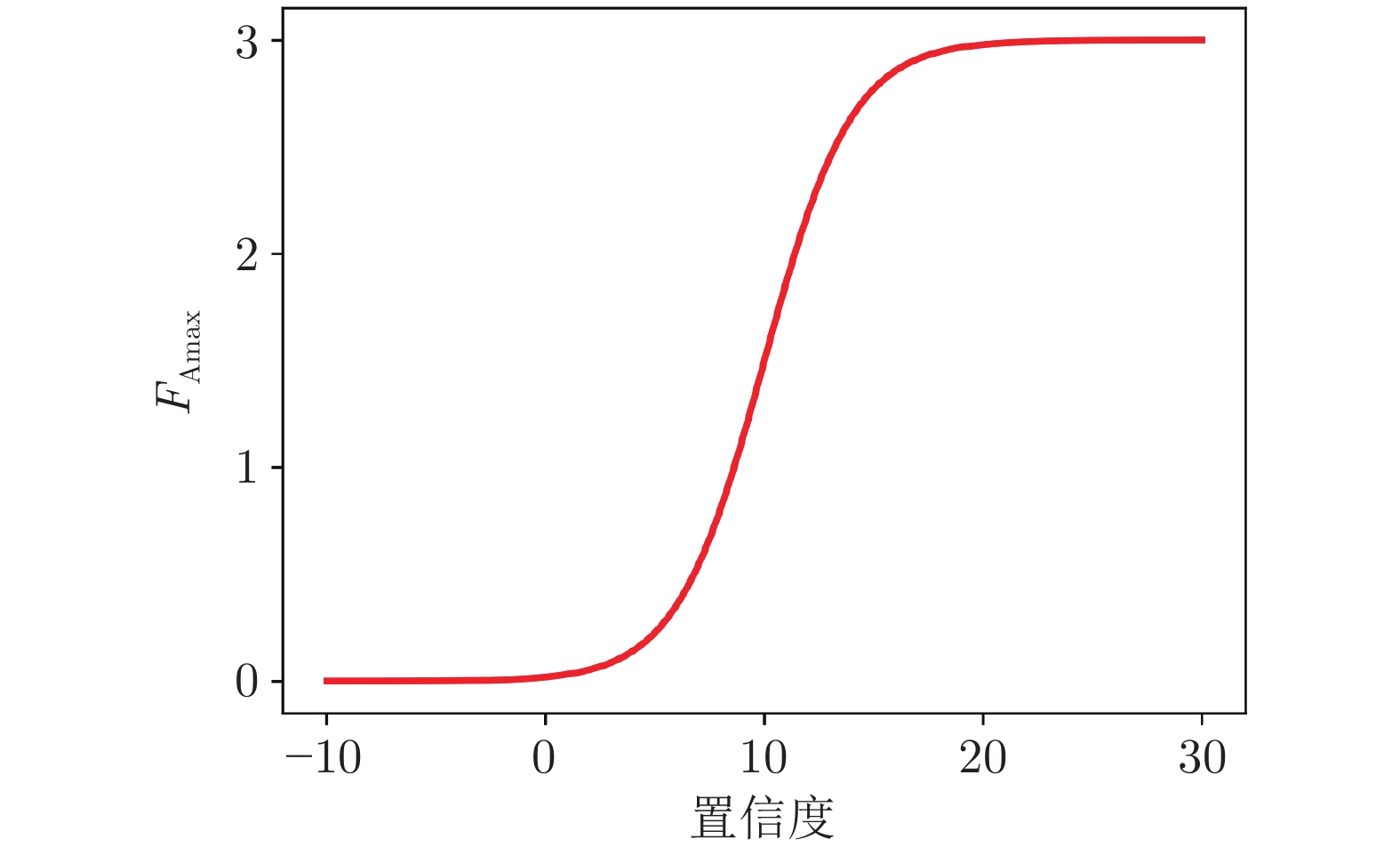

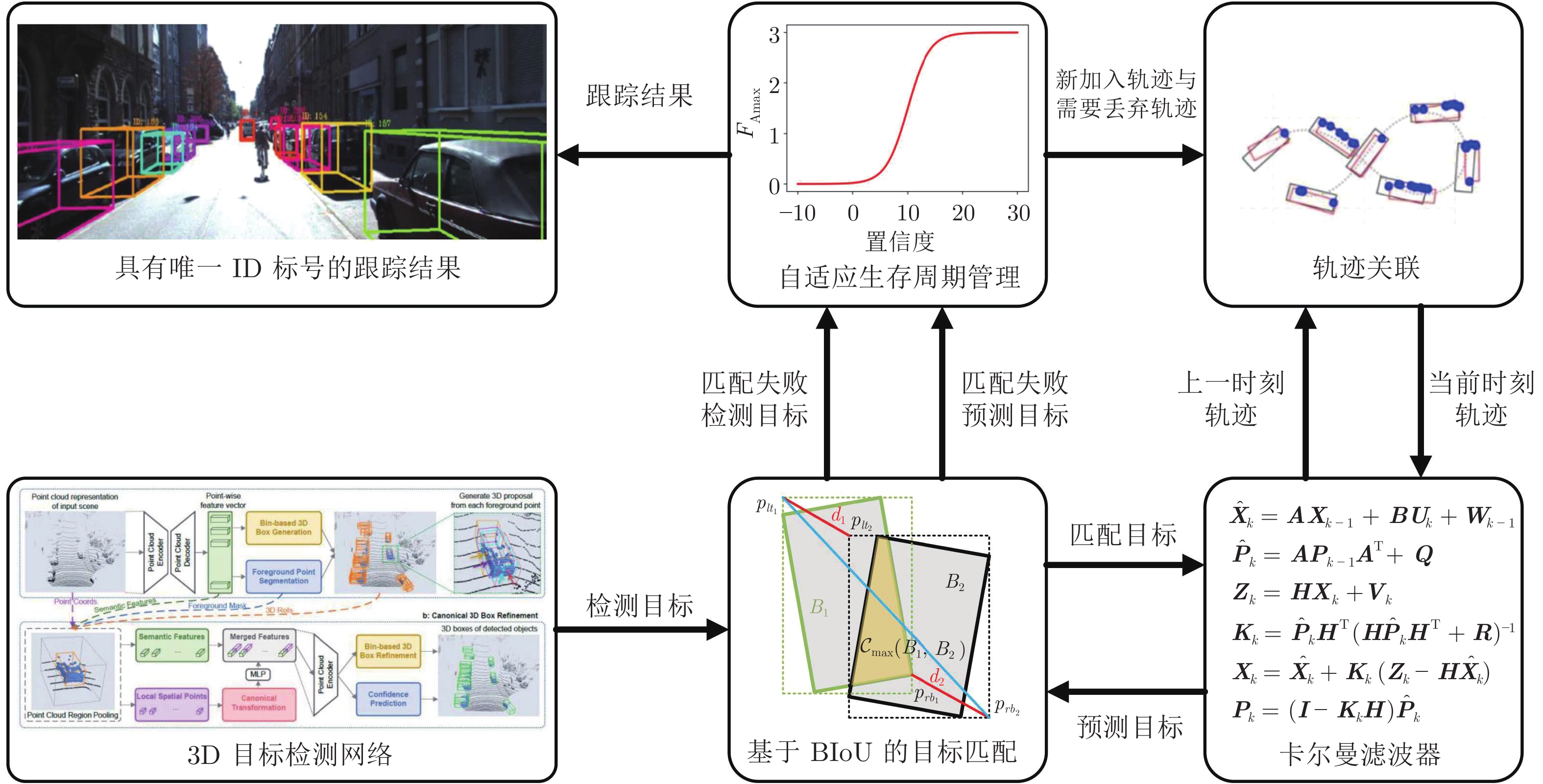

Abstract: An unmanned vehicle moves through three-dimensional space continuously in time, so the objects around it cannot suddenly appear or disappear. For the perception layer, stable and robust multi-object tracking (MOT) is therefore of great significance. To address the shortcomings of traditional object association and of fixed birth and death memory (BDM) management, this paper proposes an object association method based on the border intersection over union (BIoU) metric and an adaptive life cycle management strategy. BIoU combines the advantages of Euclidean distance and intersection over union (IoU), which improves the accuracy of object association. Adaptive life cycle management links the confidence of an object trajectory to its life cycle, which significantly reduces missed objects and false detections. Experiments on the KITTI multi-object tracking dataset verify the effectiveness of the proposed approach.
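The abstract does not give the BIoU formula, so the following is only a minimal sketch of the general idea of combining IoU with a Euclidean distance term. It assumes axis-aligned 2D boxes `(x1, y1, x2, y2)` and a penalty built from the distances between corresponding corners, normalized by the diagonal of the smallest enclosing box and weighted by the penalty factor $ \gamma $. The function names and the exact penalty form are assumptions for illustration, not the paper's definition.

```python
import math

def iou_2d(a, b):
    """Plain IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def border_penalized_iou(a, b, gamma=0.05):
    """IoU minus a corner-distance penalty (assumed form, not the paper's)."""
    # Euclidean distances between the matching min and max corners.
    d_min = math.hypot(a[0] - b[0], a[1] - b[1])
    d_max = math.hypot(a[2] - b[2], a[3] - b[3])
    # Diagonal of the smallest box enclosing both inputs, used to normalize.
    diag = math.hypot(max(a[2], b[2]) - min(a[0], b[0]),
                      max(a[3], b[3]) - min(a[1], b[1]))
    penalty = gamma * (d_min + d_max) / diag if diag > 0 else 0.0
    return iou_2d(a, b) - penalty

track = (0.0, 0.0, 1.0, 1.0)
near = (1.5, 0.0, 2.5, 1.0)   # no overlap, close to the track
far = (3.0, 0.0, 4.0, 1.0)    # no overlap, farther away
print(border_penalized_iou(track, near), border_penalized_iou(track, far))
```

Plain IoU is 0 for both non-overlapping candidates, whereas the penalized score still ranks the nearer box higher. A score of this kind can be negative, which fits the negative BIoU threshold listed in Table 1.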

Key words:

- Unmanned vehicles
- LiDAR
- 3D object detection
- 3D multi-object tracking

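Table 1 Model parameters

| Parameter | Value | Description |
| --- | --- | --- |
| $ \gamma $ | 0.05 | BIoU penalty factor |
| $ \alpha $ | 0.5 | Life cycle scale coefficient |
| $ \beta $ | 4 | Life cycle offset coefficient |
| $ F_{\max} $ | 3 (Car), 5 (Others) | Maximum life cycle: 3 for Car objects, 5 for objects of other classes |
| $ F_{\min} $ | 3 | Minimum tracking period of an object trajectory; same value as in AB3DMOT |
| ${ {BIoU} }_{\rm{thres} }$ | $ -0.01 $ | BIoU threshold; a match whose BIoU is below the threshold is treated as failed |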
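Table 1 lists a scale coefficient $ \alpha $, an offset coefficient $ \beta $ and a maximum life cycle $ F_{\max} $, but this page does not state how they are combined. The sketch below assumes one plausible form, in which the detection confidence is squashed by a sigmoid and multiplied by $ F_{\max} $ to give an adaptive maximum life cycle. The function name `adaptive_max_age` and the sigmoid form are assumptions for illustration only.

```python
import math

def adaptive_max_age(score, f_max, alpha=0.5, beta=4.0):
    """Adaptive maximum life cycle (assumed form): f_max * sigmoid(alpha * score + beta).

    score : detection / trajectory confidence (unbounded real number)
    f_max : class-specific maximum life cycle, e.g. 3 for Car in Table 1
    """
    sigmoid = 1.0 / (1.0 + math.exp(-(alpha * score + beta)))
    return f_max * sigmoid

# Confident trajectories keep close to the full life cycle;
# low-confidence ones are dropped sooner after they stop being matched.
for s in (-8.0, 0.0, 4.0):
    print(s, round(adaptive_max_age(s, f_max=3), 3))
```

Under this assumption a track with a low confidence score survives fewer unmatched frames than a confident one, which is the behavior the abstract attributes to adaptive life cycle management.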

Table 2 Tracking performance comparison for three object classes (Car, Pedestrian, Cyclist) on the KITTI dataset

| Class | Method | MOTA (%) $ \uparrow $¹ | MOTP (%) $ \uparrow $ | MT (%) $ \uparrow $ | ML (%) $ \downarrow $² | IDS $ \downarrow $ | FRAG $ \downarrow $ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Car | FANTrack[21] | 76.52 | 84.81 | 73.14 | 9.25 | 1 | 54 |
| | DiTNet[22] | 81.08 | 87.83 | 79.35 | 4.21 | 20 | 120 |
| | AB3DMOT[6] | 85.70 | 86.99 | 75.68 | 3.78 | 2 | 24 |
| | Ours | 85.69 | 86.96 | 76.22 | 3.78 | 2 | 24 |
| Pedestrian | AB3DMOT[6] | 59.76 | 67.27 | 40.14 | 20.42 | 52 | 371 |
| | Ours | 59.93 | 67.22 | 42.25 | 20.42 | 52 | 377 |
| Cyclist | AB3DMOT[6] | 74.75 | 79.89 | 62.42 | 14.02 | 54 | 403 |
| | Ours | 76.43 | 79.89 | 64.49 | 11.63 | 54 | 409 |

¹ $ \uparrow $ indicates that a larger value means better performance.

² $ \downarrow $ indicates that a smaller value means better performance.

Table 3 Ablation experiments

| Class | BIoU | $ F_{\rm{Amax}} $ | MOTA (%) $ \uparrow $ | MOTP (%) $ \uparrow $ | MT (%) $ \uparrow $ | ML (%) $ \downarrow $ | IDS $ \downarrow $ | FRAG $ \downarrow $ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Car | - | - | 81.55 | 86.72 | 79.46 | 4.32 | 2 | 21 |
| | $ \checkmark $ | - | 81.69 | 86.69 | 80.00 | 4.32 | 3 | 22 |
| | - | $ \checkmark $ | 84.09 | 86.98 | 75.68 | 4.32 | 2 | 24 |
| | $ \checkmark $ | $ \checkmark $ | 84.31 | 86.96 | 76.22 | 4.32 | 2 | 24 |
| Pedestrian | - | - | 57.54 | 67.19 | 42.96 | 21.13 | 99 | 411 |
| | $ \checkmark $ | - | 57.73 | 67.18 | 46.48 | 20.42 | 99 | 417 |
| | - | $ \checkmark $ | 59.59 | 67.24 | 40.14 | 21.13 | 59 | 372 |
| | $ \checkmark $ | $ \checkmark $ | 59.77 | 67.21 | 44.37 | 16.90 | 61 | 393 |
| Cyclist | - | - | 73.44 | 85.40 | 75.00 | 14.29 | 0 | 5 |
| | $ \checkmark $ | - | 77.82 | 85.61 | 75.00 | 14.29 | 0 | 5 |
| | - | $ \checkmark $ | 77.97 | 85.41 | 71.43 | 17.86 | 0 | 7 |
| | $ \checkmark $ | $ \checkmark $ | 82.94 | 85.51 | 75.00 | 10.71 | 0 | 7 |
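As a reading aid for the MOTA column in Tables 2 and 3, the standard CLEAR MOT definition is $ \mathrm{MOTA} = 1 - (\mathrm{FN} + \mathrm{FP} + \mathrm{IDS}) / \mathrm{GT} $, where FN, FP and IDS are the missed detections, false positives and identity switches summed over all frames, and GT is the total number of ground-truth objects. The sketch below computes it from raw counts. The counts in the example are hypothetical and are not taken from the paper, and the exact evaluation protocol behind the tables is not stated on this page.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multi-object tracking accuracy from counts summed over all frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt

# Hypothetical counts, for illustration only.
value = mota(false_negatives=10, false_positives=5, id_switches=5, num_gt=100)
print(f"MOTA = {100 * value:.2f} %")
```

Because the three error types are summed before normalization, MOTA can become negative when a tracker makes more errors than there are ground-truth objects.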

[1] Zhao Z Q, Zheng P, Xu S T, Wu X D. Object detection with deep learning: A review. IEEE Transactions on Neural Networks and Learning Systems, 2019, 30(11): 3212-3232 doi: 10.1109/TNNLS.2018.2876865

[2] Simon M, Milz S, Amenda K, Gross H M. Complex-YOLO: An Euler-region-proposal for real-time 3D object detection on point clouds. In: Proceedings of the European Conference on Computer Vision (ECCV). Munich, Germany: Springer, 2018. 197-209

[3] Shi S S, Wang X G, Li H S. PointRCNN: 3D object proposal generation and detection from point cloud. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 770-779

[4] Bewley A, Ge Z Y, Ott L, Ramos F, Upcroft B. Simple online and realtime tracking. In: Proceedings of the IEEE International Conference on Image Processing (ICIP). Phoenix, USA: IEEE, 2016. 3464-3468

[5] Wojke N, Bewley A, Paulus D. Simple online and realtime tracking with a deep association metric. In: Proceedings of the IEEE International Conference on Image Processing (ICIP). Beijing, China: IEEE, 2017. 3645-3649

[6] Weng X S, Wang J R, Held D, Kitani K. 3D multi-object tracking: A baseline and new evaluation metrics. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Las Vegas, USA: IEEE, 2020. 10359-10366

[7] Rezatofighi H, Tsoi N, Gwak J, Sadeghian A, Reid I, Savarese S. Generalized intersection over union: A metric and a loss for bounding box regression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 658-666

[8] Zheng Z H, Wang P, Liu W, Li J Z, Ye R G, Ren D W. Distance-IoU loss: Faster and better learning for bounding box regression. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI). New York, USA: AAAI Press, 2020. 12993-13000

[9] Geiger A, Lenz P, Urtasun R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, USA: IEEE, 2012. 3354-3361

[10] Luo W H, Xing J L, Milan A, Zhang X Q, Liu W, Kim T K. Multiple object tracking: A literature review. Artificial Intelligence, 2021, 293: 103448 doi: 10.1016/j.artint.2020.103448

[11] Leal-Taixé L, Canton-Ferrer C, Schindler K. Learning by tracking: Siamese CNN for robust target association. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Las Vegas, USA: IEEE, 2016. 33-40

[12] Meng L, Yang X. A survey of object tracking algorithms. Acta Automatica Sinica, 2019, 45(7): 1244-1260 doi: 10.16383/j.aas.c180277

[13] Azim A, Aycard O. Detection, classification and tracking of moving objects in a 3D environment. In: Proceedings of the IEEE Intelligent Vehicles Symposium (IV). Madrid, Spain: IEEE, 2012. 802-807

[14] Song S Y, Xiang Z Y, Liu J L. Object tracking with 3D LiDAR via multi-task sparse learning. In: Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA). Beijing, China: IEEE, 2015. 2603-2608

[15] Sharma S, Ansari J A, Murthy J K, Krishna K M. Beyond pixels: Leveraging geometry and shape cues for online multi-object tracking. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Brisbane, Australia: IEEE, 2018. 3508-3515

[16] Hou J H, Zhang G S, Xiang J. Designing affinity model for multiple object tracking based on deep learning. Acta Automatica Sinica, 2020, 46(12): 2690-2700 doi: 10.16383/j.aas.c180528

[17] Zhang W J, Zhong S, Xu W H, Wu Y. Correlation filter based visual tracking integrating saliency and motion cues. Acta Automatica Sinica, 2021, 47(7): 1572-1588 doi: 10.16383/j.aas.c190122

[18] Wu H, Han W K, Wen C L, Li X, Wang C. 3D multi-object tracking in point clouds based on prediction confidence-guided data association. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(6): 5668-5677 doi: 10.1109/TITS.2021.3055616

[19] Li S M, Chu J, Leng L, Tu X J. Accurate scale estimation with IoU and distance between centroids for object tracking. Acta Automatica Sinica, doi: 10.16383/j.aas.c210356

[20] Peng D C. Basic principle and application of Kalman filter. Software Guide, 2009, 8(11): 32-34

[21] Baser E, Balasubramanian V, Bhattacharyya P, Czarnecki K. FANTrack: 3D multi-object tracking with feature association network. In: Proceedings of the IEEE Intelligent Vehicles Symposium (IV). Paris, France: IEEE, 2019. 1426-1433

[22] Wang S K, Cai P D, Wang L J, Liu M. DiTNet: End-to-end 3D object detection and track ID assignment in spatio-temporal world. IEEE Robotics and Automation Letters, 2021, 6(2): 3397-3404 doi: 10.1109/LRA.2021.3062016
