-

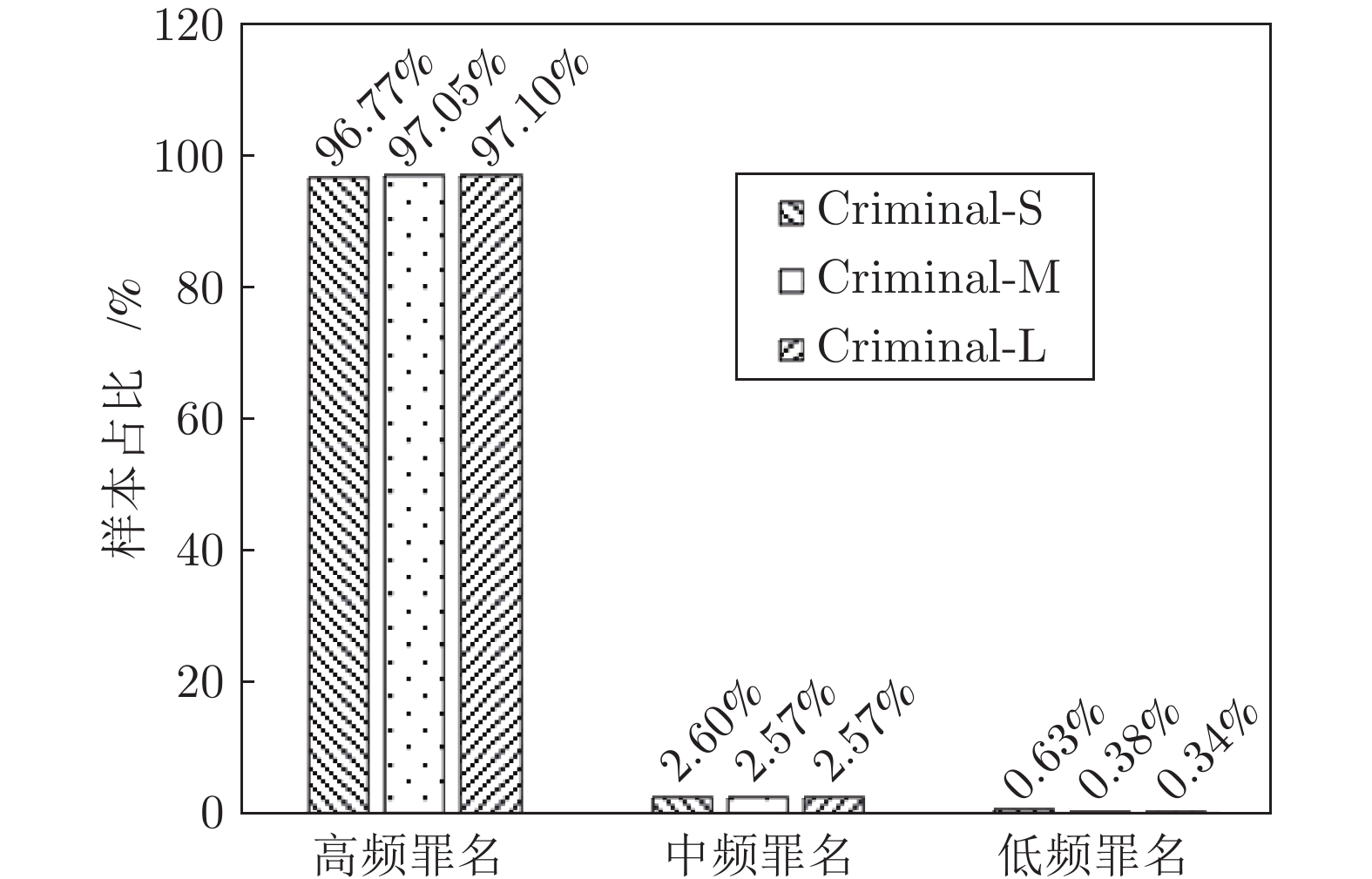

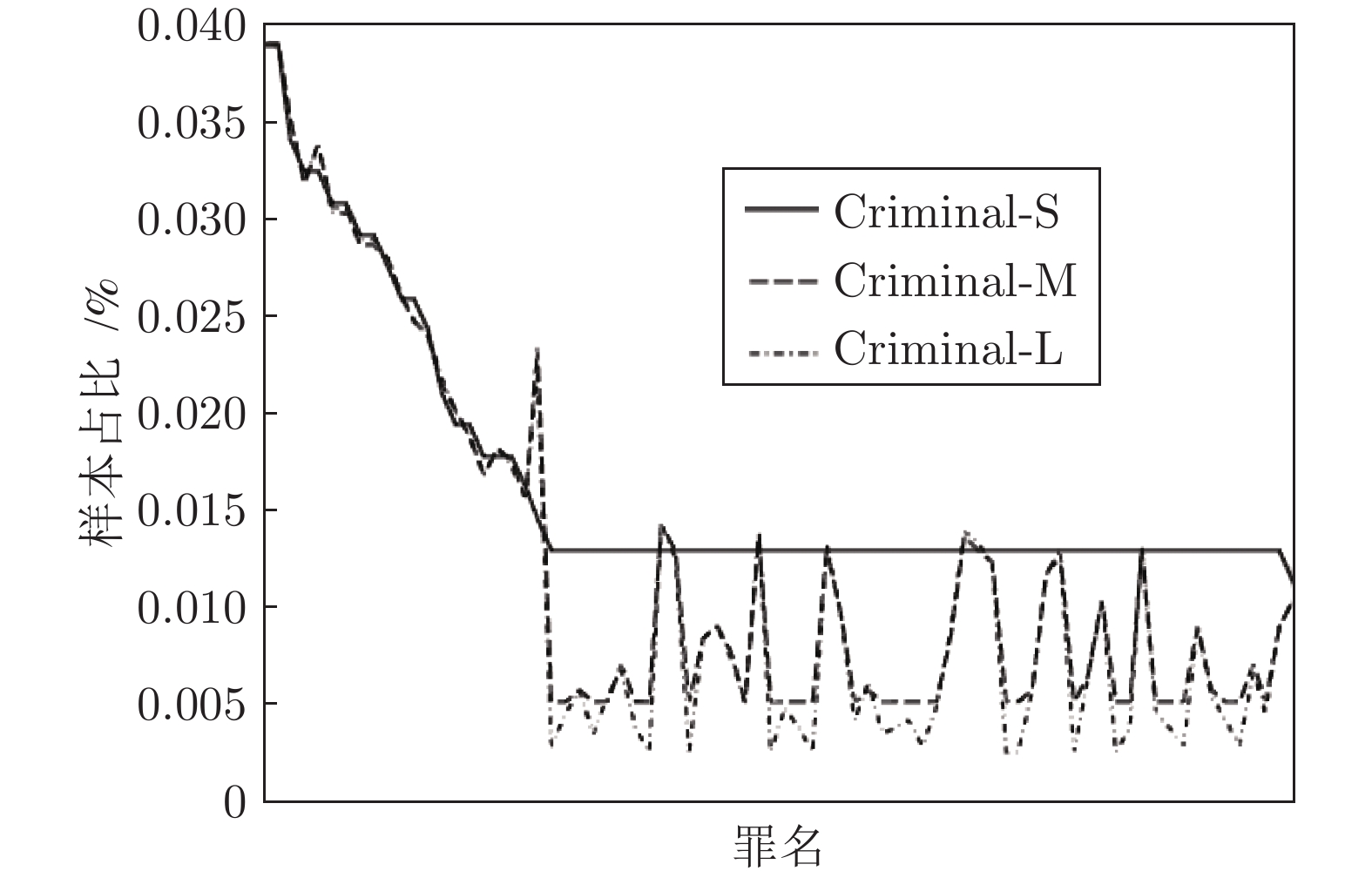

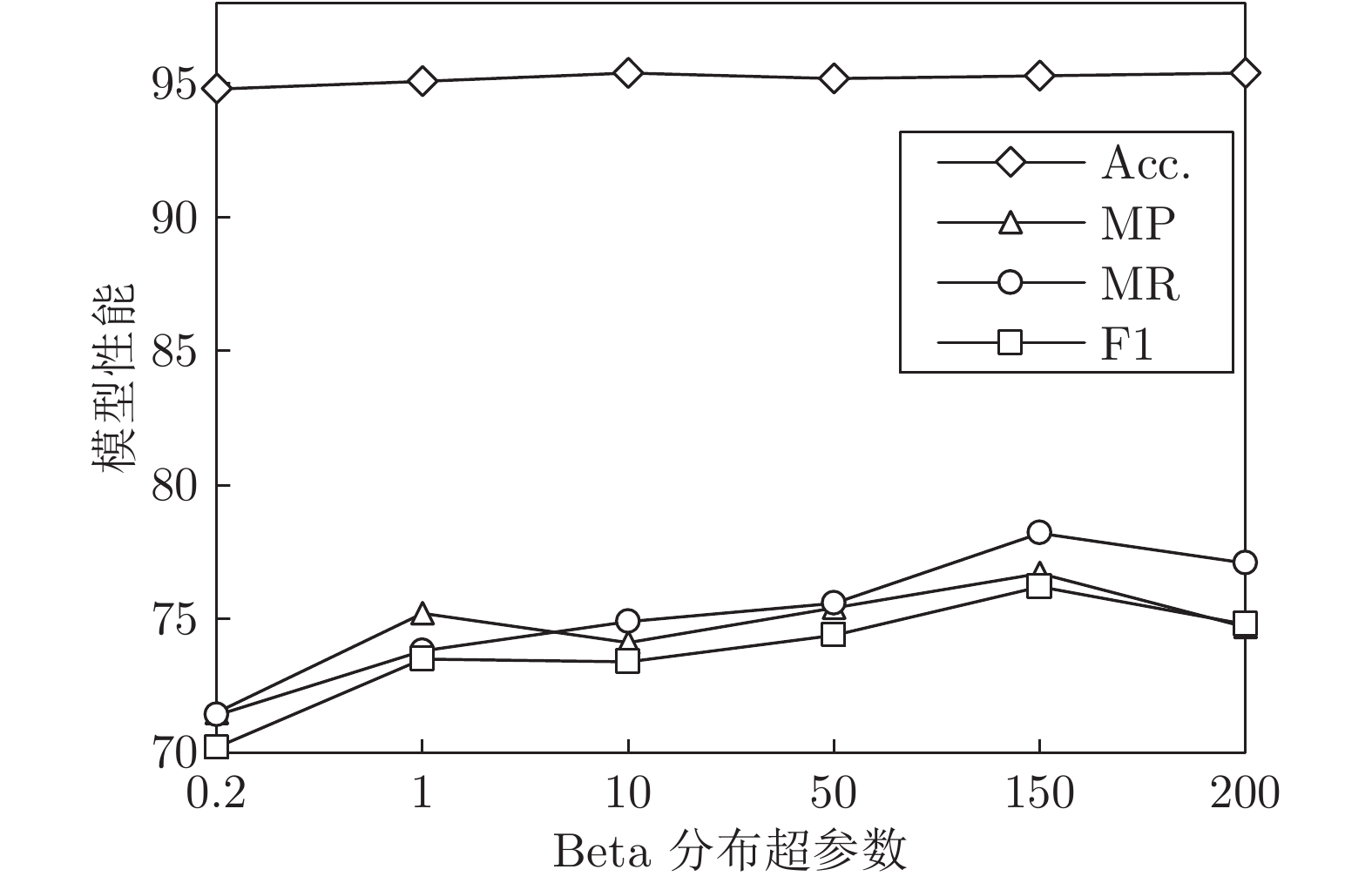

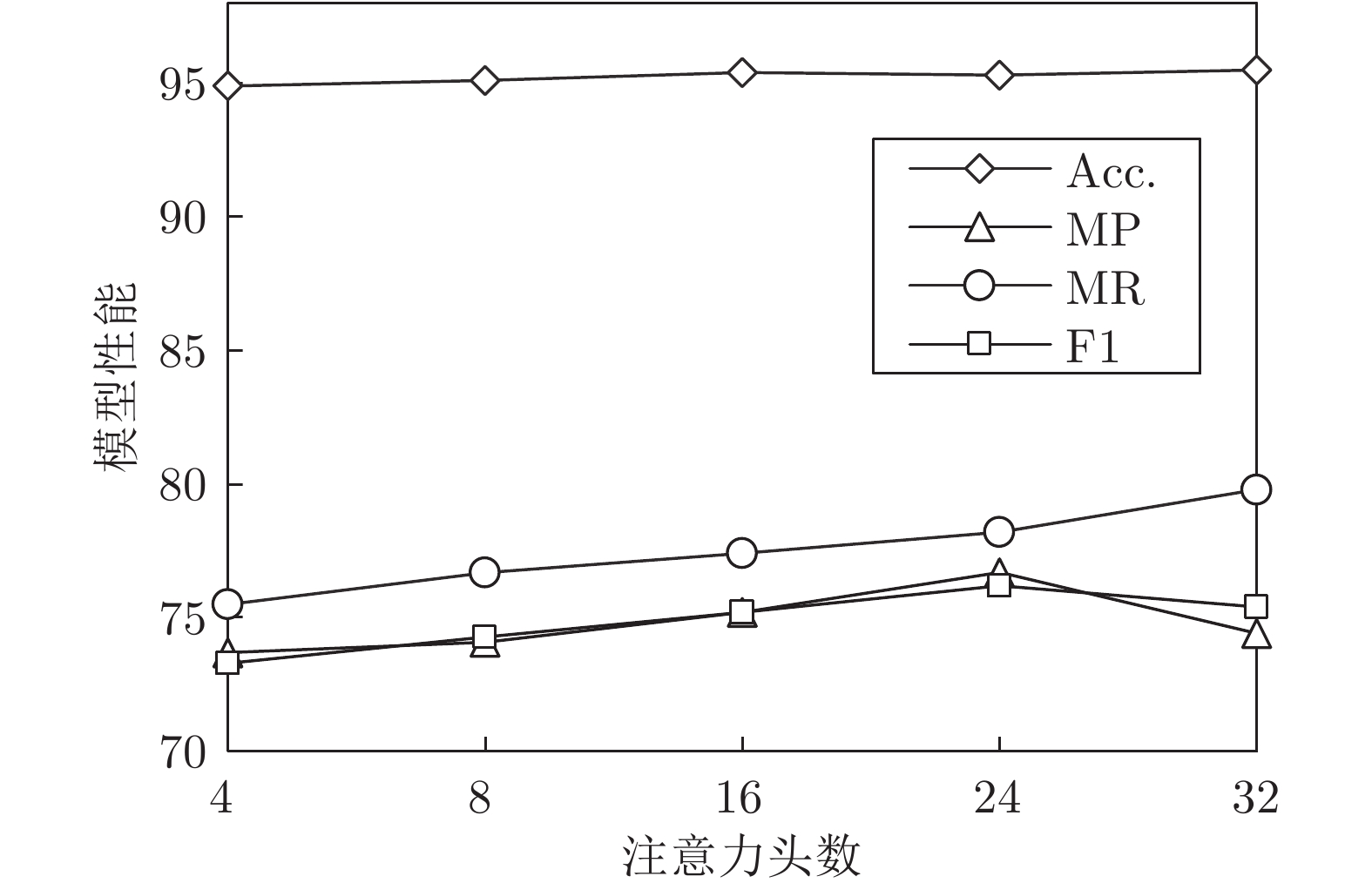

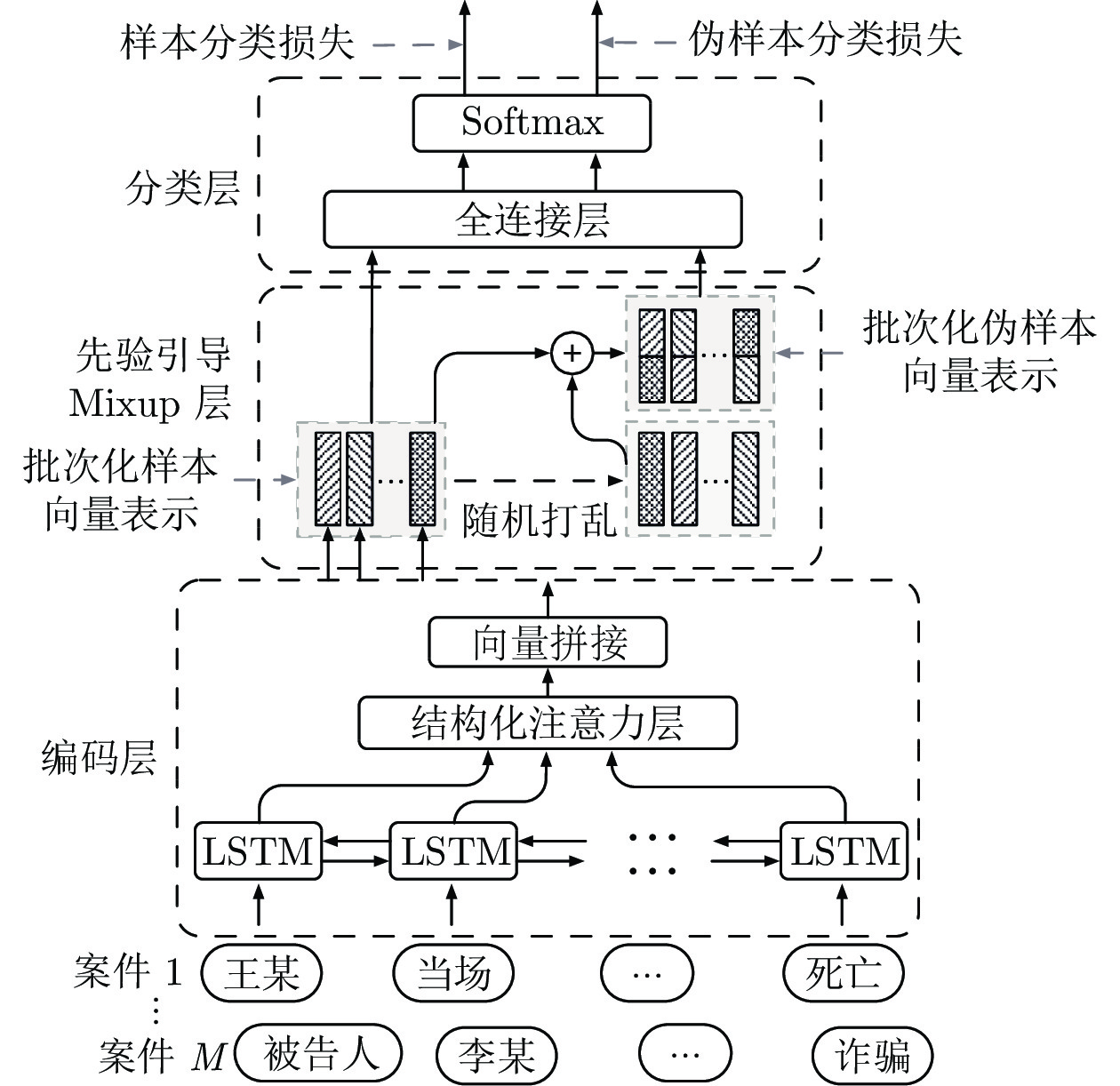

摘要: 罪名预测是人工智能技术应用于司法领域的代表性任务. 该任务根据案情描述和事实预测被告人被判的罪名. 由于各类罪名样本数量高度不平衡, 分类模型训练时分类器易偏向高频罪名类别, 从而导致低频罪名预测性能不佳. 针对罪名预测类别不平衡问题, 提出融合类别先验Mixup数据增强策略的罪名预测模型, 改进低频罪名预测效果. 该模型利用双向长短期记忆网络与结构化自注意力机制学习文本向量表示, 在此基础上, 通过Mixup数据增强策略在向量表示空间中合成伪样本, 并利用类别先验使合成样本的标签偏向低频罪名类别, 以此来扩增低频罪名训练样本. 实验结果表明, 与现有方法相比, 该方法在准确率、宏精确率、宏召回率和宏F1值上都获得了大幅提升, 低频罪名预测的宏F1值提升达到13.5%.Abstract: Charge prediction is a typical task of artificial intelligence technology applied in the field of justice. The task is to predict the charges of the accused based on the description of the case and the fact section. Due to the highly class-imbalanced of charges, classifiers usually result in poor prediction of low-frequency charges. To address the class-imbalanced problem, we propose a Mixup data augmentation strategy that combines category prior knowledge to improve the prediction performance of low-frequency charges. In this paper, we first learn the representation of text vector using the bi-directional long short-term memory model and structured attention mechanism. Then, we apply the proposed Mixup data augmentation strategy to generate synthetic samples in the text representation space. To emphasize data augmentation of the low-frequency charge samples, the category prior is employed to bias the synthetic labels to the low-frequency category. The experimental results on real-work data sets demonstrate that our method achieves significant and consistent improvements compared to other state-of-the-art baselines on the accuracy, macro precision, macro recall, and macro F1 value. Specifically, our model outperforms other baselines by more than 13.5% macro F1 value in the low-frequency charges.

-

表 1 数据集统计信息

Table 1 The statistics of different datasets

数据集 Criminal-S Criminal-M Criminal-L Train 61589 153521 306900 Test 7702 19189 38368 Valid 7755 19250 38429 表 2 罪名预测对比实验结果

Table 2 Comparative experimental results

模型 Criminal-S Criminal-M Criminal-L Acc. MP MR F1 Acc. MP MR F1 Acc. MP MR F1 TFIDF+SVM 85.8 49.7 41.9 43.5 89.6 58.8 50.1 52.1 91.8 67.5 54.1 57.5 CNN 91.9 50.5 44.9 46.1 93.5 57.6 48.1 50.5 93.9 66.0 50.3 54.7 CNN-200 92.6 51.1 46.3 47.3 92.8 56.2 50.0 50.8 94.1 61.9 50.0 53.1 LSTM 93.5 59.4 58.6 57.3 94.7 65.8 63.0 62.6 95.5 69.8 67.0 66.8 LSTM-200 92.7 66.0 58.4 57.0 94.4 66.5 62.4 62.7 95.1 72.8 66.7 67.6 Fact-Law Att 92.8 57.0 53.9 53.4 94.7 66.7 60.4 61.8 95.7 73.3 67.1 68.6 Few-Shot Attri 93.4 66.7 69.2 64.9 94.4 69.2 69.2 67.1 95.8 75.8 73.7 73.1 SECaps 94.8 71.3 70.3 69.4 95.4 71.3 70.2 69.6 96.0 81.9 79.7 79.5 LSTM-Att 95.2 75.1 74.4 73.5 95.9 75.9 76.6 75.2 96.6 86.2 79.5 80.8 LSTM-Att-Manifold-Mixup 95.3 73.7 75.3 73.3 96.3 80.1 79.1 79.5 96.8 85.8 81.5 82.3 LSTM-Att-Prior-Mixup 95.3 76.7 78.2 76.2 96.3 80.8 82.0 80.1 96.6 84.5 84.9 83.3 表 3 不同频率罪名预测宏 F1 值

Table 3 Macro F1 value of different frequency charges

模型 低频 (49类) 中频 (51类) 高频 (49类) Few-Shot Attri 49.7 60.0 85.2 SECaps 53.8 65.5 89.0 LSTM-Att 54.1 65.0 90.1 LSTM-Att-Manifold-Mixup 64.2 66.5 89.5 LSTM-Att-Prior-Mixup 67.3(↑13.5%) 67.8(↑2.3%) 90.0(↑1.0%) 表 4 易混淆罪名预测宏F1值

Table 4 Macro F1 value for confusing charges

模型 F1值 LSTM-200 79.7 Few-Shot Attri 88.1 SECaps 90.5 LSTM-Att 91.8 LSTM-Att-Manifold-Mixup 92.3 LSTM-Att-Prior-Mixup 92.1(↑1.6%) 表 5 不同编码器对比实验结果

Table 5 Comparative experimental results of different encoder

模型 Criminal-S Acc. MP MR F1 BERT-CLS 93.4 65.6 63.1 63.2 BERT-CLS-Manifold-Mixup 93.6 69.2 69.5 67.6 BERT-CLS-Prior-Mixup 93.8 70.6 72.9 70.6 BERT-Att 93.6 68.5 69.7 67.2 BERT-Att-Manifold-Mixup 94.1 70.8 73.0 70.9 BERT-Att-Prior-Mixup 94.4 71.4 73.3 71.1 LSTM-Att-Prior-Mixup 95.3 76.7 78.2 76.2 表 6 消融实验罪名预测结果

Table 6 Results of ablation experiments

模型 Criminal-S Criminal-M Criminal-L Acc. MP MR F1 Acc. MP MR F1 Acc. MP MR F1 LSTM-Att-Prior-Mixup 95.3 76.7 78.2 76.2 96.3 80.8 82.0 80.1 96.6 84.5 84.9 83.3 LSTM-Att 95.2 75.1 74.4 73.5 95.9 75.9 76.6 75.2 96.6 86.2 79.5 80.8 LSTM-Maxpool 93.5 44.2 41.1 41.3 95.6 58.0 54.1 54.9 96.3 71.2 64.8 65.9 -

[1] Zhong H X, Xiao C J, Tu C C, Zhang T Y, Liu Z Y, Sun M S. How does NLP benefit legal system: A summary of legal artificial intelligence. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Virtual Event: 2020. 5218−5230 [2] Kort F. Predicting Supreme Court Decisions Mathematically: A Quantitative Analysis of the" Right to Counsel" cases. The American Political Science Review, 1957, 51(1): 1-12. doi: 10.2307/1951767 [3] Mackaay E, Robillard P. Predicting judicial decisions: the nearest neighbour rule and visual representation of case patterns. De Gruyter, 1974, 41: 302-331. [4] Liu C L, Chang C T, Ho J H. Case instance generation and refinement for case-based criminal summary judgments in chinese. Journal of Information Science and Engineering, 2004, 20(4): 783-800. [5] Xiao C J, Zhong H X, Guo Z P, Tu C C, Liu Z Y, Sun M S, et al. CAIL2018: A large-scale legal dataset for judgment prediction, arXiv preprint, 2018, arXiv: 1807.02478 [6] Zhong H X, Guo Z P, Tu C C, Xiao C J, Liu Z Y, Sun M S. Legal judgment prediction via topological learning. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels, Belgium: 2018. 3540−3549 [7] Yang W, Jia W, Zhou X J. Legal judgment prediction via multi-perspective bi-feedback network. In: Proceedings of the 28th International Joint Conference on Artificial Intelligence. Macao, China: 2019. 4085−4091 [8] 王文广, 陈运文, 蔡华, 曾彦能, 杨慧宇. 基于混合深度神经网络模型的司法文书智能化处理. 清华大学学报(自然科学版), 2019, 59(7): 505-511.Wang Guang-Wen, Chen Yun-Wen, Cai Hua, Zeng Yan-Neng, Yang Hui-Yu. Judicial document intellectual processing using hybrid deep neural networks. Journal of Tsinghua University(Science and Technology), 2019, 59(7): 505-511. [9] Jiang X, Ye H, Luo Z C, Chao W H, Ma W J. Interpretable rationale augmented charge prediction system. In: Proceedings of the 27th International Conference on Computational Linguistics. Santa Fe, New-Mexico, USA: 2018. 149−151 [10] 刘宗林, 张梅山, 甄冉冉, 公佐权, 余南, 付国宏. 融入罪名关键词的法律判决预测多任务学习模型. 清华大学学报(自然科学版), 2019, 59(7): 497-504.Liu Zong-Lin, Zhang Mei-Shan, Zhen Ran-Ran, Gong Zuo-Quan, Yu Nan, Fu Guo-Hong. Multi-task learning model for legal judgment predictions with charge keywords. Journal of Tsinghua University(Science and Technology), 2019, 59(7): 497-504. [11] Xu N, Wang P, Chen L, Pan L, Wang X Y, Zhao J Z. Distinguish confusing law articles for legal judgment prediction. In: Proceedings of the 58th Annual Meeting of the Association-for-Computational-Linguistics, Virtual Event: 2020. 3086−3095 [12] Hu Z K, Li X, Tu C C, Liu Z Y, Sun M S. Few-shot charge prediction with discriminative legal attributes. In: Proceedings of the 27th International Conference on Computational Linguistics. Santa Fe, New-Mexico, USA: 2018. 487−498 [13] He C Q, Peng L, Le Y Q, He J W, Zhu X Y. SECaps: A sequence enhanced capsule model for charge prediction. In: Proceedings of the 28th International Conference on Artificial Neural Networks. Munich, Germany: Springer Verlag, 2019. 227−239 [14] Zhang H, Cisse M, Dauphin Y N, David L P. Mixup: Beyond empirical risk minimization. arXiv preprint, 2017, arXiv: 1710.09412 [15] Verma V, Lamb A, Beckham C, Najafi A, Mitiagkas I, Lopez-Paz D, et al. Manifold mixup: Better representations by interpolating hidden states. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, CA, USA: 2019. 11196−11205 [16] Lin Z H, Feng M W, Santos C N, Yu M, Xiang B, Zhou B, et al. A structured self-attentive sentence embedding. In: Proceedings of the 5th International Conference on Learning Representations. Toulon, France: 2017. 1−15 [17] Guo H Y, Mao Y Y, Zhang R C. Augmenting data with mixup for sentence classification: An empirical study. arXiv preprint, 2019, arXiv: 1905.08941 [18] Chen J A, Yang Z C, Yang D Y. MixText: Linguistically-informed interpolation of hidden space for semi-supervised text classification. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Virtual Event: 2020. 2147−2157 [19] Hochreiter S, Schmidhuber J. Long short-term memory. Neural computation, 1997, 9(8): 1735-1780. doi: 10.1162/neco.1997.9.8.1735 [20] Devlin J, Chang M W, Lee K, Toutanova K. Bert: Pre-training of deep bidirectional transformers for language understanding. In: Proceeding of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, USA: 2019. 4171− 4186 [21] Kingma D P, Ba J L. Adam: A method for stochastic optimization. In: Proceedings of the 3rd International Conference on Learning Representations. San Diego, USA: 2015. 1−15 [22] Salton G, Buckley C. Term-weighting approaches in automatic text retrieval. Information processing & management, 1988, 24(5): 513-523. [23] Suykens J A K, Vandewalle J. Least Squares Support Vector Machine Classifiers. Neural processing letters, 1999, 9(3): 293-300. doi: 10.1023/A:1018628609742 [24] Kim Y. Convolutional neural networks for sentence classification. In: Proceedings of the 19th conference on Empirical Methods in Natural Language. Doha, Qatar: 2014. 1746−1751 [25] Luo B F, Feng Y S, Xu J B, Zhang X, Zhao D Y. Learning to predict charges for criminal cases with legal basis. In: Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Copenhagen, Denmark: 2017. 2727−2736 [26] Zhong H X, Zhang Z Y, Liu Z Y, Sun M S. Open chinese language pre-trained model zoo [Online], available: https://github.com/thunlp/openclap, March 6, 2021. -

下载:

下载: