
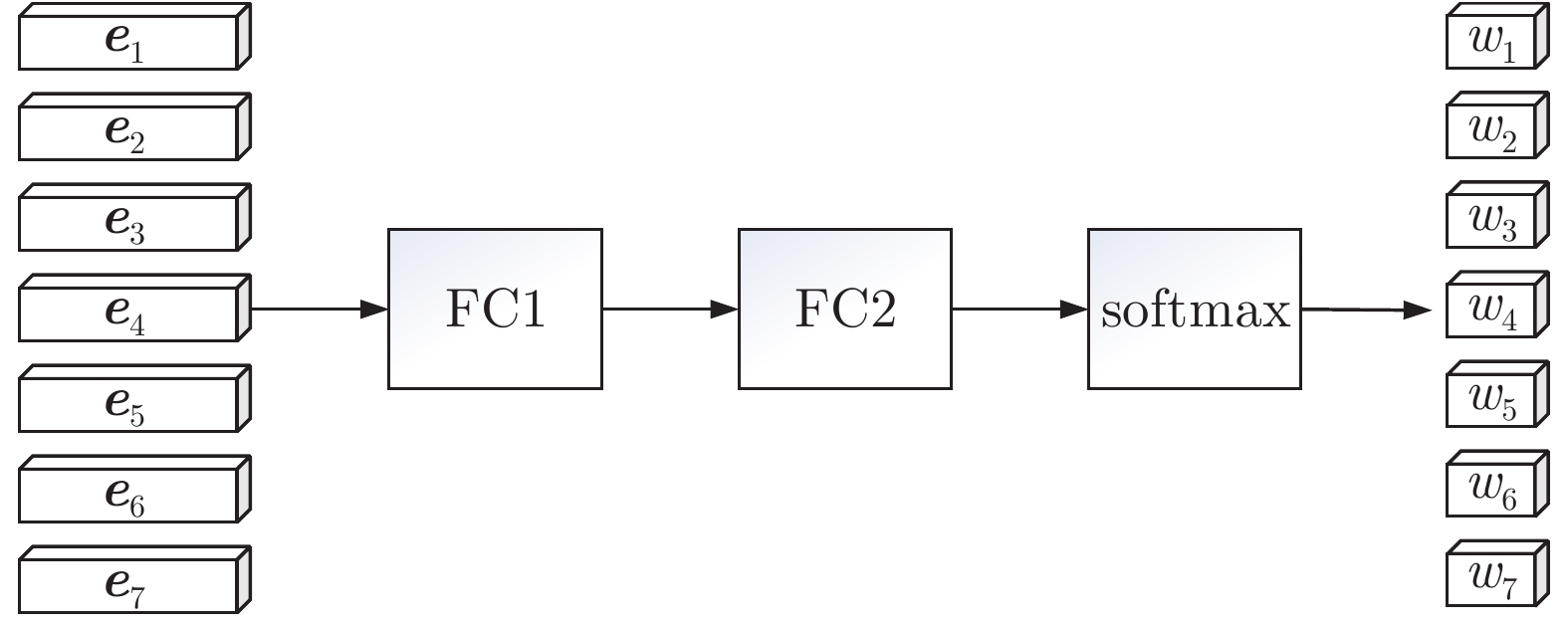

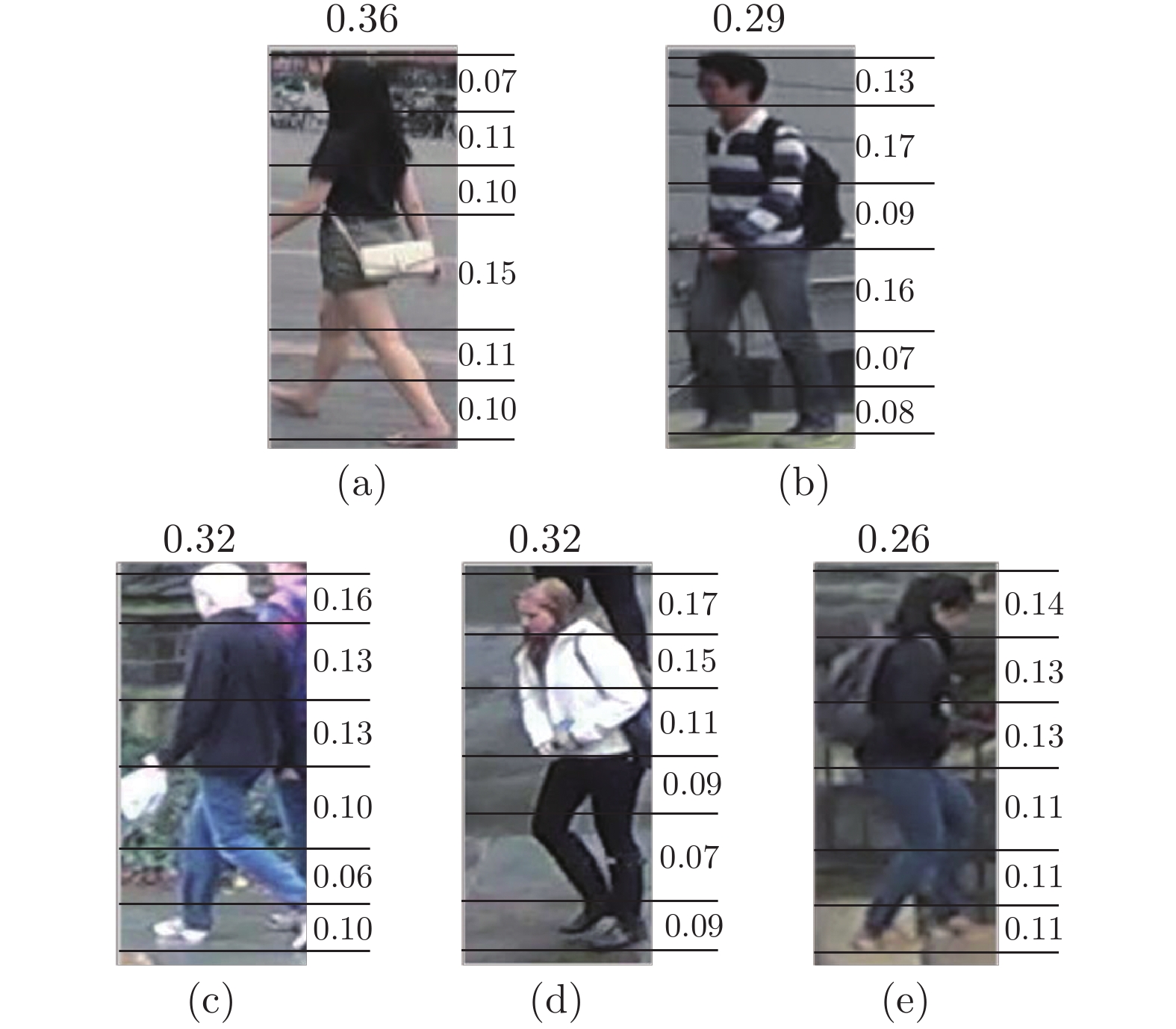

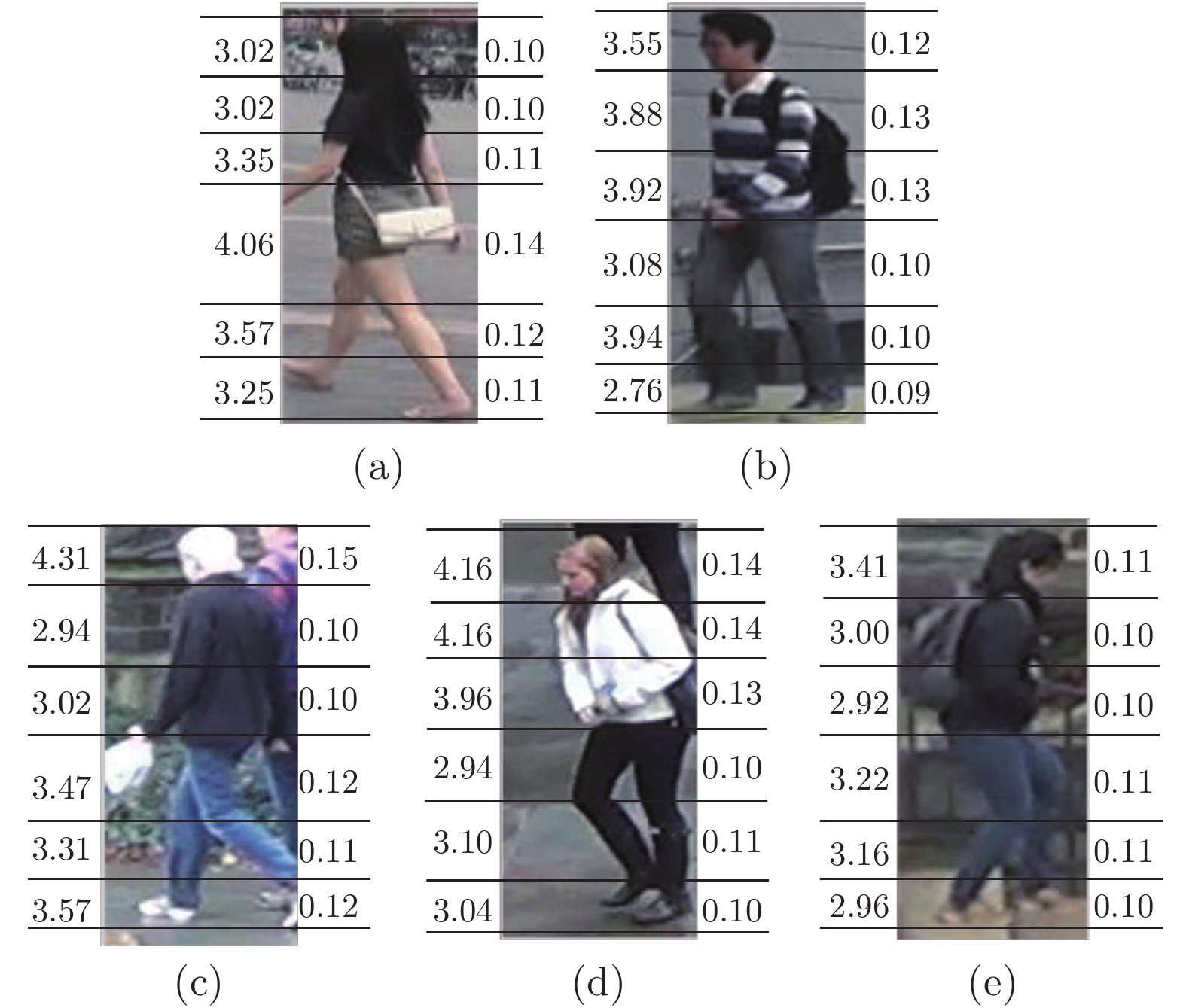

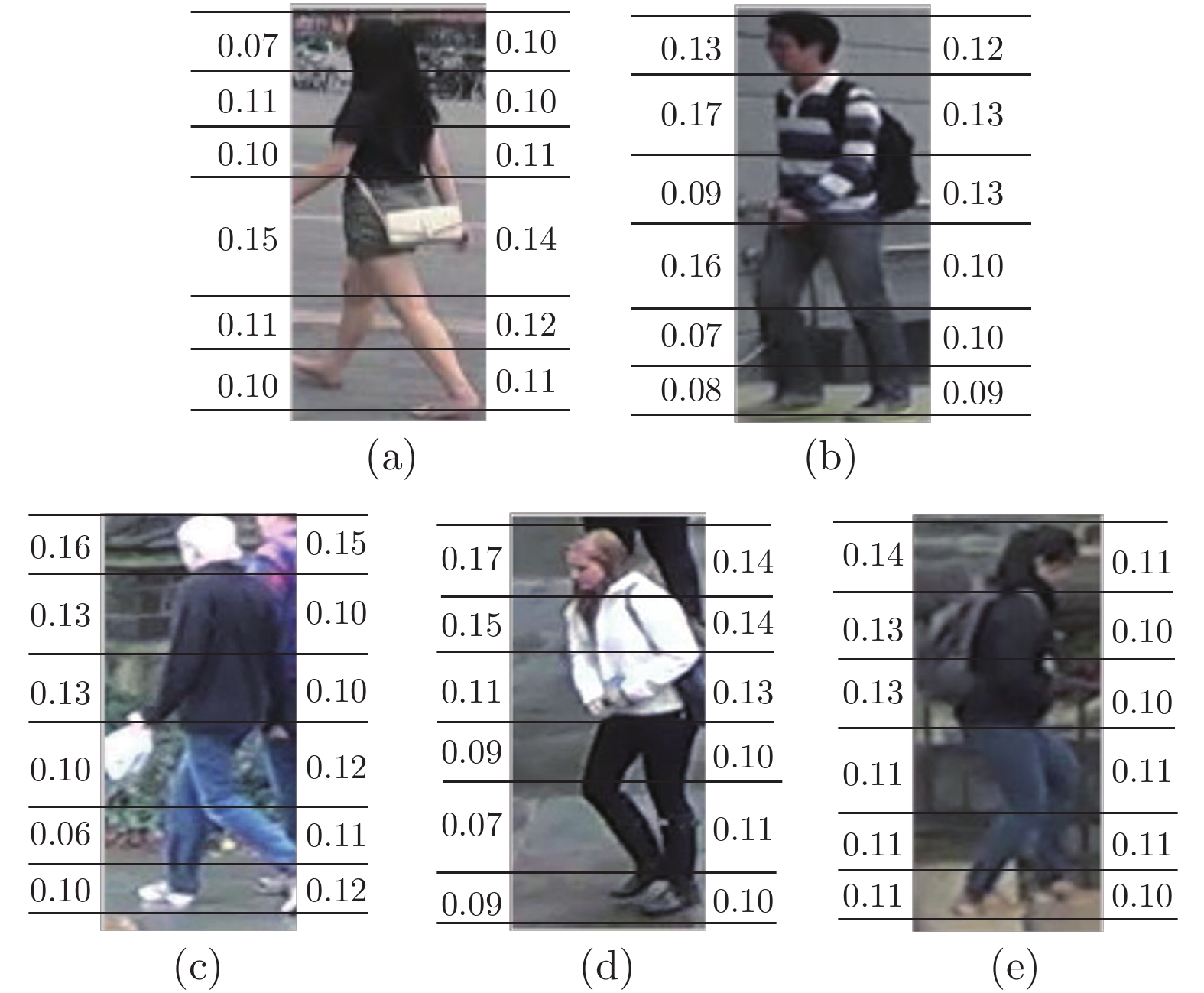

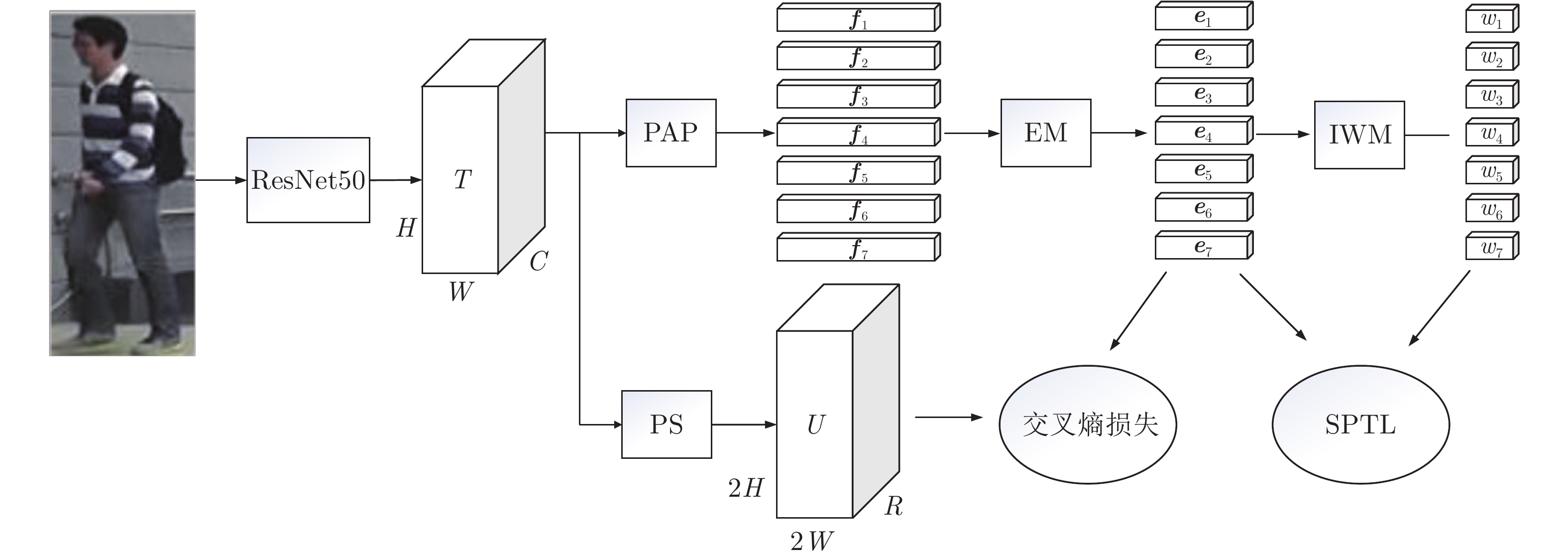

Abstract: Most person re-identification (ReID) methods use the attention mechanism only as an auxiliary means of extracting salient features, and lack a quantitative study of how much attention the network pays to a person image. To address this, we propose an interpretable attention part model (IAPM). The model has three advantages: 1) it uses attention masks to extract part features, which solves the part misalignment problem; 2) it includes an interpretable weight generation module (IWM) that generates interpretable weights according to the saliency of each part; 3) it introduces a salient part triplet loss (SPTL) for training the IWM, which improves both recognition accuracy and interpretability. Experiments on three mainstream datasets show that the proposed method outperforms existing ReID methods. Finally, a crowd-based subjective evaluation compares the relative magnitudes of the interpretable weights generated by the IWM with human intuitive judgment scores, showing that the method has good interpretability.
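To make the idea of part weights feeding a triplet loss concrete, the following is a minimal sketch of a generic margin-based triplet loss computed on weighted part distances. It is not the paper's SPTL, whose exact formulation is not given in this section. The function names, the softmax normalization of the weights, the Euclidean per-part distance, and the margin values are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw per-part saliency scores into weights that sum to 1 (assumed normalization)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_part_distance(parts_a, parts_b, weights):
    """Sum of per-part Euclidean distances, each scaled by its part weight."""
    return sum(
        w * math.dist(fa, fb)
        for w, fa, fb in zip(weights, parts_a, parts_b)
    )


def triplet_loss(anchor, positive, negative, weights, margin):
    """Generic hinge-style triplet loss on weighted part distances (hypothetical form)."""
    d_pos = weighted_part_distance(anchor, positive, weights)
    d_neg = weighted_part_distance(anchor, negative, weights)
    return max(0.0, d_pos - d_neg + margin)


# Toy example: two parts per image, each a 2-D feature vector.
weights = softmax([0.0, 0.0])            # equal saliency for both parts
anchor = [[0.0, 0.0], [0.0, 0.0]]
positive = [[3.0, 4.0], [0.0, 0.0]]      # same identity, differs in part 1
negative = [[0.0, 0.0], [6.0, 8.0]]      # other identity, differs in part 2
loss = triplet_loss(anchor, positive, negative, weights, margin=1.2)
```

In this sketch, a part with a larger weight contributes more to the distance, so the loss pushes hardest on the parts judged most salient.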


Table 1 Experimental environment

| Software/hardware environment | Configuration |
| --- | --- |
| Experimental platform | PyTorch |
| GPU | NVIDIA Tesla P100 |
| Memory | 40 GB |
| GPU memory | 16 GB |

Table 2 Experimental parameters

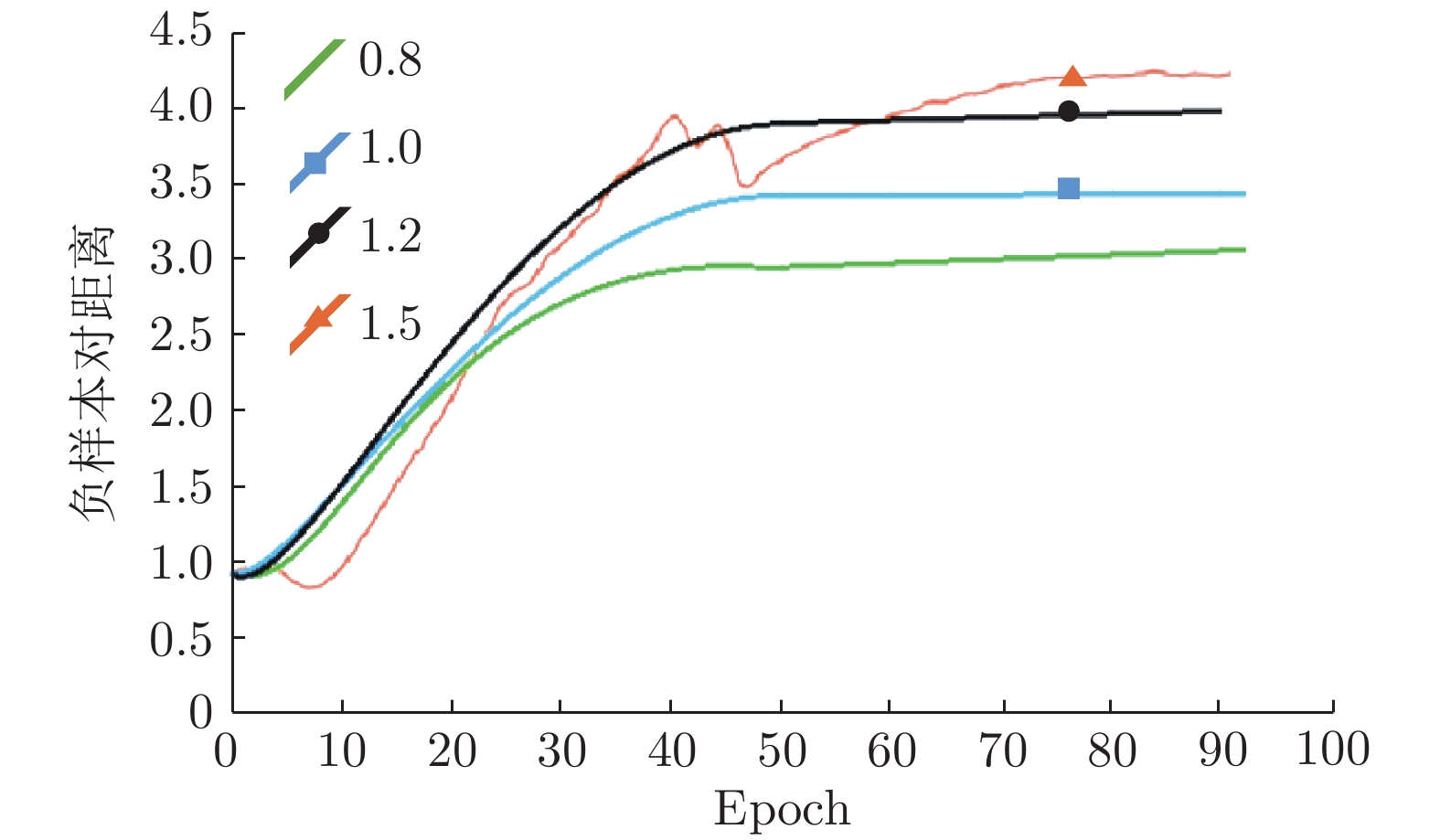

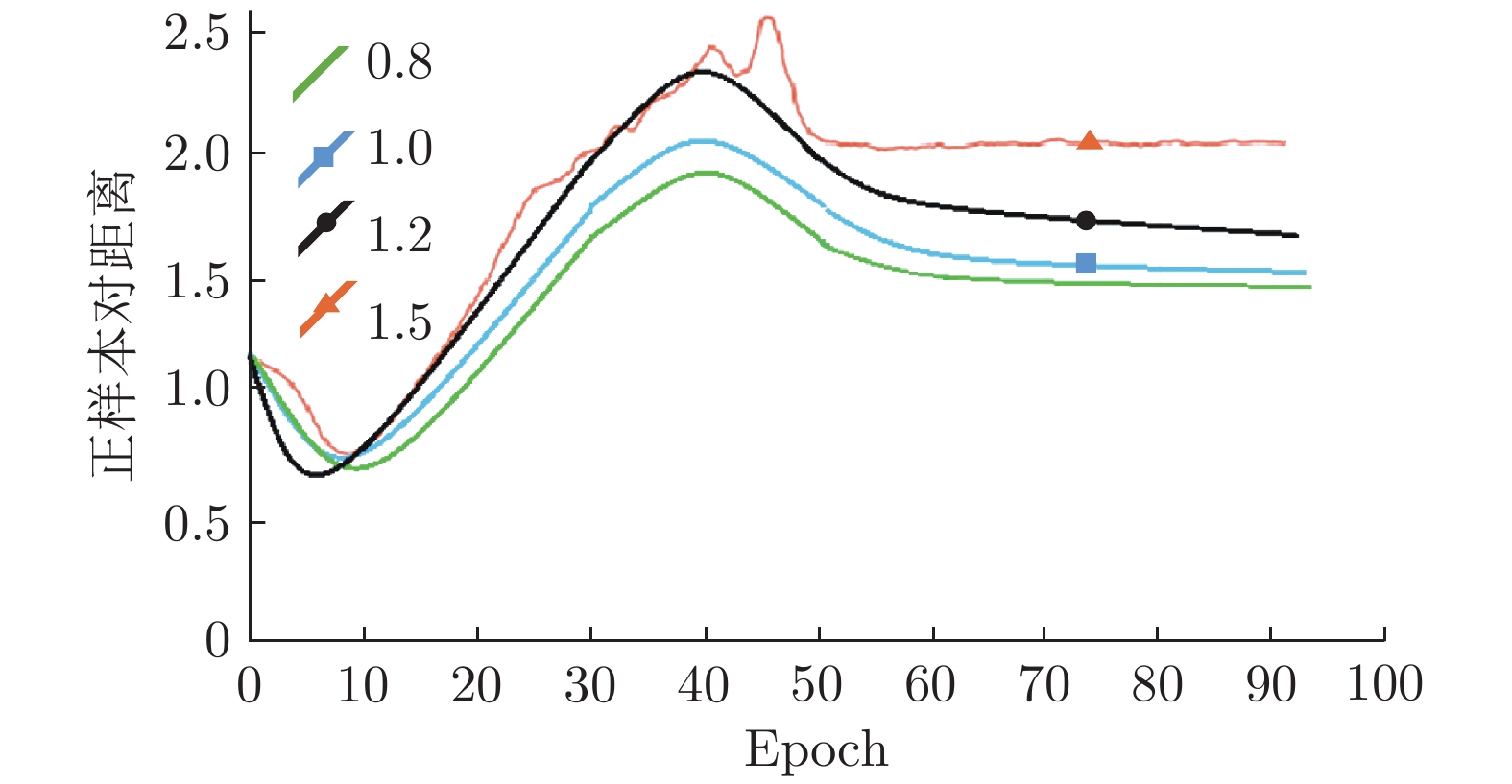

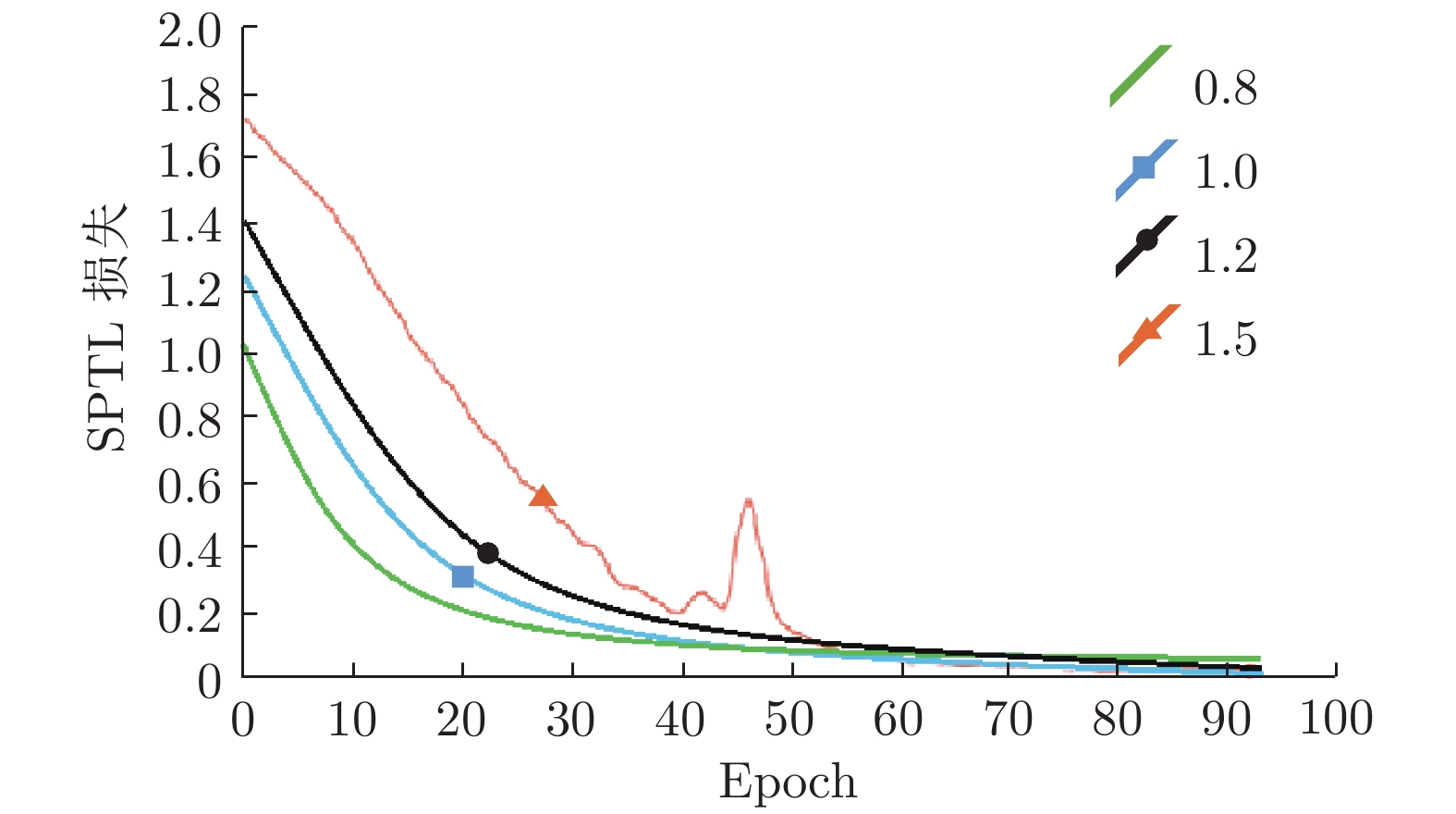

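| Experimental parameter | Value |
| --- | --- |
| Input image size (pixels) | $384\times 128$ |
| Number of iterations | 100 |
| Optimizer | SGD |
| Momentum | 0.9 |
| Weight decay | $5\times10^{-4}$ |
| Batch size | 128 |
| Salient part triplet loss $\alpha$ | 1.2 |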
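As an illustration of how the momentum and weight-decay settings listed for the optimizer interact, here is a minimal sketch of one SGD update in plain Python. It follows the common formulation in which the L2 penalty is added to the gradient before the momentum buffer is updated. Whether the experiments used exactly this variant is an assumption, and the learning rate `lr` is a hypothetical value because it is not listed among the parameters.

```python
def sgd_step(params, grads, velocity, lr, momentum=0.9, weight_decay=5e-4):
    """One SGD update with momentum and L2 weight decay.

    Returns new parameter and velocity lists; inputs are left unchanged.
    """
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p   # fold the L2 penalty into the gradient
        v = momentum * v + g       # update the momentum buffer
        new_params.append(p - lr * v)
        new_velocity.append(v)
    return new_params, new_velocity


# Toy usage: a single scalar parameter, two steps with the same gradient.
# lr=0.1 is a placeholder, not a value from the paper.
params, velocity = [1.0], [0.0]
params, velocity = sgd_step(params, [0.5], velocity, lr=0.1)
params, velocity = sgd_step(params, [0.5], velocity, lr=0.1)
```

With a momentum of 0.9, the second step is larger than the first even though the gradient is unchanged, because the buffer carries over most of the previous update.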

Table 3 Performance comparison with EANet (%)

| Method | Market-1501 | DukeMTMC-reID | CUHK03 |
| --- | --- | --- | --- |
| PAP-6P | 94.3 (84.3) | 85.6 (72.4) | 68.1 (62.4) |
| PAP | 94.5 (84.9) | 86.1 (73.3) | 72.0 (66.2) |
| PAP-S-PS | 94.6 (85.6) | 87.5 (74.6) | 72.5 (66.8) |
| IAPM-6P (ours) | 95.0 (85.3) | 86.9 (74.3) | 72.5 (65.2) |
| IAPM-9P (ours) | 95.1 (86.0) | 87.9 (75.6) | 72.6 (67.4) |
| IAPM (ours) | 95.2 (86.3) | 88.0 (75.7) | 72.6 (67.2) |

Table 4 Performance comparison with other methods (%)

| Method | Market-1501 | DukeMTMC-reID | CUHK03 |
| --- | --- | --- | --- |
| Verif-Identify[38] | 79.5 (59.9) | 68.9 (49.3) | - |
| MSCAN[29] | 80.8 (57.5) | - | - |
| MGCAM[12] | 83.8 (74.3) | - | 50.1 (50.2) |
| Part-Aligned[39] | 91.7 (79.6) | 84.4 (69.3) | - |
| SPReID[40] | 92.5 (81.3) | 84.4 (71.0) | - |
| AlignedReID[41] | 91.8 (79.3) | - | - |
| Deep-Person[42] | 92.3 (79.6) | 80.9 (64.8) | - |
| PCB[7] | 85.3 (68.5) | 73.2 (52.8) | 43.8 (38.9) |
| PCB + RPP[7] | 93.8 (81.6) | 83.3 (69.2) | 63.7 (57.5) |
| HA-CNN[43] | 91.2 (75.7) | 80.5 (63.8) | 44.4 (41.0) |
| Mancs[44] | 93.1 (82.3) | 84.9 (71.8) | 69.0 (63.9) |
| P2-Net[45] | 95.1 (85.6) | 86.5 (73.1) | 74.9 (68.9) |
| M3 + ResNet50[46] | 95.4 (82.6) | 84.7 (68.5) | 66.9 (60.7) |
| IAPM (ours) | 95.2 (86.3) | 88.0 (75.7) | 72.6 (67.2) |

Note: "-" indicates that the corresponding result is not reported in the cited work.

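Table 5 Ablation experiment 1

| Model | Rank-1 (%) | mAP (%) |
| --- | --- | --- |
| Baseline | 92.4 | 80.5 |
| Baseline + IWM + SPTL | 95.0 | 86.1 |
| Baseline + IWM + SPTL + center loss | **95.2** | **86.3** |

Note: Bold indicates the best result in each column.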

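Table 6 Ablation experiment 2

| Number of body parts | Rank-1 (%) | mAP (%) |
| --- | --- | --- |
| 6 | 95.0 | 85.3 |
| 7 | **95.2** | **86.3** |
| 9 | 95.1 | 86.0 |

Note: Bold indicates the best result in each column.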

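Table 7 Ablation experiment 3

| $\alpha$ | Rank-1 (%) | mAP (%) |
| --- | --- | --- |
| 0.1 | 94.4 | 85.2 |
| 0.5 | 94.5 | 85.3 |
| 0.8 | 94.8 | 85.7 |
| 1.0 | 94.7 | 85.6 |
| 1.2 | **95.2** | **86.3** |
| 1.5 | 94.6 | 85.6 |
| 2.0 | 94.7 | 85.3 |
| 5.0 | 93.5 | 83.5 |
| 10.0 | 93.3 | 81.0 |

Note: Bold indicates the best result in each column.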

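Table 8 Ablation experiment 4

| $\lambda$ | Rank-1 (%) | mAP (%) |
| --- | --- | --- |
| 0.2 | 94.4 | 85.4 |
| 0.4 | 94.8 | 85.4 |
| 0.6 | 94.4 | 85.1 |
| 0.8 | 94.8 | 85.7 |
| 1.0 | **95.2** | **86.3** |

Note: Bold indicates the best result in each column.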
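The tables above report Rank-1 and mAP. As a reminder of what these two retrieval metrics measure, here is a minimal sketch that computes both from per-query ranked match lists. The function names and the toy data are hypothetical, and benchmark-specific details of the evaluation protocol (such as filtering certain gallery images per query) are omitted.

```python
def rank1(ranked_matches):
    """Fraction of queries whose top-ranked gallery item is a correct match."""
    hits = sum(1 for matches in ranked_matches if matches and matches[0])
    return hits / len(ranked_matches)


def average_precision(matches):
    """AP for one query: mean of the precision at each correct-match position."""
    hits, precisions = 0, []
    for k, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(ranked_matches):
    """mAP: average of the per-query AP values."""
    return sum(average_precision(m) for m in ranked_matches) / len(ranked_matches)


# Toy data: each inner list is one query's gallery ranking,
# True where the gallery item shares the query's identity.
queries = [
    [True, False, True],
    [False, True, False],
]
r1 = rank1(queries)                    # 1 of 2 queries has a correct top hit
m_ap = mean_average_precision(queries)
```

Rank-1 only looks at the first returned item, while mAP rewards placing every correct match high in the ranking, which is why the two numbers can move differently across the ablation settings.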

[1] Yi D, Lei Z, Liao S C, Li S Z. Deep metric learning for person re-identification. In: Proceedings of the 22nd IEEE International Conference on Pattern Recognition. Stockholm, Sweden: IEEE, 2014. 34-39

[2] Liao S C, Hu Y, Zhu X Y, Li S Z. Person re-identification by local maximal occurrence representation and metric learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 2197-2206

[3] Luo Hao, Jiang Wei, Fan Xing, Zhang Si-Peng. A survey on deep learning based person re-identification. Acta Automatica Sinica, 2019, 45(11): 2032-2049.

[4] Rumelhart D E, Hinton G E, Williams R J. Learning representations by back-propagating errors. Nature, 1986, 323(6088): 533-536. doi: 10.1038/323533a0

[5] Wu Fei, Liao Bin-Bing, Han Ya-Hong. Interpretability for deep learning. Aero Weaponry, 2019, 26(1): 43-50.

[6] Chen W H, Chen X T, Zhang J G, Huang K Q. A multi-task deep network for person re-identification. In: Proceedings of the 31st Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2017. 3988-3994

[7] Sun Y G, Zheng L, Yang Y, Tian Q, Wang S J. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In: Proceedings of the European Conference on Computer Vision. Munich, Germany: Springer, 2018. 480-496

[8] Zhou S P, Wang J J, Wang J Y, Gong Y H, Zheng N N. Point to set similarity based deep feature learning for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 5028-5037

[9] Sarfraz M S, Schumann A, Eberle A, Stiefelhagen R. A pose-sensitive embedding for person re-identification with expanded cross neighborhood re-ranking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 420-429

[10] Zhao L M, Li X, Zhuang Y T, Wang J D. Deeply-learned part-aligned representations for person re-identification. In: Proceedings of the IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 3239-3248

[11] Zhou S P, Wang J J, Meng D Y, Liang Y D, Gong Y H, Zheng N N. Discriminative feature learning with foreground attention for person re-identification. IEEE Transactions on Image Processing, 2019, 28(9): 4671-4684.

[12] Song C F, Huang Y, Ouyang W L, Wang L. Mask-guided contrastive attention model for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 1179-1188

[13] Xu J, Zhao R, Zhu F, Wang H M, Ouyang W L. Attention-aware compositional network for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2119-2128

[14] Tay C P, Roy S, Yap K H. AANet: Attribute attention network for person re-identifications. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 7134-7143

[15] Zhou S P, Wang F, Huang Z Y, Wang J J. Discriminative feature learning with consistent attention regularization for person re-identification. In: Proceedings of the IEEE International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 8039-8048

[16] Huang H J, Yang W J, Chen X T, Zhao X, Huang K Q, Lin J B, et al. EANet: Enhancing alignment for cross-domain person re-identification [Online], available: http://arxiv.org/abs/1812.11369, October 21, 2020

[17] Bach S, Binder A, Montavon G, Klauschen F, Muller K, Samek W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS One, 2015, 10(7): e0130140. doi: 10.1371/journal.pone.0130140

[18] Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning deep features for discriminative localization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 2921-2929

[19] Szegedy C, Zaremba W, Sutskever I, Bruna J, Erhan D, Goodfellow I J, et al. Intriguing properties of neural networks [Online], available: http://arxiv.org/abs/1312.6199, October 21, 2020

[20] Bau D, Zhou B, Khosla A, Oliva A, Torralba A. Network dissection: Quantifying interpretability of deep visual representations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 3319-3327

[21] Dong Y P, Su H, Zhu J, Zhang B. Improving interpretability of deep neural networks with semantic information. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 975-983

[22] Zhang Q S, Wu Y N, Zhu S C. Interpretable convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8827-8836

[23] Zheng L, Yang Y, Hauptmann A G. Person re-identification: Past, present and future [Online], available: http://arxiv.org/abs/1610.02984, October 21, 2020

[24] Zheng L, Zhang H H, Sun S Y, Chandraker M, Yang Y, Tian Q. Person re-identification in the wild. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 3346-3355

[25] Lin Y T, Zheng L, Zheng Z D, Wu Y, Hu Z L, Yan C G, et al. Improving person re-identification by attribute and identity learning. Pattern Recognition, 2019, 95: 151-161. doi: 10.1016/j.patcog.2019.06.006

[26] Geng M Y, Wang Y W, Xiang T, Tian Y H. Deep transfer learning for person re-identification [Online], available: http://arxiv.org/abs/1611.05244, October 21, 2020

[27] Varior R R, Haloi M, Wang G. Gated siamese convolutional neural network architecture for human re-identification. In: Proceedings of the European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 791-808

[28] Hermans A, Beyer L, Leibe B. In defense of the triplet loss for person re-identification [Online], available: http://arxiv.org/abs/1703.07737, October 21, 2020

[29] Li D W, Chen X T, Zhang Z, Huang K Q. Learning deep context-aware features over body and latent parts for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 7398-7407

[30] Fang P F, Zhou J M, Roy S K, Petersson L, Harandi M. Bilinear attention networks for person retrieval. In: Proceedings of the IEEE International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 8029-8038

[31] Liu H, Feng J S, Qi M B, Jiang J G, Yan S C. End-to-end comparative attention networks for person re-identification. IEEE Transactions on Image Processing, 2017, 26(7): 3492-3506. doi: 10.1109/TIP.2017.2700762

[32] Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9(8): 1735-1780. doi: 10.1162/neco.1997.9.8.1735

[33] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 770-778

[34] Wen Y D, Zhang K P, Li Z F, Qiao Y. A discriminative feature learning approach for deep face recognition. In: Proceedings of the European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 499-515

[35] Zheng L, Shen L, Tian L, Wang S J, Wang J D, Tian Q. Scalable person re-identification: A benchmark. In: Proceedings of the IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 1116-1124

[36] Ristani E, Solera F, Zou R, Cucchiara R, Tomasi C. Performance measures and a data set for multi-target, multi-camera tracking. In: Proceedings of the European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 17-35

[37] Li W, Zhao R, Xiao T, Wang X G. DeepReID: Deep filter pairing neural network for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 152-159

[38] Zheng Z D, Zheng L, Yang Y. A discriminatively learned CNN embedding for person reidentification. ACM Transactions on Multimedia Computing, Communications, and Applications, 2018, 14(1): Article No. 13.

[39] Suh Y, Wang J, Tang S, Mei T, Lee K M. Part-aligned bilinear representations for person re-identification. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: Springer, 2018. 418-437

[40] Kalayeh M M, Basaran E, Gökmen M, Kamasak M E, Shah M. Human semantic parsing for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 1062-1071

[41] Zhang X, Luo H, Fan X, Xiang W L, Sun Y X, Xiao Q Q, et al. AlignedReID: Surpassing human-level performance in person re-identification [Online], available: http://arxiv.org/abs/1711.08184, October 21, 2020

[42] Bai X, Yang M K, Huang T T, Dou Z Y, Yu R, Xu Y C. Deep-person: Learning discriminative deep features for person re-identification. Pattern Recognition, 2020, 98: 107036. doi: 10.1016/j.patcog.2019.107036

[43] Li W, Zhu X T, Gong S G. Harmonious attention network for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2285-2294

[44] Wang C, Zhang Q, Huang C, Liu W Y, Wang X G. Mancs: A multi-task attentional network with curriculum sampling for person re-identification. In: Proceedings of the European Conference on Computer Vision. Munich, Germany: Springer, 2018. 384-400

[45] Guo J Y, Yuan Y H, Huang L, Zhang C, Yao J G, Han K. Beyond human parts: Dual part-aligned representations for person re-identification. In: Proceedings of the IEEE International Conference on Computer Vision. Seoul, South Korea: IEEE, 2019. 3641-3650

[46] Zhou J H, Su B, Wu Y. Online joint multi-metric adaptation from frequent sharing-subset mining for person re-identification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Virtual Event: IEEE, 2020. 2909-2918
