
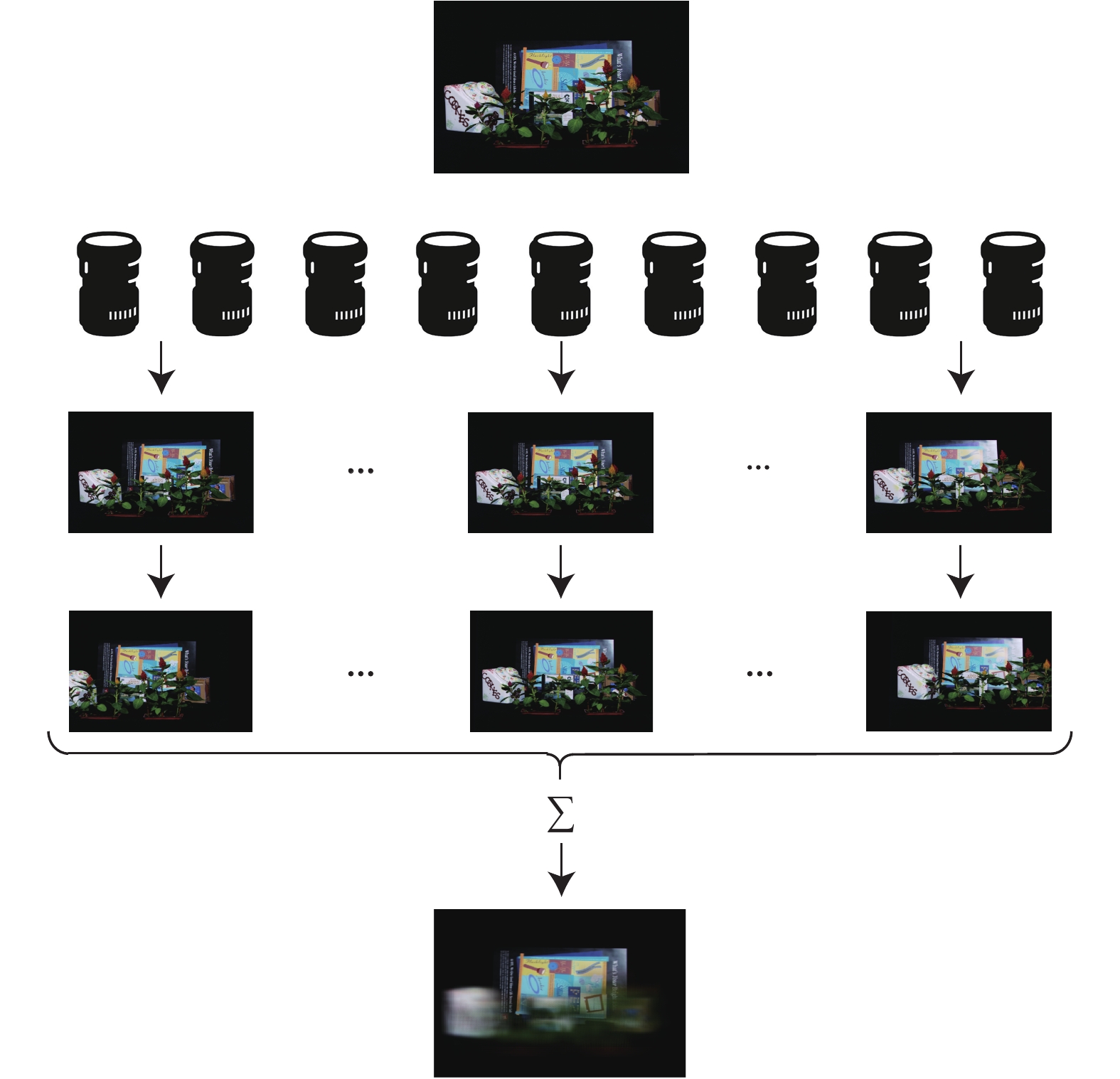

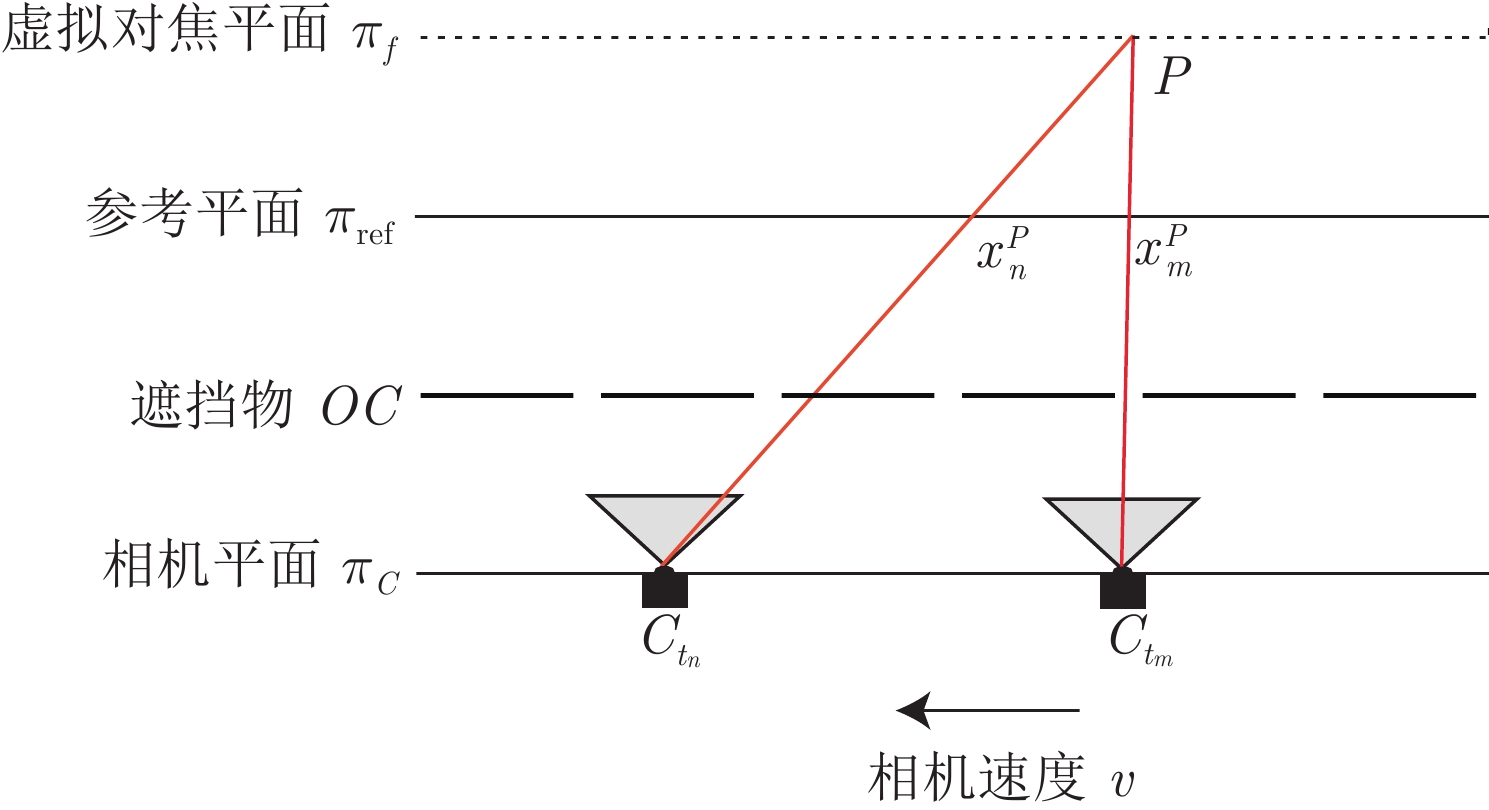

Abstract: Synthetic aperture imaging (SAI) acquires information about a target from multiple viewpoints, which is equivalent to imaging with a large aperture and a shallow depth of field. It can therefore blur out occlusions and image the occluded target. However, under dense occlusions and extreme lighting conditions, SAI with conventional cameras (SAI-C) cannot effectively reconstruct occluded objects, because of the dense disturbance from the occlusions and the low dynamic range of conventional cameras. To address these problems, we propose an SAI method based on event cameras, which produce asynchronous events with extremely low latency and high dynamic range. The low latency lets the event camera observe the scene from almost continuous viewpoints, which eliminates the dense disturbance of the occlusions, and the high dynamic range lets it handle imaging under extreme lighting. By analyzing the relationship between scene brightness changes and the events output by the event camera, the occluded objects are reconstructed from the focused events. Experimental results show that, compared with SAI-C, the proposed method greatly improves the contrast, sharpness, peak signal-to-noise ratio (PSNR), and structural similarity index measure (SSIM) of the reconstructed images under dense occlusions. Under extreme lighting conditions, the proposed method effectively solves the over/under-exposure problem and reconstructs the occluded objects clearly.
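To illustrate what "focusing" events can mean in practice, here is a minimal sketch, not the authors' implementation. It assumes a pinhole camera translating along the x axis at constant speed, a focal length expressed in pixels, and events given as `(x, y, t, polarity)` tuples; the function names `refocus_events` and `accumulate` and all parameters are hypothetical. Under those assumptions, a point at depth `depth` seen at pixel `x` at time `t` maps to `x + focal_px * speed * (t - t_ref) / depth` in the reference view.

```python
def refocus_events(events, focal_px, speed, depth, t_ref=0.0):
    """Shift events to the reference view for one chosen focus depth.

    Assumed model (not from the paper): pinhole camera moving along x
    at constant `speed`; `focal_px` is the focal length in pixels.
    """
    refocused = []
    for x, y, t, polarity in events:
        # Disparity between the current and the reference camera position.
        shift = focal_px * speed * (t - t_ref) / depth
        refocused.append((x + shift, y, t, polarity))
    return refocused


def accumulate(events, width, height):
    """Count refocused events per pixel (polarity is ignored here)."""
    image = [[0] * width for _ in range(height)]
    for x, y, _t, _polarity in events:
        col, row = int(round(x)), int(round(y))
        if 0 <= col < width and 0 <= row < height:
            image[row][col] += 1
    return image


# One scene point at depth 2.0 observed at three instants while the camera moves.
events = [(15.0, 0, 0.0, 1), (10.0, 0, 0.2, 1), (5.0, 0, 0.4, 1)]
in_focus = accumulate(refocus_events(events, 100.0, 0.5, 2.0), 32, 1)
out_of_focus = accumulate(refocus_events(events, 100.0, 0.5, 1.0), 32, 1)
```

With the correct depth, the three events land on the same pixel; with a wrong depth they spread over several pixels, which is the blurring effect that SAI relies on to suppress occluders away from the focal plane.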


Fig. 1 Comparison of conventional-camera-based SAI and event-camera-based SAI. The first column shows the experimental scene and the object image. Columns 2 to 4 compare the results of conventional-camera-based SAI with those of the proposed event-camera-based SAI under dense occlusions, extremely high light, and extremely low light conditions, respectively

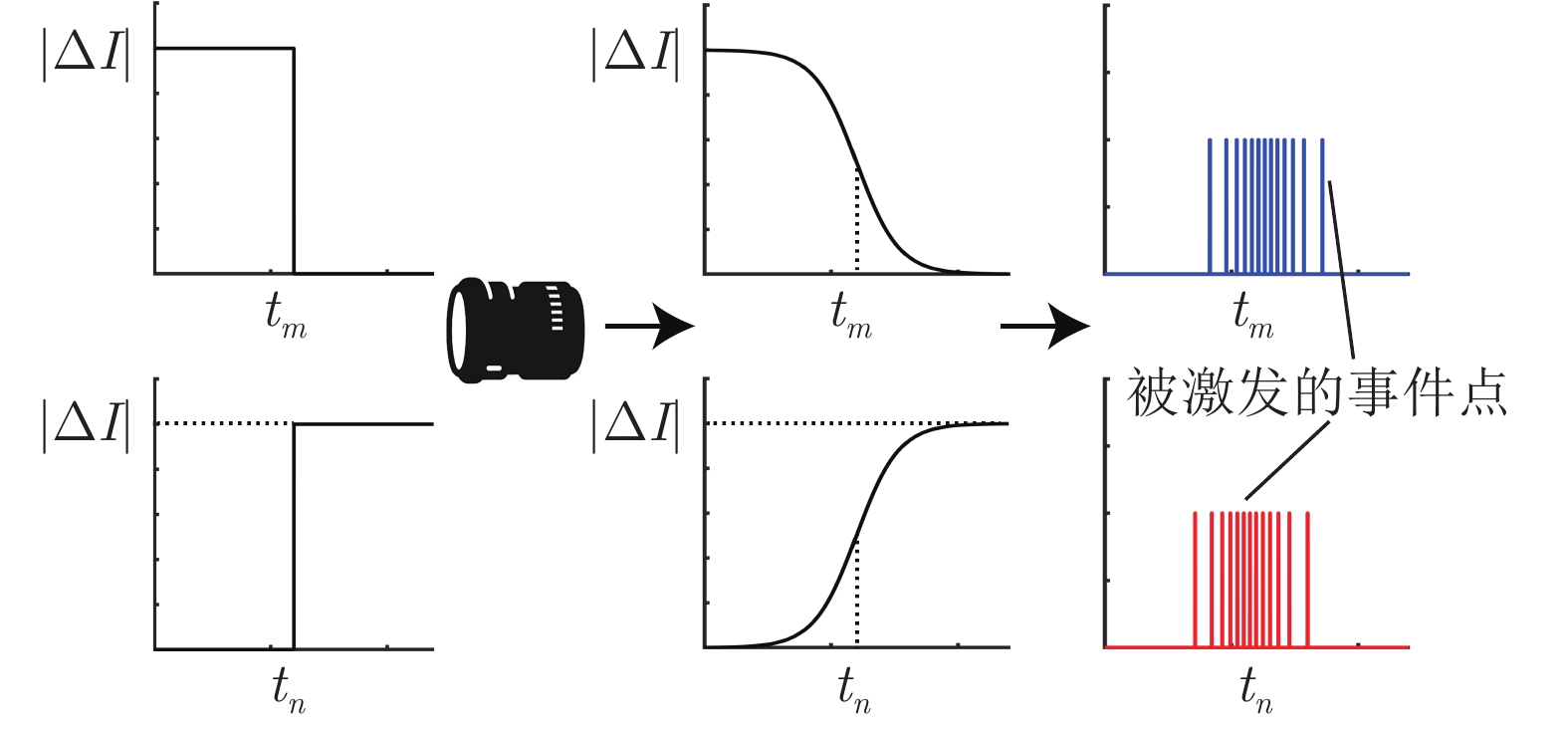

Fig. 4 An event camera generates events when the brightness changes. The optical imaging system is equivalent to a low-pass filter, so a sudden change in scene brightness becomes a continuous brightness change inside the camera. The event camera responds to this change and generates positive (bottom-right inset) or negative (top-right inset) events
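The behavior described in this caption can be sketched for a single pixel as follows. This is a simplified illustration rather than the paper's model: it assumes the commonly used contrast-threshold rule, in which an event fires whenever the log-brightness has moved by a fixed `threshold` from the level stored at the previous event. The function name `generate_events` and the default threshold are hypothetical.

```python
import math


def generate_events(intensities, timestamps, threshold=0.2):
    """Simulate events for one pixel from a sampled brightness signal.

    Assumed model: an event fires each time the log-brightness moves by
    `threshold` away from the reference level set at the previous event.
    Returns a list of (timestamp, polarity) with polarity +1 or -1.
    """
    events = []
    ref = math.log(intensities[0])  # reference log-brightness
    for t, value in zip(timestamps[1:], intensities[1:]):
        diff = math.log(value) - ref
        # A large change may cross the threshold several times.
        while abs(diff) >= threshold:
            polarity = 1 if diff > 0 else -1
            events.append((t, polarity))
            ref += polarity * threshold
            diff = math.log(value) - ref
    return events


# Brightness rises, then drops: positive events followed by negative ones.
events = generate_events([1.0, 2.0, 4.0, 0.9], [0, 1, 2, 3], threshold=0.5)
```

A rising brightness produces positive events and a falling brightness produces negative ones, while a constant signal produces no events at all.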

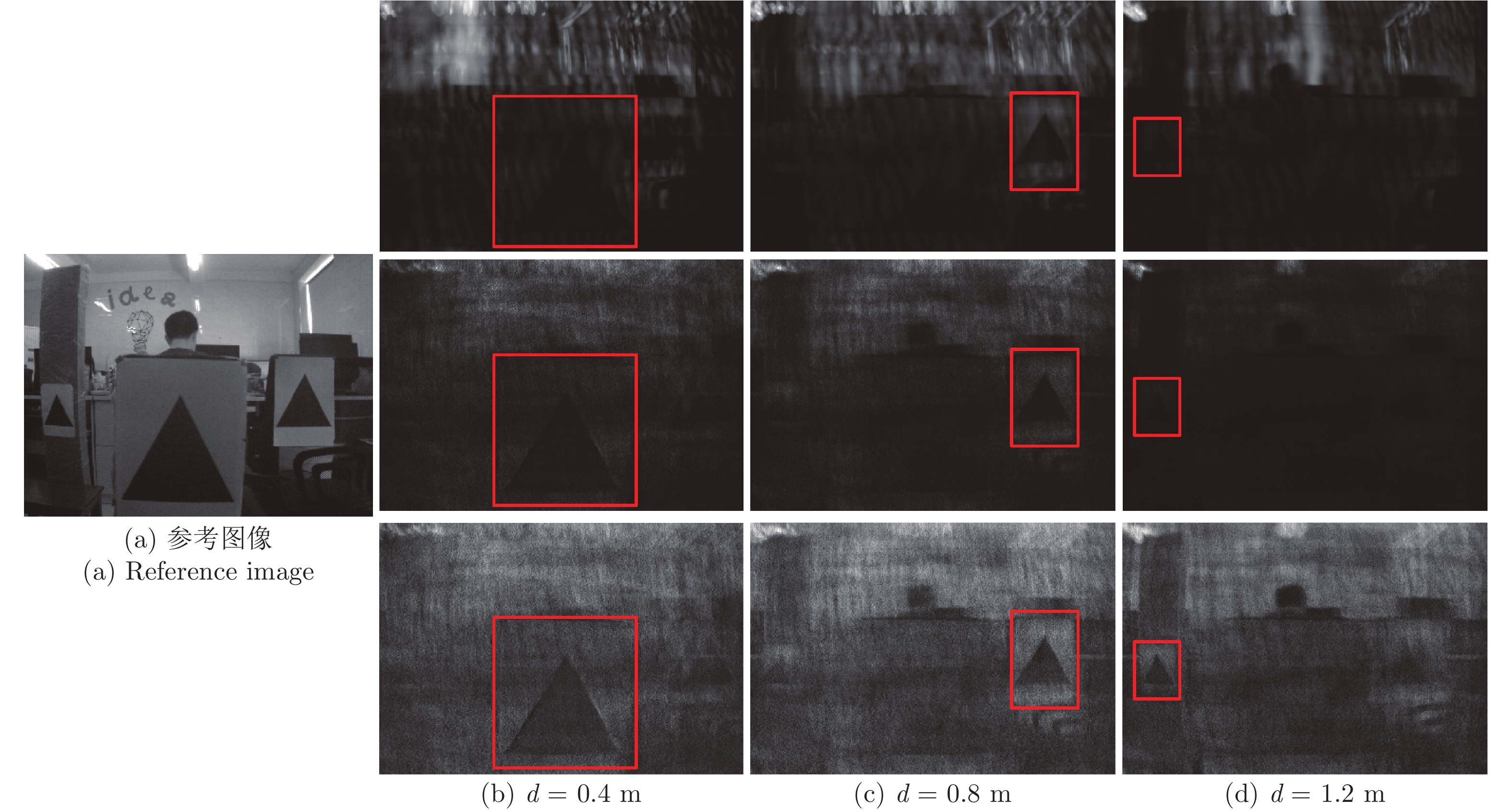

Fig. 9 Comparison of SAI results at different focus depths under dense bush occlusion (the first row shows the SAI-C results, the second row shows the results reconstructed with fixed thresholds, and the third row shows the results reconstructed with adaptive thresholds)

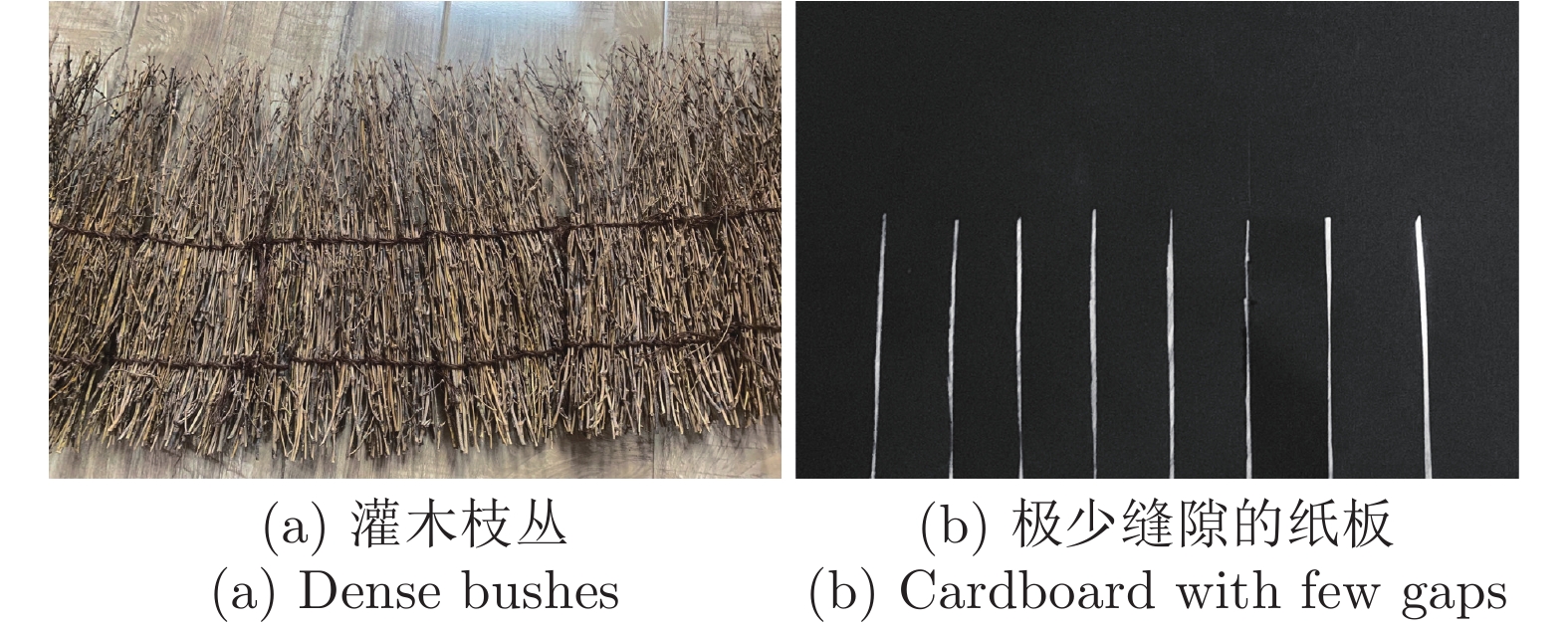

Fig. 11 Comparison of SAI results under different occlusion densities (the first row corresponds to extremely dense occlusion, the second row to normal dense occlusion, and the third row to sparse occlusion)

Fig. 12 Comparison of SAI results under extreme light conditions (the first and second rows show the geometric object and the teddy bear under extremely high light, and the third and fourth rows show the geometric object and the teddy bear under extremely low light)

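Table 1 Quantitative comparison of multi-depth SAI results under dense bush occlusion

| Focus depth (m) | Method | PSNR (dB) | SSIM |
|---|---|---|---|
| 0.4 | Conventional method | 16.72 | 0.2167 |
| 0.4 | Fixed-threshold reconstruction | 17.73 | 0.2919 |
| 0.4 | Adaptive-threshold reconstruction | 21.13 | 0.3061 |
| 0.8 | Conventional method | 15.41 | 0.2693 |
| 0.8 | Fixed-threshold reconstruction | 15.42 | 0.2782 |
| 0.8 | Adaptive-threshold reconstruction | 21.73 | 0.3136 |
| 1.2 | Conventional method | 17.62 | 0.0916 |
| 1.2 | Fixed-threshold reconstruction | 18.03 | 0.1759 |
| 1.2 | Adaptive-threshold reconstruction | 25.22 | 0.4518 |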

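Table 2 Quantitative comparison of SAI results under dense bush occlusion

| Object type | Method | PSNR (dB) | SSIM |
|---|---|---|---|
| Geometric object | Conventional method | 13.45 | 0.2313 |
| Geometric object | Fixed-threshold reconstruction | 17.57 | 0.2671 |
| Geometric object | Adaptive-threshold reconstruction | 18.03 | 0.2646 |
| Teddy bear | Conventional method | 6.952 | 0.1439 |
| Teddy bear | Fixed-threshold reconstruction | 7.795 | 0.2175 |
| Teddy bear | Adaptive-threshold reconstruction | 9.199 | 0.2334 |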

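Table 3 Quantitative comparison of SAI results under different occlusion densities

| Occlusion density | Method | PSNR (dB) | SSIM |
|---|---|---|---|
| Extremely dense | Conventional method | 11.20 | 0.1476 |
| Extremely dense | Fixed-threshold reconstruction | 17.14 | 0.1884 |
| Extremely dense | Adaptive-threshold reconstruction | 18.52 | 0.1741 |
| Normal dense | Conventional method | 13.37 | 0.4028 |
| Normal dense | Fixed-threshold reconstruction | 18.54 | 0.1840 |
| Normal dense | Adaptive-threshold reconstruction | 19.51 | 0.2037 |
| Sparse | Conventional method | 14.35 | 0.5508 |
| Sparse | Fixed-threshold reconstruction | 11.22 | 0.3384 |
| Sparse | Adaptive-threshold reconstruction | 14.19 | 0.3912 |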
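For readers who want to reproduce a PSNR figure like those in the tables, the standard definition is PSNR = 10 log10(peak^2 / MSE). The following is a minimal sketch that assumes 8-bit grayscale images stored as nested lists with a peak value of 255; the function name `psnr` is hypothetical, and the paper does not state which implementation or preprocessing was used for its numbers.

```python
import math


def psnr(reference, reconstruction, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are nested lists of pixel values; `peak` is the maximum
    possible pixel value (255 is assumed here for 8-bit images).
    """
    ref = [v for row in reference for v in row]
    rec = [v for row in reconstruction for v in row]
    if len(ref) != len(rec):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Every pixel is off by 25.5 (10% of the peak), which gives 20 dB.
value = psnr([[0.0, 0.0], [0.0, 0.0]], [[25.5, 25.5], [25.5, 25.5]])
```

Higher values indicate a reconstruction closer to the reference, so the gap between the conventional and adaptive-threshold rows in the tables reflects a lower mean squared error for the latter.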

