Semi-supervised Classification Model Based on Ladder Network and Improved Tri-training

-

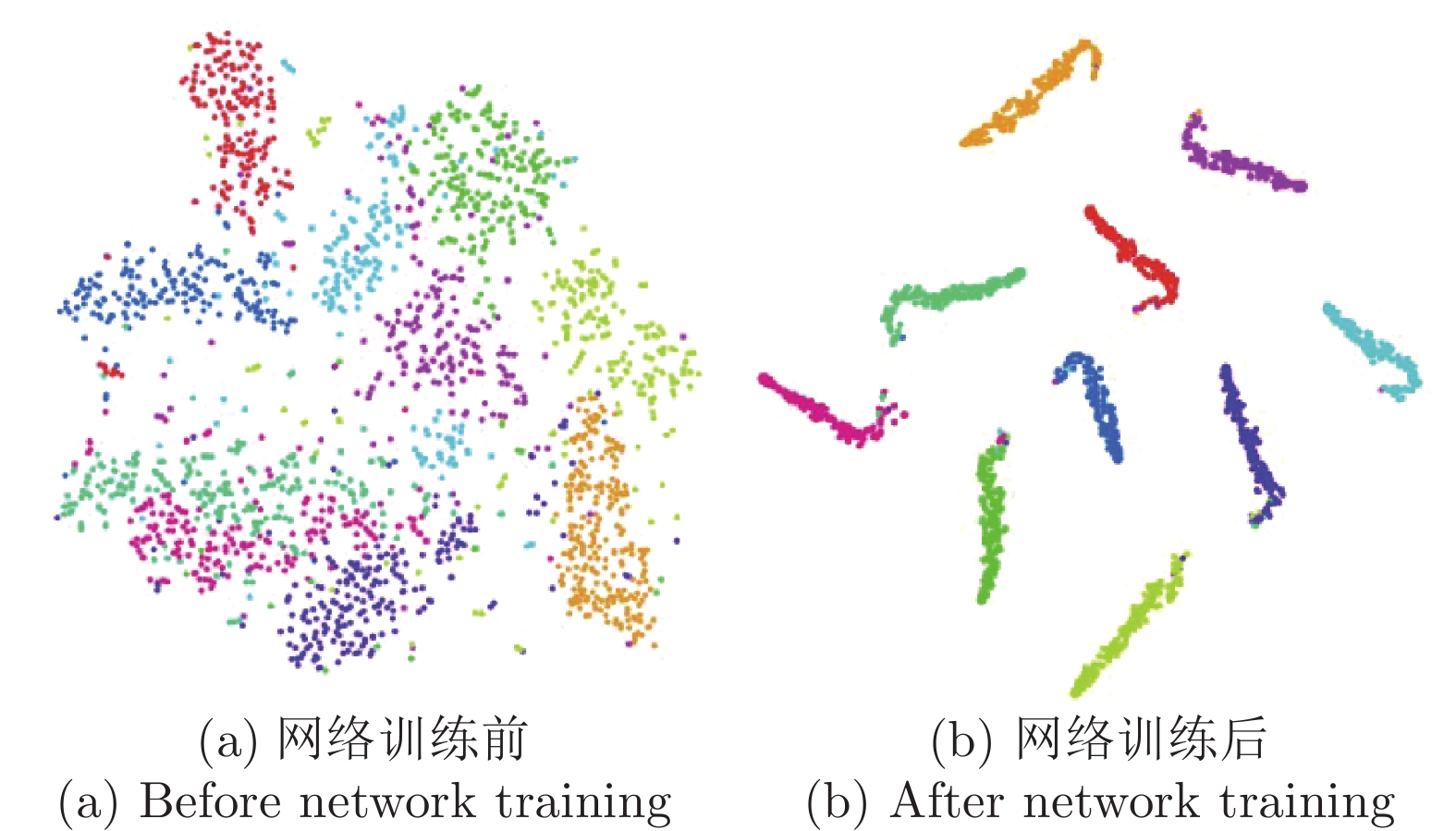

摘要: 为了提高半监督深层生成模型的分类性能, 提出一种基于梯形网络和改进三训练法的半监督分类模型. 该模型在梯形网络框架有噪编码器的最高层添加3个分类器, 结合改进的三训练法提高图像分类性能. 首先, 用基于类别抽样的方法将有标记数据分为3份, 模型以有标记数据的标签误差和未标记数据的重构误差相结合的方式调整参数, 训练得到3个Large-margin Softmax分类器; 接着, 用改进的三训练法对未标记数据添加伪标签, 并对新的标记数据分配不同权重, 扩充训练集; 最后, 利用扩充的训练集更新模型. 训练完成后, 对分类器进行加权投票, 得到分类结果. 模型得到的梯形网络的特征有更好的低维流形表示, 可以有效地避免因为样本数据分布不均而导致的分类误差, 增强泛化能力. 模型分别在MNIST数据库, SVHN数据库和CIFAR10数据库上进行实验, 并且与其他半监督深层生成模型进行了比较, 结果表明本文所提出的模型得到了更高的分类精度.

-

关键词:

- 梯形网络 /

- 改进的三训练法 /

- 半监督学习 /

- Large-margin Softmax分类器

Abstract: In order to improve the classification performance of semi-supervised deep generation models, a new model based on ladder network and improved tri-training algorithm is proposed. This model adds three classifiers to the highest layer of the noisy coding layer of the ladder network, and improves the image classification performance by combining the improved tri-training. First, the labeled data is divided into three parts by using a class-based sampling method. The model adjusts the parameters by combining the labeled error of labeled data and the reconstruction error of unlabeled data, and is trained to get three Large-margin Softmax classifiers. Next, the improved tri-training algorithm is developed to add pseudo-labels to the unlabeled data, and different weights are assigned to the new labeled data to expand the training set. Finally, the expanded training set is applied to update the model. After the above practice, a weighted voting is performed on the classifier to obtain the classification result. The characteristics of the ladder network obtained by this model have better low-dimensional manifold representations, which can effectively avoid classification error caused by uneven distribution of sample data and enhance generalization ability. The model is tested on the MNIST, SVHN and CIFAR10 respectively. In comparison with other semi-supervised deep generation models, test results show that the model proposed in this paper has obtained state-of-the-art classification accuracy over existing semi-supervised learning methods. -

表 1 LN-TT SSC模型及混合方案在SVHN和CIFAR10数据集上的分类精度(%)

Table 1 Classification accuracy of LN-TT SSC model and hybrid scheme on SVHN and CIFAR10 (%)

模型 SVHN CIFAR10 基准实验 $(\Pi$+ softmax) 88.75 83.56 方案 A $(\Pi$+ LM-Softmax) 91.35 86.11 方案 B (VGG19 + softmax) 89.72 84.73 LN-TT SSC 93.05 88.64 表 2 MNIST数据集上的分类精度(%)

Table 2 Classification accuracy on MNIST dataset (%)

表 3 SVHN数据集上的分类精度(%)

Table 3 Classification accuracy on SVHN dataset (%)

-

[1] 刘建伟, 刘媛, 罗雄麟. 半监督学习方法. 计算机学报, 2015, 38(8): 1592-1617 doi: 10.11897/SP.J.1016.2015.01592Liu Jian-Wei, Liu Yuan, Luo Xiong-Lin. Semi-supervised learning. Journal of Computer Science, 2015, 38(8): 1592-1617 doi: 10.11897/SP.J.1016.2015.01592 [2] Chau V T N, Phung N H. Combining self-training and tri-training for course-level student classification. In: Proceedings of the 2018 International Conference on Engineering, Applied Sciences, and Technology, Phuket, Thailand: IEEE, 2018. 1−4 [3] Lu X C, Zhang J P, Li T, Zhang Y. Hyperspectral image classification based on semi-supervised rotation forest. Remote Sensing, 2017, 9(9): 924-938 doi: 10.3390/rs9090924 [4] Cheung E, Li Y. Self-training with adaptive regularization for S3VM. In: Proceedings of the 2017 International Joint Conference on Neural Networks, Anchorage, AR, USA: IEEE, 2017. 3633−3640 [5] Kvan Y, Wadysaw S. Convolutional and recurrent neural networks for face image analysis. Foundations of Computing and Decision Sciences, 2019, 44(3): 331-347 doi: 10.2478/fcds-2019-0017 [6] 罗建豪, 吴建鑫. 基于深度卷积特征的细粒度图像分类研究综述. 自动化学报, 2017, 43(8): 1306-1318Luo Jian-Hao, Wu Jian-Xin. A review of fine-grained image-classification based on depth convolution features. Acta Automatica Sinica, 2017, 43(8): 1306-1318 [7] 林懿伦, 戴星原, 李力, 王晓, 王飞跃. 人工智能研究的新前线: 生成对抗网络. 自动化学报, 2018, 44(5): 775-792Lin Yi-Lun, Dai Xing-Yuan, Li Li, Wang Xiao, Wang Fei-Yue. A new frontier in artificial intelligence research: generative adversarial networks. Acta Automatica Sinica, 2018, 44(5): 775-792 [8] Springenberg J T. Unsupervised and semi-supervised learning with categorical generative adversarial networks. In: Proceedings of the 2005 International Conference on Learning Representations, 2015. 1−20 [9] Salimans T, Goodfellow I, Zaremba W, Cheung V, Radford A, Chen X. Improved techniques for training GANs. In: Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain: ACM, 2016. 2234−2242 [10] 付晓, 沈远彤, 李宏伟, 程晓梅. 基于半监督编码生成对抗网络的图像分类模型. 自动化学报, 2020, 46(3): 531-539Fu Xiao, Shen Yuan-Tong, Li Hong-Wei, Cheng Xiao-Mei. Image classification model based on semi-supervised coding generative adversarial networks. Acta Automatica Sinica, 2020, 46(3): 531-539 [11] Pezeshki M, Fan L X, Brakel P, Courville A, Bengio Y. Deconstructing the ladder network architecture. In: Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA: ACM, 2016. 2368−2376 [12] Rasmus A, Valpola H, Honkala M, Berglund M, Raiko T. Semi-supervised learning with ladder networks. Computer Science, 2015, 9(1): 1-9 [13] Zhao S, Song J, Ermon S. Learning hierarchical features from generative models. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia: ACM, 2017. 4091−4099 [14] Saito K, Ushiku Y, Harada T. Asymmetric tri-training for unsupervised domain adaptation. International Conference on Machine Learning, 2017: 2988-2997 [15] Chen D D, Wang W, Gao W, Zhou Z H. Tri-net for semi-supervised deep learning. In: Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden: ACM, 2018. 2014−2020 [16] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. Computer Science and Information Systems, 2014. [17] Samuli L, Timo A. Temporal ensembling for semi-supervised learning. In: Proceedings of the 2017 International Conference on Learning Representation, 2017. 1−13 [18] Liu W Y, Wen Y D, Yu Z D, Yang M. Large-margin softmax loss for convolutional neural networks. In: Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA: ACM, 2016. 507−516 [19] Kilinc O, Uysal I. GAR: an efficient and scalable graph-based activity regularization for semi-supervised learning. Neuro-computing, 2018, 296(28): 46-54 [20] Maaten L, Hinton G. Visualizing data using t-SNE. Journal of Machine Learning Research, 2008, 9: 2579-2605 [21] Miyato T, Maeda S, Koyama M, Lshii S. Virtual adversarial training: a regularization method for supervised and semi-supervised learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 7(23): 99–112 -

下载:

下载: