Self-attention Cross-modality Fusion Network for Cross-modality Person Re-identification

-

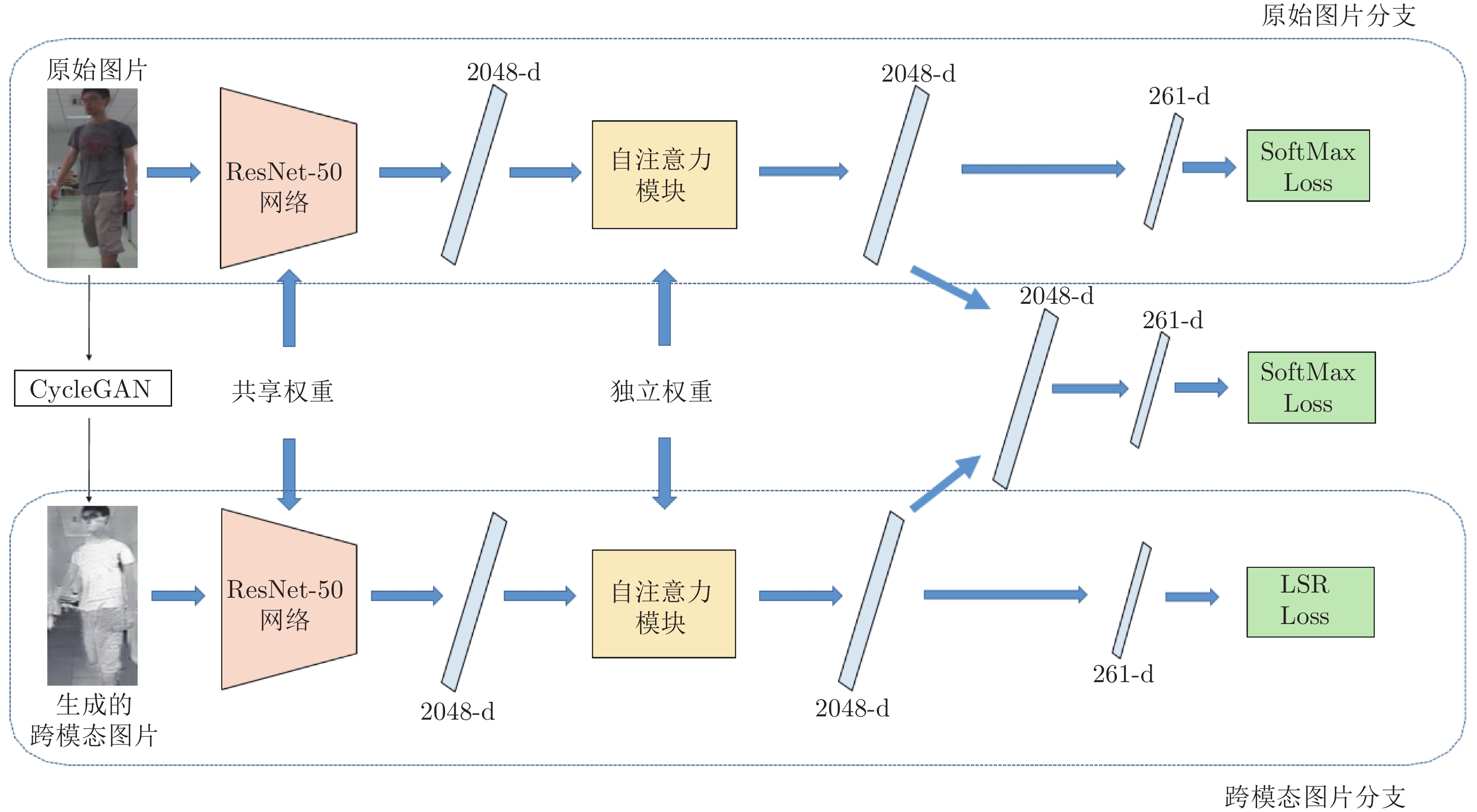

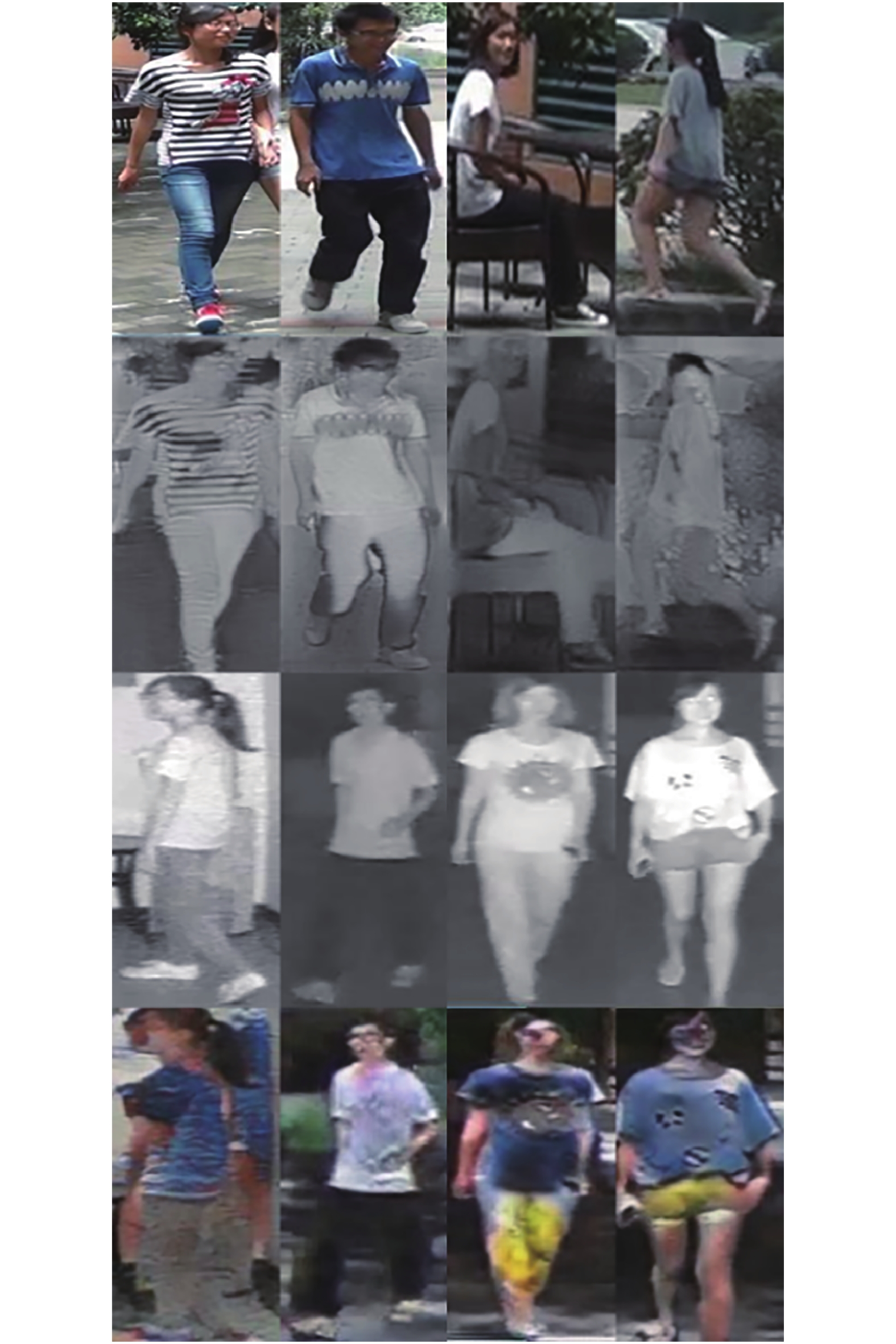

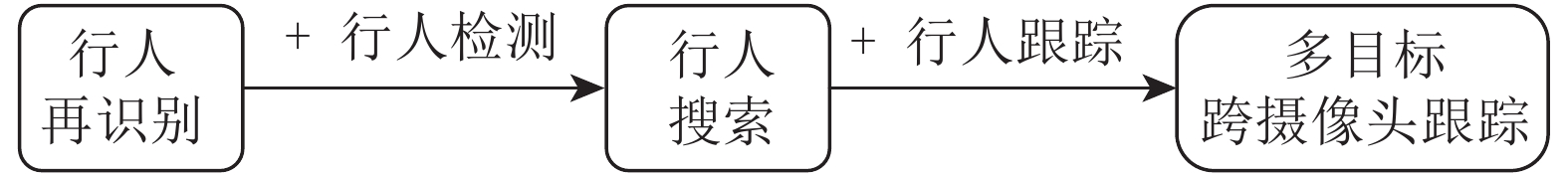

摘要: 行人再识别是实现多目标跨摄像头跟踪的核心技术, 该技术能够广泛应用于安防、智能视频监控、刑事侦查等领域. 一般的行人再识别问题面临的挑战包括摄像机的低分辨率、行人姿态变化、光照变化、行人检测误差、遮挡等. 跨模态行人再识别相比于一般的行人再识别问题增加了相同行人不同模态的变化. 针对跨模态行人再识别中存在的模态变化问题, 本文提出了一种自注意力模态融合网络. 首先是利用CycleGAN生成跨模态图像. 在得到了跨模态图像后利用跨模态学习网络同时学习两种模态图像特征, 对于原始数据集中的图像利用SoftMax 损失进行有监督的训练, 对生成的跨模态图像利用LSR (Label smooth regularization) 损失进行有监督的训练. 之后, 使用自注意力模块将原始图像和CycleGAN生成的图像进行区分, 自动地对跨模态学习网络的特征在通道层面进行筛选. 最后利用模态融合模块将两种筛选后的特征进行融合. 通过在跨模态数据集SYSU-MM01上的实验证明了本文提出的方法和跨模态行人再识别其他方法相比有一定程度的性能提升.Abstract: Person re-identification is the core technology to achieve multi-target multi-camera tracking. It can be widely used in many areas such as security, intelligent video surveillance, and criminal investigation. Person re-identification is a challenging task due to the low resolution of camera, human pose variations, illumination variations, pedestrian detector errors and occlusion. Compared with the general person re-identification, the cross-modality person re-identification has the variations of different modalities of the same person. In order to solve the cross-modality problem in cross-modality person re-identification, we propose the self-attention cross-modality fusion network. First, CycleGAN is used to generate cross-modality images. After obtaining the cross-modality images, we use the cross-modality learning network to learn the two modalities features simultaneously. SoftMax loss is used to train original images and label smooth regularization (LSR) loss is used to train generated images. Then, we use self-attention module to distinguish between original images and the generated image, and automatically select the useful features between channels. Finally, modality fusion module is used to fuse these selected features from two modalities images. Comparing with state-of-the-art methods on a large scale cross-modality dataset SYSU-MM01 further demonstrate the effectiveness of the proposed self-attention cross-modality fusion network.

-

表 1 各模块在SYSU-MM01 All-search模式下的实验结果

Table 1 Experimental results of each module in SYSU-MM01 dataset and All-search mode

方法 All-search Single-shot Multi-shot Rank 1 Rank 10 Rank 20 mAP Rank 1 Rank 10 Rank 20 mAP Baseline 27.36 71.95 84.58 28.53 32.48 78.34 88.93 23.17 跨模态学习 30.83 72.35 84.07 31.45 37.25 80.58 90.22 25.48 跨模态 + 自注意力 31.3 73.34 84.78 31.72 37.98 81.76 91.05 25.39 跨模态 + 模态融合 31.85 74.38 85.66 32.49 38.65 81.74 91.25 26.46 自注意力模态融合 33.31 74.51 85.79 33.18 39.71 82 91.14 26.89 表 2 各模块在SYSU-MM01 Indoor-search模式下的实验结果

Table 2 Experimental results of each module in SYSU-MM01 dataset and Indoor-search mode

方法 Indoor-search Single-shot Multi-shot Rank 1 Rank 10 Rank 20 mAP Rank 1 Rank 10 Rank 20 mAP Baseline 32.17 81.3 92.26 42.76 38.95 85.29 93.62 33.73 跨模态学习 37.21 80.81 90.29 47.06 43.98 86.01 93.37 37.09 跨模态 + 自注意力 36.55 80.32 90.41 46.42 44.89 85.31 94.18 36.43 跨模态 + 模态融合 37.63 81.75 91.48 47.73 44.82 87.26 94.97 38.07 自注意力模态融合 38.09 81.68 90.61 47.86 45.8 86.72 93.86 37.95 表 3 加入各模块后的GFLOPs和参数量

Table 3 GFLOPs and parameters after joining each module

方法 GFLOPs GFLOPs相比于Baseline的变化 参数量 参数量相比于Baseline的变化 Baseline 2.702772224 − 25 557 032 − 跨模态学习 2.702772224 0 25 557 032 0 跨模态 + 自注意力 2.7038208 0.001048576 26 609 960 +1 052 928 (4.12%) 跨模态 + 模态融合 5.405544448 2.702772224 25 557 032 0 自注意力模态融合 5.409639424 2.7068672 27 136 424 +1 579 392 (6.18%) 表 4 在SYSU-MM01 All-search模式下和跨模态行人再识别的对比实验

Table 4 Comparative experiments between our method and others in SYSU-MM01 dataset and All-search mode

方法 All-search Single-shot Multi-shot Rank 1 Rank 10 Rank 20 mAP Rank 1 Rank 10 Rank 20 mAP HOG + Euclidean 2.76 18.25 31.91 4.24 3.82 22.77 37.63 2.16 Zero-padding 14.8 54.12 71.33 15.95 19.13 61.4 78.41 10.89 BDTR 17.01 55.43 71.96 19.66 − − − − cmGAN 26.97 67.51 80.56 27.8 31.49 72.74 85.01 22.27 Baseline (本文方法) 27.36 71.95 84.58 28.53 32.48 78.34 88.93 23.17 跨模态学习网络 (本文方法) 30.83 72.35 84.07 31.45 37.25 80.58 90.22 25.48 自注意力模态融合 (本文方法) 33.31 74.51 85.79 33.18 39.71 82 91.14 26.89 表 5 在SYSU-MM01 Indoor-search模式下和跨模态行人再识别的对比实验

Table 5 Comparative experiments between our method and others in SYSU-MM01 dataset and Indoor-search mode

方法 Indoor-search Single-shot Multi-shot Rank 1 Rank 10 Rank 20 mAP Rank 1 Rank 10 Rank 20 mAP HOG + Euclidean 3.22 24.68 44.52 7.25 4.75 29.06 49.38 3.51 Zero-padding 20.58 68.38 85.79 26.92 24.43 75.86 91.32 18.64 cmGAN 31.63 77.23 89.18 42.19 37 80.94 92.11 32.76 Baseline (本文方法) 32.17 81.3 92.26 42.76 38.95 85.29 93.62 33.73 跨模态学习网络 (本文方法) 37.21 80.81 90.29 47.06 43.98 86.01 93.37 37.09 自注意力模态融合 (本文方法) 38.09 81.68 90.61 47.86 45.8 86.72 93.86 37.95 -

[1] 李幼蛟, 卓力, 张菁, 李嘉锋, 张辉. 行人再识别技术综述[J]. 自动 化学报, 2018, 44(9): 1554-1568LI You-Jiao, ZHUO Li, ZHANG Jing, LI Jia-Feng, ZHANG Hui. A Survey of Person Re-identiflcation. Acta Automatica Sinica, 2018, 44(9): 1554-1568 [2] 吴彦丞, 陈鸿昶, 李邵梅, 高超. 基于行人属性先验分布的行人再识 别. 自动化学报, 2019, 45(5): 953-964Wu Yan-Cheng, Chen Hong-Chang, Li Shao-Mei, Gao Chao. Person re-identiflcation using attribute priori distribution. Acta Automatica Sinica, 2019, 45(5): 953-964 [3] Zhang L, Ma B, Li G, Huang Q, Tian Q. Generalized semisupervised and structured subspace learning for cross-modal retrieval. IEEE Transactions on Multimedia, 2018, 20: 128-141 doi: 10.1109/TMM.2017.2723841 [4] Zhang L, Ma B P, Li G R, Huang Q M, Tian Q. PL-ranking: A novel ranking method for cross-modal retrieval. In: Proceedings of the 24th ACM on Multimedia Conference. Amsterdam, the Netherlamds: ACM, 2016. 1355−1364 [5] Krizhevsky A, Sutskever I, Hinton G. ImageNet classiflcation with deep convolutional neural networks. COMMUNICATIONS OF THE ACM, 2012, 60: 84-90 [6] Gray D, Tao H. Viewpoint invariant pedestrian recognition with an ensemble of localized features. In: Proceedings of the 10th European Conference on Computer Vision, Marseille, France: Springer, 2008. 262−275 [7] Yang Y, Yang J M, Yan J J, Liao S C, Yi D, Li S Z. Salient color names for person re-identiflcation. In: Proceedings of the 2014 Eruopeam Computer Vision. Zuich, Switzerland: Springer, 2014. Part I : 536−551 [8] Köstinger M, Hirzer M, Wohlhart P, Roth P M, Bischof H. Large scale metric learning from equivalence constraints. In: Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE, 2012. 2288−2295 [9] Zheng Z, Zheng L, Yang Y. A discriminatively learned cnn embedding for person reidentiflcation.ACM Transactions on Multimedia Computing Communications and Applications, 2016, 14(1):13-1-13-20 [10] Bromley J, Bentz J W, BOTTOU L, Guyon I, Lecun Y, Moore C, et al. Signature veriflcation using a “ siamese” time delay neural network. International Journal of Pattern Recognition and Artiflcial Intelligence, 1993, 7(4): 669-688 doi: 10.1142/S0218001493000339 [11] Zhang X, Luo H, Fan X, Xiang W L, Sun Y X, Xiao Q Q, Jiang W, Zhang C, Sun J. AlignedReID: Surpassing humanlevel performance in person re-identiflcation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Hawaii, USA: IEEE, 2017. [12] Zhao H Y, Tian M Q, Sun S Y, Shao J, Yan J J, Yi S, Wang X G, Tang X O. Spindle net: Person re-identiflcation with human body region guided feature decomposition and fusion. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017, 907−915 [13] Dai Z Z, Chen M Q, Zhu S Y, Tan P. Batch feature erasing for person re-identiflcation and beyond. Computer Research Repository, 2018. [14] Zhong Z, Zheng L, Zheng Z D, Li S Z, Yang Y. Camera style adaptation for person re-identiflcation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake, USA: IEEE, 2018. 5157−5166 [15] Zhu J Y, Park T, Isola P, ECfros A A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 2242−2251 [16] Wu A C, Zheng W S, Yu H X, Gong S G, Lai J H. RGB-infrared crossmodality person re-identiflcation. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 5390−5399 [17] Ye M, Wang Z, Lan X Y, Yuen P C. Visible thermal person re-identiflcation via dual-constrained top-ranking. In: Proceedings of the 27th International Joint Conference on Artiflcial Intelligence. Stockholm, Sweden: AAAI, 2018.1092−1099 [18] Dai P Y, Ji R R, Wang H B, Wu Q, Huang Y Y. Cross-modality person re-identiflcation with generative adversarial training. In: Proceedings of the 27th International Joint Conference on Artiflcial Intelligence. Stockholm, Sweden: AAAI, 2018. 677−683 [19] Lin J W, Li H. HPILN: A feature learning framework for crossmodality person re-identiflcation. [Online], available: https://arxiv.org/abs/1906.03142, August 14, 2019 [20] Szegedy C, Vanhoucke V, Iofie S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 2818−2826 [21] Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. In: Advances in Neural Information Processing Systems, Berlin, Germany: Springer, 2014. 2672−2680 [22] 林懿伦, 戴星原, 李力, 王晓, 王飞跃. 人工智能研究的新前线: 生 成式对抗网络. 自动化学报, 2018, 44(5): 775-792Lin Yi-Lun, Dai Xing-Yuan, Li Li, Wang Xiao, Wang FeiYue. The new frontier of AI research: generative adversarial networks. Acta Automatica Sinica, 2018, 44(5): 775-792 [23] Mirza M P, Osindero S. Conditional generative adversarial nets. [Online], available: https://arxiv.org/abs/1411.1784, November 6, 2014 [24] Isola P, Zhu J Y, Zhou T H, Efros A A. Image-to-image translation with conditional adversarial networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 5967−5976 [25] Yi Z L, Zhang H, Tan P, Gong M L. DualGAN: unsupervised dual learning for image-to-image translation. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 2868−2876 [26] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 770−778 [27] Hu J, Shen L, Sun G. Squeeze-and-excitation networks. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, UT, USA: IEEE, 2018. 7132−7141 [28] Nair V, Hinton G E. Rectifled linear units improve restricted Boltzmann machines vinod nair. In: Proceedings of the 27th International Conference on International Conference on Machine Learning. Haifa, Israel: Omnipress, 2010. 807−814 [29] Yin X, Goudriaan J W, Lantinga E A, Vos J C, Spiertz H L. A flexible sigmoid function of determinate growth. Annals of botany, 2003, 91(3): 361-371. [30] Paszke A, Gross S, Chintala S, Chanan G, Yang E, DeVito Z, Lin Z M, Desmaison A, Antiga L, Lerer A. Automatic differentiation in PyTorch. In: Proceedings of the 31st Conference and Workshop on Neural Information Processing Systems. California, USA: NIPS, 2017. [31] Reddi S J, Kale S, Kumar S. On the convergence of Adam and Beyond. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, BC, Canada: ICLR, 2018. [32] Dalal N, Triggs B. Histograms of oriented gradients for human detection. In: Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Diego, CA, USA: IEEE, 2005. 886−893 [33] Liao S C, Hu Y, Zhu X Y, Li S Z. Person re-identiflcation by local maximal occurrence representation and metric learning. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 2197−2206 -

下载:

下载: