
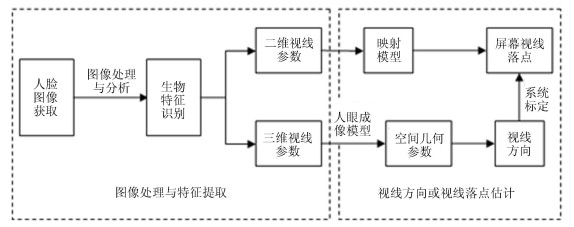

Abstract: This paper reviews feature-based gaze tracking methods. It first outlines the development of gaze tracking technology, related work, and the current state of research. Feature-based methods are then divided into two categories, two-dimensional and three-dimensional gaze tracking methods, and each category is analyzed in terms of hardware configuration, main error sources, the influence of head movement, and advantages and disadvantages. Selected feature-based methods from the last five years are compared, and several key issues in two-dimensional and three-dimensional gaze tracking systems are discussed. Applications of gaze tracking in human-computer interaction, medicine, the military, intelligent transportation, and other fields are also introduced. Finally, development trends and active research topics for feature-based gaze tracking are summarized.


Key words: feature-based; gaze tracking; two-dimensional mapping model; three-dimensional gaze estimation

1) Responsible editorial board member for this paper: Huang Qing-Ming

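Table 1 Comparison of the characteristics of two-dimensional gaze tracking methods

| Method | Cameras | Light sources | Main error sources | Head movement | Advantages | Disadvantages |
|---|---|---|---|---|---|---|
| Pupil (iris)-eye corner method | 1 | 0 | Eye corner detection; head deviating from the calibration position | Head fixed | Simple system; easy to operate and implement | Complex user calibration; discomfort from keeping the head fixed |
| Pupil (iris)-corneal reflection method | 1 | 1 | Head deviating from the calibration position | Head fixed | Simple system; no need to calibrate light source and screen positions | Complex user calibration; discomfort from keeping the head fixed |
| Pupil (iris)-corneal reflection method | 1 | $\geq$2 | Purkinje image detection | Head movement within a certain range | No need to calibrate light source and screen positions; relatively simple model | Complex user calibration |
| Pupil (iris)-corneal reflection method | $\geq$2 | $\geq$1 | Transformation between multiple cameras | Free head movement | Allows free head movement; relatively high accuracy | Complex user calibration and system calibration; complex camera calibration |
| Cross-ratio method | $\geq$1 | $\geq$4 | Construction of the virtual tangent plane | Head movement within a certain range | No camera calibration; simple model | All four light sources must be imaged, which limits the head movement range; multi-point user calibration is needed to compensate for the angle between the optical and visual axes |
| HN (homography normalization) method | 1 | $\geq$4 | Approximation that the pupil lies in the plane formed by the Purkinje images | Head movement within a certain range | Dimensions of the rectangle formed by the light sources need not be known; simple model; compensates for the angle between the optical and visual axes | All four light sources must be imaged, which limits the head movement range; complex user calibration |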

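Table 2 Comparison of the characteristics of three-dimensional gaze tracking methods

| Method | Cameras | Light sources | Main error sources | Head movement | Gaze information | Advantages | Disadvantages |
|---|---|---|---|---|---|---|---|
| 3D gaze estimation based on a depth camera | 1 | 0 | Accurate computation of intermediate quantities such as the eyeball center and iris center | Head movement within a certain range | Point of gaze | Simple system; simple model | Complex user calibration; gaze accuracy depends heavily on the accuracy of eye feature detection |
| 3D gaze estimation based on an ordinary camera | 1 | 1 | Preset invariant eye parameters | Head movement within a certain range | 3D line of sight | Simple system; easy to implement | Individual differences are not considered; relatively low accuracy |
| 3D gaze estimation based on an ordinary camera | 1 | $\geq$2 | Purkinje image detection; solving for or presetting the invariant eye parameters | Head movement within a certain range | 3D line of sight | Relatively simple system; can compute three-dimensional spatial information | Invariant eye parameters are obtained by solving nonlinear equations; relatively slow computation; relatively low accuracy |
| 3D gaze estimation based on an ordinary camera | $\geq$2 | $\geq$1 | System calibration | Free head movement | 3D line of sight | Simple user calibration; high gaze accuracy | Complex system; complex system calibration; relatively high cost |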

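Table 3 Comparison of two-dimensional gaze tracking methods and three-dimensional gaze tracking methods

| Type | System configuration | System calibration | User calibration | Gaze accuracy | Head movement | Application scenarios |
|---|---|---|---|---|---|---|
| 2D gaze tracking | Single camera, no light source | - | Complex | Relatively low | $\times$ | Simple system, relatively low accuracy, limited range of applications |
| 2D gaze tracking | Single camera, single light source | - | Complex | Relatively high (head fixed) | $\times$ | Head fixed or moving only slightly; suitable for wearable or remote devices and for applications such as human factors analysis and diagnosis of cognitive disorders |
| 2D gaze tracking | Single camera, multiple light sources | Simple | Complex | Relatively high | $\surd$ | Small range of head movement; suitable for wearable or remote devices and for applications such as psychological analysis and assistance for people with disabilities |
| 2D gaze tracking | Multiple cameras, multiple light sources | Complex | Complex | High | $\surd$ | Large range of head movement and relatively high accuracy requirements; suitable for applications such as virtual reality |
| 3D gaze tracking | Single camera, no light source | Complex | Complex | Relatively low | $\surd$ | Requires real color images; low accuracy requirements; suitable for portable mobile devices and network terminal devices |
| 3D gaze tracking | Single camera, single light source | Complex | Relatively complex | Relatively low | $\surd$ | Point of regard on objects in 3D space; relatively low accuracy requirements; suitable for portable mobile devices such as phones and tablets |
| 3D gaze tracking | Single camera, multiple light sources | Complex | Relatively complex | Relatively high | $\surd$ | Point of regard on objects in 3D space; moderate accuracy requirements; suitable for wearable or remote devices and for applications such as virtual reality |
| 3D gaze tracking | Multiple cameras, multiple light sources | Complex | Simple | High | $\surd$ | Point of regard on objects in 3D space; relatively high accuracy requirements; suitable for wearable or remote devices and for applications such as virtual reality |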

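Table 4 Comparison of selected feature-based gaze tracking methods from the last five years

| Reference | Cameras | Light sources | Type | Features | Head movement | Accuracy | Notes |
|---|---|---|---|---|---|---|---|
| Yu et al. [37] | 1 | 0 | 2D mapping | Iris, eye corner | Head fixed | Horizontal $0.99^\circ$, vertical $1.33^\circ$ | |
| Skodras et al. [39] | 1 | 0 | 2D mapping | Eye corner, eyelid, facial anchor points | Head fixed | $1.42^\circ \pm 1.35^\circ$ | No camera calibration |
| Xia et al. [40] | 1 | 0 | 2D mapping | Eye corner, pupil | Head fixed | Horizontal $0.84^\circ$, vertical $0.56^\circ$ | Simple mapping model |
| Cheung et al. [41] | 1 | 0 | 2D mapping | Iris, eye corner | Range: $15.5^\circ \times 15.5^\circ \times 5^\circ$ | $2.27^\circ$ | |
| Xiong et al. [27] | 1 | 1 | 2D mapping | Pupil, Purkinje image | Range: 100 mm $\times$ 100 mm $\times$ 100 mm | $1.05^\circ$ | Incremental learning, single-point calibration |
| Blignaut et al. [48] | 1 | 1 | 2D mapping | Pupil, Purkinje image | Head fixed | $0.5^\circ$ | Number of calibration points $\geq$12 |
| Banaeeyan et al. [52] | 1 | 2 | 2D mapping | Iris, eye corner | Head fixed | Horizontal $0.928^\circ$, vertical $1.282^\circ$ | |
| Cheng et al. [62] | 1 | 5 | 2D cross-ratio | Pupil, Purkinje image | Free movement | $0.70^\circ$ | Dynamic tangent plane |
| Arar et al. [63] | 1 | 5 | 2D cross-ratio | Pupil, Purkinje image | Free movement | $1.15^\circ$ (5-point calibration) | Low image resolution, few calibration points |
| Zhang et al. [67] | 1 | 8 | 2D HN | Pupil, Purkinje image | Range: 80 mm $\times$ 100 mm $\times$ 100 mm | $0.56^\circ$ | Binocular vision |
| Ma et al. [69] | 1 | 4 | 2D HN | Pupil, Purkinje image | Free movement | $1.23^\circ \pm 0.54^\circ$ | |
| Choi et al. [70] | 1 | 4 | 2D HN | Pupil, Purkinje image | Head fixed | $1.03^\circ \sim 1.17^\circ$ | Single-point calibration |
| Kim et al. [73] | 1 | 4 | 2D HN | Pupil, Purkinje image | Pan rotation only | $0.76^\circ$ | |
| Zhou et al. [89] | 1 (Kinect) | 0 | 3D | Iris, eye corner | Range: $11.63^\circ \times 13.44^\circ \times 9.43^\circ$ | $1.99^\circ$ | |
| Sun et al. [28] | 1 (Kinect) | 0 | 3D | Iris, eye corner | Free movement | $1.28^\circ \sim 2.71^\circ$ | |
| Li et al. [92] | 1 (Kinect) | 0 | 3D | Iris, eye corner | Free movement | Horizontal $3.0^\circ$, vertical $4.5^\circ$ | |
| Cristina et al. [11] | 1 | 0 | 3D | Iris, eye corner | Range (within $5^\circ$) | $1.46^\circ \sim 1.93^\circ$ | |
| Wang et al. [77] | 1 | 4 | 3D | Pupil, Purkinje image | Free movement | $1.3^\circ$ | No per-user calibration |
| Lai et al. [107] | 2 | 2 | 3D | Pupil, Purkinje image | Range: 50 mm $\times$ 25 mm $\times$ 50 mm | $1.18^\circ$ | |
| Bakker et al. [113] | 3 | 3 | 3D | Pupil, Purkinje image | Free movement | $0.5^\circ$ | |
| Lidegaard et al. [116] | 4 | 4 | 3D | Pupil, Purkinje image | Free movement | $1.5^\circ$ | Head-mounted |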
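The methods labelled as two-dimensional mapping in Table 4 fit, during user calibration, a regression from an eye feature vector to screen coordinates. The following is a minimal sketch of that idea, assuming a second-order polynomial in the pupil-glint vector and an ordinary least-squares fit through the normal equations. The choice of polynomial terms and the names `features`, `solve`, `fit_axis`, `calibrate`, and `estimate` are illustrative assumptions, not the model of any cited work.

```python
# Sketch only: second-order polynomial mapping from a pupil-glint vector
# (vx, vy) to a screen point (sx, sy). Term set and names are assumptions.

def features(vx, vy):
    """Polynomial terms of the pupil-glint vector."""
    return [1.0, vx, vy, vx * vy, vx * vx, vy * vy]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_axis(vectors, targets):
    """Least-squares coefficients for one screen axis via the normal equations."""
    rows = [features(vx, vy) for vx, vy in vectors]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(ata, atb)


def calibrate(vectors, screen_points):
    """Fit separate coefficient sets for the horizontal and vertical axes."""
    cx = fit_axis(vectors, [p[0] for p in screen_points])
    cy = fit_axis(vectors, [p[1] for p in screen_points])
    return cx, cy


def estimate(model, vx, vy):
    """Map a pupil-glint vector to an estimated on-screen gaze point."""
    cx, cy = model
    f = features(vx, vy)
    return (sum(c * t for c, t in zip(cx, f)),
            sum(c * t for c, t in zip(cy, f)))
```

With six coefficients per axis, at least six calibration targets with linearly independent feature rows are needed; a 3 x 3 grid of nine targets is a common choice and leaves some redundancy against detection noise. Because the fit is made at one head position, a model of this kind is consistent with the head-fixed entries in the table.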

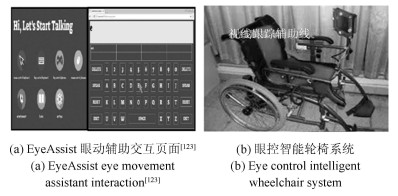

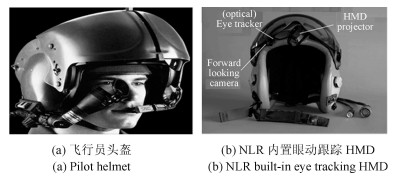

[1] Zhang C, Chi J N, Zhang Z H, Gao X L, Hu T, Wang Z L. Gaze estimation in a gaze tracking system. Science China Information Sciences, 2011, 54(11): 2295-2306 doi: 10.1007/s11432-011-4243-6

[2] Wang K, Wang S, Ji Q. Deep eye fixation map learning for calibration-free eye gaze tracking. In: Proceedings of the 9th Biennial ACM Symposium on Eye Tracking Research & Applications. Charleston, USA: ACM, 2016. 47-55

[3] Sigut J, Sidha S A. Iris center corneal reflection method for gaze tracking using visible light. IEEE Transactions on Biomedical Engineering, 2011, 58(2): 411-419 doi: 10.1109/TBME.2010.2087330

[4] Hansen D W, Ji Q. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(3): 478-500 doi: 10.1109/TPAMI.2009.30

[5] Kar A, Corcoran P. A review and analysis of eye-gaze estimation systems, algorithms and performance evaluation methods in consumer platforms. IEEE Access, 2017, 5(1): 16495-16519

[6] Hou Zhi-Qiang, Han Chong-Zhao. A survey of visual tracking. Acta Automatica Sinica, 2006, 32(4): 603-617 http://www.aas.net.cn/article/id/14397

[7] Yin Hong-Peng, Chen Bo, Chai Yi, Liu Zhao-Dong. Vision-based object detection and tracking: A review. Acta Automatica Sinica, 2016, 42(10): 1466-1489 doi: 10.16383/j.aas.2016.c150823

[8] Zhang X C, Sugano Y, Bulling A. Evaluation of appearance-based methods and implications for gaze-based applications. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. Glasgow, UK: ACM, 2019. Article No. 416

[9] Jung D, Lee J M, Gwon S Y, Pan W Y, Lee H C, Park K R, et al. Compensation method of natural head movement for gaze tracking system using an ultrasonic sensor for distance measurement. Sensors, 2016, 16(1): Article No. 110 doi: 10.3390/s16010110

[10] Stefanov K. Webcam-based eye gaze tracking under natural head movement. arXiv: 1803.11088, 2018.

[11] Cristina S, Camilleri K P. Model-based head pose-free gaze estimation for assistive communication. Computer Vision and Image Understanding, 2016, 149: 157-170 doi: 10.1016/j.cviu.2016.02.012

[12] Jeni L A, Cohn J F. Person-independent 3D gaze estimation using face frontalization. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Las Vegas, USA: IEEE, 2016. 792-800

[13] Mora K A F, Odobez J M. Person independent 3D gaze estimation from remote RGB-D cameras. In: Proceedings of the 2013 IEEE International Conference on Image Processing. Melbourne, Australia: IEEE, 2013. 2787-2791

[14] Lu F, Sugano Y, Okabe T, Sato Y. Adaptive linear regression for appearance-based gaze estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(10): 2033-2046 doi: 10.1109/TPAMI.2014.2313123

[15] Lu F, Sugano Y, Okabe T, Sato Y. Head pose-free appearance-based gaze sensing via eye image synthesis. In: Proceedings of the 21st International Conference on Pattern Recognition. Tsukuba, Japan: IEEE, 2012. 1008-1011

[16] Lu F, Okabe T, Sugano Y, Sato Y. Learning gaze biases with head motion for head pose-free gaze estimation. Image and Vision Computing, 2014, 32(3): 169-179 doi: 10.1016/j.imavis.2014.01.005

[17] Zhang X C, Sugano Y, Fritz M, Bulling A. Appearance-based gaze estimation in the wild. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 4511-4520

[18] Wang Xin-Liang. Study of Gaze Tracking Algorithm in Natural Light [Master thesis], South China University of Technology, China, 2014

[19] Liu Shuo-Shuo. The Research on Head-Mounted Gaze Tracking Technology [Master thesis], Southeast University, China, 2016

[20] Wang Lin. Head-Mounted Gaze Tracking System With Head Motion [Master thesis], University of Science and Technology of China, China, 2014

[21] Zhang Bao-Yu. Algorithms and Robustness Analysis for Solving Nonlinear Equations in 3D Gaze Tracking System [Master thesis], Xidian University, China, 2015

[22] Lu Ya-Nan. Structure Design of the Head-mounted Eye Tracking System and the Acquiring Method of Eye Information [Master thesis], Tianjin University, China, 2017

[23] Gong De-Lin. Research on Eye Gaze Tracking Technology Based on Monocular Vision [Master thesis], Beijing Institute of Technology, China, 2016

[24] Lan Shan-Wei. The Research of Eye Gaze Tracking Technology Based on Pupil Corneal Reflection [Master thesis], Harbin Institute of Technology, China, 2014

[25] Qin Hua-Biao, Yan Wei-Hong, Wang Xin-Liang, Yu Xiang-Yu. A gaze tracking system overcoming influences of head movements. Acta Electronica Sinica, 2013, 41(12): 2403-2408 doi: 10.3969/j.issn.0372-2112.2013.12.013

[26] Gao Di, Yin Gui-Sheng, Ma Chun-Guang. Non-invasive eye tracking technology based on corneal reflex. Journal on Communications, 2012, 33(12): 133-139 https://www.cnki.com.cn/Article/CJFDTOTAL-TXXB201212018.htm

[27] Xiong Chun-Shui, Huang Lei, Liu Chang-Ping. A novel gaze estimation method with one-point calibration. Acta Automatica Sinica, 2014, 40(3): 459-470 doi: 10.3724/SP.J.1004.2014.00459

[28] Sun L, Liu Z C, Sun M T. Real time gaze estimation with a consumer depth camera. Information Sciences, 2015, 320: 346-360 doi: 10.1016/j.ins.2015.02.004

[29] Lu F, Chen X W, Sato Y. Appearance-based gaze estimation via uncalibrated gaze pattern recovery. IEEE Transactions on Image Processing, 2017, 26(4): 1543-1553 doi: 10.1109/TIP.2017.2657880

[30] Deng H P, Zhu W J. Monocular free-head 3D gaze tracking with deep learning and geometry constraints. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 3162-3171

[31] Li B, Fu H, Wen D S, Lo W. Etracker: A mobile gaze-tracking system with near-eye display based on a combined gaze-tracking algorithm. Sensors, 2018, 18(5): Article No. 1626 doi: 10.3390/s18051626

[32] He G, Oueida S, Ward T. Gazing into the abyss: Real-time gaze estimation. arXiv: 1711.06918, 2017.

[33] Wojciechowski A, Fornalczyk K. Single web camera robust interactive eye-gaze tracking method. Bulletin of the Polish Academy of Sciences Technical Sciences, 2015, 63(4): 879-886 doi: 10.1515/bpasts-2015-0100

[34] Topal C, Gunal S, Koçdeviren O, Doğan A, Gerek Ö N. A low-computational approach on gaze estimation with eye touch system. IEEE Transactions on Cybernetics, 2014, 44(2): 228-239 doi: 10.1109/TCYB.2013.2252792

[35] Zhu J, Yang J. Subpixel eye gaze tracking. In: Proceedings of the 5th IEEE International Conference on Automatic Face Gesture Recognition. Washington, USA: IEEE, 2002. 131-136

[36] Shao G J, Che M, Zhang B Y, Cen K F, Gao W. A novel simple 2D model of eye gaze estimation. In: Proceedings of the 2nd International Conference on Intelligent Human-Machine Systems and Cybernetics. Nanjing, China: IEEE, 2010. 300-304

[37] Yu M X, Lin Y Z, Tang X Y, Xu J, Schmidt D, Wang X Z, et al. An easy iris center detection method for eye gaze tracking system. Journal of Eye Movement Research, 2015, 8(3): 1-20

[38] George A, Routray A. Fast and accurate algorithm for eye localisation for gaze tracking in low-resolution images. IET Computer Vision, 2016, 10(7): 660-669 doi: 10.1049/iet-cvi.2015.0316

[39] Skodras E, Kanas V G, Fakotakis N. On visual gaze tracking based on a single low cost camera. Signal Processing: Image Communication, 2015, 36: 29-42 doi: 10.1016/j.image.2015.05.007

[40] Xia L, Sheng B, Wu W, Ma L Z, Li P. Accurate gaze tracking from single camera using gabor corner detector. Multimedia Tools and Applications, 2016, 75(1): 221-239 doi: 10.1007/s11042-014-2288-4

[41] Cheung Y M, Peng Q M. Eye gaze tracking with a web camera in a desktop environment. IEEE Transactions on Human-Machine Systems, 2015, 45(4): 419-430 doi: 10.1109/THMS.2015.2400442

[42] Jen C L, Chen Y L, Lin Y J, Lee C H, Tsai A, Li M T. Vision based wearable eye-gaze tracking system. In: Proceedings of the 2016 IEEE International Conference on Consumer Electronics. Las Vegas, USA: IEEE, 2016. 202-203

[43] Zhang Chuang, Chi Jian-Nan, Zhang Zhao-Hui, Wang Zhi-Liang. A novel eye gaze tracking technique based on pupil center cornea reflection technique. Chinese Journal of Computers, 2010, 33(7): 1272-1285 https://www.cnki.com.cn/Article/CJFDTOTAL-JSJX201007014.htm

[44] Morimoto C H, Mimica M R M. Eye gaze tracking techniques for interactive applications. Computer Vision and Image Understanding, 2005, 98(1): 4-24 doi: 10.1016/j.cviu.2004.07.010

[45] Kim S M, Sked M, Ji Q. Non-intrusive eye gaze tracking under natural head movements. In: Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. San Francisco, USA: IEEE, 2004. 2271-2274

[46] Blignaut P. Mapping the pupil-glint vector to gaze coordinates in a simple video-based eye tracker. Journal of Eye Movement Research, 2013, 7(1): 1-11

[47] Blignaut P, Wium D. The effect of mapping function on the accuracy of a video-based eye tracker. In: Proceedings of the 2013 Conference on Eye Tracking South Africa. Cape Town, South Africa: ACM, 2013. 39-46

[48] Blignaut P. A new mapping function to improve the accuracy of a video-based eye tracker. In: Proceedings of the South African Institute for Computer Scientists and Information Technologists Conference. East London, South Africa: ACM, 2013. 56-59

[49] Hennessey C, Noureddin B, Lawrence P. Fixation precision in high-speed noncontact eye-gaze tracking. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 2008, 38(2): 289-298 doi: 10.1109/TSMCB.2007.911378

[50] Sesma-Sanchez L, Villanueva A, Cabeza R. Gaze estimation interpolation methods based on binocular data. IEEE Transactions on Biomedical Engineering, 2012, 59(8): 2235-2243 doi: 10.1109/TBME.2012.2201716

[51] Zhang Tai-Ning, Bai Jin-Jun, Meng Chun-Ning, Chang Sheng-Jiang. Eye-gaze tracking based on one camera and two light sources. Journal of Optoelectronics · Laser, 2012, 23(10): 1990-1995 https://www.cnki.com.cn/Article/CJFDTOTAL-GDZJ201210028.htm

[52] Banaeeyan R, Halin A A, Bahari M. Nonintrusive eye gaze tracking using a single eye image. In: Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications. Kuala Lumpur, Malaysia: IEEE, 2015. 139-144

[53] Cerrolaza J J, Villanueva A, Cabeza R. Taxonomic study of polynomial regressions applied to the calibration of video-oculographic systems. In: Proceedings of the 2008 Symposium on Eye Tracking Research & Applications. Savannah, USA: ACM, 2008. 259-266

[54] Zhu Z W, Ji Q. Novel eye gaze tracking techniques under natural head movement. IEEE Transactions on Biomedical Engineering, 2007, 54(12): 2246-2260 doi: 10.1109/TBME.2007.895750

[55] Zhu Z W, Ji Q. Eye gaze tracking under natural head movements. In: Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Diego, USA: IEEE, 2005. 918-923

[56] Kim J H, Han D I, Min C O, Lee D W, Eom W. IR vision-based LOS tracking using non-uniform illumination compensation. International Journal of Precision Engineering and Manufacturing, 2013, 14(8): 1355-1360 doi: 10.1007/s12541-013-0183-y

[57] Arar N M, Thiran J P. Robust real-time multi-view eye tracking. arXiv: 1711.05444, 2017.

[58] Kang J J, Eizenman M, Guestrin E D, Eizenman E. Investigation of the cross-ratios method for point-of-gaze estimation. IEEE Transactions on Biomedical Engineering, 2008, 55(9): 2293-2302 doi: 10.1109/TBME.2008.919722

[59] Coutinho F L, Morimoto C H. Free head motion eye gaze tracking using a single camera and multiple light sources. In: Proceedings of the 2006 19th Brazilian Symposium on Computer Graphics and Image Processing. Manaus, Brazil: IEEE, 2006. 171-178

[60] Yoo D H, Kim J H, Lee B R, Chung M J. Non-contact eye gaze tracking system by mapping of corneal reflections. In: Proceedings of the 5th IEEE International Conference on Automatic Face Gesture Recognition. Washington, USA: IEEE, 2002. 101-106

[61] Yoo D H, Chung M J. A novel non-intrusive eye gaze estimation using cross-ratio under large head motion. Computer Vision and Image Understanding, 2005, 98(1): 25-51 doi: 10.1016/j.cviu.2004.07.011

[62] Cheng H, Liu Y Q, Fu W H, Ji Y L, Yang L, Zhao Y, et al. Gazing point dependent eye gaze estimation. Pattern Recognition, 2017, 71: 36-44 doi: 10.1016/j.patcog.2017.04.026

[63] Arar N M, Gao H, Thiran J P. Towards convenient calibration for cross-ratio based gaze estimation. In: Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision. Waikoloa, USA: IEEE, 2015. 642-648

[64] Huang J B, Cai Q, Liu Z C, Ahuja N, Zhang Z Y. Towards accurate and robust cross-ratio based gaze trackers through learning from simulation. In: Proceedings of the 2014 Symposium on Eye Tracking Research and Applications. Safety Harbor, USA: ACM, 2014. 75-82

[65] Coutinho F L, Morimoto C H. Augmenting the robustness of cross-ratio gaze tracking methods to head movement. In: Proceedings of the 2012 Symposium on Eye Tracking Research and Applications. Santa Barbara, USA: ACM, 2012. 59-66

[66] Arar N M, Gao H, Thiran J P. A regression-based user calibration framework for real-time gaze estimation. IEEE Transactions on Circuits and Systems for Video Technology, 2017, 27(12): 2623-2638 doi: 10.1109/TCSVT.2016.2595322

[67] Zhang Z Y, Cai Q. Improving cross-ratio-based eye tracking techniques by leveraging the binocular fixation constraint. In: Proceedings of the 2014 Symposium on Eye Tracking Research and Applications. Safety Harbor, USA: ACM, 2014. 267-270

[68] Kang J J, Guestrin E D, MacLean W J, Eizenman M. Simplifying the cross-ratios method of point-of-gaze estimation. In: Proceedings of the 30th Canadian Medical and Biological Engineering Conference (CMBEC30). Toronto, ON, Canada: Oxford Univ Press, Jun 2007. 14-17

[69] Ma C F, Baek S J, Choi K A, Ko S J. Improved remote gaze estimation using corneal reflection-adaptive geometric transforms. Optical Engineering, 2014, 53(5): Article No. 053112

[70] Choi K A, Ma C F, Ko S J. Improving the usability of remote eye gaze tracking for human-device interaction. IEEE Transactions on Consumer Electronics, 2014, 60(3): 493-498 doi: 10.1109/TCE.2014.6937335

[71] Hansen D W, Agustin J S, Villanueva A. Homography normalization for robust gaze estimation in uncalibrated setups. In: Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications. Austin, USA: ACM, 2010. 13-20

[72] Shin Y G, Choi K A, Kim S T, Ko S J. A novel single IR light based gaze estimation method using virtual glints. IEEE Transactions on Consumer Electronics, 2015, 61(2): 254-260 doi: 10.1109/TCE.2015.7150601

[73] Kim S T, Choi K A, Shin Y G, Kang M C, Ko S J. Remote eye-gaze tracking method robust to the device rotation. Optical Engineering, 2016, 55(8): Article No. 083108

[74] Shin Y G, Choi K A, Kim S T, Yoo C H, Ko S J. A novel 2-D mapping-based remote eye gaze tracking method using two IR light sources. In: Proceedings of the 2015 IEEE International Conference on Consumer Electronics. Las Vegas, USA: IEEE, 2015. 190-191

[75] Wan Z J, Wang X J, Zhou K, Chen X Y, Wang X Q. A novel method for estimating free space 3D point-of-regard using pupillary reflex and line-of-sight convergence points. Sensors, 2018, 18(7): Article No. 2292 doi: 10.3390/s18072292

[76] Li J F, Li S G. Eye-model-based gaze estimation by RGB-D camera. In: Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Columbus, USA: IEEE, 2014. 606-610

[77] Wang K, Ji Q. 3D gaze estimation without explicit personal calibration. Pattern Recognition, 2018, 79: 216-227 doi: 10.1016/j.patcog.2018.01.031

[78] Park K R. A real-time gaze position estimation method based on a 3-D eye model. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 2007, 37(1): 199-212 doi: 10.1109/TSMCB.2006.883426

[79] Villanueva A, Cabeza R. Evaluation of corneal refraction in a model of a gaze tracking system. IEEE Transactions on Biomedical Engineering, 2008, 55(12): 2812-2822 doi: 10.1109/TBME.2008.2002152

[80] Villanueva A, Cabeza R. Models for gaze tracking systems. Eurasip Journal on Image and Video Processing, 2007, 2007(1): Article No. 023570

[81] Nakazawa A, Nitschke C. Point of gaze estimation through corneal surface reflection in an active illumination environment. In: Proceedings of the 12th European Conference on Computer Vision. Florence, Italy: Springer, 2012. 159-172

[82] Villanueva A, Cabeza R, Porta S. Gaze tracking system model based on physical parameters. International Journal of Pattern Recognition and Artificial Intelligence, 2007, 21(5): 855-877 doi: 10.1142/S0218001407005697

[83] Mansouryar M, Steil J, Sugano Y, Bulling A. 3D gaze estimation from 2D pupil positions on monocular head-mounted eye trackers. In: Proceedings of the 9th Biennial ACM Symposium on Eye Tracking Research & Applications. Charleston, USA: ACM, 2016. 197-200

[84] Kacete A, Séguier R, Collobert M, Royan J. Head pose free 3D gaze estimation using RGB-D camera. In: Proceedings of the 8th International Conference on Graphic and Image Processing. Tokyo, Japan: SPIE, 2016. Article No. 102251S

[85] Wang X, Lindlbauer D, Lessig C, Alexa M. Accuracy of monocular gaze tracking on 3D geometry. In: Proceedings of the 1st Workshop on Eye Tracking and Visualization. Springer, 2017. 169-184

[86] Sun L, Song M L, Liu Z C, Sun M T. Real-time gaze estimation with online calibration. IEEE Multimedia, 2014, 21(4): 28-37 doi: 10.1109/MMUL.2014.54

[87] Cen K F, Che M. Research on eye gaze estimation technique base on 3D model. In: Proceedings of the 2011 International Conference on Electronics, Communications and Control. Ningbo, China: IEEE, 2011. 1623-1626

[88] Tamura K, Choi R, Aoki Y. Unconstrained and calibration-free gaze estimation in a room-scale area using a monocular camera. IEEE Access, 2017, 6: 10896-10908

[89] Zhou X L, Cai H B, Li Y F, Liu H H. Two-eye model-based gaze estimation from a Kinect sensor. In: Proceedings of the 2017 IEEE International Conference on Robotics and Automation. Singapore: IEEE, 2017. 1646-1653

[90] Zhou X L, Cai H B, Shao Z P, Yu H, Liu H H. 3D eye model-based gaze estimation from a depth sensor. In: Proceedings of the 2016 IEEE International Conference on Robotics and Biomimetics. Qingdao, China: IEEE, 2016. 369-374

[91] Wang K, Ji Q. Real time eye gaze tracking with Kinect. In: Proceedings of the 2016 23rd International Conference on Pattern Recognition. Cancun, Mexico: IEEE, 2016. 2752-2757

[92] Li J F, Li S G. Gaze estimation from color image based on the eye model with known head pose. IEEE Transactions on Human-Machine Systems, 2016, 46(3): 414-423 doi: 10.1109/THMS.2015.2477507

[93] Mora K A F, Odobez J M. Gaze estimation from multimodal Kinect data. In: Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. Providence, USA: IEEE, 2012. 25-30

[94] Jafari R, Ziou D. Gaze estimation using Kinect/PTZ camera. In: Proceedings of the 2012 IEEE International Symposium on Robotic and Sensors Environments. Magdeburg, Germany: IEEE, 2012. 13-18

[95] Xiong X H, Liu Z C, Cai Q, Zhang Z Y. Eye gaze tracking using an RGBD camera: A comparison with a RGB solution. In: Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. Seattle, USA: ACM, 2014. 1113-1121

[96] Jafari R, Ziou D. Eye-gaze estimation under various head positions and iris states. Expert Systems with Applications, 2015, 42(1): 510-518 doi: 10.1016/j.eswa.2014.08.003

[97] Ghiass R S, Arandjelovic O. Highly accurate gaze estimation using a consumer RGB-D sensor. In: Proceedings of the 25th International Joint Conference on Artificial Intelligence. New York, USA: AAAI Press, 2016. 3368-3374

[98] Guestrin E D, Eizenman M. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Transactions on Biomedical Engineering, 2006, 53(6): 1124-1133 doi: 10.1109/TBME.2005.863952

[99] Shih S W, Liu J. A novel approach to 3-D gaze tracking using stereo cameras. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 2004, 34(1): 234-245 doi: 10.1109/TSMCB.2003.811128

[100] Ohno T, Mukawa N, Yoshikawa A. FreeGaze: A gaze tracking system for everyday gaze interaction. In: Proceedings of the 2002 Symposium on Eye Tracking Research & Applications. New Orleans, USA: ACM, 2002. 125-132

[101] Hennessey C, Noureddin B, Lawrence P. A single camera eye-gaze tracking system with free head motion. In: Proceedings of the 2006 Symposium on Eye Tracking Research & Applications. San Diego, USA: ACM, 2006. 87-94

[102] Morimoto C H, Amir A, Flickner M. Detecting eye position and gaze from a single camera and 2 light sources. Object Recognition Supported by User Interaction for Service Robots, 2002, 4: 314-317 doi: 10.1109/ICPR.2002.1047459

[103] Hennessey C, Lawrence P. Noncontact binocular eye-gaze tracking for point-of-gaze estimation in three dimensions. IEEE Transactions on Biomedical Engineering, 2009, 56(3): 790-799 doi: 10.1109/TBME.2008.2005943

[104] Mujahidin S, Wibirama S, Nugroho H A, Hamamoto K. 3D gaze tracking in real world environment using orthographic projection. AIP Conference Proceedings, 2016, 1746(1): Article No. 020072

[105] Meyer A, Bohme M, Martinetz T, Barth E. A single-camera remote eye tracker. Perception and Interactive Technologies. Berlin, Heidelberg: Springer, 2006. 208-211

[106] Villanueva A, Cabeza R. A novel gaze estimation system with one calibration point. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 2008, 38(4): 1123-1138 doi: 10.1109/TSMCB.2008.926606

[107] Lai C C, Shih S W, Hung Y P. Hybrid method for 3-D gaze tracking using glint and contour features. IEEE Transactions on Circuits and Systems for Video Technology, 2015, 25(1): 24-37 doi: 10.1109/TCSVT.2014.2329362

[108] Guestrin E D, Eizenman M. Remote point-of-gaze estimation with free head movements requiring a single-point calibration. In: Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Lyon, France: IEEE, 2007. 4556-4560

[109] Lai C C, Shih S W, Tsai H R, Hung Y P. 3-D gaze tracking using pupil contour features. In: Proceedings of the 22nd International Conference on Pattern Recognition. Stockholm, Sweden: IEEE, 2014. 1162-1166

[110] Shih S W, Wu Y T, Liu J. A calibration-free gaze tracking technique. In: Proceedings of the 15th International Conference on Pattern Recognition. Barcelona, Spain: IEEE, 2000. 201-204

[111] Zhang K, Zhao X B, Ma Z, Man Y. A simplified 3D gaze tracking technology with stereo vision. In: Proceedings of the 2010 International Conference on Optoelectronics and Image Processing. Haikou, China: IEEE, 2010. 131-134

[112] Nagamatsu T, Kamahara J, Tanaka N. Calibration-free gaze tracking using a binocular 3D eye model. In: Proceedings of the 27th International Conference on Human Factors in Computing Systems. Boston, USA: ACM, 2009. 3613-3618

[113] Bakker N M, Lenseigne B A J, Schutte S, Geukers E B M, Jonker P P, Van Der Helm F C T, et al. Accurate gaze direction measurements with free head movement for strabismus angle estimation. IEEE Transactions on Biomedical Engineering, 2013, 60(11): 3028-3035 doi: 10.1109/TBME.2013.2246161

[114] Nagamatsu T, Sugano R, Iwamoto Y, Kamahara J, Tanaka N. User-calibration-free gaze tracking with estimation of the horizontal angles between the visual and the optical axes of both eyes. In: Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications. Austin, USA: ACM, 2010. 251-254

[115] Beymer D, Flickner M. Eye gaze tracking using an active stereo head. In: Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Madison, USA: IEEE, 2003. vol. 2, pp. Ⅱ-451-Ⅱ-458

[116] Lidegaard M, Hansen D W, Kruger N. Head mounted device for point-of-gaze estimation in three dimensions. In: Proceedings of the 2014 Symposium on Eye Tracking Research and Applications. Safety Harbor, USA: ACM, 2014. 83-86

[117] Shiu Y C, Ahmad S. 3D location of circular and spherical features by monocular model-based vision. In: Proceedings of the 1989 IEEE International Conference on Systems, Man and Cybernetics. Cambridge, USA: IEEE, 1989. 576-581

[118] Meena Y K, Cecotti H, Wong-Lin K, Dutta A, Prasad G. Toward optimization of gaze-controlled human-computer interaction: Application to hindi virtual keyboard for stroke patients. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2018, 26(4): 911-922 doi: 10.1109/TNSRE.2018.2814826

[119] Rajanna V, Hammond T. A gaze-assisted multimodal approach to rich and accessible human-computer interaction. arXiv: 1803.04713, 2018.

[120] Mazeika D, Carbone A, Pissaloux E E. Design of a generic head-mounted gaze tracker for human-computer interaction. In: Proceedings of the 2012 Joint Conference New Trends in Audio & Video and Signal Processing: Algorithms, Architectures, Arrangements and Applications. Lodz, Poland: IEEE, 2012. 127-131

[121] Rantanen V, Vanhala T, Tuisku O, Niemenlehto P H, Verho J, Surakka V, et al. A wearable, wireless gaze tracker with integrated selection command source for human-computer interaction. IEEE Transactions on Information Technology in Biomedicine, 2011, 15(5): 795-801 doi: 10.1109/TITB.2011.2158321

[122] Cristanti R Y, Sigit R, Harsono T, Adelina D C, Nabilah A, Anggraeni N P. Eye gaze tracking to operate android-based communication helper application. In: Proceedings of the 2017 International Electronics Symposium on Knowledge Creation and Intelligent Computing. Surabaya, Indonesia: IEEE, 2017. 89-94

[123] Khasnobish A, Gavas R, Chatterjee D, Raj V, Naitam S. EyeAssist: A communication aid through gaze tracking for patients with neuro-motor disabilities. In: Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops. Kona, USA: IEEE, 2017. 382-387

[124] Hyder R, Chowdhury S S, Fattah S A. Real-time non-intrusive eye-gaze tracking based wheelchair control for the physically challenged. In: Proceedings of the 2016 IEEE EMBS Conference on Biomedical Engineering and Sciences. Kuala Lumpur, Malaysia: IEEE, 2016. 784-787

[125] Bovery M D M J, Dawson G, Hashemi J, Sapiro G. A scalable off-the-shelf framework for measuring patterns of attention in young children and its application in autism spectrum disorder. IEEE Transactions on Affective Computing, doi: 10.1109/TAFFC.2018.2890610, https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8598852

[126] Han J X, Kang J N, Ouyang G X, Tong Z, Ding M, Zhang D, et al. A study of EEG and eye tracking in children with autism. Chinese Science Bulletin, 2018, 63(15): 1464-1473 doi: 10.1360/N972017-01305

[127] Harezlak K, Kasprowski P. Application of eye tracking in medicine: A survey, research issues and challenges. Computerized Medical Imaging and Graphics, 2018, 65: 176-190 doi: 10.1016/j.compmedimag.2017.04.006

[128] Florea L. Future trends in early diagnosis for cognition impairments in children based on eye measurements [Trends in Future I & M]. IEEE Instrumentation & Measurement Magazine, 2018, 21(3): 41-42

[129] Tsutsumi S, Tamashiro W, Sato M, Okajima M, Ogura T, Doi K. Frequency analysis of gaze points with CT colonography interpretation using eye gaze tracking system. In: Proceedings of the 2017 SPIE Medical Imaging. Orlando, United States: SPIE, 2017. Article No. 1014011

[130] Kattoulas E, Evdokimidis I, Stefanis N C, Avramopoulos D, Stefanis C N, Smyrnis N. Predictive smooth eye pursuit in a population of young men: Ⅱ. Effects of schizotypy, anxiety and depression. Experimental Brain Research, 2011, 215(3-4): 219-226 doi: 10.1007/s00221-011-2888-4

[131] Wyder S, Hennings F, Pezold S, Hrbacek J, Cattin P C. With gaze tracking toward noninvasive eye cancer treatment. IEEE Transactions on Biomedical Engineering, 2016, 63(9): 1914-1924 doi: 10.1109/TBME.2015.2505740

[132] Lu Shan-Shan, Wang Qing-Min, Yao Yong-Jie, Li Ke-Hua, Liu Qiu-Hong. Application of eye tracking technology in foreign military aviation. Aircraft Design, 2015, 35(3): 61-64 https://www.cnki.com.cn/Article/CJFDTOTAL-FJSJ201503014.htm

[133] Rani P S, Subhashree P, Devi N S. Computer vision based gaze tracking for accident prevention. In: Proceedings of the 2016 World Conference on Futuristic Trends in Research and Innovation for Social Welfare. Coimbatore, India: IEEE, 2016. 1-6

[134] Craye C, Karray F. Driver distraction detection and recognition using RGB-D sensor. CoRR, vol. abs/1502.00250, 2015 http://arxiv.org/abs/1502.00250

[135] Jianu R, Alam S S. A data model and task space for data of interest (DOI) eye-tracking analyses. IEEE Transactions on Visualization and Computer Graphics, 2018, 24(3): 1232-1245 doi: 10.1109/TVCG.2017.2665498

[136] Takahashi R, Suzuki H, Chew J Y, Ohtake Y, Nagai Y, Ohtomi K. A system for three-dimensional gaze fixation analysis using eye tracking glasses. Journal of Computational Design and Engineering, 2018, 5(4): 449-457 doi: 10.1016/j.jcde.2017.12.007

[137] O'Dwyer J, Flynn R, Murray N. Continuous affect prediction using eye gaze and speech. In: Proceedings of the 2017 IEEE International Conference on Bioinformatics and Biomedicine. Kansas City, USA: IEEE, 2017. 2001-2007

[138] Steil J, Müller P, Sugano Y, Bulling A. Forecasting user attention during everyday mobile interactions using device-integrated and wearable sensors. In: Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services. Barcelona, Spain: ACM, 2018. Article No. 1

[139] Mikalef P, Sharma K, Pappas I O, Giannakos M N. Online reviews or marketer information? An eye-tracking study on social commerce consumers. In: Proceedings of the 16th Conference on e-Business, e-Services and e-Society. Delhi, India: Springer, 2017. 388-399

[140] George A, Routray A. Recognition of activities from eye gaze and egocentric video. arXiv: 1805.07253, 2018.

[141] Zheng R C, Nakano K, Ishiko H, Hagita K, Kihira M, Yokozeki T.
Eye-gaze tracking analysis of driver behavior while interacting with navigation systems in an urban area. IEEE Transactions on Human-Machine Systems, 2016, 46(4): 546-556 doi: 10.1109/THMS.2015.2504083 [142] Low T, Bubalo N, Gossen T, Kotzyba M, Brechmann A, Huckauf A, et al. Towards identifying user intentions in exploratory search using gaze and pupil tracking. In: Proceedings of the 2017 Conference on Conference Human Information Interaction and Retrieval. Oslo, Norway: ACM, 2017. 273-276 [143] Peterson J, Pardos Z, Rau M, Swigart A, Gerber C, McKinsey J. Understanding student success in chemistry using gaze tracking and pupillometry. In: Proceedings of the 2015 International Conference on Artificial Intelligence in Education. Madrid, Spain: Springer, 2015. 358-366 [144] 金瑞铭.视线追踪技术在网页关注点提取中的应用研究[硕士学位论文], 西安工业大学, 中国, 2016Jin Rui-Ming. Research on Application of Eye Tracking Technology in Extracting Web Page Concerns[Master thesis], Xi'an Technological University, China, 2016 [145] Li W C, Braithwaite G, Greaves M, Hsu C K, Lin S C. The evaluation of military pilot's attention distributions on the flight deck. In: Proceedings of the 2016 International Conference on Human-computer Interaction in Aerospace. Paris, France: ACM, 2016. Article No. 15 [146] Chen S Y, Gao L, Lai Y K, Rosin P L, Xia S H. Real-time 3D face reconstruction and gaze tracking for virtual reality. In: Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces. Reutlingen, Germany: IEEE, 2018. 525-526 [147] Tripathi S, Guenter B. A statistical approach to continuous self-calibrating eye gaze tracking for head-mounted virtual reality systems. In: Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision. Santa Rosa, USA: IEEE, 2017. 862-870 [148] Wibirama S, Nugroho H A, Hamamoto K. Evaluating 3D gaze tracking in virtual space: A computer graphics approach. 
Entertainment Computing, 2017, 21: 11-17 doi: 10.1016/j.entcom.2017.04.003 [149] Alnajar F, Gevers T, Valenti R, Ghebreab S. Auto-calibrated gaze estimation using human gaze patterns. International Journal of Computer Vision, 2017, 124(2): 223-236 doi: 10.1007/s11263-017-1014-x -
