Long-term Autonomous Environment Adaptation of Mobile Robots: State-of-the-art Methods and Prospects


Abstract: In real-world applications, mobile robots operate in complex, open environments affected by many changing factors, such as illumination, weather, seasons, and scene structure. Changes in these factors pose great challenges to the basic behaviors and task capabilities of mobile robots. With the rapid development of robotics and artificial intelligence, enabling mobile robots to adapt to complex and changing environmental conditions during long-term operation has become a research focus in intelligent robotics. This paper begins with a systematic review of the basic capabilities of mobile robots in dynamic environments, namely mapping and map maintenance, relocalization, and scene understanding, and then analyzes in depth the state-of-the-art techniques and research directions for long-term autonomous environment adaptation of mobile robots. Finally, the key research problems and technical development trends in this field are discussed.


Key words:

- Environment adaptation

- long-term autonomy

- mapping

- relocalization

- scene understanding

- mobile robots

1) Recommended by Associate Editor Wu Li-Gang

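Table 1 Methods of mapping and long-term maintenance for mobile robots in dynamic environments

| Test scenario | Keywords | Main idea | Limitations | Ref. |
|---|---|---|---|---|
| Parking lot | Temporary maps | Uses temporary maps to track contradictory observations caused by semi-static objects, temporarily extending the static map of the environment, and localizes the robot with a particle filter | Assumes that dynamic objects are successfully detected and filtered out | [24] |
| Parking lot | Dynamic occupancy grid map | Models each grid cell as a hidden Markov model and uses the initial state distribution and the observation model to predict state transition probabilities | The model assumptions may not match the dynamics of real environments; the assumption that cells are mutually independent is unrealistic | [23] |
| Parking lot | Non-Markov process; episodes | Relaxes the independence assumption of the Markov process and analyzes the association between observations and unmapped objects; this association analysis classifies observations into long-term, short-term, and dynamic features | Relies on prior information from a pre-built map | [26] |
| Factory floor | Independent Markov chains; occupancy grid map | Models each grid cell as an independent two-state Markov chain whose state transitions are two Poisson processes; the transition model parameters are learned online | Assumes the environment dynamics are homogeneous processes, whereas real dynamics are time-dependent | [25] |
| Factory floor | Normal distributions transform (NDT) occupancy map | Combines the compactness of NDT maps with the stability of occupancy grid maps to represent dynamic environments, and defines an exact recursive update model for cell states; applicable to multi-resolution maps | Depends on an external, accurate localization system | [7] |
| Indoor | Memory decay | Represents the environment with models at multiple timescales; over time, new models are continually updated while old ones fade | Requires repeated visits to and modeling of the environment; handles only gradual changes | [27] |
| Indoor | Multi-store memory model | Mimics the human multi-store memory model: a selection mechanism sorts observations into sensory, short-term, and long-term memory, and long-term memory is used to update and maintain the map | The map lacks metric scale information and covers only a limited set of environmental changes | [28] |
| Indoor | Multi-store memory model; 3D mapping | Uses short-term and long-term memory mechanisms so that only persistent environmental information is used to build the map | Stable elements must be observed and recognized frequently before they enter long-term memory | [29] |
| Indoor | Spectral analysis | Models spatio-temporal dynamics of the environment with frequency spectra; the small storage footprint suits large-scale environments | Assumes human activity follows regular patterns, which holds only in some cases | [30] |
| Indoor | Summary maps | Keeps only the landmarks deemed useful in a summary map | The map retains limited environmental information and suits only specific tasks | [36] |
| Urban | End-to-end segmentation and clustering | Builds an end-to-end pipeline that takes raw point clouds as input and outputs segmentation and clustering results; solves the multi-class problem with binary classifiers | Considers only three classes of dynamic objects: cars, pedestrians, and bicyclists | [32] |
| Urban | Unified grid-based environment model | Proposes a novel grid-based environment model that jointly represents static and dynamic objects together with their uncertainty, velocity, and other attributes | Representing urban environments with grids raises problems of resolution selection and boundary ambiguity | [33] |
| Campus | Unsupervised incremental learning | Clusters 3D point clouds with affinity propagation (AP) and learns, through robot-environment interaction, the probability that each cluster is an obstacle | Only determines whether a cluster is an obstacle, without estimating its velocity or other properties | [34] |
| Campus | Long-term map maintenance | Builds a long-term localization and mapping system with three modules (pose estimation, global map maintenance, and velocity estimation) that directly predicts and updates the state of 3D points from repeated observations | Assumes that dynamic objects move smoothly | [35] |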
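The independent Markov chain grid of [25] in Table 1 can be illustrated with a minimal per-cell sketch. It assumes noise-free binary occupancy readings at known timestamps, assumes the cell starts free at t = 0, and estimates the two transition rates as event counts divided by dwell time with a small pseudo-count prior; the class name `MarkovChainCell` and the prior values are hypothetical, and this is not the estimator used in [25]. Between observations the cell is treated as a continuous-time two-state Markov chain, whose occupancy probability relaxes exponentially toward the stationary value `lam_entry / (lam_entry + lam_exit)`.

```python
import math
from dataclasses import dataclass


@dataclass
class MarkovChainCell:
    """One grid cell as a two-state (free/occupied) Markov chain.

    Hypothetical sketch: the cell is assumed free at t = 0 and observations
    are assumed to be noise-free binary readings at known times.
    """
    occupied: bool = False
    free_time: float = 0.0      # total time spent in the free state
    occupied_time: float = 0.0  # total time spent in the occupied state
    entries: int = 0            # observed free -> occupied transitions
    exits: int = 0              # observed occupied -> free transitions
    last_t: float = 0.0         # time of the most recent observation

    def observe(self, t: float, occupied: bool) -> None:
        """Update dwell times and transition counts with a reading at time t."""
        dt = t - self.last_t
        if self.occupied:
            self.occupied_time += dt
        else:
            self.free_time += dt
        if occupied and not self.occupied:
            self.entries += 1
        elif self.occupied and not occupied:
            self.exits += 1
        self.occupied = occupied
        self.last_t = t

    def rates(self, prior_events: float = 1.0, prior_time: float = 1.0):
        """Poisson rate estimates (events per unit time) with a pseudo-count prior."""
        lam_entry = (self.entries + prior_events) / (self.free_time + prior_time)
        lam_exit = (self.exits + prior_events) / (self.occupied_time + prior_time)
        return lam_entry, lam_exit

    def p_occupied(self, t: float) -> float:
        """Predicted occupancy probability at time t >= last_t.

        Closed-form solution of dp/dt = lam_entry * (1 - p) - lam_exit * p.
        """
        lam_entry, lam_exit = self.rates()
        total = lam_entry + lam_exit
        stationary = lam_entry / total
        p0 = 1.0 if self.occupied else 0.0
        return stationary + (p0 - stationary) * math.exp(-total * (t - self.last_t))
```

For example, a door cell observed as occupied and free on alternating seconds ends up with equal entry and exit rates, so long after its last reading its predicted occupancy approaches 0.5. The constant rates also make the limitation listed in Table 1 visible: they do not depend on the time of day, so time-dependent dynamics are averaged away.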

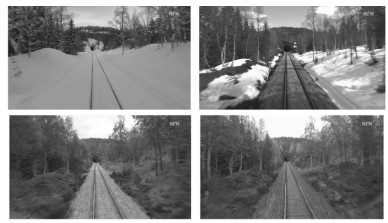

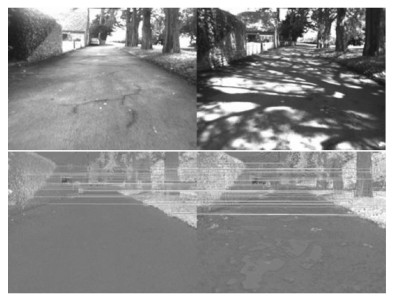

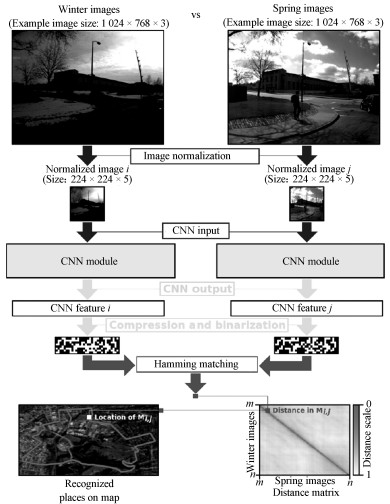

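Table 2 Visual methods of relocalization based on hand-crafted features for mobile robots in terms of long-term autonomy

| Target conditions | Keywords | Main idea | Year | Ref. |
|---|---|---|---|---|
| Illumination, seasonal, and structural changes | Experience-based relocalization | Defines each representation of the environment as an "experience" and chains multiple experiences of a scene together through relative visual localization | 2013 | [49] |
| Illumination and seasonal changes | Sequence-based relocalization | Matches scenes with longer image sequences instead of single images to achieve relocalization | 2012 | [50] |
| Illumination changes | Illumination-invariant images | Converts RGB images into illumination-invariant images, then matches scenes with the FAST feature detector and the BRIEF descriptor | 2014 | [54] |
| Illumination and seasonal changes | Illumination-invariant images; image sequences | Combines illumination-invariant images and image sequences for relocalization, and extracts global binary descriptors to improve efficiency | 2018 | [56] |
| Illumination and seasonal changes | Appearance change prediction | Since environment appearance changes periodically under natural conditions, predicts the appearance under different conditions to achieve long-term relocalization | 2013 | [57] |
| Illumination, seasonal, and dynamic factors | Dynamic scene models | Analyzes how dynamic scene elements affect local features, then learns and exploits stable static features for robust relocalization | 2013 | [60] |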
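Two ideas from Table 2 can be combined in a short sketch: the illumination-invariant colour transform used in [54] and SeqSLAM-style sequence matching [50]. The transform follows the commonly cited single-channel form I = log(G) - α log(B) - (1 - α) log(R); α depends on the camera's spectral response, and the default of 0.48 here is only an illustrative assumption. The sequence matcher is deliberately simplified: it assumes the query route is traversed at the same speed as the database route and omits SeqSLAM's local contrast normalization and velocity search. All function names are hypothetical.

```python
import numpy as np


def illumination_invariant(rgb: np.ndarray, alpha: float = 0.48) -> np.ndarray:
    """Map an HxWx3 RGB image to a one-channel illumination-invariant image.

    I = log(G) - alpha * log(B) - (1 - alpha) * log(R).
    alpha is camera-specific; 0.48 is only an illustrative default.
    """
    eps = 1e-6  # keeps log() finite on black pixels
    log_rgb = np.log(rgb.astype(float) + eps)
    r, g, b = log_rgb[..., 0], log_rgb[..., 1], log_rgb[..., 2]
    return g - alpha * b - (1.0 - alpha) * r


def difference_matrix(db: np.ndarray, query: np.ndarray) -> np.ndarray:
    """Mean absolute difference between every database frame (N, D) and query frame (M, D)."""
    return np.abs(db[:, None, :] - query[None, :, :]).mean(axis=2)


def best_sequence_match(diff: np.ndarray) -> int:
    """Start index of the database segment that best matches the whole query sequence.

    Simplified SeqSLAM-style matching: only the straight diagonal is scored,
    i.e. the query route is assumed to be driven at the database route's speed.
    """
    n, m = diff.shape
    if m > n:
        raise ValueError("query sequence is longer than the database")
    cols = np.arange(m)
    scores = [diff[start + cols, cols].sum() for start in range(n - m + 1)]
    return int(np.argmin(scores))
```

In use, each frame would typically be downsampled, passed through `illumination_invariant`, and flattened into one row of `db` or `query`. Because the coefficients of the three log terms sum to zero, multiplying all channels by a common factor (a global brightness change) leaves the transformed image unchanged, apart from the small epsilon added to avoid log(0).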

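Table 3 Methods of relocalization based on point clouds for mobile robots in terms of long-term autonomy

| Method type | Main idea | Limitations | Year | Ref. |
|---|---|---|---|---|
| Direct | Downsamples the 3D point cloud and uses keypoint voting for efficient scene registration | Cannot cope with structural changes in the environment | 2013 | [70] |
| Feature-based | Extracts linear, planar, and spherical features from 3D point clouds and matches scenes efficiently using distribution statistics of the different feature types | Many parameters to tune; high time cost | 2009 | [71] |
| Feature-based | Converts 3D point clouds into 2D images and relocalizes the robot with visual methods | The generated 2D images are not viewpoint invariant | 2018 | [73] |
| Feature-based | Projects the 3D point cloud onto several planes and builds a global descriptor from the distribution of projected points on each plane for scene matching | Sensitive to seasonal changes | 2016 | [76] |
| Segmentation and clustering | Segments and clusters 3D point clouds and matches scenes using the segments instead of keypoints | Sensitive to semi-static objects that may be moved, such as vehicles | 2017 | [75] |
| Experience-based | Captures structural and appearance changes of a scene through repeated observations and uses these experiences for stable long-term relocalization | Capturing all changes of a scene requires revisiting it repeatedly, which is costly and can cause information explosion in large-scale environments | 2015 | [77] |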
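The projection idea listed in Table 3 for [76] can be sketched as a plain NumPy global descriptor. This is a simplified variant, not M2DP itself: it centres the cloud, projects it onto a few vertical planes rotated about the z-axis, counts points in polar ring-by-sector bins on each plane, and concatenates the normalized histograms. M2DP additionally aligns the cloud with PCA and compresses the signature matrix with SVD, both omitted here; the function names, plane orientations, and bin counts are assumptions.

```python
import numpy as np


def projection_descriptor(points, n_planes=4, n_rings=4, n_sectors=8):
    """Simplified projection-histogram descriptor in the spirit of M2DP (no PCA, no SVD).

    points: (N, 3) array. Plane k contains the z-axis and is rotated by
    k * pi / n_planes about it (an assumption made for brevity).
    """
    centered = points - points.mean(axis=0)  # translation invariance
    max_radius = np.linalg.norm(centered, axis=1).max() + 1e-9
    blocks = []
    for k in range(n_planes):
        theta = np.pi * k / n_planes
        u = np.array([np.cos(theta), np.sin(theta), 0.0])  # in-plane horizontal axis
        x = centered @ u
        y = centered[:, 2]                                  # in-plane vertical axis
        r = np.hypot(x, y)
        phi = np.arctan2(y, x) + np.pi                      # angle in [0, 2*pi]
        ring = np.minimum((r / max_radius * n_rings).astype(int), n_rings - 1)
        sector = np.minimum((phi / (2 * np.pi) * n_sectors).astype(int), n_sectors - 1)
        hist = np.zeros((n_rings, n_sectors))
        np.add.at(hist, (ring, sector), 1.0)                # count points per bin
        blocks.append(hist.ravel() / len(points))
    desc = np.concatenate(blocks)
    return desc / (np.linalg.norm(desc) + 1e-12)            # unit length for cosine comparison


def descriptor_distance(a, b):
    """Cosine distance between two unit-length descriptors (0 means identical)."""
    return 1.0 - float(np.dot(a, b))
```

Loop closure candidates would then be the stored scans with the smallest `descriptor_distance` to the current scan. Centring makes the descriptor translation invariant, but without PCA alignment it is not rotation invariant, which is one reason the full method includes that step.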

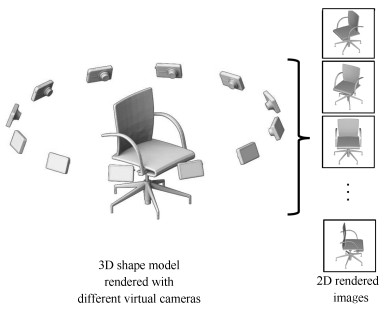

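Table 4 Methods of scene understanding for mobile robots in terms of long-term autonomy in dynamic environments

| Approach | Main idea | Target problem | Year | Ref. |
|---|---|---|---|---|
| Classical learning | Converts 3D point clouds into 2D image models and solves multi-scale classification with image texture and scale coordination techniques | Scale invariance | 2015 | [85] |
| Deep learning | Trains object recognition models on large training sets, relying on data diversity for multi-view and multi-scale recognition | Viewpoint and scale invariance | 2015 | [87] |
| Deep learning | Combines deep learning with stereo reconstruction to obtain scale-invariant semantic segmentation | Scale invariance | 2016 | [92] |
| Deep learning | Estimates depth with a depth-learning network and uses it to adapt the pooling field size of the segmentation network, yielding scale-invariant semantic scene segmentation | Scale invariance | 2018 | [93] |
| Deep learning | Jointly trains a semantic segmentation network on images and 3D point clouds, learning and fusing 2D and 3D features for robust scene segmentation | Illumination and seasonal invariance | 2018 | [94] |
| Transfer learning | Proposes an online cross-domain feature transformation learning algorithm and combines it with a $k$-NN classifier for cross-domain multi-class object recognition | Cross-domain multi-class recognition | 2018 | [90] |
| Transfer learning | Rebalances the class distribution of the target domain by dynamically synthesizing instances across domains and "selectively" transferring instances | Class imbalance | 2018 | [88] |
| Transfer learning | Jointly trains multiple object detectors by sharing fragment features and proposes a class-imbalance algorithm based on data sampling; reclassifying low-confidence detection boxes further improves multi-class detection accuracy | Class imbalance and small samples | 2017 | [89] |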
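The class-imbalance problem targeted by the transfer-learning rows of Table 4 can be illustrated with a generic SMOTE-style oversampler followed by a plain $k$-NN classifier. This is a swapped-in textbook technique, not the cross-domain instance synthesis or the online feature transformation methods listed in Table 4; the function names are hypothetical, and `k` in the oversampler must be smaller than the number of minority samples.

```python
import numpy as np


def smote_like_oversample(x_min, n_new, k=3, rng=None):
    """Create n_new synthetic minority samples (SMOTE-style, generic stand-in).

    Each synthetic point lies on the segment between a random minority sample
    and one of its k nearest minority neighbours. Requires k < len(x_min).
    """
    rng = np.random.default_rng() if rng is None else rng
    d = np.linalg.norm(x_min[:, None, :] - x_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                  # a sample is not its own neighbour
    neighbours = np.argsort(d, axis=1)[:, :k]    # (n_min, k) neighbour indices
    base = rng.integers(0, len(x_min), size=n_new)
    pick = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                 # interpolation factor in [0, 1)
    return x_min[base] + gap * (x_min[pick] - x_min[base])


def knn_predict(x_train, y_train, x_query, k=3):
    """Majority vote among the k nearest training samples (Euclidean distance).

    y_train must hold non-negative integer class labels.
    """
    d = np.linalg.norm(x_query[:, None, :] - x_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_train[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])
```

Oversampling before classification keeps a rare class from being outvoted simply because it has few training samples; interpolating between neighbouring minority samples, rather than duplicating them, is the design choice that distinguishes SMOTE-style methods from plain resampling.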

[1] Chai Tian-You. Development directions of automation science and technology. Acta Automatica Sinica, 2018, 44(11): 1923-1930 (in Chinese). doi: 10.16383/j.aas.2018.c180252

[2] Barfoot T, Kelly J, Sibley G. Special issue on long-term autonomy. The International Journal of Robotics Research, 2013, 32(14): 1609-1610

[3] Kunze L, Hawes N, Duckett T, Hanheide M, Krajník T. Artificial intelligence for long-term robot autonomy: a survey. IEEE Robotics and Automation Letters, 2018, 3(4): 4023-4030

[4] Garg S, Jacobson A, Kumar S, Milford M. Improving condition- and environment-invariant place recognition with semantic place categorization. In: Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Vancouver, Canada: IEEE, 2017. 6863-6870

[5] Garg S, Suenderhauf N, Milford M. Don't look back: robustifying place categorization for viewpoint- and condition-invariant place recognition. In: Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA). Brisbane, Australia: IEEE, 2018. 3645-3652

[6] Naseer T, Burgard W, Stachniss C. Robust visual localization across seasons. IEEE Transactions on Robotics, 2018, 34(2): 289-302

[7] Saarinen J P, Andreasson H, Stoyanov T, Lilienthal A J. 3D normal distributions transform occupancy maps: an efficient representation for mapping in dynamic environments. The International Journal of Robotics Research, 2013, 32(14): 1627-1644

[8] Lázaro M T, Capobianco R, Grisetti G. Efficient long-term mapping in dynamic environments. In: Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 153-160

[9] Siva S, Zhang H. Omnidirectional multisensory perception fusion for long-term place recognition. In: Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA). Brisbane, Australia: IEEE, 2018. 5175-5181

[10] Zhu J L, Ai Y F, Tian B, Cao D P, Scherer S. Visual place recognition in long-term and large-scale environment based on CNN feature. In: Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV). Changshu, China: IEEE, 2018. 1679-1685

[11] Se S, Lowe D G, Little J J. Vision-based global localization and mapping for mobile robots. IEEE Transactions on Robotics, 2005, 21(3): 364-375

[12] Pitzer B, Stiller C. Probabilistic mapping for mobile robots using spatial correlation models. In: Proceedings of the 2010 IEEE International Conference on Robotics and Automation. Anchorage, USA: IEEE, 2010. 5402-5409

[13] de la Puente P, Rodriguez-Losada D, Valero A, Matia F. 3D feature based mapping towards mobile robots' enhanced performance in rescue missions. In: Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems. St. Louis, USA: IEEE, 2009. 1138-1143

[14] Xin Jing, Gou Jiao-Long, Ma Xiao-Min, Huang Kai, Liu Ding, Zhang You-Min. A large viewing angle 3-dimensional V-SLAM algorithm with a Kinect-based mobile robot system. Robot, 2014, 36(5): 560-568 (in Chinese)

[15] Yang Hong, Qian Kun, Dai Xian-Zhong, Ma Xu-Dong, Fang Fang. Kinect-based 3D indoor environment map building for mobile robot. Journal of Southeast University (Natural Science Edition), 2013, 43(S1): 183-187 (in Chinese)

[16] Ding Wen-Dong, Xu De, Liu Xi-Long, Zhang Da-Peng, Chen Tian. Review on visual odometry for mobile robots. Acta Automatica Sinica, 2018, 44(3): 385-400 (in Chinese). doi: 10.16383/j.aas.2018.c170107

[17] Montemerlo M, Thrun S, Whittaker W. Conditional particle filters for simultaneous mobile robot localization and people-tracking. In: Proceedings of the 2002 International Conference on Robotics and Automation. Washington, USA: IEEE, 2002. 695-701

[18] Wolf D F, Sukhatme G S. Mobile robot simultaneous localization and mapping in dynamic environments. Autonomous Robots, 2005, 19(1): 53-65. doi: 10.1007/s10514-005-0606-4

[19] Montesano L, Minguez J, Montano L. Modeling dynamic scenarios for local sensor-based motion planning. Autonomous Robots, 2008, 25(3): 231-251

[20] Wang C C, Thorpe C, Thrun S, Hebert M, Durrant-Whyte H. Simultaneous localization, mapping and moving object tracking. The International Journal of Robotics Research, 2007, 26(9): 889-916

[21] Henriques J F, Vedaldi A. MapNet: an allocentric spatial memory for mapping environments. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8476-8484

[22] Bürki M, Dymczyk M, Gilitschenski I, Cadena C, Siegwart R, Nieto J. Map management for efficient long-term visual localization in outdoor environments. In: Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV). Changshu, China: IEEE, 2018. 682-688

[23] Tipaldi G D, Meyer-Delius D, Burgard W. Lifelong localization in changing environments. The International Journal of Robotics Research, 2013, 32(14): 1662-1678

[24] Meyer-Delius D, Hess J, Grisetti G, Burgard W. Temporary maps for robust localization in semi-static environments. In: Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems. Taipei, China: IEEE, 2010. 5750-5755

[25] Saarinen J, Andreasson H, Lilienthal A J. Independent Markov chain occupancy grid maps for representation of dynamic environment. In: Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems. Vilamoura, Portugal: IEEE, 2012. 3489-3495

[26] Biswas J, Veloso M. Episodic non-Markov localization: reasoning about short-term and long-term features. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 3969-3974

[27] Biber P, Duckett T. Dynamic maps for long-term operation of mobile service robots. In: Proceedings of the 2005 Robotics: Science and Systems Conference. Cambridge, USA: Massachusetts Institute of Technology, 2005. 17-24

[28] Dayoub F, Cielniak G, Duckett T. Long-term experiments with an adaptive spherical view representation for navigation in changing environments. Robotics and Autonomous Systems, 2011, 59(5): 285-295

[29] Morris T, Dayoub F, Corke P, Wyeth G, Upcroft B. Multiple map hypotheses for planning and navigating in non-stationary environments. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 2765-2770

[30] Krajník T, Fentanes J P, Cielniak G, Dondrup C, Duckett T. Spectral analysis for long-term robotic mapping. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 3706-3711

[31] Zhang J, Singh S. Low-drift and real-time lidar odometry and mapping. Autonomous Robots, 2017, 41(2): 401-416

[32] Wang D Z, Posner I, Newman P. What could move? Finding cars, pedestrians and bicyclists in 3D laser data. In: Proceedings of the 2012 IEEE International Conference on Robotics and Automation. Saint Paul, USA: IEEE, 2012. 4038-4044

[33] Tanzmeister G, Thomas J, Wollherr D, Buss M. Grid-based mapping and tracking in dynamic environments using a uniform evidential environment representation. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 6090-6095

[34] Ott L, Ramos F. Unsupervised online learning for long-term autonomy. The International Journal of Robotics Research, 2013, 32(14): 1724-1741

[35] Pomerleau F, Krüsi P, Colas F, Furgale P, Siegwart R. Long-term 3D map maintenance in dynamic environments. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 3712-3719

[36] Dymczyk M, Lynen S, Cieslewski T, Bosse M, Siegwart R, Furgale P. The gist of maps - summarizing experience for lifelong localization. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA). Seattle, USA: IEEE, 2015. 2767-2773

[37] Zhuang Yan, Lu Xi-Bin, Li Yun-Hui, Wang Wei. Mobile robot indoor scene cognition using 3D laser scanning. Acta Automatica Sinica, 2011, 37(10): 1232-1240 (in Chinese). doi: 10.3724/SP.J.1004.2011.01232

[38] Yan Fei, Zhuang Yan, Wang Wei. Outdoor scene comprehension of mobile robot based on multi-sensor information fusion. Control Theory & Applications, 2011, 28(8): 1093-1098 (in Chinese)

[39] Yu Miao, Hu Zhan-Yi. Higher-order Markov random fields and their applications in scene understanding. Acta Automatica Sinica, 2015, 41(7): 1213-1234 (in Chinese). doi: 10.16383/j.aas.2015.c140684

[40] Zhu Bo, Gao Xiang, Zhao Yan-Nan. Place perception for robot indoor semantic mapping: a survey. Acta Automatica Sinica, 2017, 43(4): 493-508 (in Chinese). doi: 10.16383/j.aas.2017.c160350

[41] Sun L, Yan Z, Zaganidis A, Zhao C, Duckett T. Recurrent-OctoMap: learning state-based map refinement for long-term semantic mapping with 3-D-lidar data. IEEE Robotics and Automation Letters, 2018, 3(4): 3749-3756

[42] Drouilly R, Rives P, Morisset B. Semantic representation for navigation in large-scale environments. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA). Seattle, USA: IEEE, 2015. 1106-1111

[43] Wang S, Clark R, Wen H K, Trigoni N. End-to-end, sequence-to-sequence probabilistic visual odometry through deep neural networks. The International Journal of Robotics Research, 2018, 37(4-5): 513-542

[44] Kendall A, Grimes M, Cipolla R. PoseNet: a convolutional network for real-time 6-DOF camera relocalization. In: Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015. 2938-2946

[45] Lowry S, Sünderhauf N, Newman P, Leonard J J, Cox D, Corke P, et al. Visual place recognition: a survey. IEEE Transactions on Robotics, 2016, 32(1): 1-19

[46] Kim G, Kim A. Scan context: egocentric spatial descriptor for place recognition within 3D point cloud map. In: Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 4802-4809

[47] Cummins M J, Newman P M. FAB-MAP: appearance-based place recognition and mapping using a learned visual vocabulary model. In: Proceedings of the 27th International Conference on Machine Learning. Haifa, Israel: Omnipress, 2010. 3-10

[48] Galvez-López D, Tardos J D. Bags of binary words for fast place recognition in image sequences. IEEE Transactions on Robotics, 2012, 28(5): 1188-1197

[49] Churchill W, Newman P. Experience-based navigation for long-term localisation. The International Journal of Robotics Research, 2013, 32(14): 1645-1661

[50] Milford M J, Wyeth G F. SeqSLAM: visual route-based navigation for sunny summer days and stormy winter nights. In: Proceedings of the 2012 IEEE International Conference on Robotics and Automation. Saint Paul, USA: IEEE, 2012. 1643-1649

[51] Tang L, Wang Y, Ding X Q, Yin H, Xiong R, Huang S D. Topological local-metric framework for mobile robots navigation: a long term perspective. Autonomous Robots, 2019, 43(1): 197-211

[52] Rublee E, Rabaud V, Konolige K, Bradski G. ORB: an efficient alternative to SIFT or SURF. In: Proceedings of the 2011 IEEE International Conference on Computer Vision. Barcelona, Spain: IEEE, 2011. 2564-2571

[53] Milford M, Lowry S, Sunderhauf N, Shirazi S, Pepperell E, Upcroft B, et al. Sequence searching with deep-learnt depth for condition- and viewpoint-invariant route-based place recognition. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Boston, USA: IEEE, 2015. 18-25

[54] McManus C, Churchill W, Maddern W, Stewart A D, Newman P. Shady dealings: robust, long-term visual localisation using illumination invariance. In: Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). Hong Kong, China: IEEE, 2014. 901-906

[55] Ratnasingam S, McGinnity T M. Chromaticity space for illuminant invariant recognition. IEEE Transactions on Image Processing, 2012, 21(8): 3612-3623

[56] Arroyo R, Alcantarilla P F, Bergasa L M, Romera E. Are you ABLE to perform a life-long visual topological localization? Autonomous Robots, 2018, 42(3): 665-685

[57] Neubert P, Sünderhauf N, Protzel P. Appearance change prediction for long-term navigation across seasons. In: Proceedings of the 2013 European Conference on Mobile Robots. Barcelona, Spain: IEEE, 2013. 198-203

[58] Cummins M, Newman P M. Appearance-only SLAM at large scale with FAB-MAP 2.0. The International Journal of Robotics Research, 2011, 30(9): 1100-1123

[59] Sünderhauf N, Protzel P. BRIEF-Gist - closing the loop by simple means. In: Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems. San Francisco, USA: IEEE, 2011. 1234-1241

[60] Johns E, Yang G Z. Dynamic scene models for incremental, long-term, appearance-based localisation. In: Proceedings of the 2013 IEEE International Conference on Robotics and Automation. Karlsruhe, Germany: IEEE, 2013. 2731-2736

[61] Han F, Wang H, Huang G Q, Zhang H. Sequence-based sparse optimization methods for long-term loop closure detection in visual SLAM. Autonomous Robots, 2018, 42(7): 1323-1335

[62] McManus C, Upcroft B, Newman P. Learning place-dependant features for long-term vision-based localisation. Autonomous Robots, 2015, 39(3): 363-387

[63] Linegar C, Churchill W, Newman P. Made to measure: bespoke landmarks for 24-hour, all-weather localisation with a camera. In: Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA). Stockholm, Sweden: IEEE, 2016. 787-794

[64] Zhang Hui, Wang Kun-Feng, Wang Fei-Yue. Advances and perspectives on applications of deep learning in visual object detection. Acta Automatica Sinica, 2017, 43(8): 1289-1305 (in Chinese). doi: 10.16383/j.aas.2017.c160822

[65] Chen L C, Papandreou G, Kokkinos I, Murphy K, Yuille A L. DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(4): 834-848

[66] Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(4): 640-651

[67] Arroyo R, Alcantarilla P F, Bergasa L M, Romera E. Fusion and binarization of CNN features for robust topological localization across seasons. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, South Korea: IEEE, 2016. 4656-4663

[68] Hou Y, Zhang H, Zhou S L. BoCNF: efficient image matching with Bag of ConvNet features for scalable and robust visual place recognition. Autonomous Robots, 2018, 42(6): 1169-1185

[69] Sünderhauf N, Shirazi S, Dayoub F, Upcroft B, Milford M. On the performance of ConvNet features for place recognition. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Hamburg, Germany: IEEE, 2015. 4297-4304

[70] Bosse M, Zlot R. Place recognition using keypoint voting in large 3D lidar datasets. In: Proceedings of the 2013 IEEE International Conference on Robotics and Automation. Karlsruhe, Germany: IEEE, 2013. 2677-2684

[71] Magnusson M, Andreasson H, Nuchter A, Lilienthal A J. Appearance-based loop detection from 3D laser data using the normal distributions transform. In: Proceedings of the 2009 IEEE International Conference on Robotics and Automation. Kobe, Japan: IEEE, 2009. 23-28

[72] Zhuang Y, Jiang N, Hu H S, Yan F. 3-D-laser-based scene measurement and place recognition for mobile robots in dynamic indoor environments. IEEE Transactions on Instrumentation and Measurement, 2013, 62(2): 438-450

[73] Cao F K, Zhuang Y, Zhang H, Wang W. Robust place recognition and loop closing in laser-based SLAM for UGVs in urban environments. IEEE Sensors Journal, 2018, 18(10): 4242-4252

[74] Kim G, Park B, Kim A. 1-day learning, 1-year localization: long-term LiDAR localization using scan context image. IEEE Robotics and Automation Letters, 2019, 4(2): 1948-1955

[75] Dubé R, Dugas D, Stumm E, Nieto J, Siegwart R, Cadena C. SegMatch: segment based place recognition in 3D point clouds. In: Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA). Singapore, Singapore: IEEE, 2017. 5266-5272

[76] He L, Wang X L, Zhang H. M2DP: a novel 3D point cloud descriptor and its application in loop closure detection. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems. Daejeon, South Korea: IEEE, 2016. 231-237

[77] Maddern W, Pascoe G, Newman P. Leveraging experience for large-scale LIDAR localisation in changing cities. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA). Seattle, USA: IEEE, 2015. 1684-1691

[78] Latif Y, Cadena C, Neira J. Robust loop closing over time for pose graph SLAM. The International Journal of Robotics Research, 2013, 32(14): 1611-1626

[79] Uy M A, Lee G H. PointNetVLAD: deep point cloud based retrieval for large-scale place recognition. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 4470-4479

[80] Zhuang Yan, Chen Dong, Wang Wei, Han Jian-Da, Wang Yue-Chao. Status and development of natural scene understanding for vision-based outdoor mobile robot. Acta Automatica Sinica, 2010, 36(1): 1-11 (in Chinese). doi: 10.3724/SP.J.1004.2010.00001

[81] Li L J, Socher R, Li F F. Towards total scene understanding: classification, annotation and segmentation in an automatic framework. In: Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, USA: IEEE, 2009. 2036-2043

[82] Kumar M P, Koller D. Efficiently selecting regions for scene understanding. In: Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition. San Francisco, USA: IEEE, 2010. 3217-3224

[83] Kim B S, Kohli P, Savarese S. 3D scene understanding by voxel-CRF. In: Proceedings of the 2013 IEEE International Conference on Computer Vision. Sydney, Australia: IEEE, 2013. 1425-1432

[84] Su H, Maji S, Kalogerakis E, Learned-Miller E. Multi-view convolutional neural networks for 3D shape recognition. In: Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015. 945-953

[85] Zhuang Y, Lin X Q, Hu H S, Guo G. Using scale coordination and semantic information for robust 3-D object recognition by a service robot. IEEE Sensors Journal, 2015, 15(1): 37-47

[86] Eitel A, Springenberg J T, Spinello L, Riedmiller M, Burgard W. Multimodal deep learning for robust RGB-D object recognition. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems. Hamburg, Germany: IEEE, 2015. 681-687

[87] Wang A R, Lu J W, Cai J F, Cham T J, Wang G. Large-margin multi-modal deep learning for RGB-D object recognition. IEEE Transactions on Multimedia, 2015, 17(11): 1887-1898

[88] Zhang X S, Zhuang Y, Wang W, Pedrycz W. Transfer boosting with synthetic instances for class imbalanced object recognition. IEEE Transactions on Cybernetics, 2018, 48(1): 357-370

[89] Zhang X S, Zhuang Y, Hu H S, Wang W. 3-D laser-based multiclass and multiview object detection in cluttered indoor scenes. IEEE Transactions on Neural Networks and Learning Systems, 2017, 28(1): 177-190

[90] Zhang X S, Zhuang Y, Wei W, Pedrycz W. Online feature transformation learning for cross-domain object category recognition. IEEE Transactions on Neural Networks and Learning Systems, 2018, 29(7): 2857-2871

[91] Zhuang Y, Liu Y S, He G J, Wang W. Contextual classification of 3D laser points with conditional random fields in urban environments. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Hamburg, Germany: IEEE, 2015. 3908-3913

[92] Krešo I, Čaušević D, Krapac J, Šegvić S. Convolutional scale invariance for semantic segmentation. In: Proceedings of the 38th German Conference on Pattern Recognition. Hannover, Germany: Springer, 2016. 64-75

[93] Ansari M D, Krauß S, Wasenmüller O, Stricker D. ScaleNet: scale invariant network for semantic segmentation in urban driving scenes. In: Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications. Funchal, Madeira, Portugal: Scitepress, 2018. 399-404

[94] Kim D K, Maturana D, Uenoyama M, Scherer S. Season-invariant semantic segmentation with a deep multimodal network. Field and Service Robotics. Cham, Switzerland: Springer, 2018. 255-270

[95] Xiong Dan, Lu Hui-Min, Xiao Jun-Hao, Zheng Zhi-Qiang. Robust long-term object tracking with adaptive scale and rotation estimation. Acta Automatica Sinica, 2019, 45(2): 289-304 (in Chinese). doi: 10.16383/j.aas.2018.c170359

[96] Bansal A, Badino H, Huber D. Understanding how camera configuration and environmental conditions affect appearance-based localization. In: Proceedings of the 2014 IEEE Intelligent Vehicles Symposium. Dearborn, USA: IEEE, 2014. 800-807

[97] Maddern W, Pascoe G, Linegar C, Newman P. 1 year, 1000 km: The Oxford RobotCar dataset. The International Journal of Robotics Research, 2017, 36(1): 3-15

[98] Carlevaris-Bianco N, Ushani A K, Eustice R M. University of Michigan North Campus long-term vision and lidar dataset. The International Journal of Robotics Research, 2016, 35(9): 1023-1035

[99] Liu Y S, Wang F, Dobaie A M, He G J, Zhuang Y. Comparison of 2D image models in segmentation performance for 3D laser point clouds. Neurocomputing, 2017, 251: 136-144

[100] Dosovitskiy A, Ros G, Codevilla F, Lopez A, Koltun V. CARLA: an open urban driving simulator. In: Proceedings of the 1st Annual Conference on Robot Learning. Mountain View, USA: PMLR, 2017.

[101] Quiter C, Ernst M. deepdrive/deepdrive: 2.0 [Online]. Available: https://doi.org/10.5281/zenodo.1248998, March 26, 2018

下载:

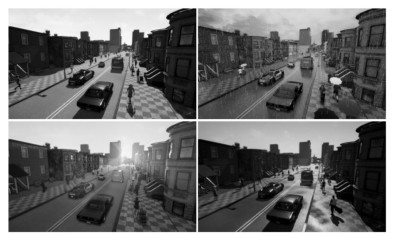

下载: