-

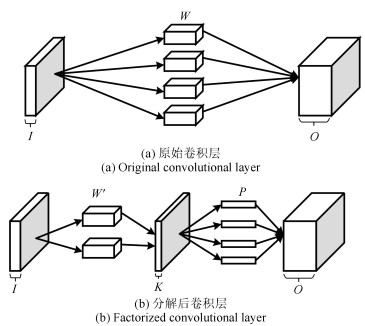

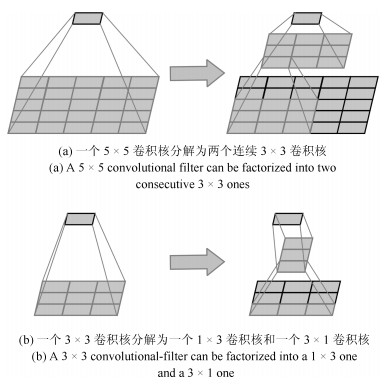

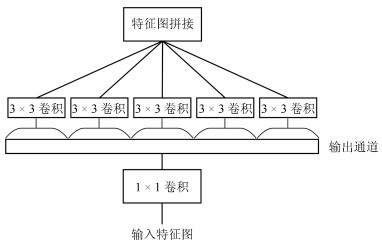

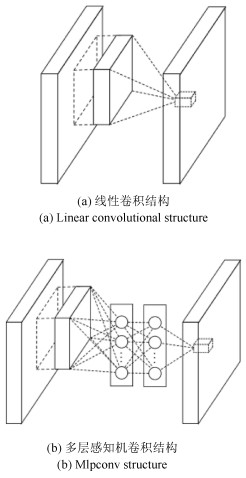

摘要: 近年来, 卷积神经网络(Convolutional neural network, CNNs)在计算机视觉、自然语言处理、语音识别等领域取得了突飞猛进的发展, 其强大的特征学习能力引起了国内外专家学者广泛关注.然而, 由于深度卷积神经网络普遍规模庞大、计算度复杂, 限制了其在实时要求高和资源受限环境下的应用.对卷积神经网络的结构进行优化以压缩并加速现有网络有助于深度学习在更大范围的推广应用, 目前已成为深度学习社区的一个研究热点.本文整理了卷积神经网络结构优化技术的发展历史、研究现状以及典型方法, 将这些工作归纳为网络剪枝与稀疏化、张量分解、知识迁移和精细模块设计4个方面并进行了较为全面的探讨.最后, 本文对当前研究的热点与难点作了分析和总结, 并对网络结构优化领域未来的发展方向和应用前景进行了展望.Abstract: Recently convolutional neural networks (CNNs) have made great progress in computer vision, natural language processing and speech recognition, which attracts wide attention for their powerful ability of feature learning. However, deep convolutional neural networks usually have large capacity and high computational complexity, hindering their applications in real-time and source-constrained areas. Thus, optimizing the structure of deep model will contribute to rapid deployment of such networks, which has been a hot topic of deep learning community. In this paper, we provide a comprehensive survey of history progress, recent advances and typical approaches in network structure optimization. These approaches are mainly categorized into four schemes, which are pruning & sparsification, tensor factorization, knowledge transferring and compacting module designing. Finally, the remaining problems and potential trend in this topic are concluded and discussed.

-

Key words:

- Convolutional neural networks (CNNs) /

- structure optimization /

- network pruning /

- tensor factorization /

- knowledge transferring

1) 本文责任编委 贺威 -

表 1 经典卷积神经网络的性能及相关参数

Table 1 Classic convolutional neural networks and corresponding parameters

年份 网络名称 网络层数 卷积层数量 参数数量 卷积层 全连接层 乘加操作数(MACs) 卷积层 全连接层 Top-5错误率(%) 2012 AlexNet[1] 8 5 2.3M 58.6M 666 M 58.6M 16.4 2014 Overfeat[2] 8 5 16 M 130M 2.67G 124M 14.2 2014 VGGNet-16[3] 16 13 14.7M 124M 15.3 G 130M 7.4 2015 GoogLeNet[4] 22 21 6M 1M 1.43 G 1M 6.7 2016 ResNet-50[5] 50 49 23.5M 2M 3.86 G 2M 3.6 表 2 网络剪枝对不同网络的压缩效果

Table 2 Comparison of different pruned networks

-

[1] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems. Lake Tahoe, Nevada, USA: Curran Associates Inc., 2012. 1097-1105 [2] Zeiler M D, Fergus R. Visualizing and understanding convolutional networks. In: Proceedings of 13th European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 818-833 http://cn.bing.com/academic/profile?id=2e04eadd73b7358f1ea104aef2c94bd4&encoded=0&v=paper_preview&mkt=zh-cn [3] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv: 1409.1556, 2014. http://cn.bing.com/academic/profile?id=9a83dddfc646cd21a3e38737d303a369&encoded=0&v=paper_preview&mkt=zh-cn [4] Szegedy C, Liu W, Jia Y Q, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, MA, USA: IEEE, 2015. 1-9 http://cn.bing.com/academic/profile?id=7d4011aa0a4959f0c5e4af61acc12466&encoded=0&v=paper_preview&mkt=zh-cn [5] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 770-778 http://cn.bing.com/academic/profile?id=d3fa279e4a35560a5429ba8f84dff15e&encoded=0&v=paper_preview&mkt=zh-cn [6] LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 1998, 86(11): 2278-2324 doi: 10.1109/5.726791 [7] He K M, Sun J. Convolutional neural networks at constrained time cost. In: Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 5353-5360 [8] Le Cun Y, Denker J S, Solla S A. Optimal brain damage. In: Proceedings of the 2nd International Conference on Neural Information Processing Systems. Denver, Colorado, USA: MIT Press, 1989. 598-605 [9] Hassibi B, Stork D G, Wolff G, Watanabe T. Optimal brain surgeon: extensions and performance comparisons. In: Proceedings of the 6th International Conference on Neural Information Processing Systems. Denver, Colorado, USA: Morgan Kaufmann Publishers Inc., 1993. 263-270 [10] Cheng Y, Wang D, Zhou P, Zhang T. A survey of model compression and acceleration for deep neural networks. arXiv: 1710.09282, 2017. [11] Cheng J, Wang P S, Li G, Hu Q H, Lu H Q. Recent advances in efficient computation of deep convolutional neural networks. Frontiers of Information Technology & Electronic Engineering, 2018, 19(1): 64-77 http://d.old.wanfangdata.com.cn/Periodical/zjdxxbc-e201801008 [12] 雷杰, 高鑫, 宋杰, 王兴路, 宋明黎.深度网络模型压缩综述.软件学报, 2018, 29(2): 251-266 http://d.old.wanfangdata.com.cn/Periodical/rjxb201802002Lei Jie, Gao Xin, Song Jie, Wang Xing-Lu, Song Ming-Li. Survey of deep neural network model compression. Journal of Software, 2018, 29(2): 251-266 http://d.old.wanfangdata.com.cn/Periodical/rjxb201802002 [13] Hu H Y, Peng R, Tai Y W, Tang C K. Network trimming: a data-driven neuron pruning approach towards efficient deep architectures. arXiv: 1607.03250, 2016. [14] Cheng Y, Wang D, Zhou P, Zhang T. Model compression and acceleration for deep neural networks: the principles, progress, and challenges. IEEE Signal Processing Magazine, 2018, 35(1): 126-136 http://cn.bing.com/academic/profile?id=c41edb9c79f4cae56125bbfc508801d3&encoded=0&v=paper_preview&mkt=zh-cn [15] Gong Y C, Liu L, Yang M, Bourdev L. Compressing deep convolutional networks using vector quantization. arXiv: 1412.6115, 2014. http://cn.bing.com/academic/profile?id=fb878b7aaad93122079eeaf80c4b058f&encoded=0&v=paper_preview&mkt=zh-cn [16] Reed R. Pruning algorithms-a survey. IEEE Transactions on Neural Networks, 1993, 4(5): 740-747 doi: 10.1109/72.248452 [17] Collins M D, Kohli P. Memory bounded deep convolutional networks. arXiv: 1412.1442, 2014. [18] Jin X J, Yuan X T, Feng J S, Yan S C. Training skinny deep neural networks with iterative hard thresholding methods. arXiv: 1607.05423, 2016. [19] Zhou H, Alvarez J M, Porikli F. Less is more: towards compact CNNs. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 662-677 [20] Wen W, Wu C P, Wang Y D, Chen Y R, Li H. Learning structured sparsity in deep neural networks. In: Proceedings of the 30th Conference on Neural Information Processing Systems. Barcelona, Spain: MIT Press, 2016. 2074-2082 [21] Lebedev V, Lempitsky V. Fast convnets using group-wise brain damage. In: Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 2554-2564 [22] Louizos C, Welling M, Kingma D P. Learning sparse neural networks through L0regularization. arXiv: 1712.01312, 2017. [23] Hinton G E, Srivastava N, Krizhevsky A, Sutskever I, Salakhutdinov R R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv: 1207.0580, 2012. [24] Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 2014, 15(1): 1929-1958 http://d.old.wanfangdata.com.cn/Periodical/kzyjc200606005 [25] Li Z, Gong B Q, Yang T B. Improved dropout for shallow and deep learning. In: Proceedings of the 30th Conference on Neural Information Processing Systems. Barcelona, Spain: MIT Press, 2016. 2523-2531 [26] Anwar S, Sung W. Coarse pruning of convolutional neural networks with random masks. In: Proceedings of 2017 International Conference on Learning Representations. Toulon, France: 2017. 134-145 [27] Hanson S J, Pratt L Y. Comparing biases for minimal network construction with back-propagation. In: Proceedings of the 1st International Conference on Neural Information Processing Systems. Denver, Colorado, USA: MIT Press, 1988. 177-185 [28] Han S, Mao H Z, Dally W J. Deep compression: compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv: 1510.00149, 2015. http://cn.bing.com/academic/profile?id=9bf6fa99e4da3298640c577b462462d5&encoded=0&v=paper_preview&mkt=zh-cn [29] Srinivas S, Babu R V. Data-free parameter pruning for deep neural networks. arXiv: 1507.06149, 2015. [30] Guo Y W, Yao A B, Chen Y R. Dynamic network surgery for efficient DNNs. In: Proceedings of the 30th Conference on Neural Information Processing Systems. Barcelona, Spain: MIT Press, 2016. 1379-1387 [31] Liu X Y, Pool J, Han S, Dally W J. Efficient sparse-winograd convolutional neural networks. In: Proceedings of 2017 International Conference on Learning Representation. France: 2017. [32] He Y H, Zhang X Y, Sun J. Channel pruning for accelerating very deep neural networks. In: Proceedings of 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 1398-1406 [33] Li H, Kadav A, Durdanovic I, Samet H, Graf H P. Pruning filters for efficient convNets. arXiv: 1608.08710, 2016. [34] Luo J H, Wu J X, Lin W Y. Thinet: a filter level pruning method for deep neural network compression. In: Proceedings of 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 5068-5076 [35] Denil M, Shakibi B, Dinh L, Ranzato M, de Freitas N. Predicting parameters in deep learning. In: Proceedings of the 26th International Conference on Neural Information Processing Systems. Lake Tahoe, Nevada, USA: Curran Associates Inc., 2013. 2148-2156 [36] Rigamonti R, Sironi A, Lepetit V, Fua P. Learning separable filters. In: Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition. Portland, OR, USA: IEEE, 2013. 2754-2761 [37] Jaderberg M, Vedaldi A, Zisserman A. Speeding up convolutional neural networks with low rank expansions. arXiv: 1405.3866, 2014. http://cn.bing.com/academic/profile?id=2c2b54ee2cf492b32a9efa47b48a5cfc&encoded=0&v=paper_preview&mkt=zh-cn [38] Denton E, Zaremba W, Bruna J, LeCun Y, Fergus R. Exploiting linear structure within convolutional networks for efficient evaluation. In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2014. 1269-1277 [39] Lebedev V, Ganin Y, Rakhuba M, Oseledets I, Lempitsky V. Speeding-up convolutional neural networks using fine-tuned CP-decomposition. arXiv: 1412.6553, 2014. [40] Tai C, Xiao T, Zhang Y, Wang X G, E W N. Convolutional neural networks with low-rank regularization. arXiv: 1511.06067, 2015. [41] Zhang X Y, Zou J H, Ming X, He K M, Sun J. Efficient and accurate approximations of nonlinear convolutional networks. In: Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 1984-1992 [42] Kim Y D, Park E, Yoo S, Choi T, Yang L, Shin D. Compression of deep convolutional neural networks for fast and low power mobile applications. arXiv: 1511.06530, 2015. http://cn.bing.com/academic/profile?id=281aebb382c2e11ab8d73baaafadfbe5&encoded=0&v=paper_preview&mkt=zh-cn [43] Wang Y H, Xu C, Xu C, Tao D C. Beyond filters: compact feature map for portable deep model. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: JMLR.org, 2017. 3703-3711 [44] Astrid M, Lee S I. CP-decomposition with tensor power method for convolutional neural networks compression. In: Proceedings of 2017 IEEE International Conference on Big Data and Smart Computing. Jeju, South Korea: IEEE, 2017. 115-118 [45] Bucilu\v{a} C, Caruana R, Niculescu-Mizil A. Model compression. In: Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Philadelphia, USA: ACM, 2006. 535-541 [46] Ba J, Caruana R. Do deep nets really need to be deep? In: Proceedings of Advances in Neural Information Processing Systems. Montreal, Quebec, Canada: MIT Press, 2014. 2654-2662 [47] Hinton G, Vinyals O, Dean J. Distilling the knowledge in a neural network. arXiv: 1503.02531, 2015. [48] Romero A, Ballas N, Kahou S E, Chassang A, Gatta C, Bengio Y. Fitnets: hints for thin deep nets. arXiv: 1412.6550, 2014. [49] Luo P, Zhu Z Y, Liu Z W, Wang X G, Tang X O. Face model compression by distilling knowledge from neurons. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, Arizona, USA: AAAI, 2016. 3560-3566 [50] Chen T Q, Goodfellow I, Shlens J. Net2Net: accelerating learning via knowledge transfer. arXiv: 1511.05641, 2015. [51] Zagoruyko S, Komodakis N. Paying more attention to attention: improving the performance of convolutional neural networks via attention transfer. In: Proceedings of 2017 International Conference on Learning Representations. France: 2017. [52] Theis L, Korshunova I, Tejani A, Huszár F. Faster gaze prediction with dense networks and Fisher pruning. arXiv: 1801.05787, 2018. [53] Yim J, Joo D, Bae J, Kim J. A gift from knowledge distillation: fast optimization, network minimization and transfer learning. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. [54] Chen G B, Choi W, Yu X, Han T, Chandraker M. Learning efficient object detection models with knowledge distillation. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017. 742-751 [55] Lin M, Chen Q, Yan S C. Network in network. arXiv: 1312.4400, 2013. [56] Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. arXiv: 1502.03167, 2015. [57] Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 2818-2826 [58] Szegedy C, Ioffe S, Vanhoucke V, Alemi A A. Inception-v4, inception-resnet and the impact of residual connections on learning. In: Proceedings of the 31st AAAI Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2017. 12 [59] Chollet F. Xception: deep learning with depthwise separable convolutions. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. [60] Chang J R, Chen Y S. Batch-normalized maxout network in network. arXiv: 1511.02583, 2015. [61] Pang Y W, Sun M L, Jiang X H, Li X L. Convolution in convolution for network in network. IEEE transactions on neural networks and learning systems, 2018, 29(5): 1587-1597 doi: 10.1109/TNNLS.2017.2676130 [62] Han X M, Dai Q. Batch-normalized mlpconv-wise supervised pre-training network in network. Applied Intelligence, 2018, 48(1): 142-155 doi: 10.1007/s10489-017-0968-2 [63] Srivastava R K, Greff K, Schmidhuber J. Highway networks. arXiv: 1505.00387, 2015. [64] Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9(8): 1735-1780 doi: 10.1162/neco.1997.9.8.1735 [65] Larsson G, Maire M, Shakhnarovich G. Fractalnet: ultra-deep neural networks without residuals. arXiv: 1605.07648, 2016. [66] Huang G, Sun Y, Liu Z, Sedra D, Weinberger K Q. Deep networks with stochastic depth. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 646-661 [67] He K M, Zhang X Y, Ren S Q, Sun J. Identity mappings in deep residual networks. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 630-645 [68] Xie S N, Girshick R, Dollár P, Tu Z W, He K M. Aggregated residual transformations for deep neural networks. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. 5987-5995 [69] LeCun Y, Bengio Y, Hinton G. Deep learning. Nature, 2015, 521(7553): 436-444 doi: 10.1038/nature14539 [70] Zagoruyko S, Komodakis N. Wide residual networks. arXiv: 1605.07146, 2016. [71] Targ S, Almeida D, Lyman K. Resnet in resnet: generalizing residual architectures. arXiv: 1603.08029, 2016. [72] Zhang K, Sun M, Han T X, Yuan X F, Guo L R, Liu T. Residual networks of residual networks: multilevel residual networks. IEEE Transactions on Circuits and Systems for Video Technology, 2018, 28(6): 1303-1314 doi: 10.1109/TCSVT.2017.2654543 [73] Abdi M, Nahavandi S. Multi-residual networks: improving the speed and accuracy of residual networks. arXiv: 1609.05672, 2016. [74] Huang G, Liu Z, van der Maaten L, Weinberger K Q. Densely connected convolutional networks. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. [75] 张婷, 李玉鑑, 胡海鹤, 张亚红.基于跨连卷积神经网络的性别分类模型.自动化学报, 2016, 42(6): 858-865 doi: 10.16383/j.aas.2016.c150658Zhang Ting, Li Yu-Jian, Hu Hai-He, Zhang Ya-Hong. A gender classification model based on cross-connected convolutional neural networks. Acta Automatica Sinica, 2016, 42(6): 858-865 doi: 10.16383/j.aas.2016.c150658 [76] 李勇, 林小竹, 蒋梦莹.基于跨连接LeNet-5网络的面部表情识别.自动化学报, 2018, 44(1): 176-182 doi: 10.16383/j.aas.2018.c160835Li Yong, Lin Xiao-Zhu, Jiang Meng-Ying. Facial expression recognition with cross-connect LeNet-5 network. Acta Automatica Sinica, 2018, 44(1): 176-182 doi: 10.16383/j.aas.2018.c160835 [77] Howard A G, Zhu M L, Chen B, Kalenichenko D, Wang W J, Weyand T, et al. Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv: 1704.04861, 2017. [78] Sandler M, Howard A, Zhu M L, Zhmoginov A, Chen L C. MobileNetV2: inverted residuals and linear bottlenecks. In: Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 4510-4520 [79] Zhang X Y, Zhou X Y, Lin M X, Sun J. ShuffleNet: an extremely efficient convolutional neural network for mobile devices. In: Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. -

下载:

下载: