An Algorithm for Affordance Parts Detection of Household Tools Based on Joint Learning


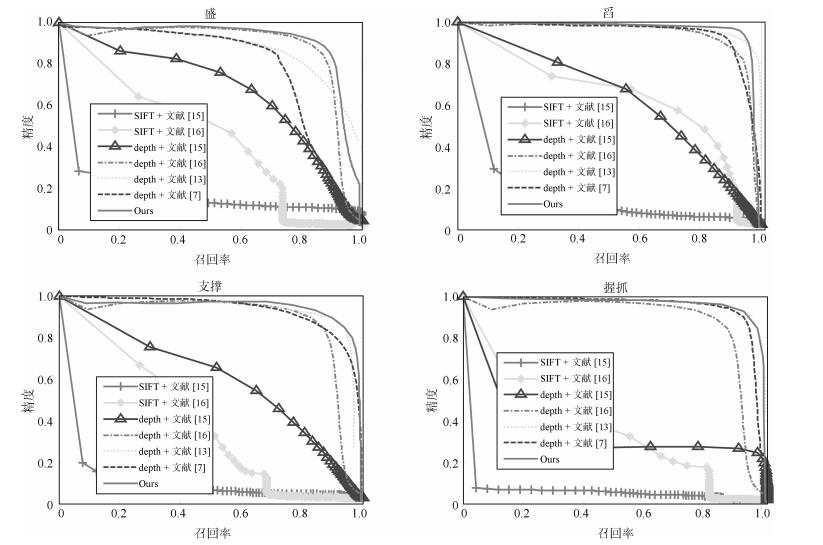

Abstract: Enabling coherent robots to recognize tools and their affordance parts is an important research direction for improving robot intelligence. To model and detect the affordance parts of household tools, this paper proposes a detection algorithm based on the joint learning of a conditional random field (CRF) and sparse coding. First, geometric features that characterize affordance parts are extracted from depth images of the tools. Second, the coupling between the CRF and sparse coding is analyzed and formulated: the sparse-coded features serve as latent variables in an initial CRF model, and the sparse dictionary and the CRF are then optimized jointly. On the one hand, the sparse representation of the features acts as the conditioning variable of the CRF and as the selector of its weight parameters; on the other hand, the sparse dictionary is updated under the regulation of the CRF. The model is then decoupled and solved with adaptive moment estimation (Adam). Finally, an offline algorithm for building the affordance-part model by joint learning and an online detection method based on that model are given. Experimental results show that, compared with traditional feature extraction and model construction methods, the proposed method improves both the accuracy and the efficiency of affordance-part detection, and that it meets the affordance cognition requirements of robots with ordinary hardware configurations.

1) Responsible associate editor for this paper: Hu Qing-Hua
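The abstract names Adam [24] as the solver for the decoupled model. As a rough illustration of that update rule only, the sketch below applies Adam to a toy two-variable quadratic in plain Python. The names `adam_minimize` and `toy_grad`, the toy objective, and the hyperparameter values are assumptions made for this example; it does not reproduce the paper's joint CRF and dictionary objective.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a function from its gradient using Adam updates (illustrative)."""
    x = list(x0)
    m = [0.0] * len(x)  # running mean of gradients (first moment)
    v = [0.0] * len(x)  # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction compensates for the zero initialization of m and v.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def toy_grad(x):
    """Gradient of the hypothetical objective f(x) = (x0 - 3)^2 + (x1 + 1)^2."""
    return [2.0 * (x[0] - 3.0), 2.0 * (x[1] + 1.0)]


solution = adam_minimize(toy_grad, [0.0, 0.0])
```

On this toy problem the iterate should approach the minimizer (3, -1). In a joint-learning setting such as the one described above, the same per-parameter update would be applied to whichever parameter group is currently being optimized, with the gradient supplied by the corresponding objective.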


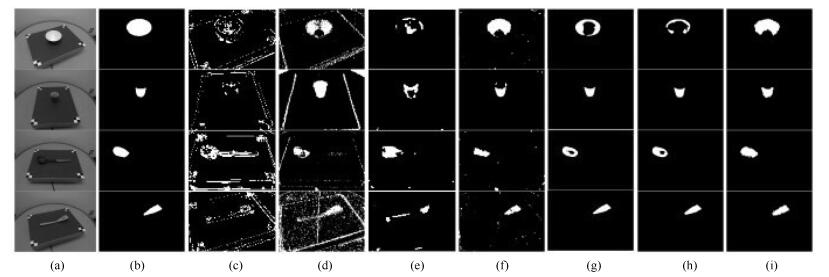

Fig. 4 Comparison of detection results between our method and other methods ((a) Tools to be detected in a single scene, from top to bottom: bowl, cup, ladle, and turner; (b) Ground truth of the target affordance parts, from top to bottom: contain, wrap-grasp, scoop, and support; (c) Detection result with SIFT + the method of [15]; (d) Detection result with depth features + the method of [15]; (e) Detection result with SIFT + the method of [16]; (f) Detection result with depth features + the method of [16]; (g) Detection result with depth features + the method of [7]; (h) Detection result with depth features + the method of [13]; (i) Detection result with our method)

Table 1 Comparison of efficiency between our method and other methods (s)


[1] Aly A, Griffiths S, Stramandinoli F. Towards intelligent social robots: current advances in cognitive robotics. Cognitive Systems Research, 2017, 43:153-156 doi: 10.1016/j.cogsys.2016.11.005

[2] Min H Q, Yi C A, Luo R H, Zhu J H, Bi S. Affordance research in developmental robotics: a survey. IEEE Transactions on Cognitive and Developmental Systems, 2016, 8(4):237-255 https://ieeexplore.ieee.org/document/7582380

[3] Lenz I, Lee H, Saxena A. Deep learning for detecting robotic grasps. The International Journal of Robotics Research, 2015, 34(4-5):705-724 doi: 10.1177/0278364914549607

[4] Kjellström H, Romero J, Kragić D. Visual object-action recognition: inferring object affordances from human demonstration. Computer Vision and Image Understanding, 2011, 115(1):81-90 http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0220084270/

[5] Grabner H, Gall J, Van Gool L. What makes a chair a chair? In: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition. Providence, RI: IEEE, 2011. 1529-1536

[6] Koppula H S, Gupta R, Saxena A. Learning human activities and object affordances from RGB-D videos. The International Journal of Robotics Research, 2013, 32(8):951-970 doi: 10.1177/0278364913478446

[7] Myers A, Teo C L, Fermüller C, Aloimonos Y. Affordance detection of tool parts from geometric features. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation. Seattle, WA: IEEE, 2015. 1374-1381

[8] Lin Yu-Dong, He Hong-Jie, Chen Fan, Yin Zhong-Ke. A rigid object detection model based on geometric sparse representation of profile and its hierarchical detection algorithm. Acta Automatica Sinica, 2015, 41(4):843-853 (in Chinese) http://www.aas.net.cn/CN/abstract/abstract18658.shtml

[9] Redmon J, Angelova A. Real-time grasp detection using convolutional neural networks. In: Proceedings of the 2015 IEEE International Conference on Robotics and Automation. Seattle, WA: IEEE, 2015. 1316-1322

[10] Zhong Xun-Gao, Xu Min, Zhong Xun-Yu, Peng Xia-Fu. Multimodal features deep learning for robotic potential grasp recognition. Acta Automatica Sinica, 2016, 42(7):1022-1029 (in Chinese) http://www.aas.net.cn/CN/abstract/abstract18893.shtml

[11] Myers A O. From form to function: detecting the affordance of tool parts using geometric features and material cues [Ph.D. dissertation], University of Maryland, 2016

[12] Nguyen A, Kanoulas D, Caldwell D G, Tsagarakis N G. Detecting object affordances with Convolutional Neural Networks. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems. Daejeon: IEEE, 2016. 2765-2770

[13] Wu Pei-Liang, Fu Wei-Xing, Kong Ling-Fu. A fast algorithm for affordance detection of household tool parts based on structured random forest. Acta Optica Sinica, 2017, 37(2):0215001 (in Chinese) http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=gxxb201702020

[14] Thogersen M, Escalera S, González J, Moeslund T B. Segmentation of RGB-D indoor scenes by stacking random forests and conditional random fields. Pattern Recognition Letters, 2016, 80:208-215 doi: 10.1016/j.patrec.2016.06.024

[15] Bao C L, Ji H, Quan Y H, Shen Z W. Dictionary learning for sparse coding: algorithms and convergence analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(7):1356-1369 doi: 10.1109/TPAMI.2015.2487966

[16] Yang J M, Yang M H. Top-down visual saliency via joint CRF and dictionary learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(3):576-588 doi: 10.1109/TPAMI.2016.2547384

[17] Yang E, Gwak J, Jeon M. Conditional random field (CRF)-boosting: constructing a robust online hybrid boosting multiple object tracker facilitated by CRF learning. Sensors, 2017, 17(3):617 doi: 10.3390/s17030617

[18] Liu T, Huang X T, Ma J S. Conditional random fields for image labeling. Mathematical Problems in Engineering, 2016, 2016: Article ID 3846125

[19] Lv P Y, Zhong Y F, Zhao J, Jiao H Z, Zhang L P. Change detection based on a multifeature probabilistic ensemble conditional random field model for high spatial resolution remote sensing imagery. IEEE Geoscience & Remote Sensing Letters, 2016, 13(12):1965-1969 https://ieeexplore.ieee.org/document/7731208

[20] Qian Sheng, Chen Zong-Hai, Lin Ming-Qiang, Zhang Chen-Bin. Saliency detection based on conditional random field and image segmentation. Acta Automatica Sinica, 2015, 41(4):711-724 (in Chinese) http://www.aas.net.cn/CN/abstract/abstract18647.shtml

[21] Wang Z, Zhu S Q, Li Y H, Cui Z Z. Convolutional neural network based deep conditional random fields for stereo matching. Journal of Visual Communication & Image Representation, 2016, 40:739-750 http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=c784f7eb1578e1bfa06238c4fb50b4ea

[22] Szummer M, Kohli P, Hoiem D. Learning CRFs using graph cuts. In: Proceedings of European Conference on Computer Vision, Lecture Notes in Computer Science, vol.5303. Berlin, Heidelberg: Springer, 2008. 582-595

[23] Kolmogorov V, Zabih R. What energy functions can be minimized via graph cuts? IEEE Transactions on Pattern Analysis & Machine Intelligence, 2004, 26(2):147-159 http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0214863408/

[24] Kingma D P, Ba J. Adam: a method for stochastic optimization. In: Proceedings of the 3rd International Conference on Learning Representations. San Diego, 2015.

[25] Mairal J, Bach F, Ponce J. Task-driven dictionary learning. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2012, 34(4):791-804 http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0225672733/
