-

摘要: 顺序回归是一种标签具有序信息的多分类问题,广泛存在于信息检索、推荐系统、情感分析等领域.随着互联网、移动通信等技术的发展,面对大量具有大规模、高维、稀疏等特征的数据,传统的顺序回归算法往往表现不足.非平行支持向量顺序回归模型具有适应性强,在性能上优于其他基于SVM的方法等优点,该文在此模型基础上提出基于L2损失的大规模线性非平行支持向量顺序回归模型,其中线性模型的设计可处理大规模数据,基于L2的损失可使标签偏离较大的样本得到更大惩罚.此外,该文从模型的两种不同角度分别设计了信赖域牛顿算法和坐标下降算法求解该线性模型,并比较了两种算法在性能上的差异.为验证模型的有效性,该文在大量数据集上对提出的模型及算法进行了分析,结果表明,该文提出的模型表现最优,尤其采用坐标下降算法求解的该模型在数据集上获得了最好的测试性能.Abstract: Ordinal regression, where the labels of the samples exhibit a natural ordering, is a kind of multi-classification problem. It has found wide applications in information retrieval, recommendation systems, and sentiment analysis. With the development of internet and mobile communication technology, traditional ordinal regression models often underperform when facing numerous large scale, high dimensional and sparse data. However, the nonparallel support vector ordinal regression model shows its advantages with strong adaptability and better performance compared with other SVM-based models. Based on this model, this paper presents a new L2-loss linear nonparallel support vector ordinal regression model, whose linear model could deal with large-scale problems and whose L2-loss could give a great punishment to the sample that deviates from the true label. Besides, two algorithms:trust region Newton method and the dual coordinate descent method (DCD) are developed in terms of different perspectives of the model and their performances are compared. To verify the effectiveness of the model, experiments are conducted on numerous datasets and the results show that the proposed model, especially the model with the DCD algorithm can achieve the state-of-art performance.

-

Key words:

- Ordinal regression /

- SVM /

- trust region Newton method /

- dual coordinate descent method

1) 本文责任编委 何海波 -

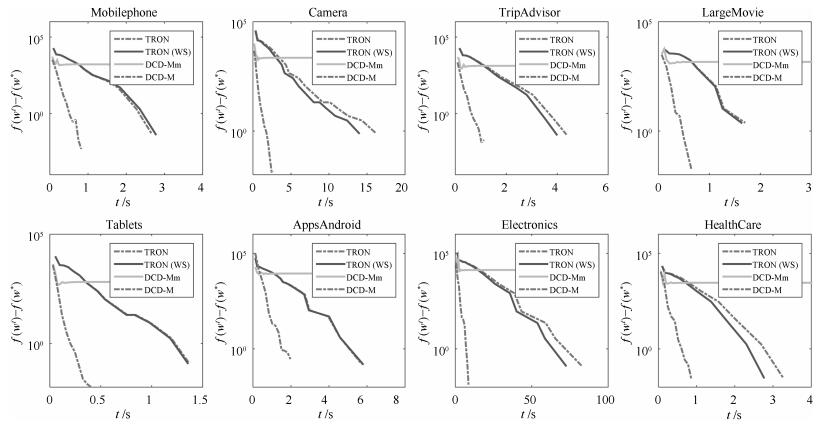

图 2 TRON, TRON (WS), DCD-M and DCD-Mm在8个数据集上的比较(这里展示了类别3对应的优化问题).横坐标是时间$t$, 纵坐标为L2-NPSVOR原问题目标函数的$f(\boldsymbol{w}^t)-f(\boldsymbol{w}^*)$的值

Fig. 2 Comparison of TRON, TRON (WS), DCD-M and DCD-Mm on eight datasets (Show the optimization model for rank 3). The horizontal axis is training time in seconds and the vertical axis is the difference between $f(\boldsymbol{w}^t)$ and $f(\boldsymbol{w}^*$)

表 1 数据集特征描述

Table 1 Data statistics

数据集 样本($n$) 特征($m$) 非零元素个数 类别 类别分布 AmazonMp3 10 391 65 487 1 004 435 5 ≈ 2 078 VideoSurveillance 22 281 119 793 1 754 092 5 ≈ 4 456 Tablets 35 166 201 061 3 095 663 5 ≈ 7 033 Mobilephone 69 891 265 432 5 041 894 5 ≈ 13 978 Cameras 138 011 654 268 14 308 676 5 ≈ 27 602 TripAdvisor 65 326 404 778 8 687 561 5 ≈ 13 065 Treebank 11 856 8 569 98 883 5 ≈ 2 371 MovieReview 5 007 55 020 961 379 4 ≈ 1 251 LargeMovie 50 000 309 362 6 588 192 8 ≈ 6 250 Electronics 409 041 1 422 181 37 303 259 5 ≈ 81 808 HealthCare 82 251 283 542 5 201 794 5 ≈ 16 450 AppsAndroid 220 566 253 932 6 602 522 5 ≈ 44 113 HomeKitchen 120 856 427 558 8 473 465 5 ≈ 24 171 表 2 方法在各数据集上测试结果, 包括MAE、MSE和最优参数下的训练时间(s)

Table 2 Test results for each dataset and method, including MAE, MSE and training time (s)

数据集 指标 L1-SVC L2-SVC SVR RedSVM NPSVOR L2-NPSVOR (TRON) L2-NPSVOR (DCD) AmazonMp3 MAE 0.564 0.557 0.534 0.535 0.488 0.481 0.481 MSE 0.996 0.987 0.732 0.735 0.699 0.670 0.683 TIME 0.209 0.660 0.031 0.186 0.165 0.830 0.144 VideoSurveillance MAE 0.404 0.391 0.426 0.446 0.376 0.371 0.372 MSE 0.709 0.668 0.578 0.592 0.511 0.493 0.491 TIME 0.433 1.708 0.087 0.551 0.492 1.996 0.402 Tablets MAE 0.306 0.299 0.334 0.346 0.280 0.278 0.278 MSE 0.514 0.496 0.444 0.444 0.373 0.362 0.363 TIME 0.821 3.400 0.198 1.029 0.948 2.958 0.674 Mobilephone MAE 0.431 0.419 0.450 0.444 0.391 0.388 0.385 MSE 0.736 0.705 0.604 0.587 0.536 0.524 0.522 TIME 1.811 7.574 0.353 1.909 2.330 6.724 1.605 Cameras MAE 0.246 0.240 0.273 0.301 0.227 0.232 0.226 MSE 0.394 0.381 0.357 0.375 0.296 0.299 0.298 TIME 9.552 34.480 1.401 6.016 6.341 30.132 5.388 TripAdvisor MAE 0.398 0.388 0.433 0.429 0.365 0.365 0.366 MSE 0.611 0.583 0.539 0.523 0.445 0.449 0.452 TIME 2.331 12.778 0.807 2.110 2.857 9.238 3.505 Treebank MAE 0.907 0.841 0.784 0.752 0.763 0.806 0.756 MSE 1.652 1.455 1.116 1.049 1.126 1.229 1.068 TIME 0.025 0.040 0.004 0.015 0.026 0.035 0.024 MovieReview MAE 0.501 0.490 0.448 0.447 0.432 0.436 0.431 MSE 0.615 0.582 0.486 0.485 0.476 0.476 0.475 TIME 0.121 0.429 0.029 0.133 0.130 0.373 0.125 LargeMovie MAE 1.205 1.176 1.182 1.093 0.992 1.008 1.002 MSE 3.617 3.502 2.469 2.225 2.046 2.075 2.020 TIME 3.311 10.416 0.328 1.965 2.569 7.523 2.493 Electronics MAE 0.592 0.590 0.606 0.620 0.529 0.526 0.520 MSE 1.069 1.050 0.840 0.848 0.747 0.736 0.731 TIME 22.316 168.141 4.878 10.736 23.075 116.586 18.062 HealthCare MAE 0.637 0.621 0.660 0.681 0.591 0.590 0.589 MSE 1.338 1.282 1.004 1.062 0.945 0.920 0.929 TIME 2.098 7.429 0.439 2.686 2.954 6.365 2.673 AppsAndroid MAE 0.640 0.616 0.656 0.659 0.584 0.590 0.584 MSE 1.179 1.106 0.922 0.920 0.844 0.872 0.859 TIME 4.043 14.924 0.634 1.603 4.574 11.423 6.290 HomeKitchen MAE 0.585 0.574 0.597 0.609 0.519 0.519 0.510 MSE 1.050 1.015 0.829 0.842 0.745 0.723 0.720 TIME 5.587 19.393 0.896 1.786 5.171 19.560 4.475 平均排序 MAE 5.64 4.57 5.64 5.86 2.50 2.21 1.57 MSE 7.00 6.00 4.36 4.29 2.36 2.29 1.64 TIME 3.57 6.79 1.00 3.29 4.07 6.21 3.07 -

[1] Nakov P, Ritter A, Rosenthal S, Sebastiani F, Stoyanov V. SemEval-2016 task 4:sentiment analysis in Twitter. In:Proceedings of the 10th International Workshop on Semantic Evaluation. San Diego, CA, USA:ACL, 2016. 1-18 [2] Dikkers H, Rothkrantz L. Support vector machines in ordinal classification:An application to corporate credit scoring. Neural Network World, 2005, 15(6):491 http://cn.bing.com/academic/profile?id=24de1d6567f75a19b2e740ee74907f6e&encoded=0&v=paper_preview&mkt=zh-cn [3] Chang K Y, Chen C S, Hung Y P. Ordinal hyperplanes ranker with cost sensitivities for age estimation. In:Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, RI, USA:IEEE, 2011. 585-592 https://www.researchgate.net/publication/224254798_Ordinal_hyperplanes_ranker_with_cost_sensitivities_for_age_estimation [4] Gutiérrez P A, Pérez-Ortiz M, Sánchez-Monedero J, Fernández-Navarro F, Hervás-Martínez C. Ordinal regression methods:survey and experimental study. IEEE Transactions on Knowledge and Data Engineering, 2016, 28(1):127-146 doi: 10.1109/TKDE.2015.2457911 [5] 张学工.关于统计学习理论与支持向量机.自动化学报, 2000, 26(1):32-42 doi: 10.3969/j.issn.1003-8930.2000.01.008Zhang Xue-Gong. Introduction to statistical learning theory and support vector machines. Acta Automatica Sinica, 2000, 26(1):32-42 doi: 10.3969/j.issn.1003-8930.2000.01.008 [6] Chu W, Keerthi S S. Support vector ordinal regression. Neural Computation, 2007, 19(3):792-815 doi: 10.1162/neco.2007.19.3.792 [7] Lin H T, Li L. Reduction from cost-sensitive ordinal ranking to weighted binary classification. Neural Computation, 2012, 24(5):1329-1367 doi: 10.1162/NECO_a_00265 [8] Pérez-Ortiz M, Gutiérrez P A, Hervás-Martínez C. Projection-based ensemble learning for ordinal regression. IEEE Transactions on Cybernetics, 2014, 44(5):681-694 doi: 10.1109/TCYB.2013.2266336 [9] Chang K W, Hsieh C J, Lin C J. Coordinate descent method for large-scale L2-loss linear support vector machines. The Journal of Machine Learning Research, 2008, 9:1369-1398 http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=CC0210084282 [10] Wang H D, Shi Y, Niu L F, Tian Y J. Nonparallel support vector ordinal regression. IEEE Transactions on Cybernetics, 2017, 47(10):3306-3317 doi: 10.1109/TCYB.2017.2682852 [11] Hsieh C J, Chang K W, Lin C J, Keerthi S S, Sundararajan S. A dual coordinate descent method for large-scale linear SVM. In:Proceedings of the 25th International Conference on Machine Learning. New York, USA:ACM, 2008. 408-415 https://www.researchgate.net/publication/215601307_A_Dual_Coordinate_Descent_Method_for_Large-scale_Linear_SVM [12] Ho C H, Lin C J. Large-scale linear support vector regression. The Journal of Machine Learning Research, 2012, 13(1):3323-3348 http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0231088023/ [13] Lin C J, Moré J J. Newton's method for large bound-constrained optimization problems. SIAM Journal on Optimization, 1999, 9(4):1100-1127 doi: 10.1137/S1052623498345075 [14] Lin C J, Weng R C, Keerthi S S. Trust region newton method for logistic regression. The Journal of Machine Learning Research, 2008, 9:627-650 http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=CC024921794 [15] Hsia C Y, Zhu Y, Lin C J. A study on trust region update rules in newton methods for large-scale linear classification. In:Proceedings of the 9th Asian Conference on Machine Learning (ACML). Seoul, South Korea:ACML, 2017 [16] Chiang W L, Lee M C, Lin C J. Parallel Dual coordinate descent method for large-scale linear classification in multi-core environments. In:Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Francisco, USA:ACM, 2016. 1485-1494 https://www.researchgate.net/publication/310825079_Parallel_Dual_Coordinate_Descent_Method_for_Large-scale_Linear_Classification_in_Multi-core_Environments [17] Yuan G X, Chang K W, Hsieh C J, Lin C J. A comparison of optimization methods and software for large-scale l1-regularized linear classification. The Journal of Machine Learning Research, 2010, 11:3183-3234 http://cn.bing.com/academic/profile?id=37aed315925f78d33806d3741e753dbb&encoded=0&v=paper_preview&mkt=zh-cn [18] Tseng P, Yun S. A coordinate gradient descent method for nonsmooth separable minimization. Mathematical Programming, 2009, 117(1-2):387-423 doi: 10.1007/s10107-007-0170-0 [19] Joachims T. Making Large-scale SVM Learning Practical, Technical Report, SFB 475:Komplexitätsreduktion in Multivariaten Datenstrukturen, Universität Dortmund, Germany, 1998 [20] Wang H N, Lu Y, Zhai C X. Latent aspect rating analysis on review text data:a rating regression approach. In:Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Washington, DC, USA:ACM, 2010. 783-792 https://www.researchgate.net/publication/221653225_Latent_Aspect_Rating_Analysis_on_Review_Text_Data_A_Rating_Regression_Approach [21] Pang B, Lee L. Seeing stars:exploiting class relationships for sentiment categorization with respect to rating scales. In:Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics. Association for Computational Linguistics. Ann Arbor, USA:ACL, 2005. 115-124 [22] McAuley J, Targett C, Shi Q F, van den Hengel A. Image-based recommendations on styles and substitutes. In:Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval. Santiago, Chile:ACM, 2015. 43-52 https://www.researchgate.net/publication/278734421_Image-Based_Recommendations_on_Styles_and_Substitutes [23] McAuley J, Pandey R, Leskovec J. Inferring networks of substitutable and complementary products. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Sydney, Australia:ACM, 2015. 785-794 https://dl.acm.org/citation.cfm?id=2783381 [24] Tang D Y, Qin B, Liu T. Document modeling with gated recurrent neural network for sentiment classification. In:Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal:ACL, 2015. 1422-1432 [25] Diao Q M, Qiu M H, Wu C Y, Smola A J, Jiang J, Wang C. Jointly modeling aspects, ratings and sentiments for movie recommendation (JMARS). In:Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York, USA:ACM, 2014. 193-202 -

下载:

下载: