
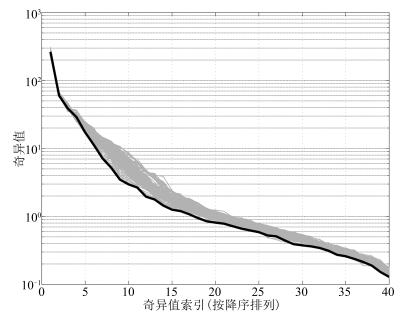

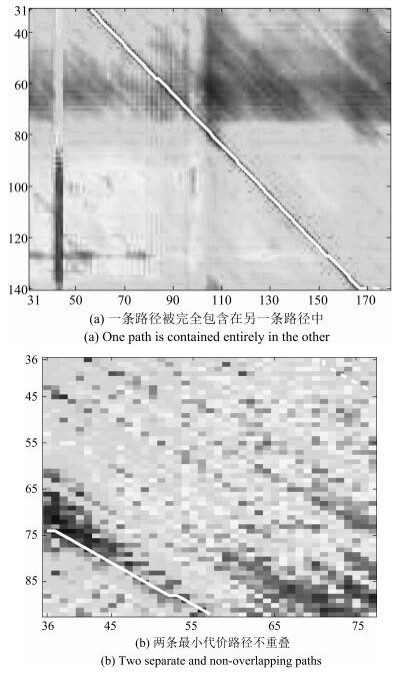

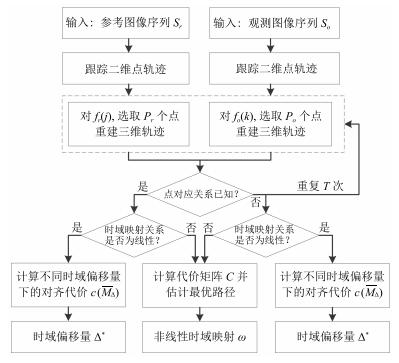

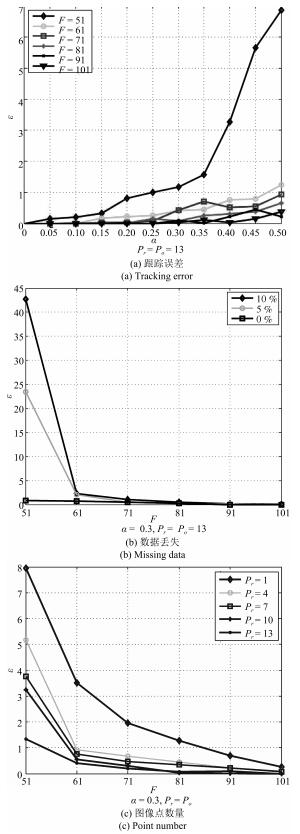

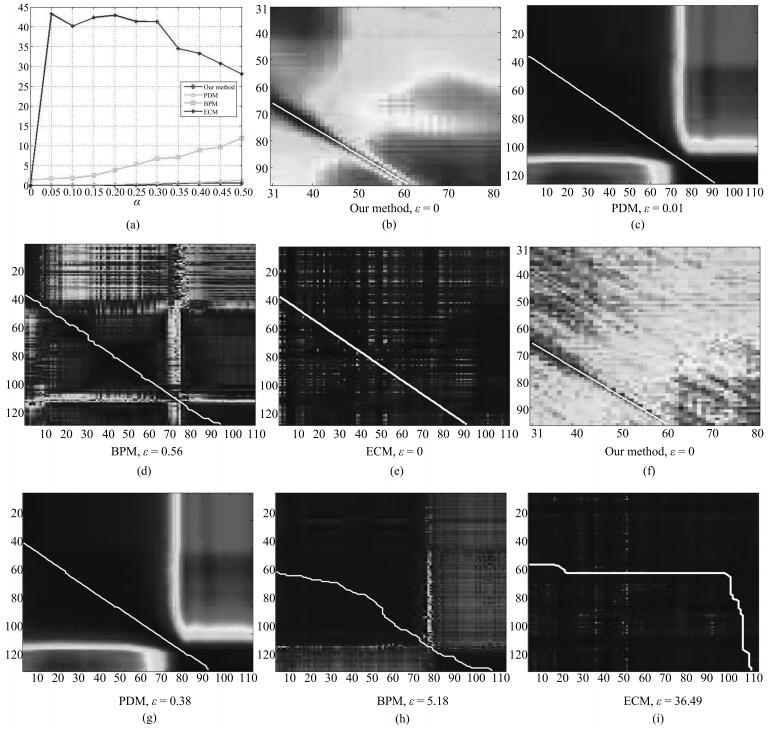

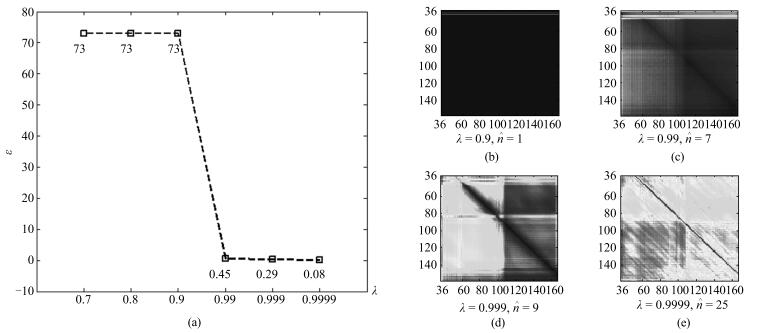

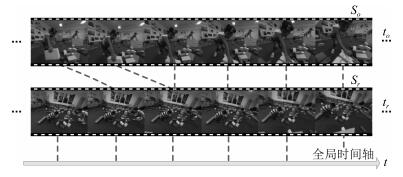

Abstract: We present a temporal synchronization algorithm for an arbitrary number of videos, based on reconstructing the 3D trajectories of moving points. The videos are captured simultaneously in the same dynamic scene by different, independently moving cameras, and no restrictive assumptions are made about the scene or the camera motion. Assuming the camera projection matrix of every frame is known, we first reconstruct the 3D trajectory of each moving point using a discrete cosine transform (DCT) trajectory basis. The trajectory coefficients are computed for each sequence separately. We then propose a robust rank constraint on the trajectory-basis coefficient matrices to measure the spatio-temporal alignment quality of every feasible pair of fragments from different sequences. Finally, we build a cost matrix from these measures and find the optimal nonlinear temporal mapping between the videos with a graph-based approach. Point correspondences across sequences are not required. The points tracked in different videos may even correspond to different 3D points, as long as the 3D position of every point tracked in the observed sequence can be written as a linear combination of a subset of the 3D points tracked in the reference sequence, and this linear relationship stays fixed over time. Unlike most existing methods, which require feature points to be tracked through the entire sequence, our method can use both short and long point trajectories, and it is robust to mild outliers. We verify the robustness and performance of the proposed approach on synthetic data as well as on challenging real video sequences.

1) Recommended by Associate Editor HUANG Qing-Ming
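The first step of the method, recovering a moving point's 3D trajectory from its 2D projections with a DCT trajectory basis when every frame's projection matrix is known, reduces to a linear least-squares problem. The following NumPy sketch shows one way to set it up. The function names `dct_basis` and `reconstruct_trajectory`, the orthonormal DCT-II normalization, and the plain unweighted least-squares solve are assumptions for illustration, not the paper's implementation. In practice the system is only well conditioned when the cameras move enough relative to the point, and `num_basis` (K) must be small enough that the 2F image equations over-determine the 3K unknowns.

```python
import numpy as np


def dct_basis(num_frames: int, num_basis: int) -> np.ndarray:
    """Orthonormal DCT-II trajectory basis of shape (num_frames, num_basis)."""
    t = np.arange(num_frames)[:, None]
    k = np.arange(num_basis)[None, :]
    theta = np.sqrt(2.0 / num_frames) * np.cos(np.pi * (2 * t + 1) * k / (2 * num_frames))
    theta[:, 0] = 1.0 / np.sqrt(num_frames)  # constant (DC) basis vector
    return theta


def reconstruct_trajectory(cams: np.ndarray, pts2d: np.ndarray, num_basis: int):
    """Least-squares DCT coefficients of one moving 3D point (hypothetical helper).

    cams:  (F, 3, 4) known camera projection matrices, one per frame.
    pts2d: (F, 2) tracked image coordinates (u, v) of the point.
    Returns (coeffs, traj): coeffs is (num_basis, 3), traj = theta @ coeffs is (F, 3).
    """
    num_frames = len(cams)
    theta = dct_basis(num_frames, num_basis)
    rows, rhs = [], []
    for t in range(num_frames):
        P = cams[t]
        u, v = pts2d[t]
        # u = (P[0] . Xh) / (P[2] . Xh) gives (u * P[2] - P[0]) . Xh = 0; likewise for v.
        for r in (u * P[2] - P[0], v * P[2] - P[1]):
            # With X_t = theta[t] @ coeffs, r[:3] . X_t is linear in the
            # flattened coefficients, with row kron(theta[t], r[:3]).
            rows.append(np.kron(theta[t], r[:3]))
            rhs.append(-r[3])
    solution, *_ = np.linalg.lstsq(np.asarray(rows), np.asarray(rhs), rcond=None)
    coeffs = solution.reshape(num_basis, 3)
    return coeffs, theta @ coeffs
```

Because each sequence (or fragment) is fitted on its own, the returned `coeffs` can be computed without any cross-sequence point correspondences, matching the abstract's statement that the coefficients are computed for each sequence separately.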
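The abstract's alignment measure and nonlinear mapping can be illustrated under one plausible reading. If each observed point is a fixed linear combination of reference points, then, because the least-squares DCT coefficients are a linear function of the trajectory, its coefficient vector is the same linear combination of the reference coefficient vectors. So for correctly aligned, equal-length fragments the observed coefficients lie in the row space of the reference coefficient matrix, and stacking the two matrices does not raise the rank. The sketch below scores a fragment pair by the relative energy of the observed coefficients outside the top-`rank` row space of the reference coefficients. It then extracts a monotone minimum-cost path from a cost matrix with dynamic programming, a DTW-style shortest path on the grid graph. The helpers `rank_residual` and `monotone_path`, the SVD-based residual, and the three allowed step directions are hypothetical choices; the paper's robust rank measure and its graph construction may differ.

```python
import numpy as np


def rank_residual(ref_coeffs: np.ndarray, obs_coeffs: np.ndarray, rank: int) -> float:
    """Relative energy of obs_coeffs outside the top-`rank` row space of ref_coeffs.

    ref_coeffs: (N_ref, D), one flattened DCT coefficient vector per reference point.
    obs_coeffs: (N_obs, D), same basis size, from an equal-length observed fragment.
    Returns a value near 0 when every observed row is a linear combination of
    reference rows (i.e. stacking the matrices does not raise the rank).
    """
    _, _, vt = np.linalg.svd(ref_coeffs, full_matrices=False)
    basis = vt[:rank]  # orthonormal rows spanning the reference row space
    projected = obs_coeffs @ basis.T @ basis
    return float(np.linalg.norm(obs_coeffs - projected) / np.linalg.norm(obs_coeffs))


def monotone_path(cost: np.ndarray) -> list[tuple[int, int]]:
    """Minimum-cost monotone path from (0, 0) to (n-1, m-1) through a cost matrix.

    Each step moves to (i+1, j+1), (i+1, j) or (i, j+1), so the recovered
    mapping between reference and observed fragments never goes backwards in time.
    """
    n, m = cost.shape
    acc = np.full((n, m), np.inf)  # accumulated cost of the best path ending at (i, j)
    acc[0, 0] = cost[0, 0]
    for i in range(n):
        for j in range(m):
            if i == 0 and j == 0:
                continue
            best = np.inf
            if i > 0 and j > 0:
                best = min(best, acc[i - 1, j - 1])
            if i > 0:
                best = min(best, acc[i - 1, j])
            if j > 0:
                best = min(best, acc[i, j - 1])
            acc[i, j] = cost[i, j] + best
    # Backtrack from the end cell, always stepping to the cheapest predecessor.
    i, j = n - 1, m - 1
    path = [(i, j)]
    while (i, j) != (0, 0):
        preds = []
        if i > 0 and j > 0:
            preds.append((acc[i - 1, j - 1], i - 1, j - 1))
        if i > 0:
            preds.append((acc[i - 1, j], i - 1, j))
        if j > 0:
            preds.append((acc[i, j - 1], i, j - 1))
        _, i, j = min(preds)
        path.append((i, j))
    return path[::-1]
```

In this sketch, the cost matrix passed to `monotone_path` could hold `rank_residual` for every feasible pair of reference and observed fragments. Handling more than two videos, subframe offsets and outlier trajectories is deliberately left out.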


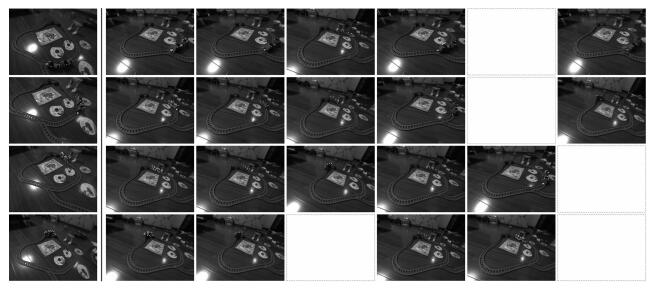

Fig. 9 Synchronization results on the blocks scene (from left to right: sample frames from the reference sequence, followed by the corresponding frames in the second sequence (top) and the third sequence (bottom) found by our method, PDM, BPM, ECM, MFM and SMM, respectively)

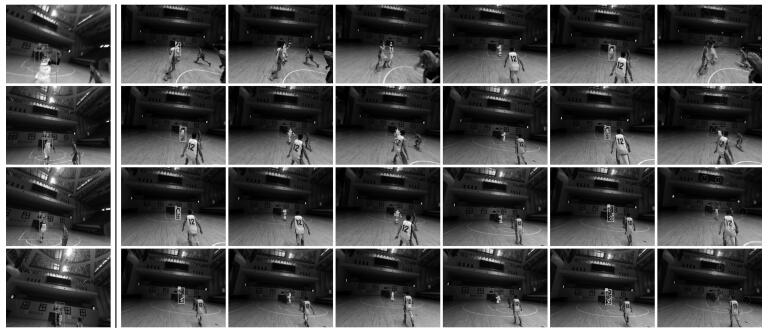

Fig. 11 Synchronization results on the basketball scene (#1) (from left to right: sample frames from the reference sequence, followed by the corresponding frames in the second sequence found by our method, PDM, BPM, ECM, MFM and SMM, respectively)

Table 1 Quantitative comparison of the normalized temporal alignment error of each algorithm on the real scenes (frames)

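| Method (input trajectories) | Blocks #1 | Blocks #2 | Exercise mat #1 | Exercise mat #2 | Basketball #1 | Basketball #2 | Toy train |
|---|---|---|---|---|---|---|---|
| BPM (manually labeled) | 39.61 | 9.16 | 12.05 | 15.63 | 16.81 | 12.42 | 56.80 |
| ECM (manually labeled) | 25.15 | 32.37 | 57.48 | 62.60 | 50.44 | 29.83 | 24.86 |
| MFM (automatically tracked) | 11.81 | 21.70 | 22.17 | 9.44 | 17.68 | 22.78 | 70.04 |
| SMM (SIFT) | 155.75 | 196.56 | 132.08 | 202.50 | 9.71 | 31.74 | 130.83 |
| PDM (manually labeled) | 0.85 | 2.53 | 2.96 | 4.60 | 4.29 | 1.49 | 1.28 |
| Ours (manually labeled) | 0.45 | 1.27 | 2.52 | 2.76 | 3.07 | 1.12 | 1.33 |
| Ours (automatically tracked) | 0.52 | 1.74 | 1.35 | 1.48 | 2.84 | 1.54 | 3.18 |
| Ours (manually labeled + automatically tracked) | 0.56 | 1.40 | 2.07 | 1.99 | 3.75 | 0.92 | 2.01 |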

