
Abstract: In March 2016, Google DeepMind's Go program AlphaGo beat world Go champion Lee Sedol 4:1 in a historic five-game match. The result closed the gap between Go AI and top human professionals far sooner than many had predicted: closing it had been expected to take roughly another 10 to 30 years. In this paper, based on published results [Silver et al., 2016], I analyze the components of AlphaGo at a technical level and, based on its public games, predict its potential technical weaknesses.


Key words:

- Deep learning
- Deep convolutional neural network
- Computer Go
- Reinforcement learning
- AlphaGo


Table 1 Input features used by AlphaGo's rollout and tree policies

| Set | Feature | No. of patterns | Description |
|---|---|---|---|
| 1 | Response | 1 | Whether move matches one or more response pattern features |
| 1 | Save atari | 1 | Move saves stone(s) from capture |
| 1 | Neighbour | 8 | Move is 8-connected to previous move |
| 1 | Nakade | 8,192 | Move matches a nakade pattern at captured stone |
| 1 | Response pattern | 32,207 | Move matches 12-point diamond pattern near previous move |
| 1 | Non-response pattern | 69,338 | Move matches 3 × 3 pattern around move |
| 2 | Self-atari | 1 | Move allows stones to be captured |
| 2 | Last move distance | 34 | Manhattan distance to previous two moves |
| 2 | Non-response pattern | 32,207 | Move matches 12-point diamond pattern centred around move |

Features used by the rollout policy (the first set) and the tree policy (the first and second sets). Patterns are based on stone colour (black/white/empty) and liberties (1, 2, ≥3) at each intersection of the pattern.
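The pattern features in Table 1 are keyed on the colour (black/white/empty) and liberty count (1, 2, ≥3) of each point in a local template. The Python sketch below shows one way to build such keys for the 3 × 3 square and for the 12-point diamond (every point within Manhattan distance 2 of the centre, excluding the centre itself: 4 + 8 = 12). The board encoding, the `OFF` code for off-board points, and all function names are hypothetical; the source does not describe AlphaGo's actual pattern encoding or how it treats symmetric patterns.

```python
from typing import List, Sequence, Tuple

# Hypothetical encoding (an assumption, not AlphaGo's): board[r][c] holds one of these codes.
EMPTY, BLACK, WHITE, OFF = 0, 1, 2, 3  # OFF marks points beyond the board edge

Board = List[List[int]]
PointCode = Tuple[int, int]  # (colour, liberty bucket)


def liberties(board: Board, r: int, c: int) -> int:
    """Count the distinct liberties of the chain containing the stone at (r, c)."""
    n = len(board)
    colour = board[r][c]
    stack = [(r, c)]
    seen = {(r, c)}
    libs = set()
    while stack:  # flood fill over orthogonally connected stones of the same colour
        y, x = stack.pop()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < n and 0 <= nx < n):
                continue
            if board[ny][nx] == EMPTY:
                libs.add((ny, nx))
            elif board[ny][nx] == colour and (ny, nx) not in seen:
                seen.add((ny, nx))
                stack.append((ny, nx))
    return len(libs)


def point_code(board: Board, r: int, c: int) -> PointCode:
    """Encode one intersection as (colour, liberties bucketed to 1, 2 or >=3)."""
    n = len(board)
    if not (0 <= r < n and 0 <= c < n):
        return (OFF, 0)
    colour = board[r][c]
    if colour == EMPTY:
        return (EMPTY, 0)
    return (colour, min(liberties(board, r, c), 3))  # bucket 3 stands for ">= 3"


# 3 x 3 square around the candidate move, centre excluded (the centre is always empty).
SQUARE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]

# 12-point diamond: every point at Manhattan distance 1 or 2 from the centre (4 + 8 points).
DIAMOND_12 = [(dy, dx) for dy in range(-2, 3) for dx in range(-2, 3)
              if 1 <= abs(dy) + abs(dx) <= 2]


def pattern_key(board: Board, r: int, c: int,
                offsets: Sequence[Tuple[int, int]]) -> Tuple[PointCode, ...]:
    """Hashable key describing the neighbourhood of (r, c), usable in a pattern lookup table."""
    return tuple(point_code(board, r + dy, c + dx) for dy, dx in offsets)


def manhattan(a: Tuple[int, int], b: Tuple[int, int]) -> int:
    """Manhattan distance, as used by the 'Last move distance' feature."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])
```

In Silver et al. [2016] the rollout policy is a linear softmax over features of this kind, so a key like `pattern_key(board, r, c, SQUARE_3X3)` would index a learned weight; the recomputation of liberties by flood fill here is for clarity only, whereas a fast rollout would maintain such features incrementally.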

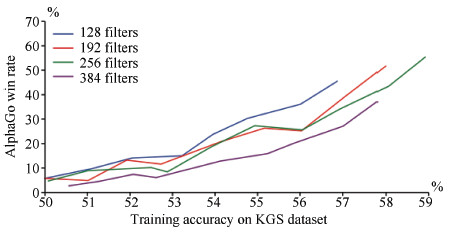

Table 2 Results of a tournament between different variants of AlphaGo

| Short name | Policy network | Value network | Rollouts | Mixing constant | Policy GPUs | Value GPUs | Elo rating |
|---|---|---|---|---|---|---|---|
| αrvp | pσ | vθ | pπ | λ = 0.5 | 2 | 6 | 2890 |
| αvp | pσ | vθ | - | λ = 0 | 2 | 6 | 2177 |
| αrp | pσ | - | pπ | λ = 1 | 8 | 0 | 2416 |
| αrv | [pτ] | vθ | pπ | λ = 0.5 | 0 | 8 | 2077 |
| αv | [pτ] | vθ | - | λ = 0 | 0 | 8 | 1655 |
| αr | [pτ] | - | pπ | λ = 1 | 0 | 0 | 1457 |
| αp | pσ | - | - | - | 0 | 0 | 1517 |

Positions are evaluated using rollouts only (αrp, αr), value networks only (αvp, αv), or a mixture of both (αrvp, αrv), either with the policy network pσ (αrvp, αvp, αrp) or with no policy network (αrv, αv, αr), in which case the placeholder probabilities from the tree policy pτ are used throughout. Each program used 5 s per move on a single machine with 48 CPUs and 8 GPUs. Elo ratings were computed with BayesElo.
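The mixing constant λ in Table 2 weights the two leaf evaluators. In Silver et al. [2016] a leaf position s_L is scored as V(s_L) = (1 − λ)vθ(s_L) + λz_L, where z_L is the outcome of a fast rollout played with pπ; so λ = 0 (αvp, αv) relies on the value network alone and λ = 1 (αrp, αr) on rollouts alone. The Python sketch below shows this mixing, plus a helper that reads the Elo column as expected scores. It assumes the standard Elo logistic curve with a 400-point scale (BayesElo can also model effects such as first-move advantage, which this ignores), and the function names are hypothetical.

```python
from typing import Dict

# Elo ratings copied from Table 2.
ELO: Dict[str, int] = {
    "αrvp": 2890, "αvp": 2177, "αrp": 2416,
    "αrv": 2077, "αv": 1655, "αr": 1457, "αp": 1517,
}


def leaf_value(value_net: float, rollout_outcome: float, lam: float) -> float:
    """V(s_L) = (1 - lam) * v_theta(s_L) + lam * z_L.

    lam = 0 trusts the value network only; lam = 1 trusts the rollout only.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("mixing constant lam must lie in [0, 1]")
    return (1.0 - lam) * value_net + lam * rollout_outcome


def elo_expected_score(r_a: float, r_b: float) -> float:
    """Expected score of player A against player B on the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10.0 ** ((r_b - r_a) / 400.0))


if __name__ == "__main__":
    # A leaf the value network rates at 0.2 but whose rollout was won (z_L = +1).
    for lam in (0.0, 0.5, 1.0):
        print(f"lambda={lam}: V={leaf_value(0.2, 1.0, lam):.2f}")
    # Expected score of the full system against each variant.
    for name, rating in ELO.items():
        print(f"αrvp vs {name}: {elo_expected_score(ELO['αrvp'], rating):.3f}")
```

On this curve, the 474-point gap between αrvp (2890) and αrp (2416) corresponds to an expected score of about 0.94 for the full system, which is one way to read how much the value network adds on top of rollouts.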

[1] Silver D, Huang A, Maddison C J, Guez A, Sifre L, van den Driessche G, Schrittwieser J, Antonoglou I, Panneershelvam V, Lanctot M, Dieleman S, Grewe D, Nham J, Kalchbrenner N, Sutskever I, Lillicrap T, Leach M, Kavukcuoglu K, Graepel T, Hassabis D. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587): 484-489

[2] Tian Y D, Zhu Y. Better computer Go player with neural network and long-term prediction. In: International Conference on Learning Representations (ICLR). San Juan, Puerto Rico, 2016.
