
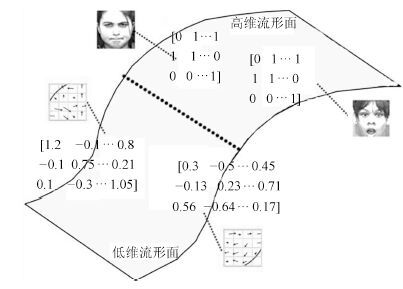

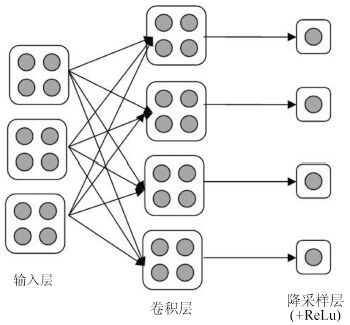

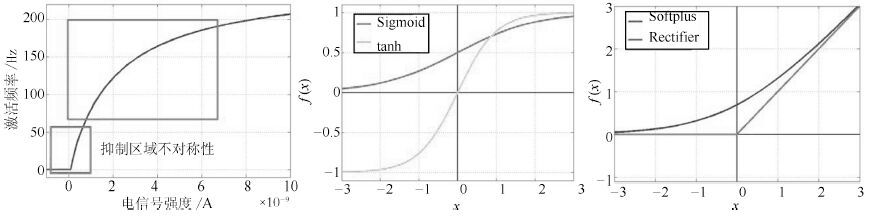

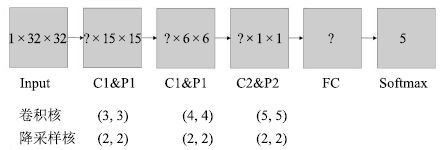

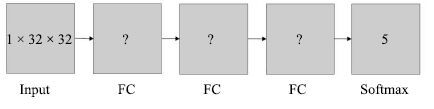

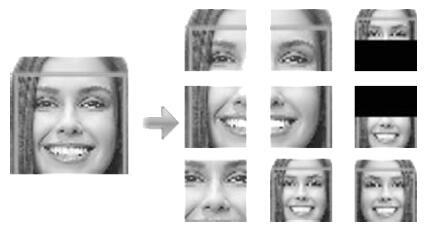

Abstract: Deep neural networks have been shown to learn deep, latent distributed representations of data in the image, speech, and text domains. We train two deep learning models, a deep convolutional neural network and a deep sparse rectifier neural network, on several facial expression datasets and use them to comparatively evaluate deep neural networks for facial expression classification. We then introduce prior knowledge of facial structure and combine regions of interest (ROI) with the K-nearest neighbors (KNN) algorithm. The result is ROI-KNN, a fast and simple improved training scheme for deep learning in facial expression classification. ROI-KNN alleviates the poor generalization of deep neural network models caused by the scarcity of facial expression training data, improves the robustness of deep learning in facial expression classification, and significantly reduces the test error rate.
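The abstract names the K-nearest neighbors (KNN) algorithm as one ingredient of ROI-KNN, but this section does not say how KNN is combined with the ROI crops or with the network outputs. As background only, here is a minimal sketch of a generic KNN classifier. It is not the authors' ROI-KNN procedure: the function name `knn_predict`, the Euclidean metric, the tie-breaking rule, and the toy feature vectors are all illustrative assumptions.

```python
from collections import Counter
from math import dist  # Euclidean distance (Python 3.8+)


def knn_predict(train_x, train_y, query, k=3):
    """Generic KNN (hypothetical helper, not the paper's code): label `query`
    by majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training points")
    # Indices of training points, nearest first (Euclidean distance assumed).
    order = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], query))
    neighbours = [train_y[i] for i in order[:k]]
    counts = Counter(neighbours)
    best = max(counts.values())
    # Tie-break: among labels with the top vote count, return the one
    # that appears earliest in the nearest-first neighbour list.
    for label in neighbours:
        if counts[label] == best:
            return label


# Toy 2-D feature vectors, made up for illustration only.
train_x = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8)]
train_y = ["neutral", "neutral", "happy", "happy", "happy"]
print(knn_predict(train_x, train_y, (0.05, 0.1)))  # neutral
print(knn_predict(train_x, train_y, (1.0, 0.9)))   # happy
```

A brute-force sort over all training points keeps the sketch short; for large training sets a spatial index would normally replace it.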


Table 1 Test set error rate with ROI assistance (%)

| Model | Neutral | Happy | Sad | Surprise | Angry | Overall |
|---|---|---|---|---|---|---|
| CNN-64 | 4.7 | 32.7 | 54.3 | 33 | 40.3 | 33.3 |
| CNN-64* | 5.6 | 36.3 | 59.3 | 20.0 | 31.7 | 30.6 |
| CNN-96* | 5.0 | 36.7 | 53.3 | 20.7 | 24.7 | 28.6 |
| CNN-128 | 3.3 | 32.0 | 51.0 | 27.0 | 37.7 | 30.2 |
| CNN-128* | 3.0 | 31.0 | 55.7 | 18.7 | 24.3 | 26.6 |
| DNN-1000 | 3.0 | 37.7 | 65.3 | 38.3 | 36.7 | 36.2 |
| DNN-1000* | 2.3 | 39.0 | 52.0 | 30.0 | 31.7 | 31.0 |
| DNN-2000* | 2.0 | 43.3 | 55.0 | 24.7 | 32.7 | 31.5 |
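Table 1 reports test error rates under ROI assistance, and the abstract motivates ROIs as a way to inject facial-structure priors when training data is scarce. The exact regions and crop sizes are not given in this section, so the following is only a sketch of ROI-based sample expansion under assumed boxes: each aligned face image (here a plain list of pixel rows) is cropped to a few hypothetical regions, and every crop is kept as an extra training sample with the original label. `ROI_BOXES`, `roi_crops`, and `augment_with_rois` are illustrative names, and resizing the crops back to the network's input size is omitted.

```python
# Hypothetical ROI boxes as fractions of an aligned face: (top, left, height, width).
# The regions used in the paper are not specified here; these are assumptions.
ROI_BOXES = {
    "upper_face": (0.0, 0.0, 0.6, 1.0),  # assumed: brows and eyes
    "lower_face": (0.4, 0.0, 0.6, 1.0),  # assumed: nose and mouth
    "centre": (0.1, 0.1, 0.8, 0.8),      # assumed: tight central crop
}


def roi_crops(image, boxes=ROI_BOXES):
    """Cut each fractional box out of a 2-D image given as a list of rows."""
    h, w = len(image), len(image[0])
    crops = {}
    for name, (ft, fl, fh, fw) in boxes.items():
        top, left = round(ft * h), round(fl * w)
        height, width = max(1, round(fh * h)), max(1, round(fw * w))
        crops[name] = [row[left:left + width] for row in image[top:top + height]]
    return crops


def augment_with_rois(samples, boxes=ROI_BOXES):
    """Return the original (image, label) pairs plus one pair per ROI crop.
    Each crop inherits its source image's label; resizing is left out."""
    out = []
    for image, label in samples:
        out.append((image, label))
        out.extend((crop, label) for crop in roi_crops(image, boxes).values())
    return out


face = [[10 * r + c for c in range(10)] for r in range(10)]  # toy 10x10 "image"
expanded = augment_with_rois([(face, "happy")])
print(len(expanded))  # 4: the original plus three ROI crops
print({name: (len(c), len(c[0])) for name, c in roi_crops(face).items()})
```

Keeping the boxes as fractions rather than pixel offsets lets the same (assumed) layout apply to faces of any resolution, provided they are roughly aligned.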

Table 2 Test set error rate with rotation-generated samples (%)

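| Model | Neutral | Happy | Sad | Surprise | Angry | Overall |
|---|---|---|---|---|---|---|
| CNN-128 | 3.3 | 32.0 | 51.0 | 27.0 | 37.7 | 30.2 |
| CNN-128* | 4.7 | 41.3 | 52.7 | 32.7 | 35.0 | 33.2 |
| CNN-128+ | 3.0 | 37.0 | 51.7 | 15.7 | 24.0 | 26.3 |
| CNN-128^ | 0.0 | 30.0 | 54.0 | 13.0 | 26.7 | 24.7 |
| DNN-1000 | 3.0 | 37.7 | 65.3 | 38.3 | 36.7 | 36.2 |
| DNN-1000* | 1.3 | 39.7 | 62.0 | 37.3 | 42.0 | 36.5 |
| DNN-1000+ | 2.3 | 41.3 | 57.0 | 30.0 | 35.7 | 33.3 |
| DNN-1000^ | 1.3 | 43.0 | 67.7 | 31.0 | 33.7 | 35.3 |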
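Table 2 evaluates training sets enlarged with rotation-generated samples. The rotation angles and interpolation method are not stated in this section; the sketch below assumes nearest-neighbour resampling about the image centre and a hypothetical angle set of ±10 degrees, purely to illustrate how rotated copies can be added to a training set. `rotate_nearest` and `rotation_augment` are illustrative names, not the authors' code.

```python
import math


def rotate_nearest(image, degrees, fill=0):
    """Rotate a 2-D image (list of rows) about its centre, keeping its size.
    Uses inverse mapping with nearest-neighbour sampling; because the row index
    grows downward, positive angles turn the picture clockwise on screen."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Source coordinates that the forward rotation sends to (y, x).
            dx, dy = x - cx, y - cy
            sx = cos_t * dx + sin_t * dy + cx
            sy = -sin_t * dx + cos_t * dy + cy
            ix, iy = round(sx), round(sy)
            # Source points outside the image leave `fill` in place.
            if 0 <= iy < h and 0 <= ix < w:
                out[y][x] = image[iy][ix]
    return out


def rotation_augment(samples, angles=(-10, 10)):
    """Hypothetical angle set: keep each (image, label) and add one rotated
    copy per angle, carrying the same label."""
    out = []
    for image, label in samples:
        out.append((image, label))
        out.extend((rotate_nearest(image, a), label) for a in angles)
    return out


grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(rotate_nearest(grid, 90))  # [[7, 4, 1], [8, 5, 2], [9, 6, 3]]
print(len(rotation_augment([(grid, "sad")])))  # 3
```

Inverse mapping (looping over output pixels and looking up their source) avoids the holes that forward mapping leaves; a library resampler with bilinear interpolation would usually give smoother results on real images.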

Table 3 Test set error rate with ROI-KNN (%)

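| Model | Neutral | Happy | Sad | Surprise | Angry | Overall |
|---|---|---|---|---|---|---|
| CNN-64 | 5.6 | 36.3 | 59.3 | 20.0 | 31.7 | 30.6 |
| CNN-64* | 1.0 | 29.7 | 56.0 | 17.0 | 30.0 | 26.7 |
| CNN-96 | 5.0 | 36.7 | 53.3 | 20.7 | 24.7 | 28.6 |
| CNN-96* | 0.3 | 26.0 | 56.3 | 16.0 | 26.7 | 25.8 |
| CNN-128 | 3.0 | 31.0 | 55.7 | 18.7 | 24.3 | 26.6 |
| CNN-128* | 0.6 | 22.7 | 57.0 | 12.0 | 26.3 | 23.7 |
| DNN-1000 | 2.3 | 39.0 | 52.0 | 30.0 | 31.7 | 31.0 |
| DNN-1000* | 0.3 | 37.3 | 61.0 | 31.7 | 31.0 | 32.2 |
| DNN-2000 | 2.0 | 43.3 | 55.0 | 24.7 | 32.7 | 31.5 |
| DNN-2000* | 0.3 | 40.0 | 68.0 | 26.3 | 33.3 | 33.6 |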
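Table 3 lists each model twice, without and with the * marker. The marker is not defined in this section, though the table title suggests the starred rows are the ROI-KNN results. The short snippet below only subtracts the Overall column of each pair, using values copied from Table 3, to make the size and direction of each change explicit.

```python
# Overall test error rates (%) copied from Table 3: (unstarred row, starred row).
OVERALL = {
    "CNN-64": (30.6, 26.7),
    "CNN-96": (28.6, 25.8),
    "CNN-128": (26.6, 23.7),
    "DNN-1000": (31.0, 32.2),
    "DNN-2000": (31.5, 33.6),
}


def overall_change(table=OVERALL):
    """Percentage-point change in overall error from the unstarred to the
    starred row (negative means the starred row has lower error)."""
    return {name: round(after - before, 1) for name, (before, after) in table.items()}


for name, delta in overall_change().items():
    print(f"{name}: {delta:+.1f} pp")
```

On these values the three CNN models drop by 2.8 to 3.9 percentage points, while DNN-1000 and DNN-2000 rise by 1.2 and 2.1 points.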

