|

[1]

|

Turing A M. Computing machinery and intelligence. Mind, 1950, 59: 433−460

|

|

[2]

|

Roy N, Posner I, Barfoot T, Beaudoin P, Bengio Y, Bohg J, et al. From machine learning to robotics: Challenges and opportunities for embodied intelligence. arXiv preprint arXiv: 2110.15245, 2021.Roy N, Posner I, Barfoot T, Beaudoin P, Bengio Y, Bohg J, et al. From machine learning to robotics: Challenges and opportunities for embodied intelligence. arXiv preprint arXiv: 2110.15245, 2021.

|

|

[3]

|

Brooks R A. Intelligence without representation. Artificial Intelligence, 1991, 47(1−3): 139−159 doi: 10.1016/0004-3702(91)90053-M

|

|

[4]

|

Brown T B, Mann B, Ryder N, Subbiah M, Kaplan J, Dhariwal P, et al. Language models are few-shot learners. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2020.

|

|

[5]

|

Achiam J, Adler S, Agarwal S, Ahmad L, Akkaya I, Aleman F L, et al. GPT-4 technical report. arXiv preprint arXiv: 2303.08774, 2024.Achiam J, Adler S, Agarwal S, Ahmad L, Akkaya I, Aleman F L, et al. GPT-4 technical report. arXiv preprint arXiv: 2303.08774, 2024.

|

|

[6]

|

Touvron H, Lavril T, Izacard G, Martinet X, Lachaux M A, Lacroix T, et al. LLaMA: Open and efficient foundation language models. arXiv preprint arXiv: 2302.13971, 2023.Touvron H, Lavril T, Izacard G, Martinet X, Lachaux M A, Lacroix T, et al. LLaMA: Open and efficient foundation language models. arXiv preprint arXiv: 2302.13971, 2023.

|

|

[7]

|

Touvron H, Martin L, Stone K, Albert P, Almahairi A, Babaei Y, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv: 2307.09288, 2023.Touvron H, Martin L, Stone K, Albert P, Almahairi A, Babaei Y, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv: 2307.09288, 2023.

|

|

[8]

|

Anil R, Borgeaud S, Alayrac J B, Yu J H, Soricut R, Schalkwyk J, et al. Gemini: A family of highly capable multimodal models. arXiv preprint arXiv: 2312.11805, 2024.Anil R, Borgeaud S, Alayrac J B, Yu J H, Soricut R, Schalkwyk J, et al. Gemini: A family of highly capable multimodal models. arXiv preprint arXiv: 2312.11805, 2024.

|

|

[9]

|

Georgiev P, Lei V I, Burnell R, Bai L B, Gulati A, Tanzer G, et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv preprint arXiv: 2403.05530, 2024.Georgiev P, Lei V I, Burnell R, Bai L B, Gulati A, Tanzer G, et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv preprint arXiv: 2403.05530, 2024.

|

|

[10]

|

Li J N, Li D X, Xiong C M, Hoi S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. arXiv preprint arXiv: 2201.12086, 2022.Li J N, Li D X, Xiong C M, Hoi S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. arXiv preprint arXiv: 2201.12086, 2022.

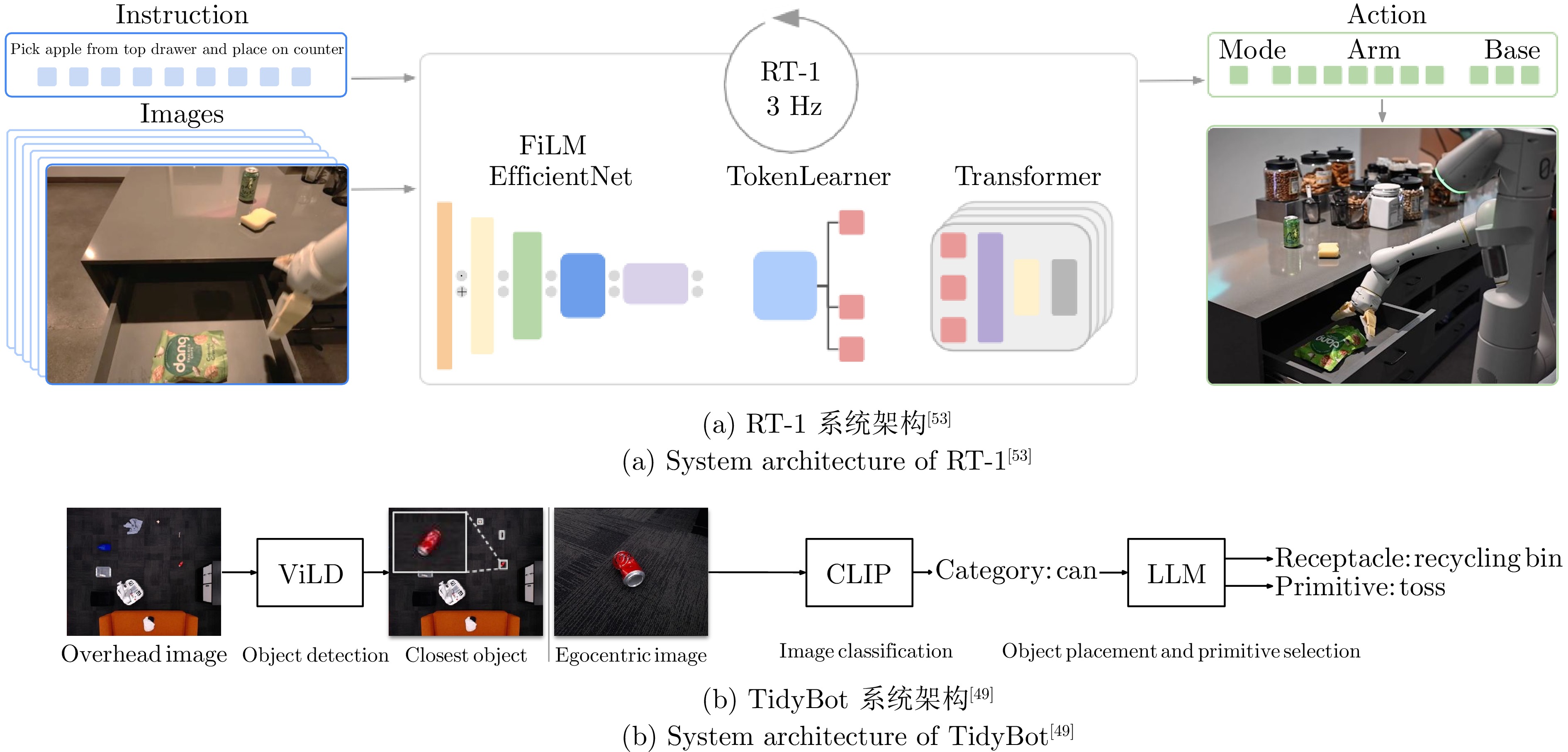

|

|

[11]

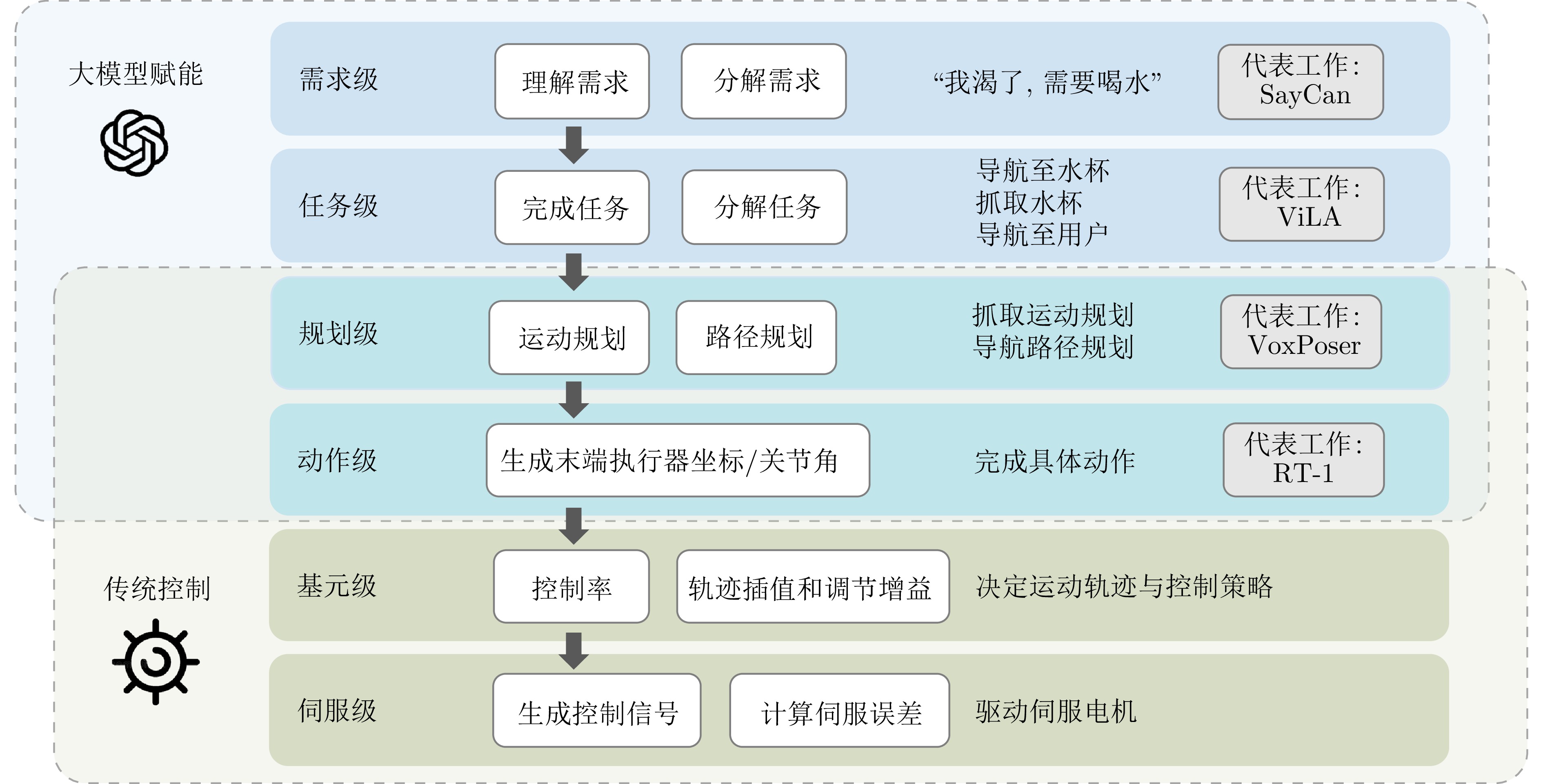

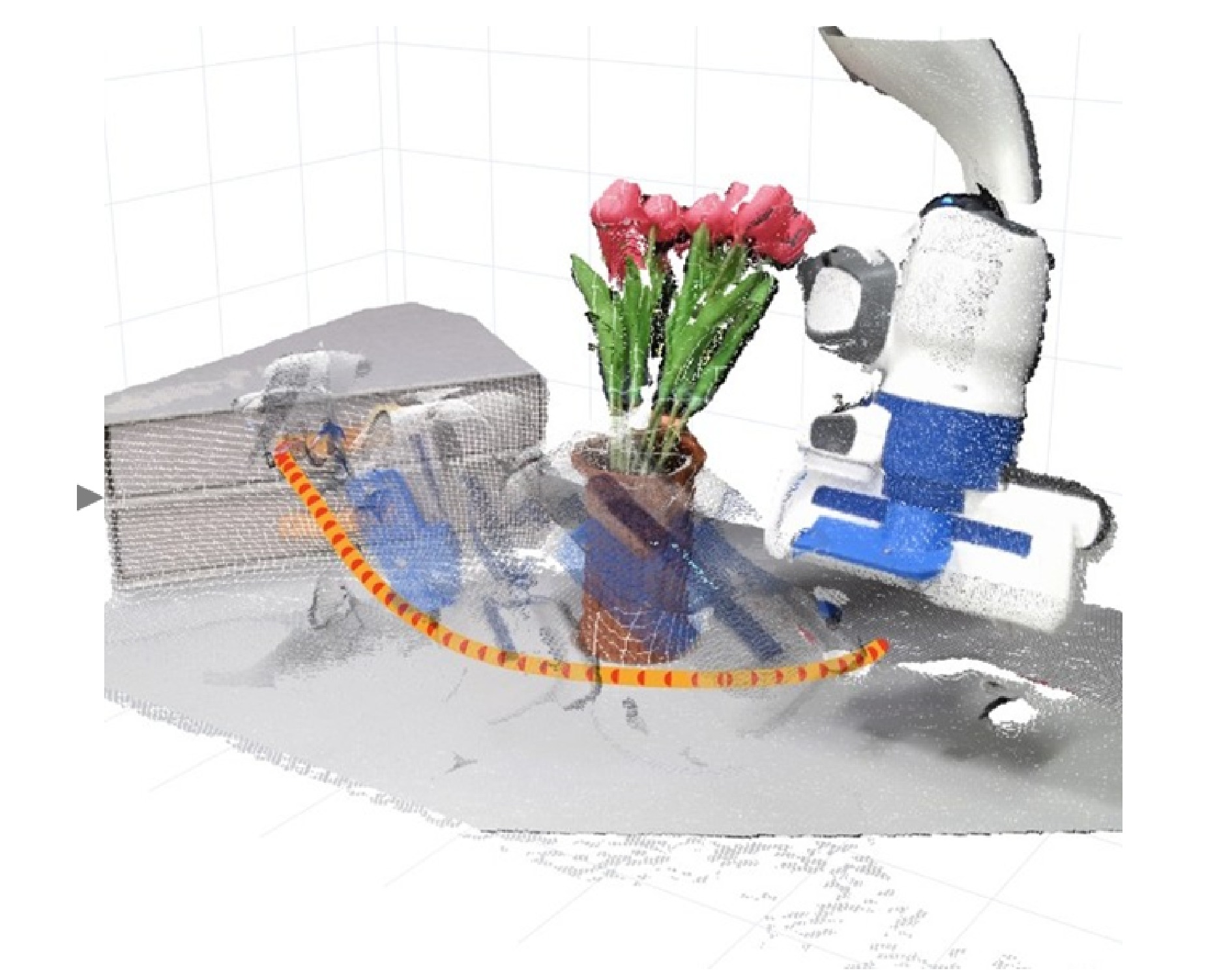

|

Li J N, Li D X, Savarese S, Hoi S C H. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In: Proceedings of the International Conference on Machine Learning. Honolulu, USA: PMLR, 2023. 19730−19742

|

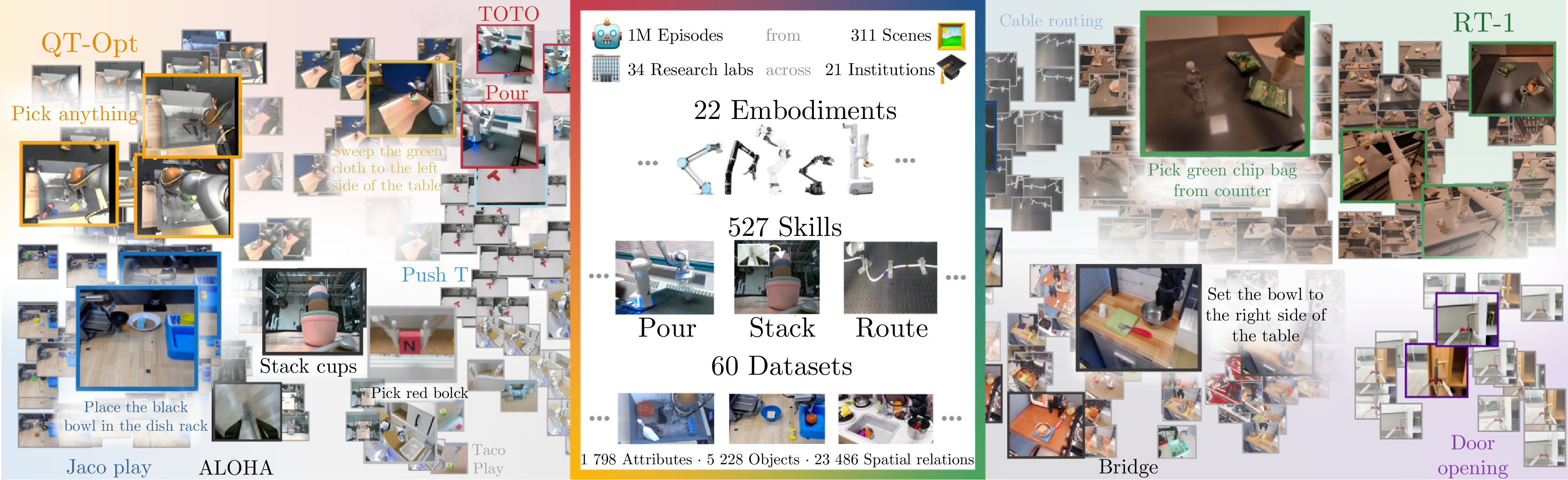

|

[12]

|

Wake N, Kanehira A, Sasabuchi K, Takamatsu J, Ikeuchi K. GPT-4V(ision) for robotics: Multimodal task planning from human demonstration. IEEE Robotics and Automation Letters, 2024, 9(11): 10567−10574 doi: 10.1109/LRA.2024.3477090

|

|

[13]

|

Li B Y, Weinberger K Q, Belongie S J, Koltun V, Ranftl R. Language-driven semantic segmentation. In: Proceedings of the 10th International Conference on Learning Representations. OpenReview.net, 2022.Li B Y, Weinberger K Q, Belongie S J, Koltun V, Ranftl R. Language-driven semantic segmentation. In: Proceedings of the 10th International Conference on Learning Representations. OpenReview.net, 2022.

|

|

[14]

|

Gu X Y, Lin T Y, Kuo W C, Cui Y. Open-vocabulary object detection via vision and language knowledge distillation. arXiv preprint arXiv: 2104.13921, 2022.Gu X Y, Lin T Y, Kuo W C, Cui Y. Open-vocabulary object detection via vision and language knowledge distillation. arXiv preprint arXiv: 2104.13921, 2022.

|

|

[15]

|

Antol S, Agrawal A, Lu J S, Mitchell M, Batra D, Zitnick C L, et al. VQA: Visual question answering. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2016.

|

|

[16]

|

Caron M, Touvron H, Misra I, Jégou H, Mairal J, Bojanowski P, et al. Emerging properties in self-supervised vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Montreal, Canada: IEEE, 2021.

|

|

[17]

|

Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. In: Proceedings of the 38th International Conference on Machine Learning. PMLR, 2021.Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. In: Proceedings of the 38th International Conference on Machine Learning. PMLR, 2021.

|

|

[18]

|

Kirillov A, Mintun E, Ravi N, Mao H Z, Rolland C, Gustafson L, et al. Segment anything. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023.

|

|

[19]

|

Bommasani R, Hudson D A, Adeli E, Altman R, Arora S, von Arx S, et al. On the opportunities and risks of foundation models. arXiv preprint arXiv: 2108.07258, 2022.Bommasani R, Hudson D A, Adeli E, Altman R, Arora S, von Arx S, et al. On the opportunities and risks of foundation models. arXiv preprint arXiv: 2108.07258, 2022.

|

|

[20]

|

杨雨彤. AI大模型与具身智能终将相遇. 机器人产业, 2024(2): 71−74Yang Yu-Tong. AI large models and embodied intelligence will eventually meet. Robot Industry, 2024(2): 71−74

|

|

[21]

|

Gupta A, Savarese S, Ganguli S, Fei-Fei L. Embodied intelligence via learning and evolution. Nature Communications, 2021, 12(1): Article No. 5721 doi: 10.1038/s41467-021-25874-z

|

|

[22]

|

刘华平, 郭迪, 孙富春, 张新钰. 基于形态的具身智能研究: 历史回顾与前沿进展. 自动化学报, 2023, 49(6): 1131−1154Liu Hua-Ping, Guo Di, Sun Fu-Chun, Zhang Xin-Yu. Morphology-based embodied intelligence: Historical retrospect and research progress. Acta Automatica Sinica, 2023, 49(6): 1131−1154

|

|

[23]

|

兰沣卜, 赵文博, 朱凯, 张涛. 基于具身智能的移动操作机器人系统发展研究. 中国工程科学, 2024, 26(1): 139−148 doi: 10.15302/J-SSCAE-2024.01.010Lan Feng-Bo, Zhao Wen-Bo, Zhu Kai, Zhang Tao. Development of mobile manipulator robot system with embodied intelligence. Strategic Study of CAE, 2024, 26(1): 139−148 doi: 10.15302/J-SSCAE-2024.01.010

|

|

[24]

|

Firoozi R, Tucker J, Tian S, Majumdar A, Sun J K, Liu W Y, et al. Foundation models in robotics: Applications, challenges, and the future. arXiv preprint arXiv: 2312.07843v1, 2023.

|

|

[25]

|

Wang J Q, Wu Z H, Li Y W, Jiang H Q, Shu P, Shi E Z, et al. Large language models for robotics: Opportunities, challenges, and perspectives. arXiv preprint arXiv: 2401.04334, 2024.Wang J Q, Wu Z H, Li Y W, Jiang H Q, Shu P, Shi E Z, et al. Large language models for robotics: Opportunities, challenges, and perspectives. arXiv preprint arXiv: 2401.04334, 2024.

|

|

[26]

|

Kim Y, Kim D, Choi J, Park J, Oh N, Park D. A survey on integration of large language models with intelligent robots. Intelligent Service Robotics, 2024, 17(5): 1091−1107 doi: 10.1007/s11370-024-00550-5

|

|

[27]

|

Hu Y F, Xie Q T, Jain V, Francis J, Patrikar J, Keetha N, et al. Toward general-purpose robots via foundation models: A survey and meta-analysis. arXiv preprint arXiv: 2312.08782, 2023.Hu Y F, Xie Q T, Jain V, Francis J, Patrikar J, Keetha N, et al. Toward general-purpose robots via foundation models: A survey and meta-analysis. arXiv preprint arXiv: 2312.08782, 2023.

|

|

[28]

|

Liu Y, Chen W X, Bai Y J, Liang X D, Li G B, Gao W, et al. Aligning cyber space with physical world: A comprehensive survey on embodied AI. arXiv preprint arXiv: 2407.06886, 2024.Liu Y, Chen W X, Bai Y J, Liang X D, Li G B, Gao W, et al. Aligning cyber space with physical world: A comprehensive survey on embodied AI. arXiv preprint arXiv: 2407.06886, 2024.

|

|

[29]

|

Yang Z Y, Li L J, Lin K, Wang J F, Lin C C, Liu Z C, et al. The dawn of LMMs: Preliminary explorations with GPT-4V(ision). arXiv preprint arXiv: 2309.17421, 2023.Yang Z Y, Li L J, Lin K, Wang J F, Lin C C, Liu Z C, et al. The dawn of LMMs: Preliminary explorations with GPT-4V(ision). arXiv preprint arXiv: 2309.17421, 2023.

|

|

[30]

|

Hu Y D, Lin F Q, Zhang T, Yi L, Gao Y. Look before you leap: Unveiling the power of GPT-4V in robotic vision-language planning. arXiv preprint arXiv: 2311.17842, 2023.Hu Y D, Lin F Q, Zhang T, Yi L, Gao Y. Look before you leap: Unveiling the power of GPT-4V in robotic vision-language planning. arXiv preprint arXiv: 2311.17842, 2023.

|

|

[31]

|

Fan L X, Wang G Z, Jiang Y F, Mandlekar A, Yang Y C, Zhu H Y, et al. MineDojo: Building open-ended embodied agents with internet-scale knowledge. arXiv preprint arXiv: 2206.08853, 2022.Fan L X, Wang G Z, Jiang Y F, Mandlekar A, Yang Y C, Zhu H Y, et al. MineDojo: Building open-ended embodied agents with internet-scale knowledge. arXiv preprint arXiv: 2206.08853, 2022.

|

|

[32]

|

Bahl S, Mendonca R, Chen L L, Jain U, Pathak D. Affordances from human videos as a versatile representation for robotics. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023.

|

|

[33]

|

Baker B, Akkaya I, Zhokhov P, Huizinga J, Tang J, Ecoffet A, et al. Video PreTraining (VPT): Learning to act by watching unlabeled online videos. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: 2022.Baker B, Akkaya I, Zhokhov P, Huizinga J, Tang J, Ecoffet A, et al. Video PreTraining (VPT): Learning to act by watching unlabeled online videos. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: 2022.

|

|

[34]

|

Sontakke S, Zhang J, Arnold S M R, Pertsch K, Biyik E, Sadigh D, et al. RoboCLIP: One demonstration is enough to learn robot policies. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.Sontakke S, Zhang J, Arnold S M R, Pertsch K, Biyik E, Sadigh D, et al. RoboCLIP: One demonstration is enough to learn robot policies. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.

|

|

[35]

|

Seo Y, Lee K, James S, Abbeel P. Reinforcement learning with action-free pre-training from videos. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022.

|

|

[36]

|

Han L, Zhu Q X, Sheng J P, Zhang C, Li T G, Zhang Y Z, et al. Lifelike agility and play in quadrupedal robots using reinforcement learning and generative pre-trained models. Nature Machine Intelligence, 2024, 6(7): 787−798 doi: 10.1038/s42256-024-00861-3

|

|

[37]

|

Zhao T Z, Kumar V, Levine S, Finn C. Learning fine-grained bimanual manipulation with low-cost hardware. arXiv preprint arXiv: 2304.13705, 2023.Zhao T Z, Kumar V, Levine S, Finn C. Learning fine-grained bimanual manipulation with low-cost hardware. arXiv preprint arXiv: 2304.13705, 2023.

|

|

[38]

|

Chi C, Xu Z J, Pan C, Cousineau E, Burchfiel B, Feng S Y, et al. Universal manipulation interface: In-the-wild robot teaching without in-the-wild robots. arXiv preprint arXiv: 2402.10329v3, 2024.Chi C, Xu Z J, Pan C, Cousineau E, Burchfiel B, Feng S Y, et al. Universal manipulation interface: In-the-wild robot teaching without in-the-wild robots. arXiv preprint arXiv: 2402.10329v3, 2024.

|

|

[39]

|

Fu Z P, Zhao Q Q, Wu Q, Wetzstein G, Finn C. HumanPlus: Humanoid shadowing and imitation from humans. arXiv preprint arXiv: 2406.10454, 2024.Fu Z P, Zhao Q Q, Wu Q, Wetzstein G, Finn C. HumanPlus: Humanoid shadowing and imitation from humans. arXiv preprint arXiv: 2406.10454, 2024.

|

|

[40]

|

Wu P, Shentu Y, Yi Z K, Lin X Y, Abbeel P. GELLO: A general, low-cost, and intuitive teleoperation framework for robot manipulators. arXiv preprint arXiv: 2309.13037, 2023.Wu P, Shentu Y, Yi Z K, Lin X Y, Abbeel P. GELLO: A general, low-cost, and intuitive teleoperation framework for robot manipulators. arXiv preprint arXiv: 2309.13037, 2023.

|

|

[41]

|

Kim H, Ohmura Y, Kuniyoshi Y. Goal-conditioned dual-action imitation learning for dexterous dual-arm robot manipulation. IEEE Transactions on Robotics, 2024, 40: 2287−2305 doi: 10.1109/TRO.2024.3372778

|

|

[42]

|

Wang Y F, Xian Z, Chen F, Wang T H, Wang Y, Fragkiadaki K, et al. RoboGen: Towards unleashing infinite data for automated robot learning via generative simulation. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: OpenReview.net, 2024.

|

|

[43]

|

Mandlekar A, Nasiriany S, Wen B W, Akinola I, Narang Y S, Fan L X, et al. MimicGen: A data generation system for scalable robot learning using human demonstrations. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[44]

|

Ha H, Florence P, Song S. Scaling up and distilling down: Language-guided robot skill acquisition. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[45]

|

Ma Y J, Liang W, Wang H J, Wang S, Zhu Y K, Fan L X, et al. DrEureka: Language model guided sim-to-real transfer. arXiv preprint arXiv: 2406.01967, 2024.Ma Y J, Liang W, Wang H J, Wang S, Zhu Y K, Fan L X, et al. DrEureka: Language model guided sim-to-real transfer. arXiv preprint arXiv: 2406.01967, 2024.

|

|

[46]

|

Luo Z Y, Cao J K, Christen S, Winkler A, Kitani K, Xu W P. Grasping diverse objects with simulated humanoids. arXiv preprint arXiv: 2407.11385, 2024.Luo Z Y, Cao J K, Christen S, Winkler A, Kitani K, Xu W P. Grasping diverse objects with simulated humanoids. arXiv preprint arXiv: 2407.11385, 2024.

|

|

[47]

|

Yu T H, Quillen D, He Z P, Julian R, Hausman K, Finn C, et al. Meta-world: A benchmark and evaluation for multi-task and meta reinforcement learning. In: Proceedings of the 3rd Annual Conference on Robot Learning. Osaka, Japan: PMLR, 2019.

|

|

[48]

|

Li C S, Zhang R H, Wong J, Gokmen C, Srivastava S, Martín-Martín R, et al. BEHAVIOR-1K: A human-centered, embodied AI benchmark with 1 000 everyday activities and realistic simulation. arXiv preprint arXiv: 2403.09227, 2024.Li C S, Zhang R H, Wong J, Gokmen C, Srivastava S, Martín-Martín R, et al. BEHAVIOR-1K: A human-centered, embodied AI benchmark with 1 000 everyday activities and realistic simulation. arXiv preprint arXiv: 2403.09227, 2024.

|

|

[49]

|

Wu J, Antonova R, Kan A, Lepert M, Zeng A, Song S R, et al. TidyBot: Personalized robot assistance with large language models. Autonomous Robots, 2023, 47(8): 1087−1102 doi: 10.1007/s10514-023-10139-z

|

|

[50]

|

Jiang Y F, Gupta A, Zhang Z C, Wang G Z, Dou Y Q, Chen Y J, et al. VIMA: General robot manipulation with multimodal prompts. arXiv preprint arXiv: 2210.03094, 2022.Jiang Y F, Gupta A, Zhang Z C, Wang G Z, Dou Y Q, Chen Y J, et al. VIMA: General robot manipulation with multimodal prompts. arXiv preprint arXiv: 2210.03094, 2022.

|

|

[51]

|

Huang S Y, Jiang Z K, Dong H, Qiao Y, Gao P, Li H S. Instruct2Act: Mapping multi-modality instructions to robotic actions with large language model. arXiv preprint arXiv: 2305.11176, 2023.Huang S Y, Jiang Z K, Dong H, Qiao Y, Gao P, Li H S. Instruct2Act: Mapping multi-modality instructions to robotic actions with large language model. arXiv preprint arXiv: 2305.11176, 2023.

|

|

[52]

|

Huang W L, Wang C, Zhang R H, Li Y Z, Wu J J, Li F F. VoxPoser: Composable 3D value maps for robotic manipulation with language models. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.Huang W L, Wang C, Zhang R H, Li Y Z, Wu J J, Li F F. VoxPoser: Composable 3D value maps for robotic manipulation with language models. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[53]

|

Brohan A, Brown N, Carbajal J, Chebotar Y, Dabis J, Finn C, et al. RT-1: Robotics transformer for real-world control at scale. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.Brohan A, Brown N, Carbajal J, Chebotar Y, Dabis J, Finn C, et al. RT-1: Robotics transformer for real-world control at scale. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.

|

|

[54]

|

Zitkovich B, Yu T H, Xu S C, Xu P, Xiao T, Xia F, et al. RT-2: Vision-language-action models transfer web knowledge to robotic control. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[55]

|

O'Neill A, Rehman A, Gupta A, Maddukuri A, Gupta A, Padalkar A, et al. Open X-embodiment: Robotic learning datasets and RT-X models. arXiv preprint arXiv: 2310.08864, 2023.O'Neill A, Rehman A, Gupta A, Maddukuri A, Gupta A, Padalkar A, et al. Open X-embodiment: Robotic learning datasets and RT-X models. arXiv preprint arXiv: 2310.08864, 2023.

|

|

[56]

|

Durante Z, Sarkar B, Gong R, Taori R, Noda Y, Tang P, et al. An interactive agent foundation model. arXiv preprint arXiv: 2402.05929, 2024.

|

|

[57]

|

Wang W Y, Lei Y T, Jin S Y, Hager G D, Zhang L J. VIHE: Virtual in-hand eye transformer for 3D robotic manipulation. arXiv preprint arXiv: 2403.11461, 2024.Wang W Y, Lei Y T, Jin S Y, Hager G D, Zhang L J. VIHE: Virtual in-hand eye transformer for 3D robotic manipulation. arXiv preprint arXiv: 2403.11461, 2024.

|

|

[58]

|

Aldaco J, Armstrong T, Baruch R, Bingham J, Chan S, Draper K, et al. ALOHA 2: An enhanced low-cost hardware for bimanual teleoperation. arXiv preprint arXiv: 2405.02292, 2024.Aldaco J, Armstrong T, Baruch R, Bingham J, Chan S, Draper K, et al. ALOHA 2: An enhanced low-cost hardware for bimanual teleoperation. arXiv preprint arXiv: 2405.02292, 2024.

|

|

[59]

|

Wang Y J, Zhang B K, Chen J Y, Sreenath K. Prompt a robot to walk with large language models. arXiv preprint arXiv: 2309.09969, 2023.Wang Y J, Zhang B K, Chen J Y, Sreenath K. Prompt a robot to walk with large language models. arXiv preprint arXiv: 2309.09969, 2023.

|

|

[60]

|

Reed S, Zolna K, Parisotto E, Colmenarejo S G, Novikov A, Barth-Maron G, et al. A generalist agent. arXiv preprint arXiv: 2205.06175, 2022.Reed S, Zolna K, Parisotto E, Colmenarejo S G, Novikov A, Barth-Maron G, et al. A generalist agent. arXiv preprint arXiv: 2205.06175, 2022.

|

|

[61]

|

Li X H, Liu M H, Zhang H B, Yu C J, Xu J, Wu H T, et al. Vision-language foundation models as effective robot imitators. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: OpenReview.net, 2024.

|

|

[62]

|

Li X Q, Zhang M X, Geng Y R, Geng H R, Long Y X, Shen Y, et al. ManipLLM: Embodied multimodal large language model for object-centric robotic manipulation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024.

|

|

[63]

|

Zhen H Y, Qiu X W, Chen P H, Yang J C, Yan X, Du Y L, et al. 3D-VLA: A 3D vision-language-action generative world model. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: OpenReview.net, 2024.

|

|

[64]

|

Wu J L, Yin S F, Feng N Y, He X, Li D, Hao J Y, et al. iVideoGPT: Interactive VideoGPTs are scalable world models. arXiv preprint arXiv: 2405.15223, 2024.Wu J L, Yin S F, Feng N Y, He X, Li D, Hao J Y, et al. iVideoGPT: Interactive VideoGPTs are scalable world models. arXiv preprint arXiv: 2405.15223, 2024.

|

|

[65]

|

Zhang J Z, Wang K Y, Xu R T, Zhou G Z, Hong Y C, Fang X M, et al. NaVid: Video-based VLM plans the next step for vision-and-language navigation. arXiv preprint arXiv: 2402.15852, 2024.Zhang J Z, Wang K Y, Xu R T, Zhou G Z, Hong Y C, Fang X M, et al. NaVid: Video-based VLM plans the next step for vision-and-language navigation. arXiv preprint arXiv: 2402.15852, 2024.

|

|

[66]

|

Mandi Z, Jain S, Song S R. RoCo: Dialectic multi-robot collaboration with large language models. arXiv preprint arXiv: 2307.04738, 2023.Mandi Z, Jain S, Song S R. RoCo: Dialectic multi-robot collaboration with large language models. arXiv preprint arXiv: 2307.04738, 2023.

|

|

[67]

|

Jiao A R, Patel T P, Khurana S, Korol A M, Brunke L, Adajania V K, et al. Swarm-GPT: Combining large language models with safe motion planning for robot choreography design. arXiv preprint arXiv: 2312.01059, 2023.Jiao A R, Patel T P, Khurana S, Korol A M, Brunke L, Adajania V K, et al. Swarm-GPT: Combining large language models with safe motion planning for robot choreography design. arXiv preprint arXiv: 2312.01059, 2023.

|

|

[68]

|

Huang W L, Wang C, Li Y Z, Zhang R H, Li F F. ReKep: Spatio-temporal reasoning of relational keypoint constraints for robotic manipulation. arXiv preprint arXiv: 2409.01652, 2024.Huang W L, Wang C, Li Y Z, Zhang R H, Li F F. ReKep: Spatio-temporal reasoning of relational keypoint constraints for robotic manipulation. arXiv preprint arXiv: 2409.01652, 2024.

|

|

[69]

|

Liu P Q, Orru Y, Vakil J, Paxton C, Shafiullah N M M, Pinto L. OK-robot: What really matters in integrating open-knowledge models for robotics. arXiv preprint arXiv: 2401.12202, 2024.Liu P Q, Orru Y, Vakil J, Paxton C, Shafiullah N M M, Pinto L. OK-robot: What really matters in integrating open-knowledge models for robotics. arXiv preprint arXiv: 2401.12202, 2024.

|

|

[70]

|

Liang J, Huang W L, Xia F, Xu P, Hausman K, Ichter B, et al. Code as policies: Language model programs for embodied control. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). London, United Kingdom: IEEE, 2023.

|

|

[71]

|

Ding Y, Zhang X H, Paxton C, Zhang S Q. Task and motion planning with large language models for object rearrangement. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Detroit, USA: IEEE, 2023.

|

|

[72]

|

Lin K, Agia C, Migimatsu T, Pavone M, Bohg J. Text2Motion: From natural language instructions to feasible plans. Autonomous Robots, 2023, 47(8): 1345−1365 doi: 10.1007/s10514-023-10131-7

|

|

[73]

|

Driess D, Xia F, Sajjadi M S M, Lynch C, Chowdhery A, Ichter B, et al. PaLM-E: An embodied multimodal language model. In: Proceedings of the 40th International Conference on Machine Learning. Honolulu, USA: JMLR.org, 2023.

|

|

[74]

|

Ichter B, Brohan A, Chebotar Y, Finn C, Hausman K, Herzog A, et al. Do as I can, not as I say: Grounding language in robotic affordances. In: Proceedings of the 6th Conference on Robot Learning. Auckland, New Zealand: PMLR, 2023.

|

|

[75]

|

Mu Y, Zhang Q L, Hu M K, Wang W H, Ding M Y, Jin J, et al. EmbodiedGPT: Vision-language pre-training via embodied chain of thought. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.Mu Y, Zhang Q L, Hu M K, Wang W H, Ding M Y, Jin J, et al. EmbodiedGPT: Vision-language pre-training via embodied chain of thought. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.

|

|

[76]

|

Du Y Q, Watkins O, Wang Z H, Colas C, Darrell T, Abbeel P, et al. Guiding pretraining in reinforcement learning with large language models. In: Proceedings of the 40th International Conference on Machine Learning. Honolulu, USA: PMLR, 2023.

|

|

[77]

|

Wang G Z, Xie Y Q, Jiang Y F, Mandlekar A, Xiao C W, Zhu Y K, et al. Voyager: An open-ended embodied agent with large language models. arXiv preprint arXiv: 2305.16291, 2023.Wang G Z, Xie Y Q, Jiang Y F, Mandlekar A, Xiao C W, Zhu Y K, et al. Voyager: An open-ended embodied agent with large language models. arXiv preprint arXiv: 2305.16291, 2023.

|

|

[78]

|

Song C H, Sadler B M, Wu J M, Chao W L, Washington C, Su Y. LLM-planner: Few-shot grounded planning for embodied agents with large language models. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023.

|

|

[79]

|

Ren A Z, Dixit A, Bodrova A, Singh S, Tu S, Brown N, et al. Robots that ask for help: Uncertainty alignment for large language model planners. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[80]

|

Liu H H, Chen A C, Zhu Y K, Swaminathan A, Kolobov A, Cheng C A. Interactive robot learning from verbal correction. arXiv preprint arXiv: 2310.17555, 2023.Liu H H, Chen A C, Zhu Y K, Swaminathan A, Kolobov A, Cheng C A. Interactive robot learning from verbal correction. arXiv preprint arXiv: 2310.17555, 2023.

|

|

[81]

|

Shi L X, Hu Z Y, Zhao T Z, Sharma A, Pertsch K, Luo J L, et al. Yell at your robot: Improving on-the-fly from language corrections. arXiv preprint arXiv: 2403.12910, 2024.Shi L X, Hu Z Y, Zhao T Z, Sharma A, Pertsch K, Luo J L, et al. Yell at your robot: Improving on-the-fly from language corrections. arXiv preprint arXiv: 2403.12910, 2024.

|

|

[82]

|

Zeng A, Attarian M, Ichter B, Choromanski K M, Wong A, Welker S, et al. Socratic models: Composing zero-shot multimodal reasoning with language. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: OpenReview.net, 2023.

|

|

[83]

|

Shah R, R. Martín-Martín R, Zhu Y K. MUTEX: Learning unified policies from multimodal task specifications. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[84]

|

Dai Y P, Peng R, Li S K, Chai J. Think, act, and ask: Open-world interactive personalized robot navigation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Yokohama, Japan: IEEE, 2024.

|

|

[85]

|

Liu F C, Fang K, Abbeel P, Levine S. MOKA: Open-world robotic manipulation through mark-based visual prompting. arXiv preprint arXiv: 2403.03174, 2024.Liu F C, Fang K, Abbeel P, Levine S. MOKA: Open-world robotic manipulation through mark-based visual prompting. arXiv preprint arXiv: 2403.03174, 2024.

|

|

[86]

|

James S, Wada K, Laidlow T, Davison A J. Coarse-to-fine Q-attention: Efficient learning for visual robotic manipulation via discretisation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New Orleans, USA: IEEE, 2022.

|

|

[87]

|

Shridhar M, Manuelli L, Fox D. Perceiver-Actor: A multi-task transformer for robotic manipulation. In: Proceedings of the 6th Conference on Robot Learning. Auckland, New Zealand: PMLR, 2023.

|

|

[88]

|

Qin M H, Li W H, Zhou J W, Wang H Q, Pfister H. LangSplat: 3D language gaussian splatting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024.

|

|

[89]

|

Shorinwa O, Tucker J, Smith A, Swann A, Chen T, Firoozi R, et al. Splat-MOVER: Multi-stage, open-vocabulary robotic manipulation via editable Gaussian splatting. arXiv preprint arXiv: 2405.04378, 2024.

|

|

[90]

|

Yang J N, Chen X W Y, Qian S Y, Madaan N, Iyengar M, Fouhey D F, et al. LLM-Grounder: Open-vocabulary 3D visual grounding with large language model as an agent. arXiv preprint arXiv: 2309.12311, 2023.Yang J N, Chen X W Y, Qian S Y, Madaan N, Iyengar M, Fouhey D F, et al. LLM-Grounder: Open-vocabulary 3D visual grounding with large language model as an agent. arXiv preprint arXiv: 2309.12311, 2023.

|

|

[91]

|

Huang C G, Mees O, Zeng A, Burgard W. Audio visual language maps for robot navigation. arXiv preprint arXiv: 2303.07522, 2023.Huang C G, Mees O, Zeng A, Burgard W. Audio visual language maps for robot navigation. arXiv preprint arXiv: 2303.07522, 2023.

|

|

[92]

|

Gervet T, Xian Z, Gkanatsios N, Fragkiadaki K. Act3D: 3D feature field transformers for multi-task robotic manipulation. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[93]

|

Zhang K F, Li B Y, Hauser K, Li Y Z. AdaptiGraph: Material-adaptive graph-based neural dynamics for robotic manipulation. arXiv preprint arXiv: 2407.07889, 2024.Zhang K F, Li B Y, Hauser K, Li Y Z. AdaptiGraph: Material-adaptive graph-based neural dynamics for robotic manipulation. arXiv preprint arXiv: 2407.07889, 2024.

|

|

[94]

|

Qian S Y, Chen W F, Bai M, Zhou X, Tu Z W, Li L E. AffordanceLLM: Grounding affordance from vision language models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Seattle, USA: IEEE, 2024.

|

|

[95]

|

Ye Y F, Li X T, Gupta A, de Mellon S, Birchfield S, Song J M, et al. Affordance diffusion: Synthesizing hand-object interactions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver, Canada: IEEE, 2023. 22479−22489

|

|

[96]

|

Huang H X, Lin F Q, Hu Y D, Wang S J, Gao Y. CoPa: General robotic manipulation through spatial constraints of parts with foundation models. arXiv preprint arXiv: 2403.08248, 2024.Huang H X, Lin F Q, Hu Y D, Wang S J, Gao Y. CoPa: General robotic manipulation through spatial constraints of parts with foundation models. arXiv preprint arXiv: 2403.08248, 2024.

|

|

[97]

|

Qin Z Y, Fang K, Zhu Y K, Fei-Fei L, Savarese S. KETO: Learning keypoint representations for tool manipulation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Paris, France: IEEE, 2020.Qin Z Y, Fang K, Zhu Y K, Fei-Fei L, Savarese S. KETO: Learning keypoint representations for tool manipulation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Paris, France: IEEE, 2020.

|

|

[98]

|

Ju Y C, Hu K Z, Zhang G W, Zhang G, Jiang M R, Xu H Z. Robo-ABC: Affordance generalization beyond categories via semantic correspondence for robot manipulation. arXiv preprint arXiv: 2401.07487, 2024.Ju Y C, Hu K Z, Zhang G W, Zhang G, Jiang M R, Xu H Z. Robo-ABC: Affordance generalization beyond categories via semantic correspondence for robot manipulation. arXiv preprint arXiv: 2401.07487, 2024.

|

|

[99]

|

Sundaresan P, Belkhale S, Sadigh D, Bohg J. KITE: Keypoint-conditioned policies for semantic manipulation. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[100]

|

Hong Y N, Zheng Z S, Chen P H, Wang Y, Li J Y, Gan C. MultiPLY: A multisensory object-centric embodied large language model in 3D world. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024.

|

|

[101]

|

Liu H T, Li C Y, Wu Q Y, Lee Y J. Visual instruction tuning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.Liu H T, Li C Y, Wu Q Y, Lee Y J. Visual instruction tuning. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: 2023.

|

|

[102]

|

Yenamandra S, Ramachandran A, Yadav K, Wang A S, Khanna M, Gervet T, et al. HomeRobot: Open-vocabulary mobile manipulation. In: Proceedings of the 37th Conference on Robot Learning. Atlanta, USA: PMLR, 2023.

|

|

[103]

|

Shafiullah N M M, Paxton C, Pinto L, Chintala S, Szlam A. CLIP-fields: Weakly supervised semantic fields for robotic memory. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.Shafiullah N M M, Paxton C, Pinto L, Chintala S, Szlam A. CLIP-fields: Weakly supervised semantic fields for robotic memory. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.

|

|

[104]

|

He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, USA: IEEE, 2016.

|

|

[105]

|

Lin T Y, Dollár P, Girshick R, He K M, Hariharan B, Belongie S. Feature pyramid networks for object detection. arXiv preprint arXiv: 1612.03144, 2016.Lin T Y, Dollár P, Girshick R, He K M, Hariharan B, Belongie S. Feature pyramid networks for object detection. arXiv preprint arXiv: 1612.03144, 2016.

|

|

[106]

|

Mildenhall B, Srinivasan P P, Tancik M, Barron J T, Ramamoorthi R, Ng R. NeRF: Representing scenes as neural radiance fields for view synthesis. In: Proceedings of the 16th European Conference. Glasgow, UK: Springer, 2020.

|

|

[107]

|

Shen W, Yang G, Yu A L, Wong J, Kaelbling L P, Isola P. Distilled feature fields enable few-shot language-guided manipulation. arXiv preprint arXiv: 2308.07931, 2023.Shen W, Yang G, Yu A L, Wong J, Kaelbling L P, Isola P. Distilled feature fields enable few-shot language-guided manipulation. arXiv preprint arXiv: 2308.07931, 2023.

|

|

[108]

|

Kerbl B, Kopanas G, Leimkuehler T, Drettakis G. 3D Gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics (TOG), 2023, 42(4): Article No. 139

|

|

[109]

|

Fei B, Xu J Y, Zhang R, Zhou Q Y, Yang W D, He Y. 3D Gaussian as a new era: A survey. arXiv preprint arXiv: 2402.07181, 2024.Fei B, Xu J Y, Zhang R, Zhou Q Y, Yang W D, He Y. 3D Gaussian as a new era: A survey. arXiv preprint arXiv: 2402.07181, 2024.

|

|

[110]

|

Kerr J, Kim C M, Goldberg K, Kanazawa A, Tancik M. LERF: Language embedded radiance fields. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). Paris, France: IEEE, 2023.

|

|

[111]

|

Matsuki H, Murai R, Kelly P H J, Davison A J. Gaussian splatting SLAM. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2024.

|

|

[112]

|

Zhu S T, Qin R J, Wang G M, Liu J M, Wang H S. SemGauss-SLAM: Dense semantic Gaussian splatting SLAM. arXiv preprint arXiv: 2403.07494, 2024.Zhu S T, Qin R J, Wang G M, Liu J M, Wang H S. SemGauss-SLAM: Dense semantic Gaussian splatting SLAM. arXiv preprint arXiv: 2403.07494, 2024.

|

|

[113]

|

Hassanin M, Khan S, Tahtali M. Visual affordance and function understanding: A survey. ACM Computing Surveys (CSUR), 2022, 54(3): Article No. 47

|

|

[114]

|

Cui Y C, Niekum S, Gupta A, Kumar V, Rajeswaran A. Can foundation models perform zero-shot task specification for robot manipulation? In: Proceedings of the 4th Learning for Dynamics and Control Conference. Stanford, USA: PMLR, 2022.

|

|

[115]

|

Mandi Z, Bharadhwaj H, Moens V, Song S, Rajeswaran A, Kumar V. CACTI: A framework for scalable multi-task multi-scene visual imitation learning. arXiv preprint arXiv: 2212.05711, 2022.Mandi Z, Bharadhwaj H, Moens V, Song S, Rajeswaran A, Kumar V. CACTI: A framework for scalable multi-task multi-scene visual imitation learning. arXiv preprint arXiv: 2212.05711, 2022.

|

|

[116]

|

Yu T H, Xiao T, Tompson J, Stone A, Wang S, Brohan A, et al. Scaling robot learning with semantically imagined experience. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.Yu T H, Xiao T, Tompson J, Stone A, Wang S, Brohan A, et al. Scaling robot learning with semantically imagined experience. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, South Korea: 2023.

|

|

[117]

|

Siciliano B, Sciavicco L, Villani L, Oriolo G. Robotics: Modelling, Planning and Control. London: Springer, 2009.

|

|

[118]

|

Wei J, Wang X Z, Schuurmans D, Bosma M, Ichter B, Xia F, et al. Chain-of-thought prompting elicits reasoning in large language models. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022.

|

|

[119]

|

Minderer M, Gritsenko A, Stone A, Neumann M, Weissenborn D, Dosovitskiy A, et al. Simple open-vocabulary object detection. In: Proceedings of the 17th European Conference. Tel Aviv, Israel: Springer, 2022.

|

|

[120]

|

Harris C R, Millman K J, van der Walt S J, Gommers R, Virtanen P, Cournapeau D, et al. Array programming with NumPy. Nature, 2020, 585(7825): 357−362 doi: 10.1038/s41586-020-2649-2

|

|

[121]

|

Zhang R R, Han J M, Liu C, Gao P, Zhou A J, Hu X F, et al. LLaMA-adapter: Efficient fine-tuning of language models with zero-init attention. arXiv preprint arXiv: 2303.16199, 2023.Zhang R R, Han J M, Liu C, Gao P, Zhou A J, Hu X F, et al. LLaMA-adapter: Efficient fine-tuning of language models with zero-init attention. arXiv preprint arXiv: 2303.16199, 2023.

|

|

[122]

|

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017.

|

|

[123]

|

Cer D, Yang Y F, Kong S Y, Hua N, Limtiaco N, John R S, et al. Universal sentence encoder. arXiv preprint arXiv: 1803.11175, 2018.Cer D, Yang Y F, Kong S Y, Hua N, Limtiaco N, John R S, et al. Universal sentence encoder. arXiv preprint arXiv: 1803.11175, 2018.

|

|

[124]

|

Tan M X, Le Q V. EfficientNet: Rethinking model scaling for convolutional neural networks. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019.

|

|

[125]

|

Perez E, Strub F, de Vries H, Dumoulin V, Courville A C. FiLM: Visual reasoning with a general conditioning layer. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI Press, 2018.

|

|

[126]

|

Chen X, Djolonga J, Padlewski P, Mustafa B, Changpinyo S, Wu J L, et al. PaLI-X: On scaling up a multilingual vision and language model. arXiv preprint arXiv: 2305.18565, 2023.Chen X, Djolonga J, Padlewski P, Mustafa B, Changpinyo S, Wu J L, et al. PaLI-X: On scaling up a multilingual vision and language model. arXiv preprint arXiv: 2305.18565, 2023.

|

|

[127]

|

Kingma D P, Welling M. Auto-encoding variational Bayes. In: Proceedings of the 2nd International Conference on Learning Representations. Banff, Canada: 2014.Kingma D P, Welling M. Auto-encoding variational Bayes. In: Proceedings of the 2nd International Conference on Learning Representations. Banff, Canada: 2014.

|

|

[128]

|

Devlin J, Chang M W, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv: 1810.04805, 2019.

|

|

[129]

|

Fu Z, Zhao T Z, Finn C. Mobile ALOHA: Learning bimanual mobile manipulation with low-cost whole-body teleoperation. arXiv preprint arXiv: 2401.02117v1, 2024.

|

|

[130]

|

James S, Ma Z C, Arrojo D R, Davison A J. RLBench: The robot learning benchmark and learning environment. IEEE Robotics and Automation Letters, 2020, 5(2): 3019−3026 doi: 10.1109/LRA.2020.2974707

|

|

[131]

|

Xia F, Shen W B, Li C S, Kasimbeg P, Tchapmi M E, Toshev A, et al. Interactive Gibson benchmark: A benchmark for interactive navigation in cluttered environments. IEEE Robotics and Automation Letters, 2020, 5(2): 713−720 doi: 10.1109/LRA.2020.2965078

|

|

[132]

|

Shridhar M, Thomason J, Gordon D, Bisk Y, Han W, Mottaghi R, et al. ALFRED: A benchmark for interpreting grounded instructions for everyday tasks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020.

|

|

[133]

|

Puig X, Ra K, Boben M, Li J M, Wang T W, Fidler S, et al. VirtualHome: Simulating household activities via programs. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018.

|

|

[134]

|

Gan C, Zhou S Y, Schwartz J, Alter S, Bhandwaldar A, Gutfreund D, et al. The ThreeDWorld transport challenge: A visually guided task-and-motion planning benchmark towards physically realistic embodied AI. In: Proceedings of the International Conference on Robotics and Automation (ICRA). Philadelphia, USA: IEEE, 2022.

|

|

[135]

|

Weihs L, Deitke M, Kembhavi A, Mottaghi R. Visual room rearrangement. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, USA: IEEE, 2021.

|

|

[136]

|

Makoviychuk V, Wawrzyniak L, Guo Y R, Lu M, Storey K, Macklin M, et al. Isaac gym: High performance GPU based physics simulation for robot learning. In: Proceedings of the 1st Neural Information Processing Systems Track on Datasets and Benchmarks. 2021.Makoviychuk V, Wawrzyniak L, Guo Y R, Lu M, Storey K, Macklin M, et al. Isaac gym: High performance GPU based physics simulation for robot learning. In: Proceedings of the 1st Neural Information Processing Systems Track on Datasets and Benchmarks. 2021.

|

|

[137]

|

Wang L R, Ling Y Y, Yuan Z C, Shridhar M, Bao C, Qin Y Z, et al. GenSim: Generating robotic simulation tasks via large language models. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: OpenReview.net, 2024.

|

|

[138]

|

Chi C, Xu Z J, Feng S Y, Cousineau E, Du Y L, Burchfiel B, et al. Diffusion policy: Visuomotor policy learning via action diffusion. arXiv preprint arXiv: 2303.04137, 2024.

|

|

[139]

|

Zhou Z X, Ning X F, Hong K, Fu T Y, Xu J M, Li S Y, et al. A survey on efficient inference for large language models. arXiv preprint arXiv: 2404.14294, 2024.Zhou Z X, Ning X F, Hong K, Fu T Y, Xu J M, Li S Y, et al. A survey on efficient inference for large language models. arXiv preprint arXiv: 2404.14294, 2024.

|

|

[140]

|

Ahn M, Dwibedi D, Finn C, Arenas M G, Gopalakrishnan K, Hausman K, et al. AutoRT: Embodied foundation models for large scale orchestration of robotic agents. arXiv preprint arXiv: 2401.12963, 2024.Ahn M, Dwibedi D, Finn C, Arenas M G, Gopalakrishnan K, Hausman K, et al. AutoRT: Embodied foundation models for large scale orchestration of robotic agents. arXiv preprint arXiv: 2401.12963, 2024.

|

下载:

下载: