An Entity Linking Method for Microblog Based on Semantic Categorization by Word Embeddings

-

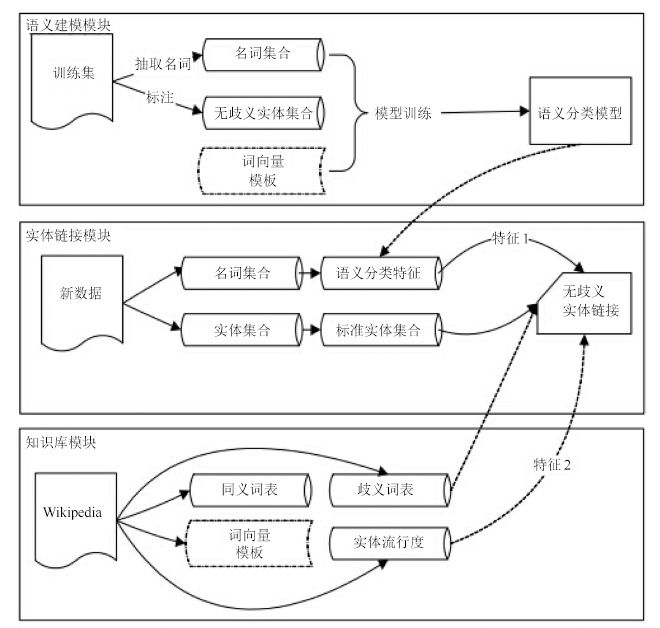

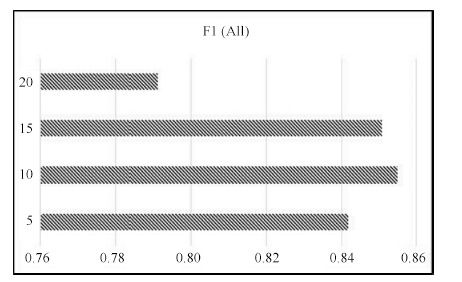

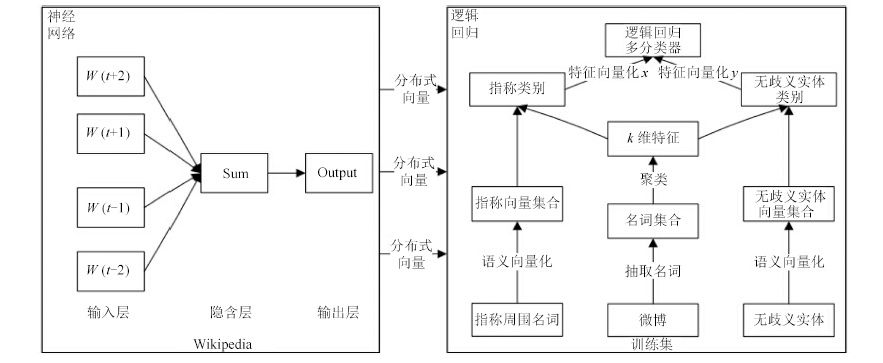

摘要: 微博实体链接是把微博中给定的指称链接到知识库的过程,广泛应用于信息抽取、自动问答等自然语言处理任务(Natural language processing,NLP). 由于微博内容简短,传统长文本实体链接的算法并不能很好地用于微博实体链接任务. 以往研究大都基于实体指称及其上下文构建模型进行消歧,难以识别具有相似词汇和句法特征的候选实体. 本文充分利用指称和候选实体本身所含有的语义信息,提出在词向量层面对任务进行抽象建模,并设计一种基于词向量语义分类的微博实体链接方法. 首先通过神经网络训练词向量模板,然后通过实体聚类获得类别标签作为特征,再通过多分类模型预测目标实体的主题类别来完成实体消歧. 在NLPCC2014公开评测数据集上的实验结果表明,本文方法的准确率和召回率均高于此前已报道的最佳结果,特别是实体链接准确率有显著提升.Abstract: As a widely applied task in natural language processing (NLP), named entity linking (NEL) is to link a given mention to an unambiguous entity in knowledge base. NEL plays an important role in information extraction and question answering. Since contents of microblog are short, traditional algorithms for long texts linking do not fit the microblog linking task well. Precious studies mostly constructed models based on mentions and its context to disambiguate entities, which are difficult to identify candidates with similar lexical and syntactic features. In this paper, we propose a novel NEL method based on semantic categorization through abstracting in terms of word embeddings, which can make full use of semantic involved in mentions and candidates. Initially, we get the word embeddings through neural network and cluster the entities as features. Then, the candidates are disambiguated through predicting the categories of entities by multiple classifiers. Lastly, we test the method on dataset of NLPCC2014, and draw the conclusion that the proposed method gets a better result than the best known work, especially on accurancy.

-

Key words:

- Word embedding /

- entity linking /

- social media processing /

- neural network /

- multiple classifiers

-

表 1 训练集数据统计

Table 1 Statistics in training data

平均每条微博中名词个数 7.91 同一语义类别名词个数超过7的微博 34 同一语义类别名词个数超过6的微博 81 同一语义类别名词个数超过5的微博 207 同一语义类别名词个数超过4的微博 416 同一语义类别名词个数超过3的微博 502 表 2 同义词表举例

Table 2 Examples of synonym lexicon

文中实体表示(Key) 标准实体表示(Value) 迈克尔乔丹 飞人 篮球之神 迈克尔·杰弗里·乔丹 迈克尔·乔丹 Michael Jordan Michael Jeffrey Jordan 乔丹 表 3 歧义词表举例

Table 3 Examples of ambiguity lexicon

标准实体表示(Key) 无歧义真实实体(List) 苹果(果树) 苹果(果实) 苹果 苹果(公司) 苹果(人物) 苹果(动漫角色) 苹果(歌曲) 表 4 实体流行度表举例

Table 4 Examples of entity frequency

无歧义真实实体(Entity) 实体出现次数(Frequency) 苹果(果树) 26 苹果(果实) 39 苹果(公司) 158 苹果(人物) 2 表 5 实体流行度权值

Table 5 Weights of entity frequency

流行度排行 一 二 三 四 五 权值 1 0.8 0.7 0.6 0.5 表 6 实验数据规模

Table 6 Scale of experiment data

数据类型 数据规模 同义词表Key总数 4 293 406 同义词表Value总数 1 948 277 歧义词表Key总数 213 764 歧义词表Value总数 2 354 687 实体总数 4 369 348 表 7 in-KB 实验结果

Table 7 Results of in-KB

系统 准确率 召回率 F1 值 NLPCC 0.7927 0.8488 0.8198 SCWE+EF 0.8137 0.8593 0.8358 EF* 0.7641 0.8142 0.7884 CMEL 0.7951 0.8345 0.8143 表 8 NIL 实验结果

Table 8 Results of NIL

系统 准确率 召回率 F1 值 NLPCC 0.9024 0.8653 0.8835 SCWE+EF 0.9144 0.8763 0.8949 EF* 0.8871 0.8648 0.8758 CMEL 0.8543 0.8694 0.8461 表 9 in-KB 实验结果

Table 9 Results of in-KB

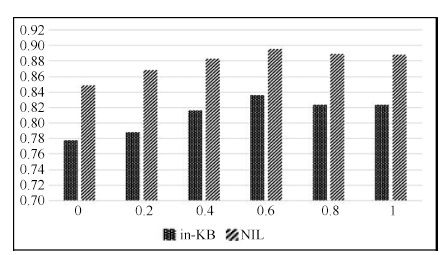

λ值 准确率 召回率 F1 值 0 0.7532 0.8016 0.7766 0.2 0.7621 0.8158 0.788 0.4 0.7943 0.8375 0.8153 0.6 0.8137 0.8593 0.8358 0.8 0.8032 0.8432 0.8227 1 0.7983 0.8488 0.8228 表 10 NIL 实验结果

Table 10 Results of NIL

λ值 准确率 召回率 F1 值 0 0.8432 0.8532 0.8482 0.2 0.8643 0.8713 0.8678 0.4 0.8917 0.8732 0.8824 0.6 0.9148 0.8762 0.8951 0.8 0.9032 0.8754 0.8891 1 0.9013 0.8743 0.8876 -

[1] (中国微博服务. 2014年新浪微博用户发展报告[Online], available: http://www.199it.com/archives/324955.html. November 24, 2015)Chinese Microblog Service. Sina Weibo User Development Report in 2014[Online], available:http://www.199it.com/archives/324955.html. November 24, 2015 [2] Guo Y H, Qin B, Liu T, Li S. Microblog entity linking by leveraging extra posts. In: Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing. Seattle, USA: Association for Computational Linguistic, 2013. 863-868 [3] 杨锦锋, 于秋滨, 关毅, 蒋志鹏. 电子病历命名实体识别和实体关系抽取研究综述. 自动化学报, 2014, 40(8): 1537-1562Yang Jin-Feng, Yu Qiu-Bin, Guan Yi, Jiang Zhi-Peng. An overview of research on electronic medical record oriented named entity recognition and entity relation extraction. Acta Automatica Sinica, 2014, 40(8): 1537-1562 [4] Shen W, Wang J Y, Han J W. Entity linking with a knowledge base: issues, techniques, and solutions. IEEE Transactions on Knowledge and Data Engineering, 2015, 27(2): 443-460 [5] Jiang L, Yu M, Zhou M, Liu X H, Zhao T J. Target-dependent twitter sentiment classification. In: Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies. Portland, Oregon, USA: 2011. 151-160 [6] Shen W, Wang J Y, Luo P, Wang M. Linking named entities in tweets with knowledge base via user interest modeling. In: Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York, USA: ACM, 2013. 68-76 [7] Liu X H, Li Y T, Wu H C, Zhou M, Wei F R, Lu Y. Entity linking for tweets. In: Proceedings of the 51st Annual Meeting of the Association of Computational Linguistics. Sofia, Bulgaria: Association for Computational Linguistics, 2013. 1304-1311 [8] 乌达巴拉, 汪增福. 一种基于组合语义的文本情绪分析模型. 自动化学报, 2015, 41(12): 2125-2137Odbal, Wang Zeng-Fu. Emotion analysis model using compositional semantics. Acta Automatica Sinica, 2015, 41(12): 2125-2137 [9] NLPCC[Online], available:http://tcci.ccf.org.cn/conference/2014/pages/page04_sam.html. October 31, 2015 [10] Hachey B, Radford W, Nothman J, Honnibal M, Curran J R. Evaluating entity linking with Wikipedia. Artificial Intelligence, 2013, 194: 130-150 [11] Mikolov T, Chen K, Corrado G, Dean J. Efficient estimation of word representations in vector space. arXiv: 1301.3781, 2013. [12] Hartigan J A, Wong M A. Algorithm AS 136: a k-means clustering algorithm. Journal of the Royal Statistical Society——Series C (Applied Statistics), 1979, 28(1): 100-108 [13] Fernández-Delgado M, Cernadas E, Barro S, Amorim D. Do we need hundreds of classifiers to solve real world classification problems? Journal of Machine Learning Research, 2014, 15: 3133-3181 [14] 毛毅, 陈稳霖, 郭宝龙, 陈一昕. 基于密度估计的逻辑回归模型. 自动化学报, 2014, 40(1): 62-72Mao Yi, Chen Wen-Lin, Guo Bao-Long, Chen Yi-Xin. A novel logistic regression model based on density estimation. Acta Automatica Sinica, 2014, 40(1): 62-72 [15] 周晓剑. 考虑梯度信息的ε-支持向量回归机. 自动化学报, 2014, 40(12): 2908-2915Zhou Xiao-Jian. Enhancing varepsilon-support vector regression with gradient information. Acta Automatica Sinica, 2014, 40(12): 2908-2915 [16] King G, Zeng L C. Logistic regression in rare events data. Political Analysis, 2001, 9(2): 137-163 [17] Guo Y H, Qin B, Li Y Q, Liu T, Lin S. Improving candidate generation for entity linking. In: Proceedings of the 18th International Conference on Applications of Natural Language to Information Systems. Salford, UK: Springer, 2013. 225-236 [18] Wikipedia[Online], available:http://download.wikipedia.comzhwikilate-stzhwiki-latest-pages-articles.xml.bz2. October 31, 2015 [19] 朱敏, 贾真, 左玲, 吴安峻, 陈方正, 柏玉. 中文微博实体链接研究. 北京大学学报(自然科学版), 2014, 50(1): 73-78Zhu Min, Jia Zhen, Zuo Ling, Wu An-Jun, Chen Fang-Zheng, Bai Yu. Research on entity linking of Chinese microblog. Acta Scientiarum Naturalium Universitatis Pekinensis, 2014, 50(1): 73-78 [20] 郭宇航. 基于上下文的实体链指技术研究[博士学位论文], 哈尔滨工业大学, 中国, 2014.Guo Yu-Hang. Research on Context-based Entity Linking Technique[Ph.,D. dissertation], Harbin Institute of Technology, China, 2014. [21] Meng Z Y, Yu D, Xun E D. Chinese microblog entity linking system combining Wikipedia and search engine retrieval results. In: Proceedings of the 3rd CCF Conference on Natural Language Processing and Chinese Computing. Berlin Heidelberg: Springer, 2014. 449-456 -

下载:

下载: