|

[1]

|

Etzioni O. Search needs a shake-up. Nature, 2011, 476(7358) : 25-26

|

|

[2]

|

Lehmann J, Isele R, Jakob M, Jentzsch A, Kontokostas D, Mendes P N, Hellmann S, Morsey M, van Kleef P, Auer S, Bizer C. DBpedia - a large-scale, multilingual knowledge base extracted from Wikipedia. Semantic Web, 2015, 6(2) : 167-195

|

|

[3]

|

Bollacker K, Evans C, Paritosh P, Sturge T, Taylor J. Freebase: a collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data. Vancouver, Canada: ACM, 2008. 1247-1250

|

|

[4]

|

Suchanek F M, Kasneci G, Weikum G. YAGO: a core of semantic knowledge. In: Proceedings of the 16th International Conference on World Wide Web. Banff, Canada: ACM, 2007. 697-706

|

|

[5]

|

Kwiatkowski T, Zettlemoyer L, Goldwater S, Steedman M. Lexical generalization in CCG grammar induction for semantic parsing. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Edinburgh, UK: Association for Computational Linguistics, 2011. 1512-1523

|

|

[6]

|

Liang P, Jordan M I, Klein D. Learning dependency-based compositional semantics. Computational Linguistics, 2013, 39(2) : 389-446

|

|

[7]

|

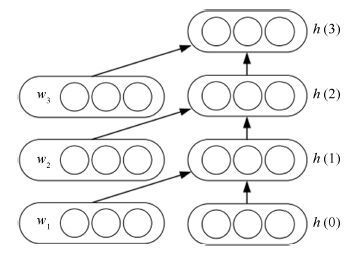

Socher R, Perelygin A, Wu J Y, Chuang J, Manning C D, Ng A Y, Potts C. Recursive deep models for semantic compositionality over a sentiment treebank. In: Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing. Seattle, USA: Association for Computational Linguistics, 2013. 1631-1642

|

|

[8]

|

Cho K, Van Merriënboer B, Gülçehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing. Doha, Qatar: Association for Computational Linguistics, 2014. 1724-1734

|

|

[9]

|

Socher R, Manning C D, Ng A Y. Learning continuous phrase representations and syntactic parsing with recursive neural networks. In: Proceedings of the NIPS-2010 Deep Learning and Unsupervised Feature Learning Workshop. Vancouver, Canada, 2010. 1-9

|

|

[10]

|

Bordes A, Weston J, Usunier N. Open question answering with weakly supervised embedding models. Machine Learning and Knowledge Discovery in Databases. Berlin Heidelberg: Springer, 2014. 165-180

|

|

[11]

|

Bordes A, Chopra S, Weston J. Question answering with subgraph embeddings. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing. Doha, Qatar: Association for Computational Linguistics, 2014. 615-620

|

|

[12]

|

Dong L, Wei F R, Zhou M, Xu K. Question answering over Freebase with multi-column convolutional neural networks. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. Beijing, China: ACL, 2015. 260-269

|

|

[13]

|

Nickel M, Tresp V, Kriegel H P. A three-way model for collective learning on multi-relational data. In: Proceedings of the 28th International Conference on Machine Learning. Bellevue, WA, USA, 2011. 809-816

|

|

[14]

|

Nickel M, Tresp V, Kriegel H P. Factorizing YAGO: scalable machine learning for linked data. In: Proceedings of the 21st International Conference on World Wide Web. Lyon, France: ACM, 2012. 271-280

|

|

[15]

|

Nickel M, Tresp V. Logistic tensor factorization for multi-relational data. In: Proceedings of the 30th International Conference on Machine Learning. Atlanta, USA, 2013

|

|

[16]

|

Nickel M, Tresp V. Tensor factorization for multi-relational learning. Machine Learning and Knowledge Discovery in Databases. Berlin Heidelberg: Springer, 2013. 617-621

|

|

[17]

|

Nickel M, Murphy K, Tresp V, Gabrilovich E. A review of relational machine learning for knowledge graphs. Proceedings of the IEEE, 2016, 104(1) : 11-33

|

|

[18]

|

Bordes A, Weston J, Collobert R, Bengio Y. Learning structured embeddings of knowledge bases. In: Proceedings of the 25th AAAI Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2011. 301-306

|

|

[19]

|

Bordes A, Glorot X, Weston J, Bengio Y. Joint learning of words and meaning representations for open-text semantic parsing. In: Proceedings of the 15th International Conference on Artificial Intelligence and Statistics. Cadiz, Spain, 2012. 127-135

|

|

[20]

|

Bordes A, Glorot X, Weston J, Bengio Y. A semantic matching energy function for learning with multi-relational data. Machine Learning, 2014, 94(2) : 233-259

|

|

[21]

|

Jenatton R, Le Roux N, Bordes A, Obozinski G. A latent factor model for highly multi-relational data. In: Proceedings of Advances in Neural Information Processing Systems 25. Lake Tahoe, Nevada, United States: Curran Associates, Inc., 2012. 3167-3175

|

|

[22]

|

Sutskever I, Salakhutdinov R, Tenenbaum J B. Modelling relational data using Bayesian clustered tensor factorization. In: Proceedings of Advances in Neural Information Processing Systems 22. Vancouver, Canada, 2009. 1821-1828

|

|

[23]

|

Socher R, Chen D Q, Manning C D, Ng A Y. Reasoning with neural tensor networks for knowledge base completion. In: Proceedings of Advances in Neural Information Processing Systems 26. Stateline, USA: MIT Press, 2013. 926-934

|

|

[24]

|

Bordes A, Usunier N, García-Durán A, Weston J, Yakhnenko O. Translating embeddings for modeling multi-relational data. In: Proceedings of Advances in Neural Information Processing Systems 26. Stateline, USA: MIT Press, 2013. 2787-2795

|

|

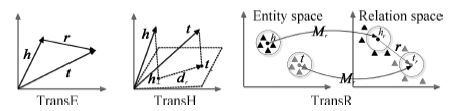

[25]

|

Wang Z, Zhang J W, Feng J L, Chen Z. Knowledge graph embedding by translating on hyperplanes. In: Proceedings of the 28th AAAI Conference on Artificial Intelligence. Québec, Canada: AAAI, 2014. 1112-1119

|

|

[26]

|

Lin Y, Zhang J, Liu Z, Sun M, Liu Y, Zhu X. Learning entity and relation embeddings for knowledge graph completion. In: Proceedings of the 29th AAAI Conference on Artificial Intelligence. Austin, USA: AAAI, 2015. 2181-2187

|

|

[27]

|

Ji G L, He S Z, Xu L H, Liu K, Zhao J. Knowledge graph embedding via dynamic mapping matrix. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics. Beijing, China: Association for Computational Linguistics, 2015. 687-696

|

|

[28]

|

Lin Y K, Liu Z Y, Luan H B, Sun M S, Rao S W, Liu S. Modeling relation paths for representation learning of knowledge bases. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal: Association for Computational Linguistics, 2015. 705-714

|

|

[29]

|

Guo S, Wang Q, Wang B, Wang L H, Guo L. Semantically smooth knowledge graph embedding. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. Beijing, China: Association for Computational Linguistics, 2015. 84-94

|

|

[30]

|

He S Z, Liu K, Ji G L, Zhao J. Learning to represent knowledge graphs with Gaussian embedding. In: Proceedings of the 24th ACM International Conference on Information and Knowledge Management. Melbourne, Australia: ACM, 2015. 623-632

|

|

[31]

|

Frege G. Über Sinn und Bedeutung. Wittgenstein Studien, 1892, 100: 25-50

|

|

[32]

|

Hermann K M. Distributed Representations for Compositional Semantics [Ph.D. dissertation], University of Oxford, 2014

|

|

[33]

|

Socher R, Karpathy A, Le Q V, Manning C D, Ng A Y. Grounded compositional semantics for finding and describing images with sentences. Transactions of the Association for Computational Linguistics, 2014, 2: 207-218

|

|

[34]

|

Socher R, Huang E H, Pennington J, Manning C D, Ng A Y. Dynamic pooling and unfolding recursive autoencoders for paraphrase detection. In: Proceedings of Advances in Neural Information Processing Systems 24. Granada, Spain, 2011. 801-809

|

|

[35]

|

Socher R, Pennington J, Huang E H, Ng A Y, Manning C D. Semi-supervised recursive autoencoders for predicting sentiment distributions. In: Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing. Edinburgh, UK: Association for Computational Linguistics, 2011. 151-161

|

|

[36]

|

Socher R, Lin C C Y, Ng A Y, Manning C D. Parsing natural scenes and natural language with recursive neural networks. In: Proceedings of the 28th International Conference on Machine Learning. Bellevue, WA, USA, 2011. 129-136

|

|

[37]

|

Socher R, Bauer J, Manning C D, Ng A Y. Parsing with compositional vector grammars. In: Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics. Sofia, Bulgaria: Association for Computational Linguistics, 2013. 455-465

|

|

[38]

|

Mitchell J, Lapata M. Composition in distributional models of semantics. Cognitive Science, 2010, 34(8) : 1388-1429

|

|

[39]

|

Socher R, Huval B, Manning C D, Ng A Y. Semantic compositionality through recursive matrix-vector spaces. In: Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning. Jeju Island, Korea: Association for Computational Linguistics, 2012. 1201-1211

|

|

[40]

|

Elman J L. Finding structure in time. Cognitive Science, 1990, 14(2) : 179-211

|

|

[41]

|

Bengio Y, Simard P, Frasconi P. Learning long-term dependencies with gradient descent is difficult. IEEE Transactions on Neural Networks, 1994, 5(2) : 157-166

|

|

[42]

|

Hochreiter S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 1998, 6(2) : 107-116

|

|

[43]

|

Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9(8) : 1735-1780

|

|

[44]

|

Fukushima K. Neocognitron: a self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 1980, 36(4) : 193-202

|

|

[45]

|

LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 1998, 86(11) : 2278-2324

|

|

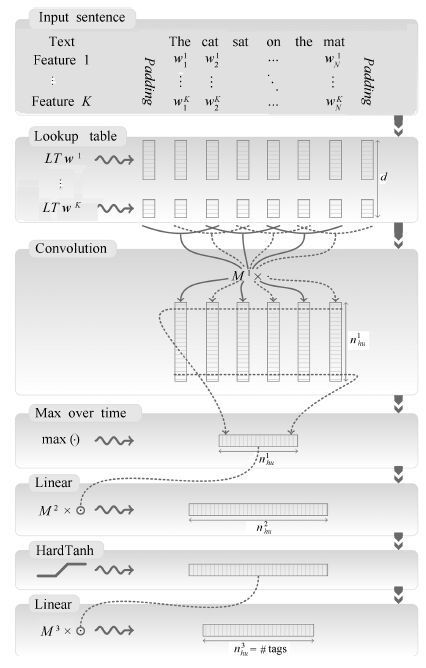

[46]

|

Collobert R, Weston J, Bottou L, Karlen M, Kavukcuoglu K, Kuksa P. Natural language processing (almost) from scratch. The Journal of Machine Learning Research, 2011, 12: 2493-2537

|

|

[47]

|

Kalchbrenner N, Grefenstette E, Blunsom P. A convolutional neural network for modelling sentences. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics. Baltimore, USA: Association for Computational Linguistics, 2014. 655-665

|

|

[48]

|

Kim Y. Convolutional neural networks for sentence classification. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing. Doha, Qatar: Association for Computational Linguistics, 2014. 1746-1751

|

|

[49]

|

Zeng D H, Liu K, Lai S W, Zhou G Y, Zhao J. Relation classification via convolutional deep neural network. In: Proceedings of the 25th International Conference on Computational Linguistics. Dublin, Ireland: Association for Computational Linguistics, 2014. 2335-2344

|

|

[50]

|

Fader A, Soderland S, Etzioni O. Identifying relations for open information extraction. In: Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing. Edinburgh, UK: Association for Computational Linguistics, 2011. 1535-1545

|

|

[51]

|

Yih W T, He X D, Meek C. Semantic parsing for single-relation question answering. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics. Baltimore, USA: Association for Computational Linguistics, 2014. 643-648

|

|

[52]

|

Fader A, Zettlemoyer L S, Etzioni O. Paraphrase-driven learning for open question answering. In: Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics. Sofia, Bulgaria: Association for Computational Linguistics, 2013. 1608-1618

|

|

[53]

|

Berant J, Liang P. Semantic parsing via paraphrasing. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics. Baltimore, USA: Association for Computational Linguistics, 2014. 1415-1425

|

|

[54]

|

Yao X C, Van Durme B. Information extraction over structured data: question answering with Freebase. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics. Baltimore, USA: Association for Computational Linguistics, 2014. 956-966

|

|

[55]

|

Zhao H, Lu Z D, Poupart P. Self-adaptive hierarchical sentence model. In: Proceedings of the 24th International Joint Conference on Artificial Intelligence. Buenos Aires, Argentina: AAAI, 2015. 4069-4076

|

|

[56]

|

Luong M T, Pham H, Manning C D. Effective approaches to attention-based neural machine translation. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal: ACL, 2015. 1412-1421

|

|

[57]

|

Fader A, Zettlemoyer L, Etzioni O. Open question answering over curated and extracted knowledge bases. In: Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York, USA: ACM, 2014. 1156-1165

|
