A Review of Intelligent Data Collaborative Inference Techniques for Source-grid-load Systems

-

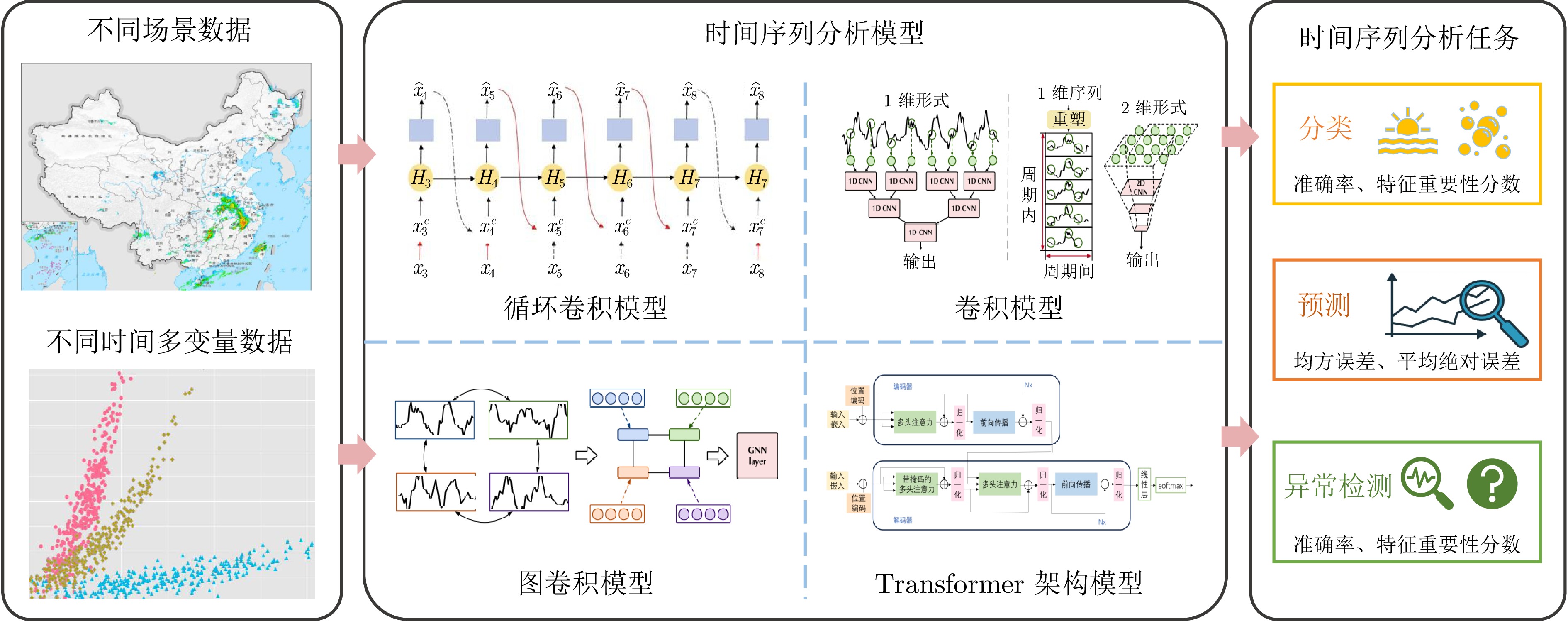

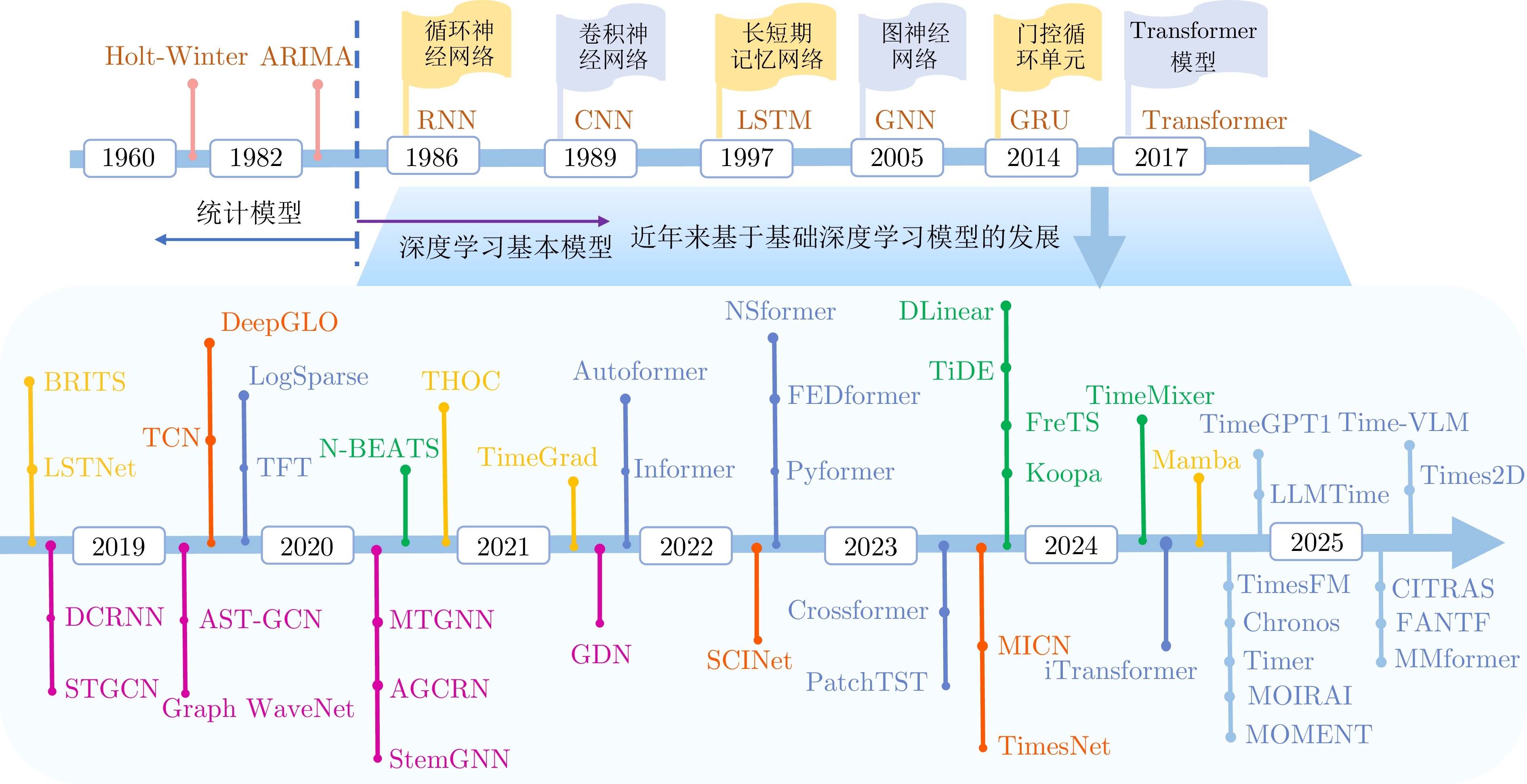

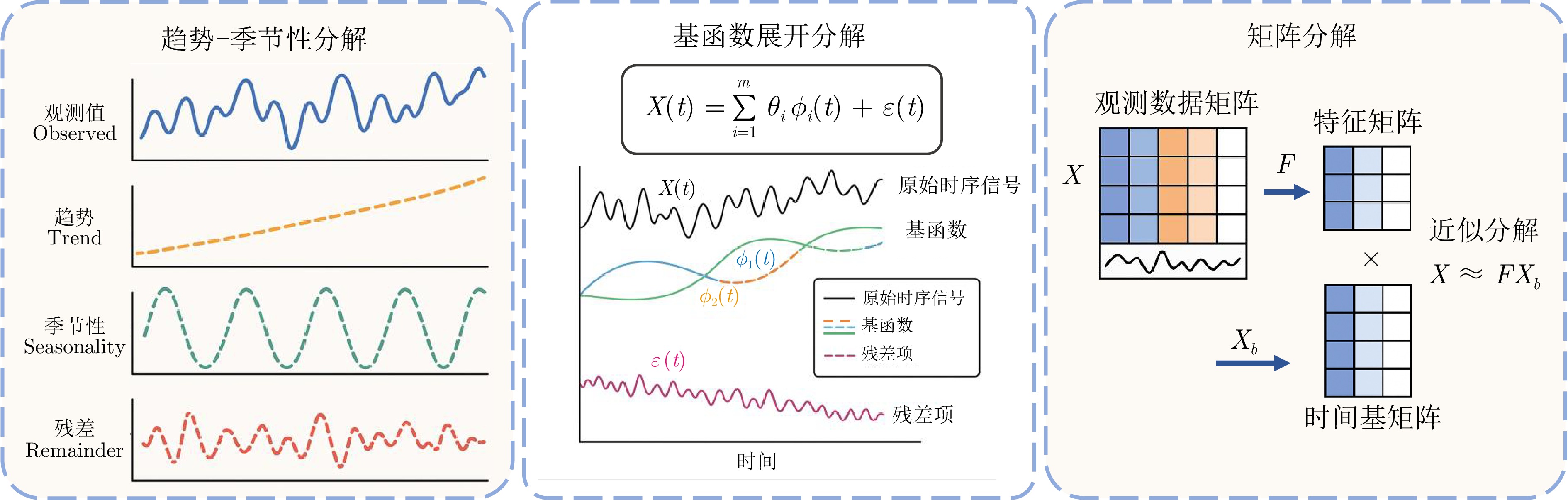

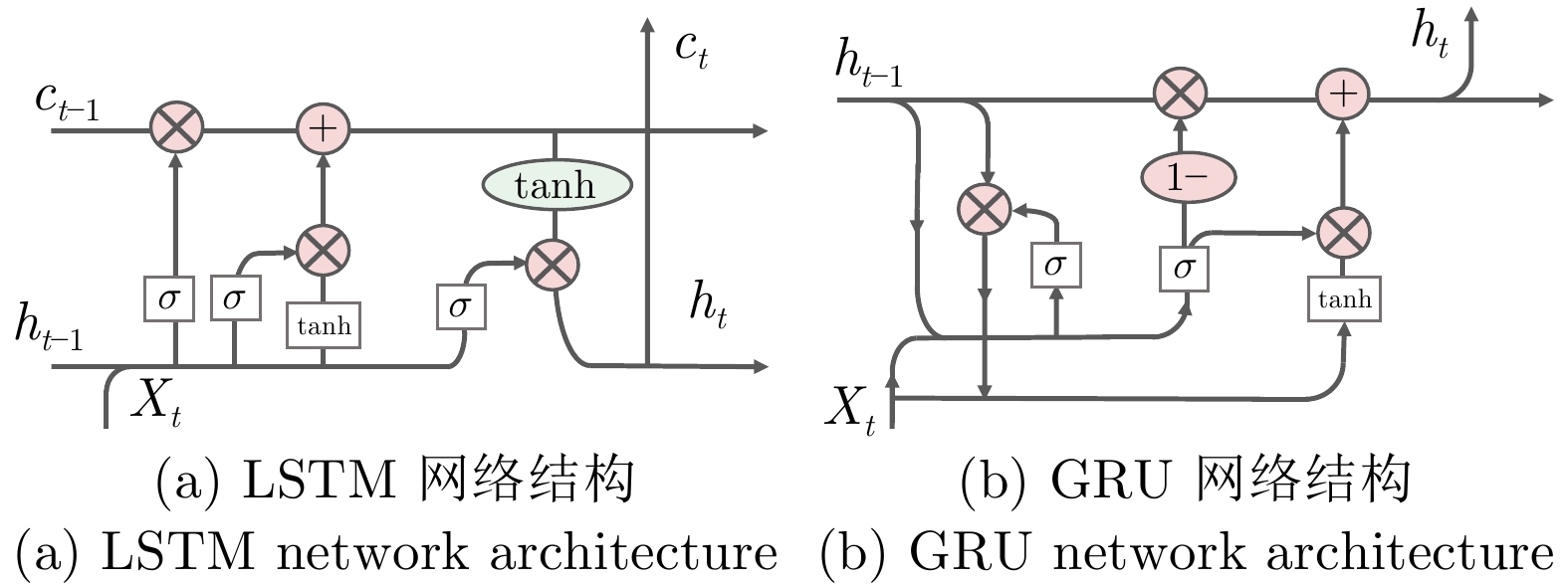

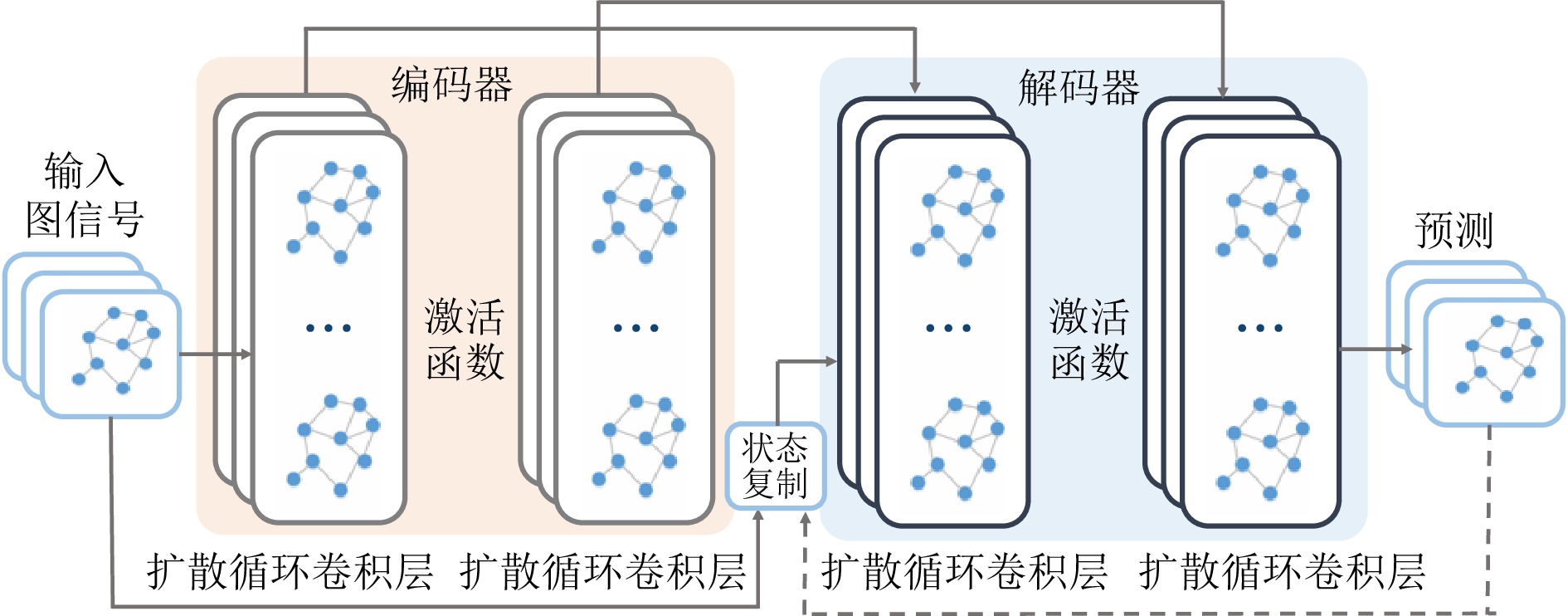

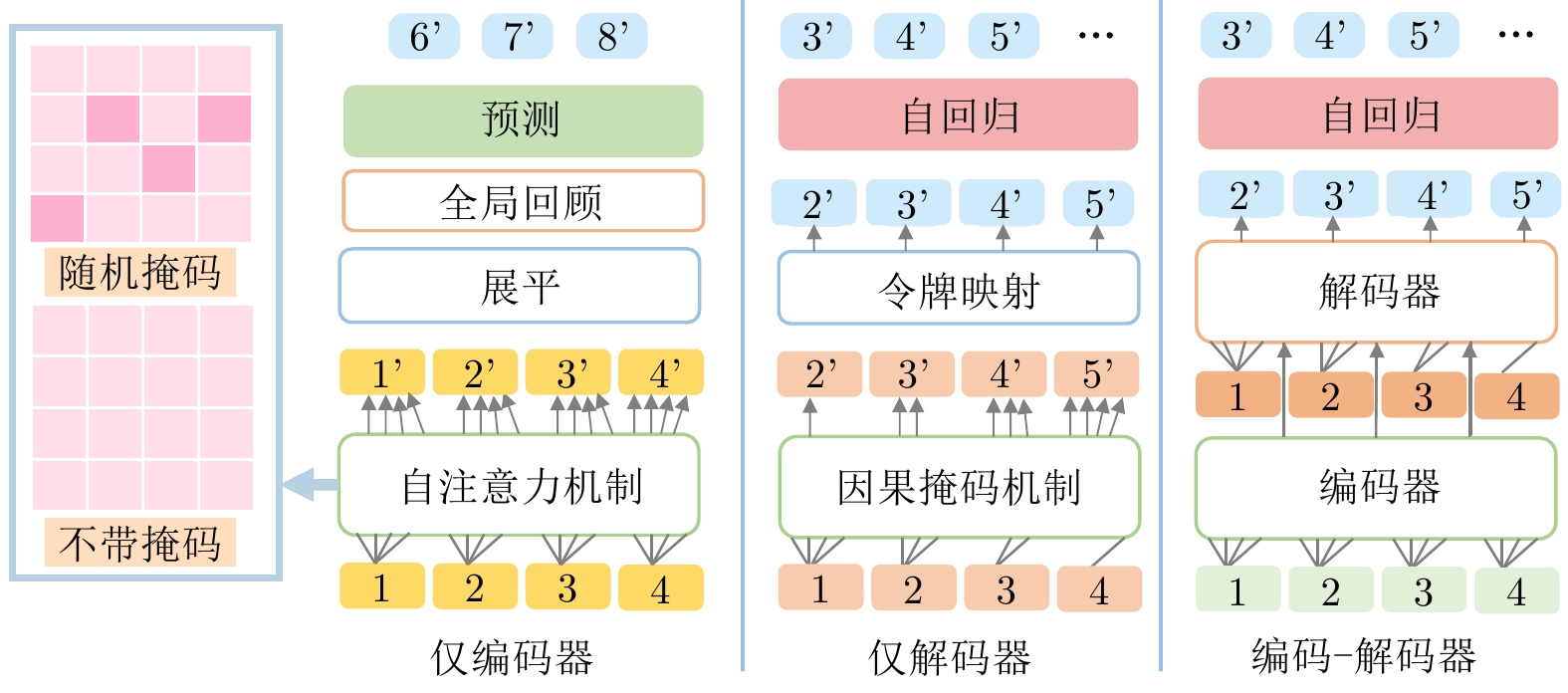

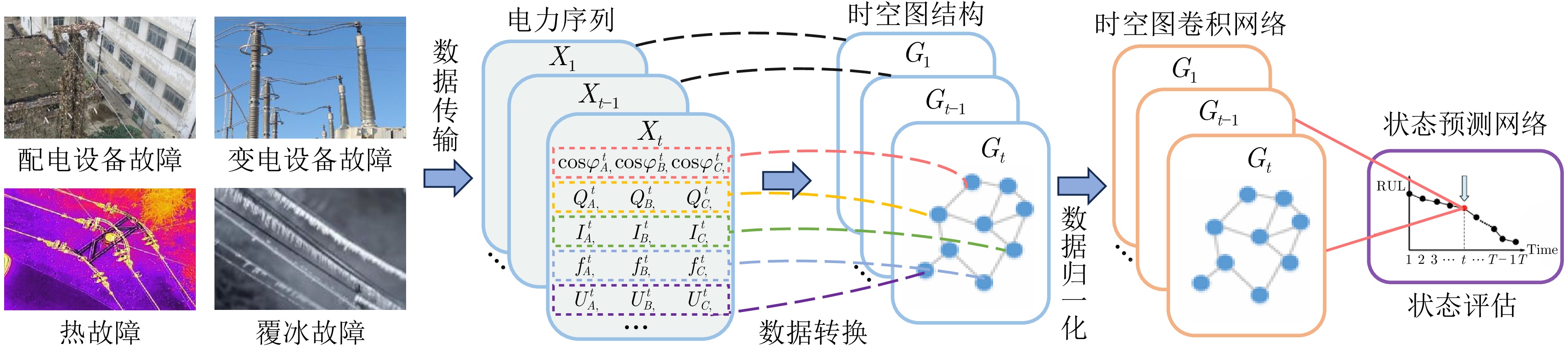

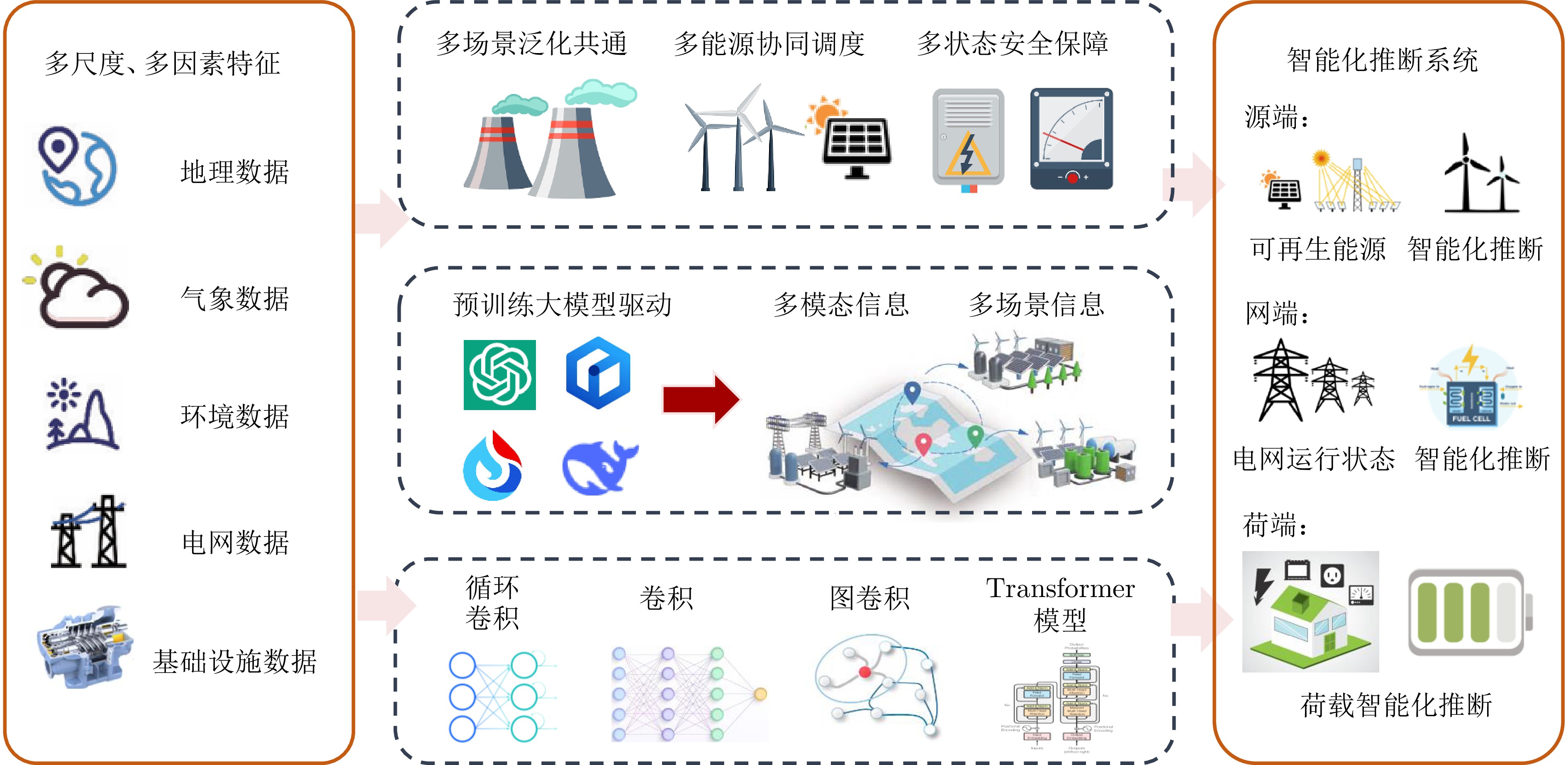

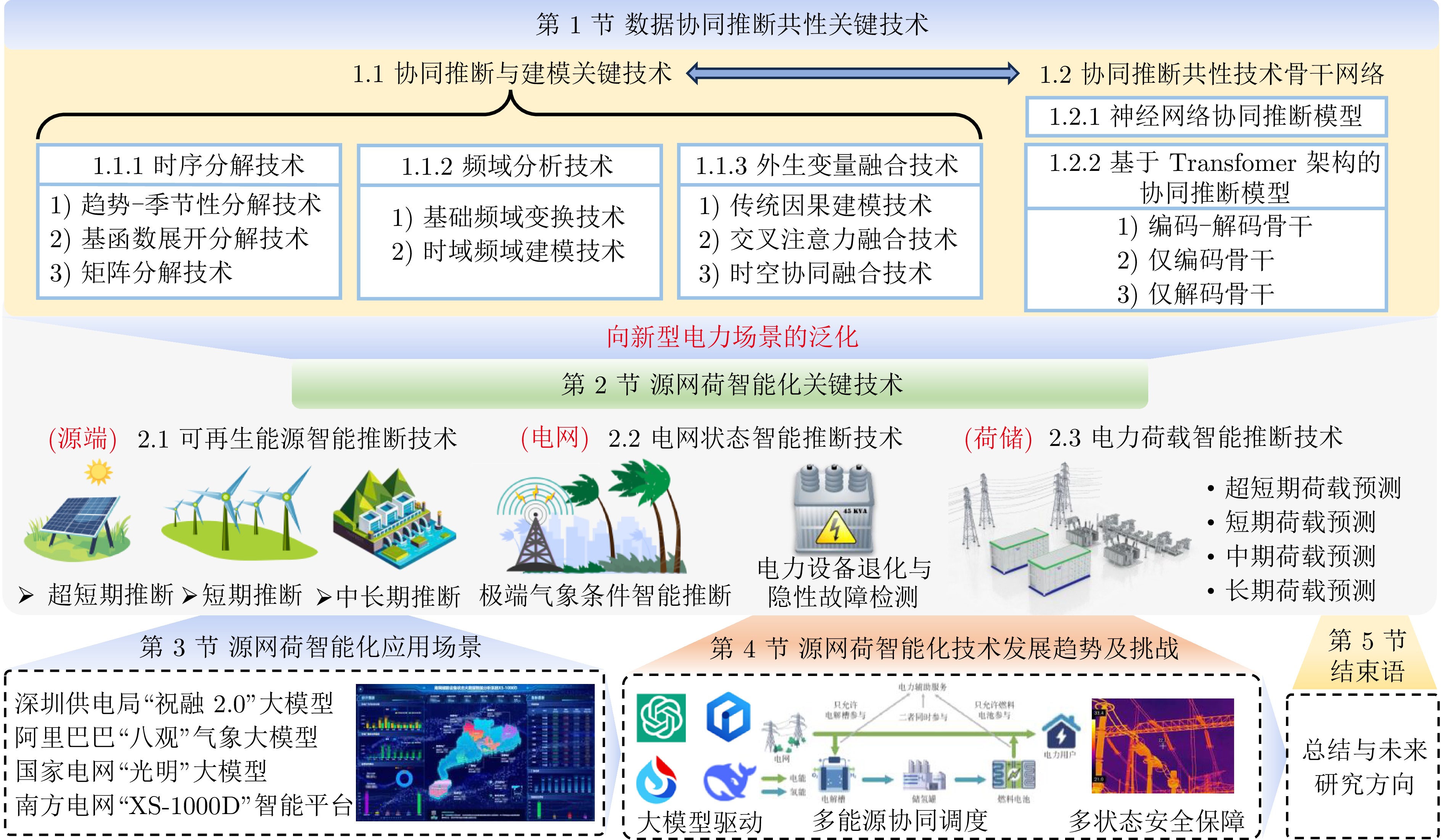

Abstract: As the share of grid-connected renewable energy continues to rise, wind power, photovoltaics, and other new energy sources place higher demands on the stability and intelligent dispatch of power systems. Under source-grid-load-storage integration, efficiently using multi-source heterogeneous power data for accurate forecasting and collaborative analysis has become a key problem. In recent years, deep learning, big data, and large models have driven rapid progress in intelligent inference techniques. This paper first reviews, from the perspective of deep learning, the state of research on common techniques for collaborative inference on time series data, focusing on trend-seasonality decomposition, frequency-domain modeling, and exogenous variable fusion, and surveys time series models built on different architectures. It then discusses key intelligent technologies for source-grid-load systems, outlining the main technical approaches in typical scenarios such as intelligent forecasting, state assessment, and load scheduling, and analyzes their specific applications. Finally, in light of increasingly complex power systems, it offers an outlook on future directions for data collaborative inference.


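Table 1 Cross-attention-based exogenous variable fusion methods

| Method | Core mechanism | Characteristics |
| --- | --- | --- |
| Crossformer[12] | Retains spatiotemporal structure through two-dimensional segment-wise (DSW) embedding, and uses a two-stage attention (TSA) module to alternately capture cross-time and cross-dimension dependencies | Hierarchical encoder-decoder structure extracts multi-scale spatiotemporal features; parameter sharing reduces model complexity |
| ExoTST[47] | Treats historical exogenous variables, current exogenous variables, and the main series as three input modalities, fused layer by layer through hierarchical multi-head cross-attention modules | Robust to missing values and noise; dynamically integrates heterogeneous multi-source driving signals |
| TimeXer[48] | Patch-level self-attention first models local temporal dependencies; variable-level cross-attention then brings in the exogenous variables | Dual-stream architecture accommodates both local and global information; adapts to multimodal input and distribution shift |
| TGForecaster[49] | Uses textual descriptions and numerical series jointly as queries and keys/values, achieving multimodal fusion through cross-attention | Supports scenario-specific forecasting; improves interpretability and context awareness |
| CATS[50] | Drops self-attention and keeps only future-to-history cross-attention, capturing predictive dependencies with parameter sharing and learnable queries | Simplified architecture, low parameter and memory cost, strong long-horizon forecasting accuracy |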
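
The methods in Table 1 share one building block: tokens of the target series act as queries, and tokens of the exogenous variables supply the keys and values. The following is a minimal NumPy sketch of that generic single-head cross-attention step, not the implementation of any specific model in the table. The function names, the token counts, and the random projection matrices are all illustrative assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=axis, keepdims=True)


def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    queries: (n_q, d_model) tokens of the target (endogenous) series
    context: (n_c, d_model) tokens of the exogenous variables
    Returns the fused tokens (n_q, d_head) and the attention map (n_q, n_c).
    """
    q = queries @ w_q          # project target tokens to queries
    k = context @ w_k          # project exogenous tokens to keys
    v = context @ w_v          # project exogenous tokens to values
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)   # each query distributes weight over exogenous tokens
    return attn @ v, attn


# Hypothetical sizes: 6 patch tokens of the target series, 3 exogenous variables
# (for example temperature, wind speed, irradiance), each embedded as one token.
rng = np.random.default_rng(0)
d_model, d_head = 16, 8
n_patches, n_exog = 6, 3

endo_tokens = rng.normal(size=(n_patches, d_model))
exog_tokens = rng.normal(size=(n_exog, d_model))
# Random matrices stand in for learned projection weights.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) * 0.1 for _ in range(3))

fused, attn = cross_attention(endo_tokens, exog_tokens, w_q, w_k, w_v)
print(fused.shape, attn.shape)
```

Each row of `attn` sums to one, so it can be read as how strongly a given target token draws on each exogenous variable. In a trained model the projections would be learned and the fused tokens would typically be added back to the target tokens through a residual connection.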

Table 2 Spatiotemporal collaborative inference model based on CNN architecture

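Table 3 AI-based large-scale meteorological models

| Model name | Model structure | Forecast lead time | Spatial resolution | Forecast range |
| --- | --- | --- | --- | --- |
| FuXi[147] | Cascaded machine learning weather forecasting system | Medium-range, 15 d | 0.25° | 6 h |
| FengWu[148] | Multimodal multi-task Transformer | Medium-range, 10.75 d | 0.25° | 6 h |
| GraphCast[149] | Machine learning + graph neural network | Medium-range, 10 d | 0.25° | 6 h |
| Pangu-Weather[2] | 3D multi-scale Transformer | Short- and medium-range, 1 h ~ 7 d | 0.25° | 6 h |
| NowcastNet[150] | Physics-constrained deep generative model | Nowcasting, 3 h | 20 km | 10 min |
| SwinVRNN[151] | Recurrent neural network + Transformer | Medium-range | 5.6250° | 6 h |
| FourCastNet[152] | Adaptive Fourier operator Transformer | Short- and medium-range | 0.25° | 6 h |
| DLWP[153] | Convolutional neural network | Short- and medium-range | 1.9° | 6 h |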

[1] Elkin C, Witherspoon S. Machine learning can boost the value of wind energy [Online], available: https://deepmind.google/discover/blog/machine-learning-can-boost-the-value-of-wind-energy/, July 10, 2025

[2] Bi K F, Xie L X, Zhang H H, Chen X, Gu X T, Tian Q. Pangu-Weather: A 3D high-resolution model for fast and accurate global weather forecast. arXiv preprint arXiv: 2211.02556, 2022.

[3] Wen G H, Hu G Q, Hu J Q, Shi X L, Chen G R. Frequency regulation of source-grid-load systems: A compound control strategy. IEEE Transactions on Industrial Informatics, 2016, 12(1): 69−78 doi: 10.1109/TII.2015.2496309

[4] Wen G H, Yu X H, Liu Z W, Yu W W. Adaptive consensus-based robust strategy for economic dispatch of smart grids subject to communication uncertainties. IEEE Transactions on Industrial Informatics, 2018, 14(6): 2484−2496 doi: 10.1109/TII.2017.2772088

[5] Rangapuram S S, Seeger M, Gasthaus J, Stella L, Wang Y Y, Januschowski T. Deep state space models for time series forecasting. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: Curran Associates Inc., 2018. 7796−7805

[6] Li S Y, Jin X Y, Xuan Y, Zhou X Y, Chen W H, Wang Y X, et al. Enhancing the locality and breaking the memory bottleneck of Transformer on time series forecasting. In: Proceedings of the 33rd International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2019. Article No. 471

[7] Bai S J, Kolter J Z, Koltun V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv: 1803.01271, 2018.

[8] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017. 6000−6010

[9] Wang Y X, Wu H X, Dong J X, Liu Y, Long M S, Wang J M. Deep time series models: A comprehensive survey and benchmark. arXiv preprint arXiv: 2407.13278, 2024.

[10] Wen Q S, Gao J K, Song X M, Sun L, Xu H, Zhu S H. RobustSTL: A robust seasonal-trend decomposition algorithm for long time series. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 5409−5416

[11] Wu H X, Xu J H, Wang J M, Long M S. Autoformer: Decomposition Transformers with auto-correlation for long-term series forecasting. In: Proceedings of the 35th International Conference on Neural Information Processing Systems. Virtual Event: Curran Associates Inc., 2021. Article No. 1717

[12] Zhang Y H, Yan J C. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[13] Cao D F, Jia F R, Arik S O, Pfister T, Zheng Y X, Ye W, et al. TEMPO: Prompt-based generative pre-trained Transformer for time series forecasting. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[14] Du D Z, Su B, Wei Z W. Preformer: Predictive Transformer with multi-scale segment-wise correlations for long-term time series forecasting. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Rhodes Island, Greece: IEEE, 2023. 1−5

[15] Bandara K, Hyndman R J, Bergmeir C. MSTL: A seasonal-trend decomposition algorithm for time series with multiple seasonal patterns. International Journal of Operational Research, 2025, 52(1): 79−98 doi: 10.1504/IJOR.2025.143957

[16] Oreshkin B N, Carpov D, Chapados N, Bengio Y. N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. In: Proceedings of the 8th International Conference on Learning Representations. Addis Ababa, Ethiopia: ICLR, 2020.

[17] Challu C, Olivares K G, Oreshkin B N, Ramirez F G, Canseco M M, Dubrawski A. NHITS: Neural hierarchical interpolation for time series forecasting. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI, 2023. 6989−6997

[18] Fan W, Zheng S, Yi X H, Cao W, Fu Y J, Bian J, et al. DEPTS: Deep expansion learning for periodic time series forecasting. In: Proceedings of the 10th International Conference on Learning Representations. Virtual Event: ICLR, 2022.

[19] Xiong L, Chen X, Huang T K, Schneider J, Carbonell J G. Temporal collaborative filtering with Bayesian probabilistic tensor factorization. In: Proceedings of the 10th SIAM International Conference on Data Mining. Columbus, USA: SIAM, 2010. 211−222

[20] Yu H F, Rao N, Dhillon I S. Temporal regularized matrix factorization for high-dimensional time series prediction. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 847−855

[21] Takeuchi K, Kashima H, Ueda N. Autoregressive tensor factorization for spatio-temporal predictions. In: Proceedings of the IEEE International Conference on Data Mining (ICDM). New Orleans, USA: IEEE, 2017. 1105−1110

[22] Chen X Y, Zhang C Y, Zhao X L, Saunier N, Sun L J. Nonstationary temporal matrix factorization for multivariate time series forecasting. arXiv preprint arXiv: 2203.10651, 2022.

[23] Chen X Y, Sun L J. Bayesian temporal factorization for multidimensional time series prediction. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(9): 4659−4673

[24] Sen R, Yu H F, Dhillon I. Think globally, act locally: A deep neural network approach to high-dimensional time series forecasting. In: Proceedings of the 33rd International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2019. Article No. 435

[25] Kawabata K, Bhatia S, Liu R, Wadhwa M, Hooi B. SSMF: Shifting seasonal matrix factorization. In: Proceedings of the 35th International Conference on Neural Information Processing Systems. Virtual Event: Curran Associates Inc., 2021. Article No. 295

[26] Cui X L, Shi L, Zhong W, Zou C L. Robust high-dimensional low-rank matrix estimation: Optimal rate and data-adaptive tuning. The Journal of Machine Learning Research, 2023, 24(1): Article No. 350

[27] Luan Z T, Sun D F, Wang H N, Zhang L P. Efficient online prediction for high-dimensional time series via joint tensor Tucker decomposition. arXiv preprint arXiv: 2403.18320, 2024.

[28] Sneddon I N. Fourier Transforms. New York: Dover Publications, 1995.

[29] Xu Z J, Zeng A L, Xu Q. FITS: Modeling time series with 10k parameters. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[30] Zhou T, Ma Z Q, Wen Q S, Wang X, Sun L, Jin R. FEDformer: Frequency enhanced decomposed Transformer for long-term series forecasting. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 27268−27286

[31] Farge M. Wavelet transforms and their applications to turbulence. Annual Review of Fluid Mechanics, 1992, 24: 395−458 doi: 10.1146/annurev.fl.24.010192.002143

[32] Liu M H, Zeng A L, Chen M X, Xu Z J, Lai Q X, Ma L N, et al. SCINet: Time series modeling and forecasting with sample convolution and interaction. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 421

[33] Wu Z H, Huang N E. Ensemble empirical mode decomposition: A noise-assisted data analysis method. Advances in Adaptive Data Analysis, 2009, 1(1): 1−41 doi: 10.1142/S1793536909000047

[34] Dragomiretskiy K, Zosso D. Variational mode decomposition. IEEE Transactions on Signal Processing, 2014, 62(3): 531−544 doi: 10.1109/TSP.2013.2288675

[35] Zhou T, Ma Z Q, Wang X, Wen Q S, Sun L, Yao T, et al. FiLM: Frequency improved Legendre memory model for long-term time series forecasting. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 921

[36] Yang Z J, Yan W W, Huang X L, Mei L. Adaptive temporal-frequency network for time-series forecasting. IEEE Transactions on Knowledge and Data Engineering, 2022, 34(4): 1576−1587

[37] Zhang C L, Zhou T, Wen Q S, Sun L. TFAD: A decomposition time series anomaly detection architecture with time-frequency analysis. In: Proceedings of the 31st ACM International Conference on Information & Knowledge Management. Atlanta, USA: ACM, 2022. 2497−2507

[38] Yao S C, Piao A L, Jiang W J, Zhao Y R, Shao H J, Liu S Z, et al. STFNets: Learning sensing signals from the time-frequency perspective with short-time Fourier neural networks. In: Proceedings of the World Wide Web Conference. San Francisco, USA: ACM, 2019. 2192−2202

[39] Cao D F, Wang Y J, Duan J Y, Zhang C, Zhu X, Huang C, et al. Spectral temporal graph neural network for multivariate time-series forecasting. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2020. Article No. 1491

[40] Yi K, Zhang Q, Fan W, Wang S J, Wang P Y, He H, et al. Frequency-domain MLPs are more effective learners in time series forecasting. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 3349

[41] Wang Z X, Pei C H, Ma M H, Wang X, Li Z H, Pei D, et al. Revisiting VAE for unsupervised time series anomaly detection: A frequency perspective. In: Proceedings of the ACM Web Conference 2024. Singapore: ACM, 2024. 3096−3105

[42] Eldele E, Ragab M, Chen Z H, Wu M, Li X L. TSLANet: Rethinking Transformers for time series representation learning. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 494

[43] Xu T, Wang R J, Meng H, Li M C, Ji Y, Zhang Y, et al. Grid frequency regulation through virtual power plant of integrated energy systems with energy storage. IET Renewable Power Generation, 2024, 18(14): 2277−2293 doi: 10.1049/rpg2.13068

[44] Xing C, Xi X Z, He X, Deng C, Zhang M Q. Collaborative source-grid-load frequency regulation strategy for DC sending-end power grid considering electrolytic aluminum participation. Frontiers in Energy Research, 2024, 12: Article No. 1486319 doi: 10.3389/fenrg.2024.1486319

[45] Box G E P, Jenkins G M, Reinsel G C, Ljung G M. Time Series Analysis: Forecasting and Control (Fifth Edition). Hoboken: John Wiley & Sons, 2015.

[46] Qin Y, Song D J, Chen H F, Cheng W, Jiang G F, Cottrell G W. A dual-stage attention-based recurrent neural network for time series prediction. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence. Melbourne, Australia: IJCAI, 2017. 2627−2633

[47] Tayal K, Renganathan A, Jia X W, Kumar V, Lu D. ExoTST: Exogenous-aware temporal sequence Transformer for time series prediction. In: Proceedings of the IEEE International Conference on Data Mining (ICDM). Abu Dhabi, United Arab Emirates: IEEE, 2024. 857−862

[48] Wang Y X, Wu H X, Dong J X, Qin G, Zhang H R, Liu Y, et al. TimeXer: Empowering Transformers for time series forecasting with exogenous variables. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2025. Article No. 15

[49] Xu Z J, Bian Y X, Zhong J Y, Wen X Y, Xu Q. Beyond trend and periodicity: Guiding time series forecasting with textual cues. arXiv preprint arXiv: 2405.13522, 2024.

[50] Lu J C, Han X, Sun Y, Yang S H. CATS: Enhancing multivariate time series forecasting by constructing auxiliary time series as exogenous variables. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 1339

[51] Zhu J W, Wang Q J, Tao C, Deng H H, Zhao L, Li H F. AST-GCN: Attribute-augmented spatiotemporal graph convolutional network for traffic forecasting. IEEE Access, 2021, 9: 35973−35983 doi: 10.1109/ACCESS.2021.3062114

[52] O'Donncha F, Hu Y H, Palmes P, Burke M, Filgueira R, Grant J. A spatio-temporal LSTM model to forecast across multiple temporal and spatial scales. Ecological Informatics, 2022, 69: Article No. 101687 doi: 10.1016/j.ecoinf.2022.101687

[53] Miraki A, Parviainen P, Arghandeh R. Electricity demand forecasting at distribution and household levels using explainable causal graph neural network. Energy and AI, 2024, 16: Article No. 100368 doi: 10.1016/j.egyai.2024.100368

[54] Wang Q P, Feng S B, Han M. Causal graph convolution neural differential equation for spatio-temporal time series prediction. Applied Intelligence, 2025, 55(7): Article No. 671 doi: 10.1007/s10489-025-06287-7

[55] Yang K Y, Shi F H. Medium-and long-term load forecasting for power plants based on causal inference and Informer. Applied Sciences, 2023, 13(13): Article No. 7696 doi: 10.3390/app13137696

[56] Elman J L. Finding structure in time. Cognitive Science, 1990, 14(2): 179−211 doi: 10.1207/s15516709cog1402_1

[57] Yin W P, Kann K, Yu M, Schütze H. Comparative study of CNN and RNN for natural language processing. arXiv preprint arXiv: 1702.01923, 2017.

[58] Purwins H, Li B, Virtanen T, Schlüter J, Chang S Y, Sainath T. Deep learning for audio signal processing. IEEE Journal of Selected Topics in Signal Processing, 2019, 13(2): 206−219 doi: 10.1109/JSTSP.2019.2908700

[59] Wen R F, Torkkola K, Narayanaswamy B, Madeka D. A multi-horizon quantile recurrent forecaster. arXiv preprint arXiv: 1711.11053, 2017.

[60] Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9(8): 1735−1780 doi: 10.1162/neco.1997.9.8.1735

[61] Chung J, Gulcehre C, Cho K, Bengio Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv: 1412.3555, 2014.

[62] Salinas D, Flunkert V, Gasthaus J, Januschowski T. DeepAR: Probabilistic forecasting with autoregressive recurrent networks. International Journal of Forecasting, 2020, 36(3): 1181−1191 doi: 10.1016/j.ijforecast.2019.07.001

[63] Lai G K, Chang W C, Yang Y M, Liu H X. Modeling long-and short-term temporal patterns with deep neural networks. In: Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval. Ann Arbor, USA: ACM, 2018. 95−104

[64] LeCun Y, Bengio Y, Hinton G. Deep learning. Nature, 2015, 521(7553): 436−444 doi: 10.1038/nature14539

[65] Tan M X, Le Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 6105−6114

[66] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 3431−3440

[67] Ding M Y, Huo Y Q, Yi H W, Wang Z, Shi J P, Lu Z W, et al. Learning depth-guided convolutions for monocular 3D object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 11669−11678

[68] Liu C L, Hsaio W H, Tu Y C. Time series classification with multivariate convolutional neural network. IEEE Transactions on Industrial Electronics, 2019, 66(6): 4788−4797 doi: 10.1109/TIE.2018.2864702

[69] Lea C, Vidal R, Reiter A, Hager G D. Temporal convolutional networks: A unified approach to action segmentation. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 47−54

[70] Wang H Q, Peng J, Huang F H, Wang J C, Chen J H, Xiao Y F. MICN: Multi-scale local and global context modeling for long-term series forecasting. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[71] Luo D H, Wang X. ModernTCN: A modern pure convolution structure for general time series analysis. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[72] Wu H X, Hu T G, Liu Y, Zhou H, Wang J M, Long M S. TimesNet: Temporal 2D-variation modeling for general time series analysis. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[73] Nematirad R, Pahwa A, Natarajan B. Times2D: Multi-period decomposition and derivative mapping for general time series forecasting. In: Proceedings of the 39th AAAI Conference on Artificial Intelligence. Philadelphia, USA: AAAI, 2025. 19651−19658

[74] Scarselli F, Gori M, Tsoi A C, Hagenbuchner M, Monfardini G. The graph neural network model. IEEE Transactions on Neural Networks, 2009, 20(1): 61−80 doi: 10.1109/TNN.2008.2005605

[75] Li Y G, Yu R, Shahabi C, Liu Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, Canada: ICLR, 2018.

[76] Yu B, Yin H T, Zhu Z X. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. In: Proceedings of the 27th International Joint Conference on Artificial Intelligence. Stockholm, Sweden: IJCAI, 2018. 3634−3640

[77] Wu Z H, Pan S R, Long G D, Jiang J, Zhang C Q. Graph WaveNet for deep spatial-temporal graph modeling. In: Proceedings of the 28th International Joint Conference on Artificial Intelligence. Macao, China: IJCAI, 2019. 1907−1913

[78] Bai L, Yao L N, Li C, Wang X Z, Wang C. Adaptive graph convolutional recurrent network for traffic forecasting. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2020. Article No. 1494

[79] Liu S, Ji H, Wang M C. Nonpooling convolutional neural network forecasting for seasonal time series with trends. IEEE Transactions on Neural Networks and Learning Systems, 2020, 31(8): 2879−2888 doi: 10.1109/TNNLS.2019.2934110

[80] Wang Z G, Yan W Z, Oates T. Time series classification from scratch with deep neural networks: A strong baseline. In: Proceedings of the International Joint Conference on Neural Networks (IJCNN). Anchorage, USA: IEEE, 2017. 1578−1585

[81] Gao J K, Song X M, Wen Q S, Wang P C, Sun L, Xu H. RobustTAD: Robust time series anomaly detection via decomposition and convolutional neural networks. arXiv preprint arXiv: 2002.09545, 2020.

[82] Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X H, Unterthiner T, et al. An image is worth 16×16 words: Transformers for image recognition at scale. In: Proceedings of the 9th International Conference on Learning Representations. Virtual Event: ICLR, 2021.

[83] Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I, et al. Language models are unsupervised multitask learners. OpenAI Blog, 2019, 1(8): Article No. 9

[84] Drouin A, Marcotte É, Chapados N. TACTiS: Transformer-attentional copulas for time series. In: Proceedings of the 39th International Conference on Machine Learning. Baltimore, USA: PMLR, 2022. 5447−5493

[85] Das A, Kong W, Leach A, Mathur S, Sen R, Yu R. Long-term forecasting with TiDE: Time-series dense encoder. arXiv preprint arXiv: 2304.08424, 2024.

[86] Liu S Z, Yu H, Liao C, Li J G, Lin W Y, Liu A X, et al. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In: Proceedings of the 10th International Conference on Learning Representations. Virtual Event: ICLR, 2022.

[87] Zhou H Y, Zhang S H, Peng J Q, Zhang S, Li J X, Xiong H, et al. Informer: Beyond efficient Transformer for long sequence time-series forecasting. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence. Virtual Event: AAAI, 2021. 11106−11115

[88] Deng Wen-Li. Research on Travel Time Prediction Method Based on Improved Multi-head Attention Transformer [Master thesis], Harbin Institute of Technology, China, 2023.

[89] Liang Y X, Wen H M, Nie Y Q, Jiang Y S, Jin M, Song D J, et al. Foundation models for time series analysis: A tutorial and survey. In: Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. Barcelona, Spain: ACM, 2024. 6555−6565

[90] Nie Y Q, Nguyen N H, Sinthong P, Kalagnanam J. A time series is worth 64 words: Long-term forecasting with Transformers. In: Proceedings of the 11th International Conference on Learning Representations. Kigali, Rwanda: ICLR, 2023.

[91] Woo G, Liu C H, Kumar A, Xiong C M, Savarese S, Sahoo D. Unified training of universal time series forecasting Transformers. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 2178

[92] Goswami M, Szafer K, Choudhry A, Cai Y F, Li S, Dubrawski A. MOMENT: A family of open time-series foundation models. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 642

[93] Liu Y, Hu T G, Zhang H R, Wu H X, Wang S Y, Ma L T, et al. iTransformer: Inverted Transformers are effective for time series forecasting. In: Proceedings of the 12th International Conference on Learning Representations. Vienna, Austria: ICLR, 2024.

[94] Zhong S R, Ruan W L, Jin M, Li H, Wen Q S, Liang Y X. Time-VLM: Exploring multimodal vision-language models for augmented time series forecasting. arXiv preprint arXiv: 2502.04395, 2025.

[95] Tay Y, Dehghani M, Bahri D, Metzler D. Efficient Transformers: A survey. ACM Computing Surveys, 2022, 55(6): Article No. 109

[96] Radford A, Narasimhan K, Salimans T, Sutskever I. Improving Language Understanding by Generative Pre-Training, Technical Report, OpenAI, USA, 2018.

[97] Das A, Kong W, Sen R, Zhou Y. A decoder-only foundation model for time-series forecasting. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 404

[98] Liu Y, Zhang H R, Li C Y, Huang X D, Wang J M, Long M S. Timer: Generative pre-trained Transformers are large time series models. In: Proceedings of the 41st International Conference on Machine Learning. Vienna, Austria: JMLR.org, 2024. Article No. 1313

[99] Liu Y, Qin G, Huang X D, Wang J M, Long M S. Timer-XL: Long-context Transformers for unified time series forecasting. In: Proceedings of the 13th International Conference on Learning Representations. Singapore: ICLR, 2025.

[100] Xue H, Salim F D. PromptCast: A new prompt-based learning paradigm for time series forecasting. IEEE Transactions on Knowledge and Data Engineering, 2024, 36(11): 6851−6864 doi: 10.1109/TKDE.2023.3342137

[101] Zhou T, Niu P S, Wang X, Sun L, Jin R. One fits all: Power general time series analysis by pretrained LM. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1877

[102] Tan M T, Merrill M A, Gupta V, Althoff T, Hartvigsen T. Are language models actually useful for time series forecasting? In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2025. Article No. 1922

[103] Liu Y, Qin G, Huang X D, Wang J M, Long M S. AutoTimes: Autoregressive time series forecasters via large language models. In: Proceedings of the 38th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2025. Article No. 3882

[104] National Energy Administration. 2024 National electric power industry statistics [Online], available: https://www.nea.gov.cn/20250121/097bfd7c1cd3498897639857d86d5dac/c.html, July 10, 2025

[105] Raza M Q, Nadarajah M, Ekanayake C. On recent advances in PV output power forecast. Solar Energy, 2016, 136: 125−144 doi: 10.1016/j.solener.2016.06.073

[106] Rajagukguk R A, Ramadhan R A A, Lee H J. A review on deep learning models for forecasting time series data of solar irradiance and photovoltaic power. Energies, 2020, 13(24): Article No. 6623 doi: 10.3390/en13246623

[107] Tsai W C, Hong C M, Tu C S, Lin W M, Chen C H. A review of modern wind power generation forecasting technologies. Sustainability, 2023, 15(14): Article No. 10757 doi: 10.3390/su151410757

[108] Dai X R, Liu G P, Hu W S. An online-learning-enabled self-attention-based model for ultra-short-term wind power forecasting. Energy, 2023, 272: Article No. 127173 doi: 10.1016/j.energy.2023.127173

[109] Wu Q Y, Guan F, Lv C, Huang Y Z. Ultra-short-term multi-step wind power forecasting based on CNN-LSTM. IET Renewable Power Generation, 2021, 15(5): 1019−1029 doi: 10.1049/rpg2.12085

[110] Xiang L, Liu J N, Yang X, Hu A J, Su H. Ultra-short term wind power prediction applying a novel model named SATCN-LSTM. Energy Conversion and Management, 2022, 252: Article No. 115036 doi: 10.1016/j.enconman.2021.115036

[111] Wei J Q, Wu X J, Yang T M, Jiao R H. Ultra-short-term forecasting of wind power based on multi-task learning and LSTM. International Journal of Electrical Power & Energy Systems, 2023, 149: Article No. 109073

[112] Li Z, Ye L, Zhao Y N, Pei M, Lu P, Li Y L, et al. A spatiotemporal directed graph convolution network for ultra-short-term wind power prediction. IEEE Transactions on Sustainable Energy, 2023, 14(1): 39−54 doi: 10.1109/TSTE.2022.3198816

[113] Liu X Y, Zhang Y R, Zhen Z, Xu F, Wang F, Mi Z Q. Spatio-temporal graph neural network and pattern prediction based ultra-short-term power forecasting of wind farm cluster. IEEE Transactions on Industry Applications, 2024, 60(1): 1794−1803 doi: 10.1109/TIA.2023.3321267

[114] Lv Y L, Hu Q, Xu H, Lin H Y, Wu Y F. An ultra-short-term wind power prediction method based on spatial-temporal attention graph convolutional model. Energy, 2024, 293: Article No. 130751 doi: 10.1016/j.energy.2024.130751

[115] Wei H, Wang W S, Kao X X. A novel approach to ultra-short-term wind power prediction based on feature engineering and Informer. Energy Reports, 2023, 9: 1236−1250 doi: 10.1016/j.egyr.2022.12.062

[116] Li Q, Ren X Y, Zhang F, Gao L, Hao B. A novel ultra-short-term wind power forecasting method based on TCN and Informer models. Computers and Electrical Engineering, 2024, 120: Article No. 109632 doi: 10.1016/j.compeleceng.2024.109632

[117] Yu M, Niu D, Gao T, Wang K, Sun L, Li M, et al. A novel framework for ultra-short-term interval wind power prediction based on RF-WOA-VMD and BiGRU optimized by the attention mechanism. Energy, 2023, 269: Article No. 126738 doi: 10.1016/j.energy.2023.126738

[118] Zeng J W, Qiao W. Short-term solar power prediction using a support vector machine. Renewable Energy, 2013, 52: 118−127 doi: 10.1016/j.renene.2012.10.009

[119] Sánchez I. Short-term prediction of wind energy production. International Journal of Forecasting, 2006, 22(1): 43−56 doi: 10.1016/j.ijforecast.2005.05.003

[120] Wang J D, Li P, Ran R, Che Y B, Zhou Y. A short-term photovoltaic power prediction model based on the gradient boost decision tree. Applied Sciences, 2018, 8(5): Article No. 689 doi: 10.3390/app8050689

[121] Rahman M M, Shakeri M, Tiong S K, Khatun F, Amin N, Pasupuleti J, et al. Prospective methodologies in hybrid renewable energy systems for energy prediction using artificial neural networks. Sustainability, 2021, 13(4): Article No. 2393 doi: 10.3390/su13042393

[122] Liao W L, Bak-Jensen B, Pillai J R, Yang Z, Liu K P. Short-term power prediction for renewable energy using hybrid graph convolutional network and long short-term memory approach. Electric Power Systems Research, 2022, 211: Article No. 108614 doi: 10.1016/j.epsr.2022.108614

[123] He Y Y, Wang Y. Short-term wind power prediction based on EEMD-LASSO-QRNN model. Applied Soft Computing, 2021, 105: Article No. 107288 doi: 10.1016/j.asoc.2021.107288

[124] Ding Y F, Chen Z J, Zhang H W, Wang X, Guo Y. A short-term wind power prediction model based on CEEMD and WOA-KELM. Renewable Energy, 2022, 189: 188−198 doi: 10.1016/j.renene.2022.02.108

[125] Chen H P, Wu H Y, Kan T Y, Zhang J H, Li H L. Low-carbon economic dispatch of integrated energy system containing electric hydrogen production based on VMD-GRU short-term wind power prediction. International Journal of Electrical Power & Energy Systems, 2023, 154: Article No. 109420

[126] Zhang D D, Chen B A, Zhu H Y, Goh H H, Dong Y X, Wu T. Short-term wind power prediction based on two-layer decomposition and BiTCN-BiLSTM-attention model. Energy, 2023, 285: Article No. 128762 doi: 10.1016/j.energy.2023.128762

[127] Elizabeth Michael N, Mishra M, Hasan S, Al-Durra A. Short-term solar power predicting model based on multi-step CNN stacked LSTM technique. Energies, 2022, 15(6): Article No. 2150 doi: 10.3390/en15062150

[128] Wu B R, Wang L, Zeng Y R. Interpretable wind speed prediction with multivariate time series and temporal fusion Transformers. Energy, 2022, 252: Article No. 123990 doi: 10.1016/j.energy.2022.123990

[129] Deng J L. Introduction to grey system theory. The Journal of Grey System, 1989, 1(1): 1−24

[130] He X B, Wang Y, Zhang Y Y, Ma X, Wu W Q, Zhang L. A novel structure adaptive new information priority discrete grey prediction model and its application in renewable energy generation forecasting. Applied Energy, 2022, 325: Article No. 119854 doi: 10.1016/j.apenergy.2022.119854

[131] Wang Y, Wang L, Ye L L, Ma X, Wu W Q, Yang Z S, et al. A novel self-adaptive fractional multivariable grey model and its application in forecasting energy production and conversion of China. Engineering Applications of Artificial Intelligence, 2022, 115: Article No. 105319 doi: 10.1016/j.engappai.2022.105319

[132] Qian W Y, Sui A. A novel structural adaptive discrete grey prediction model and its application in forecasting renewable energy generation. Expert Systems With Applications, 2021, 186: Article No. 115761 doi: 10.1016/j.eswa.2021.115761

[133] Ding S, Li R J, Tao Z. A novel adaptive discrete grey model with time-varying parameters for long-term photovoltaic power generation forecasting. Energy Conversion and Management, 2021, 227: Article No. 113644 doi: 10.1016/j.enconman.2020.113644

[134] Ren Xin, Wang Yi-Mei, Wang Hua, Zhou Li, Ge Chang, Han Shuang. Two types of medium-long-term wind power forecasting methods based on improved CNN-GRU and Informer. Modern Electric Power, 2025, 42(3): 542−549

[135] Liu Da-Gui, Wang Wei-Qing, Zhang Hui-E, Qiu Gang, Hao Hong-Yan, Li Guo-Qing, et al. Application of Markov modified combination model mid-long term available quantity of electricity forecasting in Xinjiang wind power. Power System Technology, 2020, 44(9): 3290−3296

[136] Li Q Y, Deng Y X, Liu X, Sun W, Li W T, Li J, et al. Autonomous smart grid fault detection. IEEE Communications Standards Magazine, 2023, 7(2): 40−47 doi: 10.1109/MCOMSTD.0001.2200019

[137] Deng X Z, Ye A S, Zhong J S, Xu D, Yang W W, Song Z F, et al. Bagging-XGBoost algorithm based extreme weather identification and short-term load forecasting model. Energy Reports, 2022, 8: 8661−8674 doi: 10.1016/j.egyr.2022.06.072

[138] Hichri A, Hajji M, Mansouri M, Abodayeh K, Bouzrara K, Nounou H, et al. Genetic-algorithm-based neural network for fault detection and diagnosis: Application to grid-connected photovoltaic systems. Sustainability, 2022, 14(17): Article No. 10518 doi: 10.3390/su141710518

[139] Chai E X, Zeng P L, Ma S C, Xing H, Zhao B. Artificial intelligence approaches to fault diagnosis in power grids: A review.
In: Proceedings of the Chinese Control Conference (CCC). Guangzhou, China: IEEE, 2019. 7346−7353 [140] Zhang S L, Wang Y X, Liu M Q, Bao Z J. Data-based line trip fault prediction in power systems using LSTM networks and SVM. IEEE Access, 2018, 6: 7675−7686 doi: 10.1109/ACCESS.2017.2785763 [141] Branco N W, Cavalca M S M, Stefenon S F, Leithardt V R Q. Wavelet LSTM for fault forecasting in electrical power grids. Sensors, 2022, 22(21): Article No. 8323 doi: 10.3390/s22218323 [142] Li P H, Zhang Z J, Grosu R, Deng Z W, Hou J, Rong Y J, et al. An end-to-end neural network framework for state-of-health estimation and remaining useful life prediction of electric vehicle lithium batteries. Renewable and Sustainable Energy Reviews, 2022, 156: Article No. 111843 doi: 10.1016/j.rser.2021.111843 [143] Luo J H, Zhang X. Convolutional neural network based on attention mechanism and Bi-LSTM for bearing remaining life prediction. Applied Intelligence, 2022, 52(1): 1076−1091 doi: 10.1007/s10489-021-02503-2 [144] Zhang Y, Xin Y Q, Liu Z W, Chi M, Ma G J. Health status assessment and remaining useful life prediction of aero-engine based on BiGRU and MMoE. Reliability Engineering & System Safety, 2022, 220: Article No. 108263 [145] Jiang Y C, Dai P W, Fang P C, Zhong R Y, Cao X C. Electrical-STGCN: An electrical spatio-temporal graph convolutional network for intelligent predictive maintenance. IEEE Transactions on Industrial Informatics, 2022, 18(12): 8509−8518 doi: 10.1109/TII.2022.3143148 [146] Jiang H, Li L X, Xian H R, Hu Y L, Huang H H, Wang J Z. Crowd flow prediction for social internet-of-things systems based on the mobile network big data. IEEE Transactions on Computational Social Systems, 2022, 9(1): 267−278 doi: 10.1109/TCSS.2021.3062884 [147] Chen L, Zhong X H, Zhang F, Cheng Y, Xu Y H, Qi Y, et al. FuXi: A cascade machine learning forecasting system for 15-day global weather forecast. npj Climate and Atmospheric Science, 2023, 6(1): Article No. 
190 doi: 10.1038/s41612-023-00512-1 [148] Chen K, Han T, Gong J C, Bai L, Ling F H, Luo J J, et al. FengWu: Pushing the skillful global medium-range weather forecast beyond 10 days lead. arXiv preprint arXiv: 2304.02948, 2023. [149] Lam R, Sanchez-Gonzalez A, Willson M, Wirnsberger P, Fortunato M, Alet F, et al. Learning skillful medium-range global weather forecasting. Science, 2023, 382(6677): 1416−1421 doi: 10.1126/science.adi2336 [150] Zhang Y C, Long M S, Chen K Y, Xing L X, Jin R H, Jordan M I, et al. Skilful nowcasting of extreme precipitation with NowcastNet. Nature, 2023, 619(7970): 526−532 doi: 10.1038/s41586-023-06184-4 [151] Hu Y, Chen L, Wang Z B, Li H. SwinVRNN: A data-driven ensemble forecasting model via learned distribution perturbation. Journal of Advances in Modeling Earth Systems, 2023, 15(2): Article No. e2022MS003211 doi: 10.1029/2022MS003211 [152] Pathak J, Subramanian S, Harrington P, Raja S, Chattopadhyay A, Mardani M, et al. FourCastNet: A global data-driven high-resolution weather model using adaptive Fourier neural operators. arXiv preprint arXiv: 2202.11214, 2022. [153] Weyn J A, Durran D R, Caruana R, Cresswell-Clay N. Sub-seasonal forecasting with a large ensemble of deep-learning weather prediction models. Journal of Advances in Modeling Earth Systems, 2021, 13(7): Article No. e2021MS002502 doi: 10.1029/2021MS002502 [154] Li K, Huang W, Hu G Y, Li J. Ultra-short term power load forecasting based on CEEMDAN-SE and LSTM neural network. Energy and Buildings, 2023, 279: Article No. 112666 doi: 10.1016/j.enbuild.2022.112666 [155] Li W, Gao L, Zhuang Q Z, Shu X Y. Prediction of ultra-short-term wind power based on VMD-Transformer-SSA. In: Proceedings of the International Conference on Energy and Electrical Engineering (EEE). Nanchang, China: IEEE, 2024. 1−6 [156] Shi H F, Miao K, Ren X C. Short-term load forecasting based on CNN-BiLSTM with Bayesian optimization and attention mechanism. 
Concurrency and Computation: Practice and Experience, 2023, 35(17): Article No. e6676 doi: 10.1002/cpe.6676 [157] 任建吉, 位慧慧, 邹卓霖, 侯庭庭, 原永亮, 沈记全, 等. 基于CNN-BiLSTM-Attention的超短期电力负荷预测. 电力系统保护与控制, 2022, 50(8): 108−116Ren Jian-Ji, Wei Hui-Hui, Zou Zhuo-Lin, Hou Ting-Ting, Yuan Yong-Liang, Shen Ji-Quan, et al. Ultra-short-term power load forecasting based on CNN-BiLSTM-Attention. Power System Protection and Control, 2022, 50(8): 108−116 [158] Zeng W H, Li J R, Sun C C, Cao L, Tang X P, Shu S L, et al. Ultra short-term power load forecasting based on similar day clustering and ensemble empirical mode decomposition. Energies, 2023, 16(4): Article No. 1989 doi: 10.3390/en16041989 [159] Li H Z, Liu H Y, Ji H Y, Zhang S Y, Li P F. Ultra-short-term load demand forecast model framework based on deep learning. Energies, 2020, 13(18): Article No. 4900 doi: 10.3390/en13184900 [160] Zhang Q Y, Chen J H, Xiao G, He S Y, Deng K X. TransformGraph: A novel short-term electricity net load forecasting model. Energy Reports, 2023, 9: 2705−2717 doi: 10.1016/j.egyr.2023.01.050 [161] Kong W C, Dong Z Y, Jia Y W, Hill D J, Xu Y, Zhang Y. Short-term residential load forecasting based on LSTM recurrent neural network. IEEE Transactions on Smart Grid, 2019, 10(1): 841−851 doi: 10.1109/TSG.2017.2753802 [162] Lin W X, Wu D, Boulet B. Spatial-temporal residential short-term load forecasting via graph neural networks. IEEE Transactions on Smart Grid, 2021, 12(6): 5373−5384 doi: 10.1109/TSG.2021.3093515 [163] Wang C, Wang Y, Ding Z T, Zheng T, Hu J Y, Zhang K F. A Transformer-based method of multienergy load forecasting in integrated energy system. IEEE Transactions on Smart Grid, 2022, 13(4): 2703−2714 doi: 10.1109/TSG.2022.3166600 [164] Xiao J W, Liu P, Fang H L, Liu X K, Wang Y W. Short-term residential load forecasting with baseline-refinement profiles and Bi-attention mechanism. 
IEEE Transactions on Smart Grid, 2024, 15(1): 1052−1062 doi: 10.1109/TSG.2023.3290598 [165] Xu H S, Fan G L, Kuang G F, Song Y P. Construction and application of short-term and mid-term power system load forecasting model based on hybrid deep learning. IEEE Access, 2023, 11: 37494−37507 doi: 10.1109/ACCESS.2023.3266783 [166] Zhang S Y, Chen R H, Cao J C, Tan J. A CNN and LSTM-based multi-task learning architecture for short and medium-term electricity load forecasting. Electric Power Systems Research, 2023, 222: Article No. 109507 doi: 10.1016/j.jpgr.2023.109507 [167] Yang G, Du S H, Duan Q L, Su J. A novel data-driven method for medium-term power consumption forecasting based on Transformer-lightGBM. Mobile Information Systems, 2022: Article No. 5465322 [168] Ammar N, Sulaiman M, Nor A F M. Long-term load forecasting of power systems using artificial neural network and ANFIS. ARPN Journal of Engineering and Applied Sciences, 2018, 13(3): 828−834 [169] Kazemzadeh M R, Amjadian A, Amraee T. A hybrid data mining driven algorithm for long term electric peak load and energy demand forecasting. Energy, 2020, 204: Article No. 117948 doi: 10.1016/j.energy.2020.117948 [170] Nalcaci G, Özmen A, Weber G W. Long-term load forecasting: Models based on MARS, ANN and LR methods. Central European Journal of Operations Research, 2019, 27(4): 1033−1049 doi: 10.1007/s10100-018-0531-1 [171] Taheri S, Jooshaki M, Moeini-Aghtaie M. Long-term planning of integrated local energy systems using deep learning algorithms. International Journal of Electrical Power & Energy Systems, 2021, 129: Article No. 106855 [172] Agrawal R K, Muchahary F, Tripathi M M. Long term load forecasting with hourly predictions based on long-short-term-memory networks. In: Proceedings of the IEEE Texas Power and Energy Conference (TPEC). College Station, USA: IEEE, 2018. 1−6 [173] Wang K, Zhang J L, Li X W, Zhang Y X. Long-term power load forecasting using LSTM-Informer with ensemble learning. 
Electronics, 2023, 12(10): Article No. 2175 doi: 10.3390/electronics12102175 [174] 南网储能. 南网储能公司设备状态大数据智能分析系统 XS-1000D正式发布 V2.0 版本 [Online], available: https://www.chu21.com/html/chunengy-33278.shtml, 2025-04-30China Southern Grid Energy Storage. XS-1000D intelligent analysis system for equipment status of China Southern Grid Energy Storage released V2.0 [Online], available: https://www.chu21.com/html/chunengy-33278.shtml, April 30, 2025 -
