An Interpretable and Adaptive Robust Neural Network Modeling Method Based on Dual Gaussian Mixture Distribution


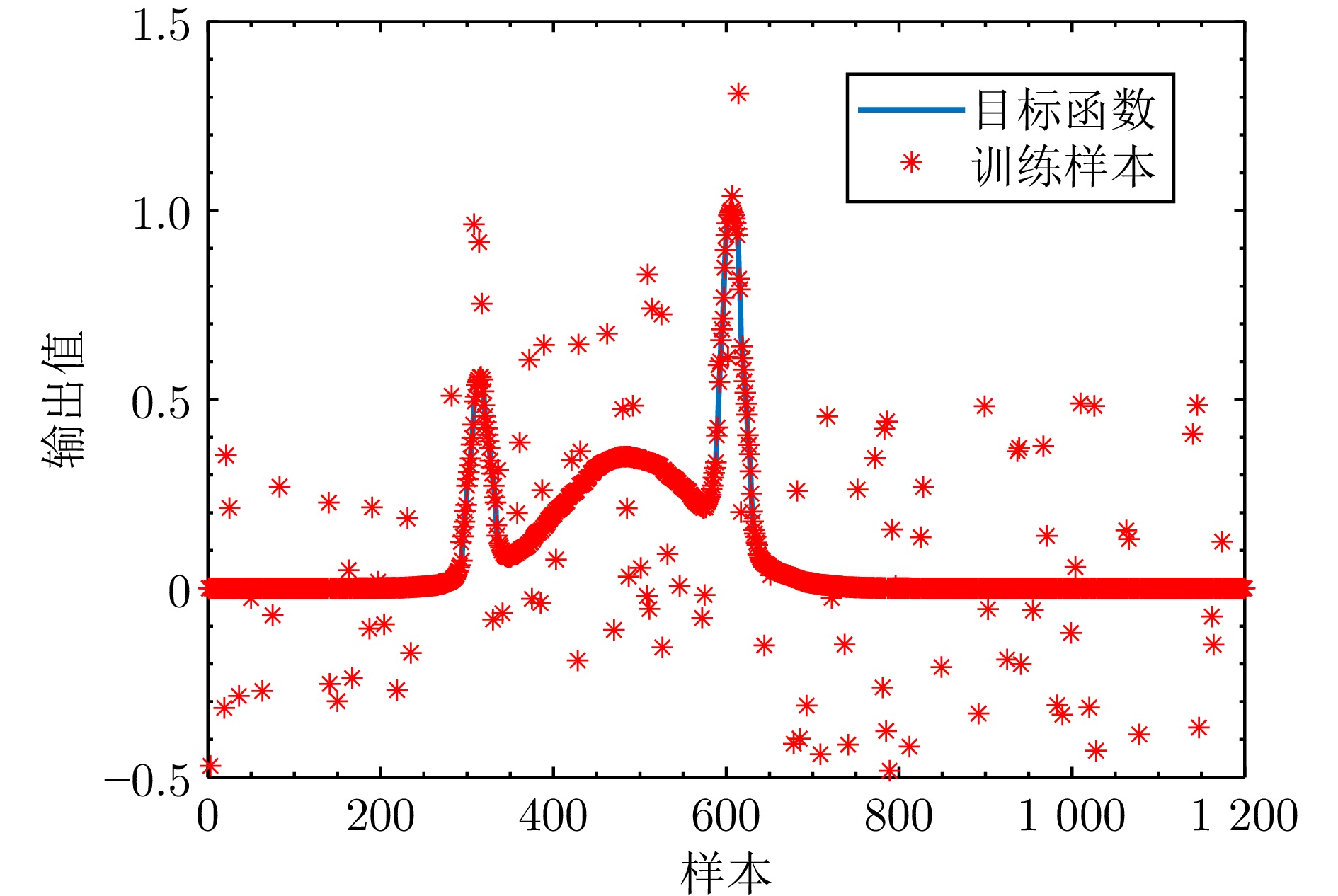

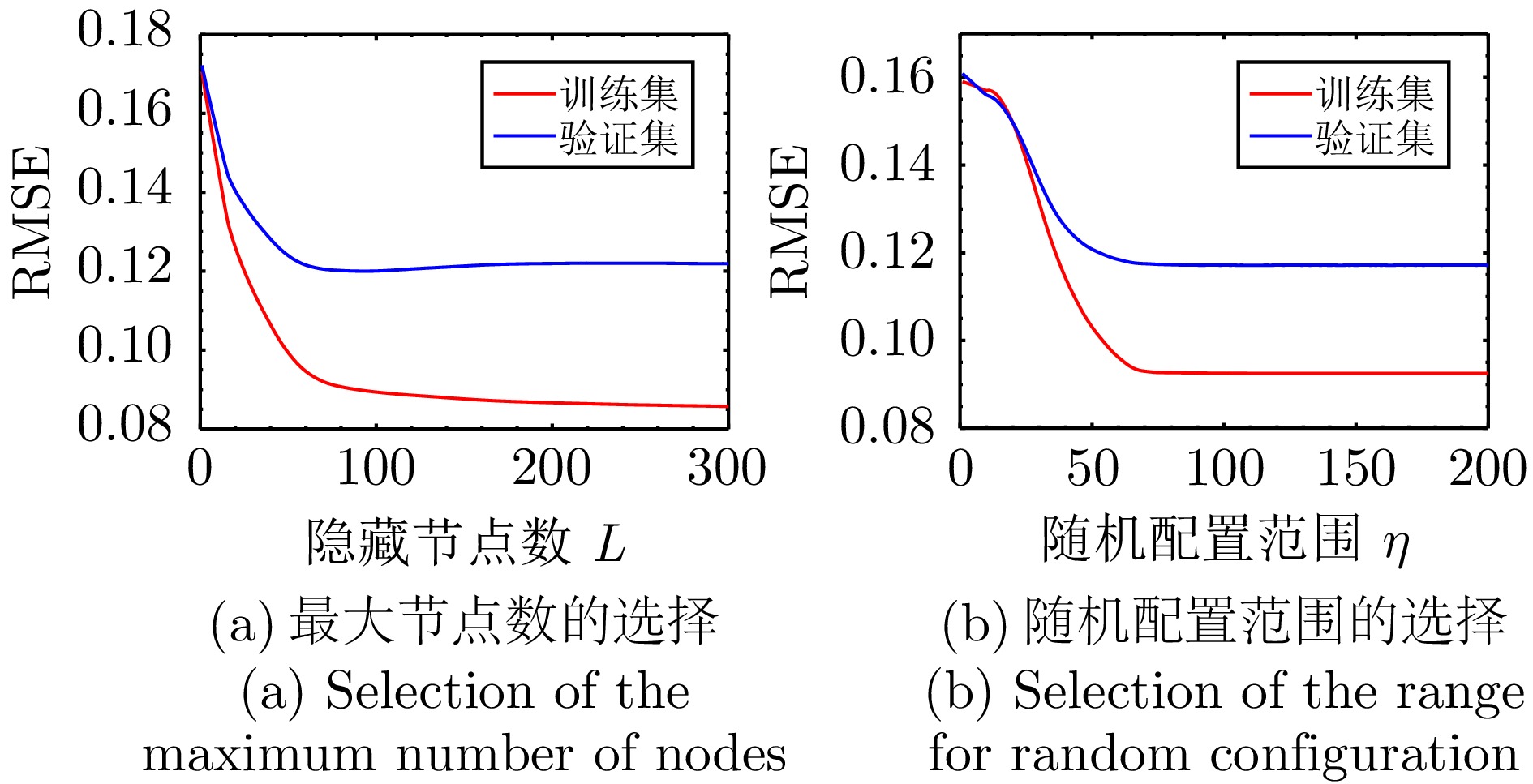

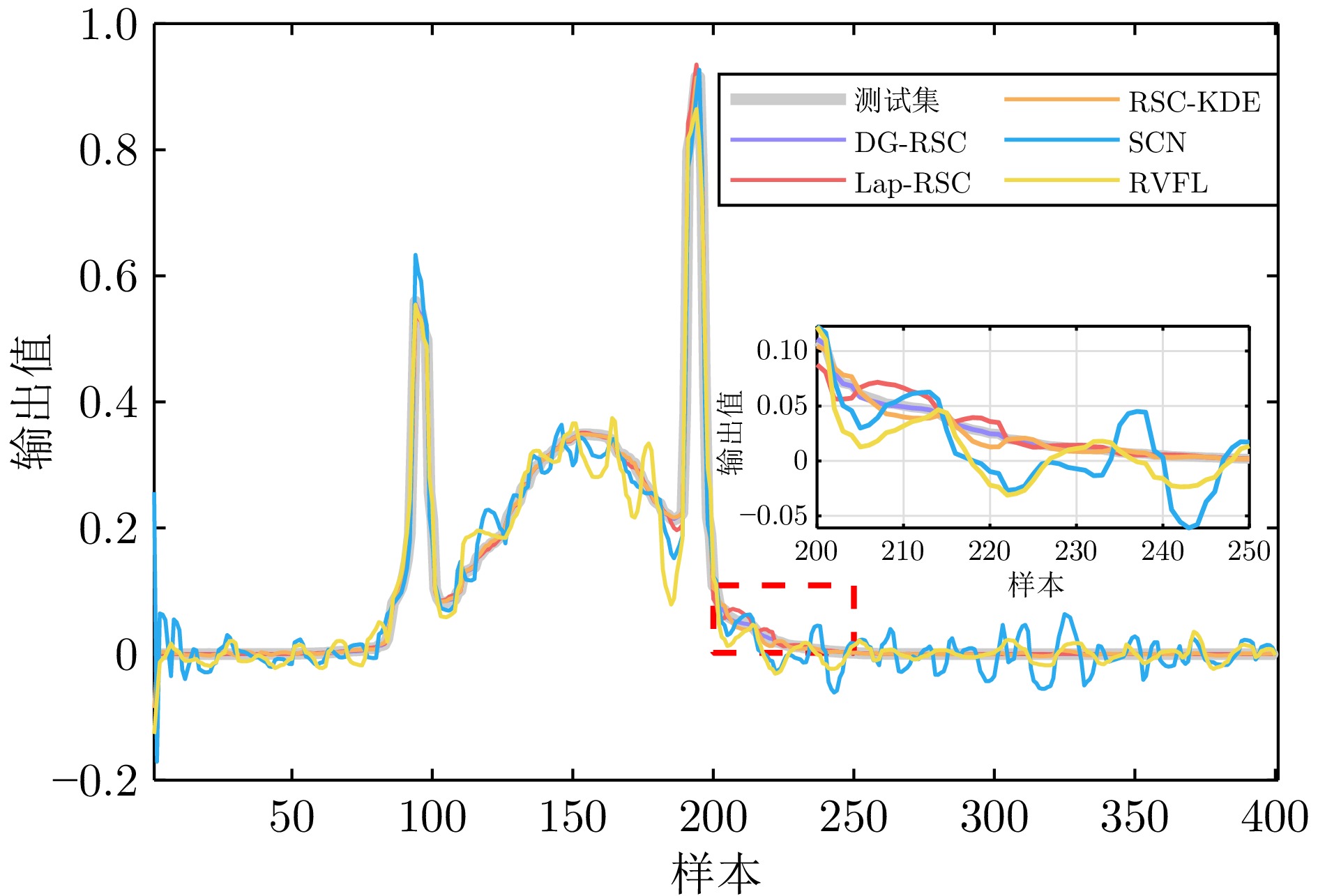

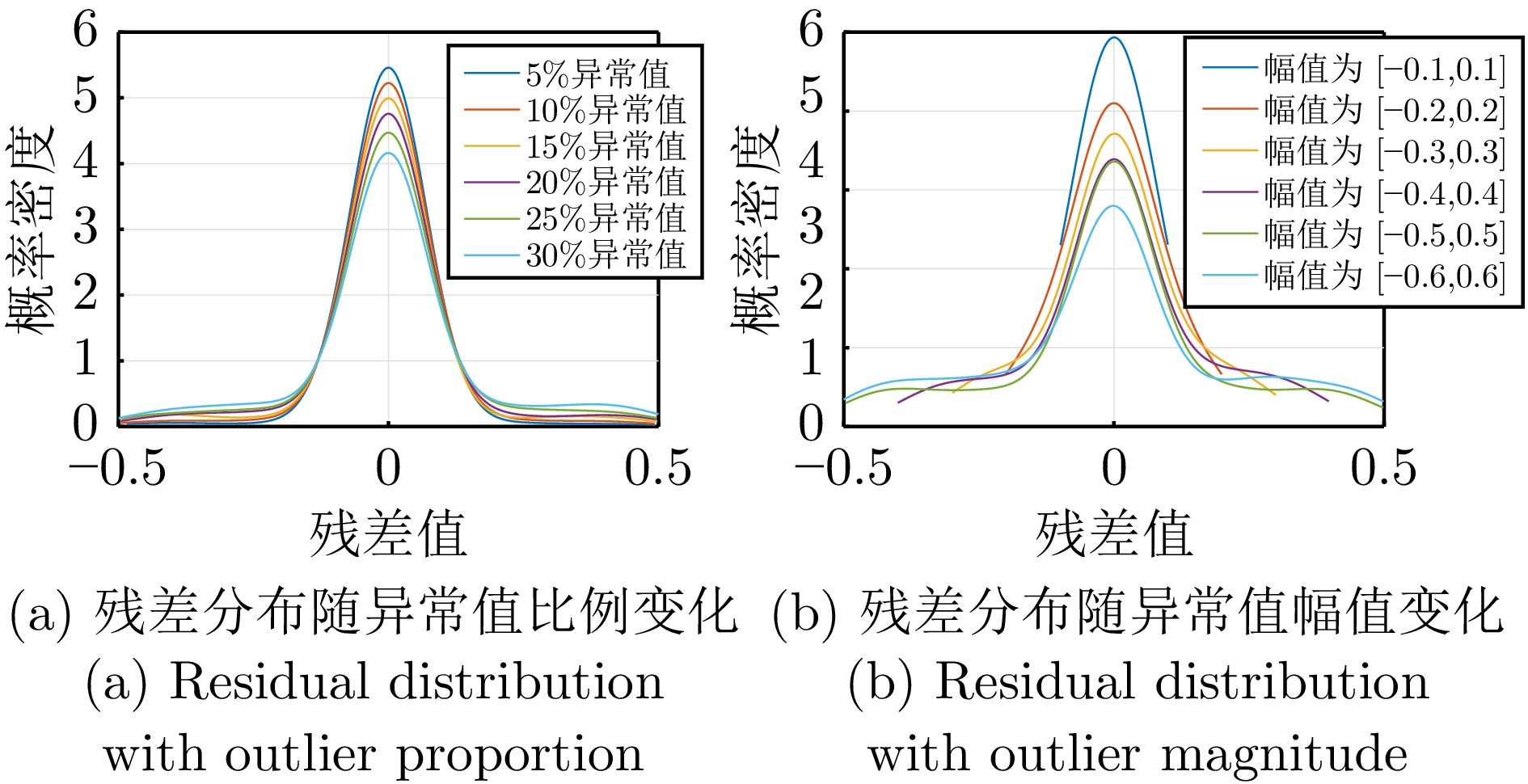

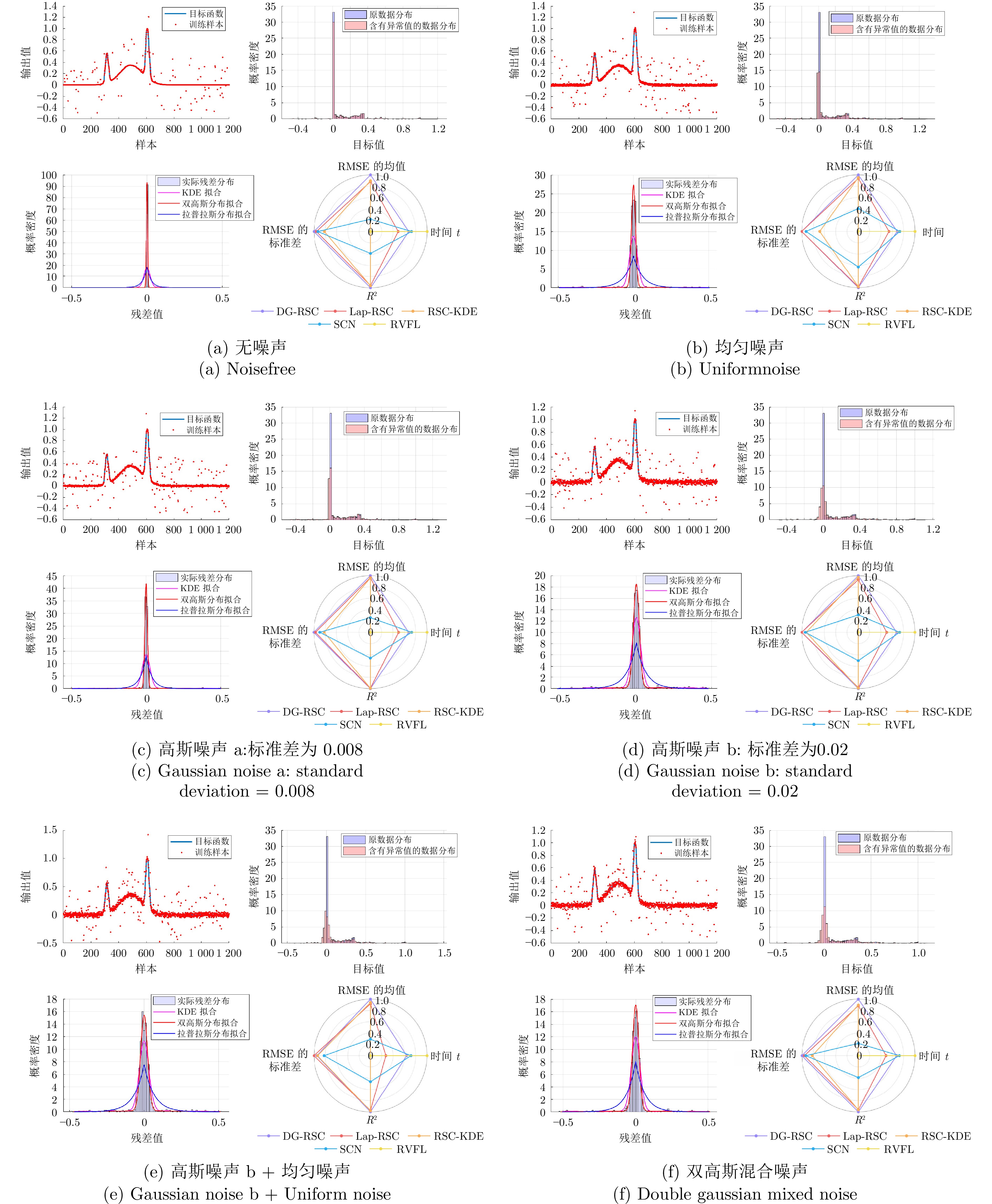

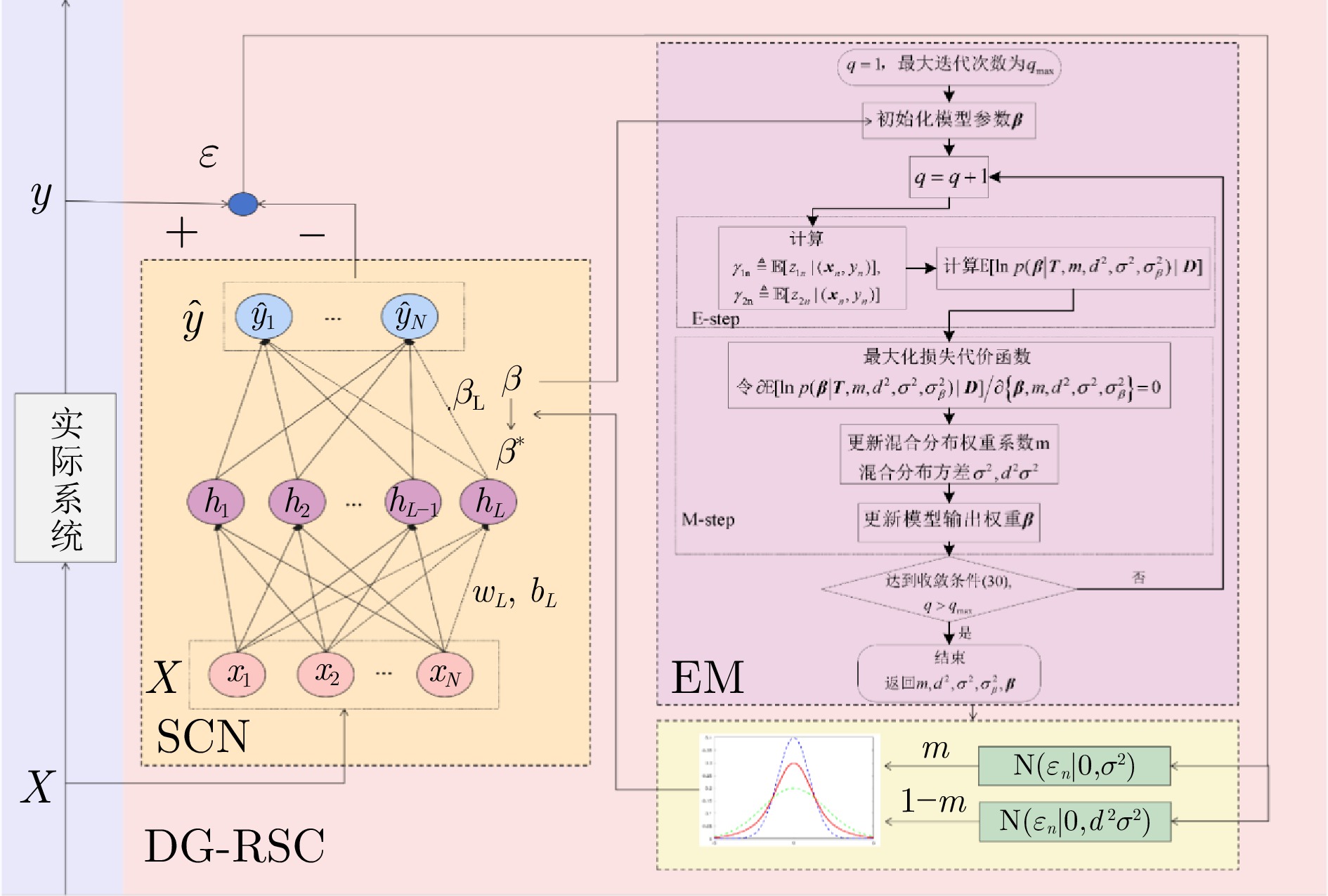

Abstract: Industrial process data are often contaminated by mixed noise. Traditional robust modeling methods based on a single heavy-tailed distribution are limited in both accuracy and interpretability when handling mixed noise. To address this, an interpretable and adaptive robust modeling method based on a dual Gaussian mixture distribution is proposed. First, a base stochastic configuration network (SCN) learning model is built with the stochastic configuration algorithm, which determines the number of hidden nodes, the input weights, and the biases. Second, to ensure robustness against mixed noise, a noise characterization model is constructed as a weighted combination of two Gaussian distributions, one with a large variance and one with a small variance. Third, the expectation-maximization (EM) algorithm is used to adaptively and iteratively learn both the SCN output weights and the noise parameters of the Gaussian mixture model, yielding a robust stochastic configuration network model based on the dual Gaussian distribution. The proposed method offers two main advantages. (1) The noise model approximates the characteristics of the actual mixed noise through adaptive parameter learning: the large-variance Gaussian component provides a coarse fit to anomalous noise, while the small-variance Gaussian component provides a fine fit to the dominant noise, which enhances interpretability. (2) During the estimation of the network output weights, a penalty weight is adaptively assigned to each output data point, which secures robustness. To validate the proposed method, multiple comparative experiments are conducted on function approximation, benchmark datasets, and an industrial case study. The results consistently indicate that the proposed method is reliable and practical.
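The EM procedure summarized in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a zero-mean two-component Gaussian noise density with mixing proportions `pi` and variances `var`, uses textbook EM updates (responsibilities in the E-step, weighted least squares for the output weights `beta` in the M-step), and replaces the SCN hidden layer with plain random sigmoid features. The function names, the test function, and all numeric settings are hypothetical.

```python
import numpy as np


def random_sigmoid_features(x, n_hidden=30, scale=5.0, seed=0):
    """Stand-in hidden layer: random sigmoid nodes.

    Assumption: this is NOT the stochastic configuration algorithm; it only
    supplies a fixed hidden-output matrix H for the EM sketch below.
    """
    rng = np.random.default_rng(seed)
    w = rng.uniform(-scale, scale, size=(x.shape[1], n_hidden))
    b = rng.uniform(-scale, scale, size=n_hidden)
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))


def gaussian_pdf(e, var):
    """Zero-mean Gaussian density evaluated at residuals e."""
    return np.exp(-0.5 * e**2 / var) / np.sqrt(2.0 * np.pi * var)


def fit_dual_gaussian_em(H, y, n_iter=50, ridge=1e-8):
    """EM for y = H @ beta + noise, noise ~ pi0*N(0, var0) + pi1*N(0, var1)."""
    n_hidden = H.shape[1]
    eye = np.eye(n_hidden)
    # Initialise beta with (slightly regularised) ordinary least squares.
    beta = np.linalg.solve(H.T @ H + ridge * eye, H.T @ y)
    e = y - H @ beta
    # Component 0 starts with the small variance, component 1 with the large one.
    var = np.array([0.25, 4.0]) * np.var(e) + 1e-12
    pi = np.array([0.8, 0.2])
    for _ in range(n_iter):
        e = y - H @ beta
        # E-step: posterior probability that each residual came from each component.
        dens = np.stack([pi[k] * gaussian_pdf(e, var[k]) for k in range(2)], axis=1)
        gamma = dens / (dens.sum(axis=1, keepdims=True) + 1e-300)
        # M-step: mixing proportions and variances of the noise model.
        pi = gamma.mean(axis=0)
        var = (gamma * e[:, None] ** 2).sum(axis=0) / (gamma.sum(axis=0) + 1e-12) + 1e-12
        # M-step: per-sample weights, then weighted least squares for beta.
        w = (gamma / var).sum(axis=1)
        HW = H * w[:, None]
        beta = np.linalg.solve(H.T @ HW + ridge * eye, HW.T @ y)
    return beta, pi, var, w


# Hypothetical demo data: a sine target with light Gaussian noise and 10% outliers.
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=(300, 1))
y = np.sin(2.0 * np.pi * x[:, 0]) + rng.normal(0.0, 0.01, size=300)
is_outlier = rng.random(300) < 0.10
y[is_outlier] += rng.uniform(-0.5, 0.5, size=is_outlier.sum())

H = random_sigmoid_features(x)
beta, pi, var, w = fit_dual_gaussian_em(H, y)
print("mixing proportions:", pi)
print("component standard deviations:", np.sqrt(var))
print("mean weight (clean, outlier):", w[~is_outlier].mean(), w[is_outlier].mean())
```

In this sketch the per-sample weight `w` plays the role of the adaptive penalty weight: a sample whose residual is better explained by the large-variance component receives a smaller weight in the least-squares update, so outliers influence `beta` less.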


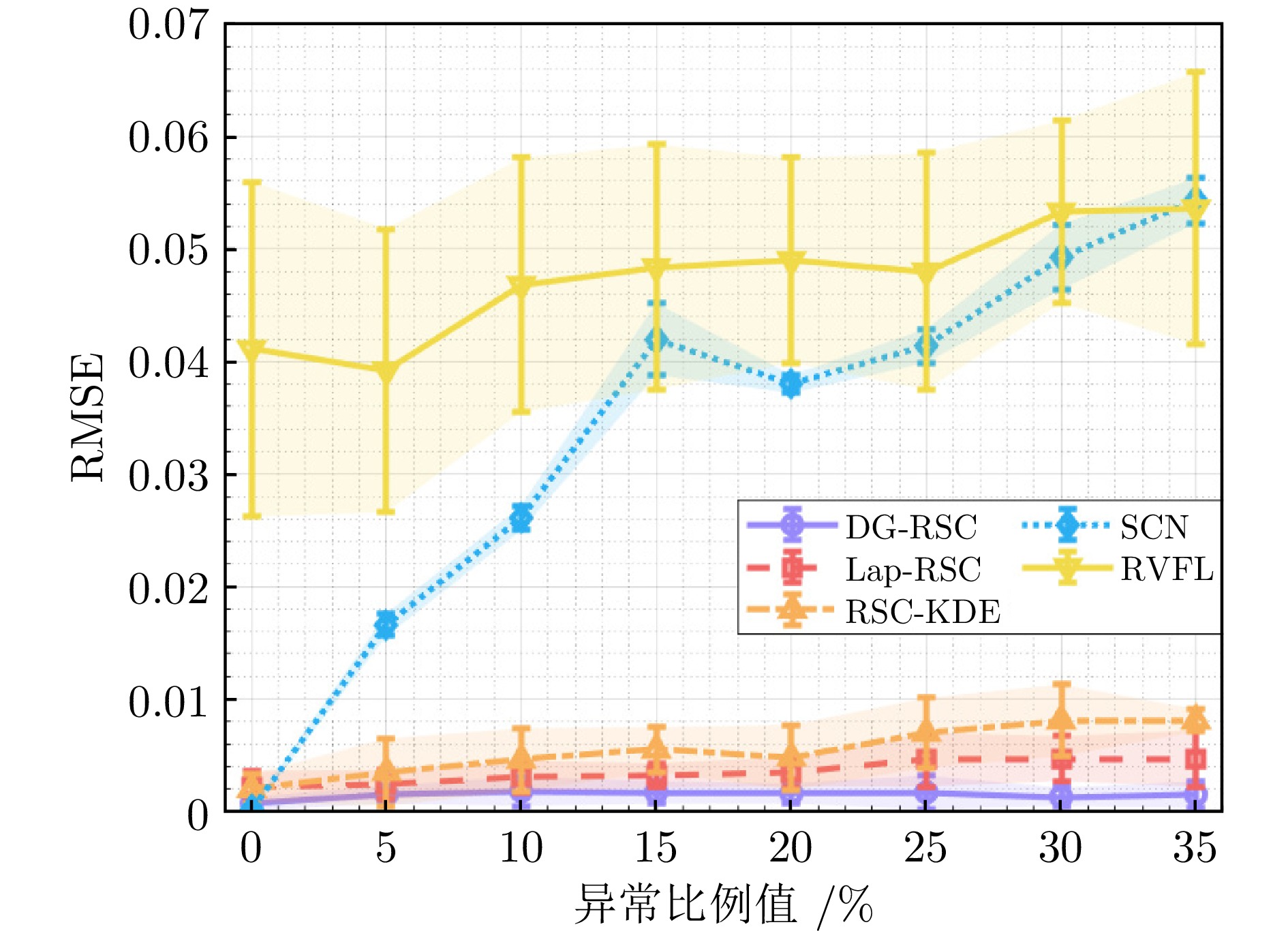

Table 1 Performance comparison of various algorithms under different proportions of outliers

| Algorithm | RMSE (0%) | $R^2$ (0%) | $t$ (s) (0%) | RMSE (5%) | $R^2$ (5%) | $t$ (s) (5%) | RMSE (10%) | $R^2$ (10%) | $t$ (s) (10%) | RMSE (15%) | $R^2$ (15%) | $t$ (s) (15%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | 0.0007 ± 0.0006 | **1.0000** | 0.3908 | **0.0015 ± 0.0008** | **0.9999** | 0.4449 | **0.0018 ± 0.0013** | **0.9998** | 0.4734 | **0.0017 ± 0.0010** | **0.9998** | 0.4435 |
| Lap-RSC | 0.0022 ± 0.0014 | 0.9997 | 0.4270 | 0.0024 ± 0.0013 | 0.9997 | 0.4804 | 0.0031 ± 0.0014 | 0.9995 | 0.4993 | 0.0032 ± 0.0011 | 0.9995 | 0.5353 |
| RSC-KDE | 0.0020 ± 0.0013 | 0.9998 | 1.4134 | 0.0035 ± 0.0030 | 0.9991 | 1.4207 | 0.0046 ± 0.0028 | 0.9988 | 1.4343 | 0.0055 ± 0.0020 | 0.9986 | 1.4232 |
| SCN | **0.0002 ± 0.0002** | **1.0000** | 0.3752 | 0.0166 ± 0.0010 | 0.9887 | 0.3813 | 0.0261 ± 0.0011 | 0.9720 | 0.3813 | 0.0420 ± 0.0032 | 0.9271 | 0.3827 |
| RVFL | 0.0411 ± 0.0149 | 0.9217 | **0.0060** | 0.0392 ± 0.0126 | 0.9305 | **0.0059** | 0.0468 ± 0.0113 | 0.9048 | **0.0051** | 0.0484 ± 0.0109 | 0.8988 | **0.0059** |

| Algorithm | RMSE (20%) | $R^2$ (20%) | $t$ (s) (20%) | RMSE (25%) | $R^2$ (25%) | $t$ (s) (25%) | RMSE (30%) | $R^2$ (30%) | $t$ (s) (30%) | RMSE (35%) | $R^2$ (35%) | $t$ (s) (35%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | **0.0016 ± 0.0009** | **0.9999** | 0.4460 | **0.0017 ± 0.0015** | **0.9998** | 0.4682 | **0.0013 ± 0.0008** | **0.9999** | 0.4750 | **0.0015 ± 0.0012** | **0.9999** | 0.5624 |
| Lap-RSC | 0.0035 ± 0.0013 | 0.9994 | 0.5460 | 0.0046 ± 0.0024 | 0.9989 | 0.6427 | 0.0047 ± 0.0020 | 0.9989 | 0.6431 | 0.0047 ± 0.0025 | 0.9988 | 1.2807 |
| RSC-KDE | 0.0048 ± 0.0029 | 0.9987 | 1.4272 | 0.0070 ± 0.0031 | 0.9976 | 1.4269 | 0.0081 ± 0.0032 | 0.9969 | 1.4212 | 0.0081 ± 0.0010 | 0.9973 | 1.5324 |
| SCN | 0.0380 ± 0.0008 | 0.9408 | 0.3811 | 0.0414 ± 0.0015 | 0.9295 | 0.3773 | 0.0493 ± 0.0029 | 0.8997 | 0.3755 | 0.0543 ± 0.0020 | 0.8786 | 0.4412 |
| RVFL | 0.0490 ± 0.0091 | 0.8979 | **0.0059** | 0.0480 ± 0.0105 | 0.9009 | **0.0060** | 0.0533 ± 0.0081 | 0.8808 | **0.0060** | 0.0536 ± 0.0121 | 0.8762 | **0.0059** |

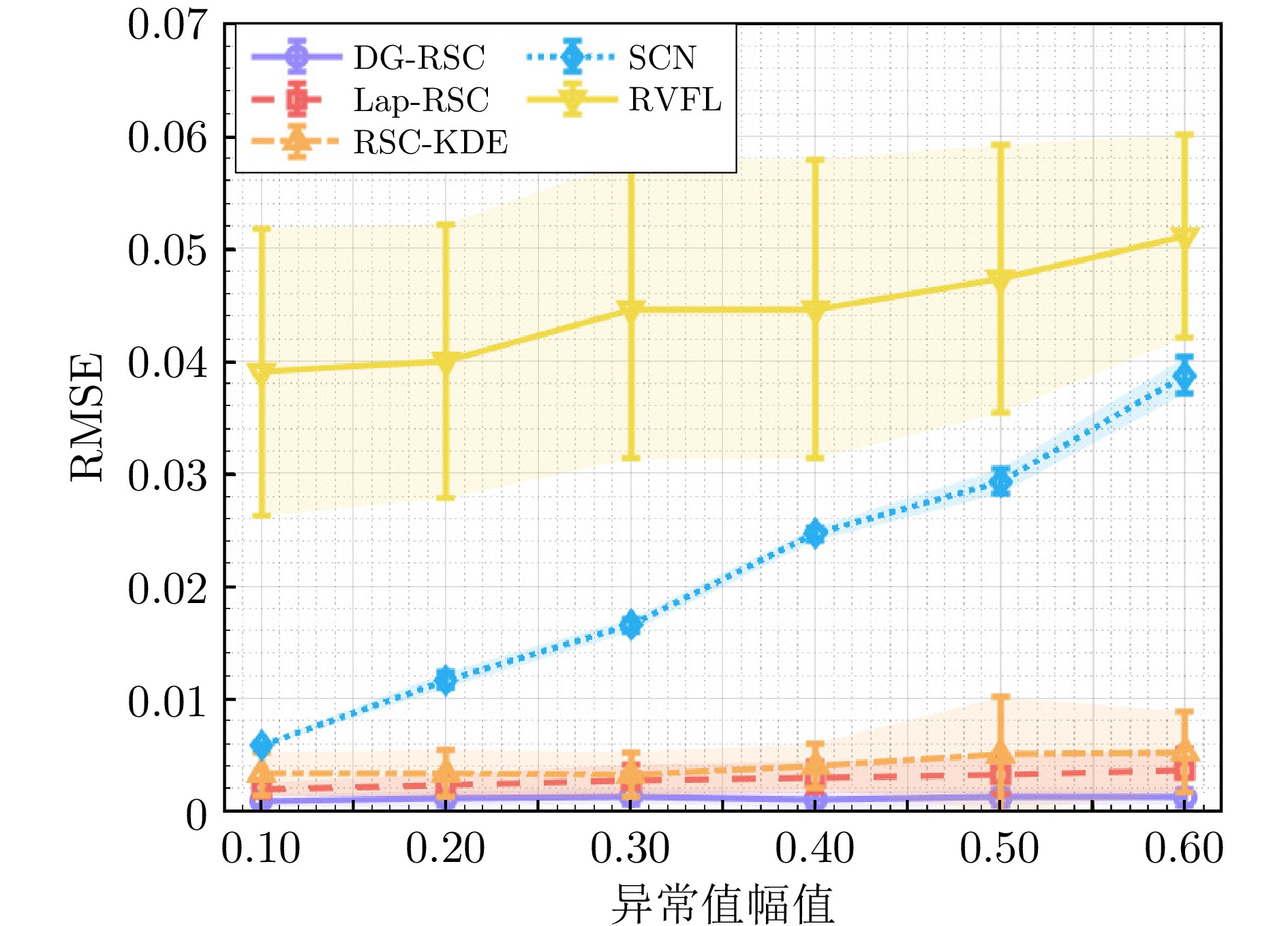

Table 2 Performance comparison of various algorithms under different amplitudes of outliers

| Algorithm | RMSE ($[-0.1,\; 0.1]$) | $R^2$ ($[-0.1,\; 0.1]$) | $t$ (s) ($[-0.1,\; 0.1]$) | RMSE ($[-0.2,\; 0.2]$) | $R^2$ ($[-0.2,\; 0.2]$) | $t$ (s) ($[-0.2,\; 0.2]$) | RMSE ($[-0.3,\; 0.3]$) | $R^2$ ($[-0.3,\; 0.3]$) | $t$ (s) ($[-0.3,\; 0.3]$) |
|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | **0.0009 ± 0.0005** | **1.0000** | 0.4775 | **0.0011 ± 0.0007** | **0.9999** | 0.4821 | **0.0013 ± 0.0007** | **0.9999** | 0.4930 |
| Lap-RSC | 0.0019 ± 0.0006 | 0.9998 | 0.4732 | 0.0023 ± 0.0010 | 0.9997 | 0.5072 | 0.0027 ± 0.0014 | 0.9996 | 0.5361 |
| RSC-KDE | 0.0033 ± 0.0019 | 0.9994 | 1.4052 | 0.0033 ± 0.0021 | 0.9994 | 1.4365 | 0.0032 ± 0.0020 | 0.9994 | 1.4642 |
| SCN | 0.0058 ± 0.0002 | 0.9986 | 0.3940 | 0.0116 ± 0.0007 | 0.9945 | 0.4006 | 0.0165 ± 0.0006 | 0.9888 | 0.4118 |
| RVFL | 0.0390 ± 0.0128 | 0.9309 | **0.0061** | 0.0400 ± 0.0122 | 0.9281 | **0.0059** | 0.0446 ± 0.0133 | 0.9111 | **0.0062** |

| Algorithm | RMSE ($[-0.4,\; 0.4]$) | $R^2$ ($[-0.4,\; 0.4]$) | $t$ (s) ($[-0.4,\; 0.4]$) | RMSE ($[-0.5,\; 0.5]$) | $R^2$ ($[-0.5,\; 0.5]$) | $t$ (s) ($[-0.5,\; 0.5]$) | RMSE ($[-0.6,\; 0.6]$) | $R^2$ ($[-0.6,\; 0.6]$) | $t$ (s) ($[-0.6,\; 0.6]$) |
|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | **0.0010 ± 0.0007** | **0.9999** | 0.4838 | **0.0012 ± 0.0007** | **0.9999** | 0.4792 | **0.0012 ± 0.0007** | **0.9999** | 0.4807 |
| Lap-RSC | 0.0030 ± 0.0013 | 0.9996 | 0.5169 | 0.0032 ± 0.0017 | 0.9995 | 0.5924 | 0.0036 ± 0.0019 | 0.9993 | 0.5941 |
| RSC-KDE | 0.0040 ± 0.0029 | 0.9990 | 1.4204 | 0.0050 ± 0.0051 | 0.9979 | 1.4233 | 0.0052 ± 0.0036 | 0.9984 | 1.4227 |
| SCN | 0.0246 ± 0.0012 | 0.9751 | 0.3822 | 0.0293 ± 0.0011 | 0.9646 | 0.3954 | 0.0387 ± 0.0016 | 0.9385 | 0.3835 |
| RVFL | 0.0446 ± 0.0127 | 0.9118 | **0.0055** | 0.0473 ± 0.0119 | 0.9023 | **0.0060** | 0.0511 ± 0.0090 | 0.8895 | **0.0060** |

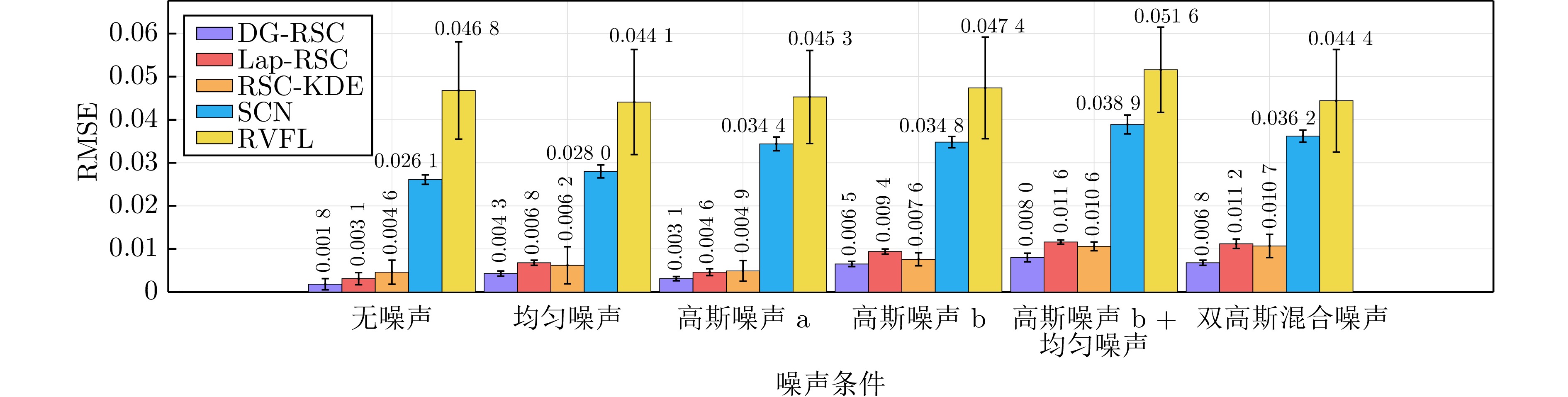

Table 3 Performance comparison of various algorithms under different background noise conditions

Noise conditions: no noise; uniform noise ${\rm{U}}(-0.02,\; 0.02)$; Gaussian noise a (standard deviation 0.008); Gaussian noise b (standard deviation 0.02); Gaussian noise b + uniform noise; dual Gaussian mixture noise (Gaussian noise a + Gaussian noise b).

| Algorithm | RMSE (no noise) | $R^2$ (no noise) | $t$ (s) (no noise) | RMSE (uniform) | $R^2$ (uniform) | $t$ (s) (uniform) | RMSE (Gaussian a) | $R^2$ (Gaussian a) | $t$ (s) (Gaussian a) |
|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | **0.0018 ± 0.0013** | **0.9998** | 0.4734 | **0.0043 ± 0.0006** | **0.9992** | 0.4663 | **0.0031 ± 0.0005** | **0.9996** | 0.4788 |
| Lap-RSC | 0.0031 ± 0.0014 | 0.9995 | 0.4993 | 0.0068 ± 0.0006 | 0.9981 | 0.6788 | 0.0046 ± 0.0008 | 0.9991 | 0.7359 |
| RSC-KDE | 0.0046 ± 0.0028 | 0.9988 | 1.4343 | 0.0062 ± 0.0043 | 0.9977 | 1.4729 | 0.0049 ± 0.0024 | 0.9988 | 1.4621 |
| SCN | 0.0261 ± 0.0011 | 0.9720 | 0.3813 | 0.0280 ± 0.0015 | 0.9677 | 0.4001 | 0.0344 ± 0.0016 | 0.9512 | 0.3993 |
| RVFL | 0.0468 ± 0.0113 | 0.9048 | **0.0051** | 0.0441 ± 0.0122 | 0.9141 | **0.0056** | 0.0453 ± 0.0108 | 0.9108 | **0.0054** |

| Algorithm | RMSE (Gaussian b) | $R^2$ (Gaussian b) | $t$ (s) (Gaussian b) | RMSE (Gaussian b + uniform) | $R^2$ (Gaussian b + uniform) | $t$ (s) (Gaussian b + uniform) | RMSE (Gaussian a + b) | $R^2$ (Gaussian a + b) | $t$ (s) (Gaussian a + b) |
|---|---|---|---|---|---|---|---|---|---|
| DG-RSC | **0.0065 ± 0.0006** | **0.9983** | 0.4755 | **0.0080 ± 0.0010** | **0.9973** | 0.5691 | **0.0068 ± 0.0006** | **0.9981** | 0.4801 |
| Lap-RSC | 0.0094 ± 0.0006 | 0.9964 | 0.7329 | 0.0116 ± 0.0005 | 0.9944 | 1.1424 | 0.0112 ± 0.0011 | 0.9948 | 0.7550 |
| RSC-KDE | 0.0076 ± 0.0015 | 0.9975 | 1.4677 | 0.0106 ± 0.0011 | 0.9953 | 1.5677 | 0.0107 ± 0.0027 | 0.9950 | 1.4722 |
| SCN | 0.0348 ± 0.0013 | 0.9502 | 0.3998 | 0.0389 ± 0.0022 | 0.9377 | 0.4492 | 0.0362 ± 0.0014 | 0.9461 | 0.4092 |
| RVFL | 0.0474 ± 0.0118 | 0.9020 | **0.0055** | 0.0516 ± 0.0099 | 0.8866 | **0.0059** | 0.0444 ± 0.0119 | 0.9132 | **0.0055** |

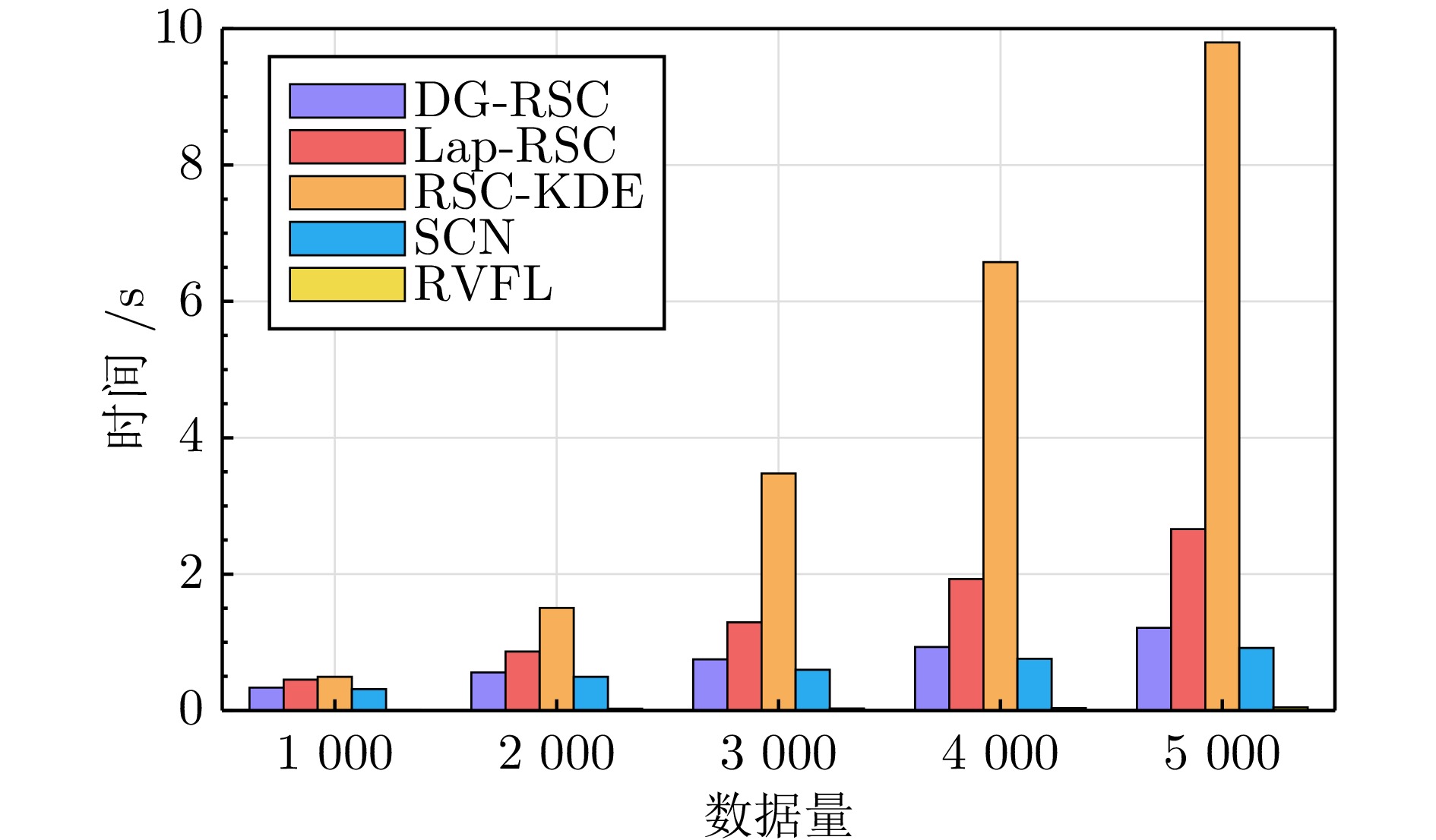

Table 4 Comparison of running time of five algorithms under different data volumes (s)

| Algorithm | 1000 | 2000 | 3000 | 4000 | 5000 | 10000 |
|---|---|---|---|---|---|---|
| DG-RSC | 0.3342 | 0.5570 | 0.7510 | 0.9320 | 1.2130 | 2.3480 |
| Lap-RSC | 0.4510 | 0.8640 | 1.2940 | 1.9290 | 2.6610 | 7.3280 |
| RSC-KDE | 0.4930 | 1.5040 | 3.4770 | 6.5760 | 9.7990 | 39.4130 |
| SCN | 0.3140 | 0.4940 | 0.5960 | 0.7590 | 0.9160 | 1.3980 |
| RVFL | 0.0120 | 0.0270 | 0.0300 | 0.0350 | 0.0450 | 0.0690 |

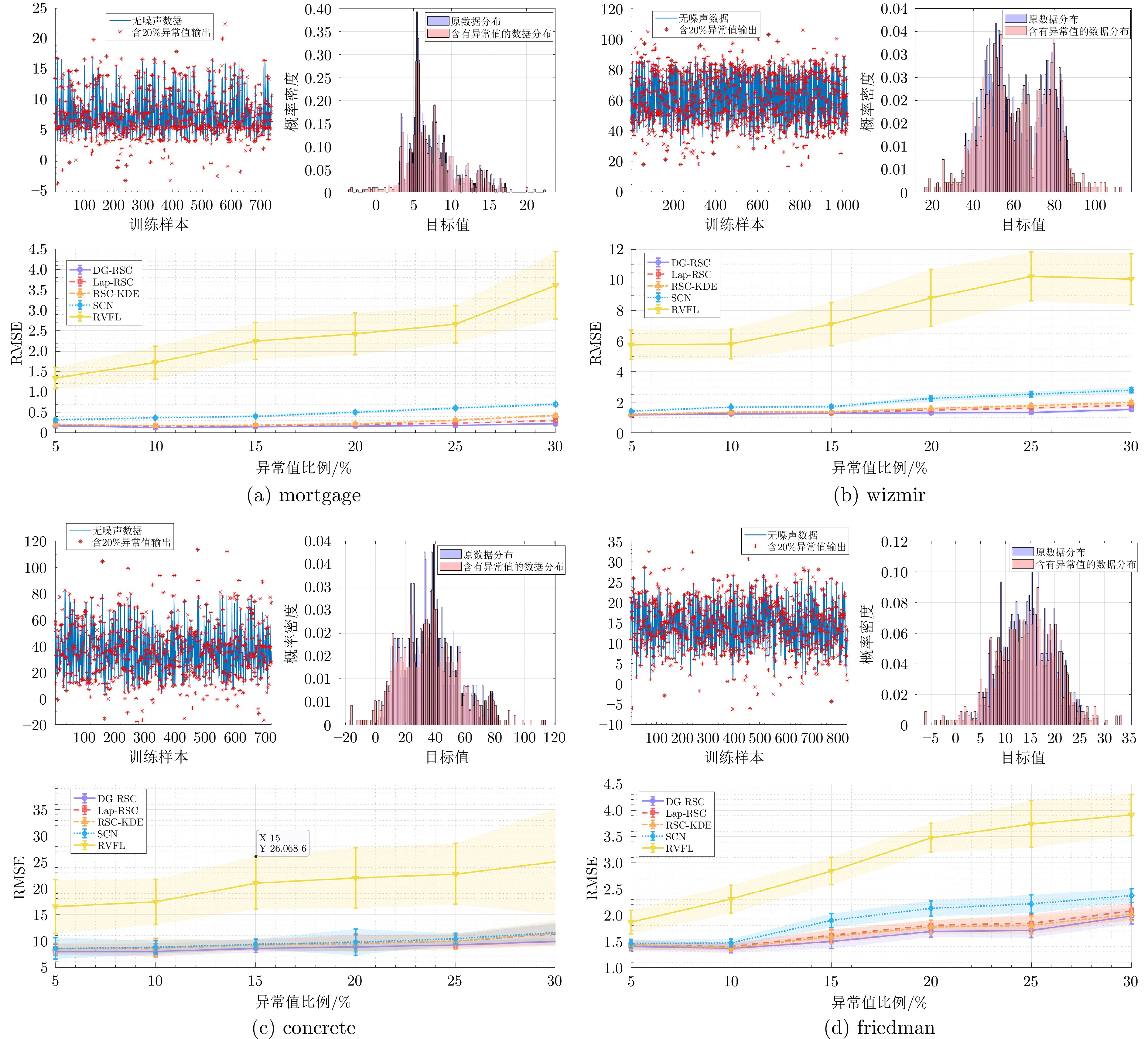

Table 5 Performance comparison of various algorithms on different datasets under different proportions of outliers

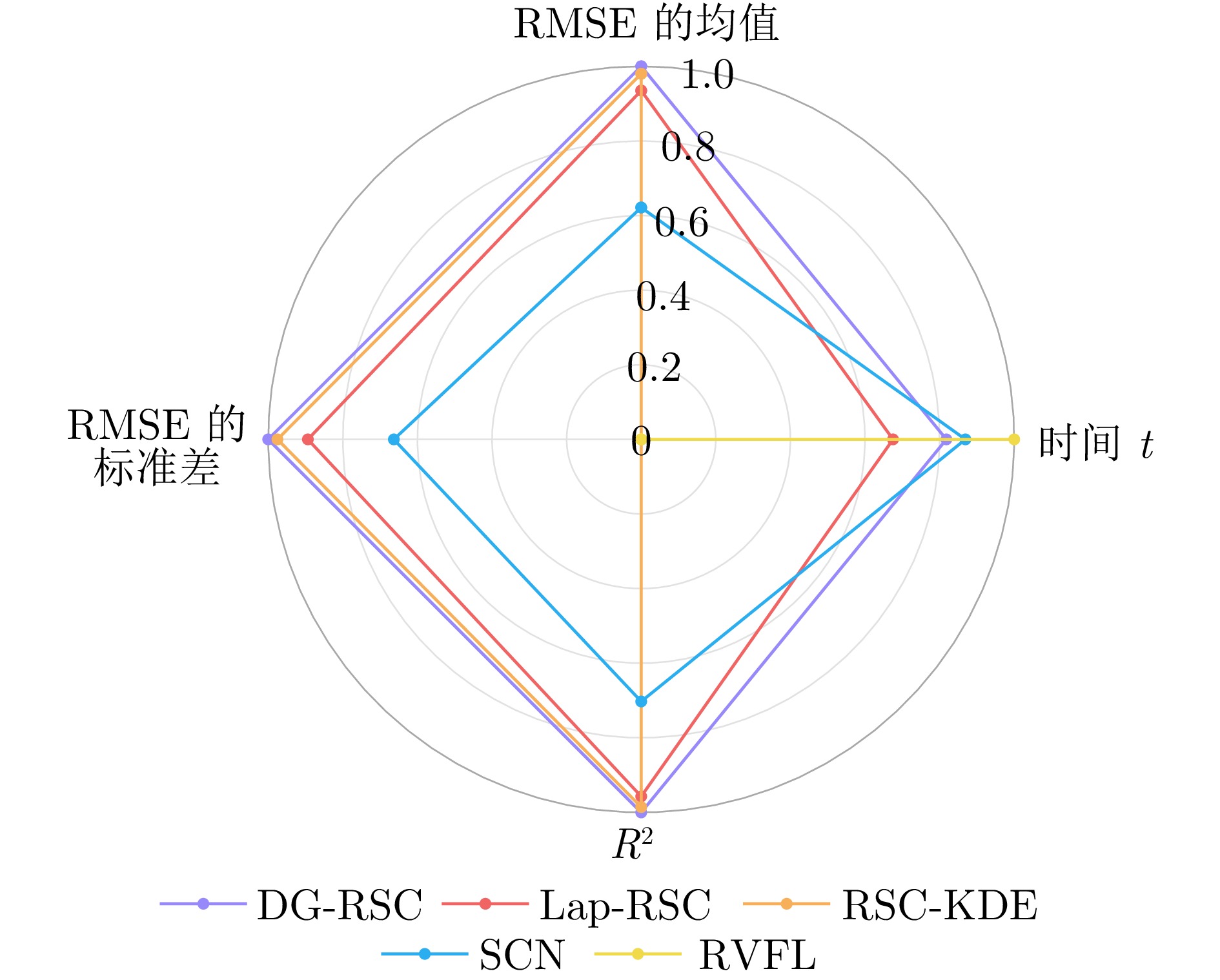

| Dataset | Algorithm | 5% | 10% | 15% | 20% | 25% | 30% |
|---|---|---|---|---|---|---|---|
| mortgage | DG-RSC | **0.1693 ± 0.0146** | **0.1288 ± 0.0077** | **0.1475 ± 0.0107** | **0.1610 ± 0.0137** | **0.1780 ± 0.0143** | **0.2203 ± 0.0160** |
| mortgage | Lap-RSC | 0.2096 ± 0.0180 | 0.1651 ± 0.0109 | 0.1748 ± 0.0116 | 0.2067 ± 0.0218 | 0.2359 ± 0.0183 | 0.3026 ± 0.0324 |
| mortgage | RSC-KDE | 0.2028 ± 0.0167 | 0.1734 ± 0.0122 | 0.1937 ± 0.0139 | 0.2188 ± 0.0270 | 0.3097 ± 0.0308 | 0.4224 ± 0.0407 |
| mortgage | SCN | 0.3160 ± 0.0212 | 0.3665 ± 0.0209 | 0.4043 ± 0.0285 | 0.5044 ± 0.0388 | 0.6053 ± 0.0323 | 0.6992 ± 0.0390 |
| mortgage | RVFL | 1.3390 ± 0.2602 | 1.7169 ± 0.4050 | 2.2481 ± 0.4556 | 2.4249 ± 0.5135 | 2.6632 ± 0.4525 | 3.6114 ± 0.8223 |
| wizmir | DG-RSC | **1.1599 ± 0.0278** | **1.2191 ± 0.0480** | **1.2768 ± 0.0744** | **1.2929 ± 0.1112** | **1.3273 ± 0.1198** | **1.5219 ± 0.1154** |
| wizmir | Lap-RSC | 1.1854 ± 0.0301 | 1.3120 ± 0.0569 | 1.3264 ± 0.0554 | 1.4668 ± 0.0938 | 1.6086 ± 0.0966 | 1.7960 ± 0.1103 |
| wizmir | RSC-KDE | 1.1956 ± 0.0412 | 1.3341 ± 0.0414 | 1.3622 ± 0.0626 | 1.5806 ± 0.1048 | 1.7738 ± 0.1093 | 1.9796 ± 0.1162 |
| wizmir | SCN | 1.4138 ± 0.0669 | 1.6717 ± 0.0757 | 1.7020 ± 0.0994 | 2.2305 ± 0.1795 | 2.5042 ± 0.1816 | 2.7765 ± 0.1873 |
| wizmir | RVFL | 5.7541 ± 0.9706 | 5.8140 ± 0.9805 | 7.1110 ± 1.4120 | 8.8179 ± 1.8619 | 10.2235 ± 1.6002 | 10.0458 ± 1.6678 |
| concrete | DG-RSC | **7.9185 ± 0.7009** | **7.9660 ± 0.8634** | **8.5684 ± 0.8181** | **8.8093 ± 0.7868** | **9.1673 ± 0.7154** | **9.8396 ± 0.7480** |
| concrete | Lap-RSC | 8.4440 ± 0.9009 | 8.5939 ± 0.8985 | 9.1946 ± 1.0774 | 9.5724 ± 1.6293 | 9.9237 ± 1.1928 | 11.2736 ± 1.9617 |
| concrete | RSC-KDE | 8.5060 ± 1.0370 | 8.6741 ± 1.7534 | 9.2001 ± 0.9336 | 9.4323 ± 1.5510 | 9.8884 ± 1.5258 | 11.4299 ± 2.4740 |
| concrete | SCN | 8.5538 ± 2.0450 | 8.7493 ± 1.2632 | 9.3206 ± 0.9936 | 9.7409 ± 2.5026 | 10.3615 ± 1.0438 | 11.5356 ± 1.8829 |
| concrete | RVFL | 16.5065 ± 5.1480 | 17.4224 ± 4.3511 | 21.0452 ± 5.2337 | 21.9883 ± 5.7898 | 22.7519 ± 5.8314 | 25.0754 ± 10.0859 |
| friedman | DG-RSC | **1.4065 ± 0.0868** | **1.3628 ± 0.0820** | **1.4965 ± 0.1323** | **1.6856 ± 0.1087** | **1.7088 ± 0.1383** | **1.9808 ± 0.1496** |
| friedman | Lap-RSC | 1.4309 ± 0.0896 | 1.3970 ± 0.0728 | 1.6113 ± 0.1123 | 1.8054 ± 0.0942 | 1.8456 ± 0.1609 | 2.0729 ± 0.1621 |
| friedman | RSC-KDE | 1.4353 ± 0.0937 | 1.3801 ± 0.0735 | 1.5864 ± 0.1275 | 1.7740 ± 0.1308 | 1.8056 ± 0.1713 | 2.0176 ± 0.1270 |
| friedman | SCN | 1.4535 ± 0.0804 | 1.4663 ± 0.0743 | 1.8940 ± 0.1321 | 2.1229 ± 0.1491 | 2.2084 ± 0.1748 | 2.3715 ± 0.1332 |
| friedman | RVFL | 1.8656 ± 0.2222 | 2.3011 ± 0.2700 | 2.8438 ± 0.2599 | 3.4747 ± 0.2742 | 3.7376 ± 0.4453 | 3.9099 ± 0.3894 |

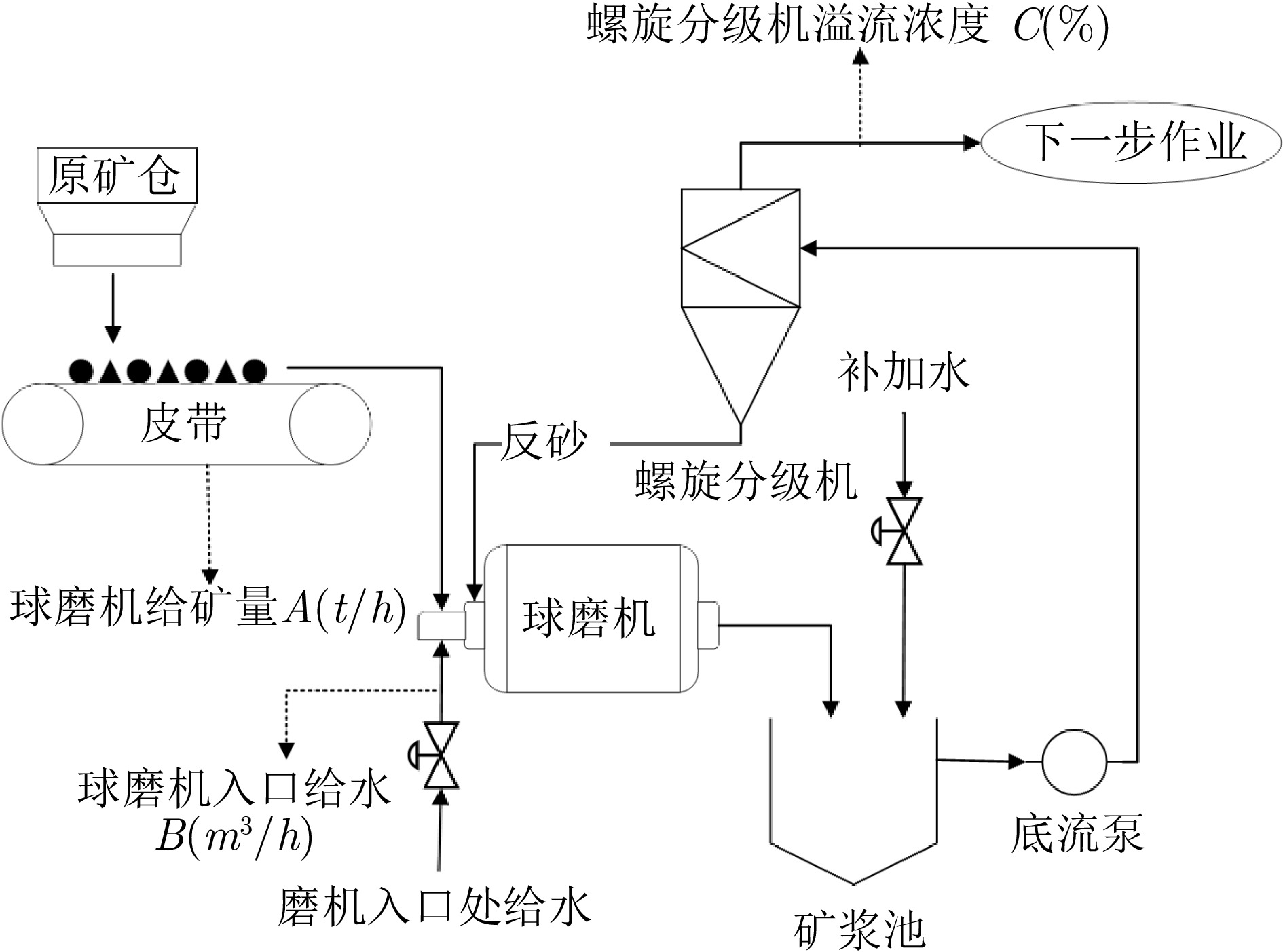

Table 6 Performance of each algorithm in grinding particle size prediction

| Algorithm | RMSE | $R^2$ | $t$ (s) |
|---|---|---|---|
| DG-RSC | **0.2457 ± 0.0007** | **0.9912** | 0.0896 |
| Lap-RSC | 0.2623 ± 0.0037 | 0.9900 | 0.1546 |
| RSC-KDE | 0.2508 ± 0.0014 | 0.9908 | 0.4614 |
| SCN | 0.3423 ± 0.0102 | 0.9829 | 0.0664 |
| RVFL | 0.5009 ± 0.0286 | 0.9633 | **0.0069** |
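The tables report RMSE, $R^2$, and entries of the form "mean ± spread". The sketch below shows one common way such figures are computed. It assumes the standard definitions of RMSE and the coefficient of determination, and it assumes that the "±" term is a sample standard deviation over repeated trials, which the page does not state. The function names and the per-trial values are hypothetical and are not taken from the tables.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def mean_std(values):
    """Mean and sample standard deviation (ddof=1) over repeated trials.

    Assumption: the '±' term is a standard deviation; the source does not say.
    """
    values = np.asarray(values, dtype=float)
    return float(values.mean()), float(values.std(ddof=1))


# Hypothetical per-trial RMSE values, used only to show the "mean ± std" format.
trial_rmse = [0.0010, 0.0020, 0.0030]
m, s = mean_std(trial_rmse)
print(f"{m:.4f} ± {s:.4f}")
```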

