|

[1]

|

Harjunkoski I, Maravelias C T, Bongers P, Castro P M, Engell S, Grossmann I E, et al. Scope for industrial applications of production scheduling models and solution methods. Computers & Chemical Engineering, 2014, 62: 161−193

|

|

[2]

|

Castro P M, Grossmann I E, Zhang Q. Expanding scope and computational challenges in process scheduling. Computers & Chemical Engineering, 2018, 114: 14−42

|

|

[3]

|

Hou Y, Wu N Q, Zhou M C, Li Z W. Pareto-optimization for scheduling of crude oil operations in refinery via genetic algorithm. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 2017, 47(3): 517−530 doi: 10.1109/TSMC.2015.2507161

|

|

[4]

|

Gao K Z, Huang Y, Sadollah A, Wang L. A review of energy-efficient scheduling in intelligent production systems. Complex & Intelligent Systems, 2020, 6(2): 237−249

|

|

[5]

|

Fathollahi-Fard A M, Woodward L, Akhrif O. A distributed permutation flow-shop considering sustainability criteria and real-time scheduling. Journal of Industrial Information Integration, 2024, 39: Article No. 100598 doi: 10.1016/j.jii.2024.100598

|

|

[6]

|

Sutton R S, Barto A G. Reinforcement Learning: An Introduction. Cambridge: MIT Press, 1998.

|

|

[7]

|

Esteso A, Peidro D, Mula J, Díaz-Madroñero M. Reinforcement learning applied to production planning and control. International Journal of Production Research, 2023, 61(16): 5772−5789 doi: 10.1080/00207543.2022.2104180

|

|

[8]

|

Dogru O, Xie J Y, Prakash O, Chiplunkar R, Soesanto J, Chen H T, et al. Reinforcement learning in process industries: Review and perspective. IEEE/CAA Journal of Automatica Sinica, 2024, 11(2): 283−300 doi: 10.1109/JAS.2024.124227

|

|

[9]

|

Nian R, Liu J F, Huang B. A review on reinforcement learning: Introduction and applications in industrial process control. Computers & Chemical Engineering, 2020, 139: Article No. 106886

|

|

[10]

|

Bellman R. A Markovian decision process. Journal of Mathematics and Mechanics, 1957, 6(5): 679−684

|

|

[11]

|

Sutton R S. Learning to predict by the methods of temporal differences. Machine Learning, 1988, 3(1): 9−44 doi: 10.1023/A:1022633531479

|

|

[12]

|

Watkins C J C H, Dayan P. Q-learning. Machine Learning, 1992, 8(3−4): 279−292

|

|

[13]

|

Mnih V, Kavukcuoglu K, Silver D, Graves A, Antonoglou I, Wierstra D, et al. Playing Atari with deep reinforcement learning. arXiv preprint arXiv: 1312.5602, 2013.

|

|

[14]

|

van Hasselt H, Guez A, Silver D. Deep reinforcement learning with double Q-learning. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, USA: AAAI Press, 2016.

|

|

[15]

|

Wang Z Y, Schaul T, Hessel M, van Hasselt H, Lanctot M, de Freitas N. Dueling network architectures for deep reinforcement learning. In: Proceedings of the 33rd International Conference on Machine Learning. New York, USA: JMLR.org, 2016. 1995−2003

|

|

[16]

|

Williams R J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine Learning, 1992, 8(3−4): 229−256 doi: 10.1023/A:1022672621406

|

|

[17]

|

Konda V R, Tsitsiklis J N. Actor-critic algorithms. In: Proceedings of the 13th International Conference on Neural Information Processing Systems. Denver, USA: MIT Press, 1999. 1008−1014

|

|

[18]

|

Mnih V, Badia A P, Mirza M, Graves A, Harley T, Lillicrap T P, et al. Asynchronous methods for deep reinforcement learning. In: Proceedings of the 33rd International Conference on Machine Learning. New York, USA: JMLR.org, 2016. 1928−1937

|

|

[19]

|

Schulman J, Levine S, Moritz P, Jordan M, Abbeel P. Trust region policy optimization. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: JMLR.org, 2015. 1889−1897

|

|

[20]

|

Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. Proximal policy optimization algorithms. arXiv preprint arXiv: 1707.06347, 2017.

|

|

[21]

|

Lillicrap T P, Hunt J J, Pritzel A, Heess N, Erez T, Tassa Y, et al. Continuous control with deep reinforcement learning. arXiv preprint arXiv: 1509.02971, 2015.

|

|

[22]

|

Fujimoto S, van Hoof H, Meger D. Addressing function approximation error in actor-critic methods. arXiv preprint arXiv: 1802.09477, 2018.

|

|

[23]

|

Haarnoja T, Zhou A, Abbeel P, Levine S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. arXiv preprint arXiv: 1801.01290, 2018.

|

|

[24]

|

Gao X Y, Peng D, Kui G F, Pan J, Zuo X, Li F F. Reinforcement learning based optimization algorithm for maintenance tasks scheduling in coalbed methane gas field. Computers & Chemical Engineering, 2023, 170: Article No. 108131

|

|

[25]

|

Nikita S, Tiwari A, Sonawat D, Kodamana H, Rathore A S. Reinforcement learning based optimization of process chromatography for continuous processing of biopharmaceuticals. Chemical Engineering Science, 2021, 230: Article No. 116171 doi: 10.1016/j.ces.2020.116171

|

|

[26]

|

Cheng Y, Huang Y X, Pang B, Zhang W D. ThermalNet: A deep reinforcement learning-based combustion optimization system for coal-fired boiler. Engineering Applications of Artificial Intelligence, 2018, 74: 303−311 doi: 10.1016/j.engappai.2018.07.003

|

|

[27]

|

Shao Z, Si F Q, Kudenko D, Wang P, Tong X Z. Predictive scheduling of wet flue gas desulfurization system based on reinforcement learning. Computers & Chemical Engineering, 2020, 141: Article No. 107000

|

|

[28]

|

Hubbs C D, Li C, Sahinidis N V, Grossmann I E, Wassick J M. A deep reinforcement learning approach for chemical production scheduling. Computers & Chemical Engineering, 2020, 141: Article No. 106982

|

|

[29]

|

Powell B K M, Machalek D, Quah T. Real-time optimization using reinforcement learning. Computers & Chemical Engineering, 2020, 143: Article No. 107077

|

|

[30]

|

Liu C, Ding J L, Sun J Y. Reinforcement learning based decision making of operational indices in process industry under changing environment. IEEE Transactions on Industrial Informatics, 2021, 17(4): 2727−2736 doi: 10.1109/TII.2020.3005207

|

|

[31]

|

Adams D, Oh D H, Kim D W, Lee C H, Oh M. Deep reinforcement learning optimization framework for a power generation plant considering performance and environmental issues. Journal of Cleaner Production, 2021, 291: Article No. 125915 doi: 10.1016/j.jclepro.2021.125915

|

|

[32]

|

Pravin P S, Luo Z Y, Li L Y, Wang X N. Learning-based scheduling of industrial hybrid renewable energy systems. Computers & Chemical Engineering, 2022, 159: Article No. 107665

|

|

[33]

|

Song G, Ifaei P, Ha J, Kang D, Won W, Liu J J, et al. The AI circular hydrogen economist: Hydrogen supply chain design via hierarchical deep multi-agent reinforcement learning. Chemical Engineering Journal, 2024, 497: Article No. 154464 doi: 10.1016/j.cej.2024.154464

|

|

[34]

|

Wang H, He H W, Bai Y F, Yue H W. Parameterized deep Q-network based energy management with balanced energy economy and battery life for hybrid electric vehicles. Applied Energy, 2022, 320: Article No. 119270 doi: 10.1016/j.apenergy.2022.119270

|

|

[35]

|

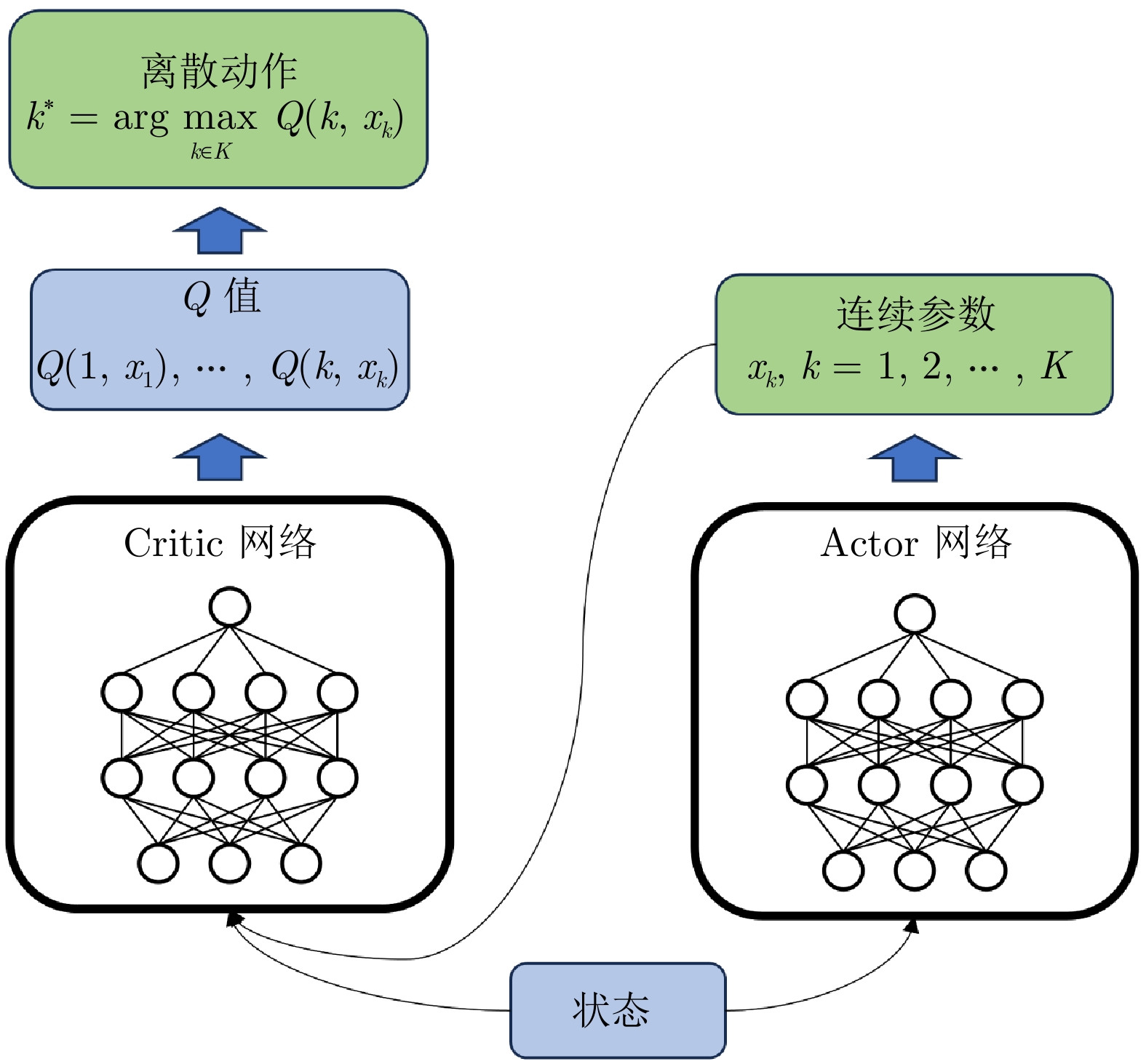

Campos G, El-Farra N H, Palazoglu A. Soft actor-critic deep reinforcement learning with hybrid mixed-integer actions for demand responsive scheduling of energy systems. Industrial & Engineering Chemistry Research, 2022, 61(24): 8443−8461

|

|

[36]

|

Li L J, Yang X, Yang S P, Xu X W. Optimization of oxygen system scheduling in hybrid action space based on deep reinforcement learning. Computers & Chemical Engineering, 2023, 171: Article No. 108168

|

|

[37]

|

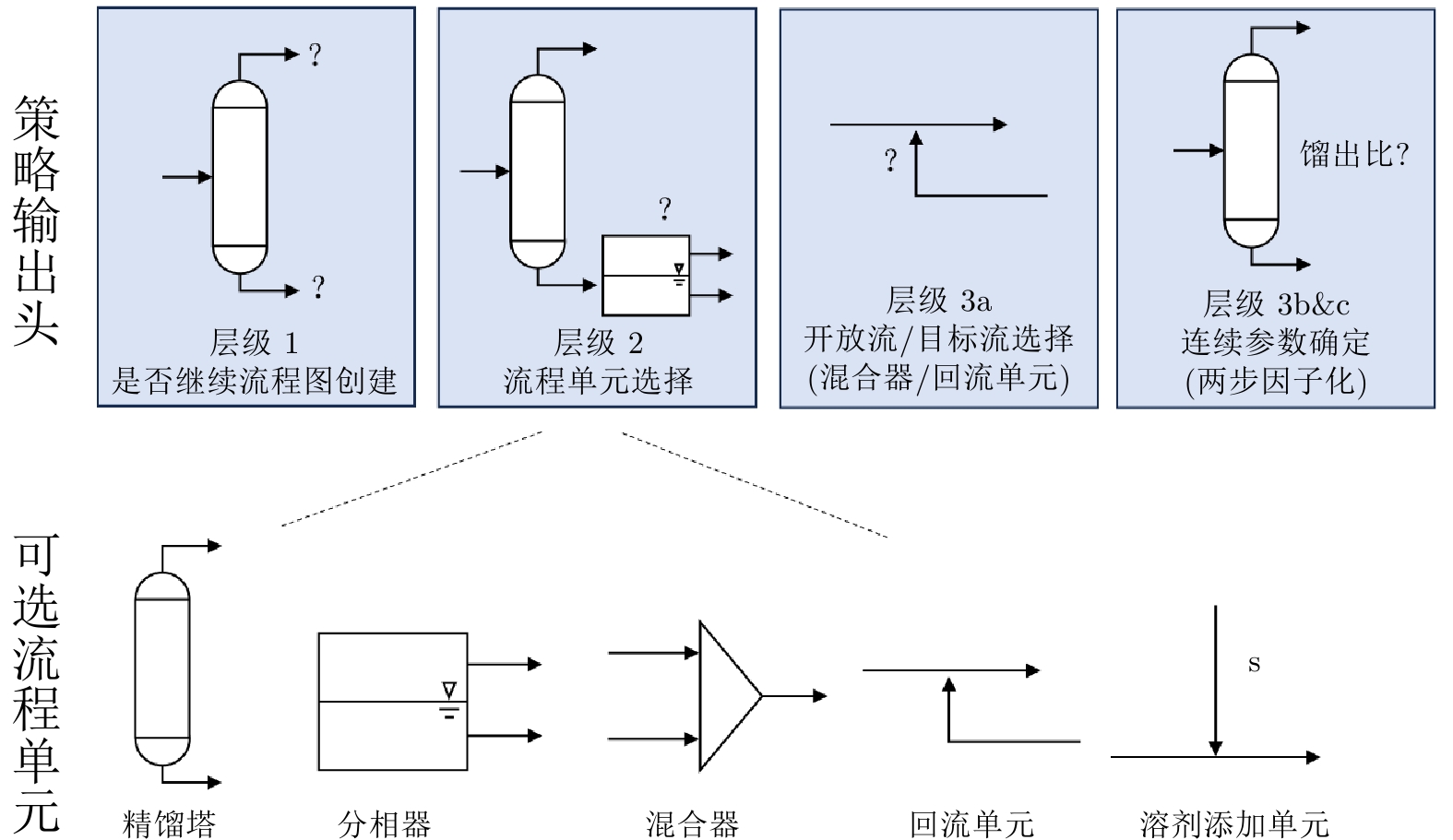

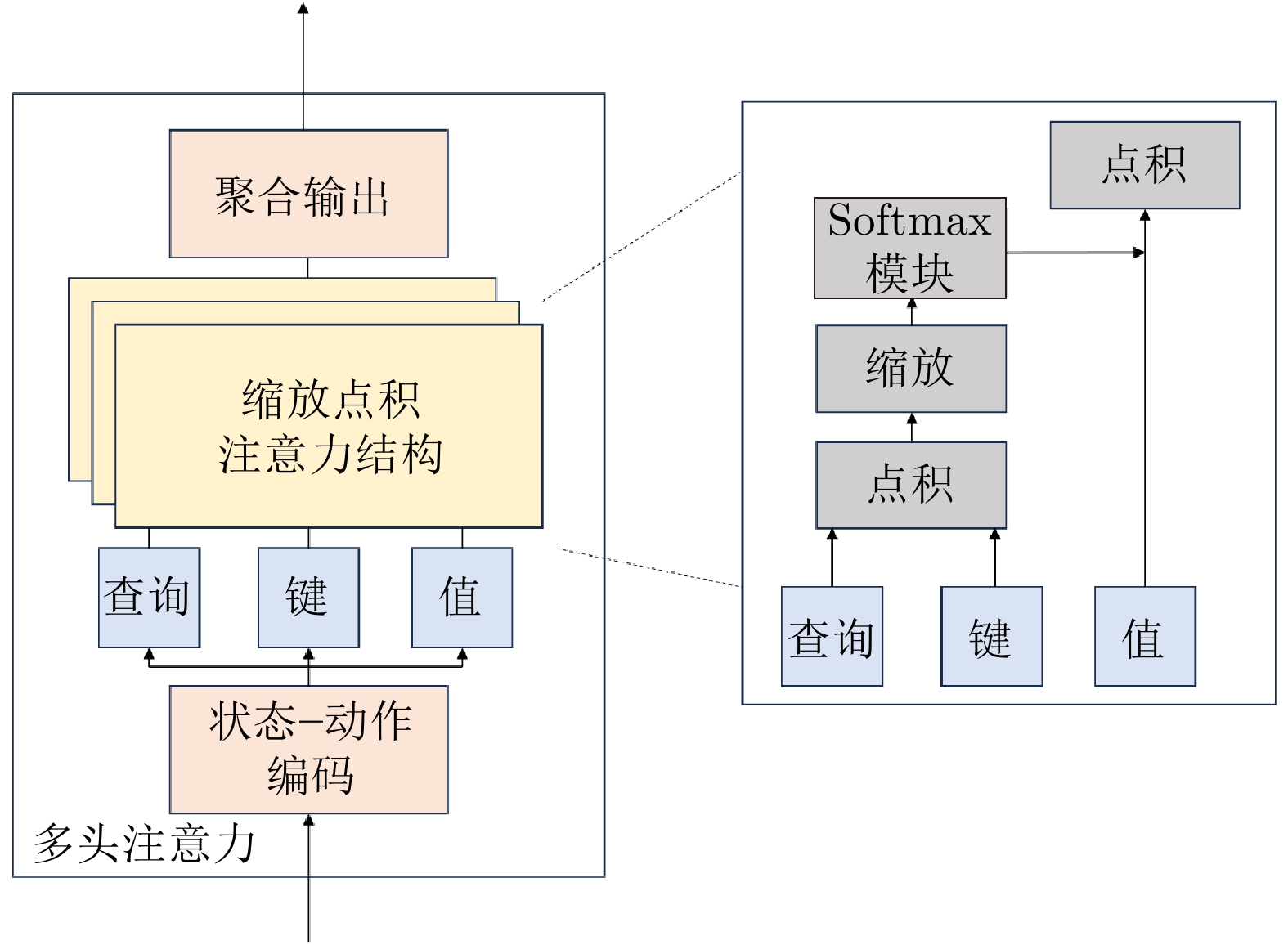

Stops L, Leenhouts R, Gao Q H, Schweidtmann A M. Flowsheet generation through hierarchical reinforcement learning and graph neural networks. AIChE Journal, 2023, 69(1): Article No. e17938 doi: 10.1002/aic.17938

|

|

[38]

|

Masson W, Ranchod P, Konidaris G. Reinforcement learning with parameterized actions. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, USA: AAAI Press, 2016. 1934−1940

|

|

[39]

|

Göttl Q, Pirnay J, Burger J, Grimm D G. Deep reinforcement learning enables conceptual design of processes for separating azeotropic mixtures without prior knowledge. Computers & Chemical Engineering, 2025, 194: Article No. 108975

|

|

[40]

|

Zhang H K, Liu X Y, Sun D S, Dabiri A, de Schutter B. Integrated reinforcement learning and optimization for railway timetable rescheduling. IFAC-PapersOnLine, 2024, 58(10): 310−315 doi: 10.1016/j.ifacol.2024.07.358

|

|

[41]

|

Che G, Zhang Y Y, Tang L X, Zhao S N. A deep reinforcement learning based multi-objective optimization for the scheduling of oxygen production system in integrated iron and steel plants. Applied Energy, 2023, 345: Article No. 121332 doi: 10.1016/j.apenergy.2023.121332

|

|

[42]

|

Shakya A K, Pillai G, Chakrabarty S. Reinforcement learning algorithms: A brief survey. Expert Systems With Applications, 2023, 231: Article No. 120495 doi: 10.1016/j.eswa.2023.120495

|

|

[43]

|

Panzer M, Bender B. Deep reinforcement learning in production systems: A systematic literature review. International Journal of Production Research, 2022, 60(13): 4316−4341 doi: 10.1080/00207543.2021.1973138

|

|

[44]

|

Ladosz P, Weng L L, Kim M, Oh H. Exploration in deep reinforcement learning: A survey. Information Fusion, 2022, 85: 1−22 doi: 10.1016/j.inffus.2022.03.003

|

|

[45]

|

Knox W B, Stone P. Interactively shaping agents via human reinforcement: The TAMER framework. In: Proceedings of the 5th International Conference on Knowledge Capture. Redondo Beach, USA: Association for Computing Machinery, 2009. 9−16

|

|

[46]

|

Christiano P F, Leike J, Brown T B, Martic M, Legg S, Amodei D. Deep reinforcement learning from human preferences. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017. 4302−4310

|

|

[47]

|

Devlin S, Grześ M, Kudenko D. An empirical study of potential-based reward shaping and advice in complex, multi-agent systems. Advances in Complex Systems, 2011, 14(2): 251−278 doi: 10.1142/S0219525911002998

|

|

[48]

|

Grześ M, Kudenko D. Plan-based reward shaping for reinforcement learning. In: Proceedings of the 4th International IEEE Conference Intelligent Systems. Varna, Bulgaria: IEEE, 2008. 10-22−10-29

|

|

[49]

|

Grześ M, Kudenko D. Multigrid reinforcement learning with reward shaping. In: Proceedings of the 18th International Conference on Artificial Neural Networks. Prague, Czech Republic: Springer, 2008. 357−366

|

|

[50]

|

Du Y, Li J Q, Chen X L, Duan P Y, Pan Q K. Knowledge-based reinforcement learning and estimation of distribution algorithm for flexible job shop scheduling problem. IEEE Transactions on Emerging Topics in Computational Intelligence, 2023, 7(4): 1036−1050 doi: 10.1109/TETCI.2022.3145706

|

|

[51]

|

Gui Y, Tang D B, Zhu H H, Zhang Y, Zhang Z Q. Dynamic scheduling for flexible job shop using a deep reinforcement learning approach. Computers & Industrial Engineering, 2023, 180: Article No. 109255

|

|

[52]

|

Chow Y, Ghavamzadeh M, Janson L, Pavone M. Risk-constrained reinforcement learning with percentile risk criteria. The Journal of Machine Learning Research, 2017, 18(1): 6070−6120

|

|

[53]

|

Luo L, Yan X S. Scheduling of stochastic distributed hybrid flow-shop by hybrid estimation of distribution algorithm and proximal policy optimization. Expert Systems With Applications, 2025, 271: Article No. 126523 doi: 10.1016/j.eswa.2025.126523

|

|

[54]

|

Li H P, Wan Z Q, He H B. Real-time residential demand response. IEEE Transactions on Smart Grid, 2020, 11(5): 4144−4154 doi: 10.1109/TSG.2020.2978061

|

|

[55]

|

Dai J T, Ji J M, Yang L, Zheng Q, Pan G. Augmented proximal policy optimization for safe reinforcement learning. In: Proceedings of the 37th AAAI Conference on Artificial Intelligence. Washington, USA: AAAI Press, 2023. 7288−7295

|

|

[56]

|

Gu S D, Yang L, Du Y L, Chen G, Walter F, Wang J, et al. A review of safe reinforcement learning: Methods, theories, and applications. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(12): 11216−11235 doi: 10.1109/TPAMI.2024.3457538

|

|

[57]

|

Yu D J, Zou W J, Yang Y J, Ma H T, Li S E, Yin Y M, et al. Safe model-based reinforcement learning with an uncertainty-aware reachability certificate. IEEE Transactions on Automation Science and Engineering, 2024, 21(3): 4129−4142 doi: 10.1109/TASE.2023.3292388

|

|

[58]

|

Kou P, Liang D L, Wang C, Wu Z H, Gao L. Safe deep reinforcement learning-based constrained optimal control scheme for active distribution networks. Applied Energy, 2020, 264: Article No. 114772 doi: 10.1016/j.apenergy.2020.114772

|

|

[59]

|

Song Y, Zhang B, Wen C B, Wang D, Wei G L. Model predictive control for complicated dynamic systems: A survey. International Journal of Systems Science, 2025, 56(9): 2168−2193 doi: 10.1080/00207721.2024.2439473

|

|

[60]

|

Zanon M, Gros S. Safe reinforcement learning using robust MPC. IEEE Transactions on Automatic Control, 2021, 66(8): 3638−3652 doi: 10.1109/TAC.2020.3024161

|

|

[61]

|

Sui Y N, Gotovos A, Burdick J W, Krause A. Safe exploration for optimization with Gaussian processes. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: JMLR.org, 2015. 997−1005

|

|

[62]

|

Turchetta M, Berkenkamp F, Krause A. Safe exploration in finite Markov decision processes with Gaussian processes. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 4312−4320

|

|

[63]

|

Yang H K, Bernal D E, Franzoi R E, Engineer F G, Kwon K, Lee S, et al. Integration of crude-oil scheduling and refinery planning by Lagrangean decomposition. Computers & Chemical Engineering, 2020, 138: Article No. 106812

|

|

[64]

|

Castro P M. Optimal scheduling of a multiproduct batch chemical plant with preemptive changeover tasks. Computers & Chemical Engineering, 2022, 162: Article No. 107818

|

|

[65]

|

Franzoi R E, Menezes B C, Kelly J D, Gut J A W, Grossmann I E. Large-scale optimization of nonconvex MINLP refinery scheduling. Computers & Chemical Engineering, 2024, 186: Article No. 108678

|

|

[66]

|

Sun L, Lin L, Li H J, Gen M. Large scale flexible scheduling optimization by a distributed evolutionary algorithm. Computers & Industrial Engineering, 2019, 128: 894−904

|

|

[67]

|

Zhang W T, Du W, Yu G, He R C, Du W L, Jin Y C. Knowledge-assisted dual-stage evolutionary optimization of large-scale crude oil scheduling. IEEE Transactions on Emerging Topics in Computational Intelligence, 2024, 8(2): 1567−1581 doi: 10.1109/TETCI.2024.3353590

|

|

[68]

|

Xu M, Mei Y, Zhang F F, Zhang M J. Genetic programming with lexicase selection for large-scale dynamic flexible job shop scheduling. IEEE Transactions on Evolutionary Computation, 2024, 28(5): 1235−1249 doi: 10.1109/TEVC.2023.3244607

|

|

[69]

|

Zheng Y, Wang J, Wang C M, Huang C Y, Yang J F, Xie N. Strategic bidding of wind farms in medium-to-long-term rolling transactions: A bi-level multi-agent deep reinforcement learning approach. Applied Energy, 2025, 383: Article No. 125265 doi: 10.1016/j.apenergy.2024.125265

|

|

[70]

|

Liu Y S, Fan J X, Shen W M. A deep reinforcement learning approach with graph attention network and multi-signal differential reward for dynamic hybrid flow shop scheduling problem. Journal of Manufacturing Systems, 2025, 80: 643−661 doi: 10.1016/j.jmsy.2025.03.028

|

|

[71]

|

Bashyal A, Boroukhian T, Veerachanchai P, Naransukh M, Wicaksono H. Multi-agent deep reinforcement learning based demand response and energy management for heavy industries with discrete manufacturing systems. Applied Energy, 2025, 392: Article No. 125990 doi: 10.1016/j.apenergy.2025.125990

|

|

[72]

|

de Mars P, O'Sullivan A. Applying reinforcement learning and tree search to the unit commitment problem. Applied Energy, 2021, 302: Article No. 117519 doi: 10.1016/j.apenergy.2021.117519

|

|

[73]

|

Zhu L W, Takami G, Kawahara M, Kanokogi H, Matsubara T. Alleviating parameter-tuning burden in reinforcement learning for large-scale process control. Computers & Chemical Engineering, 2022, 158: Article No. 107658

|

|

[74]

|

Hu R, Huang Y F, Wu X, Qian B, Wang L, Zhang Z Q. Collaborative Q-learning hyper-heuristic evolutionary algorithm for the production and transportation integrated scheduling of silicon electrodes. Swarm and Evolutionary Computation, 2024, 86: Article No. 101498 doi: 10.1016/j.swevo.2024.101498

|

|

[75]

|

Chen X, Li Y B, Wang K P, Wang L, Liu J, Wang J, et al. Reinforcement learning for distributed hybrid flowshop scheduling problem with variable task splitting towards mass personalized manufacturing. Journal of Manufacturing Systems, 2024, 76: 188−206 doi: 10.1016/j.jmsy.2024.07.011

|

|

[76]

|

Bouton M, Julian K D, Nakhaei A, Fujimura K, Kochenderfer M J. Decomposition methods with deep corrections for reinforcement learning. Autonomous Agents and Multi-agent Systems, 2019, 33(3): 330−352 doi: 10.1007/s10458-019-09407-z

|

|

[77]

|

Chen Y D, Ding J L, Chen Q D. A reinforcement learning based large-scale refinery production scheduling algorithm. IEEE Transactions on Automation Science and Engineering, 2024, 21(4): 6041−6055 doi: 10.1109/TASE.2023.3321612

|

|

[78]

|

Bonnans J F. Lectures on stochastic programming: Modeling and theory. SIAM Review, 2011, 53(1): 181−183

|

|

[79]

|

Li Z K, Ierapetritou M G. Robust optimization for process scheduling under uncertainty. Industrial & Engineering Chemistry Research, 2008, 47(12): 4148−4157

|

|

[80]

|

Glomb L, Liers F, Rösel F. A rolling-horizon approach for multi-period optimization. European Journal of Operational Research, 2022, 300(1): 189−206 doi: 10.1016/j.ejor.2021.07.043

|

|

[81]

|

Qasim M, Wong K Y, Komarudin. A review on aggregate production planning under uncertainty: Insights from a fuzzy programming perspective. Engineering Applications of Artificial Intelligence, 2024, 128(C): Article No. 107436

|

|

[82]

|

Wu X Q, Yan X F, Guan D H, Wei M Q. A deep reinforcement learning model for dynamic job-shop scheduling problem with uncertain processing time. Engineering Applications of Artificial Intelligence, 2024, 131: Article No. 107790 doi: 10.1016/j.engappai.2023.107790

|

|

[83]

|

Ruiz-Rodríguez M L, Kubler S, Robert J, le Traon Y. Dynamic maintenance scheduling approach under uncertainty: Comparison between reinforcement learning, genetic algorithm simheuristic, dispatching rules. Expert Systems With Applications, 2024, 248: Article No. 123404 doi: 10.1016/j.eswa.2024.123404

|

|

[84]

|

Rangel-Martinez D, Ricardez-Sandoval L A. Recurrent reinforcement learning strategy with a parameterized agent for online scheduling of a state task network under uncertainty. Industrial & Engineering Chemistry Research, 2025, 64(13): 7126−7140

|

|

[85]

|

Huang M Y, He R C, Dai X, Du W L, Qian F. Reinforcement learning based gasoline blending optimization: Achieving more efficient nonlinear online blending of fuels. Chemical Engineering Science, 2024, 300: Article No. 120574 doi: 10.1016/j.ces.2024.120574

|

|

[86]

|

Peng W L, Lin X J, Li H T. Critical chain based proactive-reactive scheduling for resource-constrained project scheduling under uncertainty. Expert Systems With Applications, 2023, 214: Article No. 119188 doi: 10.1016/j.eswa.2022.119188

|

|

[87]

|

Grumbach F, Müller A, Reusch P, Trojahn S. Robust-stable scheduling in dynamic flow shops based on deep reinforcement learning. Journal of Intelligent Manufacturing, 2024, 35(2): 667−686 doi: 10.1007/s10845-022-02069-x

|

|

[88]

|

Huang J P, Gao L, Li X Y. A hierarchical multi-action deep reinforcement learning method for dynamic distributed job-shop scheduling problem with job arrivals. IEEE Transactions on Automation Science and Engineering, 2025, 22: 2501−2513 doi: 10.1109/TASE.2024.3380644

|

|

[89]

|

Infantes G, Roussel S, Pereira P, Jacquet A, Benazera E. Learning to solve job shop scheduling under uncertainty. In: Proceedings of the 21st International Conference on Integration of Constraint Programming, Artificial Intelligence, and Operations Research. Uppsala, Sweden: Springer, 2024. 329−345

|

|

[90]

|

de Puiseau C W, Meyes R, Meisen T. On reliability of reinforcement learning based production scheduling systems: A comparative survey. Journal of Intelligent Manufacturing, 2022, 33(4): 911−927 doi: 10.1007/s10845-022-01915-2

|

|

[91]

|

Lee C Y, Huang Y T, Chen P J. Robust-optimization-guiding deep reinforcement learning for chemical material production scheduling. Computers & Chemical Engineering, 2024, 187: Article No. 108745

|

|

[92]

|

Rangel-Martinez D, Ricardez-Sandoval L A. A recurrent reinforcement learning strategy for optimal scheduling of partially observable job-shop and flow-shop batch chemical plants under uncertainty. Computers & Chemical Engineering, 2024, 188: Article No. 108748

|
