[1]
Qiao H, Chen J H, Huang X. A survey of brain-inspired intelligent robots: Integration of vision, decision, motion control, and musculoskeletal systems. IEEE Transactions on Cybernetics, 2022, 52(10): 11267−11280 doi: 10.1109/TCYB.2021.3071312

[2]
Bermudez-Contreras E, Clark B J, Wilber A. The neuroscience of spatial navigation and the relationship to artificial intelligence. Frontiers in Computational Neuroscience, 2020, 14: Article No. 63 doi: 10.3389/fncom.2020.00063

[3]
Poulter S, Hartley T, Lever C. The neurobiology of mammalian navigation. Current Biology, 2018, 28(17): R1023−R1042 doi: 10.1016/j.cub.2018.05.050

|

|

[4]

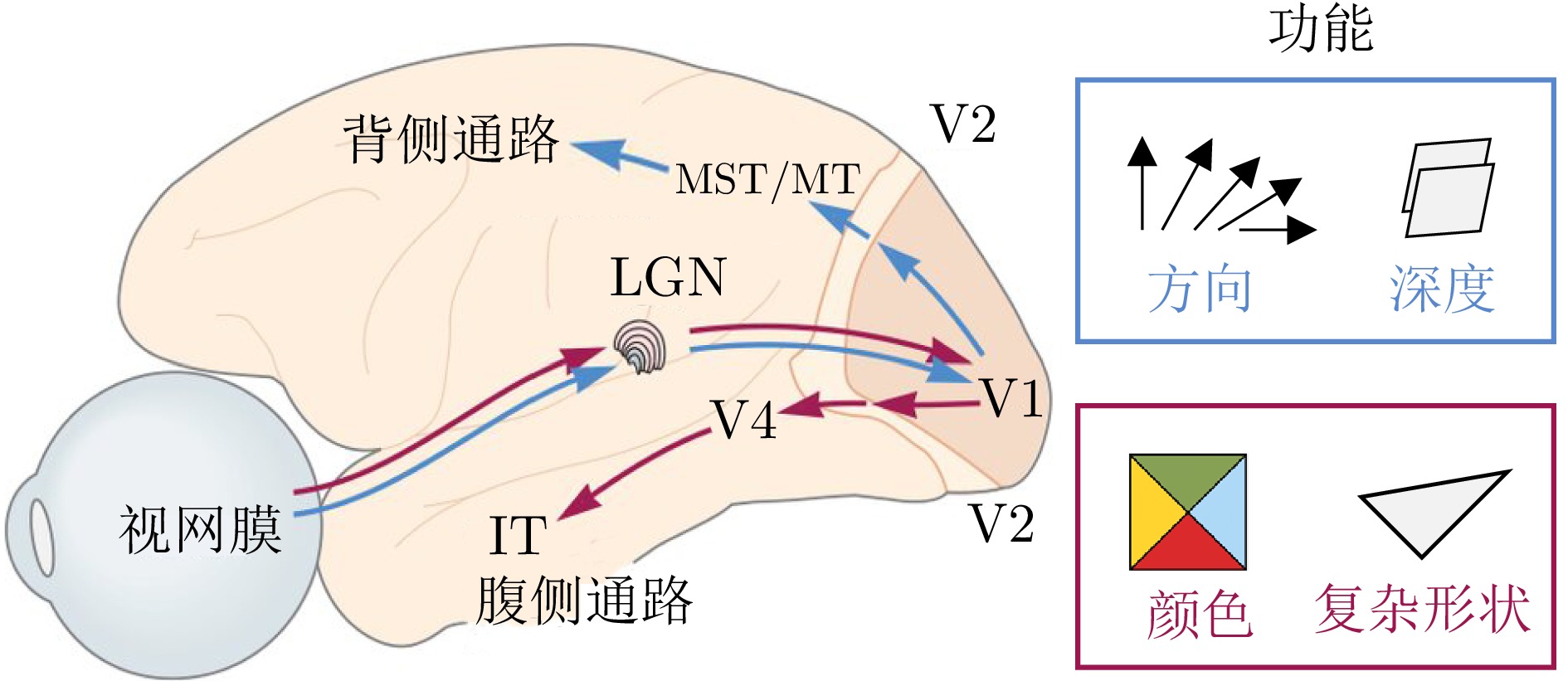

|

Meng Zhi-Lin, Zhao Dong-Ye, Si Bai-Lu, Dai Shi-Jie. A survey on robot navigation based on mammalian spatial cognition. Robot, 2023, 45(4): 496−512 (in Chinese)

|

|

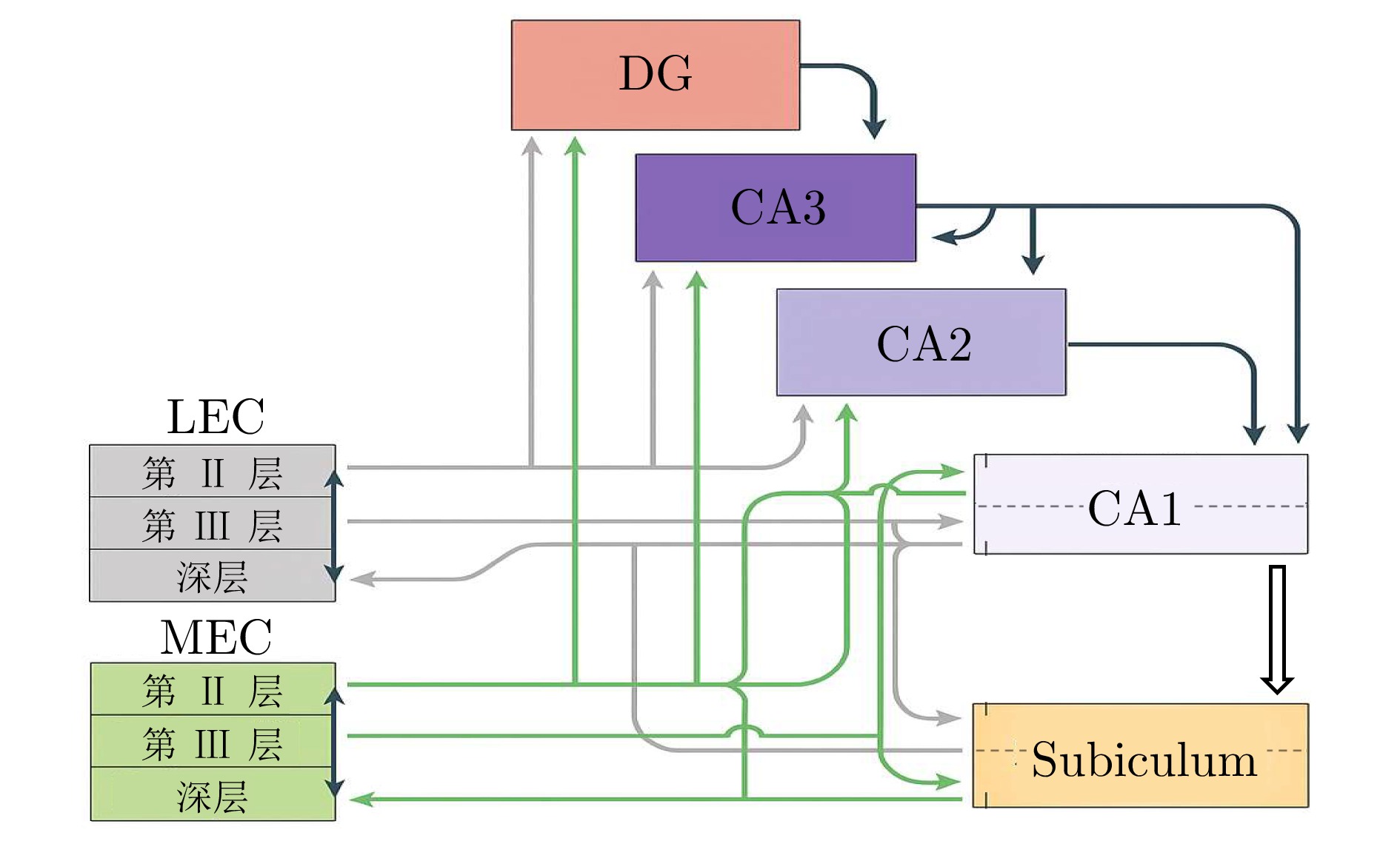

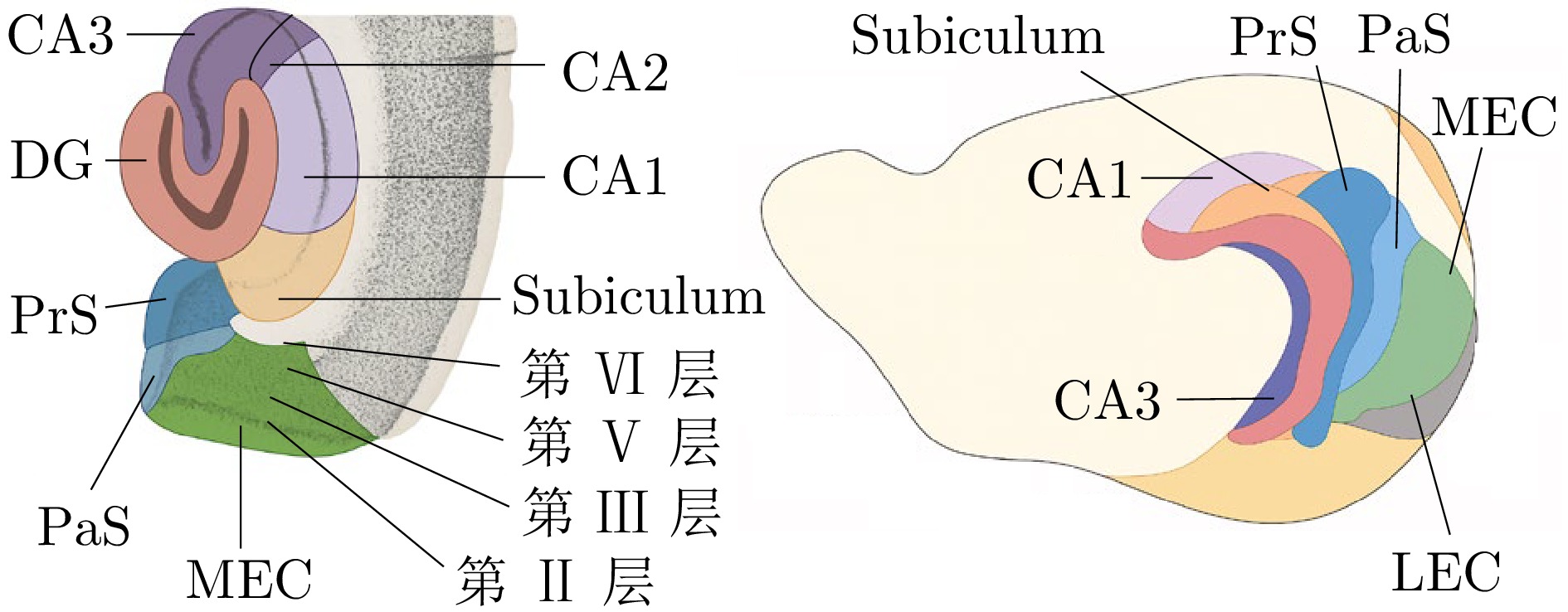

[5]

|

Heald J B, Lengyel M, Wolpert D M. Contextual inference in learning and memory. Trends in Cognitive Sciences, 2023, 27(1): 43−64 doi: 10.1016/j.tics.2022.10.004

[6]
Rolls E T. The hippocampus, ventromedial prefrontal cortex, and episodic and semantic memory. Progress in Neurobiology, 2022, 217: Article No. 102334 doi: 10.1016/j.pneurobio.2022.102334

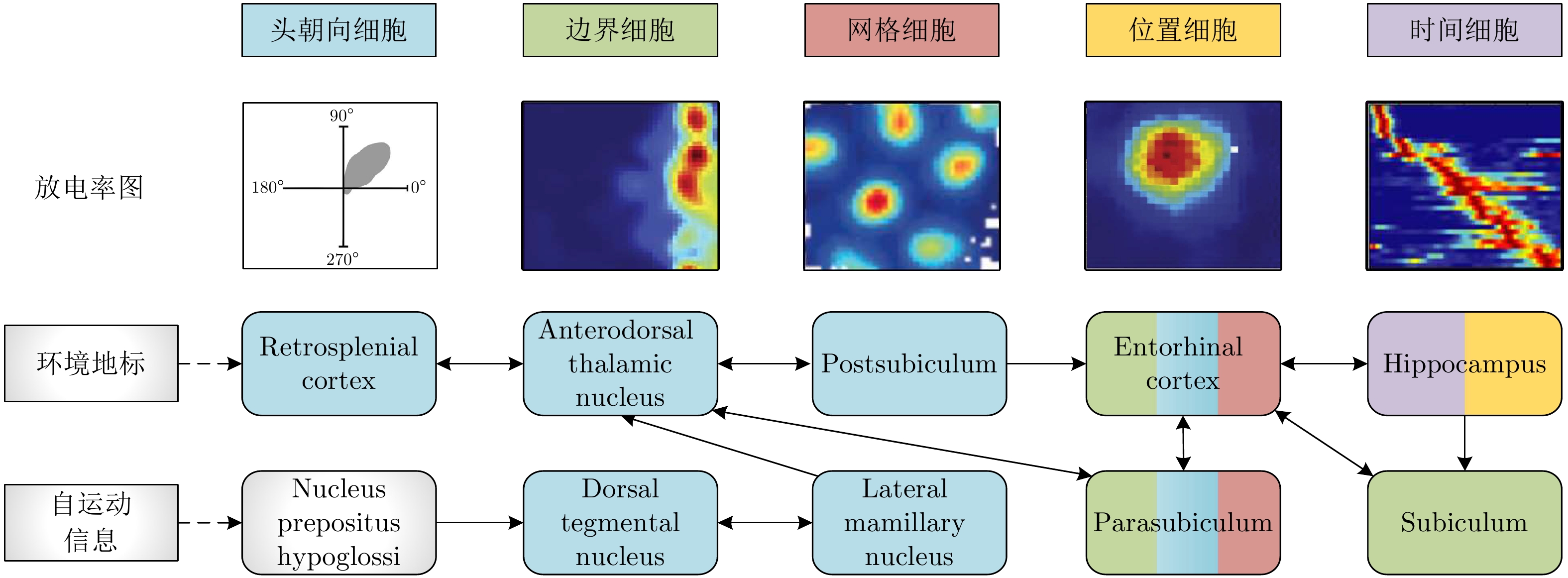

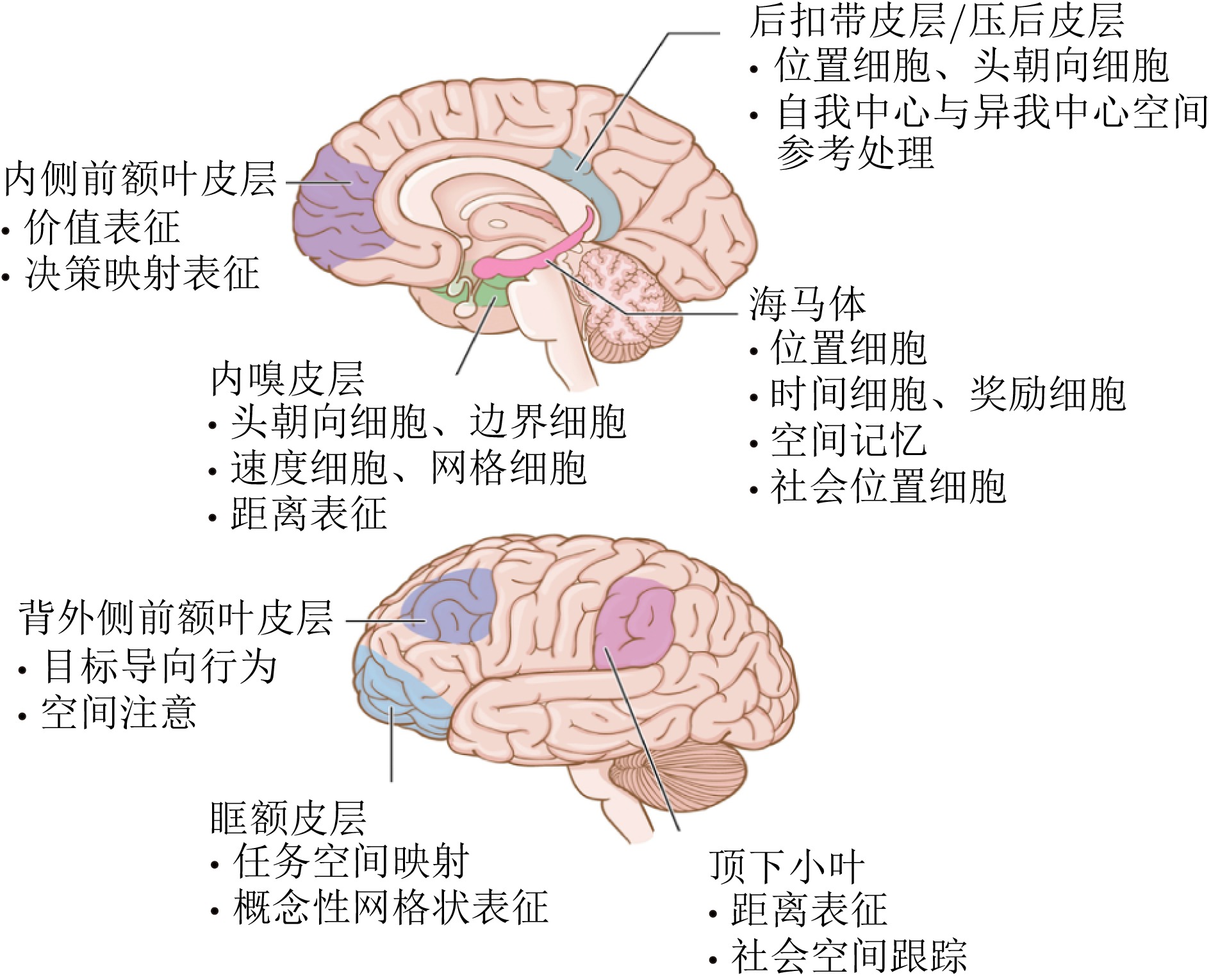

|

|

[7]

|

Whittington J C R, McCaffary D, Bakermans J J W, Behrens T E J. How to build a cognitive map. Nature Neuroscience, 2022, 25(10): 1257−1272 doi: 10.1038/s41593-022-01153-y

[8]
O'Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Oxford: Oxford University Press, 1978.

[9]
Zhu Xiang-Wei, Shen Dan, Xiao Kai, Ma Yue-Xin, Liao Xiang, Gu Fu-Qiang, et al. Mechanisms, algorithms, implementation and perspectives of brain-inspired navigation. Acta Aeronautica et Astronautica Sinica, 2023, 44(19): Article No. 28569 (in Chinese)

[10]
Li Wei-Bin, Qin Chen-Hao, Zhang Tian-Yi, Mao Xin, Yang Dong-Hao, Ji Wen-Bo, et al. Review: Brain-inspired intelligent navigation modeling technology and its application. Systems Engineering and Electronics, 2024, 46(11): 3844−3861 (in Chinese)

[11]
Yang Chuang, Liu Jian-Ye, Xiong Zhi, Lai Ji-Zhou, Xiong Jun. Brain-inspired navigation technology integrating perception and action decision: A review and outlook. Acta Aeronautica et Astronautica Sinica, 2020, 41(1): Article No. 023280 (in Chinese)

|

|

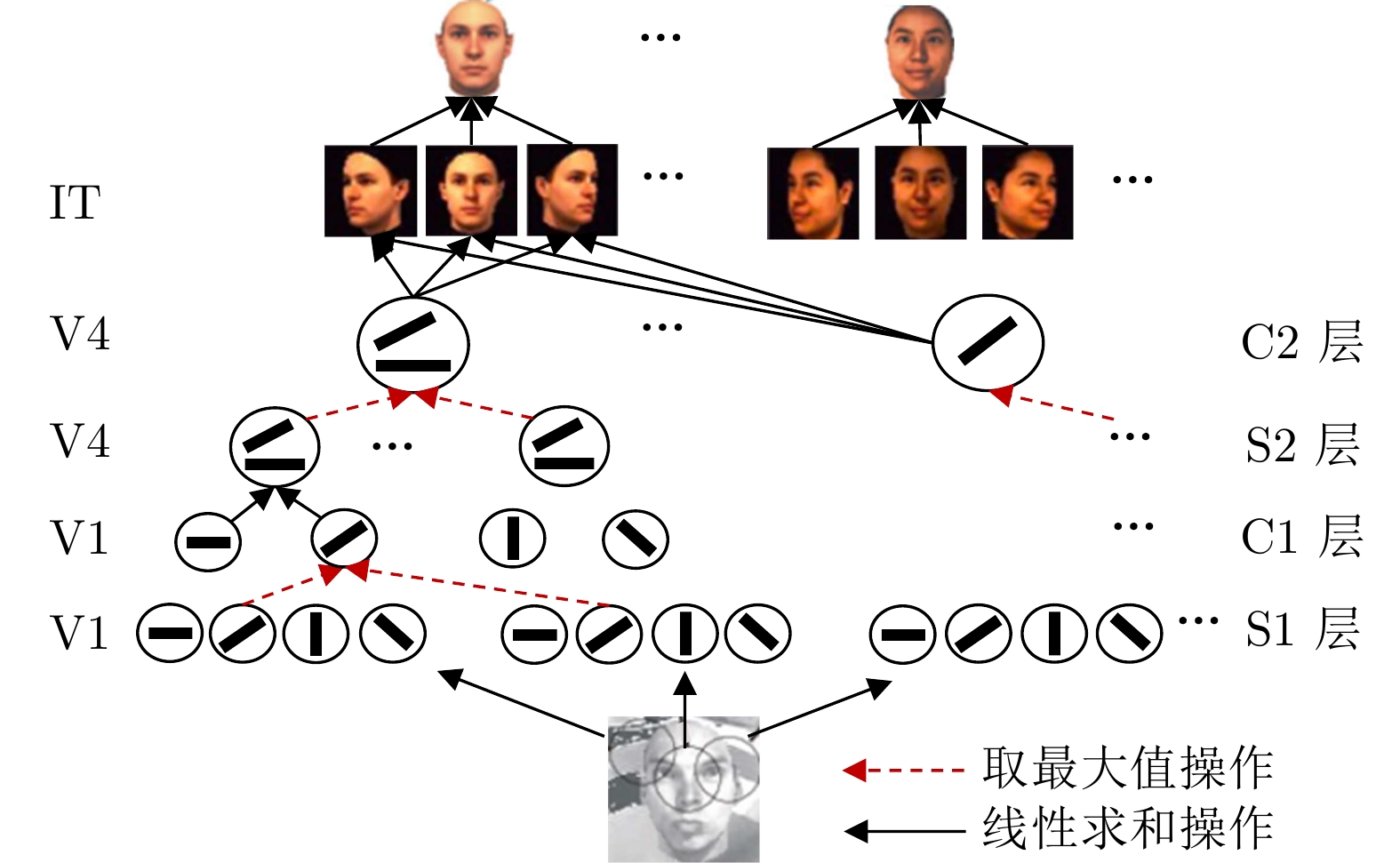

[12]

|

Bai Y N, Shao S L, Zhang J, Zhao X Z, Fang C X, Wang T, et al. A review of brain-inspired cognition and navigation technology for mobile robots. Cyborg and Bionic Systems, 2024, 5: Article No. 0128

[13]
Tolman E C. Cognitive maps in rats and men. Psychological Review, 1948, 55(4): 189−208 doi: 10.1037/h0061626

[14]
O'Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Research, 1971, 34(1): 171−175 doi: 10.1016/0006-8993(71)90358-1

[15]
Hafting T, Fyhn M, Molden S, Moser M B, Moser E I. Microstructure of a spatial map in the entorhinal cortex. Nature, 2005, 436(7052): 801−806 doi: 10.1038/nature03721

[16]
Schafer M, Schiller D. Navigating social space. Neuron, 2018, 100(2): 476−489 doi: 10.1016/j.neuron.2018.10.006

|

|

[17]

|

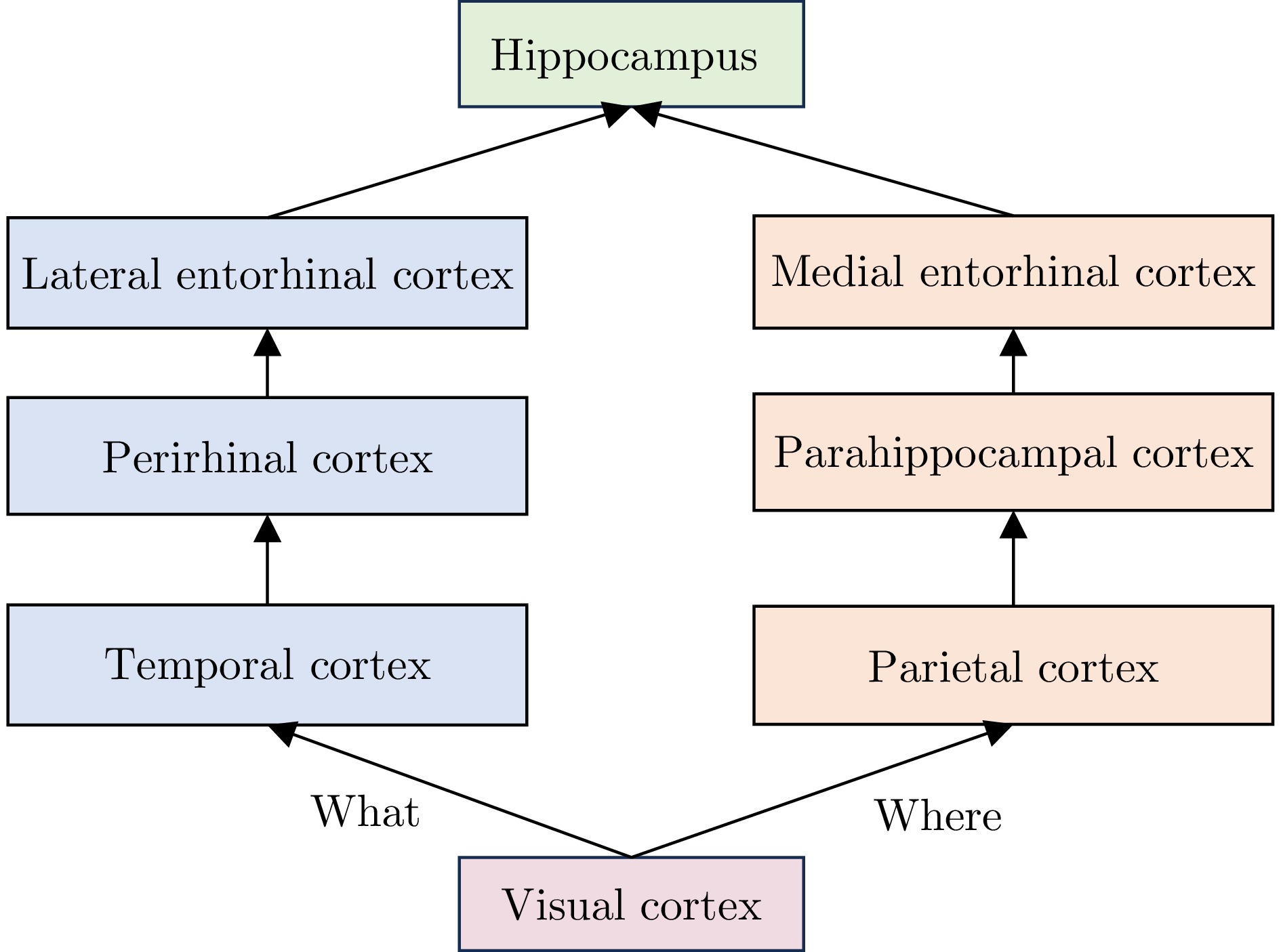

Goodale M A, Milner A D. Separate visual pathways for perception and action. Trends in Neurosciences, 1992, 15(1): 20−25 doi: 10.1016/0166-2236(92)90344-8

[18]
Kravitz D J, Saleem K S, Baker C I, Ungerleider L G, Mishkin M. The ventral visual pathway: An expanded neural framework for the processing of object quality. Trends in Cognitive Sciences, 2013, 17(1): 26−49 doi: 10.1016/j.tics.2012.10.011

[19]
Rolls E T, Deco G, Huang C C, Feng J F. Multiple cortical visual streams in humans. Cerebral Cortex, 2023, 33(7): 3319−3349 doi: 10.1093/cercor/bhac276

[20]
Bracci S, Op de Beeck H P. Understanding human object vision: A picture is worth a thousand representations. Annual Review of Psychology, 2023, 74(1): 113−135 doi: 10.1146/annurev-psych-032720-041031

[21]
Igarashi K M, Lu L, Colgin L L, Moser M B, Moser E I. Coordination of entorhinal-hippocampal ensemble activity during associative learning. Nature, 2014, 510(7503): 143−147 doi: 10.1038/nature13162

[22]
Strange B A, Witter M P, Lein E S, Moser E I. Functional organization of the hippocampal longitudinal axis. Nature Reviews Neuroscience, 2014, 15(10): 655−669 doi: 10.1038/nrn3785

|

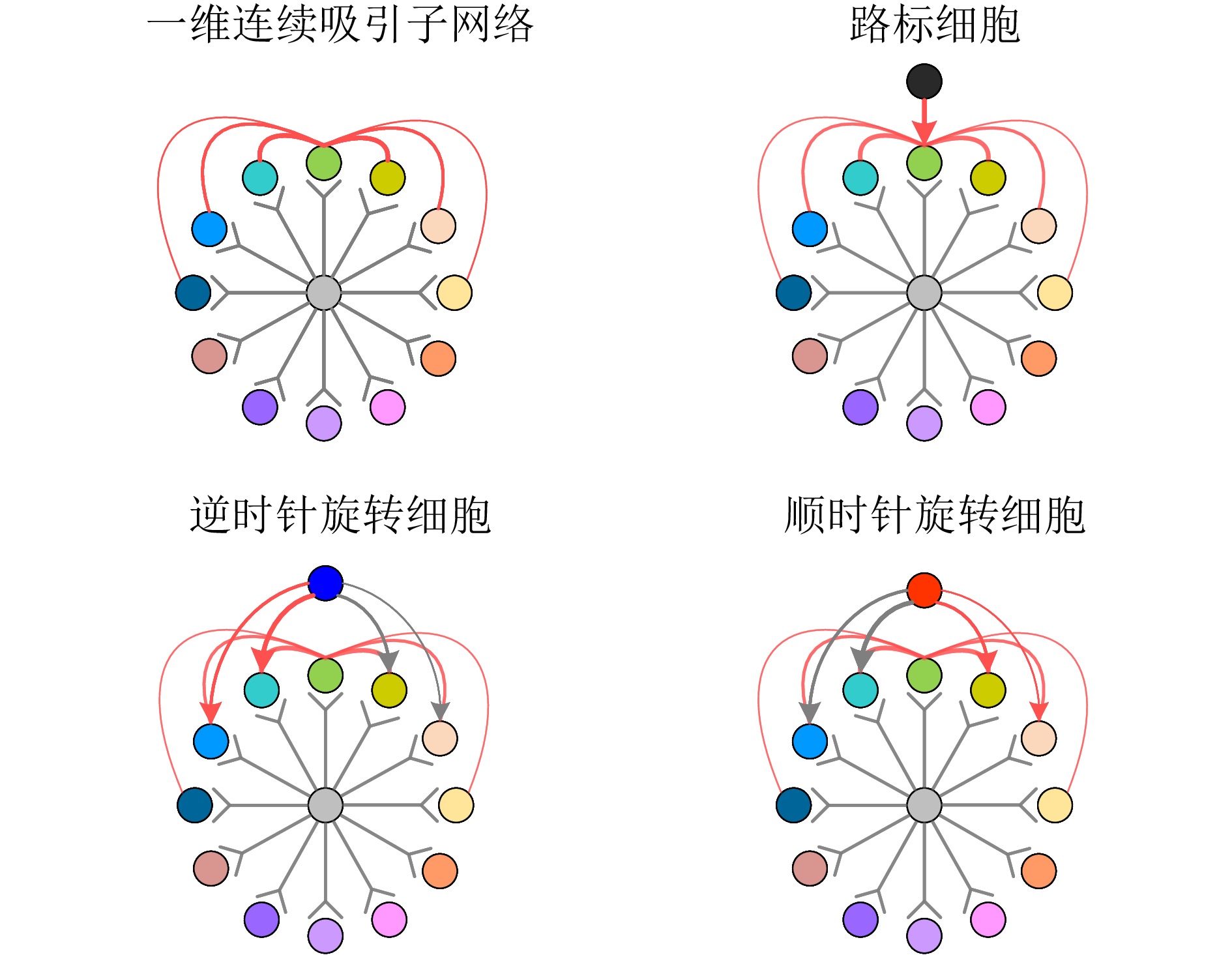

|

[23]

|

Gunn B G, Pruess B S, Gall C M, Lynch G. Input/output relationships for the primary hippocampal circuit. The Journal of Neuroscience, 2025, 45(2): Article No. e0130242024 doi: 10.1523/JNEUROSCI.0130-24.2024

[24]
Moser E I, Roudi Y, Witter M P, Kentros C, Bonhoeffer T, Moser M B. Grid cells and cortical representation. Nature Reviews Neuroscience, 2014, 15(7): 466−481 doi: 10.1038/nrn3766

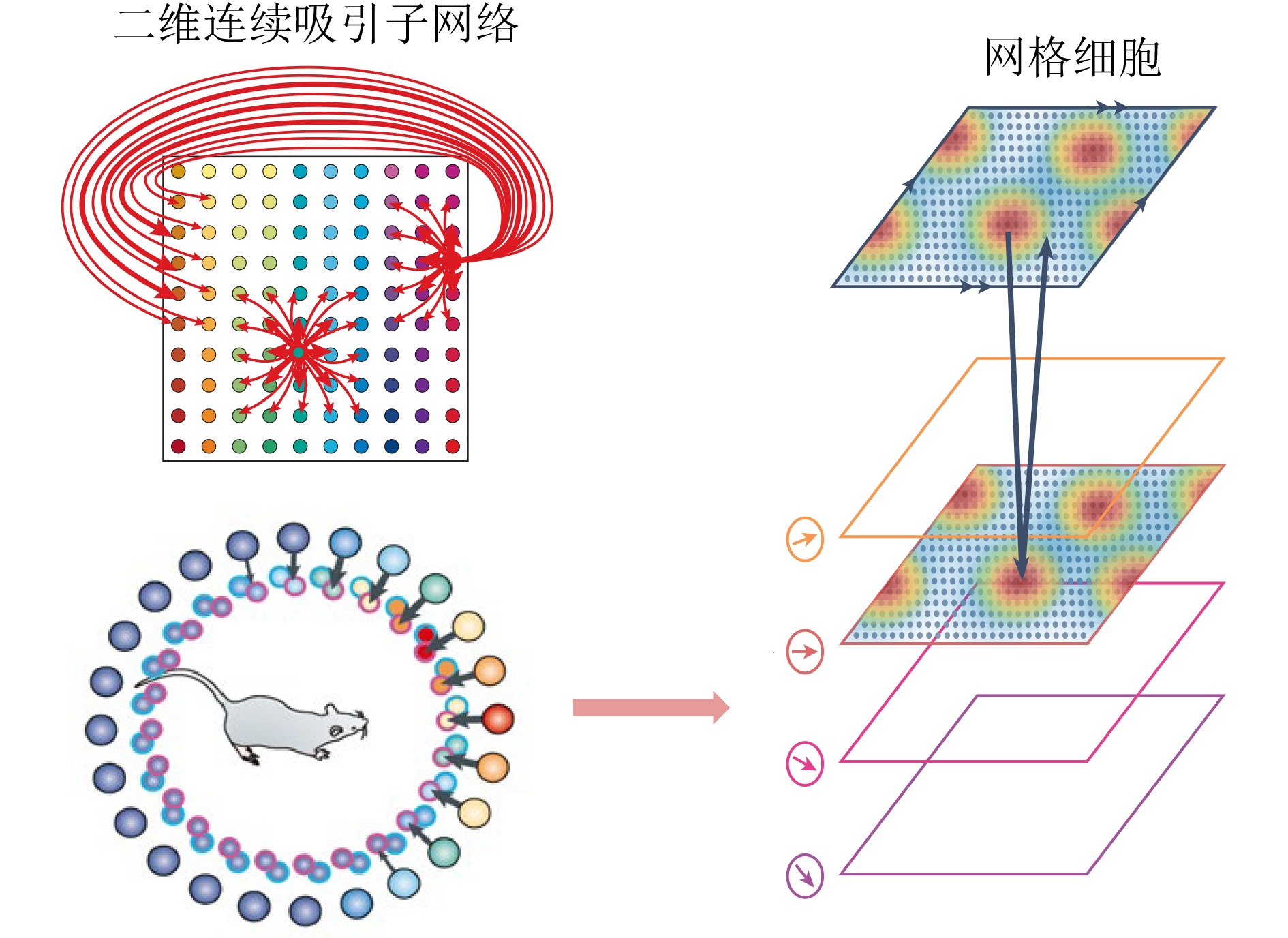

|

|

[25]

|

Igarashi K M. Entorhinal cortex dysfunction in Alzheimer's disease. Trends in Neurosciences, 2023, 46(2): 124−136 doi: 10.1016/j.tins.2022.11.006

[26]
Li T Y, Arleo A, Sheynikhovich D. Modeling place cells and grid cells in multi-compartment environments: Entorhinal-hippocampal loop as a multisensory integration circuit. Neural Networks, 2020, 121: 37−51 doi: 10.1016/j.neunet.2019.09.002

[27]
Sharma A, Nair I R, Yoganarasimha D. Attractor-like dynamics in the subicular complex. The Journal of Neuroscience, 2022, 42(40): 7594−7614 doi: 10.1523/JNEUROSCI.2048-20.2022

|

|

[28]

|

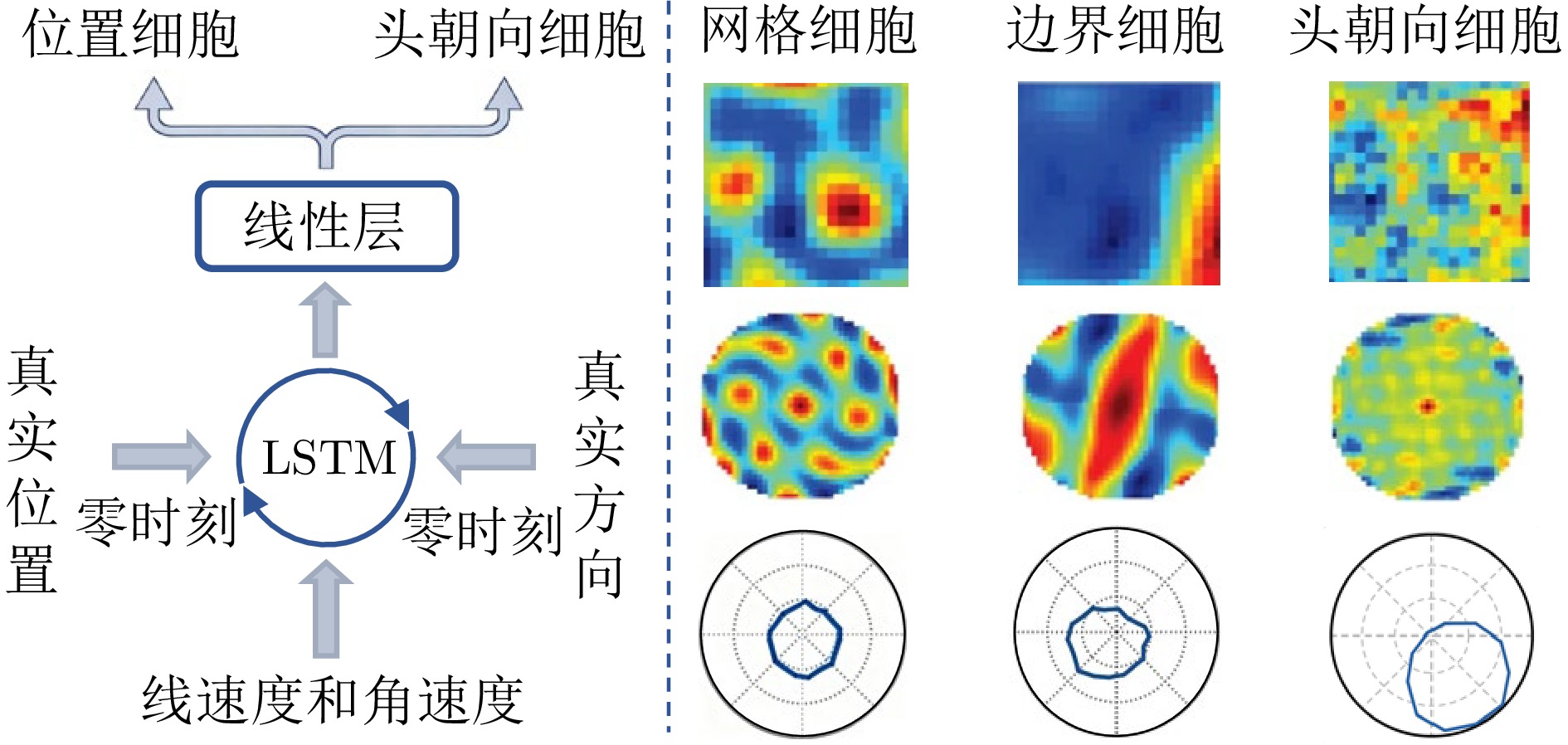

Cossart R, Khazipov R. How development sculpts hippocampal circuits and function. Physiological Reviews, 2022, 102(1): 343−378 doi: 10.1152/physrev.00044.2020

[29]
Grieves R M, Jeffery K J. The representation of space in the brain. Behavioural Processes, 2017, 135: 113−131 doi: 10.1016/j.beproc.2016.12.012

[30]
Moser E I, Kropff E, Moser M B. Place cells, grid cells, and the brain's spatial representation system. Annual Review of Neuroscience, 2008, 31: 69−89 doi: 10.1146/annurev.neuro.31.061307.090723

[31]
O'Keefe J, Conway D H. Hippocampal place units in the freely moving rat: Why they fire where they fire. Experimental Brain Research, 1978, 31(4): 573−590

|

|

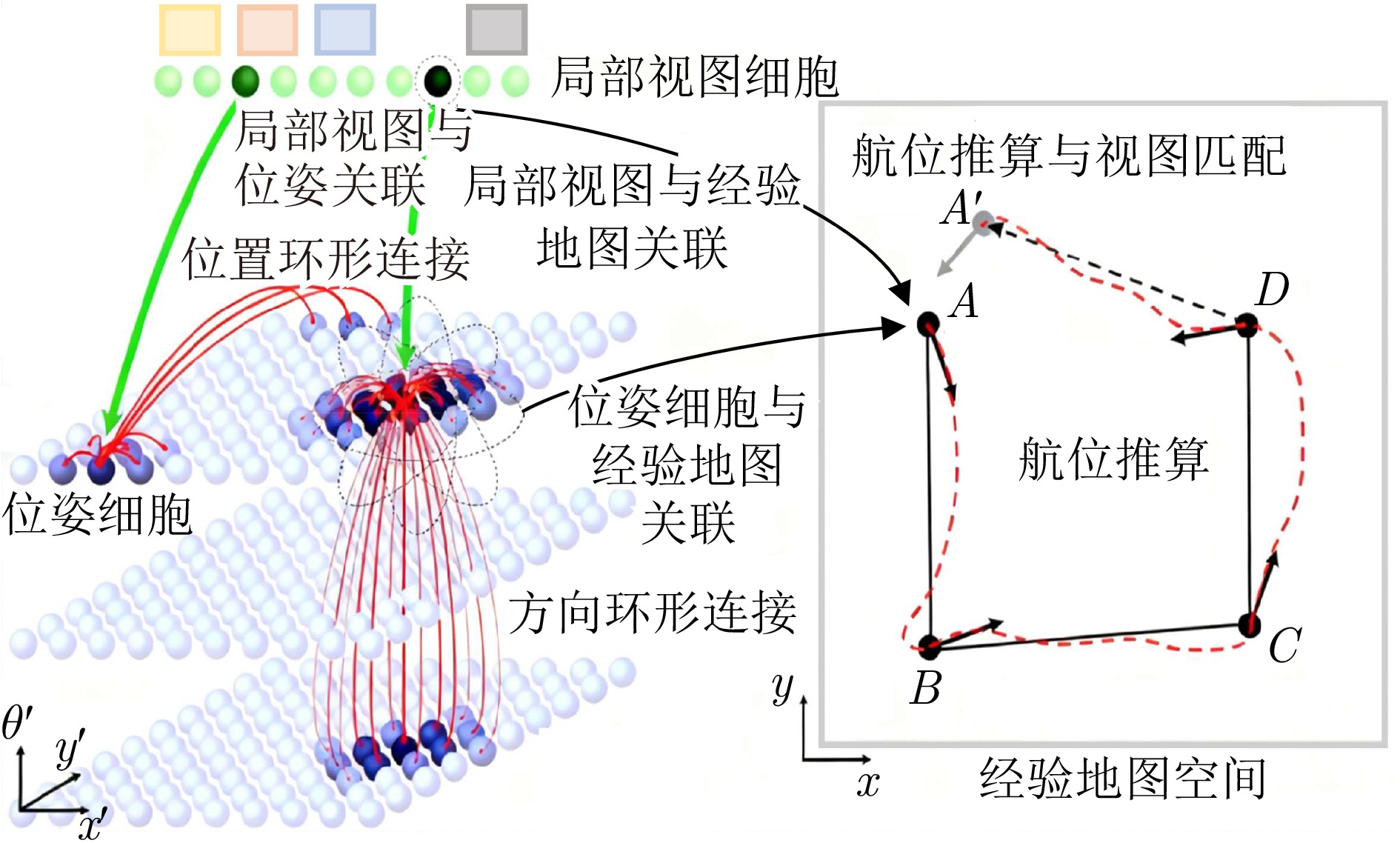

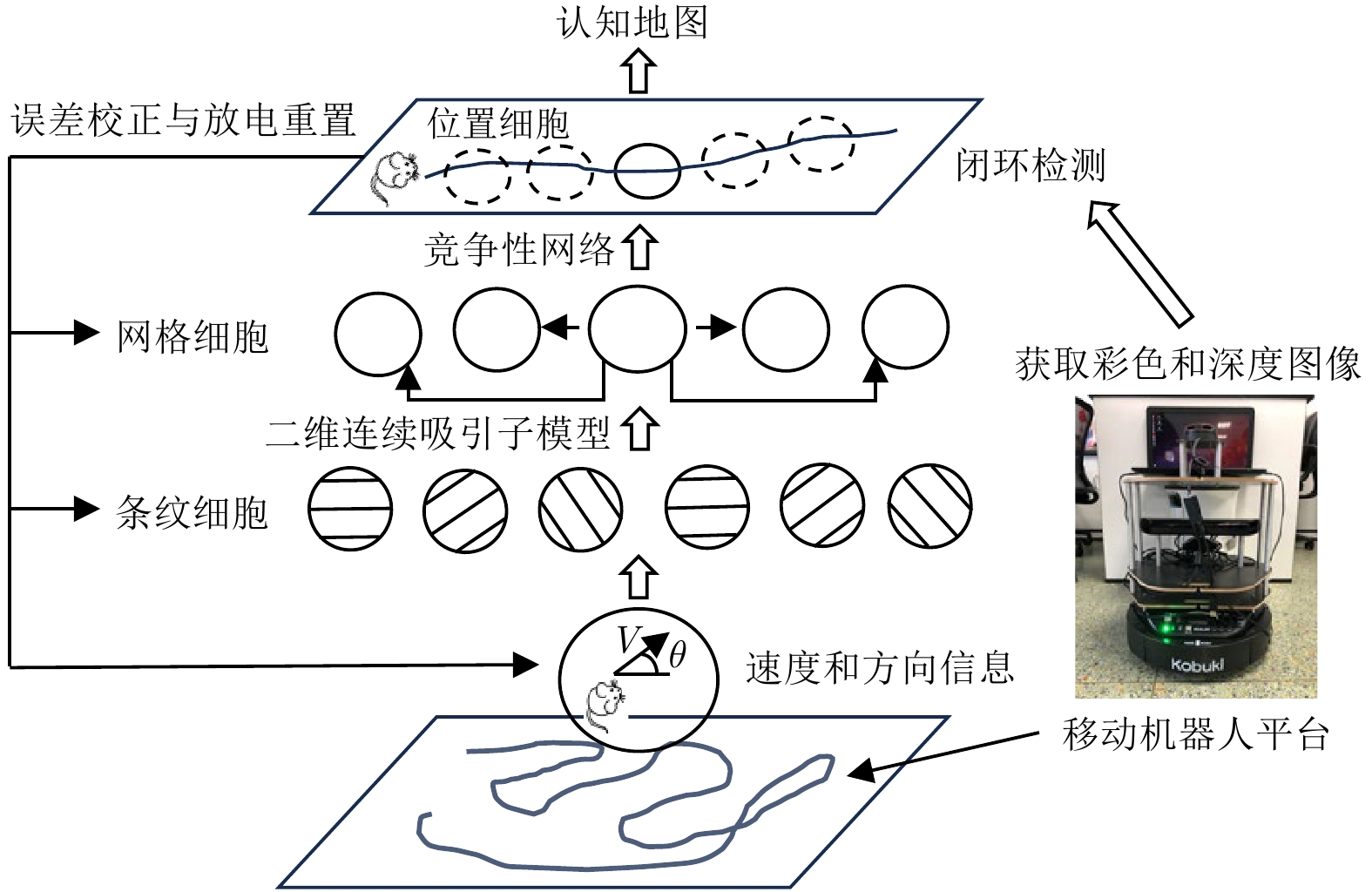

[32]

|

McNaughton B L, Battaglia F P, Jensen O, Moser E I, Moser M B. Path integration and the neural basis of the ‘cognitive map’. Nature Reviews Neuroscience, 2006, 7(8): 663−678 doi: 10.1038/nrn1932

[33]
Madhav M S, Jayakumar R P, Li B Y, Lashkari S G, Wright K, Savelli F, et al. Control and recalibration of path integration in place cells using optic flow. Nature Neuroscience, 2024, 27(8): 1599−1608 doi: 10.1038/s41593-024-01681-9

|

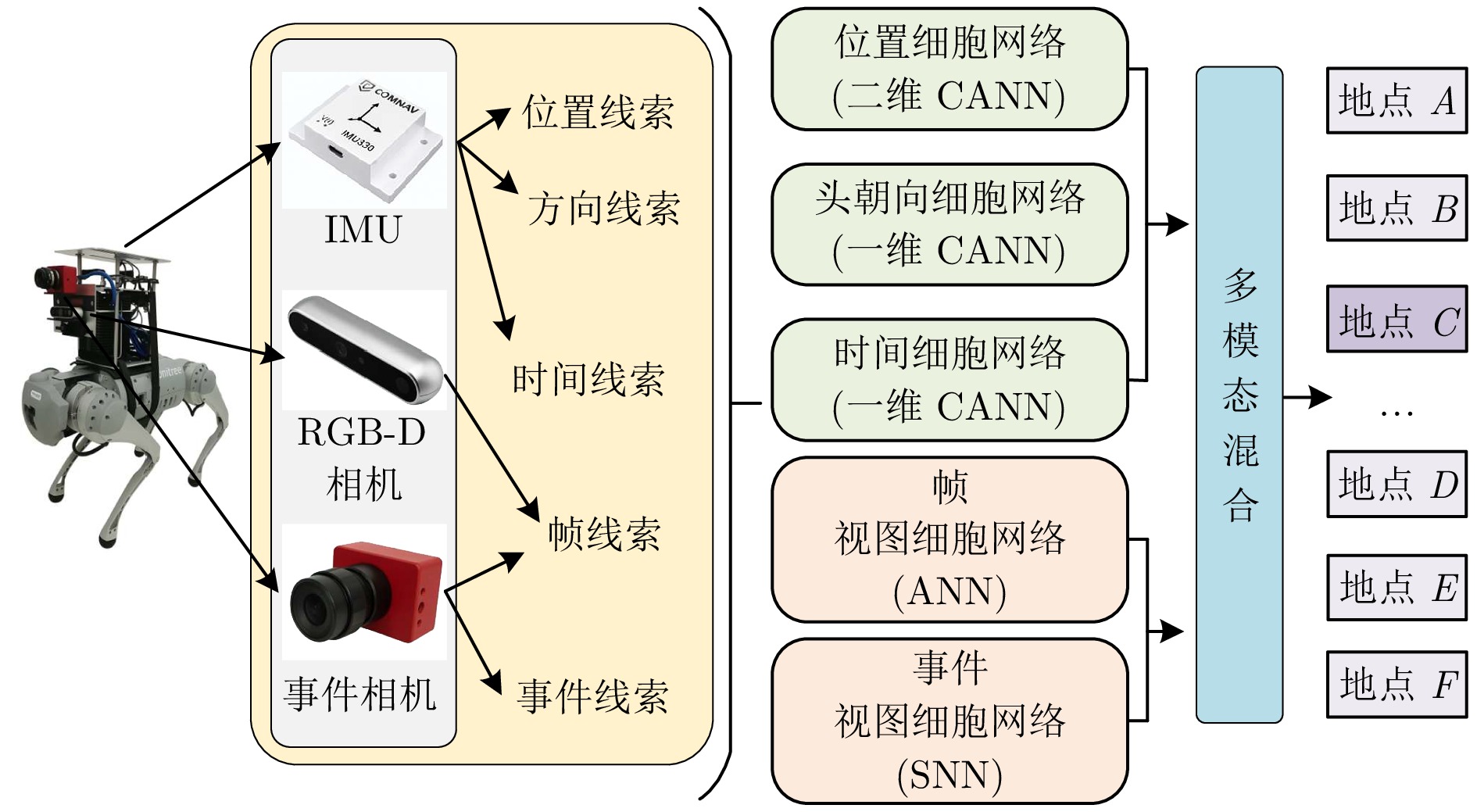

|

[34]

|

Ranck J B Jr. Head direction cells in the deep cell layer of dorsal presubiculum in freely moving rats. Electrical Activity of the Archicortex, 1985: 217−220

[35]
Taube J S, Muller R U, Ranck J B. Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. The Journal of Neuroscience, 1990, 10(2): 420−435 doi: 10.1523/JNEUROSCI.10-02-00420.1990

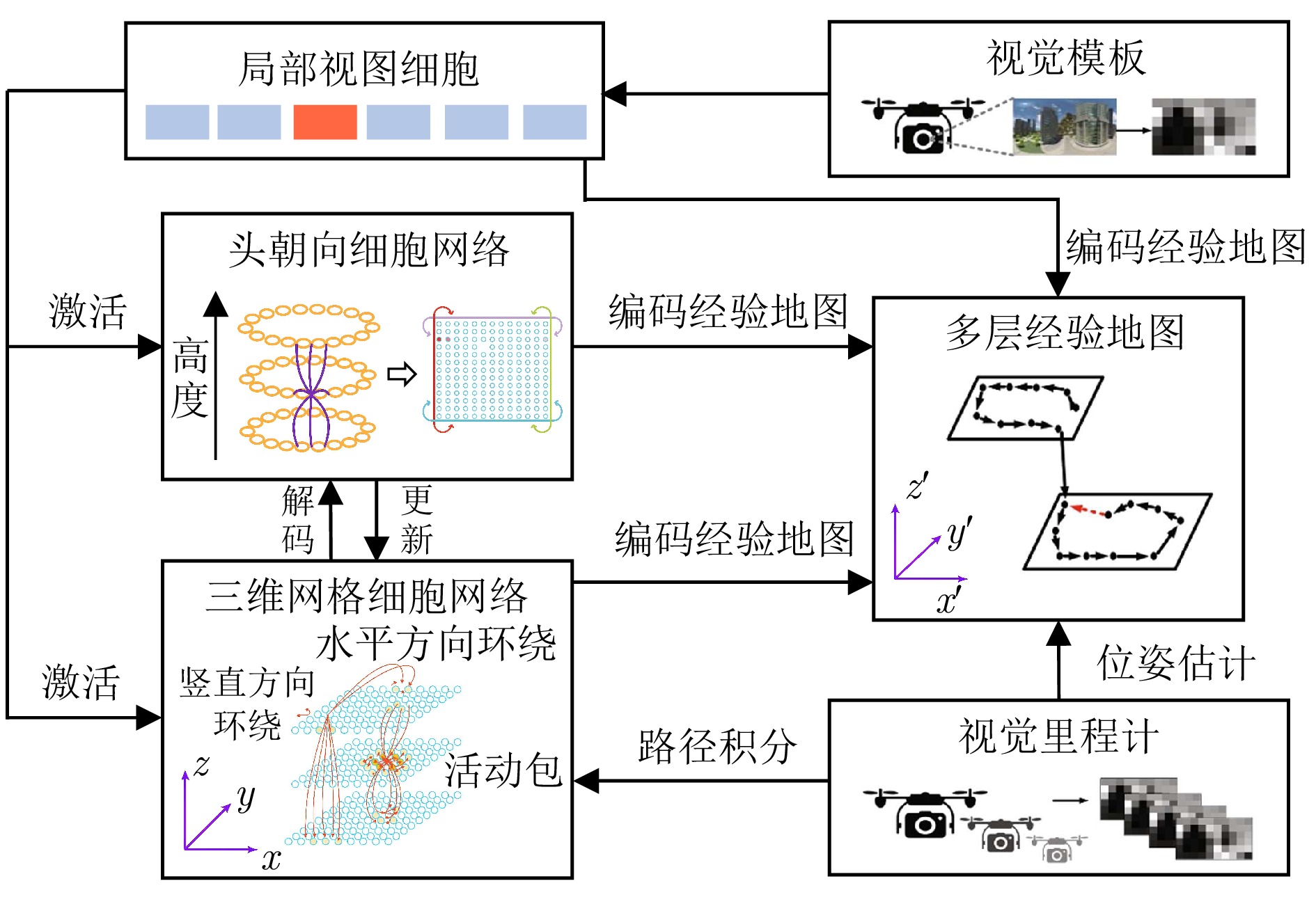

|

|

[36]

|

Taube J S. The head direction signal: Origins and sensory-motor integration. Annual Review of Neuroscience, 2007, 30(1): 181−207 doi: 10.1146/annurev.neuro.29.051605.112854

[37]
Peyrache A, Lacroix M M, Petersen P C, Buzsáki G. Internally organized mechanisms of the head direction sense. Nature Neuroscience, 2015, 18(4): 569−575 doi: 10.1038/nn.3968

[38]
Seelig J D, Jayaraman V. Neural dynamics for landmark orientation and angular path integration. Nature, 2015, 521(7551): 186−191 doi: 10.1038/nature14446

[39]
Green J, Adachi A, Shah K K, Hirokawa J D, Magani P S, Maimon G. A neural circuit architecture for angular integration in Drosophila. Nature, 2017, 546(7656): 101−106 doi: 10.1038/nature22343

[40]
Turner-Evans D B, Jensen K T, Ali S, Paterson T, Sheridan A, Ray R P, et al. The neuroanatomical ultrastructure and function of a biological ring attractor. Neuron, 2020, 108(1): 145−163 doi: 10.1016/j.neuron.2020.08.006

[41]
Savelli F, Knierim J J. Origin and role of path integration in the cognitive representations of the hippocampus: Computational insights into open questions. Journal of Experimental Biology, 2019, 222(S1): Article No. jeb188912

[42]
Ajabi Z, Keinath A T, Wei X X, Brandon M P. Population dynamics of head-direction neurons during drift and reorientation. Nature, 2023, 615(7954): 892−899 doi: 10.1038/s41586-023-05813-2

[43]
Dong L L, Fiete I R. Grid cells in cognition: Mechanisms and function. Annual Review of Neuroscience, 2024, 47: 345−368 doi: 10.1146/annurev-neuro-101323-112047

[44]
Sargolini F, Fyhn M, Hafting T, McNaughton B L, Witter M P, Moser M B, et al. Conjunctive representation of position, direction, and velocity in entorhinal cortex. Science, 2006, 312(5774): 758−762 doi: 10.1126/science.1125572

[45]
Ginosar G, Aljadeff J, Las L, Derdikman D, Ulanovsky N. Are grid cells used for navigation? On local metrics, subjective spaces, and black holes. Neuron, 2023, 111(12): 1858−1875 doi: 10.1016/j.neuron.2023.03.027

[46]
Solstad T, Boccara C N, Kropff E, Moser M B, Moser E I. Representation of geometric borders in the entorhinal cortex. Science, 2008, 322(5909): 1865−1868 doi: 10.1126/science.1166466

[47]
Hartley T, Burgess N, Lever C, Cacucci F, O'Keefe J. Modeling place fields in terms of the cortical inputs to the hippocampus. Hippocampus, 2000, 10(4): 369−379 doi: 10.1002/1098-1063(2000)10:4<369::AID-HIPO3>3.0.CO;2-0

[48]
Barry C, Lever C, Hayman R, Hartley T, Burton S, O'Keefe J, et al. The boundary vector cell model of place cell firing and spatial memory. Reviews in the Neurosciences, 2006, 17(1−2): 71−97

[49]
Lever C, Burton S, Jeewajee A, O'Keefe J, Burgess N. Boundary vector cells in the subiculum of the hippocampal formation. The Journal of Neuroscience, 2009, 29(31): 9771−9777 doi: 10.1523/JNEUROSCI.1319-09.2009

[50]
van Wijngaarden J B G, Babl S S, Ito H T. Entorhinal-retrosplenial circuits for allocentric-egocentric transformation of boundary coding. eLife, 2020, 9: Article No. e59816 doi: 10.7554/eLife.59816

[51]
Tulving E. Précis of elements of episodic memory. Behavioral and Brain Sciences, 1984, 7(2): 223−238 doi: 10.1017/S0140525X0004440X

[52]
Manns J R, Howard M W, Eichenbaum H. Gradual changes in hippocampal activity support remembering the order of events. Neuron, 2007, 56(3): 530−540 doi: 10.1016/j.neuron.2007.08.017

[53]
MacDonald C J, Lepage K Q, Eden U T, Eichenbaum H. Hippocampal “time cells” bridge the gap in memory for discontiguous events. Neuron, 2011, 71(4): 737−749 doi: 10.1016/j.neuron.2011.07.012

[54]
Eichenbaum H. Time cells in the hippocampus: A new dimension for mapping memories. Nature Reviews Neuroscience, 2014, 15(11): 732−744 doi: 10.1038/nrn3827

[55]
Umbach G, Kantak P, Jacobs J, Kahana M, Pfeiffer B E, Sperling M, et al. Time cells in the human hippocampus and entorhinal cortex support episodic memory. Proceedings of the National Academy of Sciences of the United States of America, 2020, 117(45): 28463−28474

[56]
Riesenhuber M, Poggio T. Hierarchical models of object recognition in cortex. Nature Neuroscience, 1999, 2(11): 1019−1025 doi: 10.1038/14819

[57]
Serre T, Wolf L, Bileschi S, Riesenhuber M, Poggio T. Robust object recognition with cortex-like mechanisms. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2007, 29(3): 411−426 doi: 10.1109/TPAMI.2007.56

[58]
Liu C, Sun F C. HMAX model: A survey. In: Proceedings of the International Joint Conference on Neural Networks. Killarney, Ireland: IEEE, 2015. 1−7

[59]
Parra L A, Díaz D E M, Ramos F. Computational framework of the visual sensory system based on neuroscientific evidence of the ventral pathway. Cognitive Systems Research, 2023, 77: 62−87 doi: 10.1016/j.cogsys.2022.10.004

[60]
Zhang H Z, Lu Y F, Kang T K, Lim M T. B-HMAX: A fast binary biologically inspired model for object recognition. Neurocomputing, 2016, 218: 242−250 doi: 10.1016/j.neucom.2016.08.051

[61]
Cherloo M N, Shiri M, Daliri M R. An enhanced HMAX model in combination with SIFT algorithm for object recognition. Signal, Image and Video Processing, 2020, 14(2): 425−433 doi: 10.1007/s11760-019-01572-8

[62]
Shariatmadar Z S, Faez K, Namin A S. Sal-HMAX: An enhanced HMAX model in conjunction with a visual attention mechanism to improve object recognition task. IEEE Access, 2021, 9: 154396−154412 doi: 10.1109/ACCESS.2021.3127928

[63]
Deco G, Rolls E T. A neurodynamical cortical model of visual attention and invariant object recognition. Vision Research, 2004, 44(6): 621−642 doi: 10.1016/j.visres.2003.09.037

[64]
Rolls E T. Invariant visual object and face recognition: Neural and computational bases, and a model, VisNet. Frontiers in Computational Neuroscience, 2012, 6: Article No. 35

[65]
Rolls E T, Mills W P C. Non-accidental properties, metric invariance, and encoding by neurons in a model of ventral stream visual object recognition, VisNet. Neurobiology of Learning and Memory, 2018, 152: 20−31 doi: 10.1016/j.nlm.2018.04.017

[66]
Rolls E T. Learning invariant object and spatial view representations in the brain using slow unsupervised learning. Frontiers in Computational Neuroscience, 2021, 15: Article No. 686239 doi: 10.3389/fncom.2021.686239

[67]
Rolls E T. A theory and model of scene representations with hippocampal spatial view cells. Hippocampus, 2025, 35(3): Article No. e70013 doi: 10.1002/hipo.70013

[68]
Hubel D H, Wiesel T N. Receptive fields, binocular interaction and functional architecture in the cat's visual cortex. The Journal of Physiology, 1962, 160(1): 106−154 doi: 10.1113/jphysiol.1962.sp006837

[69]
Kriegeskorte N. Deep neural networks: A new framework for modeling biological vision and brain information processing. Annual Review of Vision Science, 2015, 1(1): 417−446 doi: 10.1146/annurev-vision-082114-035447

[70]
Tadeusiewicz R. Neural networks as a tool for modeling of biological systems. Bio-Algorithms and Med-Systems, 2015, 11(3): 135−144 doi: 10.1515/bams-2015-0021

[71]
Laskar M N U, Giraldo L G S, Schwartz O. Deep neural networks capture texture sensitivity in V2. Journal of Vision, 2020, 20(7): Article No. 21 doi: 10.1167/jov.20.7.21

[72]
Chang C I, Liang C C, Hu P F. Iterative random training sampling convolutional neural network for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: Article No. 5513526

[73]
Wang S Y, Qu Z, Li C J, Gao L Y. BANet: Small and multi-object detection with a bidirectional attention network for traffic scenes. Engineering Applications of Artificial Intelligence, 2023, 117: Article No. 105504 doi: 10.1016/j.engappai.2022.105504

[74]
Liang M, Hu X L. Recurrent convolutional neural network for object recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 3367−3375

[75]
Yang X, Yan J, Wang W, Li S Y, Hu B, Lin J. Brain-inspired models for visual object recognition: An overview. Artificial Intelligence Review, 2022, 55(7): 5263−5311 doi: 10.1007/s10462-021-10130-z

[76]
Fang Wei, Zhu Yao-Yu, Huang Zi-Han, Yao Man, Yu Zhao-Fei, Tian Yong-Hong. Review of surrogate gradient methods in spiking deep learning. Chinese Journal of Computers, 2025, 48(8): 1885−1922 (in Chinese)

[77]
Chen T, Wang L D, Li J, Duan S K, Huang T W. Improving spiking neural network with frequency adaptation for image classification. IEEE Transactions on Cognitive and Developmental Systems, 2024, 16(3): 864−876 doi: 10.1109/TCDS.2023.3308347

[78]
Su Q Y, Chou Y H, Hu Y F, Li J N, Mei S J, Zhang Z Y, et al. Deep directly-trained spiking neural networks for object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Paris, France: IEEE, 2023. 6532−6542

[79]
Wang Rui-Dong, Wang Rui, Zhang Tian-Dong, Wang Shuo. A survey of research on robotic brain-inspired intelligence. Acta Automatica Sinica, 2024, 50(8): 1485−1501 (in Chinese)

[80]
Liu J X, Huo H, Hu W T, Fang T. Brain-inspired hierarchical spiking neural network using unsupervised STDP rule for image classification. In: Proceedings of the 10th International Conference on Machine Learning and Computing. Macao, China: ACM, 2018. 230−235

[81]
Orchard G, Meyer C, Etienne-Cummings R, Posch C, Thakor N, Benosman R. HFirst: A temporal approach to object recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(10): 2028−2040 doi: 10.1109/TPAMI.2015.2392947

[82]
Zhou Q, Li X H. A bio-inspired hierarchical spiking neural network with reward-modulated STDP learning rule for AER object recognition. IEEE Sensors Journal, 2022, 22(16): 16323−16338 doi: 10.1109/JSEN.2022.3189679

[83]
Zhang Y Q, Feng L C, Shan H W, Yang L Y, Zhu Z M. An AER-based spiking convolution neural network system for image classification with low latency and high energy efficiency. Neurocomputing, 2024, 564: Article No. 126984 doi: 10.1016/j.neucom.2023.126984

[84]
Cao Y Q, Chen Y, Khosla D. Spiking deep convolutional neural networks for energy-efficient object recognition. International Journal of Computer Vision, 2015, 113(1): 54−66 doi: 10.1007/s11263-014-0788-3

[85]
Mozafari M, Ganjtabesh M, Nowzari-Dalini A, Thorpe S J, Masquelier T. Bio-inspired digit recognition using reward-modulated spike-timing-dependent plasticity in deep convolutional networks. Pattern Recognition, 2019, 94: 87−95 doi: 10.1016/j.patcog.2019.05.015

[86]
Lichtsteiner P, Posch C, Delbruck T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE Journal of Solid-State Circuits, 2008, 43(2): 566−576 doi: 10.1109/JSSC.2007.914337

[87]
Brandli C, Berner R, Yang M, Liu S C, Delbruck T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE Journal of Solid-State Circuits, 2014, 49(10): 2333−2341 doi: 10.1109/JSSC.2014.2342715

[88]
Li Jia-Ning, Tian Yong-Hong. Recent advances in neuromorphic vision sensors: A survey. Chinese Journal of Computers, 2021, 44(6): 1258−1286 (in Chinese)

[89]
Gallego G, Delbrück T, Orchard G, Bartolozzi C, Taba B, Censi A, et al. Event-based vision: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(1): 154−180 doi: 10.1109/TPAMI.2020.3008413

[90]
Aitsam M, Davies S, di Nuovo A. Event camera-based real-time gesture recognition for improved robotic guidance. In: Proceedings of the International Joint Conference on Neural Networks. Yokohama, Japan: IEEE, 2024. 1−8

[91]
Daugman J G. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. Journal of the Optical Society of America A, 1985, 2(7): 1160−1169 doi: 10.1364/JOSAA.2.001160

[92]
Adelson E H, Bergen J R. Spatiotemporal energy models for the perception of motion. Journal of the Optical Society of America A, 1985, 2(2): 284−299 doi: 10.1364/JOSAA.2.000284

[93]
Yedjour H, Yedjour D. A spatiotemporal energy model based on spiking neurons for human motion perception. Cognitive Neurodynamics, 2024, 18(4): 2015−2029 doi: 10.1007/s11571-024-10068-2

[94]
Heeger D J. Model for the extraction of image flow. Journal of the Optical Society of America A, 1987, 4(8): 1455−1471 doi: 10.1364/JOSAA.4.001455

[95]
DeAngelis G C, Ohzawa I, Freeman R D. Spatiotemporal organization of simple-cell receptive fields in the cat's striate cortex. II. Linearity of temporal and spatial summation. Journal of Neurophysiology, 1993, 69(4): 1118−1135 doi: 10.1152/jn.1993.69.4.1118

[96]
Simoncelli E P, Heeger D J. A model of neuronal responses in visual area MT. Vision Research, 1998, 38(5): 743−761 doi: 10.1016/S0042-6989(97)00183-1

[97]
Rust N C, Mante V, Simoncelli E P, Movshon J A. How MT cells analyze the motion of visual patterns. Nature Neuroscience, 2006, 9(11): 1421−1431 doi: 10.1038/nn1786

[98]
Solari F, Chessa M, Medathati N V K, Kornprobst P. What can we expect from a V1-MT feedforward architecture for optical flow estimation? Signal Processing: Image Communication, 2015, 39: 342−354 doi: 10.1016/j.image.2015.04.006

[99]
Sun Z T, Chen Y J, Yang Y H, Nishida S. Modelling human visual motion processing with trainable motion energy sensing and a self-attention network. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1058

[100]
Yang Y H, Sun Z T, Fukiage T, Nishida S Y. HuPerFlow: A comprehensive benchmark for human vs. machine motion estimation comparison. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Nashville, USA: IEEE, 2025. 22799−22808

[101]
Bayerl P, Neumann H. Disambiguating visual motion through contextual feedback modulation. Neural Computation, 2004, 16(10): 2041−2066 doi: 10.1162/0899766041732404

[102]
Bouecke J D, Tlapale E, Kornprobst P, Neumann H. Neural mechanisms of motion detection, integration, and segregation: From biology to artificial image processing systems. EURASIP Journal on Advances in Signal Processing, 2011, 2011(1): Article No. 781561 doi: 10.1155/2011/781561

[103]
Layton O W, Fajen B R. A neural model of MST and MT explains perceived object motion during self-motion. The Journal of Neuroscience, 2016, 36(31): 8093−8102 doi: 10.1523/JNEUROSCI.4593-15.2016

[104]
Solari F, Caramenti M, Chessa M, Pretto P, Bülthoff H H, Bresciani J P. A biologically-inspired model to predict perceived visual speed as a function of the stimulated portion of the visual field. Frontiers in Neural Circuits, 2019, 13: Article No. 68 doi: 10.3389/fncir.2019.00068

[105]
Yumurtaci S, Layton O W. Modeling physiological sources of heading bias from optic flow. eNeuro, 2021, 8(6): Article No. ENEURO.0307−21.2021 doi: 10.1523/ENEURO.0307-21.2021

[106]
Layton O W, Steinmetz S T. Accuracy optimized neural networks do not effectively model optic flow tuning in brain area MSTd. Frontiers in Neuroscience, 2024, 18: Article No. 1441285 doi: 10.3389/fnins.2024.1441285

[107]
Killian N J, Jutras M J, Buffalo E A. A map of visual space in the primate entorhinal cortex. Nature, 2012, 491(7426): 761−764 doi: 10.1038/nature11587

[108]
Giocomo L M, Hussaini S A, Zheng F, Kandel E, Moser M B, Moser E I. Grid cells use HCN1 channels for spatial scaling. Cell, 2011, 147(5): 1159−1170 doi: 10.1016/j.cell.2011.08.051

[109]
Domnisoru C, Kinkhabwala A A, Tank D W. Membrane potential dynamics of grid cells. Nature, 2013, 495(7440): 199−204 doi: 10.1038/nature11973

[110]
Khona M, Fiete I R. Attractor and integrator networks in the brain. Nature Reviews Neuroscience, 2022, 23(12): 744−766 doi: 10.1038/s41583-022-00642-0

[111]
Hulse B K, Jayaraman V. Mechanisms underlying the neural computation of head direction. Annual Review of Neuroscience, 2020, 43(1): 31−54 doi: 10.1146/annurev-neuro-072116-031516

[112]
Zhang K. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: A theory. The Journal of Neuroscience, 1996, 16(6): 2112−2126 doi: 10.1523/JNEUROSCI.16-06-02112.1996

[113]
Samsonovich A, McNaughton B L. Path integration and cognitive mapping in a continuous attractor neural network model. The Journal of Neuroscience, 1997, 17(15): 5900−5920 doi: 10.1523/JNEUROSCI.17-15-05900.1997

[114]
Wu S, Wong K Y M, Fung C C A, Mi Y Y, Zhang W H. Continuous attractor neural networks: Candidate of a canonical model for neural information representation. F1000Research, doi: 10.12688/f1000research.7387.1

[115]
Ocko S A, Hardcastle K, Giocomo L M, Ganguli S. Emergent elasticity in the neural code for space. Proceedings of the National Academy of Sciences of the United States of America, 2018, 115(50): E11798−E11806

[116]
Bing Z S, Sewisy A E, Zhuang G H, Walter F, Morin F O, Huang K. Toward cognitive navigation: Design and implementation of a biologically inspired head direction cell network. IEEE Transactions on Neural Networks and Learning Systems, 2022, 33(5): 2147−2158 doi: 10.1109/TNNLS.2021.3128380

[117]
Bicanski A, Burgess N. Environmental anchoring of head direction in a computational model of retrosplenial cortex. The Journal of Neuroscience, 2016, 36(46): 11601−11618 doi: 10.1523/JNEUROSCI.0516-16.2016

[118]
Bing Z S, Nitschke D, Zhuang G H, Huang K, Knoll A. Towards cognitive navigation: A biologically inspired calibration mechanism for the head direction cell network. Journal of Automation and Intelligence, 2023, 2(1): 31−41 doi: 10.1016/j.jai.2023.100020

[119]
Gardner R J, Hermansen E, Pachitariu M, Burak Y, Baas N A, Dunn B A, et al. Toroidal topology of population activity in grid cells. Nature, 2022, 602(7895): 123−128 doi: 10.1038/s41586-021-04268-7

[120]
Widloski J, Marder M P, Fiete I R. Inferring circuit mechanisms from sparse neural recording and global perturbation in grid cells. eLife, 2018, 7: Article No. e33503 doi: 10.7554/eLife.33503

[121]
Burak Y, Fiete I R. Accurate path integration in continuous attractor network models of grid cells. PLoS Computational Biology, 2009, 5(2): Article No. e1000291 doi: 10.1371/journal.pcbi.1000291

[122]
Fuhs M C, Touretzky D S. A spin glass model of path integration in rat medial entorhinal cortex. The Journal of Neuroscience, 2006, 26(16): 4266−4276 doi: 10.1523/JNEUROSCI.4353-05.2006

[123]
Benas S, Fernandez X, Kropff E. Modeled grid cells aligned by a flexible attractor. eLife, 2024, 12: Article No. RP89851 doi: 10.7554/eLife.89851.3

[124]
Campbell M G, Ocko S A, Mallory C S, Low I I C, Ganguli S, Giocomo L M. Principles governing the integration of landmark and self-motion cues in entorhinal cortical codes for navigation. Nature Neuroscience, 2018, 21(8): 1096−1106 doi: 10.1038/s41593-018-0189-y

[125]
Langdon C, Genkin M, Engel T A. A unifying perspective on neural manifolds and circuits for cognition. Nature Reviews Neuroscience, 2023, 24(6): 363−377 doi: 10.1038/s41583-023-00693-x

[126]
Tsodyks M, Sejnowski T. Associative memory and hippocampal place cells. International Journal of Neural Systems, 1995, 6(S1): 81−86

[127]
Solstad T, Moser E I, Einevoll G T. From grid cells to place cells: A mathematical model. Hippocampus, 2006, 16(12): 1026−1031 doi: 10.1002/hipo.20244

[128]
Sharma S, Chandra S, Fiete I R. Content addressable memory without catastrophic forgetting by heteroassociation with a fixed scaffold. In: Proceedings of the 39th International Conference on Machine Learning. Maryland, USA: JMLR.org, 2022. 19658−19682

[129]
Whittington J C R, Muller T H, Mark S, Chen G F, Barry C, Burgess N, et al. The Tolman-Eichenbaum machine: Unifying space and relational memory through generalization in the hippocampal formation. Cell, 2020, 183(5): 1249−1263 doi: 10.1016/j.cell.2020.10.024

[130]
Yim M Y, Sadun L A, Fiete I R, Taillefumier T. Place-cell capacity and volatility with grid-like inputs. eLife, 2021, 10: Article No. e62702 doi: 10.7554/eLife.62702

[131]
Agmon H, Burak Y. A theory of joint attractor dynamics in the hippocampus and the entorhinal cortex accounts for artificial remapping and grid cell field-to-field variability. eLife, 2020, 9: Article No. e56894 doi: 10.7554/eLife.56894

[132]
LeCun Y, Bengio Y, Hinton G. Deep learning. Nature, 2015, 521(7553): 436−444 doi: 10.1038/nature14539

[133]
Yamins D L K, DiCarlo J J. Using goal-driven deep learning models to understand sensory cortex. Nature Neuroscience, 2016, 19(3): 356−365 doi: 10.1038/nn.4244

[134]
Graves A, Mohamed A R, Hinton G. Speech recognition with deep recurrent neural networks. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing. Vancouver, Canada: IEEE, 2013. 6645−6649

[135]
Theis L, Bethge M. Generative image modeling using spatial LSTMs. In: Proceedings of the 29th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2015. 1927−1935

[136]
Low I I C, Giocomo L M, Williams A H. Remapping in a recurrent neural network model of navigation and context inference. eLife, 2023, 12: Article No. RP86943 doi: 10.7554/eLife.86943

[137]
van den Oord A, Kalchbrenner N, Kavukcuoglu K. Pixel recurrent neural networks. In: Proceedings of the 33rd International Conference on Machine Learning. New York, USA: JMLR.org, 2016. 1747−1756

[138]
Cueva C J, Wei X X. Emergence of grid-like representations by training recurrent neural networks to perform spatial localization. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, Canada: ICLR, 2018.

[139]
Banino A, Barry C, Uria B, Blundell C, Lillicrap T, Mirowski P, et al. Vector-based navigation using grid-like representations in artificial agents. Nature, 2018, 557(7705): 429−433 doi: 10.1038/s41586-018-0102-6

[140]
Aziz A, Sreeharsha P S S, Natesh R, Chakravarthy V S. An integrated deep learning-based model of spatial cells that combines self-motion with sensory information. Hippocampus, 2022, 32(10): 716−730 doi: 10.1002/hipo.23461

[141]
Sorscher B, Mel G C, Ocko S A, Giocomo L M, Ganguli S. A unified theory for the computational and mechanistic origins of grid cells. Neuron, 2023, 111(1): 121−137 doi: 10.1016/j.neuron.2022.10.003

[142]
Bellec G, Scherr F, Subramoney A, Hajek E, Salaj D, Legenstein R, et al. A solution to the learning dilemma for recurrent networks of spiking neurons. Nature Communications, 2020, 11(1): Article No. 3625 doi: 10.1038/s41467-020-17236-y

[143]
Li X, Chen X Y L, Guo R B, Wu Y J, Zhou Z T, Yu F W, et al. NeuroVE: Brain-inspired linear-angular velocity estimation with spiking neural networks. IEEE Robotics and Automation Letters, 2025, 10(3): 2375−2382 doi: 10.1109/LRA.2025.3529319

[144]
Sun Z, Cutsuridis V, Caiafa C F, Solé-Casals J. Brain simulation and spiking neural networks. Cognitive Computation, 2023, 15(4): 1103−1105 doi: 10.1007/s12559-023-10156-1

[145]
Stentiford R, Knowles T C, Pearson M J. A spiking neural network model of rodent head direction calibrated with landmark free learning. Frontiers in Neurorobotics, 2022, 16: Article No. 867019 doi: 10.3389/fnbot.2022.867019

[146]
Kreiser R, Cartiglia M, Martel J N P, Conradt J, Sandamirskaya Y. A neuromorphic approach to path integration: A head-direction spiking neural network with vision-driven reset. In: Proceedings of the IEEE International Symposium on Circuits and Systems. Florence, Italy: IEEE, 2018. 1−5

[147]

Knowles T C, Summerton A G, Whiting J G H, Pearson M J. Ring attractors as the basis of a biomimetic navigation system. Biomimetics, 2023, 8(5): Article No. 399 doi: 10.3390/biomimetics8050399

[148]

Sutton N M, Gutiérrez-Guzmán B E, Dannenberg H, Ascoli G A. A continuous attractor model with realistic neural and synaptic properties quantitatively reproduces grid cell physiology. International Journal of Molecular Sciences, 2024, 25(11): Article No. 6059 doi: 10.3390/ijms25116059

[149]

Furber S B, Galluppi F, Temple S, Plana L A. The SpiNNaker project. Proceedings of the IEEE, 2014, 102(5): 652−665 doi: 10.1109/JPROC.2014.2304638

[150]

Akopyan F, Sawada J, Cassidy A, Alvarez-Icaza R, Arthur J, Merolla P, et al. TrueNorth: Design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2015, 34(10): 1537−1557 doi: 10.1109/TCAD.2015.2474396

[151]

Davies M, Srinivasa N, Lin T H, Chinya G, Cao Y Q, Choday S H, et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro, 2018, 38(1): 82−99 doi: 10.1109/MM.2018.112130359

[152]

Pei J, Deng L, Song S, Zhao M G, Zhang Y H, Wu S, et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature, 2019, 572(7767): 106−111 doi: 10.1038/s41586-019-1424-8

[153]

Zhang Tie-Lin, Xu Bo. Research advances and perspectives on spiking neural networks. Chinese Journal of Computers, 2021, 44(9): 1767−1785 (in Chinese)

[154]

Bachelder I A, Waxman A M. A view-based neurocomputational system for relational map-making and navigation in visual environments. Robotics and Autonomous Systems, 1995, 16(2−4): 267−289 doi: 10.1016/0921-8890(95)00051-8

[155]

Schmajuk N A, Buhusi C V. Spatial and temporal cognitive mapping: A neural network approach. Trends in Cognitive Sciences, 1997, 1(3): 109−114 doi: 10.1016/S1364-6613(97)89057-2

[156]

Milford M J, Wyeth G F, Prasser D. RatSLAM: A hippocampal model for simultaneous localization and mapping. In: Proceedings of the IEEE International Conference on Robotics and Automation. New Orleans, USA: IEEE, 2004. 403−408

[157]

Milford M J, Wyeth G F. Mapping a suburb with a single camera using a biologically inspired SLAM system. IEEE Transactions on Robotics, 2008, 24(5): 1038−1053 doi: 10.1109/TRO.2008.2004520

[158]

Glover A J, Maddern W P, Milford M J, Wyeth G F. FAB-MAP + RatSLAM: Appearance-based SLAM for multiple times of day. In: Proceedings of the IEEE International Conference on Robotics and Automation. Anchorage, USA: IEEE, 2010. 3507−3512

[159]

Tian B, Shim V A, Yuan M L, Srinivasan C, Tang H J, Li H Z. RGB-D based cognitive map building and navigation. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Tokyo, Japan: IEEE, 2013. 1562−1567

[160]

Yu Nai-Gong, Yuan Yun-He, Li Ti, Jiang Xiao-Jun, Luo Zi-Wei. A cognitive map building algorithm by means of cognitive mechanism of hippocampus. Acta Automatica Sinica, 2018, 44(1): 52−73 (in Chinese)

[161]

Ball D, Heath S, Wiles J, Wyeth G, Corke P, Milford M. OpenRatSLAM: An open source brain-based SLAM system. Autonomous Robots, 2013, 34(3): 149−176 doi: 10.1007/s10514-012-9317-9

[162]

de Souza Muñoz M E, Menezes M C, de Freitas E P, Cheng S, de Almeida Ribeiro P R, de Almeida Neto A, et al. xRatSLAM: An extensible RatSLAM computational framework. Sensors, 2022, 22(21): Article No. 8305 doi: 10.3390/s22218305

[163]

Yuan M L, Tian B, Shim V A, Tang H J, Li H Z. An entorhinal-hippocampal model for simultaneous cognitive map building. In: Proceedings of the 29th AAAI Conference on Artificial Intelligence. Austin, USA: AAAI, 2015. 586−592

[164]

Yu N G, Zhai Y J, Yuan Y H, Wang Z X. A bionic robot navigation algorithm based on cognitive mechanism of hippocampus. IEEE Transactions on Automation Science and Engineering, 2019, 16(4): 1640−1652 doi: 10.1109/TASE.2019.2909638

[165]

Yu N G, Liao Y S, Yu H J, Sie O. Construction of the rat brain spatial cell firing model on a quadruped robot. CAAI Transactions on Intelligence Technology, 2022, 7(4): 732−743 doi: 10.1049/cit2.12091

[166]

Joseph T, Fischer T, Milford M. Trajectory tracking via multiscale continuous attractor networks. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Detroit, USA: IEEE, 2023. 368−375

[167]

Struckmeier O, Tiwari K, Salman M, Pearson M J, Kyrki V. ViTa-SLAM: A bio-inspired visuo-tactile SLAM for navigation while interacting with aliased environments. In: Proceedings of the IEEE International Conference on Cyborg and Bionic Systems. Munich, Germany: IEEE, 2019. 97−103

[168]

Salman M, Pearson M J. Whisker-RatSLAM applied to 6D object identification and spatial localisation. In: Proceedings of the 7th International Conference on Biomimetic and Biohybrid Systems. Paris, France: Springer, 2018. 403−414

[169]

Zhang W H, Wu S. Reciprocally coupled local estimators implement Bayesian information integration distributively. In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: Curran Associates Inc., 2013. 19−27

[170]

Zeng T P, Tang F Z, Ji D X, Si B L. NeuroBayesSLAM: Neurobiologically inspired Bayesian integration of multisensory information for robot navigation. Neural Networks, 2020, 126: 21−35 doi: 10.1016/j.neunet.2020.02.023

[171]

Tang H J, Yan R, Tan K C. Cognitive navigation by neuro-inspired localization, mapping, and episodic memory. IEEE Transactions on Cognitive and Developmental Systems, 2018, 10(3): 751−761 doi: 10.1109/TCDS.2017.2776965

[172]

Tang G Z, Michmizos K P. Gridbot: An autonomous robot controlled by a spiking neural network mimicking the brain's navigational system. In: Proceedings of the International Conference on Neuromorphic Systems. Knoxville, USA: ACM, 2018. Article No. 4

[173]

Tang G Z, Shah A, Michmizos K P. Spiking neural network on neuromorphic hardware for energy-efficient unidimensional SLAM. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Macao, China: IEEE, 2019. 4176−4181

[174]

Yu F W, Shang J G, Hu Y J, Milford M. NeuroSLAM: A brain-inspired SLAM system for 3D environments. Biological Cybernetics, 2019, 113(5): 515−545

[175]

Shen D, Liu G L, Li T C, Yu F W, Gu F Q, Xiao K, et al. ORB-NeuroSLAM: A brain-inspired 3-D SLAM system based on ORB features. IEEE Internet of Things Journal, 2024, 11(7): 12408−12418 doi: 10.1109/JIOT.2023.3335417

[176]

Muller R U, Stead M, Pach J. The hippocampus as a cognitive graph. The Journal of General Physiology, 1996, 107(6): 663−694 doi: 10.1085/jgp.107.6.663

[177]

Stachenfeld K L, Botvinick M M, Gershman S J. The hippocampus as a predictive map. Nature Neuroscience, 2017, 20(11): 1643−1653 doi: 10.1038/nn.4650

[178]

Bush D, Barry C, Manson D, Burgess N. Using grid cells for navigation. Neuron, 2015, 87(3): 507−520 doi: 10.1016/j.neuron.2015.07.006

[179]

Ruan Xiao-Gang, Chai Jie, Wu Yue, Zhang Xiao-Ping, Huang Jing. Cognitive map construction and navigation based on hippocampal place cells. Acta Automatica Sinica, 2021, 47(3): 666−677 (in Chinese)

[180]

Wu Yue, Ruan Xiao-Gang, Huang Jing, Chai Jie. An improved cortical network model for environment cognition. Acta Automatica Sinica, 2021, 47(6): 1401−1411 (in Chinese)

[181]

Edvardsen V, Bicanski A, Burgess N. Navigating with grid and place cells in cluttered environments. Hippocampus, 2020, 30(3): 220−232 doi: 10.1002/hipo.23147

[182]

Zou Q, Cong M, Liu D, Du Y. A neurobiologically inspired mapping and navigating framework for mobile robots. Neurocomputing, 2021, 460: 181−194 doi: 10.1016/j.neucom.2021.07.025

[183]

Zou Q, Wu C D, Cong M, Liu D. Brain cognition mechanism-inspired hierarchical navigation method for mobile robots. Journal of Bionic Engineering, 2024, 21(2): 852−865 doi: 10.1007/s42235-023-00449-4

[184]

Lowry S, Sünderhauf N, Newman P, Leonard J, Cox D, Corke P, et al. Visual place recognition: A survey. IEEE Transactions on Robotics, 2016, 32(1): 1−19 doi: 10.1109/TRO.2015.2496823

[185]

Yin P, Jiao J H, Zhao S Q, Xu L Y, Huang G Q, Choset H, et al. General place recognition survey: Toward real-world autonomy. IEEE Transactions on Robotics, 2025, 41: 3019−3038 doi: 10.1109/TRO.2025.3550771

[186]

Yu N G, Wang L, Jiang X J, Yuan Y H. An improved bioinspired cognitive map-building system based on episodic memory recognition. International Journal of Advanced Robotic Systems, 2020, 17(3): Article No. 1729881420930948 doi: 10.1177/1729881420930948

[187]

Chen Z T, Jacobson A, Sünderhauf N, Upcroft B, Liu L Q, Shen C H, et al. Deep learning features at scale for visual place recognition. In: Proceedings of the IEEE International Conference on Robotics and Automation. Singapore: IEEE, 2017. 3223−3230

[188]

Arandjelovic R, Gronat P, Torii A, Pajdla T, Sivic J. NetVLAD: CNN architecture for weakly supervised place recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 5297−5307

[189]

Ma J Y, Zhang J, Xu J T, Ai R, Gu W H, Chen X Y L. OverlapTransformer: An efficient and yaw-angle-invariant Transformer network for LiDAR-based place recognition. IEEE Robotics and Automation Letters, 2022, 7(3): 6958−6965 doi: 10.1109/LRA.2022.3178797

[190]

Chancán M, Hernandez-Nunez L, Narendra A, Barron A B, Milford M. A hybrid compact neural architecture for visual place recognition. IEEE Robotics and Automation Letters, 2020, 5(2): 993−1000 doi: 10.1109/LRA.2020.2967324

[191]

Hussaini S, Milford M, Fischer T. Spiking neural networks for visual place recognition via weighted neuronal assignments. IEEE Robotics and Automation Letters, 2022, 7(2): 4094−4101 doi: 10.1109/LRA.2022.3149030

[192]

Hussaini S, Milford M, Fischer T. Ensembles of compact, region-specific & regularized spiking neural networks for scalable place recognition. In: Proceedings of the IEEE International Conference on Robotics and Automation. London, United Kingdom: IEEE, 2023. 4200−4207

[193]

Hussaini S, Milford M, Fischer T. Applications of spiking neural networks in visual place recognition. IEEE Transactions on Robotics, 2025, 41: 518−537 doi: 10.1109/TRO.2024.3508053

[194]

Hines A D, Stratton P G, Milford M, Fischer T. VPRTempo: A fast temporally encoded spiking neural network for visual place recognition. In: Proceedings of the IEEE International Conference on Robotics and Automation. Yokohama, Japan: IEEE, 2024. 10200−10207

[195]

Yu F W, Wu Y J, Ma S C, Xu M K, Li H Y, Qu H Y, et al. Brain-inspired multimodal hybrid neural network for robot place recognition. Science Robotics, 2023, 8(78): Article No. eabm6996 doi: 10.1126/scirobotics.abm6996
