
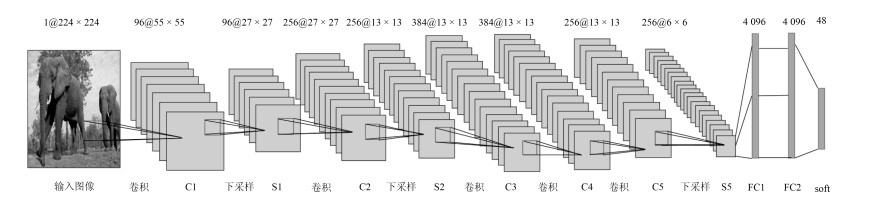

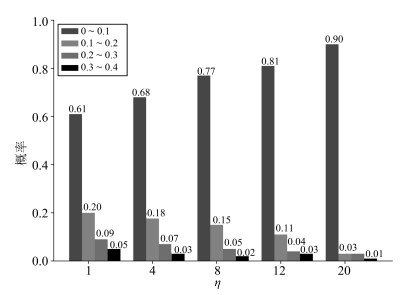

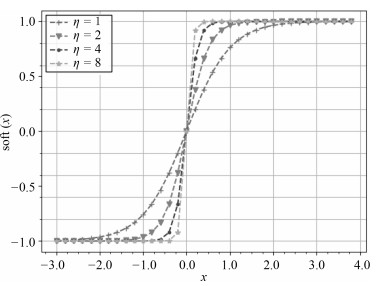

Abstract: Hashing projects high-dimensional data into a low-dimensional binary space while preserving the semantic similarity of the original space, which enables fast retrieval. Traditional supervised hashing methods feed hand-crafted features into hash-function learning and then generate hash codes by classification and quantization. Because hand-crafted features lack adaptability and are extracted independently of the quantization procedure, supervised hashing methods built on them achieve low retrieval precision in image retrieval. In this paper, we present a novel deep non-relaxation hashing method based on point pair similarity. At the output of the convolutional neural network, a differentiable soft thresholding function replaces the widely used sign function, so that the hash-like codes approach -1 or 1 nonlinearly. The output of the soft thresholding function is used directly to compute the training error, and the loss function includes an $\ell_1$-norm term that constrains each bit of the hash-like codes to be as close to binary as possible. After training, the sign function is applied outside the network model to quantize its outputs into low-dimensional binary hash codes, which are used for image storage and retrieval in the low-dimensional binary space. Experiments on two large-scale public datasets show that our method effectively learns image features, generates accurate binary hash codes, and outperforms state-of-the-art methods in terms of mean average precision (MAP).
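The abstract describes the training objective only in words. The following is a minimal sketch of one plausible reading, not the authors' implementation, and it rests on several assumptions: `tanh(beta * z)` stands in for the paper's soft thresholding function (its exact form is not given here), the point-pair term is a DPSH-style cross-entropy (negative log-likelihood) on $\theta_{ij} = \frac{1}{2}\mathbf{u}_i^{\rm T}\mathbf{u}_j$, the $\ell_1$ term is $\sum_k \big||u_k| - 1\big|$, and `lam` is assumed to be the $\lambda$ of Table 4 weighting that term. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def soft_threshold(z: Sequence[float], beta: float = 1.0) -> List[float]:
    # Hypothetical stand-in for the paper's soft thresholding function:
    # tanh(beta * z) is differentiable and saturates towards -1 or 1,
    # whereas sign(z) has zero gradient almost everywhere.
    return [math.tanh(beta * x) for x in z]


def pairwise_cross_entropy(u: Sequence[float], v: Sequence[float], s: int) -> float:
    # Assumed DPSH-style negative log-likelihood for one pair:
    # theta = 0.5 * <u, v>, P(similar) = sigmoid(theta),
    # loss = log(1 + exp(theta)) - s * theta, with s = 1 (similar) or 0.
    theta = 0.5 * sum(a * b for a, b in zip(u, v))
    softplus = max(theta, 0.0) + math.log1p(math.exp(-abs(theta)))  # stable log(1 + e^theta)
    return softplus - s * theta


def l1_quantization(u: Sequence[float]) -> float:
    # l1 distance between |u| and the all-ones vector: zero only when
    # every bit of the hash-like code is exactly -1 or 1.
    return sum(abs(abs(x) - 1.0) for x in u)


def total_loss(
    outputs: Sequence[Sequence[float]],
    pairs: Sequence[Tuple[int, int, int]],
    lam: float = 0.05,
    beta: float = 1.0,
) -> float:
    # outputs: raw network outputs, one vector per image.
    # pairs: (i, j, s_ij) triples built from the point-pair labels.
    # lam: assumed weight of the l1 term (0.05 gives the best MAP in Table 4).
    codes = [soft_threshold(z, beta) for z in outputs]
    data_term = sum(pairwise_cross_entropy(codes[i], codes[j], s) for i, j, s in pairs)
    quant_term = sum(l1_quantization(u) for u in codes)
    return data_term + lam * quant_term
```

In this reading, training minimizes `total_loss` directly on the soft-thresholded outputs, with no relaxation step; the sign function is applied only after training to produce the stored binary codes.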


Key words:

- Hash learning

- non-relaxation

- convolutional neural network (CNN)

- cross entropy

1) Recommended by Associate Editor ZHANG Min-Ling

Table 1 The MAP of different algorithms on CIFAR-10

| Algorithm | 12 bits | 24 bits | 32 bits | 48 bits |
|---|---|---|---|---|
| DNRH | 0.726 | 0.749 | 0.753 | 0.768 |
| DPSH | 0.713 | 0.727 | 0.744 | 0.757 |
| DSH | 0.616 | 0.651 | 0.661 | 0.676 |
| DHN | 0.555 | 0.594 | 0.603 | 0.621 |
| FP-CNNH | 0.612 | 0.639 | 0.625 | 0.616 |
| NINH | 0.552 | 0.566 | 0.558 | 0.581 |
| CNNH | 0.439 | 0.511 | 0.509 | 0.532 |
| SDH | 0.285 | 0.329 | 0.341 | 0.356 |
| KSH | 0.316 | 0.390 | 0.412 | 0.458 |
| MLH | 0.182 | 0.195 | 0.207 | 0.211 |
| BRE | 0.159 | 0.181 | 0.193 | 0.196 |
| ITQ | 0.162 | 0.169 | 0.172 | 0.175 |
| SH | 0.127 | 0.128 | 0.126 | 0.129 |

Table 2 The MAP of different algorithms on NUS-WIDE

| Algorithm | 12 bits | 24 bits | 32 bits | 48 bits |
|---|---|---|---|---|
| DNRH | 0.769 | 0.792 | 0.804 | 0.814 |
| DPSH | 0.747 | 0.788 | 0.792 | 0.806 |
| DSH | 0.548 | 0.551 | 0.558 | 0.562 |
| DHN | 0.708 | 0.735 | 0.748 | 0.758 |
| FP-CNNH | 0.622 | 0.628 | 0.631 | 0.625 |
| NINH | 0.674 | 0.697 | 0.713 | 0.715 |
| CNNH | 0.618 | 0.621 | 0.619 | 0.620 |
| SDH | 0.568 | 0.600 | 0.608 | 0.637 |
| KSH | 0.556 | 0.572 | 0.581 | 0.588 |
| MLH | 0.500 | 0.514 | 0.520 | 0.522 |
| BRE | 0.485 | 0.525 | 0.530 | 0.544 |
| ITQ | 0.452 | 0.468 | 0.472 | 0.477 |
| SH | 0.454 | 0.406 | 0.405 | 0.400 |

Table 3 The MAP on CIFAR-10 with and without the $\ell_1$-norm and soft thresholding constraints

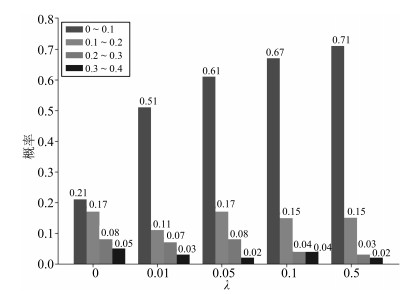

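| Algorithm | 12 bits | 24 bits | 32 bits | 48 bits |
|---|---|---|---|---|
| Cross-entropy + $\ell_1$-norm + soft thresholding | 0.726 | 0.749 | 0.753 | 0.768 |
| DPSH | 0.713 | 0.727 | 0.744 | 0.757 |
| Cross-entropy + soft thresholding | 0.613 | 0.636 | 0.662 | 0.688 |
| Cross-entropy + $\ell_1$-norm | 0.606 | 0.621 | 0.660 | 0.671 |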

Table 4 The MAP for different values of $\lambda$

| $\lambda$ | CIFAR-10 | NUS-WIDE |
|---|---|---|
| 0 | 0.635 | 0.661 |
| 0.01 | 0.720 | 0.773 |
| 0.05 | 0.768 | 0.814 |
| 0.1 | 0.742 | 0.756 |
| 0.5 | 0.695 | 0.680 |
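Tables 1 to 4 all report MAP, but the evaluation protocol (query split, ranking depth, tie handling) is not stated in this section. The following is a minimal sketch assuming a common setup for hashing benchmarks: query and database codes are obtained by applying the sign function to the network outputs, the database is ranked by Hamming distance to each query, an item counts as relevant when it shares at least one label with the query, and AP is averaged over the full ranking. The function names, the mapping of 0 to +1, and the tie-breaking rule are hypothetical choices of this sketch.

```python
from typing import List, Sequence, Set


def binarize(u: Sequence[float]) -> List[int]:
    # Sign quantization applied outside the trained network;
    # mapping 0 to +1 is an assumption of this sketch.
    return [1 if x >= 0 else -1 for x in u]


def hamming(b1: Sequence[int], b2: Sequence[int]) -> int:
    # Number of bit positions where the two codes differ.
    return sum(1 for x, y in zip(b1, b2) if x != y)


def average_precision(
    query_code: Sequence[int],
    query_labels: Set[int],
    db_codes: Sequence[Sequence[int]],
    db_labels: Sequence[Set[int]],
) -> float:
    # Rank the database by Hamming distance; sorted() is stable, so ties
    # keep database order (another assumption).
    order = sorted(range(len(db_codes)), key=lambda k: hamming(query_code, db_codes[k]))
    hits, precision_sum = 0, 0.0
    for rank, k in enumerate(order, start=1):
        if query_labels & db_labels[k]:  # relevant if at least one shared label
            hits += 1
            precision_sum += hits / rank  # precision at this relevant position
    return precision_sum / hits if hits else 0.0


def mean_average_precision(
    queries: Sequence[Sequence[float]],
    query_labels: Sequence[Set[int]],
    database: Sequence[Sequence[float]],
    db_labels: Sequence[Set[int]],
) -> float:
    # Inputs are real-valued network outputs; binarize both sides first.
    q_codes = [binarize(q) for q in queries]
    d_codes = [binarize(d) for d in database]
    aps = [average_precision(qc, ql, d_codes, db_labels) for qc, ql in zip(q_codes, query_labels)]
    return sum(aps) / len(aps)
```

For single-label data such as CIFAR-10, "shares a label" reduces to "same class"; for multi-label data such as NUS-WIDE the shared-label rule is the usual convention, though the paper may use a different one.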

